Abstract

Diabetic retinopathy (DR) is a devastating condition caused by progressive changes in the retinal microvasculature. It is a leading cause of retinal blindness in people with diabetes. Long periods of uncontrolled blood sugar levels result in endothelial damage, leading to macular edema, altered retinal permeability, retinal ischemia, and neovascularization. To facilitate rapid screening, diagnosis, and grading of DR, different retinal imaging modalities are used. Typically, a computer-aided diagnostic (CAD) system uses retinal images to assist ophthalmologists in the diagnostic process. These CAD systems use a combination of machine learning (ML) models (e.g., deep learning (DL) approaches) to speed up the diagnosis and grading of DR. This survey provides a comprehensive overview of the imaging modalities used with ML/DL approaches in DR diagnosis. We focus on four modalities: fluorescein angiography, fundus photography, optical coherence tomography (OCT), and OCT angiography (OCTA). We also discuss the limitations of the literature that uses these modalities for DR diagnosis, identify research gaps, and suggest solutions for researchers to pursue. Lastly, we provide a thorough discussion of the challenges and future directions of current state-of-the-art DL/ML approaches, and we explain how integrating different imaging modalities with clinical information and demographic data could lead to promising results in diagnosing and grading DR. Based on this article's comparative analysis and discussion, we conclude that DL methods remain preferable to existing ML models for detecting DR across multiple modalities.

1. Introduction

Diabetes mellitus affects millions of adults worldwide. It increases the risk of death and of devastating complications caused by end-organ damage. It may lead to nephropathy, neuropathy, retinopathy, and many other conditions such as dementia, non-alcoholic steatohepatitis, psoriasis, metabolic syndrome, cardiovascular disease, and cancer. Most diabetes-related complications share an overlapping pathophysiology [1,2].

Diabetic retinopathy (DR) is a common diabetic complication and the main cause of retinal blindness in the US [3]. DR is a potentially devastating, vision-threatening condition that is considered an inflammatory, neurovascular complication. It is associated with microvascular damage, and neuronal injury and dysfunction precede clinically detectable microvascular damage. Clinical research has identified factors that predict the development of retinopathy in diabetic patients. The main predictors of retinopathy progression are the duration of diabetes and hemoglobin A1c. According to the Diabetes Control and Complications Trial (DCCT), however, these factors explain only 11% of the risk of developing retinopathy [4]. Similarly, the Wisconsin Epidemiologic Study of DR (WESDR), a large population-based study, examined the effects of hemoglobin A1c, cholesterol, and blood pressure and found that all of them may contribute to retinopathy. Because these factors do not fully explain the risk of DR progression, many other factors likely play a role in its development [5].

Genetic factors have been investigated in multiple family studies and have been suggested to affect DR development in both type 1 and type 2 DM [6]. Other studies have proposed that several biochemical pathways, such as oxidative stress and protein kinase activation, link hyperglycemia to microvascular complications. These pathological processes affect the disease course through their effects on signaling, cellular metabolism, and growth factors [7,8,9]. To understand DR pathology, it is important to view it in the context of the shared pathophysiologic processes that also damage the pancreatic beta-cell. The same processes may cause cell and tissue damage, leading to organ dysfunction. Understanding this common pathophysiology is key to providing a broad range of treatment options for this common and critical complication [10].

It is also vital to develop more precise and timely methods for detecting the early stages of the disease and predicting its course, because such methods can reveal changes in retinal structure even before any signs or symptoms appear clinically [10]. With recent advances in imaging, clinicians have come to use retinal imaging as a major component of DR diagnosis and grading. The modalities used in DR diagnosis and screening include fluorescein angiography (FA), optical coherence tomography (OCT), fundus photography (FP), and OCT angiography (OCTA) [11]. These techniques provide large numbers of detailed, high-resolution retinal images that allow small changes to be detected. However, this abundance of images is hard to analyze manually in clinical practice. Moreover, retinal disease data are affected by age, so imaging data may change as life expectancy rises.

Many earlier reviews have extensively studied the components of comprehensive retinal assessment, their correlation with DR levels, and DR management [12,13,14,15]. The current review discusses the classification of DR severity and the role of the different imaging modalities in DR management.

2. Clinical Staging of Diabetic Retinopathy Using Retinal Imaging

Evaluating DR involves a large amount of information, which underscores the need for a structured, well-studied framework that standardizes terminology and data sharing among the healthcare providers involved in managing diabetic patients. Therefore, simplified clinical disease severity scales were developed. The Early Treatment DR Study (ETDRS) and the WESDR are now the cornerstones of the clinical classification systems used internationally [16,17]. Both classifications address DR and diabetic macular edema (DME), focusing on the risk of progression and associating each stage with a certain level of risk. This assessment has proven very helpful for risk stratification and for staging DR findings in various clinical settings, and it has led to evidence-based clinical recommendations for DR management.

The ETDRS guidelines are currently considered the gold standard for staging DR. ETDRS uses data from color FP, intravenous fluorescein angiography (IVFA), and dilated fundus examination, and stratifies disease severity using quadrant analysis. Grading criteria comprise multiple findings, including cotton wool spots, exudates, microaneurysms, neovascularization, and retinal hemorrhages [18,19,20]. However, the complexity of the severity scale makes it impractical in clinical settings, so most physicians do not use the full ETDRS severity scale [21]. Various other grading systems are commonly employed instead, including the International Clinical DR (ICDR) scale, which is recognized in both clinical and CAD settings. The ICDR scale has four severity levels for DME and five for DR, and it requires a smaller field of view for DR. We describe the ICDR levels below; they are also summarized in Table 1. Figure 1 and Figure 2 compare the normal retina with four DR severity levels (mild NPDR, moderate NPDR, severe NPDR, and PDR) on FP and FA images.

Table 1.

Characteristics of the DR stages.

| Stage | Characteristic |
|---|---|
| Normal | No retinal disease. |
| Mild NPDR | Microaneurysms (small balloon-like swellings in the walls of the retinal blood vessels) appear; fluid leaking from them can cause the macula to swell. |
| Moderate NPDR | Retinal blood vessels become blocked as their swelling increases, depriving the retina of nourishment. |
| Severe NPDR | Larger areas of retinal blood vessels are blocked, signaling the body to grow new blood vessels in the retina. |
| PDR | Abnormal new blood vessels grow in the retina; because they are fragile, they often leak fluid, causing a reduced field of vision, blurring, or blindness. |

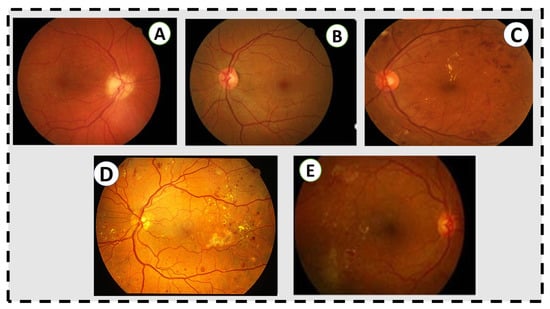

Figure 1.

Grading in the fundus image. (A) No retinal disease. (B) Mild NPDR. (C) Moderate NPDR. There are some microaneurysms, dot and blot hemorrhages in the temporal macula, and a few flecks of lipid exudate, but no venous beading or other microvascular abnormalities. (D) Severe NPDR. There are abundant microaneurysms, dot and blot hemorrhages, extensive lipid exudates, and intraretinal microvascular abnormalities. (E) Active proliferative diabetic retinopathy, untreated, with neovascularization at the arcades and intraretinal lipid exudates and hemorrhages in the temporal macula.

IVFA is used for the primary assessment of the retinal vasculature. It makes it easy to identify various vascular abnormalities such as neovascularization, capillary nonperfusion, and disruption of the blood–retinal barrier [22,23,24]. However, histology remains superior to IVFA for examining low capillary density values and retinal capillary networks [25]. Moreover, IVFA has several limitations: it is time-consuming and invasive, it occasionally causes nausea, pruritus, and even anaphylaxis, and its resolution is limited [26,27,28].

Under the international classification, eyes with DR are assigned to severity stages: non-proliferative (NPDR) and proliferative (PDR), each with or without macular edema [5]. Multiple classification systems exist; however, the most clinically important are those that assess the risk of disease progression and vision loss due to DR. Retinopathy is classified into five grades according to the risk of progression, and eyes with severe NPDR are considered at high risk of developing PDR. A simpler clinical approach to assessing DR severity has been developed to avoid the complexity of the ETDRS scale [29].

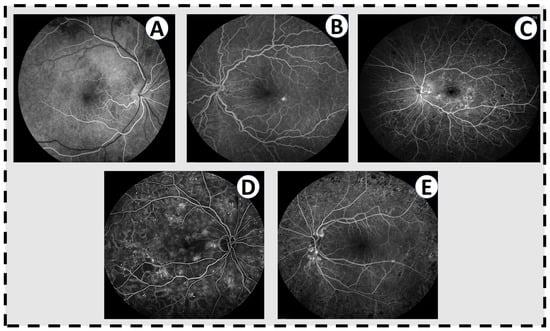

Figure 2.

Fluorescein angiograms for varying levels of DR. (A) A normal angiogram in the early phase, where the arteries have filled with fluorescein dye but the veins have not. No pathology is seen in this eye. (B) Mild nonproliferative DR with a few scattered microaneurysms and a single pinpoint area of leakage inferotemporal to the fovea. (C) Moderate nonproliferative DR with multiple microaneurysms throughout the fundus, significant leakage of dye throughout the macula, and blockage in the periphery from intraretinal hemorrhages. (D) Severe nonproliferative DR with abundant microaneurysms and dark areas on the angiogram corresponding to capillary non-perfusion. (E) Active proliferative DR with leakage from neovascularization at the optic disc. The retinopathy has been treated with laser (dark spots in the periphery), but some neovascular activity remains.

A review of the ETDRS data proposed the 4:2:1 rule as the main simplified method for classifying severe NPDR. Under this rule, an eye has severe NPDR if it shows any of the following: extensive intraretinal hemorrhages (approximately 20 per quadrant) in all four quadrants, definite venous beading (VB) in two or more quadrants, or definite intraretinal microvascular abnormalities (IRMA) in at least one quadrant. Around 17% of eyes with NPDR will develop high-risk proliferative disease within one year, and 44% within 3 years. Because IRMA and VB are difficult to recognize, easier-to-recognize surrogate markers such as microaneurysms and retinal hemorrhages have been evaluated as markers of severe NPDR. However, reevaluation of data from the WESDR showed that IRMA and VB remain more reliable predictors of progression to PDR. Microaneurysms and retinal hemorrhages lack concordance with these findings; therefore, they are not sufficient on their own to predict the risk of progression. Moreover, although hemorrhages alone were strongly related to the risk of developing IRMA or VB, they were weaker predictors of progression to PDR.

Hard or soft exudates do not indicate the presence of VB or IRMA. Therefore, specific identification of IRMA and VB is crucial, and discrimination between moderate and severe NPDR should not rely on exudates or hemorrhages/microaneurysms (H/MA) alone. For the same reason, a description of this clinical disease severity scheme should include photographs of IRMA and VB. Moderate NPDR comprises findings that go beyond microaneurysms alone but do not meet the 4:2:1 rule.
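The 4:2:1 rule is, in effect, a small decision procedure over per-quadrant findings. The following Python sketch encodes it as described above, assuming a hypothetical input format (`QuadrantFindings`) with one entry per quadrant. Reading "approximately 20" hemorrhages per quadrant as a count strictly above a configurable threshold of 20 is our assumption, not a clinical specification.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class QuadrantFindings:
    """Hypothetical per-eye findings, one entry per retinal quadrant (four in total)."""
    hemorrhage_counts: Sequence[int]  # intraretinal hemorrhages counted in each quadrant
    venous_beading: Sequence[bool]    # definite venous beading (VB) in each quadrant
    irma: Sequence[bool]              # definite IRMA in each quadrant


def meets_4_2_1_rule(f: QuadrantFindings, hemorrhage_threshold: int = 20) -> bool:
    """Return True if any arm of the 4:2:1 rule for severe NPDR is met."""
    if not (len(f.hemorrhage_counts) == len(f.venous_beading) == len(f.irma) == 4):
        raise ValueError("expected findings for exactly four quadrants")
    # "4": extensive hemorrhages in all four quadrants
    four = all(c > hemorrhage_threshold for c in f.hemorrhage_counts)
    # "2": definite venous beading in two or more quadrants
    two = sum(bool(v) for v in f.venous_beading) >= 2
    # "1": definite IRMA in at least one quadrant
    one = any(f.irma)
    return four or two or one


if __name__ == "__main__":
    eye = QuadrantFindings([5, 3, 25, 8], [True, True, False, False], [False] * 4)
    print(meets_4_2_1_rule(eye))  # prints True: VB in two quadrants meets the "2" arm
```

Because the three arms are joined by a logical OR, an eye is graded as severe NPDR as soon as any single arm is satisfied, which is why reliable identification of VB and IRMA matters so much.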

The ICDR clinical disease severity scale is meant to be a practical and reliable method of grading the severity of DR and DME, intended to allow all healthcare providers dealing with DR to grade the disease. However, its implementation depends mainly on examiner skill and the available equipment. The more precise and appropriate the grading, the more effective the case management and the more timely the referrals to highly specialized treatment centers. Note that this system is intended for grading, not for guiding treatment. A staging system could be incorporated into treatment guidelines and protocols, as was done with the ETDRS and the DR Study; however, differing healthcare delivery systems and practice patterns may lead to different management recommendations. Although the staging system mainly targets experienced ophthalmologists and skilled healthcare teams, it is hoped that it will provide common knowledge that allows consistent communication among all healthcare providers caring for patients with DR. Its success therefore relies on wide exposure among ophthalmologists, other retinal care providers, and related specialties such as primary care physicians, endocrinologists, podiatrists, and diabetologists. All healthcare providers who treat patients with diabetes should be familiar with these scales, because common standards and a common evaluation structure would promote similar DR care across providers. Nonetheless, the usefulness and practicality of the system should be reviewed continuously so that any changes needed in particular cases or settings can be adopted.

In April 2002, the Global DR Group developed a new DR severity scale to avoid the disadvantages of the ETDRS severity scale. The ETDRS scale was neither easy to use nor practical, because it has more levels than clinical evaluation requires; as a result, assessment becomes more complicated and demands a high level of skill and experience. In addition, such complex data require a standard means of sharing information and a common terminology, which were not available [30,31,32]. The new disease severity scale consists of five levels, arranged in increasing order of severity and risk of DR progression. The first level is no apparent retinopathy. The second level is mild NPDR, which corresponds to ETDRS level 20 (microaneurysms only). A low risk of progression is expected for the first and second levels.

The third level, moderate NPDR, encompasses a range of intermediate ETDRS levels and carries a significant risk of disease progression. The fourth level, severe NPDR, carries the highest risk of progression to PDR. The fifth level is PDR, which indicates high risk with a significant rate of progression; all eyes at this level have vitreous hemorrhage or neovascularization. Differentiating diseased eyes with and without DME is a critical initial step. DME is an important structural complication of DR and the most common cause of blindness in DR. However, it has not traditionally been used to grade the overall level of retinopathy, because it can occur at any level of retinopathy. Nonetheless, clinicians must identify DME because of its effects on vision and because it can be treated effectively. A two-step assessment helps reduce variation arising from differences in examiner training and equipment availability. In the first step, the examiner evaluates whether there is lipid or apparent retinal thickening in the posterior pole. In the second step, the distance of the retinal thickening and lipid from the fovea is documented. Eyes with foveal involvement are considered to have severe DME; otherwise, eyes are classified by the distance between the lesion and the center of the macula: lesions distant from the macula indicate mild DME, and lesions approaching the fovea indicate moderate DME. This severity scale supports appropriate management through proper grading of severity and therefore leads to more consistent referrals to highly specialized treatment centers. The characteristics of the DR stages are summarized in Table 1.
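To make the ordering of the five ICDR levels and the DME grading steps concrete, the sketch below maps boolean findings to a grade. The function and parameter names are hypothetical, the findings are assumed to come from an examiner or an upstream lesion detector, and the 4:2:1 test is passed in as a precomputed flag. It illustrates the logic of the scale rather than serving as a clinical tool.

```python
from enum import IntEnum


class DRLevel(IntEnum):
    NO_APPARENT_RETINOPATHY = 1
    MILD_NPDR = 2
    MODERATE_NPDR = 3
    SEVERE_NPDR = 4
    PDR = 5


def icdr_dr_level(microaneurysms: bool, other_npdr_lesions: bool,
                  meets_4_2_1: bool, neovascularization: bool,
                  vitreous_hemorrhage: bool) -> DRLevel:
    """Assign one ICDR DR level; the most severe criterion is checked first."""
    if neovascularization or vitreous_hemorrhage:
        return DRLevel.PDR
    if meets_4_2_1:
        return DRLevel.SEVERE_NPDR
    if other_npdr_lesions:  # more than microaneurysms only
        return DRLevel.MODERATE_NPDR
    if microaneurysms:
        return DRLevel.MILD_NPDR
    return DRLevel.NO_APPARENT_RETINOPATHY


def icdr_dme_grade(thickening_or_lipid_in_posterior_pole: bool,
                   involves_fovea: bool, approaches_fovea: bool) -> str:
    """Two-step DME grading: presence first, then distance from the fovea."""
    if not thickening_or_lipid_in_posterior_pole:
        return "DME absent"
    if involves_fovea:
        return "severe DME"
    if approaches_fovea:
        return "moderate DME"
    return "mild DME"


if __name__ == "__main__":
    level = icdr_dr_level(True, True, False, False, False)
    print(level.name, icdr_dme_grade(True, False, True))
```

Checking the most severe criterion first guarantees that each eye receives exactly one level, and reporting DR and DME separately reflects the point above that DME can occur at any level of retinopathy.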

3. Imaging Modalities for Diabetic Retinopathy

Multiple imaging techniques are widely used in ophthalmic evaluation, and FA, OCT, OCTA, and FP imaging have recently been widely adopted by ophthalmologists. However, the more data become available, the harder they are to analyze manually; therefore, automated systems have been developed to analyze the large volume of data. Direct and indirect ophthalmoscopy have been used to evaluate DR, as have color FP, single-field photography, and FA. In the following subsections, we give an overview of each modality used in DR diagnosis and grading.

3.1. Fluorescein Angiography (FA)

FA has historically been an important imaging modality for the assessment of DR, and still remains so to this day. It was the gold standard for evaluating capillary non-perfusion, ischemia, and neovascularization (NV) in the retina. It is particularly sensitive for this last feature, identifying areas of NV that are not identified in clinical examinations. However, this modality is invasive, time-consuming, and unwieldy. It involves placing an intravenous line in a patient, infusing fluorescein, and taking multiple photos of the patient over 10–15 min. Patient cooperation must be high in order to take useful images, something that can be challenging in patients with multiple co-morbidities. Figure 2 shows FA images for varying levels of DR against healthy retina.

3.2. Optical Coherence Tomography (OCT)

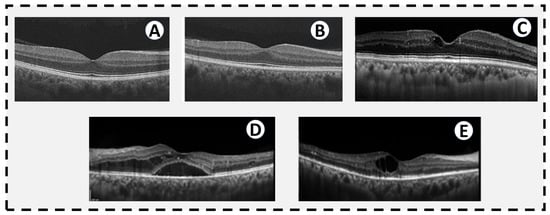

OCT is one of the most common imaging modalities used to evaluate DR. It uses a pair of near-infrared light beams to image the retina: the light reflected from the retina depends mainly on the thickness and reflectivity of the retinal structures and is recorded by the measuring system [33]. OCT provides cross-sectional images of the retina that allow quantitative measurement of retinal thickness, which is crucial for evaluating DME [34]. Figure 3 shows OCT images of different DR grades compared with a healthy retina.
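Because OCT thickness measurement reduces to the distance between two segmented boundaries, it can be sketched in a few lines of NumPy. The example below assumes boundary depth maps produced by some upstream layer segmentation, a hypothetical axial pixel size, and an isotropic en-face grid (real OCT volumes usually have different spacings between and within B-scans). It then averages thickness inside a 1 mm diameter central disc, mirroring the central subfield of the ETDRS grid commonly used for OCT thickness maps.

```python
import numpy as np


def thickness_map_um(top_boundary_px, bottom_boundary_px, axial_um_per_px):
    """Retinal thickness (um) at every A-scan from two segmented boundary surfaces.

    Inputs have shape (n_bscans, n_ascans) and hold the depth index of each
    boundary, e.g. the ILM on top and a hypothetical outer-retina boundary below.
    """
    top = np.asarray(top_boundary_px, dtype=float)
    bottom = np.asarray(bottom_boundary_px, dtype=float)
    if np.any(bottom < top):
        raise ValueError("bottom boundary must lie below the top boundary")
    return (bottom - top) * axial_um_per_px


def central_mean_thickness_um(thickness_um, lateral_mm_per_px, center, radius_mm=0.5):
    """Mean thickness inside a disc of radius_mm around center=(row, col).

    Assumes the same lateral spacing along both en-face axes.
    """
    thickness_um = np.asarray(thickness_um, dtype=float)
    rows, cols = np.indices(thickness_um.shape)
    dist_mm = np.hypot(rows - center[0], cols - center[1]) * lateral_mm_per_px
    return float(thickness_um[dist_mm <= radius_mm].mean())


if __name__ == "__main__":
    ilm = np.full((31, 31), 40.0)     # toy boundary depths, in pixels
    outer = np.full((31, 31), 110.0)
    thick = thickness_map_um(ilm, outer, axial_um_per_px=3.9)  # hypothetical pixel size
    print(central_mean_thickness_um(thick, lateral_mm_per_px=0.05, center=(15, 15)))
```

In a real pipeline the fovea would first be located to set `center`, and the B-scan and A-scan spacings would be handled separately.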

3.3. Optical Coherence Tomography Angiography (OCTA)

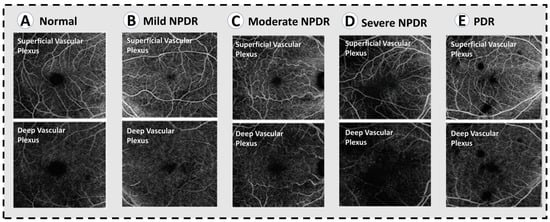

Besides OCT, OCTA offers another relatively fast and noninvasive way to evaluate DR. Figure 4 shows OCT angiograms across varying severities of DR compared with a healthy retina. OCTA also allows the foveal microvasculature to be examined (Figure 5) [35,36,37,38,39,40,41]. OCTA applies a motion-contrast method to a sequence of repeated OCT scans, producing perfusion maps without the injection of an extrinsic dye. It also enables the measurement of vascular metrics, which have been shown to agree closely with histology [42,43] and to correlate with other in vivo imaging modalities [38,39,44,45]. To detect and evaluate DR, ophthalmologists use the superficial vascular plexus (SVP) and the deep vascular plexus (DVP) of OCTA, defined as the regions between the internal limiting membrane and the inner border of the inner nuclear layer, and between the inner border of the inner nuclear layer and the outer border of the outer plexiform layer, respectively, as shown in Figure 6. One important finding in DR is enlargement of the foveal avascular zone (FAZ), and multiple studies have investigated methods of measuring it. Capillary loss in this region has been linked to substantial visual impairment [46,47]. FAZ enlargement is also seen in sickle cell retinopathy and branch retinal vein occlusion [47,48,49,50,51,52]. Therefore, assessing the FAZ is essential for determining macular perfusion and the degree of retinal damage. FAZ dimensions measured with OCTA and IVFA have been found to differ [49,50,51], and many recent studies have evaluated the role of OCTA in measuring FAZ dimensions [36,37,38,39,41]. Measuring FAZ dimensions accurately requires an additional step: the axial length must be measured to correct for differences in retinal magnification between eyes [53,54].
This step is important for accurate calculations, but axial length data are not always available. Several OCTA publications have therefore reported metrics in healthy retinas and DR without axial length correction. These studies calculated the acircularity index, a metric previously used with adaptive optics imaging to quantify irregularities of the FAZ, as well as the FAZ axis ratio [55]; being dimensionless ratios, both metrics are unaffected by uniform magnification. Such methods may serve as biomarkers for vascular changes before funduscopically visible DR develops and may help determine DR severity.
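The FAZ metrics discussed above can be sketched as follows, assuming the FAZ boundary is available as a closed polygon in millimetres. The acircularity index is the FAZ perimeter divided by the perimeter of a circle of equal area, and the axis ratio is approximated here from the principal axes of the boundary points (exact for an ellipse sampled uniformly in its angle parameter). The magnification correction uses Bennett's formula, q = 0.01306 (AL − 1.82), expressed as a ratio to the axial length the scanner assumes; the default reference axial length of 24.0 mm is a placeholder, not a value taken from any specific device.

```python
import numpy as np

# Constant from Bennett's formula q = 0.01306 * (AL - 1.82), with AL in mm.
BENNETT_OFFSET_MM = 1.82


def magnification_ratio(axial_length_mm, device_reference_al_mm=24.0):
    """True-to-reported lateral size ratio under Bennett's formula.

    device_reference_al_mm is a placeholder for the axial length the scanner
    assumes; check the device documentation for the real value.
    """
    return (axial_length_mm - BENNETT_OFFSET_MM) / (device_reference_al_mm - BENNETT_OFFSET_MM)


def corrected_faz_area(measured_area_mm2, axial_length_mm, device_reference_al_mm=24.0):
    # Areas scale with the square of the lateral magnification ratio.
    r = magnification_ratio(axial_length_mm, device_reference_al_mm)
    return measured_area_mm2 * r ** 2


def polygon_area_perimeter(xy):
    """Shoelace area and perimeter of a closed polygon given as (N, 2) vertices."""
    xy = np.asarray(xy, dtype=float)
    x, y = xy[:, 0], xy[:, 1]
    x_next, y_next = np.roll(x, -1), np.roll(y, -1)
    area = 0.5 * abs(np.dot(x, y_next) - np.dot(y, x_next))
    perimeter = float(np.sum(np.hypot(x_next - x, y_next - y)))
    return float(area), perimeter


def acircularity_index(xy):
    """FAZ perimeter over the perimeter of a circle with the same area (1.0 = circle)."""
    area, perimeter = polygon_area_perimeter(xy)
    return perimeter / (2.0 * np.sqrt(np.pi * area))


def axis_ratio(xy):
    """Major/minor axis ratio from the principal axes of the boundary points (approximation)."""
    xy = np.asarray(xy, dtype=float)
    centered = xy - xy.mean(axis=0)
    eigvals = np.linalg.eigvalsh(np.cov(centered.T))  # ascending order
    return float(np.sqrt(eigvals[-1] / eigvals[0]))


if __name__ == "__main__":
    t = np.linspace(0.0, 2.0 * np.pi, 400, endpoint=False)
    faz = np.column_stack([0.4 * np.cos(t), 0.2 * np.sin(t)])  # toy FAZ outline, mm
    print(acircularity_index(faz), axis_ratio(faz))
    print(corrected_faz_area(0.25, axial_length_mm=25.5))
```

Rescaling the polygon leaves both shape metrics unchanged, which is why they can be reported without axial length correction, whereas absolute FAZ areas cannot.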

Figure 3.

OCTs of different levels of diabetic retinopathy. (A) A normal OCT in a patient without diabetes. (B) OCT from a diabetic patient without DR by traditional historical criteria, but subtle changes in thickness and reflectivity of some of the retinal layers. (C) Mild NPDR with a small cystic space at the fovea. (D) Severe NPDR with diffuse diabetic macular edema extending into the subretinal space. (E) PDR with a large central cystic space and intraretinal hyperreflective spots temporally indicative of intraretinal lipid exudates. There is mild thinning of the temporal inner retina consistent with ischemia seen in PDR.

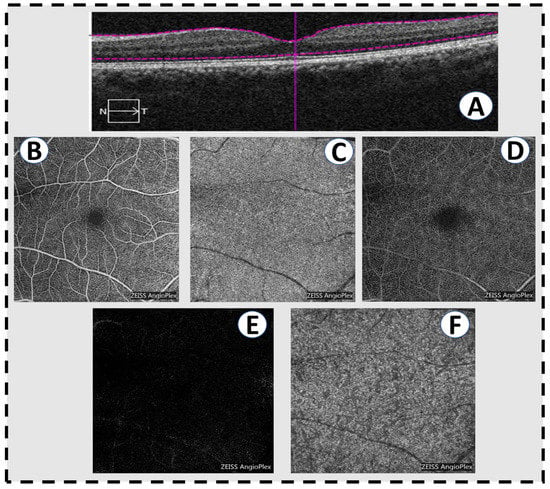

Figure 4.

OCT angiograms across varying severities of diabetic retinopathy. (A) Normal OCTA. (B) Mild NPDR, showing mild loss of vessel density. (C) Moderate NPDR with lower vessel caliber and further loss of vessel density. (D) Severe NPDR, showing significant areas of capillary non-perfusion in the superficial and deep plexuses, as well as microaneurysms. (E) PDR, showing vascular changes similar to those of severe NPDR.

Figure 5.

Optical coherence tomography angiography scans in a normal patient. (A) A horizontal slice of a conventional OCT scan showing normal macular anatomy. (B) The superficial vascular plexus of the inner retina. (C) The choriocapillaris. (D) The deep vascular plexus of the middle retina. (E) The outer retina is avascular, hence the absence of retinal vessels in a normal eye. (F) The deeper choroid.

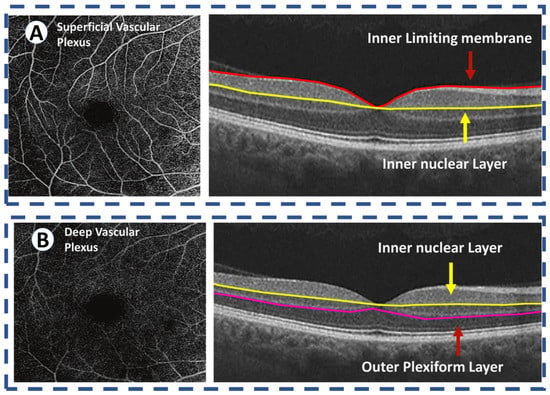

Figure 6.

OCT angiography of the normal retina images the two vascular plexuses. (A) The superficial vascular plexus supplies the inner retina, defined as between the internal limiting membrane and the inner border of the inner nuclear layer. (B) The deep vascular plexus supplies the middle retina, defined as between the inner border of the inner nuclear layer and the outer border of the outer plexiform layer. The outer retina is avascular and receives its blood supply from the choriocapillaris.

3.4. Color Fundus Photography

Color FP uses seven standard fields (SSFs) to grade DR severity [20]. SSF photography is reliable and accurate but is considered impractical, because it is labor-intensive, requires specialized photographers and photograph interpreters, and needs highly complex photographic equipment. Although ophthalmoscopy is the most commonly used approach for monitoring DR, it requires specialized eye care providers to achieve high sensitivity; SSF color photography is therefore generally considered more sensitive [56,57]. Multiple systems have been developed to analyze large volumes of the available data, although they may still be impractical for DR screening because they are sophisticated and require highly skilled eye care providers and imaging technicians. One such system is the Joslin Vision Network [58]. Strong agreement was found between three-field and digital-video color fundus photographs in determining DR severity and the rate of referral to highly specialized ophthalmologists for further clinical evaluation. In addition, Joslin Vision Network imaging results closely matched eye examinations by retina specialists [59]. The Inoveon DR system recorded SSF color photographs both on 35 mm film and on proprietary systems [60], with high sensitivity and specificity. Although this system is highly accurate in DR referral decisions, it requires pupillary dilation and is expensive, so it is not commonly used for screening. The DigiScope is a semiautomated system that evaluates visual acuity, acquires fundus images, and transmits the data over telephone lines to a remote reading center [61]. Recent studies of this system are promising; however, more studies are needed to evaluate its usefulness and accuracy. Diabetic patients have also been evaluated with single-field FP.
In one study [62], single-field digital monochromatic nonmydriatic photography (SNMDP) through non-pharmacologically dilated pupils was compared with ophthalmoscopy performed by an ophthalmologist through pharmacologically dilated pupils, and color stereoscopic photographs were then obtained in SSFs. SNMDP and SSFs showed excellent agreement regarding the degree of DR and the rate of referral. Compared with SSFs, SNMDP had a sensitivity of 78% and a specificity of 86%, and it outperformed ophthalmoscopy through pharmacologically dilated pupils. Compared with direct ophthalmoscopy, SNMDP had a sensitivity of 100% and a specificity of 71%, and it identified all patients whom ophthalmoscopy flagged for referral. In multiple studies, SNMDP has been shown to be superior to dilated ophthalmoscopy [63,64]. Single-field photography cannot substitute for a comprehensive ophthalmic examination. However, many well-designed comparative studies suggest that single-field FP can serve as an initial evaluation of DR, identifying patients with retinopathy for referral to ophthalmic assessment and management.

Single-field FP is effective and practical because it is easy to use, convenient, and affordable, and it can detect retinopathy from only one image. Most importantly, manual evaluation of retinal imaging biomarkers is impractical, even in a dedicated ophthalmic reading center, because the sheer volume of imaging data exceeds the capacity of human readers. The future of ophthalmology therefore involves the automated evaluation of image data, as reflected in the large number of studies on automated segmentation of OCT [65,66,67,68] and automated detection of DR signs in color FP [66,69,70,71]. Many recent studies have also proposed computational methods using deep learning (DL) and artificial intelligence (AI) [65,70,72,73,74], which represent the future of medicine for these diseases [75].
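The sensitivity and specificity figures quoted for SNMDP follow from a 2×2 comparison of an index test against a reference standard (for example, SSF photography or ophthalmoscopy). A minimal NumPy sketch is given below; the function name and the toy labels in the usage lines are hypothetical and serve only to show the arithmetic.

```python
import numpy as np


def sensitivity_specificity(reference, index_test):
    """Sensitivity and specificity of a binary index test against a reference standard.

    Both inputs are 0/1 (or bool) sequences; 1 means "retinopathy present / refer".
    """
    ref = np.asarray(reference, dtype=bool)
    test = np.asarray(index_test, dtype=bool)
    tp = np.sum(ref & test)    # diseased and flagged
    fn = np.sum(ref & ~test)   # diseased but missed
    tn = np.sum(~ref & ~test)  # healthy and cleared
    fp = np.sum(~ref & test)   # healthy but flagged
    return float(tp / (tp + fn)), float(tn / (tn + fp))


if __name__ == "__main__":
    reference = [1] * 10 + [0] * 10                   # toy reference-standard labels
    index_test = [1] * 8 + [0] * 2 + [0] * 7 + [1] * 3
    print(sensitivity_specificity(reference, index_test))
```

A sensitivity of 100% against direct ophthalmoscopy, as reported above, means that no patient flagged by the reference was missed (zero false negatives), regardless of how many false positives lowered specificity.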

4. Literature on CAD Systems for DR Diagnosis and Grading

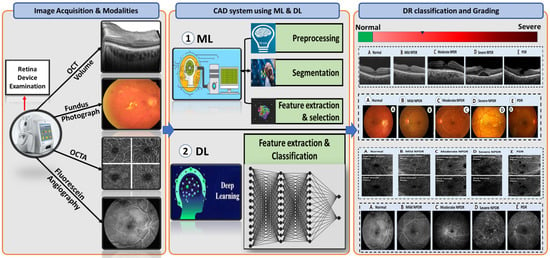

We provide an in-depth analysis of research and developments in identifying and diagnosing DR, comparing the different imaging modalities. Numerous tools and databases have been developed for DR. Evaluating work that uses images as data includes reviewing the image modalities used in CAD research and applications, and our objective is to present research on DR across these modalities. Figure 7 gives an overview of the flow of a generic CAD system for DR classification. Typically, it starts with image acquisition from retinal examination devices in one or more modalities. The CAD system then either segments DR-related lesions and extracts features using ML-based methods, or applies the convolutional layers of a deep convolutional neural network (DCNN) followed by a softmax layer to extract features and classify DR. Finally, based on the output of these DL/ML approaches, the system assigns one of the following grades: healthy retina, NPDR (optionally subdivided into mild, moderate, and severe NPDR), or PDR.

Figure 7.

A flow diagram for a generic computer-assisted diagnostic (CAD) system for DR classification. Typically, it starts with image acquisition from possibly different retinal modalities (FP, OCT, and OCTA) (left panel). It then applies preprocessing and segmentation techniques to these modalities, along with ML methods and DL approaches (middle panel). Finally, the system makes a decision and diagnoses or grades DR based on the features extracted from the different retinal modalities (right panel).
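The final decision step in Figure 7, where DCNN scores are turned into a grade by a softmax layer, can be illustrated with NumPy. The five-grade label order below is a hypothetical convention; a real system's output head defines its own classes and order.

```python
import numpy as np

# Hypothetical label order for a five-class grading head.
GRADES = ("healthy", "mild NPDR", "moderate NPDR", "severe NPDR", "PDR")


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    z = np.asarray(logits, dtype=float)
    z = z - z.max(axis=-1, keepdims=True)  # subtracting the max avoids overflow in exp
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def decide_grade(logits):
    """Map one image's classifier scores to a grade and its softmax probability."""
    p = softmax(logits)
    k = int(np.argmax(p))
    return GRADES[k], float(p[k])


if __name__ == "__main__":
    print(decide_grade([0.1, 0.2, 3.0, 0.5, -1.0]))
```

Returning the probability alongside the grade lets a screening system route low-confidence images to a human grader instead of reporting a hard decision.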

We searched two publicly available databases, PubMed and IEEE Xplore, for this review; they were chosen for their accessibility, quality, and availability. We considered all relevant journal articles and conference papers published up to February 2022. Articles published in the last eight years (2015 to 2022) show that AI-assisted DR diagnosis has progressed significantly.

Ocular imaging has advanced significantly over the past century and has become vital to managing ocular disease and patients in ophthalmology. Substantial CAD research and development based on radiological and other medical images has been conducted since the early 1980s. In this review, we summarize the literature on early DR detection techniques that combine image processing, ML, and DL approaches, as listed in Table 2.

Table 2.

Recent studies for early detection and grading of DR based on a combination of image processing, ML, and DL approaches.

4.1. CAD System Based on Machine Learning Techniques

This review covers several ML algorithms used to diagnose and grade DR. Some of them rely on extracting hand-crafted features from an imaging modality (e.g., texture and shape features [138,139,140,141,142,143,144,145]) and on feature reduction techniques (e.g., linear discriminant analysis (LDA)-based feature selection, minimum redundancy maximum relevance (MRMR) feature selection [146], and principal component analysis (PCA) [147]). Others are used to classify and predict the disease, e.g., k-nearest neighbor (KNN), support vector machine (SVM), logistic regression (LR), gradient boosting trees (XGBoost), artificial neural network (ANN), random forest (RF), and decision trees (DT). These ML methods can use different eye examination imaging techniques (i.e., FA, FP, OCT, and OCTA) to detect and grade DR. For example, Liu et al. [126] developed a CAD system that differentiates between healthy eyes and DR using OCTA images. They applied the discrete wavelet transform to SVP, DVP, and retinal vascular network (RVN) images to extract texture features, then fit four classification models, namely LR, LR with the elastic net penalty (LR_En), SVM, and XGBoost, achieving 82% accuracy and an AUC of 83%. Other ML-based CAD systems use FP images to detect or grade DR [76,78,80,81,82,83,84,88]; most of them feed features extracted from FP images into different ML classifiers.
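Liu et al. [126] extracted wavelet texture features from OCTA plexus images before classification. The sketch below shows one common way to do this, using an orthonormal Haar wavelet implemented directly in NumPy and the mean energy of each detail sub-band as a feature. The wavelet family, number of levels, and energy features are our assumptions, since the exact configuration is not given here; the resulting feature vectors would then be fed to a classifier such as LR or SVM.

```python
import numpy as np


def haar_dwt2(img):
    """One level of the orthonormal 2D Haar wavelet transform.

    Returns (LL, LH, HL, HH) sub-bands; odd image sides are cropped to even length.
    """
    a = np.asarray(img, dtype=float)
    a = a[: a.shape[0] // 2 * 2, : a.shape[1] // 2 * 2]
    s = np.sqrt(2.0)
    # Transform along each row (pairs of adjacent columns).
    lo = (a[:, 0::2] + a[:, 1::2]) / s
    hi = (a[:, 0::2] - a[:, 1::2]) / s
    # Then along each column (pairs of adjacent rows).
    ll = (lo[0::2] + lo[1::2]) / s
    lh = (lo[0::2] - lo[1::2]) / s
    hl = (hi[0::2] + hi[1::2]) / s
    hh = (hi[0::2] - hi[1::2]) / s
    return ll, lh, hl, hh


def wavelet_texture_features(img, levels=2):
    """Mean energy of each detail sub-band at each level (3 * levels features)."""
    feats = []
    ll = np.asarray(img, dtype=float)
    for _ in range(levels):
        ll, lh, hl, hh = haar_dwt2(ll)  # recurse on the approximation band
        feats.extend(float(np.mean(b ** 2)) for b in (lh, hl, hh))
    return np.array(feats)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    plexus_image = rng.random((64, 64))  # stand-in for an en-face SVP or DVP image
    print(wavelet_texture_features(plexus_image, levels=3))
```

Because the transform is orthonormal, the sub-band energies partition the image energy, so a smooth, well-perfused region and a fragmented capillary pattern produce noticeably different detail-band features.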

Several other ML-based methods have been designed to diagnose and grade DR from OCT and OCTA images [92,127,133,148,149,150]. Eltanboly et al. [92] designed a CAD method for DR diagnosis using OCT images. Their system first segments twelve retinal layers from OCT images using an adaptive shape model based on a Markov–Gibbs random field (MGRF) [151], then extracts morphological and texture features from the segmented layers, and finally applies an autoencoder classifier to classify the OCT images as normal or DR. Another study [133] introduced a CAD system that differentiates DR cases from healthy retinas based on 3D-OCT images. This system segments the OCT volume into twelve layers and extracts novel texture features (i.e., MGRF-based features) from the segmented layers. Lastly, an NN is applied with a majority-voting scheme to obtain the final DR diagnosis. Sandhu et al. [118] introduced a CAD system that uses OCT and OCTA images in addition to clinical information to diagnose DR. This system extracts morphological and texture features from the OCT and OCTA images and integrates them with the clinical information; these features are then fed into a random forest classifier to obtain the final diagnosis. The researchers in [152] presented an automated method to analyze OCT volumes for DR diagnosis. They first filter the images using specialized masks and, after registration, create anatomically identical ROIs across different OCT images. In a subsequent step, stochastic gradient descent optimization is used to compute efficient B-spline transformations. In experiments on 105 subjects, the method was able to detect DR.
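One plausible reading of the majority-voting step in [133] is that the NN outputs a DR probability for each of the twelve segmented layers, and the subject-level diagnosis is the label most layers agree on. The Python sketch below implements that reading. The per-layer granularity, the 0.5 threshold, and breaking ties toward DR (a sensitivity-favoring choice for screening, needed because twelve layers can split evenly) are assumptions rather than details reported in [133], and `majority_vote` is a hypothetical helper.

```python
def majority_vote(layer_probs, threshold=0.5, tie_label="DR"):
    """Fuse per-layer DR probabilities into one subject-level label.

    Each layer casts a "DR" vote if its probability reaches `threshold`,
    otherwise a "normal" vote; the label with more votes wins, and an
    exact tie returns `tie_label`.
    """
    if len(layer_probs) == 0:
        raise ValueError("at least one layer prediction is required")
    dr_votes = sum(1 for p in layer_probs if p >= threshold)
    normal_votes = len(layer_probs) - dr_votes
    if dr_votes == normal_votes:
        return tie_label
    return "DR" if dr_votes > normal_votes else "normal"


# Hypothetical per-layer outputs for the twelve segmented retinal layers.
probs = [0.91, 0.84, 0.77, 0.62, 0.58, 0.49, 0.33, 0.71, 0.66, 0.12, 0.45, 0.53]
print(majority_vote(probs))  # DR (8 of 12 layers vote DR)
```

Voting over hard per-layer decisions keeps a single unreliable layer from dominating; averaging the probabilities instead would be an equally simple alternative.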

4.2. CAD System Based on Deep Learning Techniques

Most of the current literature uses state-of-the-art DL techniques to diagnose and grade DR from the different image modalities. DL has also shown promising results for segmenting DR lesions automatically, and it can be applied to OCT images to extract the retinal layers and some DR-related lesions. For example, Jancy et al. [153] recently detected hard exudates in different retinal image modalities using a DCNN. Holmberg et al. [154] used a pretrained DCNN (i.e., U-Net) to extract the retinal OCT layers and calculate layer thicknesses. Another system [155] used a multi-scale encoder–decoder neural network to segment the avascular zone area presented on OCTA images for DR diagnosis. Quellec et al. [94] introduced a DL approach based on ConvNets trained with backpropagation on two FP datasets to detect four DR lesions, namely, exudates, microaneurysms, hemorrhages, and cotton-wool spots. Sayres et al. [110] trained the Inception V4 model on a large FP dataset to grade DR into five grades (no DR, mild NPDR, moderate NPDR, severe NPDR, and PDR). Hua et al. [156] used a DL approach to identify the risk of DR progression from FP images. For that, they designed a DCNN called Tri-SDN, built on a pretrained ResNet50, and applied it to baseline and follow-up FP images. They then used ten-fold cross-validation to evaluate the model on features extracted from the FP images combined with numerical risk factors. Abramoff et al. [65] used a public FP dataset to diagnose DR. Their DL-based method performed well and could serve as a predictive tool to reduce the risk of vision loss.

Multiple other studies [93,94,95,96,98,100,103,104,106,109] used pretrained CNNs, such as GoogLeNet, AlexNet, ResNet50, ResNet101, ConvNets, and VGG19, to diagnose and grade DR from FP images. Other studies applied DL approaches to OCT and OCTA images [113,114,115,116,121,131]. These pretrained CNNs are treated as black boxes: the image of a given modality (i.e., FP, OCT, or OCTA) is the input to the network, the convolutional layers extract feature maps of different sizes, and a fully connected layer followed by a softmax layer acts as the classifier that detects or grades DR. Table 2 describes previous studies from 2015 to 2022 that used DL and ML methods for DR classification and grading.
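The black-box pipeline described above can be illustrated with a deliberately tiny, untrained network in plain NumPy: one convolutional layer with ReLU, global average pooling, and a fully connected layer followed by softmax over the five grades listed for Sayres et al. [110]. This sketch shows the general structure only and is not GoogLeNet, ResNet50, or any other cited architecture; those stack many such layers, are pretrained, and are normally loaded from a DL framework. The random weights, the single grayscale channel, and global average pooling (as in ResNet) are simplifying assumptions, and the helper names are hypothetical.

```python
import numpy as np

# Five-grade scale used by Sayres et al. [110].
GRADES = ["no DR", "mild NPDR", "moderate NPDR", "severe NPDR", "PDR"]


def conv2d_valid(image, kernels):
    """'Valid' 2D cross-correlation of one channel with K kernels.

    image: (H, W); kernels: (K, kh, kw) -> feature maps (K, H-kh+1, W-kw+1).
    """
    k, kh, kw = kernels.shape
    h, w = image.shape
    out = np.empty((k, h - kh + 1, w - kw + 1))
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            patch = image[i:i + kh, j:j + kw]
            # One dot product per kernel gives the K feature-map values here.
            out[:, i, j] = np.tensordot(kernels, patch, axes=([1, 2], [0, 1]))
    return out


def softmax(logits):
    shifted = logits - np.max(logits)  # subtract max for numerical stability
    e = np.exp(shifted)
    return e / e.sum()


def forward(image, kernels, fc_weights, fc_bias):
    """Convolution -> ReLU -> global average pooling -> fully connected -> softmax."""
    fmaps = np.maximum(conv2d_valid(image, kernels), 0.0)
    pooled = fmaps.mean(axis=(1, 2))  # one value per feature map, shape (K,)
    logits = fc_weights @ pooled + fc_bias  # shape (number of grades,)
    return softmax(logits)


rng = np.random.default_rng(0)
image = rng.random((32, 32))  # synthetic stand-in for a preprocessed grayscale FP image
kernels = rng.standard_normal((8, 3, 3))  # untrained, random filters
fc_weights = rng.standard_normal((len(GRADES), 8))
fc_bias = np.zeros(len(GRADES))
probs = forward(image, kernels, fc_weights, fc_bias)
print(GRADES[int(np.argmax(probs))], probs.round(3))
```

With trained weights, the predicted grade would be the index of the largest softmax probability; with the random weights used here, the output only demonstrates the shapes involved.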

5. Discussion and Future Directions

In this review, we have described, qualitatively and quantitatively, how ML/DL can be used for DR diagnosis and grading. It may be feasible to use a computer-aided automated DR assessment method in place of manual assessment, especially for rural and semi-urban populations without ready access to qualified ophthalmologists. To the best of our knowledge, this is the first review article that focuses on diagnosing and grading DR using different imaging modalities (i.e., FA, FP, OCT, and OCTA). We selected the reviewed articles from widely used databases (i.e., PubMed and IEEE Xplore). Our study revealed that DL methods are now more popular than ML approaches. In addition, we found that FP images are used more often than other imaging modalities, such as OCT and OCTA, for diagnosing and grading DR. This review brings together a variety of disciplines that converge in this field, such as ML, DL, computer vision, medical image analysis, and the different imaging modalities. The following subsections discuss future research areas, challenges, and research gaps, and offer suggested solutions that researchers can use to start addressing these gaps.

5.1. Future Research Areas and Challenges

Researchers have extensive opportunities to develop intelligent, automated early detection and grading systems for DR that could provide medical professionals with clinical decision support. Based on our in-depth review of existing works, we found several shortcomings that could be addressed to enhance existing CAD systems for early DR diagnosis and grading. First, retinal images may differ from one another in image dimensions, contrast, illumination, light incidence angle, etc., because of varying camera settings, yet training and testing are typically performed on a single dataset. To work with data from a variety of imaging machines and patient demographics, it is necessary to build robust models and validate them across datasets; one possibility is the use of neural style transfer models [157]. Second, training CNN models with supervised DL and more complex architectures requires thousands of correctly labeled FP, OCT, OCTA, and FA images with annotations at both the pixel and image levels. Obtaining such images is expensive and requires the assistance of specialists. To address this issue, researchers can use semi-supervised learning approaches, such as those based on generative adversarial networks (GANs), which can learn from limited labeled data. Third, most FP images used in existing publications are captured with expensive fundus cameras. Portable, low-cost technologies that can capture FP images, such as smartphones, are therefore highly recommended. Mobile-based early DR screening systems may have strong potential, especially for the elderly, and could lower costs and promote remote screening without requiring direct contact with patients. Fourth, researchers can combine different modalities of the eye to aid the diagnosis and grading of DR: the features extracted from each modality can be concatenated and fed into any traditional ML or DL algorithm, which may produce promising results. Fifth, most studies in the literature use only imaging data for the early diagnosis and grading of DR, and little work has been carried out on the association between imaging findings and patient clinical data (e.g., age, blood sugar level, gender, and blood pressure). Hence, there is a need to explore different imaging modalities and correlate their findings with clinical and demographic data to improve the accuracy of diagnosing and grading DR, and potentially of diagnosing other retinal conditions. Sixth, retina specialists show heterogeneity in their diagnostic decision-making, which is a major problem: images from ophthalmic modalities are interpreted by experts with varying degrees of precision, which may introduce bias during model training. Lastly, most publicly available datasets have few annotated images because manual annotation is time-consuming. The goal, therefore, is to develop better methods for collecting clinical annotations that can be applied to different image modalities, such as the localization of exudates and retinal hemorrhages.
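Feature-level fusion of several modalities with clinical data can be sketched as follows: each source's features are standardized with statistics estimated on the training set and then concatenated into one vector per subject. The block names, feature counts, helper functions, and synthetic values below are hypothetical; the clinical columns simply mirror the examples given above (age, blood sugar level, gender, and blood pressure), and per-block z-scoring is one common choice rather than a method prescribed by the reviewed studies.

```python
import numpy as np


def zscore_fit(block):
    """Per-column mean and standard deviation, estimated on training data."""
    mu = block.mean(axis=0)
    sd = block.std(axis=0)
    sd[sd == 0] = 1.0  # constant columns become all zeros instead of dividing by zero
    return mu, sd


def fuse_features(blocks, stats=None):
    """Z-score each feature block, then concatenate the blocks column-wise.

    blocks: dict mapping a source name (e.g. "FP", "OCT", "OCTA",
    "clinical") to an (n_subjects, n_features) array. With stats=None the
    normalization statistics are fitted on `blocks` (training set);
    otherwise the supplied training statistics are reused (test set).
    """
    names = sorted(blocks)  # fixed column order across calls
    arrays = {n: np.asarray(blocks[n], dtype=float) for n in names}
    if stats is None:
        stats = {n: zscore_fit(arrays[n]) for n in names}
    parts = [(arrays[n] - stats[n][0]) / stats[n][1] for n in names]
    return np.hstack(parts), stats


rng = np.random.default_rng(1)
n = 6  # hypothetical number of training subjects
train = {
    "FP": rng.random((n, 4)),     # e.g. lesion or texture descriptors
    "OCT": rng.random((n, 12)),   # e.g. one thickness value per retinal layer
    "OCTA": rng.random((n, 3)),   # e.g. vessel density measures
    # age (years), blood glucose (mg/dL), sex (0/1), systolic BP (mmHg)
    "clinical": np.column_stack([
        rng.integers(40, 80, n),
        rng.normal(140, 30, n),
        rng.integers(0, 2, n),
        rng.normal(130, 15, n),
    ]),
}
X_train, stats = fuse_features(train)
print(X_train.shape)  # (6, 23)
```

At test time, calling `fuse_features(test_blocks, stats=stats)` reuses the training statistics so that no information leaks from the test set; the fused matrix can then be passed to any ML classifier or to the final layers of a DL model.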

5.2. Research Gap

Survey papers on diagnosing and grading DR are frequently limited to discussing the ability of different modalities to distinguish between normal eyes and different categories of DR [14,15,158]. These survey articles consider the use of ML/DL on one specific modality (e.g., FP) to grade DR and do not analyze how other modalities perform in recognizing DR and its grades. Thus, to the best of our knowledge, our survey is the first to address and discuss the use of ML and DL approaches on different imaging modalities, together with clinical and demographic data, for DR grading. We examine the promising results for DR grading obtained by applying ML and DL approaches to OCT, OCTA, FP, and the clinical information of patients. A number of clinical studies have demonstrated the benefits and effectiveness of applying DL and ML methodologies to retinal imaging assessment.

Nevertheless, DL approaches and CAD systems have numerous shortcomings, outlined below together with possible improvements. First, there are no standardized statistical measures for evaluating the performance of DL models. Recent works report different evaluation metrics, such as accuracy, sensitivity, specificity, AUC, F1-score, and kappa score, so it has so far been difficult to compare the performance of DL models for diagnosing diseases. Second, it would help researchers to have more public datasets that contain different imaging modalities, such as FP, OCT, and OCTA, together with clinical and demographic information, so that DR can be detected and diagnosed more precisely. Third, other retinal diseases may present characteristics that resemble different grades of DR, depending on the lesions detected in different image modalities. The goal, then, should be to build fully automated CAD systems, based on DL or ML approaches, that distinguish DR from other, non-DR diseases. Fourth, following from the previous point, segmentation-based DL approaches for detecting different lesions are an essential step in the computer vision field; therefore, they should be tested on the detection of the many lesions present in FP, OCT, and OCTA images. Fifth, although this review covers a broad range of DR-related conditions, it does not consider CAD systems for many other conditions, which could be investigated in future studies. Lastly, the computational complexity of several DL approaches is a concern, as benchmark datasets will grow with increasing numbers of patients in the coming years.
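Because studies report different subsets of these metrics, computing them from a shared implementation is one way to make results comparable. The Python sketch below derives accuracy, sensitivity, specificity, and F1-score from a single binary confusion matrix and computes Cohen's kappa with quadratic weights for five ordinal DR grades. The quadratic weighting is an assumption (kappa can also be unweighted or linearly weighted, which is itself part of the comparability problem), AUC is omitted because it requires continuous scores, the helper names are hypothetical, and the labels are invented.

```python
import numpy as np


def binary_metrics(y_true, y_pred):
    """Accuracy, sensitivity, specificity, and F1 for labels 0 (no DR) and 1 (DR)."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))
    tn = int(np.sum((y_true == 0) & (y_pred == 0)))
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))
    nan = float("nan")
    return {
        "accuracy": (tp + tn) / len(y_true),
        "sensitivity": tp / (tp + fn) if tp + fn else nan,  # recall on DR cases
        "specificity": tn / (tn + fp) if tn + fp else nan,
        "f1": 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else nan,
    }


def quadratic_weighted_kappa(y_true, y_pred, n_classes=5):
    """Cohen's kappa with quadratic weights for ordinal grades 0..n_classes-1."""
    observed = np.zeros((n_classes, n_classes))
    for t, p in zip(y_true, y_pred):
        observed[t, p] += 1
    idx = np.arange(n_classes)
    # Penalty grows with the squared distance between true and predicted grade.
    weights = (idx[:, None] - idx[None, :]) ** 2 / (n_classes - 1) ** 2
    # Agreement expected by chance, from the row and column marginals.
    expected = np.outer(observed.sum(axis=1), observed.sum(axis=0)) / observed.sum()
    return 1.0 - (weights * observed).sum() / (weights * expected).sum()


print(binary_metrics([1, 1, 1, 0, 0, 0, 1, 0], [1, 0, 1, 0, 0, 1, 1, 0]))
# {'accuracy': 0.75, 'sensitivity': 0.75, 'specificity': 0.75, 'f1': 0.75}
print(quadratic_weighted_kappa([0, 1, 2, 3, 4, 2], [0, 1, 2, 4, 4, 1]))
```

Quadratic weighting penalizes a prediction of PDR for a no-DR eye far more than a one-grade slip, which matches the ordinal nature of DR grading; the kappa is undefined when every label falls into a single grade.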

6. Conclusions

The purpose of this survey was to summarize current developments in ML/DL models for diagnosing and grading diabetic retinal disease using different imaging modalities. We began with an overview of DR and its grading, including mild NPDR, moderate NPDR, severe NPDR, and PDR. We then discussed the different image modalities (i.e., FA, FP, OCT, and OCTA) used in the diagnosis and grading of DR. In addition, we conducted a systematic review of the most recent publications on CAD-based methods for the detection and grading of DR, ranging from traditional image processing to ML- and DL-based methods. For each work introduced in this survey, we provided an overview of the methodology, the number of DR grades identified, system performance, and database information. This paper’s most important contribution is the discussion of the advantages and challenges of existing methodologies for developing an automated and robust methodology for detecting and grading DR. We then discussed future directions and research plans concerning how the most recent state-of-the-art DL architectures can detect DR early. We identified the major obstacles to developing DL-based approaches and proposed solutions for diagnosing DR based on integrating ML features from the four modalities with each patient’s clinical and demographic information. Finally, we proposed a future direction of smartphone-based DR diagnosis, which could lower costs and promote remote screening without the need for direct contact with patients.

Author Contributions

Conceptualization, M.E. (Mohamed Elsharkawy), M.E. (Mostafa Elrazzaz), A.S. (Ahmed Sharafeldeen), M.A., F.K., A.S. (Ahmed Soliman), A.E., A.M., M.G., E.E.-D., A.A., H.S.S., and A.E.-B.; project administration, A.E.-B.; supervision, M.G., E.E.-D., A.A., and A.E.-B.; writing—original draft, M.E. (Mohamed Elsharkawy) and M.E. (Mostafa Elrazzaz); writing—review and editing, M.E. (Mohamed Elsharkawy), M.E. (Mostafa Elrazzaz), A.S. (Ahmed Sharafeldeen), M.A., F.K., A.S. (Ahmed Soliman), A.E., A.M., M.G., E.E.-D., A.A., H.S.S., and A.E.-B. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by Abu Dhabi’s Advanced Technology Research Council via the ASPIRE Award for Research Excellence Program.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board of the University of Louisville (18.0010).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

No data available.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Duh, E.J.; Sun, J.K.; Stitt, A.W. Diabetic retinopathy: Current understanding, mechanisms, and treatment strategies. JCI Insight 2017, 2, e93751.

- Schwartz, S.S.; Epstein, S.; Corkey, B.E.; Grant, S.F.; Gavin, J.R., III; Aguilar, R.B.; Herman, M.E. A unified pathophysiological construct of diabetes and its complications. Trends Endocrinol. Metab. 2017, 28, 645–655.

- Ruta, L.; Magliano, D.; Lemesurier, R.; Taylor, H.; Zimmet, P.; Shaw, J. Prevalence of diabetic retinopathy in Type 2 diabetes in developing and developed countries. Diabet. Med. 2013, 30, 387–398.

- Lachin, J.M.; Genuth, S.; Nathan, D.M.; Zinman, B.; Rutledge, B.N. Effect of glycemic exposure on the risk of microvascular complications in the diabetes control and complications trial—Revisited. Diabetes 2008, 57, 995–1001.

- Klein, R.; Klein, B.E.; Moss, S.E.; Cruickshanks, K.J. The Wisconsin Epidemiologic Study of Diabetic Retinopathy: XVII: The 14-year incidence and progression of diabetic retinopathy and associated risk factors in type 1 diabetes. Ophthalmology 1998, 105, 1801–1815.

- Hietala, K.; Forsblom, C.; Summanen, P.; Groop, P.H. Heritability of proliferative diabetic retinopathy. Diabetes 2008, 57, 2176–2180.

- Frank, R.N.; Keirn, R.J.; Kennedy, A.; Frank, K.W. Galactose-induced retinal capillary basement membrane thickening: Prevention by Sorbinil. Investig. Ophthalmol. Vis. Sci. 1983, 24, 1519–1524.

- Engerman, R.L.; Kern, T.S. Progression of incipient diabetic retinopathy during good glycemic control. Diabetes 1987, 36, 808–812.

- Giugliano, D.; Ceriello, A.; Paolisso, G. Oxidative stress and diabetic vascular complications. Diabetes Care 1996, 19, 257–267.

- Sinclair, S.H.; Schwartz, S.S. Diabetic retinopathy—An underdiagnosed and undertreated inflammatory, neuro-vascular complication of diabetes. Front. Endocrinol. 2019, 10, 843.

- Gerendas, B.S.; Bogunovic, H.; Sadeghipour, A.; Schlegl, T.; Langs, G.; Waldstein, S.M.; Schmidt-Erfurth, U. Computational image analysis for prognosis determination in DME. Vis. Res. 2017, 139, 204–210.

- Aiello, L.; Gardner, T.; King, G.; Blankenship, G.; Cavallerano, J.; Ferris, F. Diabetes Care (technical review). Diabetes Care 1998, 21, 56.

- Soomro, T.A.; Gao, J.; Khan, T.; Hani, A.F.M.; Khan, M.A.; Paul, M. Computerised approaches for the detection of diabetic retinopathy using retinal fundus images: A survey. Pattern Anal. Appl. 2017, 20, 927–961.

- Sarki, R.; Ahmed, K.; Wang, H.; Zhang, Y. Automatic detection of diabetic eye disease through deep learning using fundus images: A survey. IEEE Access 2020, 8, 151133–151149.

- Asiri, N.; Hussain, M.; Al Adel, F.; Alzaidi, N. Deep learning based computer-aided diagnosis systems for diabetic retinopathy: A survey. Artif. Intell. Med. 2019, 99, 101701.

- ETDRS Research Group. Early photocoagulation for diabetic retinopathy. ETDRS report number 9. Ophthalmology 1991, 98, 766–785.

- Klein, R.; Klein, B.E.; Moss, S.E.; Davis, M.D.; DeMets, D.L. The Wisconsin Epidemiologic Study of Diabetic Retinopathy: X. Four-year incidence and progression of diabetic retinopathy when age at diagnosis is 30 years or more. Arch. Ophthalmol. 1989, 107, 244–249.

- Diabetic Retinopathy Study Research Group. Diabetic retinopathy study report number 6. Design, methods, and baseline results. Report number 7. A modification of the Airlie House classification of diabetic retinopathy. Prepared by the diabetic retinopathy. Investig. Ophthalmol. Vis. Sci. 1981, 21, 1–226.

- Early Treatment Diabetic Retinopathy Study Research Group. Classification of diabetic retinopathy from fluorescein angiograms: ETDRS report number 11. Ophthalmology 1991, 98, 807–822.

- Early Treatment Diabetic Retinopathy Study Research Group. Grading diabetic retinopathy from stereoscopic color fundus photographs—An extension of the modified Airlie House classification: ETDRS report number 10. Ophthalmology 1991, 98, 786–806.

- Wilkinson, C.; Ferris, F.L., III; Klein, R.E.; Lee, P.P.; Agardh, C.D.; Davis, M.; Dills, D.; Kampik, A.; Pararajasegaram, R.; Verdaguer, J.T.; et al. Proposed international clinical diabetic retinopathy and diabetic macular edema disease severity scales. Ophthalmology 2003, 110, 1677–1682.

- Ffytche, T.; Shilling, J.; Chisholm, I.; Federman, J. Indications for fluorescein angiography in disease of the ocular fundus: A review. J. R. Soc. Med. 1980, 73, 362–365.

- Novotny, H.R.; Alvis, D.L. A method of photographing fluorescence in circulating blood in the human retina. Circulation 1961, 24, 82–86.

- Rabb, M.F.; Burton, T.C.; Schatz, H.; Yannuzzi, L.A. Fluorescein angiography of the fundus: A schematic approach to interpretation. Surv. Ophthalmol. 1978, 22, 387–403.

- Mendis, K.R.; Balaratnasingam, C.; Yu, P.; Barry, C.J.; McAllister, I.L.; Cringle, S.J.; Yu, D.Y. Correlation of histologic and clinical images to determine the diagnostic value of fluorescein angiography for studying retinal capillary detail. Investig. Ophthalmol. Vis. Sci. 2010, 51, 5864–5869.

- Balbino, M.; Silva, G.; Correia, G.C.T.P. Anafilaxia com convulsões após angiografia com fluoresceína em paciente ambulatorial. Einstein 2012, 10, 374–376.

- Johnson, R.N.; McDonald, H.R.; Schatz, H. Rash, fever, and chills after intravenous fluorescein angiography. Am. J. Ophthalmol. 1998, 126, 837–838.

- Yannuzzi, L.A.; Rohrer, K.T.; Tindel, L.J.; Sobel, R.S.; Costanza, M.A.; Shields, W.; Zang, E. Fluorescein angiography complication survey. Ophthalmology 1986, 93, 611–617.

- Early Treatment Diabetic Retinopathy Study Research Group. Fundus photographic risk factors for progression of diabetic retinopathy: ETDRS report number 12. Ophthalmology 1991, 98, 823–833.

- Verdaguer, T. Screening para retinopatia diabetica en Latino America. Resultados. Rev. Soc. Brasil Retina Vitreo 2001, 4, 14–15.

- Fukuda, M. Clinical arrangement of classification of diabetic retinopathy. Tohoku J. Exp. Med. 1983, 141, 331–335.

- Gyawali, R.; Toomey, M.; Stapleton, F.; Zangerl, B.; Dillon, L.; Keay, L.; Liew, G.; Jalbert, I. Quality of the Australian National Health and Medical Research Council’s clinical practice guidelines for the management of diabetic retinopathy. Clin. Exp. Optom. 2021, 104, 864–870.

- Huang, D.; Swanson, E.A.; Lin, C.P.; Schuman, J.S.; Stinson, W.G.; Chang, W.; Hee, M.R.; Flotte, T.; Gregory, K.; Puliafito, C.A.; et al. Optical coherence tomography. Science 1991, 254, 1178–1181.

- Rivellese, M.; George, A.; Sulkes, D.; Reichel, E.; Puliafito, C. Optical coherence tomography after laser photocoagulation for clinically significant macular edema. Ophthalmic Surgery Lasers Imaging Retin. 2000, 31, 192–197.

- Agemy, S.A.; Scripsema, N.K.; Shah, C.M.; Chui, T.; Garcia, P.M.; Lee, J.G.; Gentile, R.C.; Hsiao, Y.S.; Zhou, Q.; Ko, T.; et al. Retinal vascular perfusion density mapping using optical coherence tomography angiography in normals and diabetic retinopathy patients. Retina 2015, 35, 2353–2363.

- Di, G.; Weihong, Y.; Xiao, Z.; Zhikun, Y.; Xuan, Z.; Yi, Q.; Fangtian, D. A morphological study of the foveal avascular zone in patients with diabetes mellitus using optical coherence tomography angiography. Graefe’s Arch. Clin. Exp. Ophthalmol. 2016, 254, 873–879.

- Freiberg, F.J.; Pfau, M.; Wons, J.; Wirth, M.A.; Becker, M.D.; Michels, S. Optical coherence tomography angiography of the foveal avascular zone in diabetic retinopathy. Graefe’s Arch. Clin. Exp. Ophthalmol. 2016, 254, 1051–1058.

- Hwang, T.S.; Jia, Y.; Gao, S.S.; Bailey, S.T.; Lauer, A.K.; Flaxel, C.J.; Wilson, D.J.; Huang, D. Optical coherence tomography angiography features of diabetic retinopathy. Retina 2015, 35, 2371.

- Kim, D.Y.; Fingler, J.; Zawadzki, R.J.; Park, S.S.; Morse, L.S.; Schwartz, D.M.; Fraser, S.E.; Werner, J.S. Noninvasive imaging of the foveal avascular zone with high-speed, phase-variance optical coherence tomography. Investig. Ophthalmol. Vis. Sci. 2012, 53, 85–92.

- Mastropasqua, R.; Di Antonio, L.; Di Staso, S.; Agnifili, L.; Di Gregorio, A.; Ciancaglini, M.; Mastropasqua, L. Optical coherence tomography angiography in retinal vascular diseases and choroidal neovascularization. J. Ophthalmol. 2015, 2015, 343515.

- Takase, N.; Nozaki, M.; Kato, A.; Ozeki, H.; Yoshida, M.; Ogura, Y. Enlargement of foveal avascular zone in diabetic eyes evaluated by en face optical coherence tomography angiography. Retina 2015, 35, 2377–2383.

- Mammo, Z.; Balaratnasingam, C.; Yu, P.; Xu, J.; Heisler, M.; Mackenzie, P.; Merkur, A.; Kirker, A.; Albiani, D.; Freund, K.B.; et al. Quantitative noninvasive angiography of the fovea centralis using speckle variance optical coherence tomography. Investig. Ophthalmol. Vis. Sci. 2015, 56, 5074–5086.

- Tan, P.E.Z.; Balaratnasingam, C.; Xu, J.; Mammo, Z.; Han, S.X.; Mackenzie, P.; Kirker, A.W.; Albiani, D.; Merkur, A.B.; Sarunic, M.V.; et al. Quantitative comparison of retinal capillary images derived by speckle variance optical coherence tomography with histology. Investig. Ophthalmol. Vis. Sci. 2015, 56, 3989–3996.

- Mo, S.; Krawitz, B.; Efstathiadis, E.; Geyman, L.; Weitz, R.; Chui, T.Y.; Carroll, J.; Dubra, A.; Rosen, R.B. Imaging foveal microvasculature: Optical coherence tomography angiography versus adaptive optics scanning light ophthalmoscope fluorescein angiography. Investig. Ophthalmol. Vis. Sci. 2016, 57, OCT130–OCT140.

- Spaide, R.F.; Klancnik, J.M.; Cooney, M.J. Retinal vascular layers imaged by fluorescein angiography and optical coherence tomography angiography. JAMA Ophthalmol. 2015, 133, 45–50.

- Arend, O.; Wolf, S.; Harris, A.; Reim, M. The relationship of macular microcirculation to visual acuity in diabetic patients. Arch. Ophthalmol. 1995, 113, 610–614.

- Parodi, M.B.; Visintin, F.; Della Rupe, P.; Ravalico, G. Foveal avascular zone in macular branch retinal vein occlusion. Int. Ophthalmol. 1995, 19, 25–28.

- Arend, O.; Wolf, S.; Jung, F.; Bertram, B.; Pöstgens, H.; Toonen, H.; Reim, M. Retinal microcirculation in patients with diabetes mellitus: Dynamic and morphological analysis of perifoveal capillary network. Br. J. Ophthalmol. 1991, 75, 514–518.

- Bresnick, G.H.; Condit, R.; Syrjala, S.; Palta, M.; Groo, A.; Korth, K. Abnormalities of the foveal avascular zone in diabetic retinopathy. Arch. Ophthalmol. 1984, 102, 1286–1293.

- Conrath, J.; Giorgi, R.; Raccah, D.; Ridings, B. Foveal avascular zone in diabetic retinopathy: Quantitative vs. qualitative assessment. Eye 2005, 19, 322–326.

- Mansour, A.; Schachat, A.R.; Bodiford, G.; Haymond, R. Foveal avascular zone in diabetes mellitus. Retina 1993, 13, 125–128.

- Sanders, R.J.; Brown, G.C.; Rosenstein, R.B.; Magargal, L. Foveal avascular zone diameter and sickle cell disease. Arch. Ophthalmol. 1991, 109, 812–815.

- Bennett, A.G.; Rudnicka, A.R.; Edgar, D.F. Improvements on Littmann’s method of determining the size of retinal features by fundus photography. Graefe’s Arch. Clin. Exp. Ophthalmol. 1994, 232, 361–367.

- Popovic, Z.; Knutsson, P.; Thaung, J.; Owner-Petersen, M.; Sjöstrand, J. Noninvasive imaging of human foveal capillary network using dual-conjugate adaptive optics. Investig. Ophthalmol. Vis. Sci. 2011, 52, 2649–2655.

- Tam, J.; Dhamdhere, K.P.; Tiruveedhula, P.; Manzanera, S.; Barez, S.; Bearse, M.A.; Adams, A.J.; Roorda, A. Disruption of the retinal parafoveal capillary network in type 2 diabetes before the onset of diabetic retinopathy. Investig. Ophthalmol. Vis. Sci. 2011, 52, 9257–9266.

- Hutchinson, A.; McIntosh, A.; Peters, J.; O’Keeffe, C.; Khunti, K.; Baker, R.; Booth, A. Effectiveness of screening and monitoring tests for diabetic retinopathy–a systematic review. Diabet. Med. 2000, 17, 495–506.

- Sussman, E.J.; Tsiaras, W.G.; Soper, K.A. Diagnosis of diabetic eye disease. JAMA 1982, 247, 3231–3234.

- Bursell, S.E.; Cavallerano, J.D.; Cavallerano, A.A.; Clermont, A.C.; Birkmire-Peters, D.; Aiello, L.P.; Aiello, L.M.; Joslin Vision Network Research Team. Stereo nonmydriatic digital-video color retinal imaging compared with Early Treatment Diabetic Retinopathy Study seven standard field 35-mm stereo color photos for determining level of diabetic retinopathy. Ophthalmology 2001, 108, 572–585.

- Cavallerano, A.A.; Cavallerano, J.D.; Katalinic, P.; Tolson, A.M.; Aiello, L.P.; Aiello, L.M. Use of Joslin Vision Network digital-video nonmydriatic retinal imaging to assess diabetic retinopathy in a clinical program. Retina 2003, 23, 215–223.

- Fransen, S.R.; Leonard-Martin, T.C.; Feuer, W.J.; Hildebrand, P.L.; Inoveon Health Research Group. Clinical evaluation of patients with diabetic retinopathy: Accuracy of the Inoveon diabetic retinopathy-3DT system. Ophthalmology 2002, 109, 595–601.

- Zeimer, R.; Zou, S.; Meeder, T.; Quinn, K.; Vitale, S. A fundus camera dedicated to the screening of diabetic retinopathy in the primary-care physician’s office. Investig. Ophthalmol. Vis. Sci. 2002, 43, 1581–1587.

- Taylor, D.; Fisher, J.; Jacob, J.; Tooke, J. The use of digital cameras in a mobile retinal screening environment. Diabet. Med. 1999, 16, 680–686.

- Pugh, J.A.; Jacobson, J.M.; Van Heuven, W.; Watters, J.A.; Tuley, M.R.; Lairson, D.R.; Lorimor, R.J.; Kapadia, A.S.; Velez, R. Screening for diabetic retinopathy: The wide-angle retinal camera. Diabetes Care 1993, 16, 889–895.

- Joannou, J.; Kalk, W.; Ntsepo, S.; Berzin, M.; Joffe, B.; Raal, F.; Sachs, E.; van der Merwe, M.; Wing, J.; Mahomed, I. Screening for diabetic retinopathy in South Africa with 60° retinal colour photography. J. Intern. Med. 1996, 239, 43–47.

- Abràmoff, M.D.; Lou, Y.; Erginay, A.; Clarida, W.; Amelon, R.; Folk, J.C.; Niemeijer, M. Improved automated detection of diabetic retinopathy on a publicly available dataset through integration of deep learning. Investig. Ophthalmol. Vis. Sci. 2016, 57, 5200–5206. [Google Scholar] [CrossRef] [Green Version]

- Chen, X.; Niemeijer, M.; Zhang, L.; Lee, K.; Abramoff, M.D.; Sonka, M. Three-Dimensional Segmentation of Fluid-Associated Abnormalities in Retinal OCT: Probability Constrained Graph-Search-Graph-Cut. IEEE Trans. Med. Imaging 2012, 31, 1521–1531. [Google Scholar] [CrossRef] [Green Version]

- Sophie, R.; Lu, N.; Campochiaro, P.A. Predictors of functional and anatomic outcomes in patients with diabetic macular edema treated with ranibizumab. Ophthalmology 2015, 122, 1395–1401. [Google Scholar] [CrossRef] [Green Version]

- Yohannan, J.; Bittencourt, M.; Sepah, Y.J.; Hatef, E.; Sophie, R.; Moradi, A.R.; Liu, H.; Ibrahim, M.; Do, D.V.; Coulantuoni, E.; et al. Association of retinal sensitivity to integrity of photoreceptor inner/outer segment junction in patients with diabetic macular edema. Ophthalmology 2013, 120, 1254–1261. [Google Scholar] [CrossRef]

- Gerendas, B.S.; Waldstein, S.M.; Simader, C.; Deak, G.; Hajnajeeb, B.; Zhang, L.; Bogunovic, H.; Abramoff, M.D.; Kundi, M.; Sonka, M.; et al. Three-dimensional automated choroidal volume assessment on standard spectral-domain optical coherence tomography and correlation with the level of diabetic macular edema. Am. J. Ophthalmol. 2014, 158, 1039–1048. [Google Scholar] [CrossRef] [Green Version]

- Schlegl, T.; Waldstein, S.M.; Vogl, W.D.; Schmidt-Erfurth, U.; Langs, G. Predicting semantic descriptions from medical images with convolutional neural networks. Inf. Process. Med. Imaging 2015, 24, 437–448. [Google Scholar]

- Schmidt-Erfurth, U.; Waldstein, S.M.; Deak, G.G.; Kundi, M.; Simader, C. Pigment epithelial detachment followed by retinal cystoid degeneration leads to vision loss in treatment of neovascular age-related macular degeneration. Ophthalmology 2015, 122, 822–832. [Google Scholar] [CrossRef]

- Ritter, M.; Simader, C.; Bolz, M.; Deák, G.G.; Mayr-Sponer, U.; Sayegh, R.; Kundi, M.; Schmidt-Erfurth, U.M. Intraretinal cysts are the most relevant prognostic biomarker in neovascular age-related macular degeneration independent of the therapeutic strategy. Br. J. Ophthalmol. 2014, 98, 1629–1635.

- Elsharkawy, M.; Sharafeldeen, A.; Soliman, A.; Khalifa, F.; Widjajahakim, R.; Switala, A.; Elnakib, A.; Schaal, S.; Sandhu, H.S.; Seddon, J.M.; et al. Automated diagnosis and grading of dry age-related macular degeneration using optical coherence tomography imaging. Investig. Ophthalmol. Vis. Sci. 2021, 62, 107.

- Elsharkawy, M.; Elrazzaz, M.; Ghazal, M.; Alhalabi, M.; Soliman, A.; Mahmoud, A.; El-Daydamony, E.; Atwan, A.; Thanos, A.; Sandhu, H.S.; et al. Role of Optical Coherence Tomography Imaging in Predicting Progression of Age-Related Macular Disease: A Survey. Diagnostics 2021, 11, 2313.

- Gerendas, B.; Simader, C.; Deak, G.G.; Prager, S.G.; Lammer, J.; Waldstein, S.M.; Kundi, M.; Schmidt-Erfurth, U. Morphological parameters relevant for visual and anatomic outcomes during anti-VEGF therapy of diabetic macular edema in the RESTORE trial. Investig. Ophthalmol. Vis. Sci. 2014, 55, 1791.

- Welikala, R.A.; Fraz, M.M.; Dehmeshki, J.; Hoppe, A.; Tah, V.; Mann, S.; Williamson, T.H.; Barman, S.A. Genetic algorithm based feature selection combined with dual classification for the automated detection of proliferative diabetic retinopathy. Comput. Med. Imaging Graph. 2015, 43, 64–77.

- Decencière, E.; Zhang, X.; Cazuguel, G.; Lay, B.; Cochener, B.; Trone, C.; Gain, P.; Ordonez, R.; Massin, P.; Erginay, A.; et al. Feedback on a publicly distributed image database: The Messidor database. Image Anal. Stereol. 2014, 33, 231–234.

- Prasad, D.K.; Vibha, L.; Venugopal, K. Early detection of diabetic retinopathy from digital retinal fundus images. In Proceedings of the 2015 IEEE Recent Advances in Intelligent Computational Systems (RAICS), Trivandrum, India, 10–12 December 2015; pp. 240–245.

- DIARETDB1—Standard Diabetic Retinopathy Database. Available online: http://www2.it.lut.fi/project/imageret/diaretdb1/index.html (accessed on 1 February 2022).

- Mahendran, G.; Dhanasekaran, R. Investigation of the severity level of diabetic retinopathy using supervised classifier algorithms. Comput. Electr. Eng. 2015, 45, 312–323.

- Bhatkar, A.P.; Kharat, G. Detection of diabetic retinopathy in retinal images using MLP classifier. In Proceedings of the 2015 IEEE International Symposium on Nanoelectronic and Information Systems, Indore, India, 21–23 December 2015; pp. 331–335.

- Labhade, J.D.; Chouthmol, L.; Deshmukh, S. Diabetic retinopathy detection using soft computing techniques. In Proceedings of the 2016 International Conference on Automatic Control and Dynamic Optimization Techniques (ICACDOT), Pune, India, 9–10 September 2016; pp. 175–178.

- Rahim, S.S.; Palade, V.; Shuttleworth, J.; Jayne, C. Automatic screening and classification of diabetic retinopathy and maculopathy using fuzzy image processing. Brain Inform. 2016, 3, 249–267.

- Bhatia, K.; Arora, S.; Tomar, R. Diagnosis of diabetic retinopathy using machine learning classification algorithm. In Proceedings of the 2016 2nd International Conference on Next Generation Computing Technologies (NGCT), Dehradun, India, 14–16 October 2016; pp. 347–351.

- Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016, 316, 2402–2410.

- Colas, E.; Besse, A.; Orgogozo, A.; Schmauch, B.; Meric, N.; Besse, E. Deep learning approach for diabetic retinopathy screening. Acta Ophthalmol. 2016, 94, 635.

- Ghosh, R.; Ghosh, K.; Maitra, S. Automatic detection and classification of diabetic retinopathy stages using CNN. In Proceedings of the 2017 4th International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 2–3 February 2017; pp. 550–554.

- Islam, M.; Dinh, A.V.; Wahid, K.A. Automated diabetic retinopathy detection using bag of words approach. J. Biomed. Sci. Eng. 2017, 10, 86–96.

- Carrera, E.V.; González, A.; Carrera, R. Automated detection of diabetic retinopathy using SVM. In Proceedings of the 2017 IEEE XXIV International Conference on Electronics, Electrical Engineering and Computing (INTERCON), Cusco, Peru, 15–18 August 2017; pp. 1–4.

- Somasundaram, S.K.; Alli, P. A machine learning ensemble classifier for early prediction of diabetic retinopathy. J. Med. Syst. 2017, 41, 1–12.

- Kälviäinen, R.; Uusitalo, H. DIARETDB1 diabetic retinopathy database and evaluation protocol. Medical Image Understanding and Analysis. 2007, Volume 2007, p. 61. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.692.2635&rep=rep1&type=pdf#page=72 (accessed on 28 April 2022).

- ElTanboly, A.; Ismail, M.; Shalaby, A.; Switala, A.; El-Baz, A.; Schaal, S.; Gimel’farb, G.; El-Azab, M. A computer-aided diagnostic system for detecting diabetic retinopathy in optical coherence tomography images. Med. Phys. 2017, 44, 914–923.

- Takahashi, H.; Tampo, H.; Arai, Y.; Inoue, Y.; Kawashima, H. Applying artificial intelligence to disease staging: Deep learning for improved staging of diabetic retinopathy. PLoS ONE 2017, 12, e0179790.

- Quellec, G.; Charrière, K.; Boudi, Y.; Cochener, B.; Lamard, M. Deep image mining for diabetic retinopathy screening. Med. Image Anal. 2017, 39, 178–193.

- Ting, D.S.W.; Cheung, C.Y.L.; Lim, G.; Tan, G.S.W.; Quang, N.D.; Gan, A.; Hamzah, H.; Garcia-Franco, R.; San Yeo, I.Y. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA 2017, 318, 2211–2223.

- Wang, Z.; Yin, Y.; Shi, J.; Fang, W.; Li, H.; Wang, X. Zoom-in-net: Deep mining lesions for diabetic retinopathy detection. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Quebec City, QC, Canada, 11–13 September 2017; pp. 267–275.

- Eladawi, N.; Elmogy, M.; Fraiwan, L.; Pichi, F.; Ghazal, M.; Aboelfetouh, A.; Riad, A.; Keynton, R.; Schaal, S.; El-Baz, A. Early diagnosis of diabetic retinopathy in OCTA images based on local analysis of retinal blood vessels and foveal avascular zone. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 3886–3891.

- Dutta, S.; Manideep, B.; Basha, S.M.; Caytiles, R.D.; Iyengar, N. Classification of diabetic retinopathy images by using deep learning models. Int. J. Grid Distrib. Comput. 2018, 11, 89–106.

- ElTanboly, A.; Ghazal, M.; Khalil, A.; Shalaby, A.; Mahmoud, A.; Switala, A.; El-Azab, M.; Schaal, S.; El-Baz, A. An integrated framework for automatic clinical assessment of diabetic retinopathy grade using spectral domain OCT images. In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; pp. 1431–1435.

- Zhang, X.; Zhang, W.; Fang, M.; Xue, J.; Wu, L. Automatic classification of diabetic retinopathy based on convolutional neural networks. In Proceedings of the 2018 International Conference on Image and Video Processing, and Artificial Intelligence. International Society for Optics and Photonics, Shanghai, China, 15–17 August 2018; Volume 10836, p. 1083608.

- Costa, P.; Galdran, A.; Smailagic, A.; Campilho, A. A weakly-supervised framework for interpretable diabetic retinopathy detection on retinal images. IEEE Access 2018, 6, 18747–18758.

- Pires, R.; Jelinek, H.F.; Wainer, J.; Goldenstein, S.; Valle, E.; Rocha, A. Assessing the need for referral in automatic diabetic retinopathy detection. IEEE Trans. Biomed. Eng. 2013, 60, 3391–3398.

- Chakrabarty, N. A deep learning method for the detection of diabetic retinopathy. In Proceedings of the 2018 5th IEEE Uttar Pradesh Section International Conference on Electrical, Electronics and Computer Engineering (UPCON), Gorakhpur, India, 2–4 November 2018; pp. 1–5.

- Kwasigroch, A.; Jarzembinski, B.; Grochowski, M. Deep CNN based decision support system for detection and assessing the stage of diabetic retinopathy. In Proceedings of the 2018 International Interdisciplinary PhD Workshop (IIPhDW), Swinoujscie, Poland, 9–12 May 2018; pp. 111–116.

- EyePACS, LLC. Available online: http://www.eyepacs.com/ (accessed on 1 February 2022).

- Li, F.; Liu, Z.; Chen, H.; Jiang, M.; Zhang, X.; Wu, Z. Automatic detection of diabetic retinopathy in retinal fundus photographs based on deep learning algorithm. Transl. Vis. Sci. Technol. 2019, 8, 4.

- Nagasawa, T.; Tabuchi, H.; Masumoto, H.; Enno, H.; Niki, M.; Ohara, Z.; Yoshizumi, Y.; Ohsugi, H.; Mitamura, Y. Accuracy of ultrawide-field fundus ophthalmoscopy-assisted deep learning for detecting treatment-naïve proliferative diabetic retinopathy. Int. Ophthalmol. 2019, 39, 2153–2159.

- Metan, A.C.; Lambert, A.; Pickering, M. Small Scale Feature Propagation Using Deep Residual Learning for Diabetic Retinopathy Classification. In Proceedings of the 2019 IEEE 4th International Conference on Image, Vision and Computing (ICIVC), Xiamen, China, 5–7 July 2019; pp. 392–396.

- Qummar, S.; Khan, F.G.; Shah, S.; Khan, A.; Shamshirband, S.; Rehman, Z.U.; Khan, I.A.; Jadoon, W. A deep learning ensemble approach for diabetic retinopathy detection. IEEE Access 2019, 7, 150530–150539.

- Sayres, R.; Taly, A.; Rahimy, E.; Blumer, K.; Coz, D.; Hammel, N.; Krause, J.; Narayanaswamy, A.; Rastegar, Z.; Wu, D.; et al. Using a deep learning algorithm and integrated gradients explanation to assist grading for diabetic retinopathy. Ophthalmology 2019, 126, 552–564.

- Sengupta, S.; Singh, A.; Zelek, J.; Lakshminarayanan, V. Cross-domain diabetic retinopathy detection using deep learning. Appl. Mach. Learn. Int. Soc. Opt. Photonics 2019, 11139, 111390V.

- Hathwar, S.B.; Srinivasa, G. Automated grading of diabetic retinopathy in retinal fundus images using deep learning. In Proceedings of the 2019 IEEE International Conference on Signal and Image Processing Applications (ICSIPA), Kuala Lumpur, Malaysia, 17–19 September 2019; pp. 73–77.

- Li, X.; Shen, L.; Shen, M.; Tan, F.; Qiu, C.S. Deep learning based early stage diabetic retinopathy detection using optical coherence tomography. Neurocomputing 2019, 369, 134–144.

- Heisler, M.; Karst, S.; Lo, J.; Mammo, Z.; Yu, T.; Warner, S.; Maberley, D.; Beg, M.F.; Navajas, E.V.; Sarunic, M.V. Ensemble deep learning for diabetic retinopathy detection using optical coherence tomography angiography. Transl. Vis. Sci. Technol. 2020, 9, 20.

- Alam, M.; Zhang, Y.; Lim, J.I.; Chan, R.V.; Yang, M.; Yao, X. Quantitative optical coherence tomography angiography features for objective classification and staging of diabetic retinopathy. Retina 2020, 40, 322–332.

- Zang, P.; Gao, L.; Hormel, T.T.; Wang, J.; You, Q.; Hwang, T.S.; Jia, Y. DcardNet: Diabetic retinopathy classification at multiple levels based on structural and angiographic optical coherence tomography. IEEE Trans. Biomed. Eng. 2020, 68, 1859–1870.

- Ghazal, M.; Ali, S.S.; Mahmoud, A.H.; Shalaby, A.M.; El-Baz, A. Accurate detection of non-proliferative diabetic retinopathy in optical coherence tomography images using convolutional neural networks. IEEE Access 2020, 8, 34387–34397.

- Sandhu, H.S.; Elmogy, M.; Sharafeldeen, A.T.; Elsharkawy, M.; El-Adawy, N.; Eltanboly, A.; Shalaby, A.; Keynton, R.; El-Baz, A. Automated diagnosis of diabetic retinopathy using clinical biomarkers, optical coherence tomography, and optical coherence tomography angiography. Am. J. Ophthalmol. 2020, 216, 201–206.

- Narayanan, B.N.; Hardie, R.C.; De Silva, M.S.; Kueterman, N.K. Hybrid machine learning architecture for automated detection and grading of retinal images for diabetic retinopathy. J. Med. Imaging 2020, 7, 034501.

- Shankar, K.; Sait, A.R.W.; Gupta, D.; Lakshmanaprabu, S.; Khanna, A.; Pandey, H.M. Automated detection and classification of fundus diabetic retinopathy images using synergic deep learning model. Pattern Recognit. Lett. 2020, 133, 210–216.

- Ryu, G.; Lee, K.; Park, D.; Park, S.H.; Sagong, M. A deep learning model for identifying diabetic retinopathy using optical coherence tomography angiography. Sci. Rep. 2021, 11, 1–9.

- He, A.; Li, T.; Li, N.; Wang, K.; Fu, H. CABNet: Category Attention Block for Imbalanced Diabetic Retinopathy Grading. IEEE Trans. Med. Imaging 2021, 40, 143–153.

- Li, T.; Gao, Y.; Wang, K.; Guo, S.; Liu, H.; Kang, H. Diagnostic assessment of deep learning algorithms for diabetic retinopathy screening. Inf. Sci. 2019, 501, 511–522.

- Saeed, F.; Hussain, M.; Aboalsamh, H.A. Automatic diabetic retinopathy diagnosis using adaptive fine-tuned convolutional neural network. IEEE Access 2021, 9, 41344–41359.