State of the Art of BIM Integration with Sensing Technologies in Construction Progress Monitoring

Abstract

1. Introduction

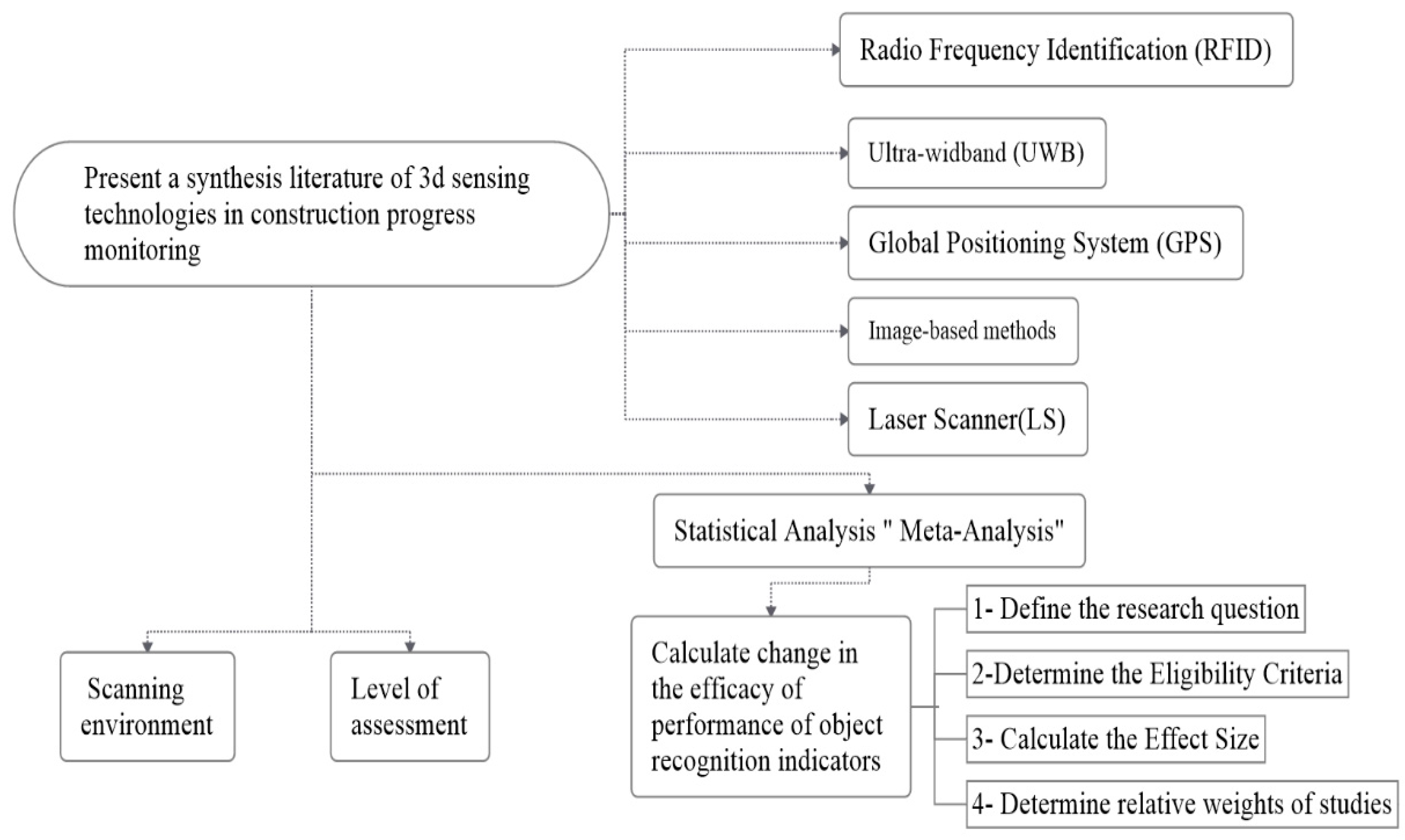

2. Research Outlines

- Scanning environment: the environment where 3D sensing technology captures the necessary as-built data (indoors, outdoors, or both)

- Level of assessment: the level at which the as-built model is compared with the as-planned model [three-dimensional (3D), four-dimensional (4D), or five-dimensional (5D)]

- Performance of object recognition indicators [recall, accuracy, and precision] (see Section 3)

3. Overview on 3D Sensing Technologies in Construction

3.1. Radio Frequency Identification (RFID)

3.2. Ultra-Wideband (UWB)

3.3. Global Positioning System (GPS)

3.4. Image-Based Methods

3.5. Laser Scanners

4. Critical Analysis for Previous Studies

4.1. Summary of the Current State of the Art

4.2. Statistical Analysis Using Meta-Analysis

4.2.1. Meta-Regression Methods and Procedures

4.2.2. Evaluation of Effect Size and Relative Weight

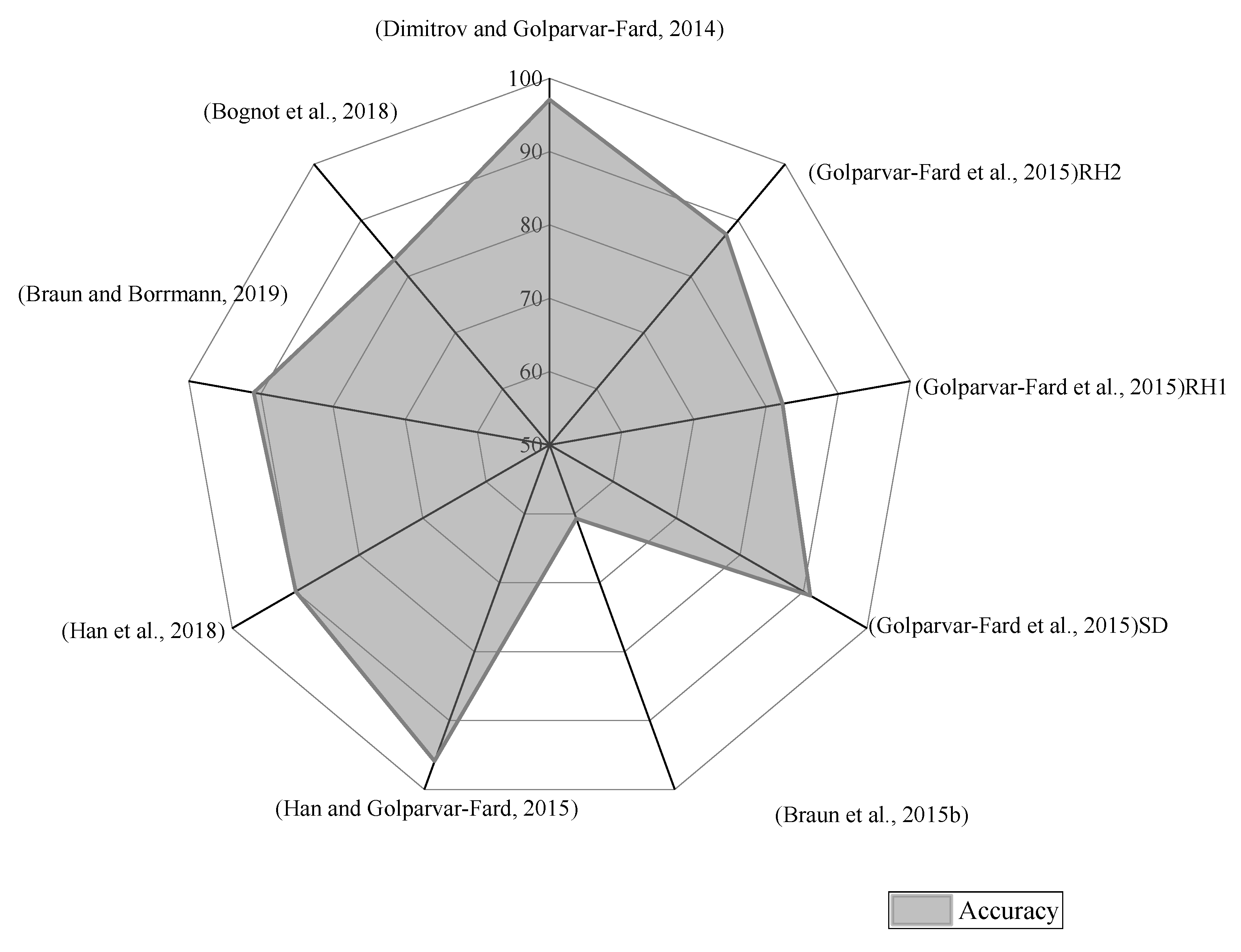

4.2.3. Deliverables

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

1. Fischer, W.A.; Hemphill, W.R.; Kover, A. Progress in remote sensing. Photogrammetria 1976, 32, 33–72.

2. Colwell, R.N. Uses and limitations of multispectral remote sensing. In Proceedings of the Fourth Symposium on Remote Sensing of Environment, Ann Arbor, MI, USA, 12–14 April 1966.

3. Wynne, R.H. Introduction to Remote Sensing; Guilford Press: New York, NY, USA, 2011.

4. Yi, W.; Chan, A.P. Critical review of labor productivity research in construction journals. J. Manag. Eng. 2014, 30, 214–225.

5. Zavadskas, E.K.; Vilutienė, T.; Turskis, Z.; Šaparauskas, J. Multi-criteria analysis of Projects’ performance in construction. Arch. Civ. Mech. Eng. 2014, 14, 114–121.

6. Azhar, S. Building information modeling (BIM): Trends, benefits, risks, and challenges for the AEC industry. Leadersh. Manag. Eng. 2011, 11, 241–252.

7. Hichri, N.; Stefani, C.; De Luca, L.; Veron, P.; Hamon, G. From point cloud to BIM: A survey of existing approaches. In Proceedings of the XXIV International CIPA Symposium, Strasbourg, France, 2–6 September 2013.

8. Baik, A.H.A.; Yaagoubi, R.; Boehm, J. Integration of Jeddah historical BIM and 3D GIS for documentation and restoration of historical monument. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-5/W7, 29–34.

9. Wang, J.; Sun, W.; Shou, W.; Wang, X.; Wu, C.; Chong, H.Y.; Liu, Y.; Sun, C. Integrating BIM and LiDAR for real-time construction quality control. J. Intell. Robot. Syst. 2015, 79, 417–432.

10. Turkan, Y.; Bosche, F.; Haas, C.T.; Haas, R. Automated progress tracking using 4D schedule and 3D sensing technologies. Autom. Constr. 2012, 22, 414–421.

11. Patel, T.; Guo, B.H.; Zou, Y. A scientometric review of construction progress monitoring studies. Eng. Constr. Archit. Manag. 2021.

12. Wen, Q.J.; Ren, Z.J.; Lu, H.; Wu, J.F. The progress and trend of BIM research: A bibliometrics-based visualization analysis. Autom. Constr. 2021, 124, 103558.

13. Mirzaei, K.; Arashpour, M.; Asadi, E.; Masoumi, H.; Bai, Y.; Bernard, A. 3D point cloud data processing with machine learning for construction and infrastructure applications: A comprehensive review. Adv. Eng. Inform. 2022, 51, 101501.

14. Wang, Q.; Kim, M.K. Applications of 3D point cloud data in the construction industry: A fifteen-year review from 2004 to 2018. Adv. Eng. Inform. 2019, 39, 306–319.

15. Ekanayake, B.; Wong, J.K.W.; Fini, A.A.F.; Smith, P. Computer vision-based interior construction progress monitoring: A literature review and future research directions. Autom. Constr. 2021, 127, 103705.

16. Alizadehsalehi, S.; Yitmen, I. A concept for automated construction progress monitoring: Technologies adoption for benchmarking project performance control. Arab. J. Sci. Eng. 2019, 44, 4993–5008.

17. Golparvar-Fard, M.; Peña-Mora, F.; Savarese, S. D4AR–a 4-dimensional augmented reality model for automating construction progress monitoring data collection, processing and communication. J. Inf. Technol. Constr. 2009, 14, 129–153.

18. Hamledari, H.; McCabe, B.; Davari, S.; Shahi, A.; Rezazadeh Azar, E.; Flager, F. Evaluation of computer vision- and 4D BIM-based construction progress tracking on a UAV platform. In Proceedings of the 6th CSCE/ASCE/CRC, Vancouver, BC, Canada, 31 May–3 June 2017.

19. Braun, A.; Tuttas, S.; Borrmann, A.; Stilla, U. Automated progress monitoring based on photogrammetric point clouds and precedence relationship graphs. In Proceedings of the 32nd International Symposium on Automation and Robotics in Construction, Oulu, Finland, 15–18 June 2015; pp. 1–7.

20. Bosche, F.; Haas, C.T.; Akinci, B. Automated recognition of 3D CAD objects in site laser scans for project 3D status visualization and performance control. J. Comput. Civ. Eng. 2009, 23, 311–318.

21. Dimitrov, A.; Golparvar-Fard, M. Vision-based material recognition for automated monitoring of construction progress and generating building information modeling from unordered site image collections. Adv. Eng. Inform. 2014, 28, 37–49.

22. Hammad, A.; Motamedi, A. Framework for lifecycle status tracking and visualization of constructed facility components. In Proceedings of the 7th International Conference on Construction Applications of Virtual Reality, University Park, TX, USA, 22–23 October 2007; pp. 224–232.

23. Hajian, H.; Becerik-Gerber, B. A research outlook for real-time project information management by integrating advanced field data acquisition systems and building information modeling. In Computing in Civil Engineering, Proceedings of the ASCE International Workshop on Computing in Civil Engineering, Austin, TX, USA, 24–27 June 2009; Caldas, C.H., O’Brien, W.J., Eds.; American Society of Civil Engineers: Reston, VA, USA, 2009; pp. 83–94.

24. Motamedi, A.; Hammad, A. RFID-assisted lifecycle management of building components using BIM data. In Proceedings of the 26th International Symposium on Automation and Robotics in Construction, Austin, TX, USA, 24–27 June 2009; pp. 109–116.

25. Xie, H.; Shi, W.; Issa, R.R. Using RFID and real-time virtual reality simulation for optimization in steel construction. J. Inf. Technol. Constr. 2011, 16, 291–308.

26. Fang, Y.; Cho, Y.K.; Zhang, S.; Perez, E. Case study of BIM and cloud-enabled real-time RFID indoor localization for construction management applications. J. Constr. Eng. Manag. 2016, 142, 05016003.

27. Li, C.Z.; Zhong, R.Y.; Xue, F.; Xu, G.; Chen, K.; Huang, G.G.; Shen, G.Q. Integrating RFID and BIM technologies for mitigating risks and improving schedule performance of prefabricated house construction. J. Clean. Prod. 2017, 165, 1048–1062.

28. Cho, Y.K.; Youn, J.H.; Martinez, D. Error modeling for an untethered ultra-wideband system for construction indoor asset tracking. Autom. Constr. 2010, 19, 43–54.

29. Shahi, A.; Cardona, J.M.; Haas, C.T.; West, J.S.; Caldwell, G.L. Activity-based data fusion for automated progress tracking of construction projects. In Proceedings of the Construction Research Congress 2012: Construction Challenges in a Flat World, West Lafayette, IN, USA, 21–23 May 2012; pp. 838–847.

30. Shahi, A.; Safa, M.; Haas, C.T.; West, J.S. Data fusion process management for automated construction progress estimation. J. Comput. Civ. Eng. 2015, 29, 04014098.

31. Rashid, K.M.; Louis, J.; Fiawoyife, K.K. Wireless electric appliance control for smart buildings using indoor location tracking and BIM-based virtual environments. Autom. Constr. 2019, 101, 48–58.

32. Taneja, S.; Akinci, B.; Garrett, J.H.; Soibelman, L.; Ergen, E.; Pradhan, A.; Anil, E.B. Sensing and field data capture for construction and facility operations. J. Constr. Eng. Manag. 2011, 137, 870–881.

33. Behnam, A.; Wickramasinghe, D.C.; Ghaffar, M.A.A.; Vu, T.T.; Tang, Y.H.; Isa, H.B.M. Automated progress monitoring system for linear infrastructure projects using satellite remote sensing. Autom. Constr. 2016, 68, 114–127.

34. Golparvar-Fard, M.; Savarese, S.; Peña-Mora, F. Automated model-based recognition of progress using daily construction photographs and IFC-based 4D models. In Construction Research Congress 2010: Innovation for Reshaping Construction Practice, Proceedings of the 2010 Construction Research Congress, Banff, AB, Canada, 8–10 May 2010; Ruwanpura, J., Mohamed, Y., Lee, S.H., Eds.; American Society of Civil Engineers: Reston, VA, USA, 2010; pp. 51–60.

35. Roh, S.; Aziz, Z.; Pena-Mora, F. An object-based 3D walk-through model for interior construction progress monitoring. Autom. Constr. 2011, 20, 66–75.

36. Tuttas, S.; Braun, A.; Borrmann, A.; Stilla, U. Comparision of photogrammetric point clouds with BIM building elements for construction progress monitoring. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, XL-3, 341–345.

37. Golparvar-Fard, M.; Pena-Mora, F.; Savarese, S. Automated progress monitoring using unordered daily construction photographs and IFC-based building information models. J. Comput. Civ. Eng. 2015, 29, 04014025.

38. Braun, A.; Tuttas, S.; Borrmann, A.; Stilla, U. A concept for automated construction progress monitoring using BIM-based geometric constraints and photogrammetric point clouds. J. Inf. Technol. Constr. 2015, 20, 68–79.

39. Teizer, J. Status quo and open challenges in vision-based sensing and tracking of temporary resources on infrastructure construction sites. Adv. Eng. Inform. 2015, 29, 225–238.

40. Pazhoohesh, M.; Zhang, C. Automated construction progress monitoring using thermal images and wireless sensor networks. In Proceedings of the CSCE 2015, Building on Our Growth Opportunities, Regina, SK, Canada, 27–30 May 2015; p. 101.

41. Han, K.K.; Golparvar-Fard, M. Appearance-based material classification for monitoring of operation-level construction progress using 4D BIM and site photologs. Autom. Constr. 2015, 53, 44–57.

42. Wang, Z.; Zhang, Q.; Yang, B.; Wu, T.; Lei, K.; Zhang, B.; Fang, T. Vision-based framework for automatic progress monitoring of precast walls by using surveillance videos during the construction phase. J. Comput. Civ. Eng. 2021, 35, 04020056.

43. Arif, F.; Khan, W.A. Smart progress monitoring framework for building construction elements using videography–MATLAB–BIM integration. Int. J. Civ. Eng. 2021, 19, 717–732.

44. Pazhoohesh, M.; Zhang, C.; Hammad, A.; Taromi, Z.; Razmjoo, A. Infrared thermography for a quick construction progress monitoring approach in concrete structures. Archit. Struct. Constr. 2021, 1, 91–106.

45. Wu, Y.; Kim, H.; Kim, C.; Han, S.H. Object recognition in construction-site images using 3D CAD-based filtering. J. Comput. Civ. Eng. 2010, 24, 56–64.

46. Han, K.; Lin, J.; Golparvar-Fard, M. A formalism for utilization of autonomous vision-based systems and integrated project models for construction progress monitoring. In Proceedings of the Conference on Autonomous and Robotic Construction of Infrastructure, Ames, IA, USA, 2–3 June 2015.

47. Han, K.; Degol, J.; Golparvar-Fard, M. Geometry- and appearance-based reasoning of construction progress monitoring. J. Constr. Eng. Manag. 2018, 144, 04017110.

48. Braun, A.; Borrmann, A. Combining inverse photogrammetry and BIM for automated labeling of construction site images for machine learning. Autom. Constr. 2019, 106, 102879.

49. Álvares, J.S.; Costa, D.B. Construction progress monitoring using unmanned aerial system and 4D BIM. In Proceedings of the 27th Annual Conference of the International Group for Lean Construction (IGLC), Dublin, Ireland, 3–5 July 2019; pp. 1445–1456.

50. Asadi, K.; Ramshankar, H.; Noghabaei, M.; Han, K. Real-time image localization and registration with BIM using perspective alignment for indoor monitoring of construction. J. Comput. Civ. Eng. 2019, 33, 04019031.

51. Bognot, J.R.; Candido, C.G.; Blanco, A.C.; Montelibano, J.R.Y. Building Construction Progress Monitoring Using Unmanned Aerial System (UAS), Low-Cost Photogrammetry, and Geographic Information System (GIS). Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 4, 41–47.

52. Samsami, R.; Mukherjee, A.; Brooks, C.N. Mapping Unmanned Aerial System Data onto Building Information Modeling Parameters for Highway Construction Progress Monitoring. Transp. Res. Rec. 2021, 2676, 669–682.

53. Jacob-Loyola, N.; Rivera, M.L.; Herrera, R.F.; Atencio, E. Unmanned aerial vehicles (UAVs) for physical progress monitoring of construction. Sensors 2021, 21, 4227.

54. Tuttas, S.; Braun, A.; Borrmann, A.; Stilla, U. Acquisition and consecutive registration of photogrammetric point clouds for construction progress monitoring using a 4D BIM. J. Photogramm. Remote Sens. Geoinf. Sci. 2017, 85, 3–15.

55. Kim, H.E.; Kang, S.H.; Kim, K.; Lee, Y. Total variation-based noise reduction image processing algorithm for confocal laser scanning microscopy applied to activity assessment of early carious lesions. Appl. Sci. 2020, 10, 4090.

56. Kim, C.; Son, H.; Kim, C. Automated construction progress measurement using a 4D building information model and 3D data. Autom. Constr. 2013, 31, 75–82.

57. Turkan, Y.; Bosché, F.; Haas, C.T.; Haas, R. Tracking secondary and temporary concrete construction objects using 3D imaging technologies. In Computing in Civil Engineering, Proceedings of the ASCE International Workshop on Computing in Civil Engineering, Los Angeles, CA, USA, 23–25 June 2013; Brilakis, I., Lee, S.H., Becerik-Gerber, B., Eds.; American Society of Civil Engineers: Reston, VA, USA, 2013; pp. 749–756.

58. Zhang, C.; Arditi, D. Automated progress control using laser scanning technology. Autom. Constr. 2013, 36, 108–116.

59. Bosché, F.; Guillemet, A.; Turkan, Y.; Haas, C.T.; Haas, R. Tracking the built status of MEP works: Assessing the value of a Scan-vs-BIM system. J. Comput. Civ. Eng. 2014, 28, 05014004.

60. Bosché, F.; Ahmed, M.; Turkan, Y.; Haas, C.T.; Haas, R. The value of integrating Scan-to-BIM and Scan-vs-BIM techniques for construction monitoring using laser scanning and BIM: The case of cylindrical MEP components. Autom. Constr. 2015, 49, 201–213.

61. Son, H.; Bosché, F.; Kim, C. As-built data acquisition and its use in production monitoring and automated layout of civil infrastructure: A survey. Adv. Eng. Inform. 2015, 29, 172–183.

62. Pučko, Z.; Šuman, N.; Rebolj, D. Automated continuous construction progress monitoring using multiple workplace real time 3D scans. Adv. Eng. Inform. 2018, 38, 27–40.

63. Maalek, R.; Lichti, D.D.; Ruwanpura, J.Y. Automatic recognition of common structural elements from point clouds for automated progress monitoring and dimensional quality control in reinforced concrete construction. Remote Sens. 2019, 11, 1102.

64. Puri, N.; Turkan, Y. Bridge construction progress monitoring using lidar and 4D design models. Autom. Constr. 2019, 109, 102961.

65. Khairadeen Ali, A.; Lee, O.J.; Lee, D.; Park, C. Remote indoor construction progress monitoring using extended reality. Sustainability 2021, 13, 2290.

66. Reja, V.K.; Bhadaniya, P.; Varghese, K.; Ha, Q. Vision-Based Progress Monitoring of Building Structures Using Point-Intensity Approach. In Proceedings of the 38th International Symposium on Automation and Robotics in Construction, Dubai, United Arab Emirates, 2–4 November 2021.

67. Phillips, R.O.; Ulleberg, P.; Vaa, T. Meta-analysis of the effect of road safety campaigns on accidents. Accid. Anal. Prev. 2011, 43, 1204–1218.

68. Cumming, G. Understanding the New Statistics: Effect Sizes, Confidence Intervals, and Meta-Analysis; Taylor & Francis Group: Abingdon, UK, 2013.

69. Høye, A.; Elvik, R. Publication Bias in Road Safety Evaluation: How can It be Detected and how Common is It? Transp. Res. Rec. 2010, 2147, 1–8.

70. Duval, S.; Tweedie, R. A nonparametric “trim and fill” method of accounting for publication bias in meta-analysis. J. Am. Stat. Assoc. 2000, 95, 89–98.

71. Higgins, J.P.; Thompson, S.G. Controlling the risk of spurious findings from meta-regression. Stat. Med. 2004, 23, 1663–1682.

72. Lipsey, M.W.; Wilson, D.B. Practical Meta-Analysis; SAGE Publications Inc.: Newbury Park, CA, USA, 2001.

73. Christensen, C.M. The Innovator’s Dilemma: When New Technologies Cause Great Firms to Fail; Harvard Business Review Press: Boston, MA, USA, 2013.

| Actual | Prediction: True | Prediction: False |
|---|---|---|
| Positive | True Positive (TP): the presence of a point cloud is correctly predicted | False Negative (FN): a test fails to reveal the presence of a point cloud |
| Negative | False Positive (FP): a test incorrectly shows a point cloud is present | True Negative (TN): a test correctly predicts the absence of a point cloud |
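The three object recognition indicators used in this review (recall, accuracy, and precision) can be computed from the four outcomes in the table above. The sketch below uses the standard textbook definitions; the paper's own formulas are not reproduced in this excerpt, so treat the definitions as an assumption. The function name `recognition_metrics` and the example counts are hypothetical.

```python
def recognition_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Return recall, precision, and accuracy as fractions in [0, 1].

    Standard definitions (assumed, not quoted from the paper):
      recall    = TP / (TP + FN)
      precision = TP / (TP + FP)
      accuracy  = (TP + TN) / (TP + FP + FN + TN)
    """
    total = tp + fp + fn + tn
    if total == 0 or (tp + fn) == 0 or (tp + fp) == 0:
        raise ValueError("counts must give non-zero denominators")
    return {
        "recall": tp / (tp + fn),
        "precision": tp / (tp + fp),
        "accuracy": (tp + tn) / total,
    }


# Hypothetical counts for illustration only; not taken from any reviewed study.
metrics = recognition_metrics(tp=90, fp=5, fn=10, tn=95)
for name, value in metrics.items():
    print(f"{name}: {value * 100:.1f}%")
```

Multiplying by 100 gives the percentages in the form reported in the comparison tables.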

| No. | References | As-Planned vs. As-Built | | | Performance of Object(s) Recognition | | | Environment | Notes |
|---|---|---|---|---|---|---|---|---|---|
| | | 3D | 4D | 5D | Recall (%) | Accuracy (%) | Precision (%) | | |
| 1 | [22] | ✓ | ✓ | ⊠ | N/A | N/A | N/A | Indoor | |
| 2 | [23] | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Indoor+Outdoor | Performed using both RFID and a laser scanner |
| 3 | [24] | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Indoor | |
| 4 | [25] | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Indoor | |
| 5 | [26] | ✓ | ⊠ | ⊠ | 89.6 | 88.1 | 84.7 | Indoor | |
| 6 | [27] | ✓ | ✓ | ⊠ | N/A | N/A | N/A | Indoor | |

| No. | References | As-Planned vs. As-Built | | | Performance of Object(s) Recognition | | | Environment | Notes |
|---|---|---|---|---|---|---|---|---|---|
| | | 3D | 4D | 5D | Recall (%) | Accuracy (%) | Precision (%) | | |
| 1 | [29] | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Indoor+Outdoor | |
| 2 | [30] | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Outdoor | Performed using both UWB and a laser scanner |
| 3 | [31] | ✓ | ⊠ | ⊠ | N/A | 100, 75 | N/A | Indoor | The case study was conducted in two phases, one with an LED indicator and one without |

| No. | References | As-Planned vs. As-Built | | | Performance of Object(s) Recognition | | | Environment | Notes |
|---|---|---|---|---|---|---|---|---|---|
| | | 3D | 4D | 5D | Recall (%) | Accuracy (%) | Precision (%) | | |
| 1 | [33] | ✓ | ⊠ | ⊠ | 84.8, 73.1, 81.1, 97.8 | 80.3, 72.1, 76.9, 94.2 | 89.6, 72.7, 83.7, 95.7 | Outdoor | Conducted using both GPS and an image-based method |
| | | | | | 84.2 | 80.9 | 85.4 | | Average of the four values above |

| References | Equipment | As-Planned vs. As-Built | Performance of Object(s) Recognition | Environment | Notes | ||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| UAV | Camera | 3D | 4D | 5D | Recall (%) | Accuracy (%) | Precision (%) | ||||

| 1 | [34] | ✓ | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Outdoor | ||

| 2 | [35] | ✓ | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Indoor | ||

| 3 | [21] | ✓ | ✓ | ⊠ | ⊠ | N/A | 97.1 | N/A | Outdoor | ||

| 4 | [36] | ✓ | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Outdoor | ||

| 5 | [37] | ✓ | ✓ | ✓ | ⊠ | N/A | 87.5 82.89 91.05 | N/A | Outdoor | Golparvar-Fard performed three case studies, code-named RH1, RH2, and SD | |

| 6 | [19] | ✓ | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Outdoor | ||

| 7 | [46] | ✓ | ✓ | ✓ | ⊠ | N/A | N/A | N/A | Outdoor | It was conducted using image-based methods and laser scanning | |

| 8 | [38] | ✓ | ✓ | ✓ | ⊠ | N/A | 60.7 | N/A | Outdoor | ||

| 9 | [40] | ✓ | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Outdoor | ||

| 10 | [39] | ✓ | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Outdoor | It was conducted using both image-based method and laser scanning | |

| 11 | [41] | ✓ | ✓ | ✓ | ⊠ | N/A | 95.9 | N/A | Outdoor | ||

| 12 | [54] | ✓ | ✓ | ✓ | ⊠ | ⊠ | N/A N/A | N/A N/A | N/A N/A | Outdoor | |

| 13 | [18] | ✓ | ✓ | ✓ | ⊠ | N/A | N/A | N/A | Indoor | ||

| 14 | [47] | ✓ | ✓ | ✓ | ⊠ | N/A | 90 | N/A | Outdoor | It was conducted using Image-based and laser scanning methods | |

| 15 | [48] | ✓ | ✓ | ✓ | ⊠ | N/A | 91 | N/A | Outdoor | ||

| 16 | [49] | ✓ | ✓ | ✓ | ⊠ | N/A | N/A | N/A | Outdoor | ||

| 17 | [50] | ✓ | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Indoor | ||

| 18 | [51] | ✓ | ✓ | ✓ | ⊠ | N/A | 82~84 | 50~72 | Outdoor | ||

| 19 | [55] | ✓ | ✓ | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Outdoor | It was conducted using both image-based method and laser scanning |

| 20 | [42] | ✓ | ✓ | ⊠ | ⊠ | 79.5 79.1 | N/A N/A | 93.9 90.7 | Outdoor | There were two case studies, Project 1 and project 2 | |

| 21 | [43] | ✓ | ✓ | ✓ | ⊠ | N/A | N/A | N/A | Outdoor | ||

| 22 | [52] | ✓ | ✓ | ✓ | ⊠ | N/A | N/A | N/A | Outdoor | ||

| 23 | [53] | ✓ | ✓ | ✓ | ⊠ | N/A | N/A | N/A | Outdoor | ||

| 24 | [44] | ✓ | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Outdoor | ||

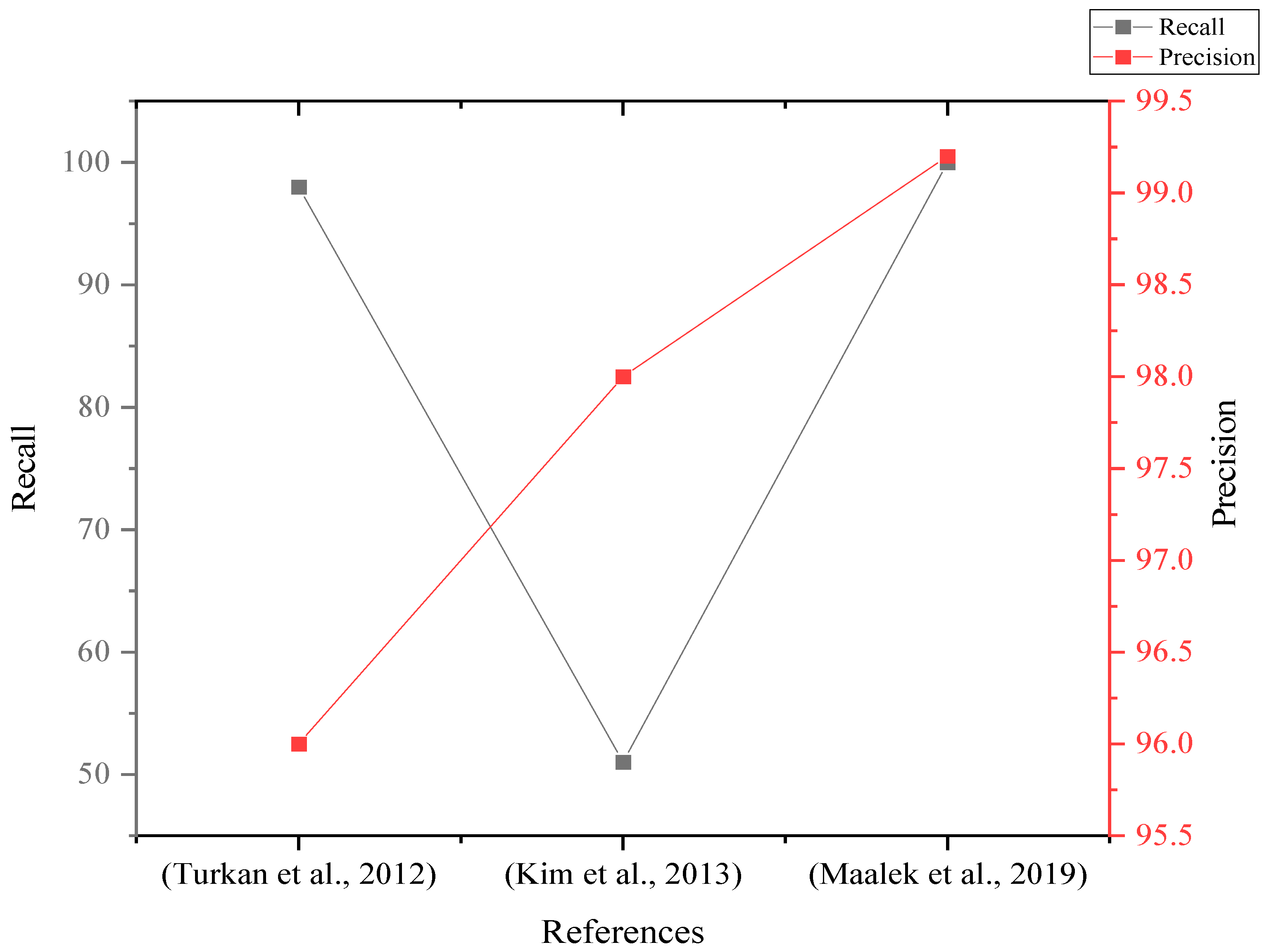

| No. | References | As-Planned vs. As-Built | | | Performance of Object(s) Recognition | | | Environment | Notes |
|---|---|---|---|---|---|---|---|---|---|
| | | 3D | 4D | 5D | Recall (%) | Accuracy (%) | Precision (%) | | |
| 1 | [23] | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Indoor+Outdoor | Mentioned before, in Table 2 |
| 2 | [10] | ✓ | ✓ | ⊠ | 98 | N/A | 96 | Outdoor | |
| 3 | [57] | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Outdoor | |
| 4 | [56] | ✓ | ✓ | ⊠ | 51 | N/A | 98 | Outdoor | |
| 5 | [58] | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Indoor | |
| 6 | [59] | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Indoor | |
| 7 | [60] | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Indoor | |
| 8 | [30] | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Outdoor | Mentioned before, in Table 3 |
| 9 | [61] | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Indoor+Outdoor | |
| 10 | [46] | ✓ | ✓ | ⊠ | N/A | N/A | N/A | Outdoor | Mentioned before, in Table 5 |
| 11 | [39] | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Outdoor | Mentioned before, in Table 5 |
| 12 | [62] | ✓ | ✓ | ⊠ | N/A | N/A | N/A | Indoor | |
| 13 | [47] | ✓ | ⊠ | ⊠ | N/A | 68 | N/A | Outdoor | Mentioned before, in Table 5 |
| 14 | [63] | ✓ | ✓ | ✓ | 100 | 99.3 | 99.2 | Outdoor | The set of results is only for columns |
| 15 | [64] | ✓ | ✓ | ⊠ | N/A | N/A | N/A | Outdoor | |
| 16 | [55] | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Outdoor | Mentioned before, in Table 5 |
| 17 | [65] | ✓ | ⊠ | ⊠ | N/A | N/A | N/A | Indoor | |
| 18 | [66] | ✓ | ✓ | ⊠ | N/A | N/A | N/A | Outdoor | |

| No. | Study | Effect Size (ES) | Relative Weight | % Changes in Object Recognition Indicators’ Performance | | | p-Value |
|---|---|---|---|---|---|---|---|
| | | | | Lower 95% | Estimate | Upper 95% | |
| 1 | [26] | −0.56 | 0.161 | +0.27 | +0.6 | +1.17 | |
| 2 | [33] | 2.78 | 0.159 | +7.30 | +16 | +36 | |
| 3 | [38] | 1.79 | 0.135 | +1.2 | +6 | +30.63 | |
| 4 | [49] | 2.93 | 0.168 | +15 | +19 | +23.7 | |
| 5 | [57] | 4.79 | 0.129 | +19.6 | +120 | +735 | |
| 6 | [56] | 1.66 | 0.138 | +1 | +5.3 | +24.75 | |
| 7 | [64] | 4.89 | 0.109 | +7.29 | +133 | +1480 | |
| | | 2.48 | | +3.102 | +11.84 | +45.22 | 0.0003 |
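The summary row of the meta-analysis table can be roughly reproduced from the per-study values. The sketch below rests on two assumptions that are inferred from the numbers in the table rather than stated in this excerpt: that the pooled effect size is the mean of the study effect sizes weighted by relative weight, and that each "Estimate" is the exponential of the corresponding effect size. The function names are hypothetical.

```python
import math

# (study reference, effect size, relative weight), copied from the table above
studies = [
    ("[26]", -0.56, 0.161),
    ("[33]", 2.78, 0.159),
    ("[38]", 1.79, 0.135),
    ("[49]", 2.93, 0.168),
    ("[57]", 4.79, 0.129),
    ("[56]", 1.66, 0.138),
    ("[64]", 4.89, 0.109),
]


def pooled_effect_size(rows):
    """Weighted mean of effect sizes (assumed pooling rule)."""
    total_weight = sum(weight for _, _, weight in rows)
    return sum(es * weight for _, es, weight in rows) / total_weight


def effect_size_to_estimate(es):
    """Back-transform a log-scale effect size (assumed relation: exp)."""
    return math.exp(es)


pooled = pooled_effect_size(studies)
print(f"pooled effect size: {pooled:.2f}")
print(f"pooled estimate:    {effect_size_to_estimate(pooled):.2f}")
for ref, es, _ in studies:
    print(f"{ref}: exp({es}) = {effect_size_to_estimate(es):.1f}")
```

Under these assumptions the pooled effect size comes out at roughly 2.47, close to the reported 2.48, and the per-study back-transformed values land near the "Estimate" column. The small differences are consistent with rounding of the published inputs.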

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

ElQasaby, A.R.; Alqahtani, F.K.; Alheyf, M. State of the Art of BIM Integration with Sensing Technologies in Construction Progress Monitoring. Sensors 2022, 22, 3497. https://doi.org/10.3390/s22093497
