Abstract

Augmented reality (AR) has gained enormous popularity and acceptance in the past few years. AR combines different immersive experiences and solutions that serve as integrated components, which together make augmented reality a workable and adaptive solution for many realms. These components include tracking, which keeps track of the point of reference so that virtual objects can be made visible in a real scene, and display technologies, which combine the virtual and real worlds for the user's eye. Authoring tools provide platforms for developing AR applications by giving access to low-level libraries, which in turn interact with tracking sensors, cameras, and other hardware. In addition, advances in distributed computing and collaborative augmented reality, in which various participants collaborate in a shared AR setting, also require stable solutions. The authors of this research have explored many solutions in this regard and present a comprehensive review to aid research and support different business transformations. During the course of this study, however, we identified a lack of security solutions in various areas of collaborative AR (CAR), specifically in distributed trust management. This research study therefore also proposes a trusted CAR architecture, with a use case in tourism, that can serve as a model for researchers interested in building secure AR-based remote communication sessions.

1. Introduction

Augmented reality (AR) is one of the fastest-growing immersive experiences of the 21st century. AR has brought a revolution to different realms, including health and medicine, teaching and learning, tourism, design, manufacturing, and other similar industries, whose acceptance has accelerated the growth of AR in an unprecedented manner [1,2,3]. According to a report from September 2022, the market size of AR and VR reached USD 27.6 billion in 2021 and is estimated to reach USD 856.2 billion by the end of 2031 [4]. Large companies widely use AR-based technologies. For instance, Amazon, one of the leading online shopping websites, uses this technology to make it easier for customers to decide which furniture they want to buy. The rise of mobile phone technology also accelerated the popularization of AR. Earlier mobile phones were not capable enough to run these applications because of their limited graphics capabilities; nowadays, however, smart devices can easily run AR-based applications. A great deal of research has been done on mobile AR. Lee et al. [5] developed a user-based design interface for educational purposes in mobile AR and evaluated it with fourth-grade elementary students.

The adoption of AR in its various forms has a long history. This paper presents an overview of the integrated components that make up the working framework of AR and collects, analyzes, and presents the latest developments in these components, during a period in which smart devices and the overall user experience have changed drastically [6]. Tracking technologies [7] are the building blocks of AR: they establish a point of reference for movement and create an environment in which virtual and real objects are presented together. To achieve a realistic experience with augmented objects, several tracking technologies have been presented, including sensor-based [8], markerless, marker-based [9,10], and hybrid tracking. Among these, hybrid tracking technologies are the most adaptive. As part of the framework constructed in this study, simultaneous localization and mapping (SLAM) and inertial tracking technologies are combined. SLAM collects points in the real scene through cameras, while the point of reference is created using inertial tracking. The virtual objects are then inserted at the relevant points of reference to create the augmented scene. Moreover, this paper analyzes and discusses in detail the different tracking technologies according to their use in different realms, i.e., education, industry, and medicine. Magnetic tracking is widely used in AR systems for medicine, maintenance, and manufacturing. Vision-based tracking is mostly used in mobile phones and tablets because they have both a screen and a camera, which makes them the best platform for AR. In addition, GPS tracking is useful in the fields of military, gaming, and tourism. These tracking technologies, along with others, are explained in detail in Section 3.

Once the points of reference are collected through tracking, another factor that requires significant accuracy is determining at which particular points the virtual objects are to be mixed with the real environment. This is the role of display technologies, which give users of augmented reality an environment in which real and virtual objects are displayed together visually. Display technologies are therefore one of the key components of AR. This research identifies state-of-the-art display technologies that help to provide a quality view of real and virtual objects. Augmented reality displays can be divided into various categories, all of which have the same task: to show the merged image of real and virtual content to the user's eye. The authors have categorized the latest optical display technologies following the advancements in holographic optical elements (HOEs). Other categories of AR displays include video-based, eye-multiplexed, and projected onto a physical surface. Optical see-through displays have two sub-categories: free-space combiners and waveguide combiners [11,12]. Display technologies are described in detail in Section 4.

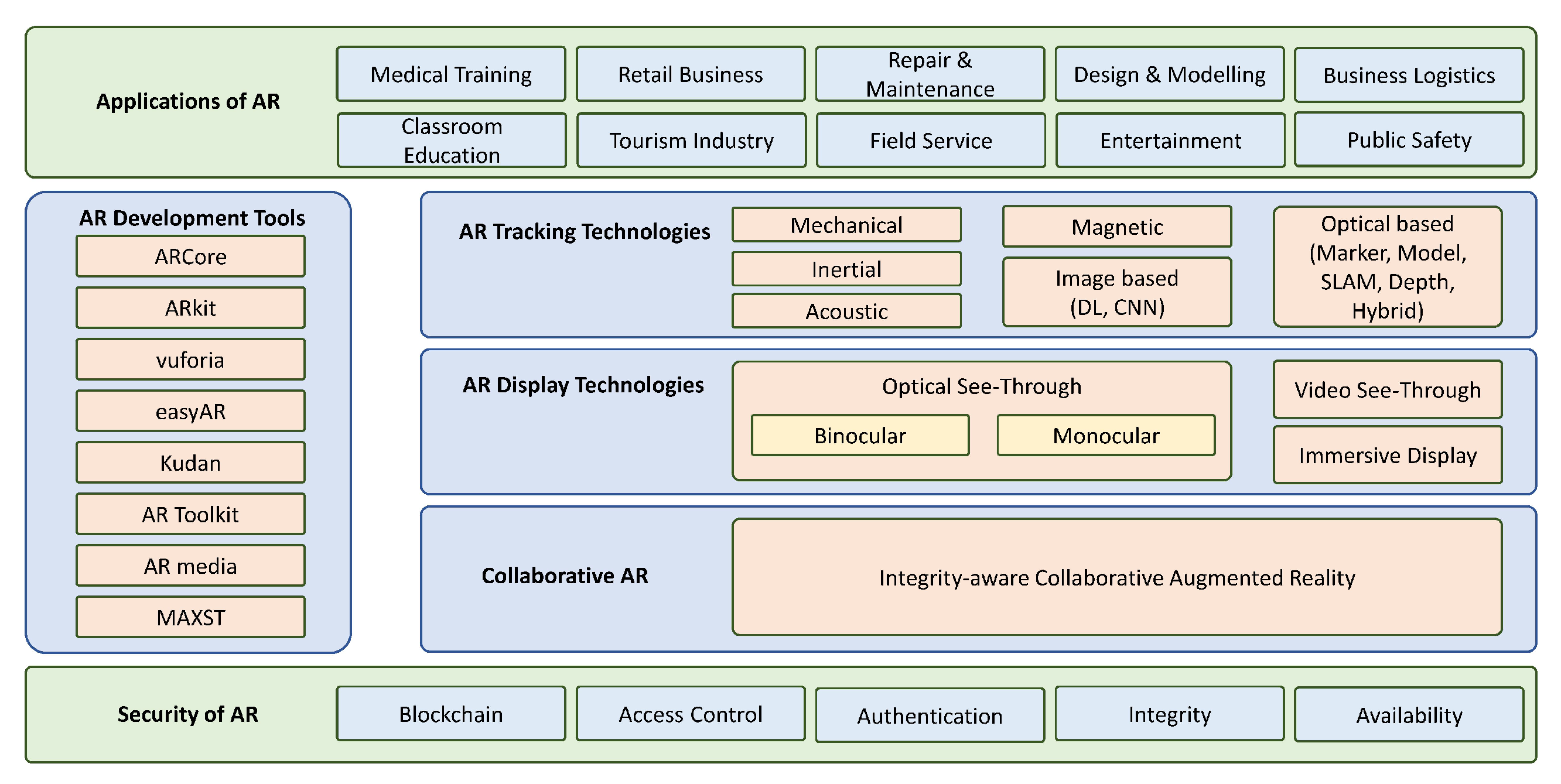

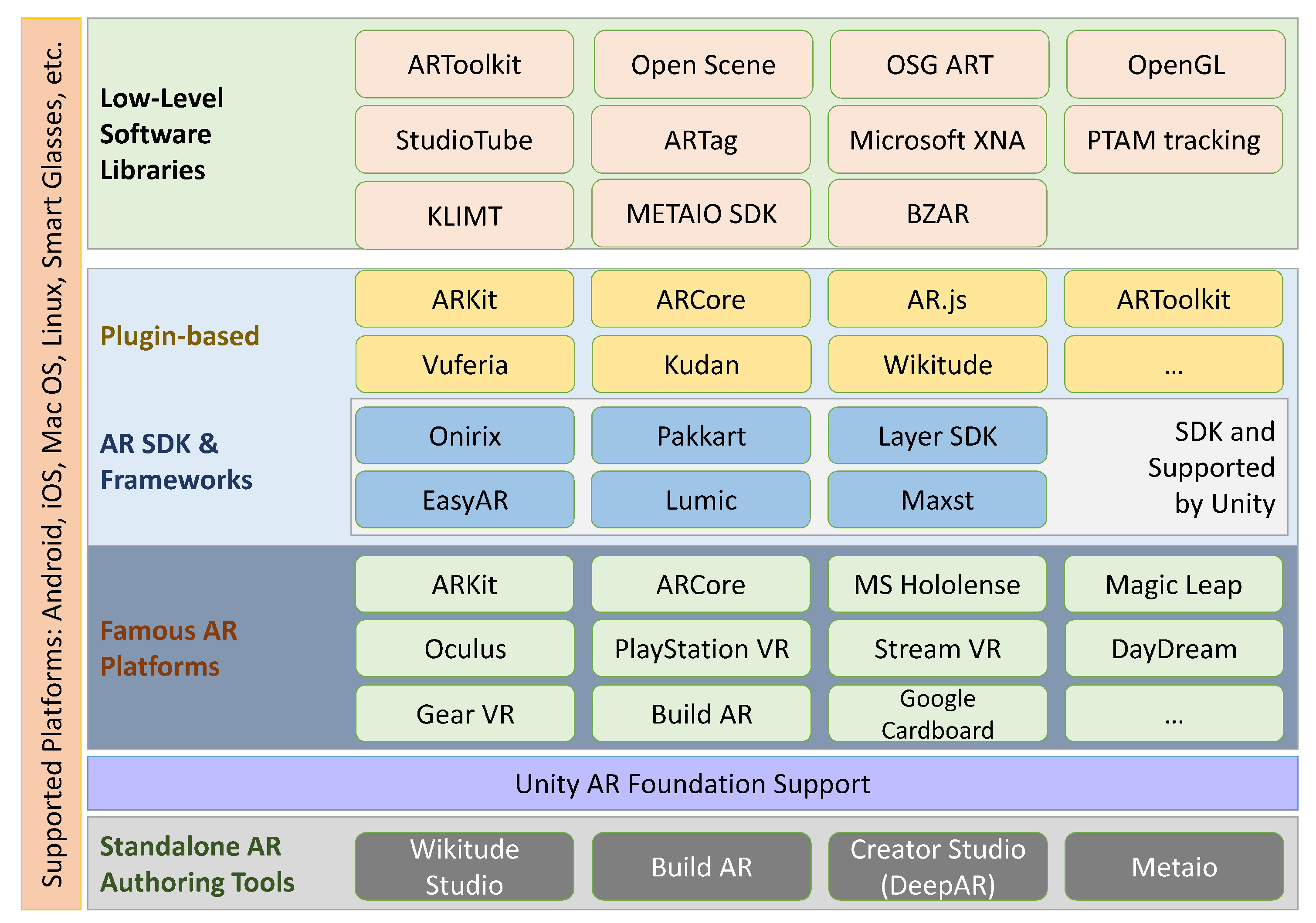

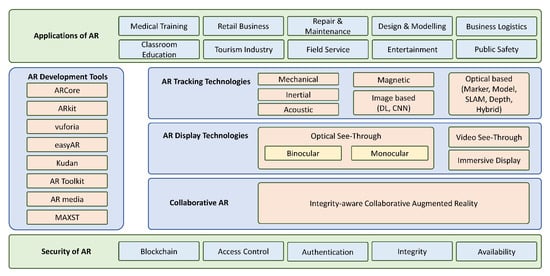

To develop these AR applications, different tools are used depending on the type of application. For example, ARToolKit [13] can be used to develop a mobile AR application for Android or iOS, whereas FLARToolKit [14] is used to create web-based applications using Flash. Moreover, various plug-ins are available that can be integrated with Unity [15] to create AR applications. These development tools are reviewed in Section 6 of this paper. Figure 1 provides an overview of the topics of augmented reality reviewed in this paper.

Figure 1.

Overview of AR, VR, and collaborative AR applications, tools, and technologies.

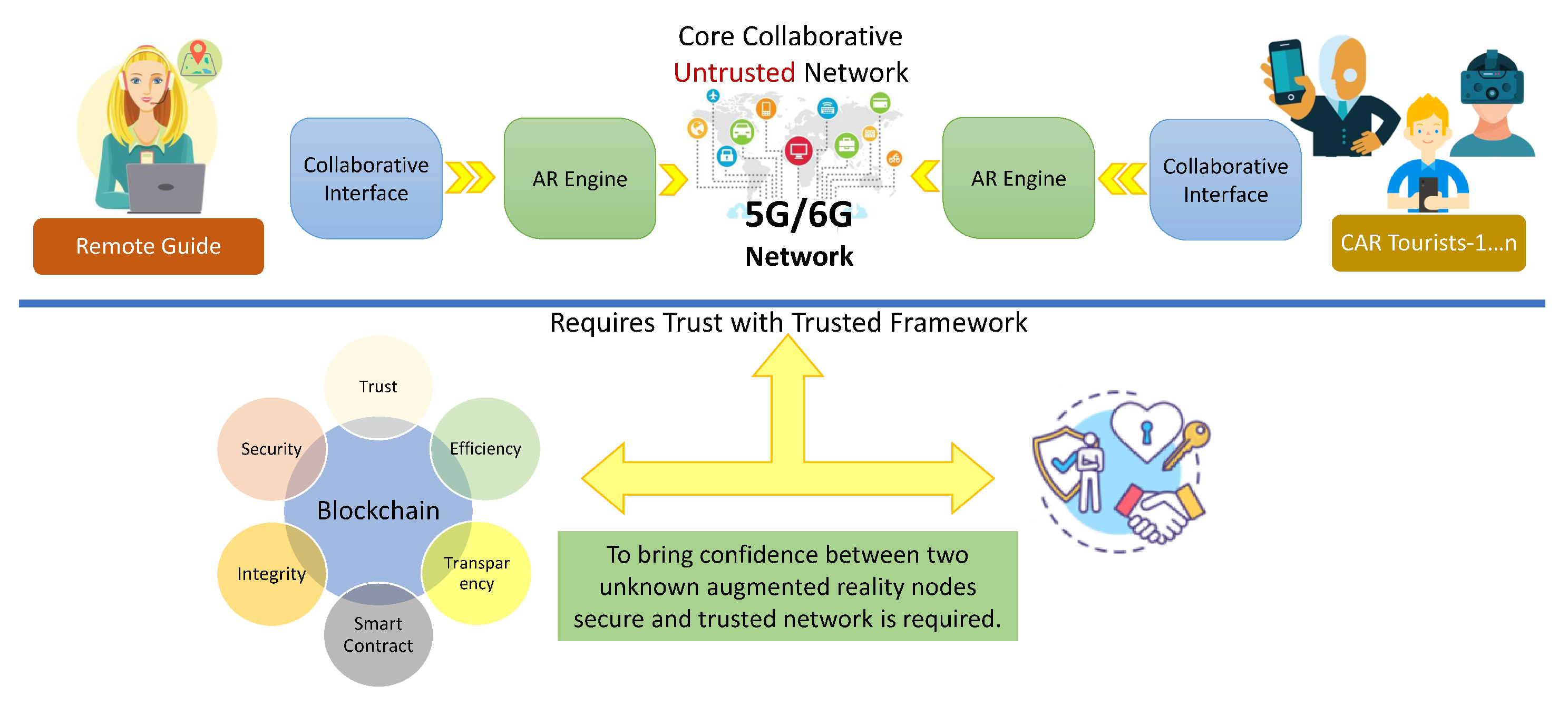

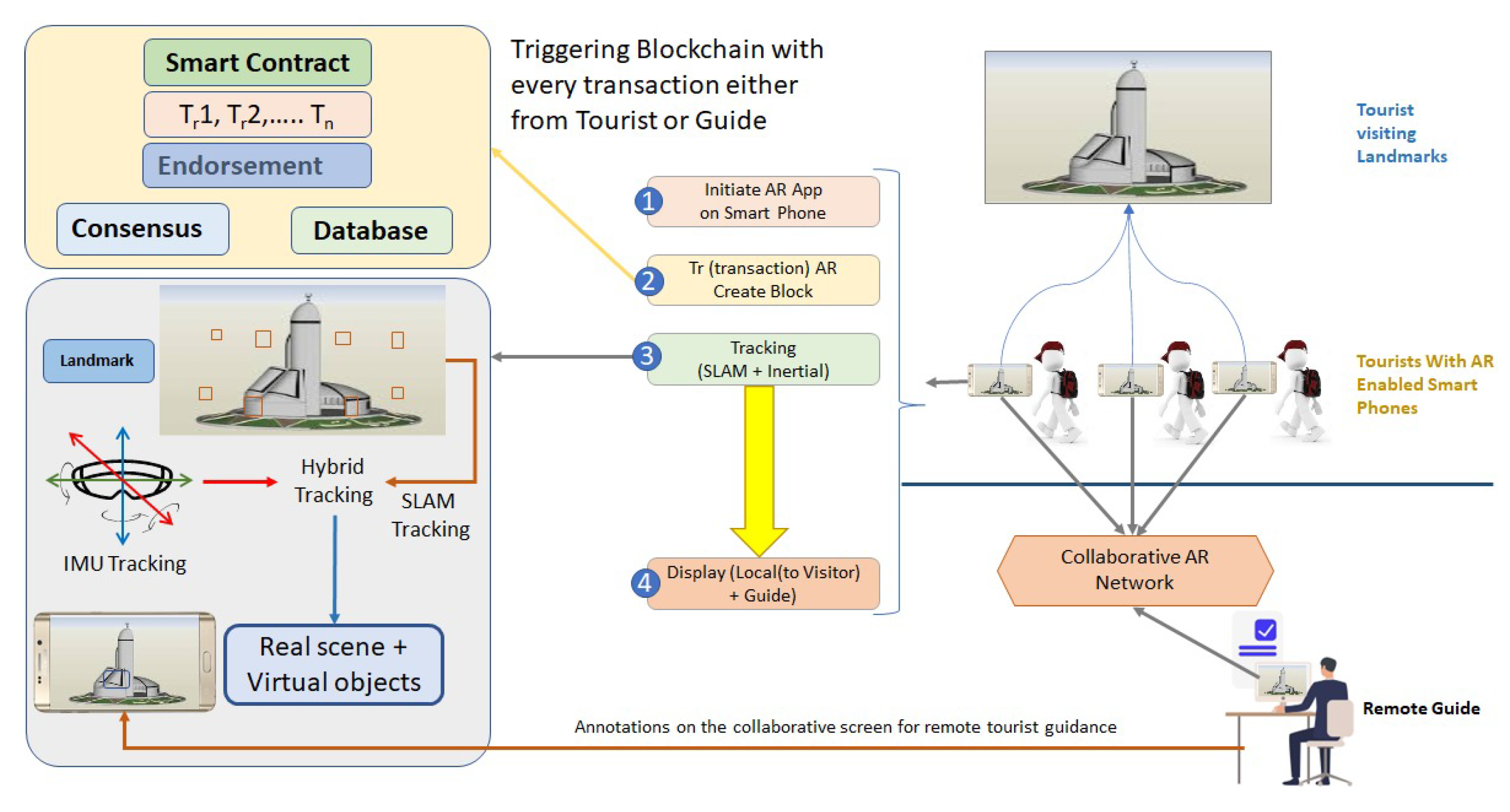

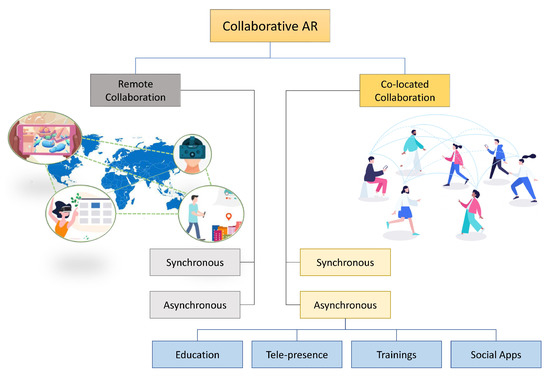

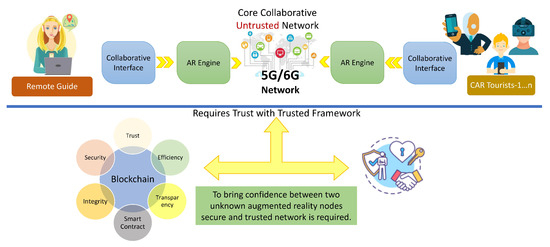

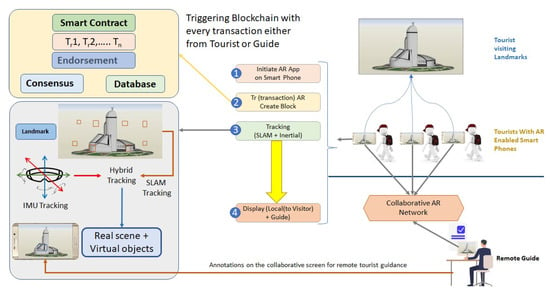

After a critical review of collaborative augmented reality, this research identified security flaws and missing trust parameters that need to be addressed to provide users with a secure environment. Hackers and intruders constantly seek to exploit vulnerabilities in systems and software, yet previous research on collaborative augmented reality shows little effort toward making collaboration secure. To address these security flaws and provide secure communication in collaborative augmented reality, this research proposes a security solution and framework that can limit the risks posed by internal and external attacks. To realize such a secure platform, this study presents an architecture for secure collaborative AR in the tourism sector of Saudi Arabia as a case study. The focus of the case study is an application that guides tourists during their visits to famous landmarks in the country. This study proposes a secure and trustworthy mobile application for tourists based on collaborative AR. In this application, the necessary information is rendered on screen, and the user can hire a guide to provide more detailed information. A single guide can serve a group of tourists visiting the same landmark. A blockchain network was used to secure the application and protect the private data of the users [16,17]. For this purpose, we performed a thorough literature review to find an optimized solution for security and tracking, studying the existing tracking technologies and listing them in this paper along with their limitations. In our use case, a GPS tracking system tracks the user's movement, and the mobile application provides the necessary information about the visited landmark.

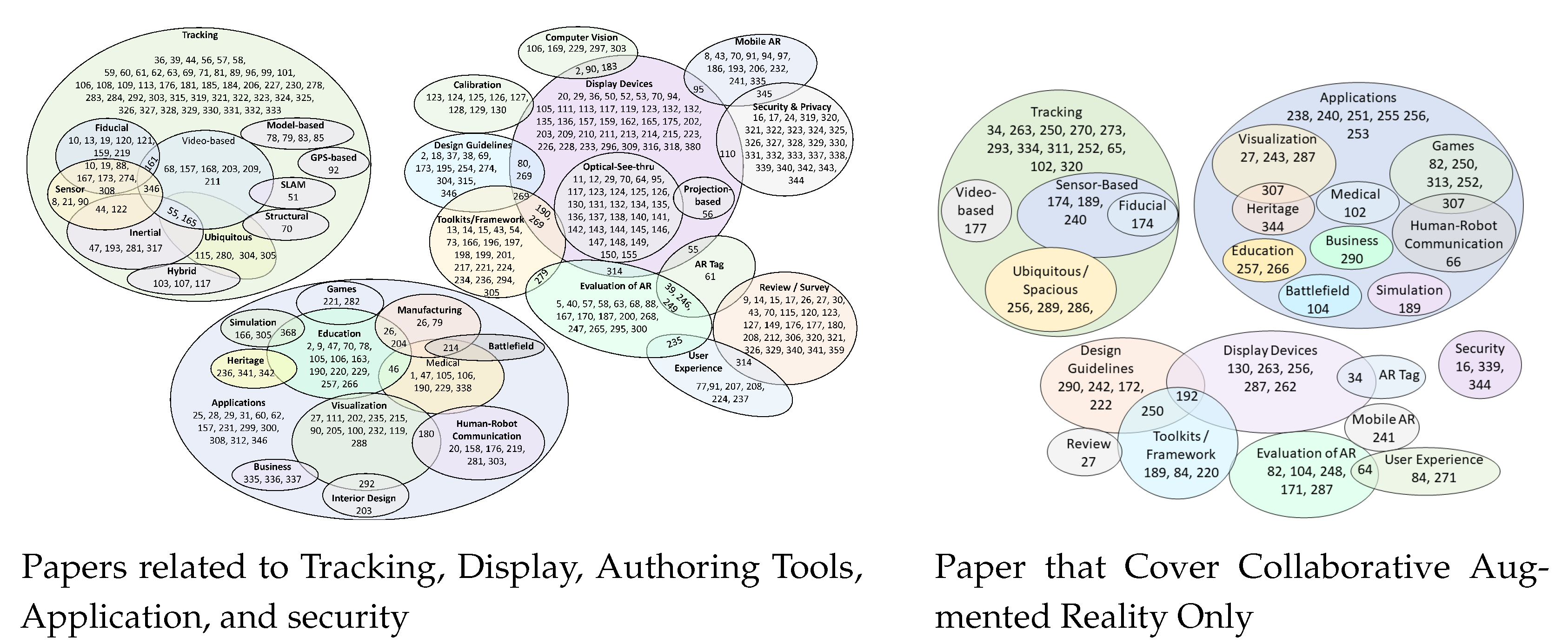

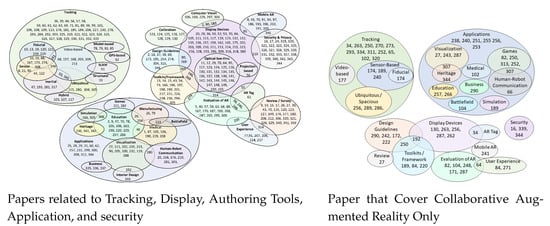

Observing that AR operates in an integrated fashion, combining tracking technologies, display technologies, AR tools, collaborative AR, and AR applications, encouraged us to explore and present these concepts and technologies in detail. To help researchers navigate these techniques, the authors have organized previously conducted research into a Venn diagram, as shown in Figure 2, so that interested investigators can choose their area of research in AR. As the diagram shows, most research has been done in the area of tracking technologies, which is further divided into different types of tracking solutions, including fiducial tracking, video-based tracking, and inertial tracking. Some papers fall into several categories; for example, [18,19,20] fall into both the fiducial tracking and sensor categories. Similarly, computer vision and display devices have some papers in common, as do inertial tracking and video-based tracking. In addition, display devices share papers with computer vision, mobile AR, design guidelines, toolkits, evaluation, AR tags, and the security and privacy of AR. Furthermore, visualization shares papers with business, interior design, and human–robot communication, while education shares papers with gaming, simulation, medicine, heritage, and manufacturing. In short, we have tried to summarize all papers and elaborate on them further in their respective sections for the convenience of the reader.

Figure 2.

Classification of reviewed papers with respect to tracking, display, authoring tools, applications, collaboration, and security.

Contribution: This research presents a comprehensive review of AR and its associated technologies. A review of state-of-the-art tracking and display technologies is presented, followed by the essential components and tools that can be used to create effective AR experiences. The study also presents newly emerging technologies such as collaborative augmented reality and describes how interactions between applications are carried out. During the review phase, the research identified that AR-based solutions, and particularly collaborative augmented reality solutions, are vulnerable to external intrusion: they lack security, and interactions could be hijacked, manipulated, or otherwise exposed to potential threats. To address these concerns and ensure the integrity of communication, this research utilizes state-of-the-art blockchain infrastructure for collaborating AR applications. The paper further proposes a complete secure framework in which different applications working remotely can establish genuine trust in each other [21].
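The integrity property that motivates the blockchain layer can be illustrated with a minimal hash-linked log of collaboration messages. This is only a sketch under our own assumptions, not the proposed architecture: it omits consensus, digital signatures, and distribution across peers, and the message fields (`from`, `msg`) and participant names are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, prev_hash, payload):
    """Deterministically hash a block's contents with SHA-256."""
    record = json.dumps({"index": index, "prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(record.encode("utf-8")).hexdigest()


def append_block(chain, payload):
    """Append a payload (e.g. one session message) linked to the previous block's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "prev": prev_hash,
        "payload": payload,
        "hash": block_hash(index, prev_hash, payload),
    })
    return chain


def verify_chain(chain):
    """Return True only if every block's stored hash and back-link are consistent."""
    prev_hash = GENESIS_HASH
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["prev"], block["payload"]):
            return False
        prev_hash = block["hash"]
    return True


# Hypothetical guide/tourist session messages.
session = []
append_block(session, {"from": "guide", "msg": "Please look at the north gate"})
append_block(session, {"from": "tourist_1", "msg": "Acknowledged"})
print(verify_chain(session))            # True: untouched log
session[0]["payload"]["msg"] = "edited"
print(verify_chain(session))            # False: tampering changes the hash of block 0
```

Any edit to an earlier message changes its hash and breaks the link stored in every later block, which is what makes tampering detectable; a real deployment would add signatures and replicate the log across nodes.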

Outline: This paper presents an overview of augmented reality and its applications in various realms in Section 2. Section 3 presents tracking technologies, while Section 4 provides a detailed overview of display technologies. Section 6 introduces AR development tools. Section 7 highlights collaborative research on augmented reality, while Section 8 discusses AR interaction and input technologies. The paper presents design guidelines and interface patterns in Section 9, while Section 10 discusses security and trust issues in collaborative AR. Section 12 highlights future research directions, and Section 13 concludes this research.

2. Augmented Reality Overview

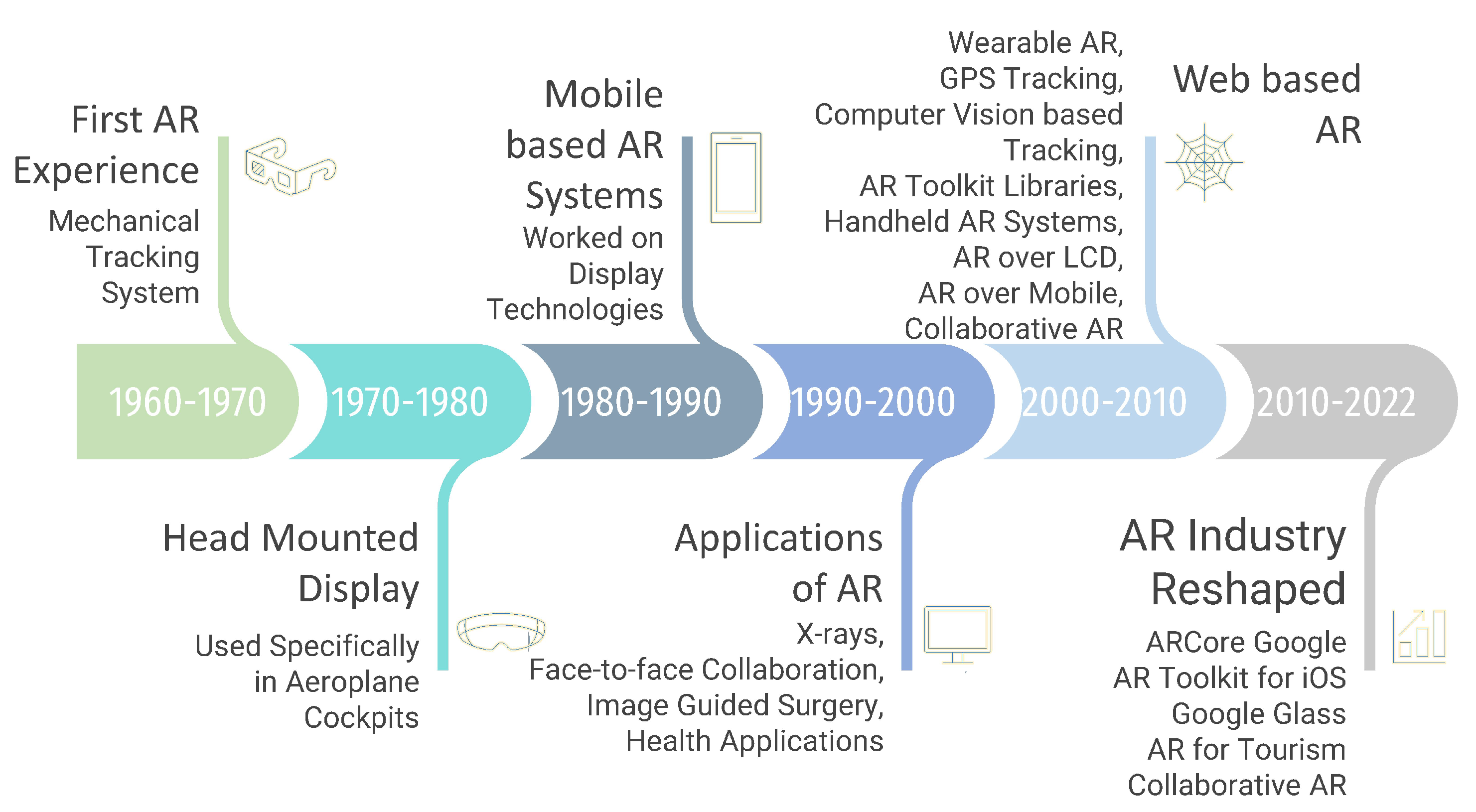

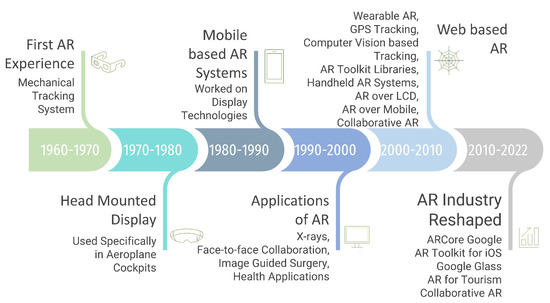

For many years, people have used lenses, light sources, and mirrors to create illusions and virtual images in the real world [22,23,24]. However, Ivan Sutherland was the first person to truly generate an AR experience. Sketchpad, developed by Ivan Sutherland at MIT in 1963, was the world's first interactive graphics application [25]. Figure 3 gives an overview of the development of AR technology from its beginnings to 2022. Bottani et al. [26] review the AR literature published between 2006 and 2017. Moreover, Sereno et al. [27] use a systematic survey approach to detail the existing literature at the intersection of computer-supported collaborative work and AR.

Figure 3.

Augmented reality advancement over time for the last 60 years.

2.1. Head-Mounted Display

Ens et al. [28] review existing work on the design exploration of mixed-scale gestures, in which the HoloLens AR display is used to interweave larger gestures with micro-gestures.

2.2. AR Towards Applications

The ARToolKit tracking library [13] provides real-time computer vision tracking of a square marker, which addressed two major problems: enabling interaction with real-world objects and tracking the user's viewpoint. Researchers have also conducted studies to develop handheld AR systems. Hettig et al. [29] present a system called “Augmented Visualization Box” to assess surgical augmented reality visualizations in a virtual environment. Goh et al. [30] present a critical analysis of 3D interaction techniques in mobile AR. Kollatsch et al. [31] introduce a system that creates production data and maintenance documentation and brings them into AR maintenance apps for machine tools, aiming to reduce the overall cost of the necessary expertise and of the AR planning process. Bhattacharyya et al. [32] introduce Brick, a two-player mobile AR game in which users engage in synchronous collaboration while inhabiting a shared, real-time augmented environment. Kim et al. [33] suggest that this decade is marked by a tremendous technological boom, particularly in rendering and evaluation research, while display and calibration research has declined. Liu et al. [34] extend the information feedback channel from industrial robots to the human workforce to support human–robot collaboration.

2.3. Augmented Reality for the Web

Cortes et al. [35] introduce new techniques for collaboratively authoring surfaces on the web using mobile AR. Qiao et al. [36] review current implementations of mobile AR, the enabling technologies of AR, state-of-the-art technology, approaches for potential web AR provisioning, and the challenges that AR faces in web-based systems.

2.4. AR Application Development

The AR industry grew tremendously in 2015, extending from smartphones to websites and to head-worn display systems such as Google Glass. In this regard, Agati et al. [18] propose design guidelines for developing AR manual assembly systems, covering ergonomics, usability, corporate-related aspects, and cognition.

AR for Tourism and Education: Shukri et al. [37] introduce design guidelines for mobile AR in tourism, proposing 11 principles for efficient AR design that reduce cognitive overload, support learning, and help users explore content while traveling in Malaysia. In addition, Fallahkhair et al. [38] introduce new guidelines for AR technologies that enhance user satisfaction, efficiency, and effectiveness in cultural and contextual learning on mobile devices, thereby enhancing the tourism experience. Akçayır et al. [39] show that AR has the advantage of placing virtual images on real objects in real time, while pedagogical and technical issues must be addressed to make the technology more reliable. Salvia et al. [40] suggest that AR has a positive impact on learning but requires further advancements.

Sarkar et al. [41] present ScholAR, an AR app that aims to enhance students' learning skills and to inculcate conceptual and logical thinking among seventh-grade students. Soleiman et al. [42] suggest that the use of AR improves abstract writing compared with VR.

2.5. AR Security and Privacy

Hadar et al. [43] scrutinize security at all steps of AR application development and identify the need for new strategies for information security and privacy, with the main goal of addressing concerns related to capturing and mapping. Moreover, in the industrial arena, Mukhametshin et al. [44] focus on developing sensor tag detection, tracking, and recognition for an AR client-side app designed for Siemens to monitor equipment at remote facilities.

3. Tracking Technology of AR

Tracking technologies introduce the sensation of motion in the virtual and augmented reality world and perform a variety of tasks. Once a tracking system is correctly chosen and installed, it allows a person to move within a virtual or augmented environment and to interact with people and objects within it. The selection of tracking technology depends on the type of environment, the type of data, and the available budget. For an AR system to meet Azuma's definition of augmented reality, it must satisfy three conditions:

- it combines virtual and real content;

- it is interactive in real time;

- it is registered in three dimensions.

The third condition, being “registered in three dimensions”, refers to the capability of an AR system to project virtual content onto the physical surroundings in such a way that it appears to be part of the real world. To register virtual content in the real environment, the position and orientation (pose) of the viewer with respect to some anchor in the real world must be determined. This real-world anchor may be the dead-reckoning from inertial tracking, a defined location in space determined using GPS, or a physical object such as a paper image marker or a magnetic tracker source. In short, the real-world anchor depends on the application and the technologies used. Depending on the type of technology used, there are two ways of registering an AR system in 3D:

- Determination of the position and orientation of the viewer relative to the real-world anchor: registration phase;

- Updating of the viewer's pose with respect to a previously known pose: tracking phase.
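The two phases can be illustrated with 4×4 homogeneous transforms: registration yields an absolute viewer pose relative to the anchor, and tracking composes incremental motion onto the last known pose. The sketch below uses NumPy; the pose values are made-up examples, and rotation is restricted to the vertical axis for brevity.

```python
import numpy as np


def pose_matrix(yaw_rad, translation):
    """4x4 rigid transform: rotation about the z axis plus a translation."""
    c, s = np.cos(yaw_rad), np.sin(yaw_rad)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T


# Registration phase: absolute pose of the viewer relative to the real-world anchor
# (an assumed value here, e.g. obtained by detecting a marker).
anchor_T_viewer = pose_matrix(0.0, [0.0, 0.0, 0.0])

# Tracking phase: update the previously known pose with the relative motion since then
# (here: move 1 m along the previous x axis and turn 90 degrees about z).
delta = pose_matrix(np.pi / 2, [1.0, 0.0, 0.0])
anchor_T_viewer = anchor_T_viewer @ delta

# A virtual object placed at (1, 1, 0) in anchor coordinates, expressed in the
# viewer's frame so it can be rendered from the current viewpoint.
point_in_anchor = np.array([1.0, 1.0, 0.0, 1.0])
point_in_viewer = np.linalg.inv(anchor_T_viewer) @ point_in_anchor
print(point_in_viewer[:3])  # approximately (1, 0, 0)
```

Keeping the pose as a single matrix means each tracking update is one matrix product, and errors in these incremental updates are exactly what accumulates as drift if the pose is never re-registered.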

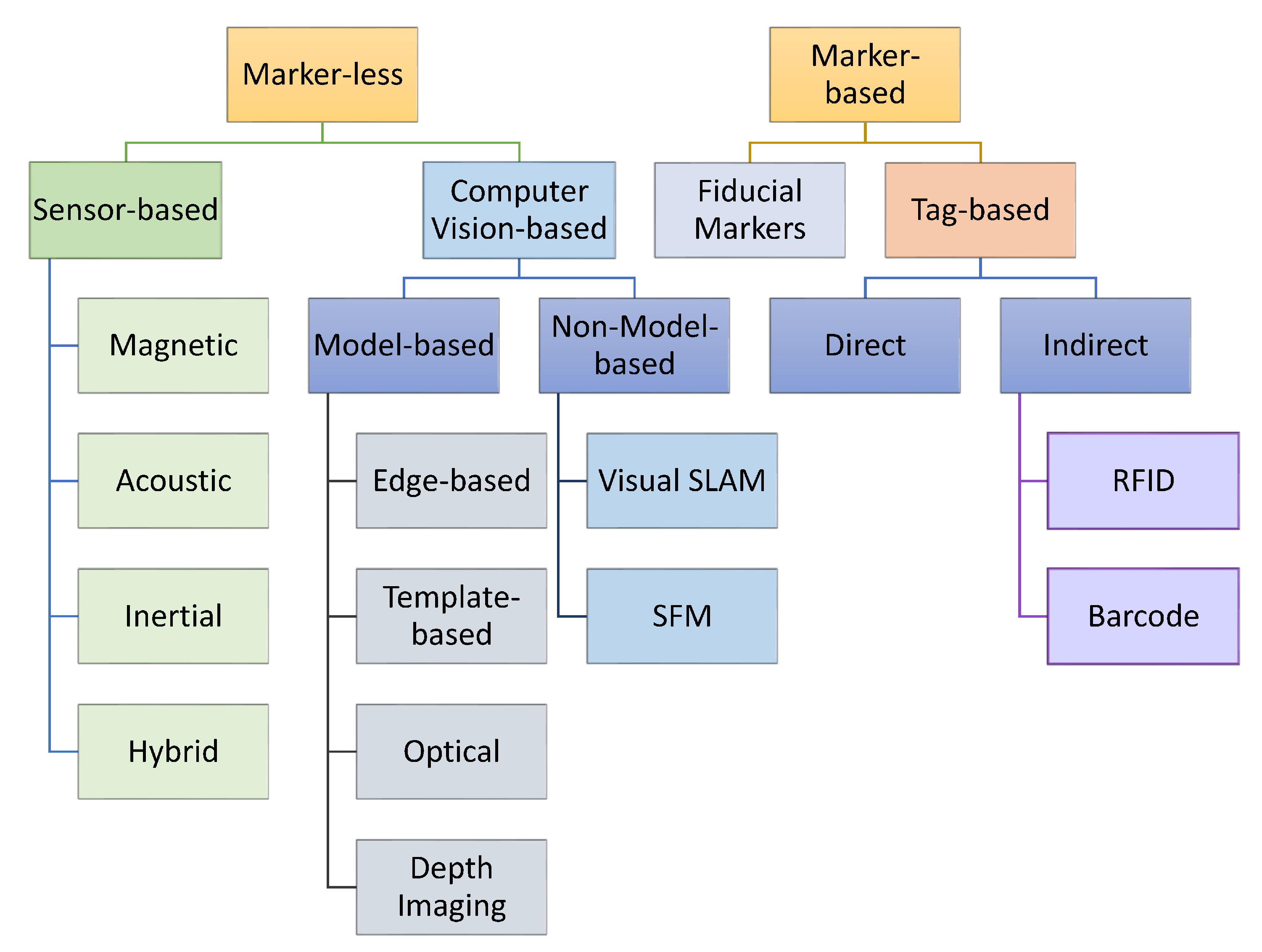

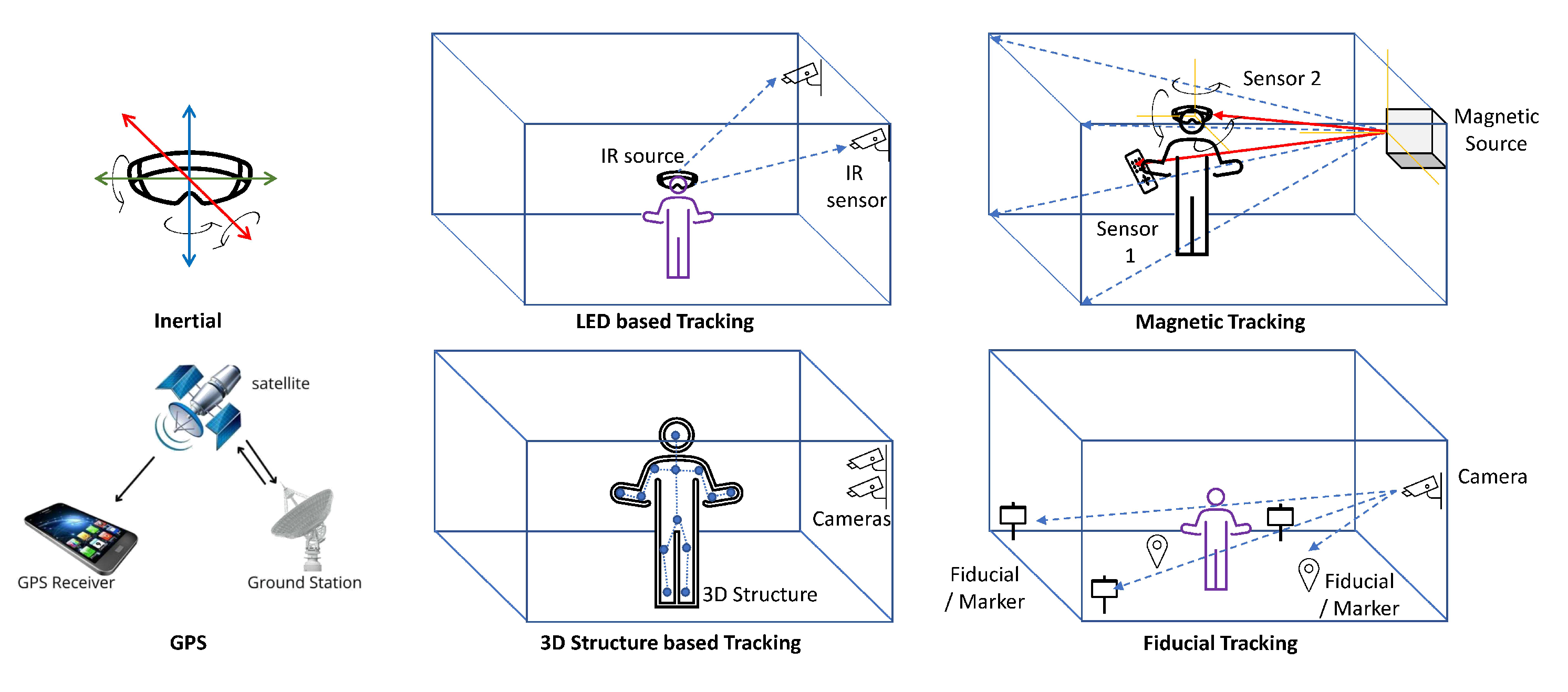

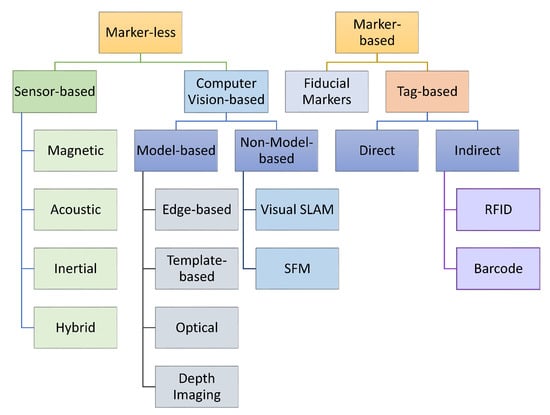

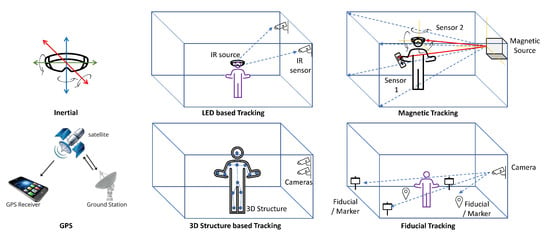

In this document, the term “tracking” refers to both phases. There are two main types of tracking techniques, which are explained as follows (depicted in Figure 4).

Figure 4.

Categorization of augmented reality tracking techniques.

3.1. Markerless Tracking Techniques

Markerless tracking techniques are further divided into two types: sensor-based and vision-based.

3.1.1. Sensor-Based Tracking

Magnetic Tracking Technology: This technology includes a tracking source and two sensors, one for the head and another for the hand. The tracking source creates an electromagnetic field in which the sensors are placed. The computer then calculates the orientation and position of the sensors based on the signal attenuation of the field. This allows a full 360° range of motion, i.e., the user can look all the way around the 3D environment, and it also allows movement along all three translational degrees of freedom. The hand tracker has control buttons that allow the user to navigate through the environment, pick things up, and understand the size and shape of objects [45]. Figure 5 illustrates the tracking techniques to give the reader a better understanding.

Figure 5.

Augmented reality tracking techniques presentation.

Frikha et al. [46] introduce a new handler for the mutual occlusion problem, which occurs when real objects are in front of virtual objects in the scene. The authors use a 3D positioning approach and surgical instrument tracking in an AR environment, based on monocular image processing. The experimental results suggest that this approach can handle mutual occlusion automatically in real time.

One of the main issues with magnetic tracking is its limited positioning range [47]. Orientation and position can be determined by attaching the receiver to the viewer [48]. Receivers are small and lightweight, magnetic trackers are insensitive to optical disturbances and occlusion, and they have high update rates. However, the strength of the magnetic field declines with the cube of the distance, and the resolution declines with the fourth power of the distance [49]. Therefore, magnetic trackers have a constrained working volume. Moreover, magnetic trackers are sensitive to the surrounding magnetic environment and to the type of magnetic material used, and they are also susceptible to measurement jitter [50].
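The scaling described above can be made concrete with a short calculation. The sketch assumes an ideal dipole source, whose field falls off with the cube of distance, and treats resolution as following the field's gradient, which falls off with the fourth power; actual trackers also depend on hardware and environment.

```python
def relative_field_strength(r, r0=1.0):
    """Field strength at distance r relative to r0, assuming an ideal dipole (~1/r^3)."""
    return (r0 / r) ** 3


def relative_resolution(r, r0=1.0):
    """Resolution at distance r relative to r0, assuming it tracks the field gradient (~1/r^4)."""
    return (r0 / r) ** 4


for r in (1.0, 2.0, 3.0):
    print(f"{r:.0f}x distance: field strength x{relative_field_strength(r):.4f}, "
          f"resolution x{relative_resolution(r):.4f}")
```

Doubling the source-to-receiver distance cuts the field strength to one eighth and the resolution to one sixteenth, which is why the working volume is constrained.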

Magnetic tracking technology is widely used in a range of AR systems, with applications ranging from maintenance [51] to medicine [52] and manufacturing [53].

Inertial Tracking: Inertial tracking uses inertial measurement units (IMUs), which combine sensors such as accelerometers, gyroscopes, and magnetometers, to evaluate the velocity and orientation of the tracked object. An inertial tracking system can determine the three rotational degrees of freedom relative to gravity. Moreover, the change in the tracker's position can be determined from its inertial velocity and the time period between tracker updates.

Advantages of Inertial Tracking: It does not require a line of sight and has no range limitations. It is not prone to optical, acoustic, magnetic, or RF interference. Furthermore, it provides motion measurement with high bandwidth. Moreover, it has negligible latency and can be processed as fast as desired.

Disadvantages of Inertial Tracking: Inertial trackers are prone to drift in orientation and position over time, with the major impact on position measurement. This is because position must be derived from velocity measurements, so small errors accumulate through integration. Using a filter could help to resolve this issue; however, the filter can decrease the responsiveness and update rate of the tracker [54]. To ultimately correct drift, the inertial sensor should be combined with another kind of sensor, for instance, ultrasonic range measurement devices or optical trackers.
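The growth of position drift follows from integrating a small accelerometer bias twice: for a constant bias b, the position error grows roughly as ½·b·t². The sketch below simulates this in one dimension; the 0.05 m/s² bias and 100 Hz rate are assumed values, not figures from the cited work.

```python
def dead_reckon(accels, dt):
    """Integrate 1-D acceleration twice (semi-implicit Euler), starting at rest at x = 0."""
    velocity, position = 0.0, 0.0
    positions = []
    for a in accels:
        velocity += a * dt         # first integration: acceleration -> velocity
        position += velocity * dt  # second integration: velocity -> position
        positions.append(position)
    return positions


dt = 0.01      # assumed 100 Hz IMU sample rate
bias = 0.05    # assumed constant accelerometer bias in m/s^2
# The device is actually stationary, so every reported acceleration is pure bias.
for seconds in (1, 10, 60):
    n = round(seconds / dt)
    error = dead_reckon([bias] * n, dt)[-1]
    print(f"after {seconds:2d} s: position error {error:.2f} m")
```

Under these assumptions the error is a few centimetres after one second but about 2.5 m after ten seconds, which is why an external reference sensor is needed to bound the drift.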

3.1.2. Vision-Based Tracking

Vision-based tracking refers to tracking approaches that determine the camera pose from data captured by optical sensors and use it for registration. Optical sensors can be divided into the following three categories:

- visible light tracking;

- 3D structure tracking;

- infrared tracking.

Vision-based tracking AR has recently become highly popular due to the improved computational power of consumer devices and the ubiquity of mobile devices such as tablets and smartphones, which makes them the best platform for AR technologies. An effective object tracking algorithm used to demonstrate vision-based tracking is the clustering of static-adaptive correspondences for deformable object tracking (CMT) [56]. Chakrabarty et al. [55] contribute to the development of autonomous tracking by integrating CMT into image-based visual servoing (IBVS), studying its performance on rigid and deformable targets in indoor settings, and finally integrating the system into the Gazebo simulator. Gupta et al. [57] present a comparative analysis of different types of vision-based tracking systems.

Moreover, Krishna et al. [58] explore the use of electroencephalogram (EEG) signals for user authentication, which is similar in purpose to facial recognition on mobile phones. The approach is also evaluated in combination with eye-tracking data. This research contributes a novel evaluation paradigm and a biometric authentication system that integrates these signals. Furthermore, Dzsotjan et al. [59] demonstrate the usefulness of eye-tracking data collected during lectures for determining the learning gain of the user. The data were generated with the Walk the Graph app designed for Microsoft HoloLens 2, and binary classification was performed on the basis of the kinematic graphs that users reported of their own movement.

Visible light tracking is the most commonly used optical sensing approach, relying on cameras found in devices ranging from smartphones to laptops and even wearables. These cameras are particularly important because they can both capture video of the real environment and register virtual content to it, and they can thereby be used in video see-through AR systems.
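In a video see-through system, the last step is blending the rendered virtual content into each captured frame. A minimal sketch using standard alpha ("over") compositing with NumPy, assuming the renderer has already produced an RGB image and a per-pixel alpha mask aligned with the camera frame; the frame contents are synthetic placeholders.

```python
import numpy as np


def composite(camera_frame, virtual_rgb, virtual_alpha):
    """Blend rendered virtual content over a camera frame with the alpha "over" operator.

    camera_frame, virtual_rgb: float arrays of shape (H, W, 3) with values in [0, 1]
    virtual_alpha: float array of shape (H, W); 0 = transparent, 1 = opaque
    """
    a = virtual_alpha[..., np.newaxis]     # broadcast alpha across the RGB channels
    return a * virtual_rgb + (1.0 - a) * camera_frame


h, w = 4, 4
camera = np.full((h, w, 3), 0.2)           # stand-in for a captured grey video frame
virtual = np.zeros((h, w, 3))
virtual[..., 0] = 1.0                      # a red virtual object
alpha = np.zeros((h, w))
alpha[1:3, 1:3] = 1.0                      # the object covers the central 2x2 pixels
frame_out = composite(camera, virtual, alpha)
print(frame_out[0, 0], frame_out[1, 1])    # background pixel, object pixel
```

Fractional alpha values at object borders give soft edges; handling occlusion by real objects would additionally require per-pixel depth.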

Chen et al. [60] resolve the shortcomings of the deep learning lightning model (DAM) by combining a method for transferring a regular video to a 3D photo-realistic avatar with a high-quality 3D face tracking algorithm. The evaluation of the proposed system suggests that it is effective in real-world scenarios with variability in expression, pose, and illumination. Furthermore, Rambach et al. [61] explore a detailed pipeline for 6DoF object tracking using scanned 3D images of the objects. The scope of the research covers the initialization of frame-to-frame tracking, object registration, and the implementation of these aspects to make the experience more efficient. Moreover, it addresses the challenges posed by occlusion, illumination changes, and fast motion.

3.1.3. Three-Dimensional Structure Tracking

Three-dimensional structure information has become very affordable because of the development of commercial sensors capable of capturing it, a trend that began with the development of the Microsoft Kinect [62]. Syahidi et al. [63] introduce a 3D AR-based learning system for pre-school children. Different types of sensors can be used to determine the three-dimensional points in a scene; the most commonly used are structured light [64] and time of flight [65]. These technologies work on the principle of depth analysis, in which depth information about the real environment is extracted and used for mapping and tracking [66]. The Kinect system [67], developed by Microsoft, is one of the most widely used and well-developed approaches in augmented reality.
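The depth principle behind time-of-flight sensors, and how a depth pixel becomes a 3D point usable for mapping and tracking, can be sketched as follows. The camera intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical values, and many real time-of-flight cameras infer the round-trip time from the phase shift of modulated light rather than timing it directly.

```python
import numpy as np

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tof_depth(round_trip_s):
    """Time-of-flight depth: light travels to the surface and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def back_project(u, v, depth, fx, fy, cx, cy):
    """Pixel (u, v) with metric depth -> 3D point in the camera frame (pinhole model)."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return np.array([x, y, depth])


# Hypothetical intrinsics for a 640x480 depth sensor.
fx = fy = 525.0
cx, cy = 319.5, 239.5

d = tof_depth(13.34e-9)                     # a round trip of about 13.34 ns
print(f"depth: {d:.3f} m")
print(back_project(419.5, 239.5, d, fx, fy, cx, cy))
```

Applying `back_project` to every pixel of a depth image yields a point cloud, which is the raw material for the mapping and tracking described above.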

Rambach et al. [68] present the idea of augmented things, utilizing off-screen rendering of 3D objects, the realization of an application architecture, universal 3D object tracking based on high-quality scans of the objects, and a high degree of parallelization. Viyanon et al. [69] focus on the development of an AR app known as “AR Furniture” that lets customers visualize design and decoration: customers could fit pieces of furniture into their rooms and make decisions based on that experience. Turkan et al. [70] introduce new models for teaching structural analysis that considerably improved the learning experience. The model integrates 3D visualization technology with mobile AR. Students can explore different loading conditions by switching loads, and feedback is provided in real time through the AR interface.

3.1.4. Infrared Tracking

Tracking objects that emit or reflect light is one of the earliest vision-based tracking techniques used in AR technologies. The high brightness of these objects compared with their surrounding environment made such tracking very easy [71,72]. Self-emitting targets were also insensitive to drastic illumination effects, i.e., harsh shadows or poor ambient lighting. These targets could either be attached to the object being tracked, with the camera placed externally, known as “outside-looking-in” [73], or placed externally in the environment, with the camera attached to the tracked object, known as “inside-looking-out” [74]. Compared with the outside-looking-in configuration, the inside-looking-out configuration has greater resolution and higher accuracy of angular orientation. The inside-looking-out configuration has been used in the development of several systems [20,75,76,77], typically with infrared LEDs mounted on the ceiling and a head-mounted display with an outward-facing camera.
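Because self-emitting or retroreflective targets are much brighter than their surroundings, a simple threshold is often enough to locate them in an infrared image. A minimal NumPy sketch, assuming a single target and a hypothetical threshold value; the synthetic frame stands in for a camera image.

```python
import numpy as np


def bright_target_centroid(ir_image, threshold):
    """Return the (row, col) centroid of pixels brighter than threshold, or None if none.

    Assumes a single bright target; several LEDs would need connected-component labelling.
    """
    mask = ir_image > threshold
    if not mask.any():
        return None
    rows, cols = np.nonzero(mask)
    weights = ir_image[rows, cols].astype(float)   # brightness-weighted centroid
    return (float(np.average(rows, weights=weights)),
            float(np.average(cols, weights=weights)))


frame = np.full((8, 8), 10, dtype=np.uint8)   # dim background
frame[2:4, 5:7] = 250                         # a bright 2x2 infrared LED blob
print(bright_target_centroid(frame, threshold=200))  # (2.5, 5.5)
```

The sub-pixel centroid of each detected target is what a pose solver would then consume, whichever of the two camera configurations is used.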

3.1.5. Model-Based Tracking

The three-dimensional tracking of real-world objects has long been a subject of research interest. It is not as popular as natural feature tracking or planar fiducials; however, a large amount of research has been done on it. In the past, the three-dimensional model of the tracked object was usually created by hand, combining lines, cylinders, spheres, circles, and other primitives to represent the structure of the object [78]. Wuest et al. [79] focus on the development of a scalable, high-performance pipeline for creating a tracking solution. The structural information of the scene was extracted using edge filters, and the pose was determined by matching edge information with the primitives [80].
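Edge filters of the kind used to extract structural information can be illustrated with the Sobel operator. This is a generic NumPy sketch, not necessarily the filter used in the cited works, and the step-edge image is synthetic.

```python
import numpy as np

SOBEL_X = np.array([[-1.0, 0.0, 1.0],
                    [-2.0, 0.0, 2.0],
                    [-1.0, 0.0, 1.0]])
SOBEL_Y = SOBEL_X.T


def filter3x3(image, kernel):
    """Correlate an image with a 3x3 kernel over the 'valid' region (no padding)."""
    h, w = image.shape
    out = np.zeros((h - 2, w - 2))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * image[dy:dy + h - 2, dx:dx + w - 2]
    return out


def edge_magnitude(image):
    """Gradient magnitude from the horizontal and vertical Sobel responses."""
    gx = filter3x3(image, SOBEL_X)
    gy = filter3x3(image, SOBEL_Y)
    return np.hypot(gx, gy)


img = np.zeros((5, 6))
img[:, 3:] = 1.0            # a vertical step edge between columns 2 and 3
print(edge_magnitude(img))  # strong response only in the two columns next to the edge
```

In model-based tracking, pixels with a high gradient magnitude are the candidates that get matched against the projected edges of the object model.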

In addition, Gao et al. [81] explore a tracking method that identifies the vertices of a convex polygon. This works well because most markers are square. The coordinates of the four vertices are used to determine the transformation matrix of the camera. The experimental results suggest that the algorithm is robust to fast motion and large ranges, making tracking more accurate, stable, and real-time.
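The exact algorithm of [81] is not reproduced here; a standard way to obtain a transformation from four marker vertices is to estimate a planar homography with the direct linear transform (DLT). The sketch below uses NumPy and assumes known corner correspondences with hypothetical pixel coordinates.

```python
import numpy as np


def homography_from_points(src, dst):
    """Estimate H (3x3) with dst ~ H @ src using the direct linear transform (DLT).

    src, dst: four or more (x, y) correspondences, e.g. marker corners in marker
    coordinates and their detected image positions.
    """
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    A = np.asarray(rows, dtype=float)
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)   # null-space vector: last right-singular vector of A
    return H / H[2, 2]         # fix the arbitrary scale so that H[2, 2] == 1


def apply_homography(H, x, y):
    """Map a point through H and convert back from homogeneous coordinates."""
    p = H @ np.array([x, y, 1.0])
    return p[0] / p[2], p[1] / p[2]


# Unit-square marker corners and hypothetical detected pixel positions.
marker_corners = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
image_corners = [(100.0, 100.0), (200.0, 110.0), (190.0, 210.0), (95.0, 200.0)]
H = homography_from_points(marker_corners, image_corners)
print(apply_homography(H, 0.5, 0.5))  # image position of the marker centre
```

With the camera intrinsics known, H can be decomposed into the rotation and translation of the camera relative to the marker; that step is omitted here.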

The combination of edge-based tracking and natural feature tracking has the following advantages:

- It provides additional robustness [82].

- It enables spatial tracking and can therefore operate in open environments [83].

- Variable and complex environments require greater robustness, which led to the introduction of keyframes [84] in addition to the primitive model [85].

Figen et al. [86] describe a series of university-level studies in which participants were asked to create the mass volumes of buildings. The first study required designers to work alone using two tools: the MTUIs of AR apps and analog tools. The second study examined collaboration among designers using analog tools. The studies had two goals: to examine changes in designer behavior when using AR apps and to compare the affordances of different interfaces.

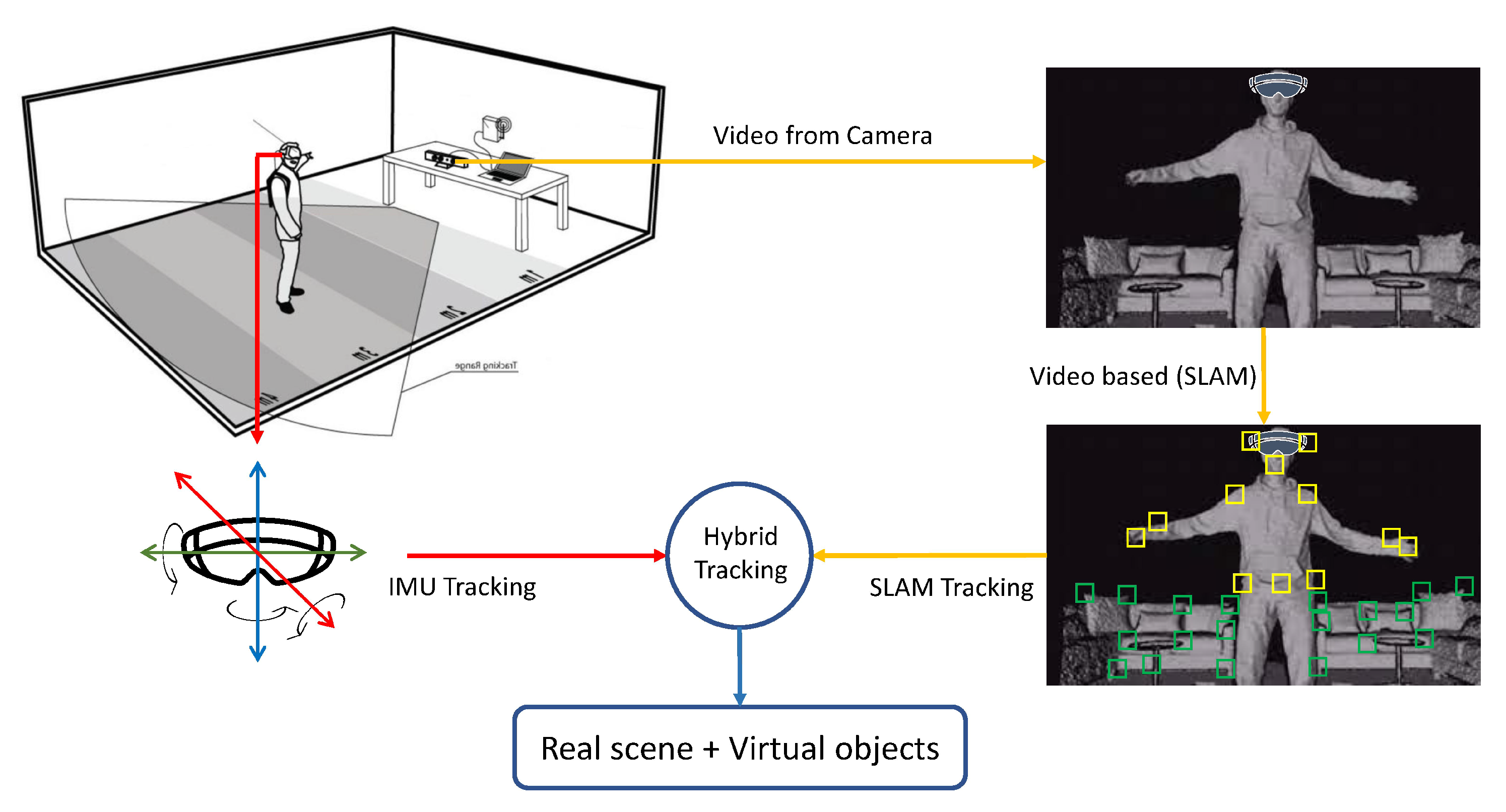

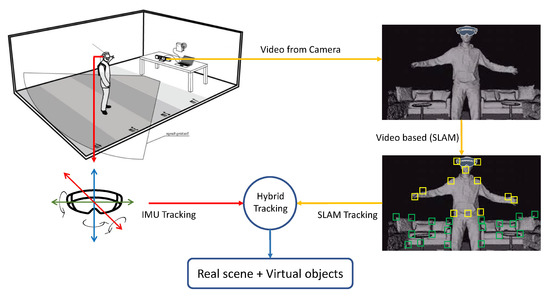

Developing and updating a map of the real environment simultaneously has been a subject of interest in model-based tracking, and this line of work has seen a number of developments. First, simultaneous localization and map building (SLAM) was primarily developed for robot navigation in unknown environments [87]. In augmented reality [88,89], this technique was used for drift-free tracking of unknown environments. Second, parallel tracking and mapping (PTAM) [88] was developed specifically for AR. In PTAM, mapping of the environment and camera tracking are performed as separate functions, which improved both tracking accuracy and overall performance. However, like SLAM, it could not close large loops and was limited to constrained environments and areas (Figure 6).

Figure 6.

Hybrid tracking: inertial and SLAM combined and used in the latest mobile-based AR tracking.
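The key architectural idea of PTAM-style systems is that tracking must run on every frame while mapping can run more slowly on selected keyframes. The sketch below illustrates only that thread split with Python’s `threading` and `queue`; the map contents, the keyframe rule (every fifth frame), and all names are hypothetical, and no actual vision processing is performed.

```python
import queue
import threading
from typing import Dict, List


class SharedMap:
    """Keyframe map shared by the tracking and mapping threads (hypothetical structure)."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._keyframes: Dict[int, List[float]] = {}

    def add_keyframe(self, frame_id: int, features: List[float]) -> None:
        with self._lock:
            self._keyframes[frame_id] = features

    def snapshot(self) -> Dict[int, List[float]]:
        with self._lock:
            return dict(self._keyframes)


def mapping_worker(keyframes: queue.Queue, shared_map: SharedMap) -> None:
    """Mapping thread: consumes keyframes at its own pace."""
    while True:
        item = keyframes.get()
        if item is None:  # sentinel: tracking has finished
            break
        frame_id, features = item
        # A real mapper would triangulate points and refine the map here.
        shared_map.add_keyframe(frame_id, features)


def track_frames(n_frames: int, keyframe_every: int = 5) -> SharedMap:
    """Tracking loop: handles every frame and hands keyframes off without waiting."""
    shared_map = SharedMap()
    keyframes: queue.Queue = queue.Queue()
    mapper = threading.Thread(target=mapping_worker, args=(keyframes, shared_map))
    mapper.start()
    for frame_id in range(n_frames):
        _current_map = shared_map.snapshot()  # pose would be estimated against this
        features = [float(frame_id)]          # placeholder for extracted features
        if frame_id % keyframe_every == 0:
            keyframes.put((frame_id, features))
    keyframes.put(None)
    mapper.join()
    return shared_map
```

Because the tracker only enqueues keyframes, slow map updates never block per-frame pose estimation, which is the property that lets such systems stay real-time.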

Oskiper et al. [90] propose a simultaneous localization and mapping (SLAM) framework for sensor fusion, indexing, and feature matching in AR apps, using a parallel mapping engine and an error-state extended Kalman filter (EKF). Zhang et al.’s [91] Jaguar is a mobile AR tracking application with low latency and flexible object tracking; the paper discusses its design, implementation, and evaluation. Jaguar provides markerless tracking by building its client on top of Google’s ARCore. ARCore also aids context awareness by estimating physical size and recognizing object capabilities.
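An error-state EKF is too long to show here, but the underlying predict/update cycle of inertial-visual fusion can be sketched with a plain linear Kalman filter on one axis. This is a simplified stand-in, not Oskiper et al.’s filter: the IMU acceleration drives the prediction at a high rate and occasional camera position fixes correct it. The noise values are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    """Constant-velocity Kalman filter on one axis; state is (position, velocity)."""
    x: float = 0.0
    v: float = 0.0
    p_xx: float = 1.0          # covariance entries (symmetric 2x2 matrix)
    p_xv: float = 0.0
    p_vv: float = 1.0
    accel_noise: float = 0.5   # assumed IMU acceleration noise std dev (m/s^2)
    meas_noise: float = 0.05   # assumed visual position noise std dev (m)

    def predict(self, accel: float, dt: float) -> None:
        """Propagate the state using the IMU acceleration as a control input."""
        self.x += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        q = self.accel_noise ** 2
        # P = F P F^T + Q with F = [[1, dt], [0, 1]] and Q from acceleration noise.
        p_xx = self.p_xx + 2 * dt * self.p_xv + dt * dt * self.p_vv + q * dt ** 4 / 4
        p_xv = self.p_xv + dt * self.p_vv + q * dt ** 3 / 2
        p_vv = self.p_vv + q * dt * dt
        self.p_xx, self.p_xv, self.p_vv = p_xx, p_xv, p_vv

    def update(self, z: float) -> None:
        """Correct with a camera position measurement (H = [1, 0])."""
        s = self.p_xx + self.meas_noise ** 2
        k_x, k_v = self.p_xx / s, self.p_xv / s
        innovation = z - self.x
        self.x += k_x * innovation
        self.v += k_v * innovation
        # P = (I - K H) P, written out for the symmetric 2x2 case.
        p_xx = (1 - k_x) * self.p_xx
        p_xv = (1 - k_x) * self.p_xv
        p_vv = self.p_vv - k_v * self.p_xv
        self.p_xx, self.p_xv, self.p_vv = p_xx, p_xv, p_vv
```

A typical loop calls `predict` at the IMU rate (e.g., 100 Hz) and `update` whenever a visual pose arrives (e.g., 10 Hz), so a biased accelerometer is continually pulled back toward the camera’s drift-free estimate.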

3.1.6. Global Positioning System (GPS) Tracking

This technology provides outdoor positional tracking with reference to the earth. Standard GPS is currently accurate to about 3 m, although advances in satellite technology and other developments offer improvements. Real-time kinematic (RTK) positioning is one example: it uses the carrier phase of the GPS signal, and its major benefit is that it can improve accuracy to the centimeter level. Feiner’s Touring Machine [92] was the first AR system to use GPS in its tracking system; it combined an inclinometer/magnetometer with differential GPS positional tracking. GPS tracking has been applied to AR experiences in the military, in gaming [93,94], and in viewing historical data [95]. Because GPS supports only positional tracking, and with low accuracy, it is useful only in hybrid tracking systems or in applications where precise pose registration is not important. AR et al. [96] use a GPS-INS receiver to develop more precise models of object motion. Ashutosh et al. [97] explore the hardware challenges of AR technology, focusing on two main hardware components: battery performance and the global positioning system (GPS). Table 1 provides a succinct categorization of the prominent tracking technologies in augmented reality, citing example studies while highlighting the advantages and challenges of each type and suggesting possible areas of application.
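To place virtual content at a GPS location, an AR app must convert latitude/longitude fixes into a local metric frame around an anchor. The sketch below uses a simple equirectangular approximation on a spherical earth (an assumption adequate over a few kilometers; it ignores altitude and ellipsoid effects). With the roughly 3 m GPS error mentioned above, content anchored this way can appear meters away from its intended spot, which is why GPS alone suits only applications where precise registration is not required.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean earth radius, spherical approximation


def gps_to_local_en(lat_deg: float, lon_deg: float,
                    anchor_lat_deg: float, anchor_lon_deg: float) -> Tuple[float, float]:
    """Approximate (east, north) offset in meters of a GPS fix from an anchor point."""
    d_lat = math.radians(lat_deg - anchor_lat_deg)
    d_lon = math.radians(lon_deg - anchor_lon_deg)
    # Meridians converge toward the poles, so east-west distances shrink by cos(latitude).
    mean_lat = math.radians((lat_deg + anchor_lat_deg) / 2.0)
    east = EARTH_RADIUS_M * d_lon * math.cos(mean_lat)
    north = EARTH_RADIUS_M * d_lat
    return east, north
```

For instance, a fix 0.001° north of the anchor is about 111.2 m away, while 0.001° east at 60° latitude is only about 55.6 m.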

3.1.7. Miscellaneous Tracking

Yang et al. [98] propose tracking and cover-recognition methods to distinguish hatch covers of similar shape. Experimental results demonstrate real-time performance and practicality, with tracking accuracy sufficient for use in an AR inspection environment. Kang et al. [99] propose a pupil tracker with several features that make AR more robust: key-point alignment, eye-nose detection, and a near-infrared (NIR) LED. The NIR LED is switched on and off depending on the ambient illumination. The limitation of this detector is that it cannot be applied in low-light conditions.

Table 1.

Summary of tracking techniques and their related attributes.

| No. | Tracking Technology | Category of Tracking Technique | Status of Technique, Used in Current Devices | Tools/Company Currently Using the Technology | Key Concepts | Advantages | Challenges | Example Application Areas | Example Studies |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Magnetic | Marker-less/Sensor-based | Yes | i. Edge Tracking/Premo etc. ii. Most HMDs/Most recent Android devices | Sensors are placed within an electromagnetic field | +360-degree motion +navigation around the environment +manipulation of objects | -limited positioning range -constrained working volume -highly sensitive to surrounding environments | Maintenance, Medicine, Manufacturing | [45,46,47,48,49,50,51,52,53] |
| 2 | Inertial | Marker-less/Sensor-based | Yes | ARCore/Unity | Motion sensors (e.g., accelerometers and gyroscopes) are used to determine the velocity and orientation of objects | +high-bandwidth motion measurement +negligible latency | -drift over time impacting position measurement | Transport, Sports | [54] |
| 3 | Optical | Marker-less/Vision-based | Yes | i. Unity ii. OptiTrack, used in conjunction with inertial sensors + optical (vision-based) sensors | Virtual content is added to real environments through cameras and optical sensors. Example approaches include visible light, 3D structure, and infrared tracking. | +popular due to affordable consumer devices +strong tracking algorithms +application to real-world scenarios | -occlusion when objects are in close range | Education and Learning, E-commerce, Tourism | [100,101] |
| 4 | Model-Based: i. Edge-Based ii. Template-Based iii. Depth Imaging | Marker-less/Computer Vision-based | Yes | i. VisionLib ii. Unity iii. ViSP | A 3D model of real objects is visualized | +implicit knowledge of the 3D structure +empowers spatial tracking +robustness even in complex environments | -algorithms are required to track and predict movements -models need to be created using dedicated tools and libraries | Manufacturing, Construction, Entertainment | [78,79,80,81,82,83,84,85,86] |
| 5 | GPS | Marker-less/Sensor-based | Yes | i. ARCore/ARKit ii. Unity/ARFoundation iii. Vuforia | GPS sensors are employed to track the precise location of objects in the environment | +high tracking accuracy (up to cm) | -hardware requirements -objects should be modeled ahead | Gaming | [102,103,104,105,106,107] |
| 6 | Hybrid | Marker-less/Sensor-based/Computer Vision | Yes | i. ARCore ii. ARKit | A mix of markerless technologies is used to overcome the challenges of a single tracking technology | +improved tracking range and accuracy +higher degree of freedom +lower drift and jitter | -the need for multiple technologies (e.g., accelerometers, sensors), hence cost issues | Simulation, Transport | [108,109,110,111] |
| 7 | SLAM | Marker-less/Computer Vision/Non-Model-Based | Yes | i. Wikitude ii. Unity iii. ARCore | A map of the real environment is built from vision and used to track virtual objects on it | +can track unknown environments +parallel mapping engine | -cannot close large loops in constrained environments | Mobile-based AR tracking, Robot navigation | [112,113,114] |
| 8 | Structure from Motion (SfM) | Marker-less/Computer Vision/Non-Model-Based | Yes | i. SLAM ii. Research-based | 3D model reconstruction approach based on multi-view stereo | +can estimate the 3D structure of a scene from a series of 2D images | -limited reconstruction ability in vegetated environments | 3D scanning, augmented reality, visual simultaneous localization and mapping (vSLAM) | [90] |
| 9 | Fiducial/Landmark | Marker-based/Fiducial | Yes | i. Solar/Unity ii. Uniducial/Unity | Tracking is made with reference to artificial landmarks (i.e., markers) added to the AR environment | +better accuracy +stable tracking with less cost | -the need for landmarks -requires image recognition (i.e., camera) -less flexible than marker-less tracking | Marketing | [115,116,117] |
| 10 | QR Code-Based Tracking | Marker-based/Tag-based | Yes | Microsoft HoloLens/Immersive headsets/Unity | Tracking is made with reference to QR codes placed in the environment | +better accuracy +stable tracking with less cost | -QR codes pose significant security risks | Supply Chain Management | [115] |

Moreover, Bach et al. [118] introduce an AR canvas for information visualization that differs considerably from the traditional canvas. They therefore set out the dimensions and essential aspects of designing visualizations for the AR canvas, while listing several limitations of the process. Zeng et al. [119] discuss the design and implementation of FunPianoAR to create a better AR piano-learning experience; however, the system showed a number of discrepancies, and a hybrid system appears to be a more viable option. Rewkowski et al. [120] introduce a prototype AR system for visualizing laparoscopic training tasks. The system can track small objects and supports surgical training using widely compatible, inexpensive borescopes.

3.1.8. Hybrid Tracking

Hybrid tracking systems were used to improve the following aspects of the tracking systems:

- Improving the accuracy of the tracking system.

- Coping with the weaknesses of the respective tracking methods.

- Adding more degrees of freedom.

Gorovyi et al. [108] describe the basic principles underlying AR and propose a hybrid visual tracking algorithm in which direct tracking techniques are combined with the optical flow technique to achieve precise and stable results. Their results show that the two can be combined into a hybrid system that works even on devices with limited hardware capabilities. Earlier, magnetic trackers [109] or inertial trackers [110] were combined with vision-based tracking. Isham et al. [111] use a game controller and hybrid tracking to determine the position of ultrasound images in a 3D AR environment. This hybrid system was beneficial for the following reasons:

- Low drift of vision-based tracking.

- Low jitter of vision-based tracking.

- Inertial sensors are robust and have high update rates, which reduced invalid pose computations and kept graphical updates responsive [121].

- Inertial and magnetic trackers can extend the tracking range and do not require line of sight.

These complementary benefits suggest that a hybrid system is more beneficial than inertial trackers alone.
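A common, minimal way to exploit these complementary properties is a complementary filter: integrate the high-rate gyro for responsiveness and blend in the vision estimate to cancel the gyro’s drift. This is a generic technique, not necessarily the fusion used by Isham et al.; the blend factor `alpha` and the equal sample rates are assumptions, and angle wraparound near ±π is ignored.

```python
from typing import List


def complementary_yaw(gyro_rates: List[float], vision_yaw: List[float],
                      dt: float, alpha: float = 0.98) -> List[float]:
    """Fuse gyro yaw rate (rad/s) with vision-based yaw (rad), one sample of each per step."""
    yaw = vision_yaw[0]
    out = []
    for rate, measured in zip(gyro_rates, vision_yaw):
        predicted = yaw + rate * dt  # integrating the gyro alone drifts if it is biased
        # Mostly trust the smooth gyro prediction; the small vision share removes drift.
        yaw = alpha * predicted + (1.0 - alpha) * measured
        out.append(yaw)
    return out
```

With a stationary device, a gyro bias of 0.01 rad/s integrated alone drifts to 0.2 rad after 20 s at 100 Hz, whereas the filter settles at a constant offset of `alpha * bias * dt / (1 - alpha)` = 0.0049 rad.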

In addition, Mao et al. [122] propose a new tracking system with several distinctive features. First, it accurately converts relative distance into absolute distance by locating reference points at new positions. Second, it supports a separate sender and receiver. Third, it resolves the discrepancy in sampling frequency between sender and receiver. Finally, it explicitly accounts for the frequency shift caused by movement. Moreover, combining the IMU sensor and the Doppler shift with a distributed frequency-modulated continuous waveform (FMCW) enables continuous tracking of mobile devices across multiple time intervals. The evaluation suggests that the system can run on existing hardware and achieves millimeter-level accuracy.
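The two physical relations behind such acoustic tracking can be sketched as follows. These are textbook formulas, not Mao et al.’s full pipeline: an FMCW chirp sweeping bandwidth `B` in time `T` has slope `B/T`, so a one-way propagation delay `d/c` produces a beat frequency `f_b = (B/T) * d / c`. There is no factor of 2 because sender and receiver are separate rather than a round-trip radar. The Doppler shift gives radial speed as `v = c * Δf / f0` for speeds much lower than `c`. The speed of sound and chirp parameters are illustrative assumptions.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: air at about 20 degrees C


def fmcw_distance(beat_hz: float, bandwidth_hz: float, chirp_s: float,
                  c: float = SPEED_OF_SOUND_M_S) -> float:
    """One-way distance from the beat frequency of a distributed FMCW link.

    Assumes the sender and receiver clocks are already aligned; any sampling
    frequency mismatch would shift the beat frequency and bias the distance.
    """
    slope = bandwidth_hz / chirp_s  # chirp slope in Hz per second
    return c * beat_hz / slope


def doppler_velocity(shift_hz: float, carrier_hz: float,
                     c: float = SPEED_OF_SOUND_M_S) -> float:
    """Radial velocity from a Doppler shift, valid when speed is much less than c."""
    return c * shift_hz / carrier_hz
```

For a 4 kHz sweep over 40 ms, a beat of about 291.5 Hz corresponds to 1 m; at a 20 kHz carrier, a shift of about 29.2 Hz corresponds to 0.5 m/s of motion.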

A GPS tracking system alone provides only positional information, and with low accuracy, so GPS is usually combined with vision-based tracking or inertial sensors; this combination yields full 6DoF pose estimation [123]. Moreover, backup tracking systems have been developed as alternatives for when GPS fails [98,124]. Optical tracking systems [100] or ultrasonic rangefinders [101] can also be coupled with inertial trackers to improve performance. Because inertial trackers measure differentially, integrating rate measurements over time, they suffer from drift, and these hybrid systems help correct it. Furthermore, because inertial sensors use gravity as a reference, they were bound to static settings; hybrid systems allow them to operate in a simulator, a vehicle, or any other moving platform [125]. The inclusion of accelerometers, cameras, gyroscopes [126], global positioning systems [127], and wireless networking [128] in mobile devices such as tablets and smartphones also creates opportunities for hybrid tracking. Furthermore, these devices can determine accurate poses both outdoors and indoors [129].
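The drift problem of differential (inertial) measurement can be made concrete: integrating a small constant accelerometer bias twice produces a position error that grows with the square of time (closed form `0.5 * bias * t**2`). The sketch below simulates this; the 0.01 m/s² bias is an illustrative assumption.

```python
def position_drift(accel_bias: float, duration_s: float, dt: float = 0.01) -> float:
    """Position error (m) from double-integrating a constant accelerometer bias (m/s^2)."""
    velocity = position = 0.0
    for _ in range(round(duration_s / dt)):
        velocity += accel_bias * dt  # first integration: acceleration -> velocity
        position += velocity * dt    # second integration: velocity -> position
    return position
```

With that bias, the error is about 0.5 m after 10 s but about 18 m after one minute, which is why an absolute reference such as GPS or vision is needed to keep an inertial tracker bounded.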

3.2. Marker-Based Tracking

Fiducial Tracking: Artificial landmarks added to the environment to aid tracking and registration are known as fiducials. The complexity of fiducial tracking varies significantly depending on the technology and the application. Early systems typically used pieces of paper or small colored LEDs, which could be added to the environment and detected by color matching [130]. If the positions of the fiducials are known and enough of them are detected in the scene, the pose of the camera can be determined. Positioning additional fiducials relative to ones whose positions are already known has the added benefit that workspaces can be extended dynamically [131]. Some researchers have also proposed QR code-based fiducials/markers for marker-/tag-based tracking [115]. As work on the concept and complexity of fiducials progressed, additional features such as multiple rings were introduced so that fiducials could be detected at much larger distances [116]. A minimum of four points of known position is needed to calculate the pose of the viewer [117]. To make sure that four points are visible, these simpler fiducials demanded more care and effort when placing them in the environment. Examples of such fiducials are those of ARToolKit and its successors, whose registration techniques mostly rely on planar fiducials. The next section discusses the AR display technologies needed to fulfill all the conditions of Azuma’s definition.
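The color-matching detection used by early fiducial systems can be sketched as a per-pixel color threshold followed by a centroid computation, which gives one fiducial’s image position; several such points at known positions are then needed for pose. The image representation (rows of RGB tuples) and the red thresholds are illustrative assumptions that would need tuning for a real camera.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def find_colored_fiducial(image: List[List[Pixel]], min_red: int = 200,
                          max_other: int = 80) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of strongly red pixels, or None if none match."""
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            if r >= min_red and g <= max_other and b <= max_other:  # color match
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return sum(xs) / len(xs), sum(ys) / len(ys)
```

Such a detector is cheap but fragile under changing lighting or similarly colored backgrounds, one reason later systems moved to high-contrast patterned markers.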

3.3. Summary

This section has provided comprehensive details on tracking technologies, which are broadly classified into markerless and marker-based approaches. Both types have many subtypes whose details, applications, pros, and cons are described. The different categories of tracking technologies are presented in Figure 4, while the summary of tracking technologies is provided in Figure 7. Among the different tracking technologies, hybrid tracking is the most adaptive. This study combines SLAM and inertial tracking as part of the framework presented in the paper.

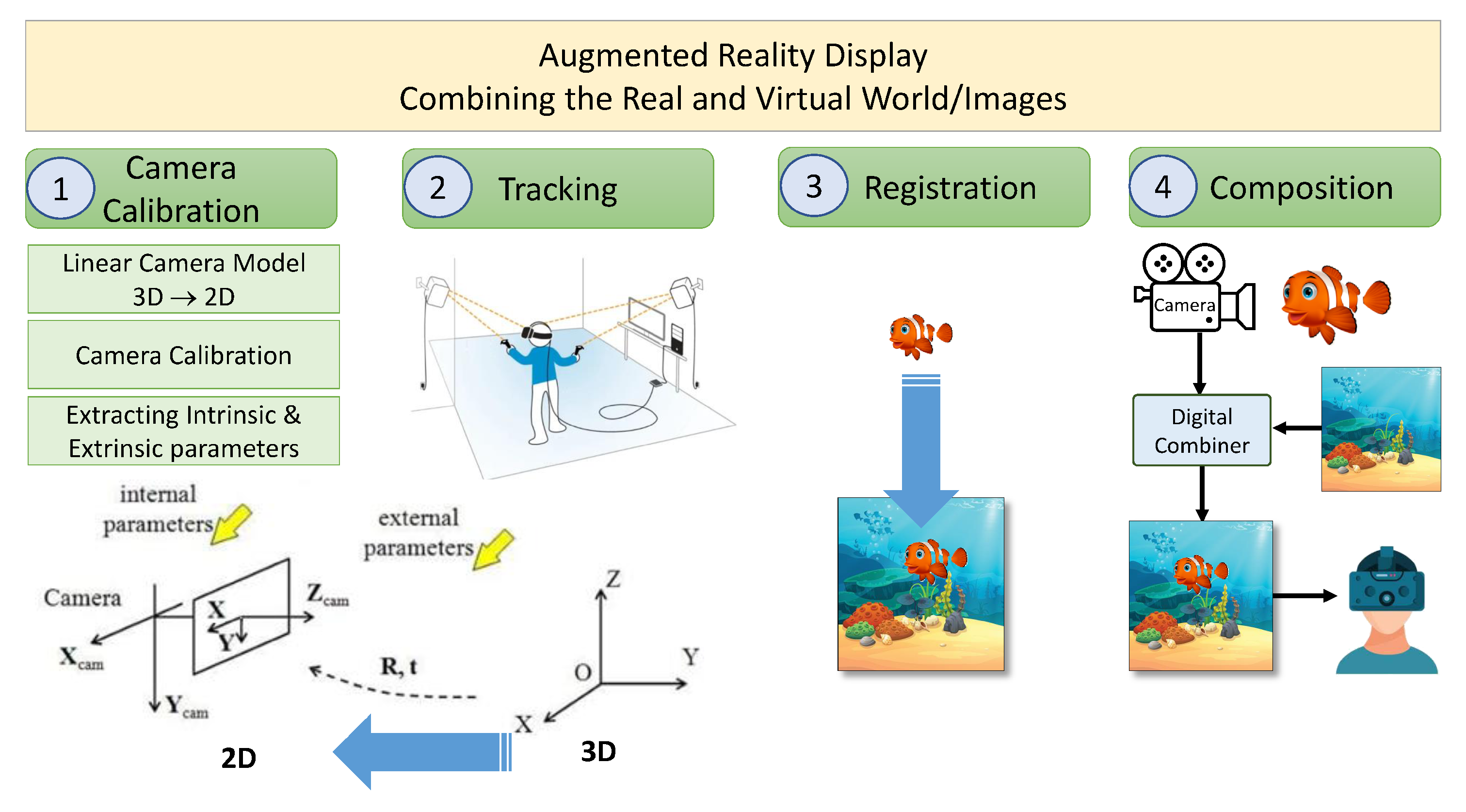

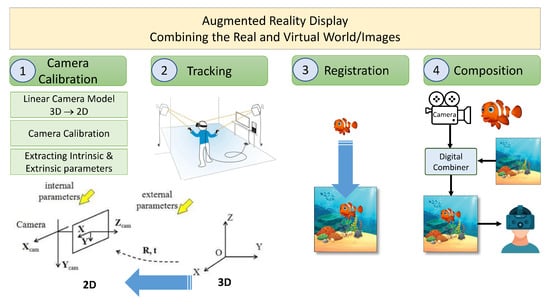

Figure 7.

Steps for combining real and virtual content.

4. Augmented Reality Display Technology

Combining the real and virtual worlds so that they appear superimposed on each other, as Azuma’s definition requires, necessarily calls for a technology to display them.

4.1. Combination of Real and the Virtual Images

Methods or procedures required for the merging of the virtual content in the physical world include camera calibration, tracking, registration, and composition as depicted in Figure 7.

4.2. Camera vs. Optical See-Through Calibration

Calibration is a procedure, or an optical model, in which the eye-display geometry or parameters define the user’s view. In other words, it is a technique for matching the dimensions and parameters of the virtual camera to those of the physical camera.
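For a camera, the parameters being matched are typically the pinhole intrinsics: focal lengths `fx`, `fy` in pixels and principal point `(cx, cy)`. If the renderer projects virtual points with the same intrinsics as the physical camera, virtual content lands on the correct pixels. The sketch below shows that projection; it ignores lens distortion, and the numeric values used are illustrative.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def project_point(point_cam: Vec3, fx: float, fy: float,
                  cx: float, cy: float) -> Tuple[float, float]:
    """Project a 3D point in camera coordinates (meters, z forward) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    # Perspective divide, then scale by focal length and shift to the principal point.
    return fx * x / z + cx, fy * y / z + cy
```

With `fx = fy = 800` and `(cx, cy) = (320, 240)`, a point 1 m ahead and 0.1 m to the right projects to pixel `(400, 240)`; a miscalibrated `fx` would shift it horizontally and break the alignment.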

In AR, calibration takes two forms: camera calibration and optical calibration. Camera calibration is used in video see-through (VST) displays, whereas optical calibration is used in optical see-through (OST) displays. OST calibration techniques fall into three families, which appeared in sequence: manual, semi-automatic, and automatic. Manual calibration requires a human operator to perform the calibration tasks. Semi-automatic methods, such as simple SPAAM and display relative calibration (DRC), collect automatically some parameters that previously had to be supplied manually by the user. Automatic OST calibration was proposed by Itoh et al. in 2014 with the interaction-free display calibration technique (INDICA) [132]. In VST, computer vision techniques applied to camera images are used to register the real environment. In OST, however, VST calibration techniques cannot be used, because the camera is replaced by the human eye, which makes calibration more complex. Various calibration techniques have therefore been developed for OST. One study [133] evaluates the registration accuracy of an automatic OST head-mounted display (HMD) calibration technique called recycled INDICA, presented by Itoh and Klinker, together with two other techniques: the single-point active alignment method (SPAAM) and degraded SPAAM. Multiple users performed two separate tasks so that the registration and calibration accuracy of all three techniques could be studied. The results show that recycled INDICA registers virtual objects more accurately in the vertical direction and conveys their distance accurately; in the horizontal direction, however, virtual objects appeared closer than intended [133]. Overall, recycled INDICA was more accurate than the other common techniques tested, including SPAAM.

Although different calibration techniques are used for OST and VST displays, as discussed in [133], they do not provide all depth cues, which leads to interaction problems. Moreover, different HMDs have different tracking systems, so they are all calibrated with an external, independent measuring system. In this regard, Ballestin et al. propose a registration framework for AR environments in which all real objects (including users) and virtual objects are registered in a common frame. The authors also compare the performance of both display types during interaction tasks: simple and complex tasks, such as 3D blind reaching, are performed with OST and VST HMDs to test their registration and the users’ interaction with both virtual and real environments. The results show that both technologies have issues but can still be used to perform different tasks [134].
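In SPAAM, the user repeatedly aligns an on-screen crosshair with a tracked 3D point; each alignment yields one 2D-3D correspondence, and a direct linear transform (DLT) over at least six correspondences gives the 3x4 projection matrix of the eye-display system. The sketch below assumes `P[2][3]` can be fixed to 1 and solves via the normal equations; production implementations typically normalize the data and use an SVD for numerical stability. Names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]
Point2 = Tuple[float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a square system a @ x = b."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: correspondences are degenerate")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def project(p: Sequence[Sequence[float]], world: Point3) -> Point2:
    """Apply a 3x4 projection matrix to a 3D point and dehomogenize."""
    x, y, z = world
    h = [row[0] * x + row[1] * y + row[2] * z + row[3] for row in p]
    return h[0] / h[2], h[1] / h[2]


def spaam_projection(world_pts: List[Point3], screen_pts: List[Point2]) -> List[List[float]]:
    """Estimate a 3x4 projection matrix from SPAAM-style alignments via DLT (P[2][3] = 1)."""
    if len(world_pts) < 6:
        raise ValueError("DLT needs at least 6 non-coplanar alignments")
    rows, rhs = [], []
    for (x, y, z), (u, v) in zip(world_pts, screen_pts):
        rows.append([x, y, z, 1.0, 0.0, 0.0, 0.0, 0.0, -u * x, -u * y, -u * z])
        rhs.append(u)
        rows.append([0.0, 0.0, 0.0, 0.0, x, y, z, 1.0, -v * x, -v * y, -v * z])
        rhs.append(v)
    n = 11
    # Least squares through the normal equations (A^T A) p = A^T b.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(n)] for i in range(n)]
    atb = [sum(r[i] * t for r, t in zip(rows, rhs)) for i in range(n)]
    p = solve_linear(ata, atb) + [1.0]
    return [p[0:4], p[4:8], p[8:12]]
```

Because the recovered matrix is defined only up to scale, it is compared with a ground-truth matrix after dividing both by their `[2][3]` entries; in practice, noisy human alignments make more than six correspondences advisable.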

Non-Geometric Calibration Method

Furthermore, these geometric calibrations lead to perceptual errors in the conversion from 3D to 2D [135]. To counter this problem, parallax-free video see-through HMDs were proposed; however, they are very difficult to build. In this regard, Cattari et al. (2019) propose a non-stereoscopic video see-through HMD for close-up views. It reduces perceptual errors by minimizing the reliance on geometric calibration. The authors also identify the problems of non-stereoscopic VST HMDs. Their aim is a system that provides a view consistent with the real world [136,137]. Moreover, State et al. [138] focus on a VST HMD system with zero eye-camera offset, while Bottechia et al. [139] present an orthoscopic monocular VST HMD prototype.

4.3. Tracking Technologies

A tracking technology is required to determine the position and orientation of the object of interest, which may be a physical object or one captured by a camera, relative to the coordinate frame (3D or 2D) of a tracking system. Technologies ranging from computer vision techniques to 6DoF sensors are used to track physical scenes.

4.4. Registration

Registration is the process of aligning the coordinate frame used to present the virtual content with the coordinate frame of the real-world scene. This ensures accurate alignment of the virtual content with the physical scene.
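Registration can be expressed as a rigid transform: a point defined in the virtual content’s own frame is mapped into the world (or camera) frame by a rotation `R` and translation `t`, that is, `p_world = R p_virtual + t`. In a real system, `R` and `t` would come from tracking (for example, the pose of a detected anchor); the sketch below uses a rotation about the vertical `z` axis as a hypothetical example.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def rotation_z(angle_rad: float) -> List[List[float]]:
    """3x3 rotation matrix about the z axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def to_world(p_virtual: Vec3, rotation: List[List[float]], translation: Vec3) -> Vec3:
    """Map a point from the virtual content's frame into the world frame: R p + t."""
    x, y, z = (sum(rotation[i][j] * p_virtual[j] for j in range(3)) + translation[i]
               for i in range(3))
    return (x, y, z)
```

For example, with content anchored 0.5 m along x and rotated 90° about z, the content’s local point `(1, 0, 0)` lands at roughly `(0.5, 1, 0)` in the world; any error in `R` or `t` shows up directly as misaligned virtual content.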

4.5. Composition

The correct correspondence between the physical environment and the virtual scene, which is generated from tracking updates, depends on the accuracy of two important steps: calibrating the virtual camera and registering the virtual content relative to the physical world. The virtual scene’s image is then composited, either optically (physically) or digitally. Whether composition is optical or digital depends on the configuration of the augmented reality system.
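Digital composition, as used in video see-through systems, amounts in its simplest form to per-pixel alpha blending of the rendered image over the camera image (the “over” operator). The sketch below blends one pixel, assuming non-premultiplied RGB values and a single opacity value per rendered pixel.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def composite_over(virtual: RGB, alpha: float, camera: RGB) -> RGB:
    """Blend a rendered pixel over a camera pixel; alpha=1 shows only virtual content."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    r, g, b = (alpha * v + (1.0 - alpha) * c for v, c in zip(virtual, camera))
    return (r, g, b)
```

Blending a half-transparent red virtual pixel `(255, 0, 0)` over a blue camera pixel `(0, 0, 255)` gives `(127.5, 0, 127.5)`. Because the camera image is just data, a digital compositor can also fully replace real pixels, for example to let virtual objects occlude real ones.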

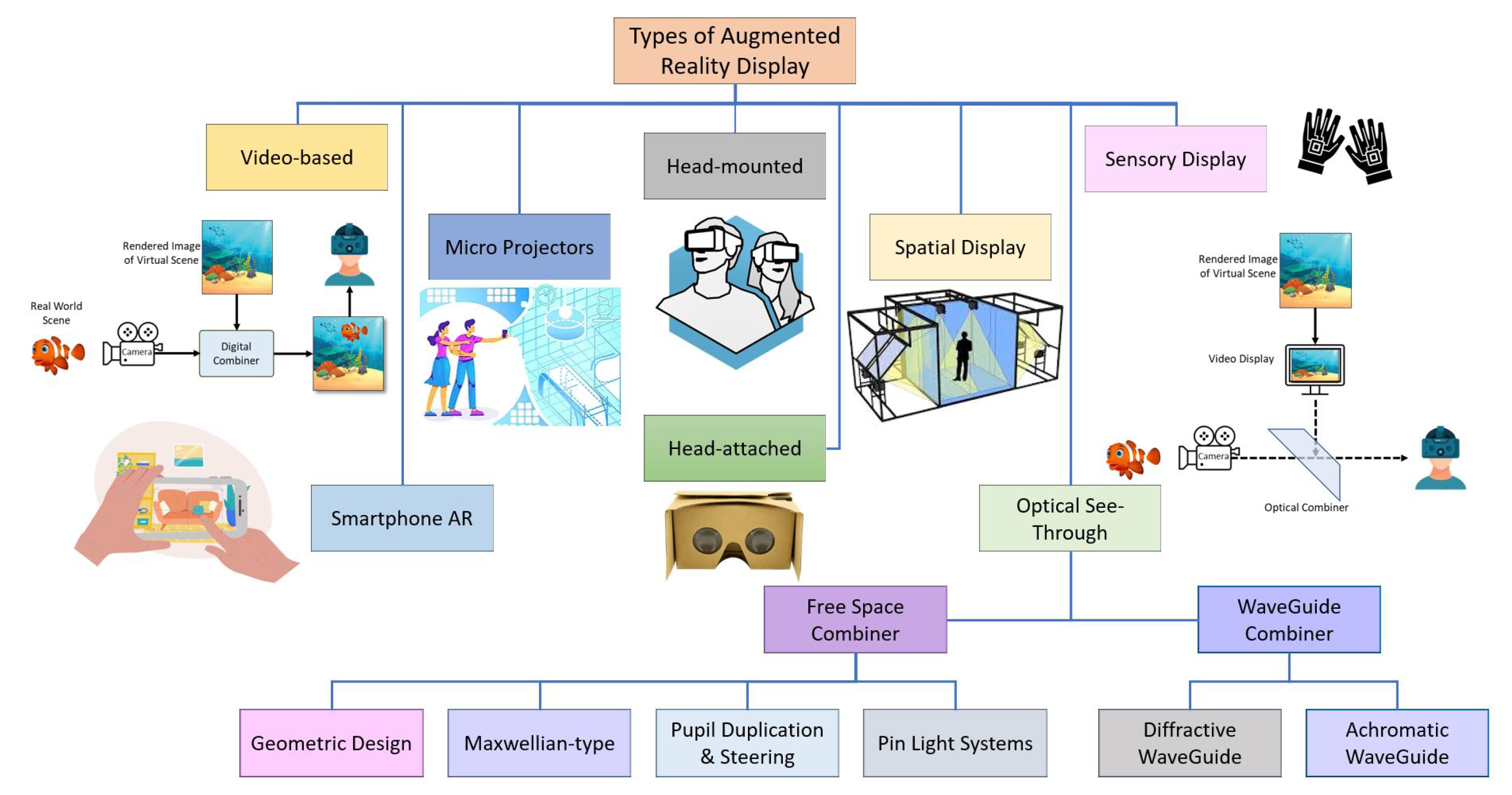

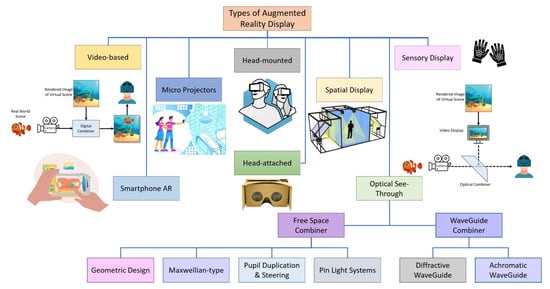

4.6. Types of Augmented Reality Displays

The ways of combining virtual content with the real environment divide AR displays into four major types, as depicted in Figure 8. All serve the same purpose: showing the merged image of real and virtual content to the user’s eye. The authors have categorized the latest optical display technologies following advances in holographic optical elements (HOEs). Other categories of AR display are also used, such as video-based, eye-multiplexed, and projection onto a physical surface.

Figure 8.

Types of augmented reality display technologies.

4.7. Optical See-Through AR Display

These displays use an optical system to merge real scenes with virtual scene images. Examples include the head-up display (HUD) systems of advanced cars and airplane cockpits. These systems use beam splitters, which take one of two forms: combined prisms or half mirrors. Most beam splitters reflect the image from the video display, and this reflected image is integrated with the real-world view seen through the splitter. With half mirrors, the arrangement is somewhat different: the real-world view is reflected by the mirror rather than the image of the video display, while the video display can be viewed through the mirror. Transparent projection systems are a semi-transparent optical technology used in optical display systems. Their semi-transparency lets the viewer see what lies behind the screen, and they use diffused light to show the displayed image. Examples of such semi-transparent optical systems include transparent projection film and transparent LCDs. Optical combiners are used to combine virtual and real scene images. Optical see-through displays have two sub-categories: free-space combiners and waveguide combiners [140]. Advances in technology have also made self-transparent displays possible, which helps miniaturize and simplify the structure of optical see-through displays.
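A useful consequence of optical combining is that it is additive: the eye receives the transmitted fraction of the real scene plus the reflected fraction of the display light. The idealized, loss-free model below (transmittance and reflectance values are assumptions) shows why optical see-through displays can brighten a region but cannot render virtual content darker than the see-through background.

```python
def optical_combiner(real_luminance: float, display_luminance: float,
                     transmittance: float, reflectance: float) -> float:
    """Luminance reaching the eye through an idealized partially reflective combiner."""
    # Real-world light is attenuated by the combiner; display light is added on top.
    return transmittance * real_luminance + reflectance * display_luminance
```

With 70% transmittance and 30% reflectance, a real background of 100 units and a display pixel of 50 units yield 85 units; turning the display pixel off yields 70, never less, so black virtual objects appear transparent unless extra occlusion hardware is used.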

4.7.1. Free-Space Combiners

Papers related to free-space combiners are discussed here. Pulli et al. [11] introduce Meta 2, a second-generation immersive optical see-through AR system. It is based on an optical engine that uses a free-form visor for a more immersive experience. Another design combines ultra-fast, high-resolution piezo linear actuators with an Alvarez lens to create a varifocal optical see-through HMD; it uses a beam splitter as an optical combiner to merge the light paths of the real and virtual worlds [12]. Another type of free-space combiner is the Maxwellian type [112,113,114,141]. In [142], the author employs a random structure as a spatial light modulator to develop a light-field near-eye display based on random pinholes. Recent work [143,144] introduces an integral imaging (InI)-based light field display that uses multi-focal micro-lenses to extend the depth of field. Another technique for enlarging the eyebox is pupil duplication and steering [145,146,147,148,149,150]. In this regard, refs. [102,151] present an eyebox-expansion method for holographic near-eye displays and a pupil-shifting holographic optical element (PSHOE) for its implementation; they also discuss the design architecture and the incorporation of the holographic optical element into the holographic display system. Pin-light systems are another recent technique, similar to the Maxwellian view, that extend it with a larger depth of field [103,104].

4.7.2. Wave-Guide Combiner

A waveguide combiner traps light by total internal reflection (TIR), whereas a free-space combiner lets light propagate without such confinement [104,105,106]. Waveguide combiners come in two types: diffractive waveguides and achromatic waveguides [107,152,153,154,155].
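Whether light stays trapped in a waveguide follows from Snell’s law: rays hitting the guide’s surface at an angle (from the normal) larger than the critical angle `θc = arcsin(n_outside / n_core)` are totally internally reflected. The sketch below computes this; the refractive indices are illustrative values for glass in air.

```python
import math


def critical_angle_deg(n_core: float, n_outside: float = 1.0) -> float:
    """Incidence angle from the surface normal above which TIR occurs."""
    if n_outside >= n_core:
        raise ValueError("TIR requires the guide to be optically denser than its surroundings")
    return math.degrees(math.asin(n_outside / n_core))


def is_trapped(incidence_deg: float, n_core: float, n_outside: float = 1.0) -> bool:
    """True if a ray at this incidence angle is guided by total internal reflection."""
    return incidence_deg > critical_angle_deg(n_core, n_outside)
```

For glass with `n = 1.5` in air, the critical angle is about 41.8°, so a ray at 45° is guided while one at 30° escapes. A higher-index glass (for example, `n = 1.8`, about 33.7°) guides a wider range of angles, which is one reason high-index materials are used to widen the field of view of waveguide combiners.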

4.8. Video-Based AR Displays

These displays work by digital processing [156]: in video display systems, the physical-world video and the virtual images are merged digitally. A video-based system relies on a video camera that digitizes the real-world scene. The rationale is that the physical world’s video can be composited with the virtual content digitally through digital image processing [157]. Usually, the user looks at the video display, and the camera is attached to the back of the display, facing the physical scene. These are known as “video see-through displays” because the user sees the real world through the digitized video shown on the display. Sometimes the camera is set up so that it shows an upside-down image of an object, creates the illusion of a virtual mirror, or places the image at a distant location.

4.9. Projection-Based AR Display

Projection onto real models [158] and walls [159] are examples of projection-based AR displays. All other kinds of display combine the real and virtual images on a display image plane; this display, however, overlays the virtual scene image directly onto the physical object. It works as follows:

- First, they track the user’s viewpoint.

- Secondly, they track the physical object.

- Then, they impart the interactive augmentation [160].

Most of these displays have a projector mounted on a wall or ceiling. This setup has an advantage as well as a disadvantage. The advantage is that the user does not need to wear anything. The disadvantage is that it is static and restricts the display to a single projection location. To resolve this problem and make projectors mobile, small projectors have been made that can easily be carried from place to place [161]. More recently, with advances in technology, miniaturized projectors have been developed that can be held in the hand [162] or worn on the chest [163] or head [164].

4.10. Eye-Multiplexed Augmented Reality Display

In eye-multiplexed AR displays, users combine the views of the virtual and real scenes mentally [72]. In other words, these displays do not combine the images digitally and therefore require less computational power [72]. The process is as follows. First, the virtual image is registered to the physical environment. Second, because of this registration, the user sees a rendered image of the same scene as the physical one. Because the display does not composite the rendered and physical images, the user has to combine the virtual and real scene images mentally. The display should be kept near the viewer’s eye for two reasons: the display can then appear as an inset into the real world, and the user needs less effort to composite the images mentally.

The division of the displays on the basis of the position of the display between the real and virtual scenes is referred to as the “eye to world spectrum”.

4.11. Head-Attached Display

Head-attached displays take the form of glasses, helmets, or goggles. They range in size from small to large, but with advances in technology they are becoming lighter to wear. They display the virtual image right in front of the user’s eye, so no other physical object can come between the virtual scene and the viewer’s eye; a third physical object therefore cannot occlude them. In this regard, Koulieris et al. [165] summarize work on immersive near-eye tracking technologies and displays. They identify gaps in the work on display technologies, namely user and environmental tracking and the vergence-accommodation conflict. They also suggest that advances in optics and focus-adjustable lenses will improve future headsets and create a much more comfortable HMD experience. In addition, Minoufekr et al. [166] illustrate and examine the verification of CNC machining using Microsoft HoloLens and explore the performance of AR with machine simulation. Machine models can easily be transferred from remote computers and loaded onto the HoloLens as holograms, and a simulation framework lets the machining process be observed before the actual machining. Further, Franz et al. [88] introduce two sharing techniques, over-the-shoulder AR and semantic linking, to investigate scenarios in which not every user wears a head-worn display (HWD). Semantic linking shows the virtual content’s contextual information on a large display. The experiment suggested that semantic linking and over-the-shoulder AR improved communication between participants compared with the baseline condition. Condino et al. [167] explore two main aspects. First, they gauge the reliability of AR headsets for complex craniotomies [168]. Second, they determine the efficacy of a patient-specific, template-based methodology for non-invasive, fast, and fully automatic planning-to-patient registration.

4.12. Head-Mounted Displays

Head-mounted displays (HMDs) are the displays most commonly used in AR research. They are also known as face-mounted displays or near-eye displays. The user wears them, and the display appears right in front of their eyes, most commonly in the form of goggles. Optical and video see-through configurations are the most common in HMDs; recently, however, head-mounted projectors small enough to wear have also been explored. Smart glasses such as Recon Jet and Google Glass are still being investigated for use as head-mounted displays. Barz et al. [169] introduce a real-time AR system that augments information obtained from recently attended objects, implemented on the state-of-the-art Microsoft HoloLens head-mounted display [170]. This technology can be very helpful in education, medicine, and healthcare. Fedosov et al. [171] introduce a skill system and conduct an outdoor field study with 12 snowboarders and skiers; the system offers a new technique for reviewing and sharing personal content. Reuter et al. [172] introduce a coordinative concept, RescueGlass, for German Red Cross rescue dog units. It consists of a smartphone app and a corresponding hands-free HMD (head-mounted display) [173] and was evaluated in the field of emergency response and management. The initial design targets collaborative professional mobile tasks and is delivered through smart glasses; however, the evaluation revealed a number of technical limitations that could be addressed in future investigations. Tobias et al. [174] explore aspects such as ambiguity, depth cues, performed tasks, user interface, and perception for 2D and 3D visualization, with the help of examples. They also categorize head-mounted displays, introduce new concepts for collaboration tasks, and explain concepts of big data visualization. The results suggest that collaboration and workspace decisions could be improved with their AR workspace prototype. In addition, these displays have lenses between the virtual view and the user’s eye, as in microscopes and telescopes, so more direct ways of viewing images are being investigated, such as the virtual retinal display developed in 1995 [175]. Andersson et al. [176] show that training, maintenance, process monitoring, and programming can be improved by integrating AR with human-robot interaction scenarios.

4.13. Body-Attached and Handheld Displays

Early experiments with handheld display devices tethered small LCDs to computers [177,178]. Advances in technology have since improved handheld devices in many ways; most importantly, they have become powerful enough to render AR visuals. Many are now used as AR displays, including personal digital assistants [179], cell phones [180], tablet computers [181], and ultra-mobile PCs [182].

4.13.1. Smartphones and Computer Tablets

Today’s tablets and smartphones are powerful enough to run AR applications because they combine various sensors, cameras, and powerful graphics processors. For instance, Google’s Project Tango and ARCore use depth imaging to deliver AR experiences. Chan et al. [183] discuss the challenges of applying and investigating methods to enhance direct touch interaction on intangible displays. Jang et al. [184] explore e-leisure, motivated by the growing use of mobile AR in outdoor environments. They investigate three approaches to tracking the human body (markerless, marker-based, and sensorless), and their results suggest that markerless tracking cannot support e-leisure on mobile AR. With advances in electronics, OLED panels and transparent LCDs have been developed, which may make handheld optical see-through devices available in the future. Fang et al. [185] focus on two main aspects of mobile AR: first, real-time 6DoF motion tracking based on sensor fusion of an inertial sensor and a monocular camera; and second, an adaptive filter design that balances latency against jitter. Furthermore, Irshad et al. [186] introduce an evaluation method for assessing mobile AR apps, and Loizeau et al. [187] explore a way of implementing AR for maintenance workers in industrial settings.
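The trade-off between latency and jitter that an adaptive filter must balance can be illustrated with a small sketch. The code below is not the design of Fang et al. [185]; it substitutes the One Euro (1€) filter of Casiez et al., a widely used adaptive low-pass filter whose cutoff frequency rises with the estimated speed of the signal. It therefore smooths heavily when a tracked value is nearly still (less jitter) and lightly when it moves quickly (less lag). The class name and default parameters are illustrative assumptions; in practice one filter instance would run per pose coordinate.

```python
import math


class OneEuroFilter:
    """Adaptive low-pass filter (the One Euro filter), used here only as an
    illustration of trading jitter against latency; not the cited design."""

    def __init__(self, rate_hz: float, min_cutoff: float = 1.0,
                 beta: float = 0.0, d_cutoff: float = 1.0) -> None:
        self.rate = rate_hz            # sampling rate of the tracker (Hz)
        self.min_cutoff = min_cutoff   # cutoff (Hz) when the signal is still
        self.beta = beta               # how fast the cutoff grows with speed
        self.d_cutoff = d_cutoff       # cutoff (Hz) for the speed estimate
        self.x_prev = None
        self.dx_prev = 0.0

    def _alpha(self, cutoff: float) -> float:
        # Smoothing factor of a first-order low-pass filter at this cutoff.
        tau = 1.0 / (2.0 * math.pi * cutoff)
        te = 1.0 / self.rate
        return 1.0 / (1.0 + tau / te)

    def __call__(self, x: float) -> float:
        if self.x_prev is None:        # first sample passes through unchanged
            self.x_prev = x
            return x
        # Estimate the signal's speed and smooth that estimate.
        dx = (x - self.x_prev) * self.rate
        a_d = self._alpha(self.d_cutoff)
        dx_hat = a_d * dx + (1.0 - a_d) * self.dx_prev
        # Faster motion -> higher cutoff -> less smoothing and less lag.
        cutoff = self.min_cutoff + self.beta * abs(dx_hat)
        a = self._alpha(cutoff)
        x_hat = a * x + (1.0 - a) * self.x_prev
        self.x_prev = x_hat
        self.dx_prev = dx_hat
        return x_hat
```

Lowering `min_cutoff` suppresses more jitter at rest, while raising `beta` makes the filter follow fast movements with less delay.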

4.13.2. Micro Projectors

Micro projectors are an example of a mobile phone-based AR display. Researchers are investigating versions of these devices that can be worn on the chest [188], shoulder [189], or wrist [190]. However, most are handheld and look much like flashlights [191].

4.13.3. Spatial Displays

Spatial displays provide a larger display area. Hence, they are used in locations where many users can benefit from them, such as public displays. These displays are also static: they are fixed in position and cannot be moved.

Common examples of spatial displays are those that create optical see-through displays using optical beam combiners, such as the half-mirror workbench [192,193,194,195] and virtual showcases. Half mirrors are commonly used to integrate haptic interfaces, and they also enable closer virtual interaction. Virtual showcases can display virtual images on solid physical objects [196,197,198,199,200]. These displays could also be combined with other technologies to create further experiences. Volumetric 3D displays [201], autostereoscopic displays [202], and other three-dimensional displays are further promising directions for research.

4.13.4. Sensory Displays

In addition to visual displays, some displays have been developed for other types of sensory information, such as haptic and audio. Audio augmentation is easier than video augmentation because real and virtual sounds mix naturally. The most challenging part is making the user perceive the virtual sound as spatially located. Multi-channel speaker systems and stereo headphones with the head-related transfer function (HRTF) are being researched to address this challenge [203]. Digital sound projectors exploit the reverberation and interference of sound from an array of speakers [204]. Mic-through and hear-through systems, developed by Lindeman [205,206], work effectively and are analogous to video and optical see-through displays. Their feasibility was tested using a bone conduction headset. Other sensory experiences are also being researched, for example, augmentation of the gustatory and olfactory senses. Olfactory and visual augmentation of a cookie-eating scene was developed by Narumi [207]. Table 2 gives the primary types of augmented reality display technologies and discusses their advantages and disadvantages.
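Full HRTF rendering filters each ear’s signal with measured, direction-dependent responses, but one of the underlying spatial cues is simple to compute: the interaural time difference (ITD), the extra time sound needs to reach the far ear. The sketch below uses Woodworth’s spherical-head approximation, ITD = (r/c)(θ + sin θ), and converts it into a per-ear delay in samples. The head radius, speed of sound, and function names are assumptions for illustration, and the sketch models only the timing cue, not the spectral filtering of a real HRTF.

```python
import math
from typing import Tuple

HEAD_RADIUS_M = 0.0875        # assumed average head radius (metres)
SPEED_OF_SOUND_M_S = 343.0    # speed of sound in air at roughly 20 degrees C


def woodworth_itd(azimuth_deg: float) -> float:
    """Interaural time difference in seconds for a distant sound source.

    Azimuth 0 is straight ahead; positive angles are to the listener's right.
    Woodworth's spherical-head formula is meant for -90..90 degrees only.
    """
    if abs(azimuth_deg) > 90.0:
        raise ValueError("azimuth must lie within -90..90 degrees")
    theta = math.radians(azimuth_deg)
    # Positive result: the sound reaches the right ear first.
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


def ear_delays_samples(azimuth_deg: float,
                       sample_rate: int = 48000) -> Tuple[int, int]:
    """(left_delay, right_delay) in whole samples; only the far ear is delayed."""
    itd = woodworth_itd(azimuth_deg)
    delay = round(abs(itd) * sample_rate)
    return (delay, 0) if itd > 0 else (0, delay)
```

For a source directly to the right, `ear_delays_samples(90.0)` returns `(31, 0)` at 48 kHz: the left ear’s copy of the signal is delayed by about 0.66 ms.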

Table 2.

A Summary of Augmented Reality Display Technologies.

4.14. Summary

This section presented a comprehensive survey of AR display technologies. Research on these displays has focused not only on combining virtual and real-world scenes visually but also on augmenting other senses, such as the olfactory and gustatory senses. Previously, head-mounted displays were the most common; now, handheld devices such as smartphones and tablets are widely used. This may change again in the future, depending on further research and on device costs. The role of display technologies was described first, followed by the process of combining real and augmented content and presenting it to users. The section described where optical see-through and video see-through displays are used, along with details of the devices. In video see-through (VST) head-mounted displays, cameras and computer vision techniques are used to register the real environment, whereas in optical see-through (OST) displays the cameras are replaced by the user’s eyes, and VST calibration techniques cannot be used because of the resulting complexity. Optical see-through is currently a popular approach. Different calibration approaches were presented and analyzed, and the analysis showed that Recycled INDICA is more accurate than the other common techniques presented in the paper. This section also presented video-based AR displays. Figure 8 presents a classification of display technologies into video-based, head-mounted, and sensory-based approaches. The functions and applications of the various display technologies, and the domains in which each is applicable, are summarized in Table 2.

5. Walking and Distance Estimation in AR

The effectiveness of AR technologies depends on how users perceive their distance from both real and virtual objects [214,215]. Mikko et al. performed experiments on depth judgment using stereoscopic depth perception [216]. This perception can differ depending on whether objects are on or off the ground. Carlos et al. compared the perceived distance of objects on and off the ground in an experiment in which participants judged their distance from cubes placed on the ground and off the ground. The results showed a difference between the two perceptions, and also that the perception depends on whether vision is monocular or binocular [217]. Much research has been done on AR for outdoor and indoor navigation [214]. Umair et al. present an indoor navigation system that uses Google Glass as a wearable head-mounted display and a pre-scanned 3D map to track the indoor environment. The system was tested on both the HMD and handheld devices such as smartphones, and the HMD proved more accurate than the handheld devices; however, the authors state that the system still needs improvement [218].
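The binocular cue behind stereoscopic depth judgments can be quantified with idealized viewing geometry. For a point straight ahead at distance D, viewed with an interpupillary distance (IPD) b, the eyes converge by an angle 2·atan(b/(2D)); the binocular disparity between two such points is the difference of their vergence angles. The sketch below assumes an average IPD of 63 mm and uses hypothetical function names; it is not taken from the cited studies, but it shows why disparity, and hence binocular depth discrimination, shrinks quickly with distance.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Convergence angle (degrees) of two eyes fixating a point straight ahead."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def relative_disparity_arcmin(near_m: float, far_m: float,
                              ipd_m: float = 0.063) -> float:
    """Binocular disparity between two points straight ahead, in arcminutes."""
    return 60.0 * (vergence_angle_deg(near_m, ipd_m)
                   - vergence_angle_deg(far_m, ipd_m))
```

Under these assumptions, the disparity between points at 1 m and 2 m is about 108 arcminutes, whereas between 5 m and 6 m it is only about 7 arcminutes.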

6. AR Development Tools

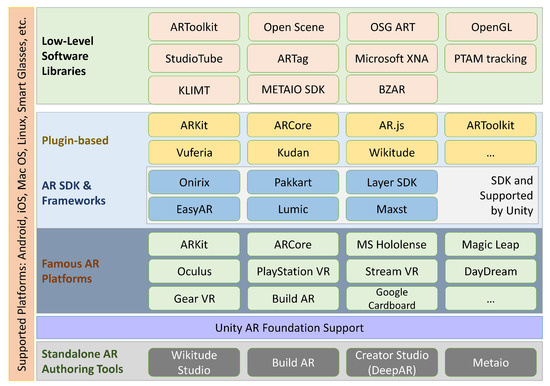

In addition to tracking and display devices, which are hardware, software tools are required to create an AR experience. This section explores these tools and software libraries, covering both commercially available tools and research-oriented ones. Different software applications require different AR development tools. Figure 9 summarizes the low-level software libraries, plug-ins, platforms, and standalone tools for the reader.

Figure 9.

Stack of development libraries, plug-ins, platforms, and standalone authoring tools for augmented reality development.

Some tools prefer computer vision-based tracking (see Section 3.1.2) for indoor experiences, while others use sensors for outdoor experiences. The choice of tool depends on the platform (web or mobile) for which it is designed. The following subsections discuss the available AR tools, both novel and widely known. Broadly, the following categories will be discussed:

- Low-level software development tools: require advanced technical and programming skills.

- Rapid prototyping tools: allow an AR experience to be built quickly.

- Plug-ins that run within existing applications.

- Standalone tools that are specifically designed for non-programmers.

- Next-generation AR development tools.

6.1. Low-Level Software Libraries and Frameworks

Low-level software libraries and frameworks expose core tracking and display functions for creating an AR experience. One of the most commonly used AR software libraries, as discussed in the previous section, is ARToolKit. ARToolKit was developed by Kato and Billinghurst and has two versions [219]. It works on the principle of a fiducial marker-based registration system [220]. The first version is open source and provides marker-based tracking, while the second is a commercial version that provides natural feature tracking. Advances in ARToolKit’s tracking are discussed in [213,221,222,223,224]. ARToolKit is written in C and runs on Linux, Windows, and Mac OS desktops. It does not require complex graphics or built-in support to perform its main function of tracking, and it can operate with simple low-level OpenGL-based rendering. To provide a complete AR experience, ARToolKit requires additional libraries such as osgART and the OpenSceneGraph library. OpenSceneGraph is an open-source scene graph library written in C++ that uses OpenGL for rendering. The osgART library links OpenSceneGraph and ARToolKit, and its advanced rendering techniques help in developing interactive AR applications. osgART has a modular structure and can work with other tracking libraries, such as PTAM and BazAR, if ARToolKit is not appropriate. BazAR is a tracking and geometric calibration library, while PTAM is a SLAM-based tracking library available under both research and commercial licenses. All of these libraries are available for building working AR applications.
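Marker-based registration of the kind ARToolKit performs starts from the four detected corners of a square fiducial. A core step is estimating the planar homography that maps marker-plane coordinates to image pixels; the camera pose can then be recovered from this homography and the camera’s intrinsic parameters (not shown). The pure-Python sketch below solves for the homography from exactly four corner correspondences by fixing H[2][2] = 1 and applying Gaussian elimination. It is a generic linear (DLT-style) illustration with hypothetical function names, not ARToolKit’s implementation, which has its own pose-estimation and refinement steps.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix3 = List[List[float]]


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a square linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography_from_corners(marker: Sequence[Point],
                            image: Sequence[Point]) -> Matrix3:
    """Homography H (with H[2][2] = 1) mapping 4 marker corners to 4 image corners."""
    if len(marker) != 4 or len(image) != 4:
        raise ValueError("expected exactly four corner correspondences")
    a: List[List[float]] = []
    b: List[float] = []
    for (x, y), (u, v) in zip(marker, image):
        # u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), rearranged to be linear in h.
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = _solve(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def project(h: Matrix3, p: Point) -> Point:
    """Map a marker-plane point into the image using homography h."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)
```

Once H is known, any point defined on the marker plane (for example, an anchor for virtual content) can be mapped into the camera image with `project`.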
Goblin XNA [208] is another platform, with components for physics-based interaction, video capture, output to a head-mounted AR display, and three-dimensional user interfaces. With Goblin XNA, existing XNA games can easily be modified [209]. Goblin XNA is available as a research and educational platform. Studierstube [210] is another AR system with which a complete AR application can easily be developed. It supports tracking hardware, input devices, and different types of displays, including AR HMDs and desktops. Studierstube was specially developed to support collaborative applications [211,212]. It is a research-oriented library and is not available as commercial, easy-to-use software. Metaio SDK [225] was another commercially available SDK. It offered a variety of AR tracking technologies, including image tracking, marker tracking, face tracking, external infrared tracking, and three-dimensional object tracking. However, in May 2015, Metaio was acquired by Apple, and Metaio products and subscriptions are no longer available for purchase. Some of these libraries, such as Studierstube and ARToolKit, were not initially developed for PDAs but have since been redeveloped for them [226]. The PDA version of Studierstube added supporting libraries such as OpenTracker, PocketKnife for hardware abstraction, KLIMT for mobile rendering, and the formal libraries for communication (ACE) and scene graphs. Together, these libraries enabled complete mobile collaborative AR experiences [227,228]. Similarly, ARToolKit incorporated the OpenSceneGraph library to provide mobile AR experiences. It worked on Android and iOS through a native development kit that included some Java wrapper classes. Qualcomm’s low-level Vuforia library also provided AR experiences for mobile devices. Both ARToolKit and Vuforia can be installed as plug-ins in Unity, which provides easy-to-use application development for various platforms.
A number of sensor-, vision-, and location-based low-level libraries, such as Metaio SDK and Droid, were developed for outdoor AR experiences. In addition to these low-level libraries, the HIT Lab NZ Outdoor AR library provided a high-level abstraction for outdoor AR experiences [229]. Furthermore, Hoppala-Augmentation is a well-known mobile location-based AR tool; the geotags it creates can be browsed in AR browsers including Layar, Junaio, and Wikitude [230].
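Location-based AR browsers place geotagged content by comparing the user’s GPS position and compass heading with each geotag’s coordinates. The sketch below computes the great-circle distance and initial bearing to a geotag with the haversine formula, then maps the bearing onto a horizontal screen position for a given camera field of view. The function names, the spherical Earth radius, and the linear angle-to-pixel mapping (a real pinhole camera would use a tangent) are simplifying assumptions; the sketch does not reproduce the APIs of Layar, Junaio, Wikitude, or the HIT Lab NZ library.

```python
import math
from typing import Optional, Tuple

EARTH_RADIUS_M = 6371000.0  # mean Earth radius; a spherical Earth is assumed


def distance_and_bearing(lat1: float, lon1: float,
                         lat2: float, lon2: float) -> Tuple[float, float]:
    """Great-circle distance (m) and initial bearing (deg clockwise from north)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlat = p2 - p1
    dlon = math.radians(lon2 - lon1)
    # Haversine formula for the central angle between the two points.
    a = (math.sin(dlat / 2) ** 2
         + math.cos(p1) * math.cos(p2) * math.sin(dlon / 2) ** 2)
    dist = 2.0 * EARTH_RADIUS_M * math.asin(math.sqrt(a))
    # Initial bearing from point 1 towards point 2.
    y = math.sin(dlon) * math.cos(p2)
    x = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dlon)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return dist, bearing


def screen_x(bearing_deg: float, heading_deg: float,
             fov_deg: float, width_px: int) -> Optional[float]:
    """Horizontal pixel position of a geotag, or None if outside the field of view."""
    # Signed angle between camera heading and geotag, wrapped to -180..180.
    rel = (bearing_deg - heading_deg + 180.0) % 360.0 - 180.0
    if abs(rel) > fov_deg / 2.0:
        return None
    return (rel / fov_deg + 0.5) * width_px
```

The wrap-around step matters near north: a geotag at a bearing of 10° still appears on screen when the device faces 350°.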

6.2. ARTag

ARTag was designed to overcome several limitations of ARToolKit:

- Reducing inaccurate pattern matching by preventing false-positive matches.

- Improving performance under uneven lighting conditions.

- Making marker detection more robust to occlusion.

However, ARTag is no longer under active development or supported by the NRC lab, and a commercial license is not available.
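The false-positive problem that ARTag addresses arises when template matching forces any square-looking region onto the closest stored pattern. ARTag instead encodes marker IDs digitally in a grid of binary cells protected by checksum and error-correcting codes. The sketch below illustrates the general idea with a simpler scheme: the cells read from a candidate marker are tried in all four orientations and accepted only if they lie within a small Hamming distance of a codeword in a hypothetical codebook. It is not ARTag’s actual coding scheme; a real codebook would also be chosen so that all codewords, including their rotations, are far apart from one another.

```python
from typing import Dict, List, Optional, Tuple

Grid = List[List[int]]  # square grid of 0/1 cells read from the marker interior


def rotate(grid: Grid) -> Grid:
    """Rotate a square grid 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def to_int(grid: Grid) -> int:
    """Pack the cells row by row into an integer bit pattern."""
    value = 0
    for row in grid:
        for cell in row:
            value = (value << 1) | cell
    return value


def identify(grid: Grid, codebook: Dict[int, int],
             max_errors: int = 1) -> Optional[Tuple[int, int]]:
    """Return (marker_id, clockwise_quarter_turns) or None.

    A candidate is accepted only if some rotation lies within max_errors bit
    flips of a codeword, so random patterns are rejected instead of being
    matched to the nearest template.
    """
    best = None  # (hamming_distance, marker_id, turns)
    g = grid
    for turns in range(4):
        bits = to_int(g)
        for code, marker_id in codebook.items():
            dist = bin(bits ^ code).count("1")
            if dist <= max_errors and (best is None or dist < best[0]):
                best = (dist, marker_id, turns)
        g = rotate(g)
    return None if best is None else (best[1], best[2])
```

Here `turns` reports how many clockwise quarter turns were applied to the observed grid to reach the stored orientation, which also tells the tracker how the marker is rotated in the image.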

6.3. Wikitude Studio

Wikitude Studio is a web-based authoring tool for creating mobile AR applications. It uses computer vision-based technology to register the real world. Several types of media, such as animations and 3D models, can be used to create an AR scene. An important feature of Wikitude is that the mobile AR content developed can be uploaded not only to the Wikitude AR browser app but also to a custom mobile app [231]. A commercial Wikitude plug-in is also available for Unity to enhance the AR development experience.

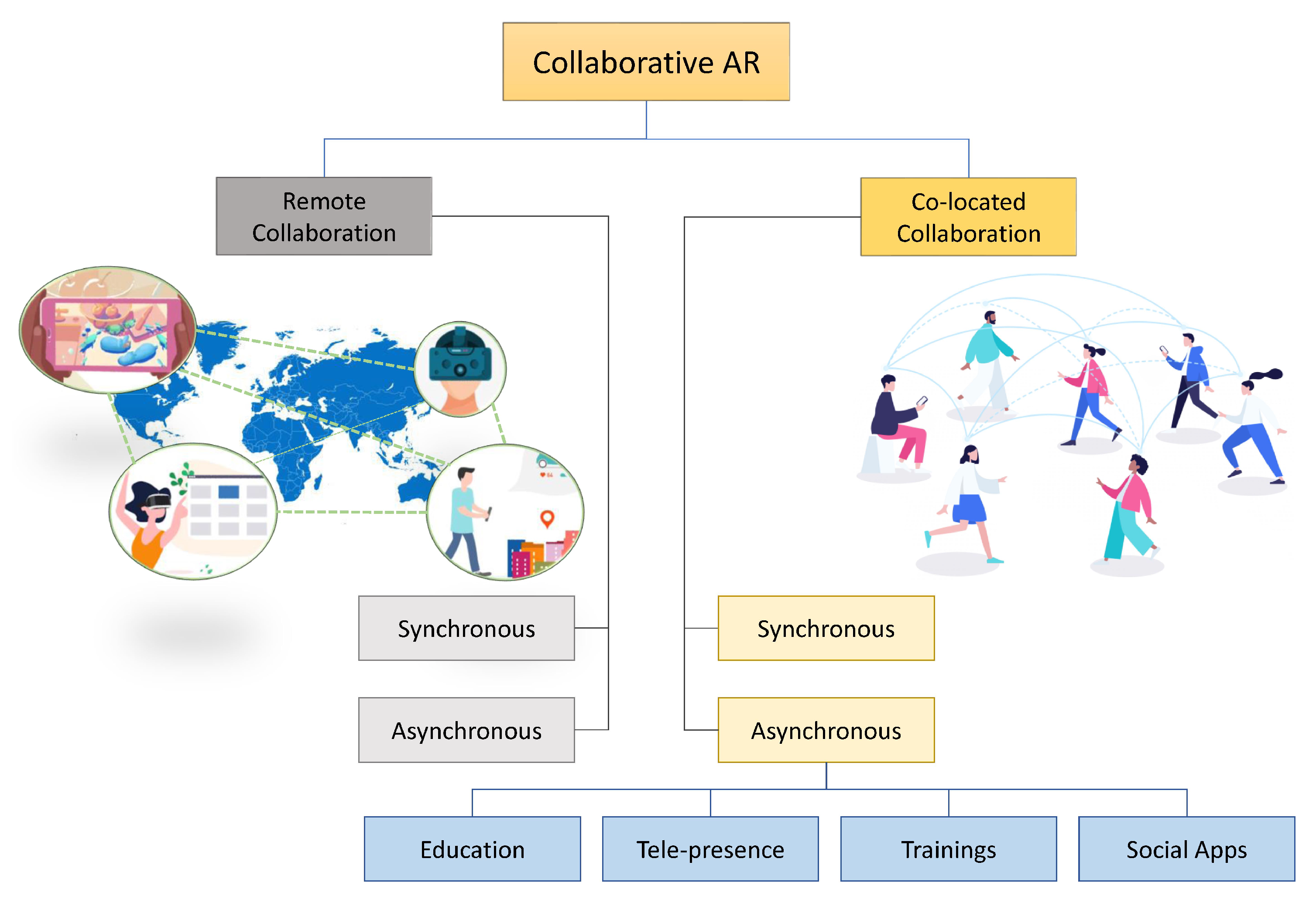

6.4. Standalone AR Tools