Multi-Objective Association Detection of Farmland Obstacles Based on Information Fusion of Millimeter Wave Radar and Camera

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

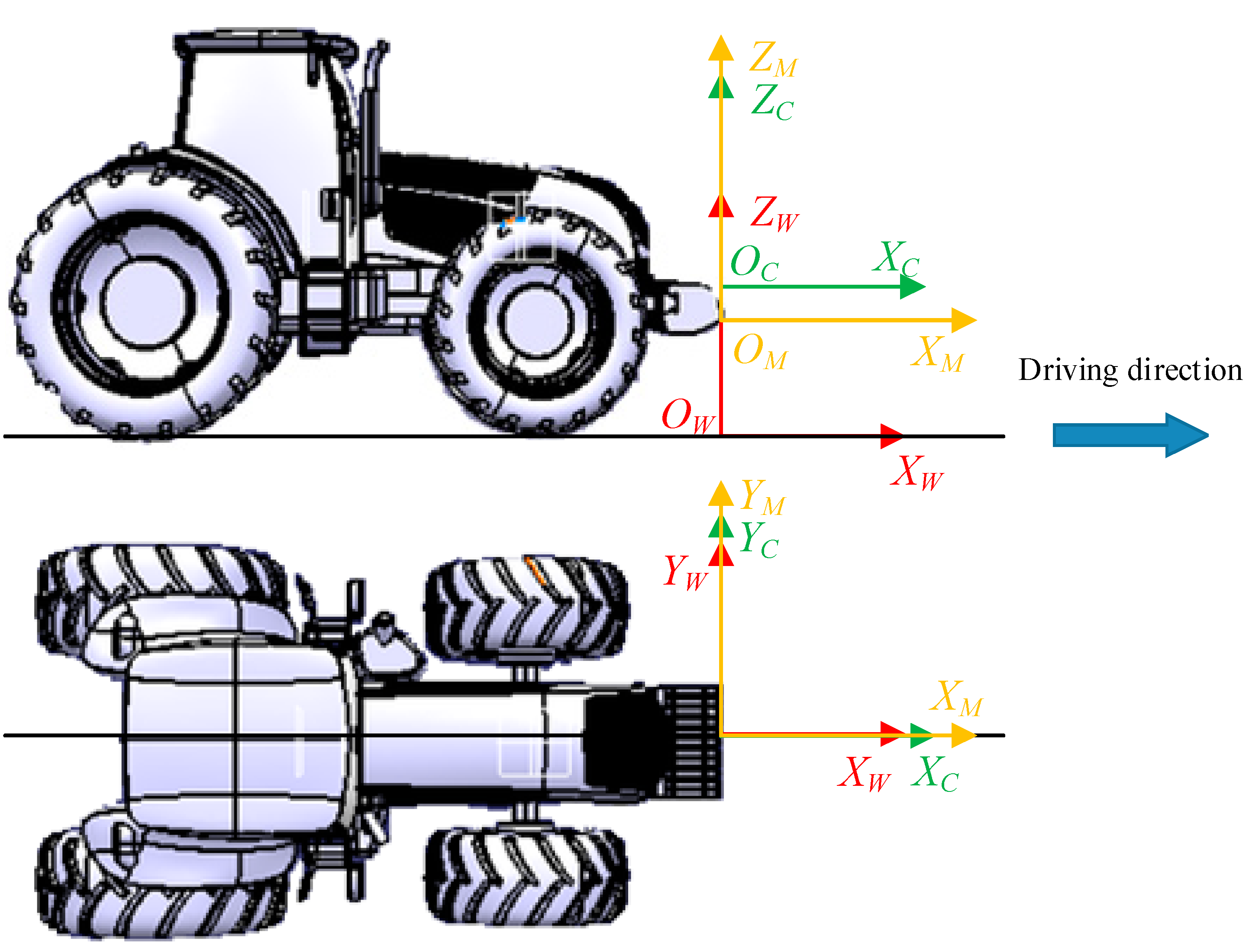

3.1. Space-Time Reference Alignment of Sensors

3.1.1. Time Reference Alignment

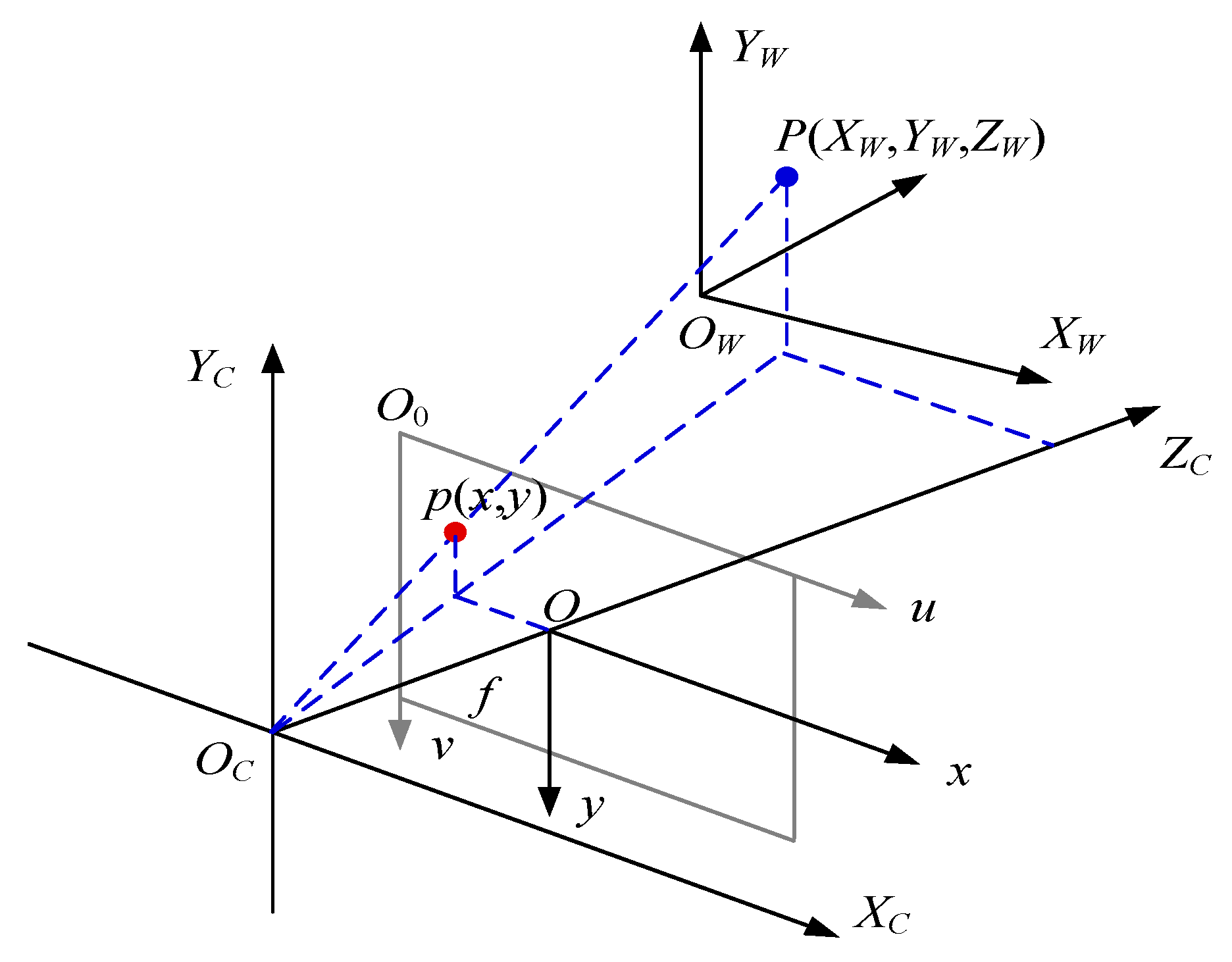
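Time reference alignment places radar and camera data on a common time base. One common way to do this is to interpolate the higher-rate radar measurements to each camera frame timestamp. The sketch below assumes linear interpolation, timestamps in seconds, and no extrapolation beyond the radar record. The function name `align_to_frame` and the sample values are hypothetical illustrations, not the paper's procedure.

```python
from bisect import bisect_left


def align_to_frame(radar_times, radar_values, frame_time):
    """Linearly interpolate radar samples (e.g. range in m) to a camera frame time.

    Hypothetical helper: radar_times must be sorted ascending. Returns None when
    frame_time lies outside the radar time span (no extrapolation is attempted).
    """
    if not radar_times or frame_time < radar_times[0] or frame_time > radar_times[-1]:
        return None
    i = bisect_left(radar_times, frame_time)  # first radar sample at or after frame_time
    if radar_times[i] == frame_time:
        return radar_values[i]
    t0, t1 = radar_times[i - 1], radar_times[i]
    v0, v1 = radar_values[i - 1], radar_values[i]
    w = (frame_time - t0) / (t1 - t0)  # fraction of the way from t0 to t1
    return v0 + w * (v1 - v0)


# Radar sampled at 20 Hz; a camera frame captured at t = 0.075 s.
print(align_to_frame([0.0, 0.05, 0.10], [10.0, 9.8, 9.6], 0.075))
```

Nearest-timestamp matching is a simpler alternative when the sensor rates are close; interpolation avoids up to half a radar period of timing error.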

3.1.2. Transformation between mmWave Radar Coordinate System and Pixel Coordinate System

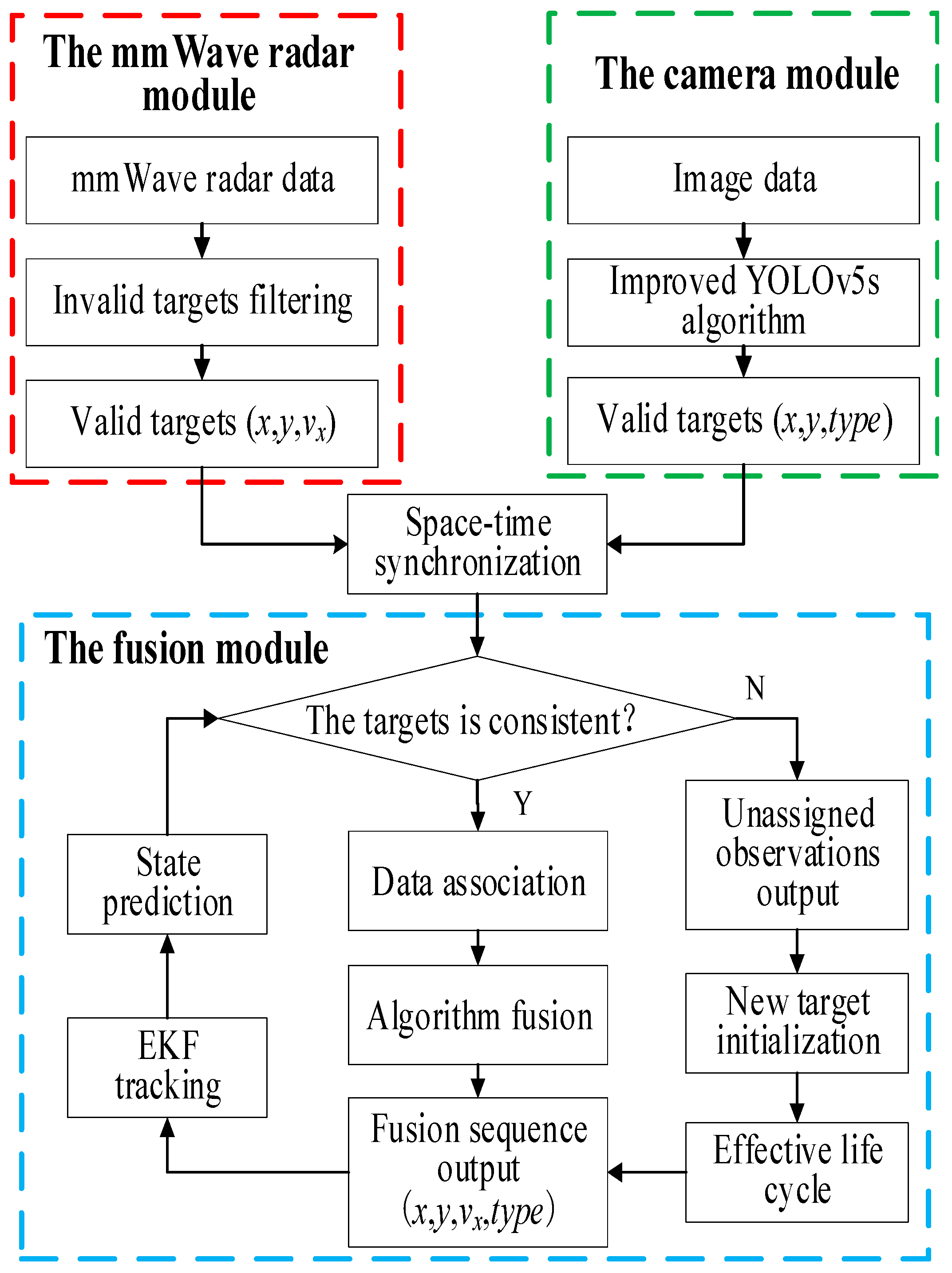
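Mapping a radar detection into the pixel coordinate system generally takes three steps: polar to Cartesian in the radar frame, a rigid transform (extrinsics R, t) into the camera frame, and pinhole projection with the camera intrinsics. A minimal sketch follows. It assumes the radar frame has x to the right, y forward, z up, with targets on the z = 0 plane, and that lens distortion is ignored. The extrinsic and intrinsic values in the example are hypothetical, not the calibrated values from the paper.

```python
import math


def radar_to_pixel(r, theta, R, t, fx, fy, cx, cy):
    """Project a radar detection (range r in m, azimuth theta in rad) to pixel (u, v).

    Assumed radar frame: x right, y forward, z up; azimuth measured from +y.
    R (3x3) and t (3,) map radar coordinates into the camera frame
    (X right, Y down, Z forward). Returns None if the point is behind the camera.
    """
    p = (r * math.sin(theta), r * math.cos(theta), 0.0)
    # Rigid transform: P_c = R @ p + t
    Xc, Yc, Zc = (sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
    if Zc <= 0:
        return None
    # Pinhole projection with focal lengths (fx, fy) and principal point (cx, cy)
    return fx * Xc / Zc + cx, fy * Yc / Zc + cy


# Hypothetical mounting: radar axes rotated into camera axes, 0.5 m below and 0.2 m ahead.
R_rc = [[1, 0, 0], [0, 0, -1], [0, 1, 0]]
print(radar_to_pixel(10.0, 0.0, R_rc, [0.0, 0.5, 0.2], 800, 800, 640, 360))
```

A target straight ahead lands on the image centre column (u = 640) and below the principal point, because the radar sits lower than the camera.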

3.2. Fusion Processing of Sensor Information

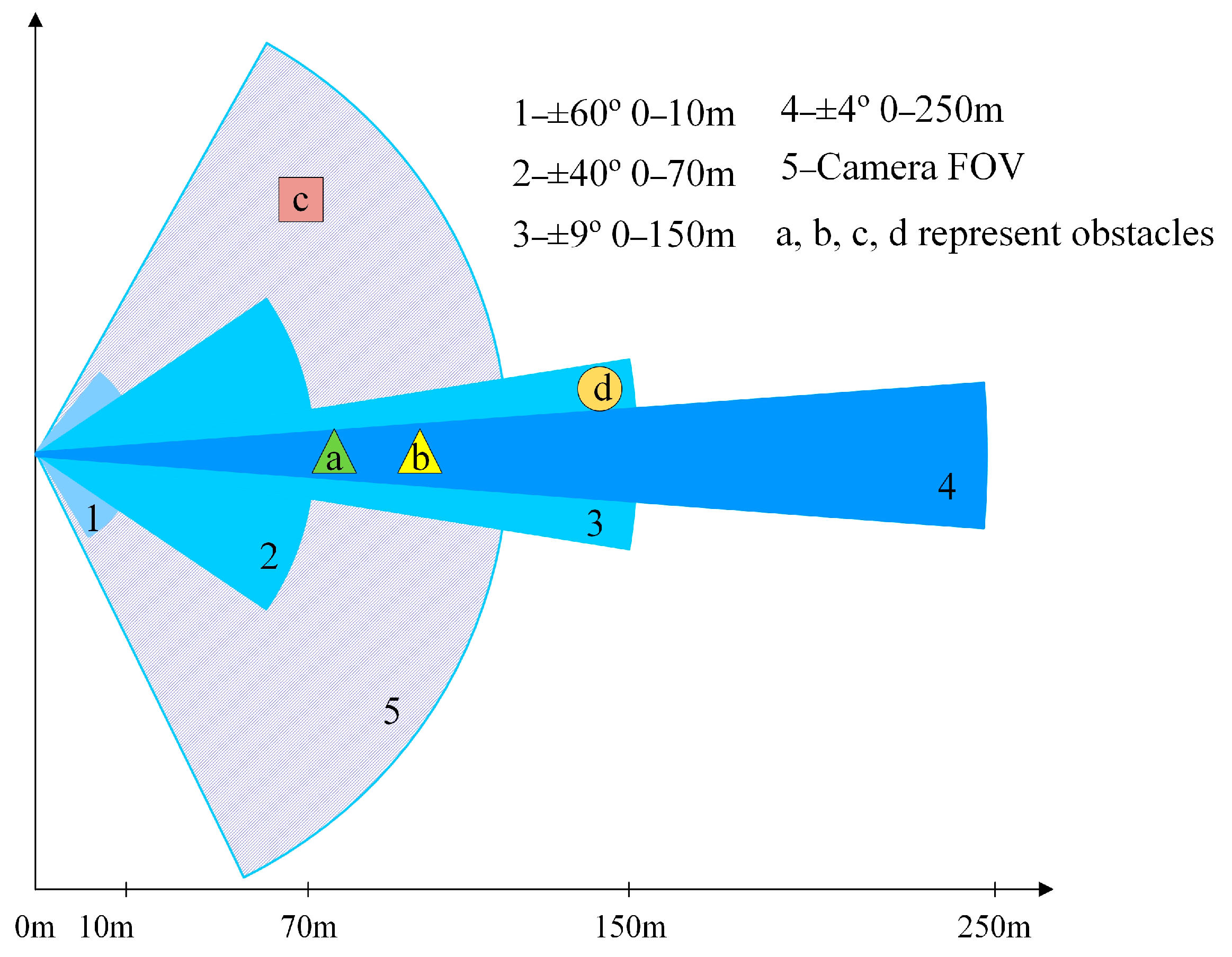

3.2.1. Introduction to the Obstacle Detection Algorithm

3.2.2. Decision-Level Fusion of Sensor Information

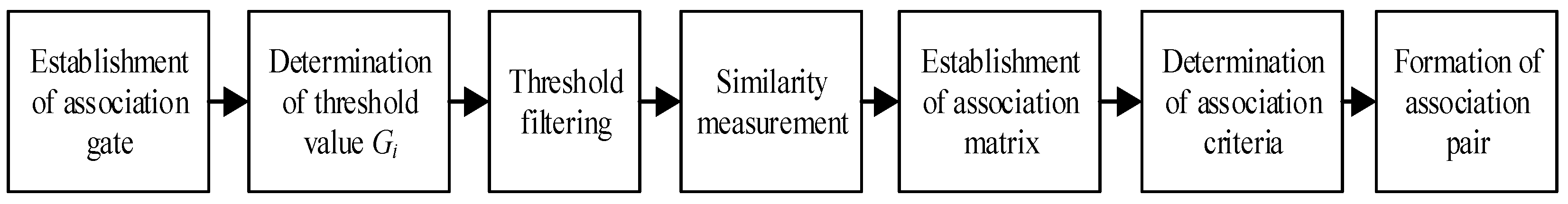

3.2.3. Data Association Based on the Global Nearest Neighbor Method

(1) Establishment of the association gate

(2) Determination of the threshold value $G_i$ and threshold filtering

(3) Similarity measurement

(4) Establishment of the association matrix

(5) Determination of the association criteria and formation of association pairs
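The five steps above can be illustrated with a small sketch. It assumes radar points have already been projected into the image, uses Euclidean pixel distance as the similarity measure, applies a single gate value for every pair, and charges the gate value for leaving a radar point unassigned. These are illustrative choices; the paper's exact gate $G_i$ and similarity measure may differ. The global nearest neighbor criterion is implemented as the one-to-one assignment with the smallest total cost.

```python
import math


def gnn_associate(radar_pts, camera_pts, gate):
    """Global nearest neighbour association of radar and camera detections.

    radar_pts, camera_pts: lists of (u, v) pixel positions. A pair is admissible
    only if its distance is within `gate`; leaving a radar point unassigned costs
    `gate`. Returns (radar_index, camera_index) pairs of the cheapest assignment.
    """
    # Steps (1)-(4): gated distance (similarity) matrix; None = outside the gate.
    cost = []
    for r in radar_pts:
        row = []
        for c in camera_pts:
            d = math.dist(r, c)
            row.append(d if d <= gate else None)
        cost.append(row)

    best = {"cost": math.inf, "pairs": []}

    # Step (5): exhaustive search for the globally cheapest one-to-one assignment.
    # Exponential in the worst case, acceptable for a few obstacles per frame.
    def search(i, used, total, pairs):
        if total >= best["cost"]:
            return  # prune: this branch cannot beat the best assignment found so far
        if i == len(radar_pts):
            best["cost"], best["pairs"] = total, pairs
            return
        for j, d in enumerate(cost[i]):
            if d is not None and j not in used:
                search(i + 1, used | {j}, total + d, pairs + [(i, j)])
        search(i + 1, used, total + gate, pairs)  # radar point i stays unassigned

    search(0, frozenset(), 0.0, [])
    return best["pairs"]


# Two radar points compete for one camera box; GNN gives it to the closer one.
print(gnn_associate([(0, 0), (10, 0)], [(4, 0)], 20))
```

For larger scenes, the same minimum-cost assignment can be solved in polynomial time with the Hungarian algorithm (for example `scipy.optimize.linear_sum_assignment`) after padding the cost matrix with the non-assignment cost.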

3.2.4. Weighted Output of Observations
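Once a radar observation and a camera observation form an association pair, their estimates of the same quantity (for example, distance to the obstacle) can be combined into one weighted output. A common choice, shown here as an assumption rather than the paper's stated weighting, is inverse-variance weighting: each sensor's weight is proportional to the reciprocal of its measurement variance. The variances in the example are hypothetical.

```python
def inverse_variance_fuse(z_radar, var_radar, z_camera, var_camera):
    """Fuse two independent measurements of the same quantity.

    Weights are proportional to 1/variance, so the more precise sensor dominates;
    the fused variance is never larger than either input variance.
    """
    w_r = 1.0 / var_radar
    w_c = 1.0 / var_camera
    z = (w_r * z_radar + w_c * z_camera) / (w_r + w_c)
    var = 1.0 / (w_r + w_c)
    return z, var


# Hypothetical: radar range 10.0 m (sigma 0.1 m), camera distance 11.0 m (sigma 0.5 m).
print(inverse_variance_fuse(10.0, 0.01, 11.0, 0.25))
```

Because the radar is assumed far more precise in range here, the fused value stays close to the radar reading (about 10.04 m).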

3.2.5. Target Tracking Based on Extended Kalman Filter
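An extended Kalman filter (EKF) handles the nonlinear relation between a target's Cartesian state and the radar's polar measurement by linearizing the measurement function around the predicted state. The sketch below assumes a constant-velocity state $[p_x, p_y, v_x, v_y]$, a simplified diagonal process noise $q$, and radar measurements of range and azimuth with azimuth $= \operatorname{atan2}(p_x, p_y)$. The paper's exact motion and noise models are not reproduced here.

```python
import math


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(r) for r in zip(*A)]


def madd(A, B, sign=1.0):
    return [[a + sign * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def ekf_predict(x, P, dt, q):
    """Constant-velocity prediction; state x = [px, py, vx, vy] (m, m/s)."""
    F = [[1, 0, dt, 0], [0, 1, 0, dt], [0, 0, 1, 0], [0, 0, 0, 1]]
    Q = [[q if i == j else 0.0 for j in range(4)] for i in range(4)]  # simplified noise
    x = [x[0] + dt * x[2], x[1] + dt * x[3], x[2], x[3]]
    P = madd(matmul(matmul(F, P), transpose(F)), Q)
    return x, P


def ekf_update(x, P, z, R):
    """Update with radar measurement z = [range, azimuth], azimuth = atan2(px, py)."""
    px, py = x[0], x[1]
    r2 = px * px + py * py
    r = math.sqrt(r2)
    # Jacobian of h(x) = [sqrt(px^2 + py^2), atan2(px, py)] at the predicted state
    H = [[px / r, py / r, 0.0, 0.0], [py / r2, -px / r2, 0.0, 0.0]]
    innov = [z[0] - r, z[1] - math.atan2(px, py)]
    innov[1] = (innov[1] + math.pi) % (2 * math.pi) - math.pi  # wrap to [-pi, pi)
    S = madd(matmul(matmul(H, P), transpose(H)), R)  # 2x2 innovation covariance
    det = S[0][0] * S[1][1] - S[0][1] * S[1][0]
    S_inv = [[S[1][1] / det, -S[0][1] / det], [-S[1][0] / det, S[0][0] / det]]
    K = matmul(matmul(P, transpose(H)), S_inv)  # 4x2 Kalman gain
    x = [xi + sum(K[i][k] * innov[k] for k in range(2)) for i, xi in enumerate(x)]
    I = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    P = matmul(madd(I, matmul(K, H), sign=-1.0), P)
    return x, P


# Target 10 m ahead approaching at 1 m/s; one predict/update cycle at 10 Hz.
P0 = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
x, P = ekf_predict([0.0, 10.0, 0.0, -1.0], P0, 0.1, 0.01)
x, P = ekf_update(x, P, [9.85, 0.0], [[0.01, 0.0], [0.0, 0.0004]])
print([round(v, 3) for v in x])
```

The updated forward position lands between the prediction (9.9 m) and the measurement (9.85 m), weighted by their covariances.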

4. Results and Discussion

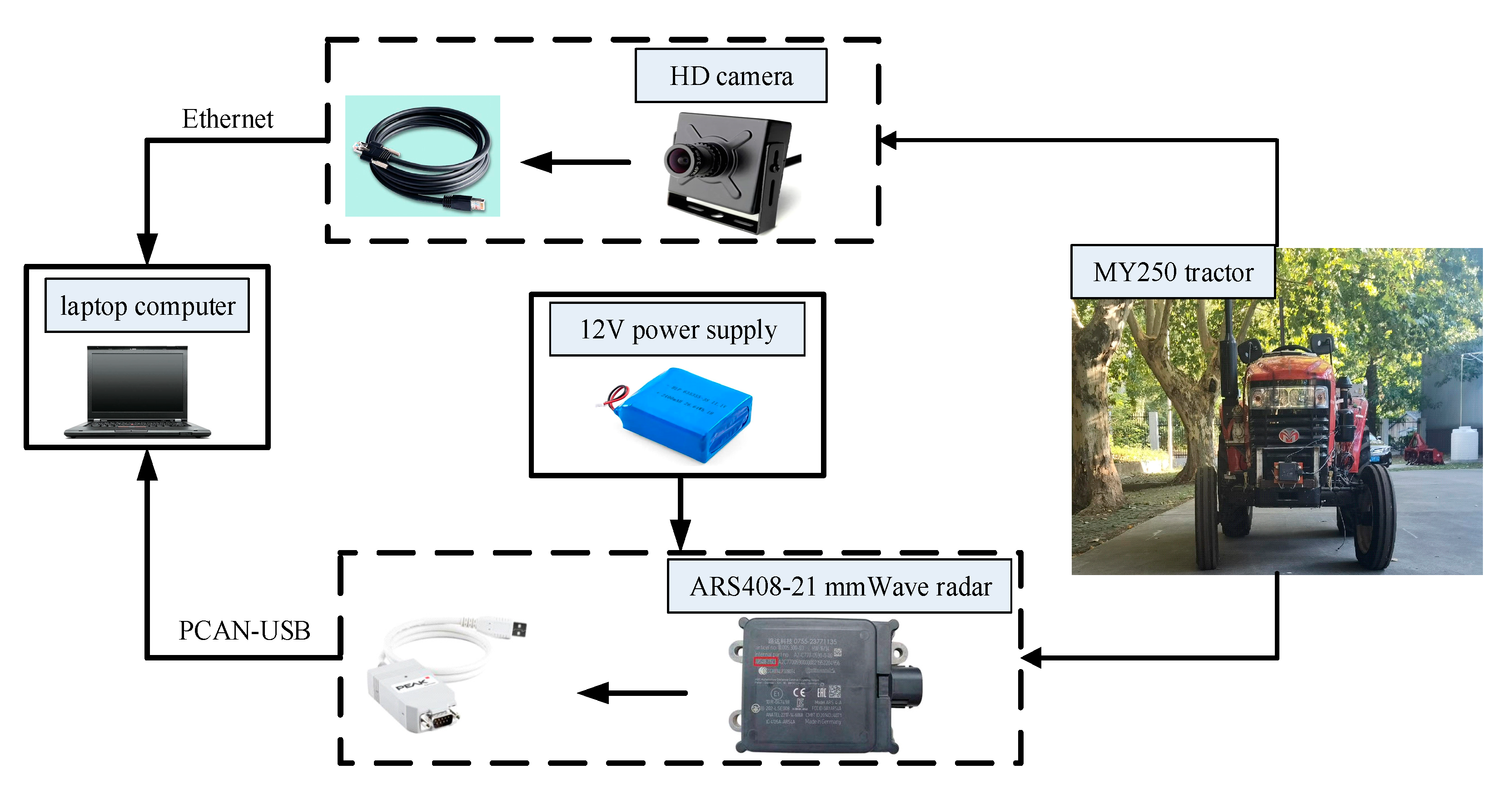

4.1. Introduction to the Experimental Platform

4.2. Sensor Calibration

4.2.1. Intrinsic Parameters of the Camera

4.2.2. Extrinsic Parameters of the mmWave Radar and Camera

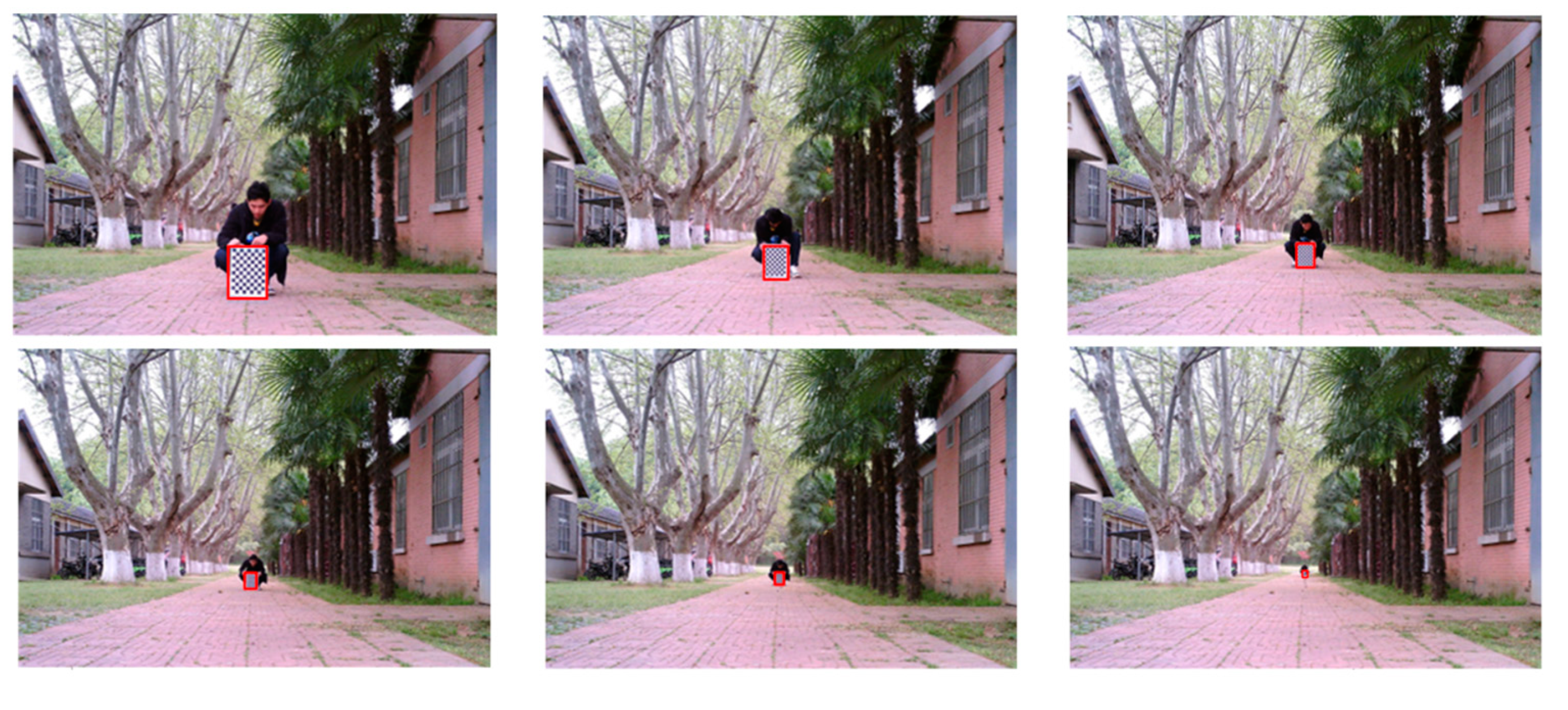

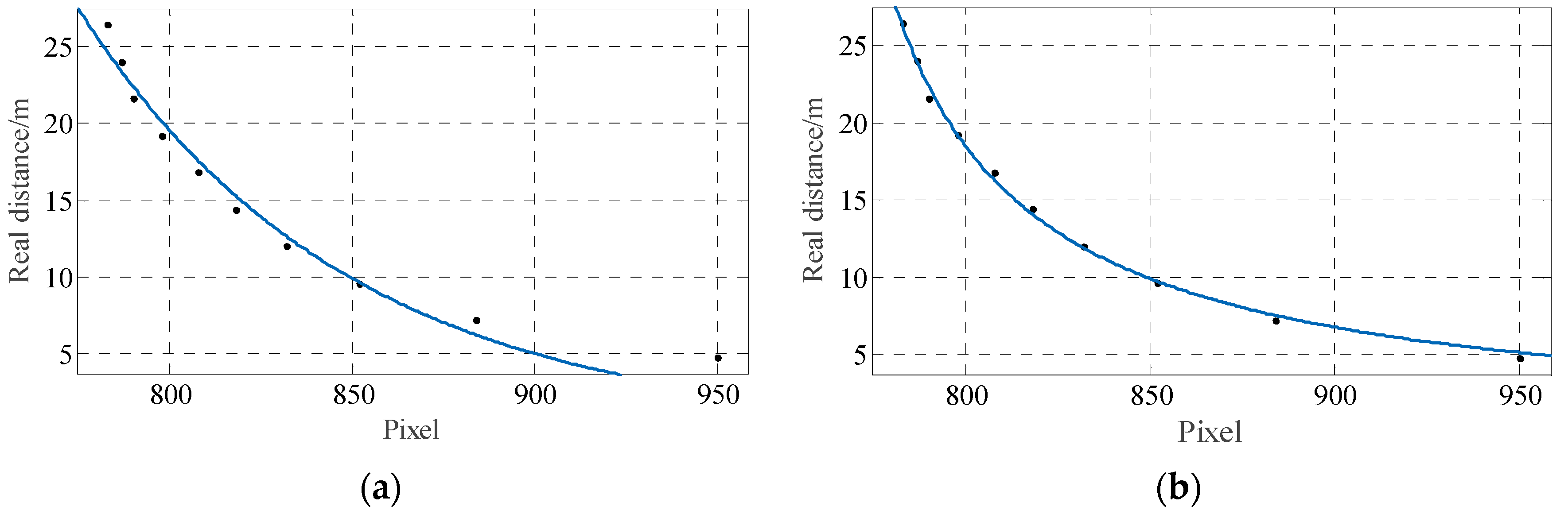

4.2.3. Solving the Relationship Function between Pixel Value and Vertical Distance

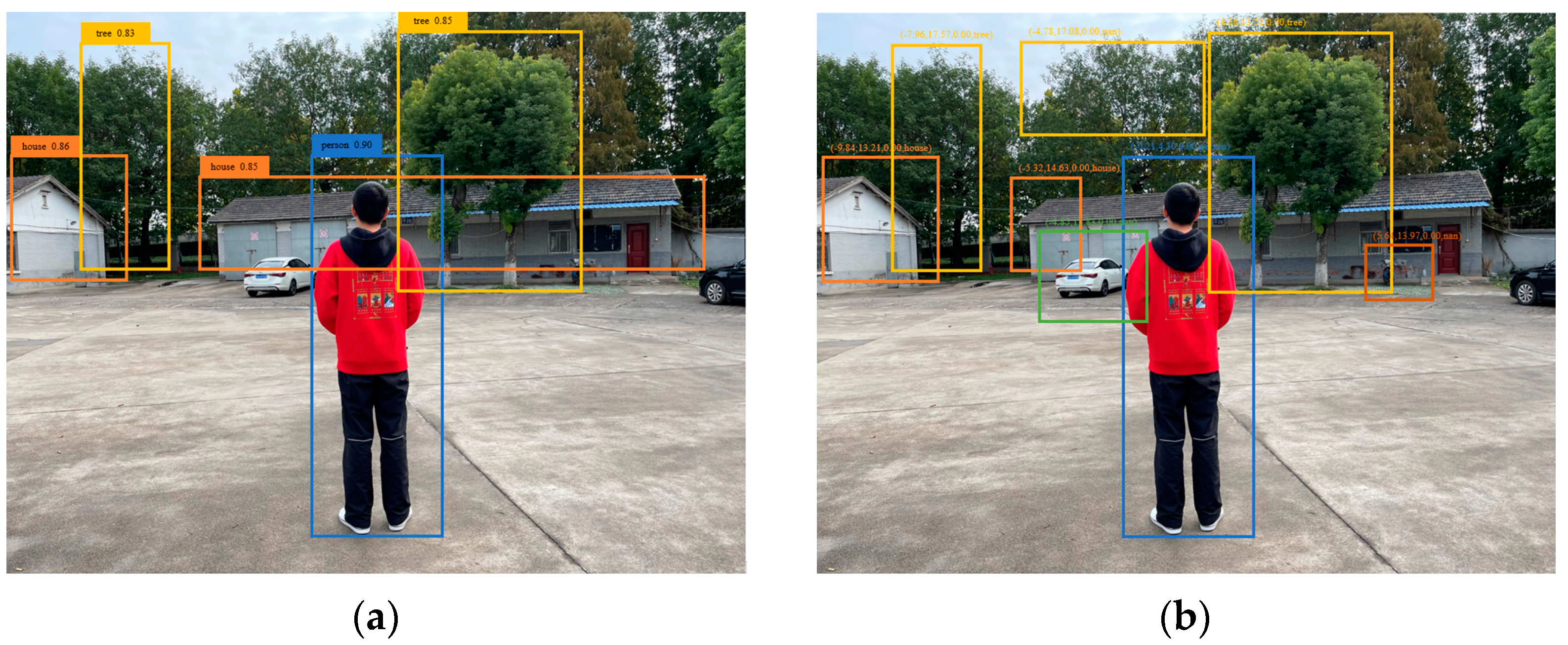

4.3. mmWave Radar and Camera Information Fusion Test

4.4. Comparison between This Study and Other Sensor Fusion Algorithms

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Method | Advantages | Disadvantages | Degree of Difficulty | Scope of Application |
|---|---|---|---|---|
| GNN | Small and simple computation | Association errors are likely when target density is high | Easy | Low target density |
| PDA | Suitable for target tracking in clutter | Difficult to meet real-time requirements | Difficult | Target tracking in clutter |
| JPDA | Better suited to target tracking in dense environments | Computational load may suffer a combinatorial explosion | Easy | Tracking of dense maneuvering targets |
| MHT | Better suited to target tracking in dense environments | Relies heavily on prior knowledge of targets and clutter | Difficult | Tracking of dense maneuvering targets |

| Fitting Function | SSE | R-Square | RMSE |
|---|---|---|---|
| Power function | 209.4915 | 0.9589 | 3.2364 |
| Rational function | 33.9776 | 0.9933 | 1.3034 |
| Exponential function | 227.8865 | 0.9553 | 3.3755 |
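The three goodness-of-fit measures in the table can be computed directly from observed and fitted values. The sketch below takes RMSE as $\sqrt{\mathrm{SSE}/n}$; curve-fitting tools that divide by the residual degrees of freedom instead report slightly larger values. As a cross-check, each tabulated RMSE equals $\sqrt{\mathrm{SSE}/20}$ to about four decimal places, which is consistent with 20 calibration samples under that definition. This is an inference from the table, not a figure stated in the text.

```python
import math


def fit_metrics(y, y_hat):
    """Return (SSE, R-square, RMSE) for observed y and fitted y_hat.

    RMSE is taken as sqrt(SSE / n); tools that divide by (n - number of
    parameters) report larger values for the same fit.
    """
    n = len(y)
    mean = sum(y) / n
    sse = sum((a - b) ** 2 for a, b in zip(y, y_hat))
    sst = sum((a - mean) ** 2 for a in y)
    return sse, 1.0 - sse / sst, math.sqrt(sse / n)


# Cross-check against the table: sqrt(SSE / 20) versus the reported RMSE.
table = {"Power": (209.4915, 3.2364), "Rational": (33.9776, 1.3034),
         "Exponential": (227.8865, 3.3755)}
for name, (sse, rmse) in table.items():
    print(name, round(math.sqrt(sse / 20), 4), rmse)
```

By all three measures the rational function fits best: lowest SSE and RMSE and R-square closest to 1.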

| Category | Tree | Human | Tractor | Haystack | House | Wire Poles | Sheep | Other |
|---|---|---|---|---|---|---|---|---|
| Number | 175 | 154 | 203 | 62 | 166 | 94 | 57 | 42 |

| Method | Average Detection Accuracy (%) | Average Missed Detection Rate (%) |
|---|---|---|
| Camera-only detection | 62.47 | 27.51 |
| Fusion detection | 86.18 | 13.80 |

| Category | Accuracy of Camera-Only Detection (%) | Accuracy of Fusion Detection (%) |
|---|---|---|
| Human | 83.07 | 95.19 |
| Tractors | 73.09 | 96.90 |
| Sheep | 55.60 | 61.06 |
| Haystack | 51.66 | 66.32 |
| Wire poles | 60.01 | 73.11 |
| House | 42.24 | 53.78 |
| Trees | 40.81 | 48.02 |
| Other | 0.00 | 40.00 |

| Fusion Level | Accuracy (%) |
|---|---|
| Data-level fusion | 88.56 |
| Feature-level fusion | 90.81 |
| Decision-level fusion (this paper) | 95.19 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lv, P.; Wang, B.; Cheng, F.; Xue, J. Multi-Objective Association Detection of Farmland Obstacles Based on Information Fusion of Millimeter Wave Radar and Camera. Sensors 2023, 23, 230. https://doi.org/10.3390/s23010230
