An Investigation of Signal Preprocessing for Photoacoustic Tomography

Abstract

1. Introduction

2. Materials and Methods

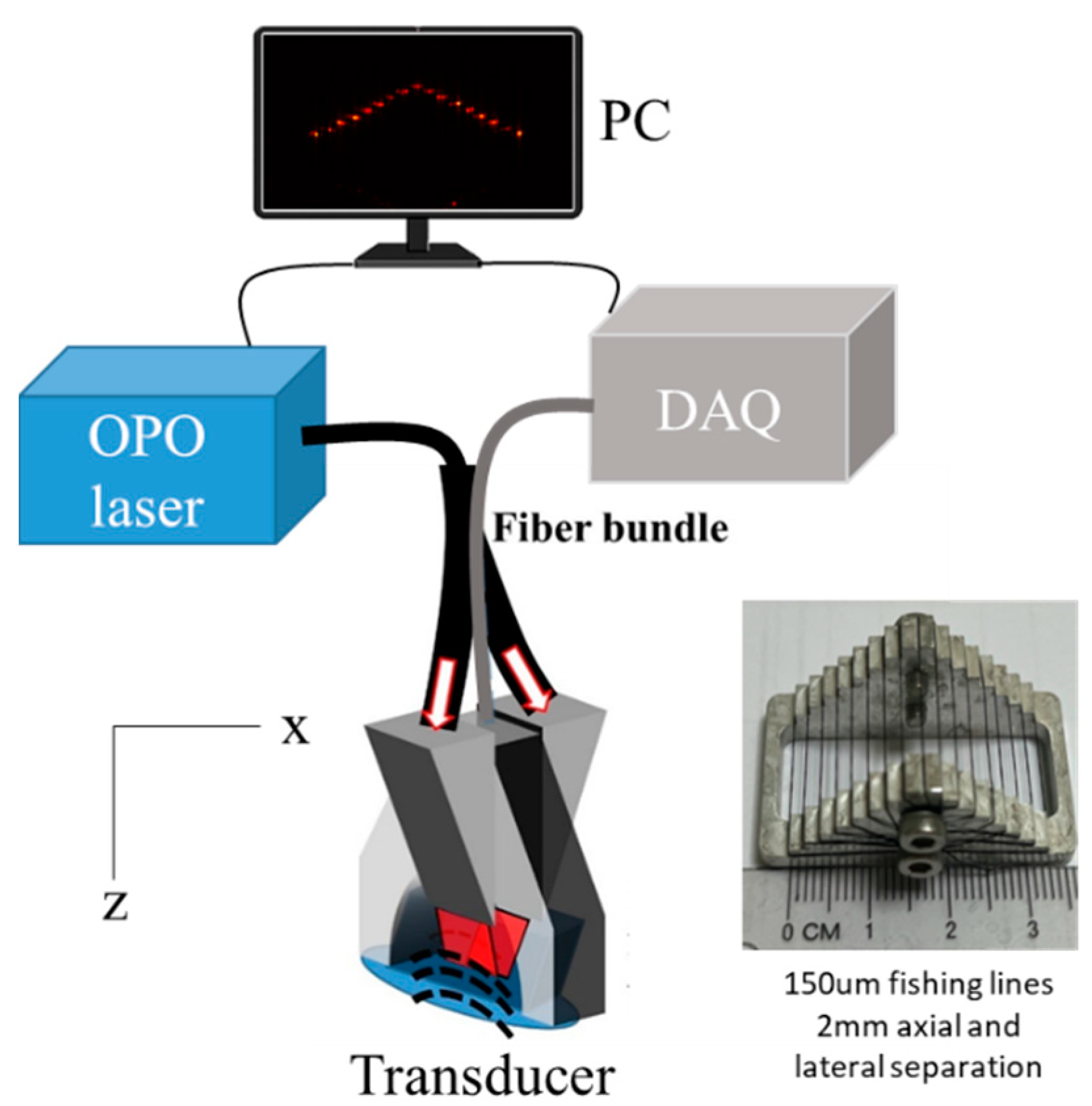

2.1. Image Acquisition

2.2. Signal Processing

2.2.1. Optimization of Preprocessing Methods’ Parameters

1. Find all local minima and maxima of the data, $x(t)$;

2. Calculate the lower and upper envelopes by cubic spline interpolation of the minima and maxima, respectively;

3. Calculate the mean of the lower and upper envelopes, $m_1$;

4. Calculate the provisional component $h_1$ as the difference between the data and $m_1$: $h_1 = x(t) - m_1$;

5. Repeat sifting (steps 1–4) using the provisional component $h_1$ as the data, yielding $h_{11}$;

6. Continue sifting $k$ times until the stopping criterion is reached, yielding $h_{1k}$. This is the first component, $c_1 = h_{1k}$;

7. Derive the residue $r_1$: $r_1 = x(t) - c_1$;

8. Repeat steps 1–8 using the residue $r_1$ as the data, yielding $c_2$;

9. Continue repeating steps 1–8 to yield all further components: $c_3, \ldots, c_n$;

10. Stop when the residue is a monotonic function, meaning no more minima and maxima exist, and thus no more components are extracted.
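The sifting procedure listed above can be sketched in Python as follows. This is a minimal illustration and not the implementation used in the study. The function names (`mean_envelope`, `sift`, `emd`), the end-point handling, the stopping criterion (a normalized squared difference between successive sifts with threshold `tol`), and the caps `max_sifts` and `max_components` are all assumptions introduced for the example. The monotonic-residue test is approximated by checking whether fewer than two maxima or two minima remain.

```python
import numpy as np
from scipy.interpolate import CubicSpline
from scipy.signal import argrelextrema


def mean_envelope(t, x):
    """Mean of the upper and lower cubic-spline envelopes of x.

    Returns None when there are too few extrema to build the envelopes,
    which is used here as a stand-in for "the data are monotonic".
    """
    maxima = argrelextrema(x, np.greater)[0]
    minima = argrelextrema(x, np.less)[0]
    if len(maxima) < 2 or len(minima) < 2:
        return None
    # Assumption: both end points are added as spline knots so that the
    # envelopes are defined over the whole record without extrapolation.
    ends = np.array([0, len(x) - 1])
    maxima = np.unique(np.concatenate((ends, maxima)))
    minima = np.unique(np.concatenate((ends, minima)))
    upper = CubicSpline(t[maxima], x[maxima])(t)
    lower = CubicSpline(t[minima], x[minima])(t)
    return 0.5 * (upper + lower)


def sift(t, x, max_sifts=50, tol=0.05):
    """Extract one component by repeatedly subtracting the mean envelope."""
    h = x.copy()
    for _ in range(max_sifts):
        m = mean_envelope(t, h)
        if m is None:
            break
        h_new = h - m
        # Assumed stopping criterion: relative change between successive sifts.
        sd = np.sum((h - h_new) ** 2) / (np.sum(h ** 2) + 1e-12)
        h = h_new
        if sd < tol:
            break
    return h


def emd(t, x, max_components=10):
    """Decompose x into a list of components plus a final residue."""
    components = []
    residue = x.astype(float)
    for _ in range(max_components):
        if mean_envelope(t, residue) is None:
            break  # residue has too few extrema to continue
        c = sift(t, residue)
        components.append(c)
        residue = residue - c
    return components, residue


if __name__ == "__main__":
    # Synthetic two-tone test signal (illustrative only, not study data).
    t = np.linspace(0.0, 1.0, 1000)
    x = np.sin(2 * np.pi * 40 * t) + 0.5 * np.sin(2 * np.pi * 5 * t)
    components, residue = emd(t, x)
    print(len(components), "components extracted")
```

By construction, the extracted components and the final residue sum back to the input signal, which gives a simple sanity check for any variant of the stopping criterion or end-point treatment.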

2.2.2. CNR Measurement

2.2.3. Resolution Measurement

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Optimization of Wavelet Denoising

Appendix A.2. Selection of the Wavelet Family and Order

Family and Order Comparison

| Family | Order | CNR | CNR Rank | FWHMx (μm) | FWHMx Rank | FWHMz (μm) | FWHMz Rank | Average Rank | Overall Rank |
|---|---|---|---|---|---|---|---|---|---|
| coif | 1 | 34.27 | 13 | 552.1 | 1 | 779.8 | 21 | 11.7 | 13 |
| coif | 2 | 34.74 | 10 | 553.07 | 10 | 779.54 | 11 | 10.3 | 11 |
| coif | 3 | 36.28 | 2 | 553.98 | 13 | 779.43 | 6 | 7.0 | 2 |
| coif | 4 | 36.03 | 3 | 554.46 | 15 | 779.46 | 7 | 8.3 | 6 |
| coif | 5 | 36.84 | 1 | 555.25 | 19 | 779.38 | 4 | 8.0 | 4 |
| fk | 4 | 33.61 | 20 | 554.25 | 14 | 779.76 | 20 | 18.0 | 21 |
| fk | 6 | 33.18 | 21 | 555.69 | 20 | 779.46 | 7 | 16.0 | 20 |
| fk | 8 | 34.83 | 8 | 553.61 | 11 | 779.39 | 5 | 8.0 | 4 |
| db | 1 | 33.65 | 17 | 552.56 | 6 | 779.68 | 17 | 13.3 | 15 |
| db | 2 | 33.73 | 15 | 554.86 | 16 | 779.56 | 12 | 14.3 | 18 |
| db | 3 | 34.36 | 11 | 552.51 | 4 | 779.67 | 15 | 10.0 | 9 |
| db | 4 | 35.42 | 5 | 552.38 | 2 | 779.35 | 3 | 3.3 | 1 |
| db | 5 | 35.15 | 7 | 553.63 | 12 | 779.2 | 2 | 7.0 | 2 |
| db | 6 | 35.19 | 6 | 555.06 | 18 | 779.16 | 1 | 8.3 | 6 |
| sym | 1 | 33.65 | 17 | 552.56 | 6 | 779.68 | 17 | 13.3 | 15 |
| sym | 2 | 33.73 | 15 | 554.86 | 16 | 779.56 | 12 | 14.3 | 18 |
| sym | 3 | 34.36 | 11 | 552.51 | 4 | 779.67 | 15 | 10.0 | 9 |
| sym | 4 | 34.24 | 14 | 552.47 | 3 | 779.59 | 14 | 10.3 | 11 |
| sym | 5 | 35.79 | 4 | 556.38 | 21 | 779.49 | 10 | 11.7 | 13 |
| sym | 6 | 34.78 | 9 | 552.77 | 9 | 779.46 | 7 | 8.3 | 6 |
| haar | - | 33.65 | 17 | 552.56 | 6 | 779.68 | 17 | 13.3 | 15 |

db4 DenoisingMethod and ThresholdRule Comparison

| DenoisingMethod | ThresholdRule | CNR | CNR Rank | FWHMx (μm) | FWHMx Rank | FWHMz (μm) | FWHMz Rank | Average % behind Best | Overall Rank |
|---|---|---|---|---|---|---|---|---|---|
| UniversalThreshold | Soft | 35.42 | 11 | 552.38 | 12 | 779.35 | 1 | 1.14% | 2 |
| UniversalThreshold | Hard | 35.48 | 10 | 550.52 | 1 | 780.25 | 5 | 1.15% | 3 |
| Bayes | Median | 36.19 | 5 | 550.68 | 3 | 780.34 | 8 | 1.85% | 7 |
| Bayes | Mean | 36.22 | 4 | 550.84 | 5 | 780.33 | 7 | 1.89% | 9 |
| Bayes | Soft | 36.15 | 6 | 551.67 | 9 | 779.86 | 3 | 1.85% | 8 |
| Bayes | Hard | 36.28 | 3 | 550.72 | 4 | 780.43 | 12 | 1.94% | 10 |
| Minimax | Soft | 35.9 | 8 | 551.7 | 10 | 779.65 | 2 | 1.60% | 5 |
| Minimax | Hard | 36.03 | 7 | 550.66 | 2 | 780.41 | 11 | 1.69% | 6 |
| FDR | Hard | 35.51 | 9 | 550.97 | 6 | 780.37 | 9 | 1.21% | 4 |
| BlockJS | James-Stein | 34.34 | 12 | 552.09 | 11 | 780.27 | 6 | 0.13% | 1 |
| SURE | Soft | 36.48 | 1 | 551.22 | 8 | 780.17 | 4 | 2.15% | 12 |
| SURE | Hard | 36.29 | 2 | 551.12 | 7 | 780.4 | 10 | 1.97% | 11 |

db4 NoiseEstimation Comparison

| DenoisingMethod/ThresholdRule | NoiseEstimation | CNR | CNR Rank | FWHMx (μm) | FWHMx Rank | FWHMz (μm) | FWHMz Rank | Average Rank | Overall Rank |
|---|---|---|---|---|---|---|---|---|---|
| UniversalThreshold/hard | LevelDependent | 35.48 | 5 | 550.52 | 1 | 780.25 | 2 | 2.67 | 1 |
| UniversalThreshold/hard | LevelIndependent | 34.09 | 4 | 552.22 | 10 | 780.29 | 3 | 5.67 | 6 |
| Bayes/median | LevelDependent | 36.15 | 9 | 551.67 | 5 | 779.86 | 1 | 5.00 | 4 |
| Bayes/median | LevelIndependent | 34.25 | 5 | 552.12 | 8 | 780.31 | 4 | 5.67 | 6 |
| Minimax/hard | LevelDependent | 36.03 | 8 | 550.66 | 2 | 780.41 | 10 | 6.67 | 9 |
| Minimax/hard | LevelIndependent | 33.99 | 2 | 552.11 | 7 | 780.32 | 5 | 4.67 | 2 |
| FDR/hard | LevelDependent | 35.51 | 7 | 550.97 | 3 | 780.37 | 8 | 6.00 | 8 |
| FDR/hard | LevelIndependent | 33.98 | 1 | 552.12 | 8 | 780.32 | 5 | 4.67 | 2 |
| SURE/hard | LevelDependent | 36.29 | 10 | 551.12 | 4 | 780.4 | 9 | 7.67 | 10 |
| SURE/hard | LevelIndependent | 34 | 3 | 552.07 | 6 | 780.33 | 7 | 5.33 | 5 |

Appendix B

Selection of the Optimal Row from the Multi-Point Acquisition

| Row | CNR µ | CNR σ | FWHMx µ (μm) | FWHMx σ (μm) | FWHMz µ (μm) | FWHMz σ (μm) |
|---|---|---|---|---|---|---|
| 1 | 7.71 | N/A | 630.71 | N/A | 782.56 | N/A |
| 2 | 9.6 | 0.59 | 538.2 | 1.98 | 786.06 | 7.58 |
| 3 | 10.89 | 1.42 | 541.88 | 4.47 | 777.97 | 0.36 |
| 4 | 11.78 | 2.5 | 545.96 | 7.71 | 787.91 | 14.5 |
| 5 | 13.25 | 1.61 | 537.6 | 0.53 | 783.23 | 5.37 |
| 6 | 12.28 | 2.11 | 583.72 | 67.04 | 778.73 | 2.39 |
| 7 | 10.52 | 2.62 | 544.54 | 7.74 | 774.52 | 4.41 |
| 8 | 8.33 | 1.84 | 542.12 | 4.74 | 775.93 | 1.43 |
| 9 | 5.42 | 1.63 | 543.37 | 4.89 | 790.78 | 16.62 |


| Preprocessing | Parameter | Value/Type |
|---|---|---|
| Bandpass filter | Filter type | FIR |
| Bandpass filter | Bandwidth | 90% of the center frequency |
| Wavelet denoising | Wavelet family | Daubechies |
| Wavelet denoising | Order | 4th |
| Wavelet denoising | Denoising method | Universal threshold |
| Wavelet denoising | Threshold rule | Hard |
| Wavelet denoising | Noise estimation | Level-dependent |
| EMD | Number of components used | 2 |
| SVD | Number of components used | 1 |
| Sensor directivity | Angular FWHM | ±13.5 degrees |

| Method | Point CNR | Point Peak SNR | Point FWHMx (μm) | Point FWHMz (μm) | Multi-point CNR | Multi-point Peak SNR | Multi-point FWHMx (μm) | Multi-point FWHMz (μm) | Finger CNR | Finger Peak SNR | Finger FWHMx (μm) | Finger FWHMz (μm) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Target | - | - | 150 | 150 | - | - | 150 | 150 | - | - | - | - |
| Unfiltered | 34 | 698 | 552.1 (368%) | 780.3 (520%) | 13.2 | 185 | 537.6 (358%) | 783.2 (522%) | 16.9 | 79 | 533.3 | 767.1 |
| Bandpass | 38.3 | 783 | 552.2 (368%) | 780.5 (520%) | 13.2 | 190 | 538.1 (359%) | 786.2 (524%) | 17.5 | 80 | 533.1 | 766.8 |
| Wavelet | 31.3 | 723 | 586.5 (391%) | 777.5 (518%) | 8 | 168 | 537.5 (358%) | 778.1 (519%) | 12.9 | 67 | 534.5 | 770.2 |
| EMD | 33.5 | 564 | 574.7 (383%) | 781.7 (521%) | 13.2 | 115 | 537.6 (358%) | 781.2 (521%) | 16.5 | 61 | 532.4 | 767.6 |
| SVD | 44.6 | 678 | 539.4 (360%) | 778.9 (519%) | 17.9 | 184 | 537.3 (358%) | 803.7 (536%) | 28.7 | 73 | 532.8 | 763.3 |
| Unfiltered + directivity | 52.4 | 779 | 540.1 (360%) | 779.5 (520%) | 17.7 | 221 | 538.1 (359%) | 813.6 (542%) | 27.7 | 113 | 532.4 | 766.1 |
| Bandpass + directivity | 46 | 917 | 539.3 (360%) | 778.7 (519%) | 15.7 | 226 | 537.2 (358%) | 834.3 (556%) | 25.7 | 106 | 532.9 | 766.6 |
| Wavelet + directivity | 44.9 | 796 | 538.8 (359%) | 780.5 (520%) | 17.5 | 199 | 537.2 (358%) | 787 (525%) | 26.4 | 99 | 532.4 | 765.3 |
| EMD + directivity | 34 | 615 | 552.1 (368%) | 780.3 (520%) | 13.2 | 136 | 537.6 (358%) | 783.2 (522%) | 16.9 | 92 | 533.3 | 767.1 |
| SVD + directivity | 38.3 | 768 | 552.2 (368%) | 780.5 (520%) | 13.2 | 218 | 538.1 (359%) | 786.2 (524%) | 17.5 | 104 | 533.1 | 766.8 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huen, I.; Zhang, R.; Bi, R.; Li, X.; Moothanchery, M.; Olivo, M. An Investigation of Signal Preprocessing for Photoacoustic Tomography. Sensors 2023, 23, 510. https://doi.org/10.3390/s23010510
