An Intelligent Platform for Software Component Mining and Retrieval

Abstract

1. Introduction

1.1. Role of Proposed Framework in the Development of Robotics and Internet of Things (IoT)-Based Systems

1.2. Research Contribution

- We propose a framework that uses ML techniques to automatically recommend source code examples.

- We evaluated our framework using a dataset of 2500 code examples related to 50 queries. The evaluation results show that the proposed framework is effective for source code components: it recommends relevant code examples to developers from existing schemas or online engines.

- We implemented a small prototype of the proposed framework and assessed it qualitatively in two experimental stages.

2. Related Work

| Tool/Approach | Strength | Weakness |
|---|---|---|
| Google Code Search and Ohloh [41,42] | Results are ranked based on textual similarity. | Uses only one feature, textual similarity. |
| Sourcerer [29] | Uses the basic notion of CodeRank, which extracts only structural information. | Focuses only on the structural information of source code. |
| PARSEWeb [4] | Uses the frequency and length of MIS (method-invocation sequences) to rank the final result. | Uses only the MIS feature during the ranking phase. |
| Exemplar [43] | Uses three ranking schemes, WOS (word occurrences schema), DCS (dataflow connection schema), and RAS (relevant API calls schema), to rank applications. | Ranks applications, not source code snippets. |
| Semantic Code Search [44] | Ranks comparable code snippets by the call sequences extracted from them. | The call sequence is the only feature used for ranking code snippets. |
| Pattern-based Approach [45] | Considers popularity to rank the working code examples. | Popularity is the only feature that contributes to the final ranking. |
| QualBoa [37] | Incorporates functional and quality attributes. | Ranks components based on the functional score. |

3. Research Methodology

3.1. Process Description

3.1.1. Similarity Measure for Textual Information

3.1.2. Popularity

3.1.3. Code Metrics

3.1.4. Measure Context Similarity

3.2. Training of a Ranking Schema

Algorithm 1: The learning process of the RankBoost algorithm for obtaining the final ranking H.

Require: Initial values of the distribution D over each code example pair in the training data.

1. Initialize the distribution over code example pairs.
2. for t = 1, …, T (T = 12) do
3. Build ranking function f_t(c) based on the ranking feature.
4. Choose the weight of f_t using Equation (8).
5. Update the distribution over code example pairs.
6. end for
7. Output the final ranking H.
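The loop in Algorithm 1 can be illustrated with a minimal sketch that follows the general RankBoost scheme of Freund et al. [25]. It is not the authors' implementation. Equation (8) is not reproduced in this text, so the weight formula below (the choice for weak rankers with values in [0, 1]) is an assumption, as are the function names `rankboost` and `score`, the use of one raw feature per round as the weak ranker, and the requirement that features are pre-scaled to [0, 1].

```python
import math


def rankboost(features, pairs, rounds=12):
    """Sketch of RankBoost over preference pairs.

    features: list of feature vectors, each value assumed scaled to [0, 1].
    pairs: list of (worse, better) index pairs into `features`.
    Returns a list of (feature index, weight) tuples, one per round.
    """
    n_features = len(features[0])
    # Start from a uniform distribution over the preference pairs.
    dist = [1.0 / len(pairs)] * len(pairs)
    model = []
    for _ in range(rounds):
        # Weak ranker: the single feature that agrees most strongly
        # (positively or negatively) with the weighted pairs.
        best_j, best_r = 0, 0.0
        for j in range(n_features):
            r = sum(d * (features[b][j] - features[w][j])
                    for d, (w, b) in zip(dist, pairs))
            if abs(r) > abs(best_r):
                best_j, best_r = j, r
        # Assumed weight rule; r is clamped to keep the logarithm finite.
        r = max(min(best_r, 1 - 1e-9), -1 + 1e-9)
        alpha = 0.5 * math.log((1 + r) / (1 - r))
        model.append((best_j, alpha))
        # Shift weight towards pairs the chosen ranker orders wrongly.
        dist = [d * math.exp(alpha * (features[w][best_j] - features[b][best_j]))
                for d, (w, b) in zip(dist, pairs)]
        z = sum(dist)
        dist = [d / z for d in dist]
    return model


def score(model, x):
    """Final ranking score: weighted sum of the selected weak rankers."""
    return sum(alpha * x[j] for j, alpha in model)
```

Candidates are then sorted by `score` in descending order. The default of 12 rounds mirrors T = 12 in Algorithm 1.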

4. Experimental Evaluations

4.1. Experimental Stage A

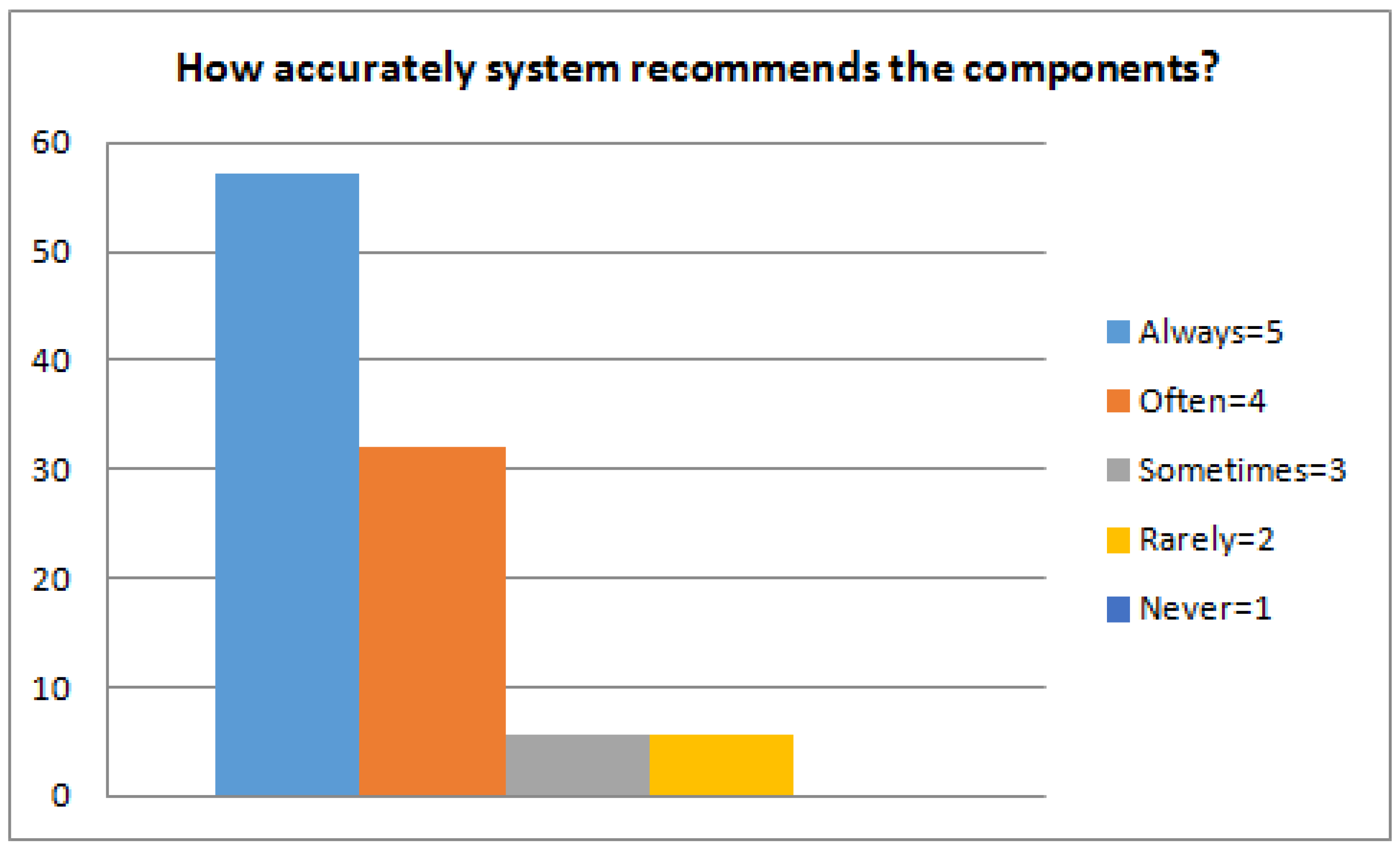

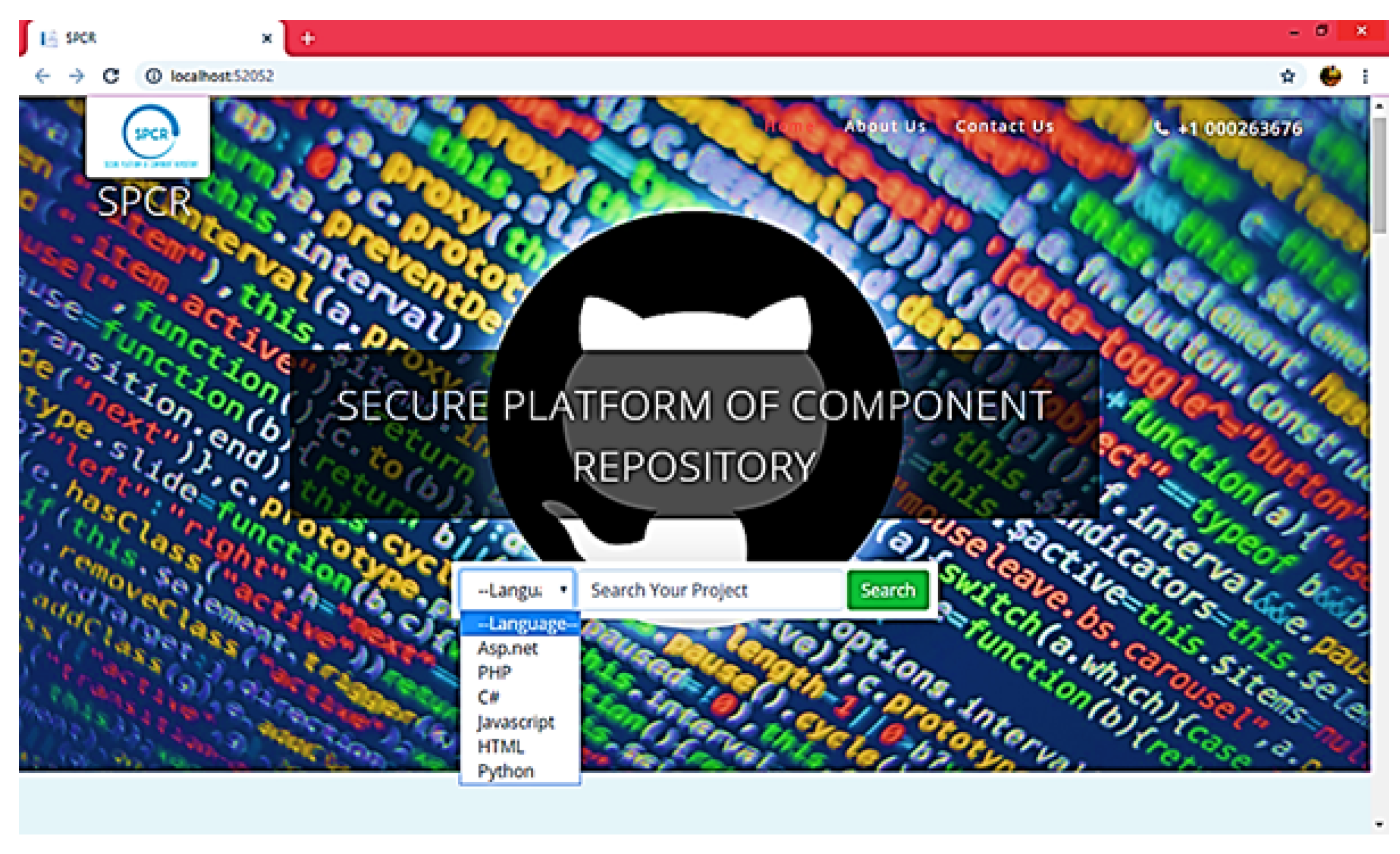

4.2. Experimental Stage B

4.3. Experimental Stage C

4.4. Automatic Evaluation Metrics

- R@k: This metric is commonly used to evaluate whether the proposed approach retrieves the correct code component within the top-k returned results. It is calculated using Equation (9): $R@k = \frac{1}{|Q|}\sum_{q \in Q} \delta(FRank_q \le k)$, where $FRank_q$ is the rank of the correct code component for a given specification (query $q$) and $Q$ is the set of all queries. The function $\delta(\cdot)$ returns 1 if the relevant code component is found in the top-k results and 0 otherwise. A higher R@k value indicates better code retrieval performance.

- MRR: Mean Reciprocal Rank (MRR) is frequently used for the code component retrieval task. It computes the mean of the reciprocal ranks of the correct code components over the query set $Q$, as in Equation (10): $MRR = \frac{1}{|Q|}\sum_{q \in Q} \frac{1}{FRank_q}$.

- NDCG: Normalized Discounted Cumulative Gain (NDCG) is frequently used to assess the value of a set of search results. It is computed by dividing the discounted cumulative gain (DCG) by the ideal discounted cumulative gain (IDCG), $NDCG_p = DCG_p / IDCG_p$, following Equations (11) and (12). Here $p$ is the rank position, $rel_i$ is the graded relevance of the result at position $i$, and $REL_p$ is the list of relevant results up to position $p$, over which IDCG is accumulated. We set $p$ equal to 10 for all experiments.
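The three metrics above can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' evaluation code. The function names are hypothetical, the input `first_ranks` (the 1-based rank of the correct component per query, or `None` when it was not retrieved) is an assumed representation, and the exponential gain `2^rel - 1` with a `log2(i + 1)` discount is one common DCG variant chosen here as an assumption, since the exact form of Equations (11) and (12) is not shown in this text.

```python
import math


def recall_at_k(first_ranks, k):
    """Fraction of queries whose correct component appears in the top k."""
    hits = sum(1 for r in first_ranks if r is not None and r <= k)
    return hits / len(first_ranks)


def mean_reciprocal_rank(first_ranks):
    """Mean of 1/rank over all queries; a missing result contributes 0."""
    total = sum(1.0 / r for r in first_ranks if r is not None)
    return total / len(first_ranks)


def ndcg_at_p(relevances, p=10):
    """NDCG over graded relevances listed in returned order (assumed gain form)."""
    def dcg(rels):
        return sum((2 ** rel - 1) / math.log2(i + 1)
                   for i, rel in enumerate(rels, start=1))

    # Ideal ordering: the same relevance grades sorted from best to worst.
    ideal = dcg(sorted(relevances, reverse=True)[:p])
    return dcg(relevances[:p]) / ideal if ideal > 0 else 0.0
```

For example, with `first_ranks = [1, 3, None, 2]`, two of the four queries have their correct component in the top 2, so `recall_at_k(first_ranks, 2)` is 0.5.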

4.5. Comparison Baselines

- Sourcerer [29]: An open-source search engine that implements the fundamental idea of CodeRank. Instead of using conventional keyword-based search, this engine extracts structural information from source code to perform the search.

- PARSEWeb [4]: The PARSEWeb tool scans the local source code repository to extract different method-invocation sequences (MIS) and groups comparable MISs using a sequence post-processor. The retrieved MISs can be used to answer the supplied query. Results are ranked based on the frequency and length of MIS.

- Exemplar [43]: A search engine that finds relevant software projects for a query. Exemplar employs three ranking schemes, WOS, DCS, and RAS, to sort the list of retrieved applications.

- Semantic Code Search [44]: This approach retrieves similar code snippets and ranks them based on the call sequences extracted from the snippets.

- Pattern-based Approach [45]: This approach uses three features (similarity, popularity, and line length) to identify working code examples.

- QualBoa [37]: A recommendation system that retrieves source code components by considering both the functional and non-functional (quality) requirements of the source code snippet. Source code snippets are ranked based on reusability score and functional matching.

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Gharehyazie, M.; Ray, B.; Filkov, V. Some from here, some from there: Cross-project code reuse in github. In Proceedings of the 2017 IEEE/ACM 14th International Conference on Mining Software Repositories (MSR), Buenos Aires, Argentina, 20–21 May 2017; pp. 291–301.

2. Haefliger, S.; Von Krogh, G.; Spaeth, S. Code reuse in open source software. Manag. Sci. 2008, 54, 180–193.

3. Ponzanelli, L.; Bavota, G.; Di Penta, M.; Oliveto, R.; Lanza, M. Mining StackOverflow to turn the IDE into a self-confident programming prompter. In Proceedings of the 11th Working Conference on Mining Software Repositories, ACM, Hyderabad, India, 31 May–1 June 2014; pp. 102–111.

4. Thummalapenta, S.; Xie, T. Parseweb: A programmer assistant for reusing open source code on the web. In Proceedings of the Twenty-Second IEEE/ACM International Conference on Automated Software Engineering, Atlanta, GA, USA, 5–9 November 2007; pp. 204–213.

5. Pastebin. BWorld Robot Control Software. 2008. Available online: http://pastebin.com/ (accessed on 22 September 2022).

6. Discover Gists. Available online: https://gist.github.com/ (accessed on 22 September 2022).

7. codeshare. 2009. Available online: https://codeshare.io/ (accessed on 22 September 2022).

8. Reiss, S.P. Semantics-based code search. In Proceedings of the 31st International Conference on Software Engineering, IEEE Computer Society, Vancouver, BC, Canada, 16–24 May 2009; pp. 243–253.

9. Mockus, A. Large-scale code reuse in open source software. In Proceedings of the First International Workshop on Emerging Trends in FLOSS Research and Development (FLOSS’07: ICSE Workshops 2007), Minneapolis, MN, USA, 20–26 May 2007; p. 7.

10. Inoue, K.; Sasaki, Y.; Xia, P.; Manabe, Y. Where does this code come from and where does it go?–Integrated code history tracker for open source systems. In Proceedings of the 34th International Conference on Software Engineering, Zurich, Switzerland, 2–9 June 2012; pp. 331–341.

11. Sadowski, C.; Stolee, K.T.; Elbaum, S. How developers search for code: A case study. In Proceedings of the 2015 10th Joint Meeting on Foundations of Software Engineering, Bergamo, Italy, 30 August–4 September 2015; pp. 191–201.

12. Rosen, C.; Shihab, E. What are mobile developers asking about? a large scale study using stack overflow. Empir. Softw. Eng. 2016, 21, 1192–1223.

13. Gui, J.; Mcilroy, S.; Nagappan, M.; Halfond, W.G. Truth in advertising: The hidden cost of mobile ads for software developers. In Proceedings of the 37th International Conference on Software Engineering, Florence, Italy, 16–24 May 2015; Volume 1, pp. 100–110.

14. Linares-Vásquez, M.; Bavota, G.; Bernal-Cárdenas, C.; Di Penta, M.; Oliveto, R.; Poshyvanyk, D. API change and fault proneness: A threat to the success of Android apps. In Proceedings of the 2013 9th Joint Meeting on Foundations of Software Engineering, Saint Petersburg, Russia, 18–26 August 2013; pp. 477–487.

15. Sarro, F.; Al-Subaihin, A.A.; Harman, M.; Jia, Y.; Martin, W.; Zhang, Y. Feature lifecycles as they spread, migrate, remain, and die in app stores. In Proceedings of the 2015 IEEE 23rd International Requirements Engineering Conference (RE), Ottawa, ON, Canada, 24–28 August 2015; pp. 76–85.

16. Lim, W.C. Effects of reuse on quality, productivity, and economics. IEEE Softw. 1994, 11, 23–30.

17. Abdalkareem, R.; Shihab, E.; Rilling, J. On code reuse from StackOverflow: An exploratory study on Android apps. Inf. Softw. Technol. 2017, 88, 148–158.

18. Nasehi, S.M.; Sillito, J.; Maurer, F.; Burns, C. What makes a good code example?: A study of programming Q&A in StackOverflow. In Proceedings of the 2012 28th IEEE International Conference on Software Maintenance (ICSM), Trento, Italy, 23–28 September 2012; pp. 25–34.

19. Kim, D.; Nam, S.; Hong, J.E. A dynamic control technique to enhance the flexibility of software artifact reuse in large-scale repository. J. Supercomput. 2019, 75, 2027–2057.

20. Wang, S.; Mao, X.; Yu, Y. An initial step towards organ transplantation based on GitHub repository. IEEE Access 2018, 6, 59268–59281.

21. Corley, C.S.; Damevski, K.; Kraft, N.A. Changeset-based topic modeling of software repositories. IEEE Trans. Softw. Eng. 2018, 46, 1068–1080.

22. Chen, Z.; Xiao, L. Agent Component Reuse Based on Semantics Concept Similarity. In Proceedings of the 2019 2nd International Conference on Computers in Management and Business, Cambridge, UK, 24–27 March 2019; pp. 19–23.

23. Ragkhitwetsagul, C. Measuring code similarity in large-scaled code Corpora. In Proceedings of the 2016 IEEE International Conference on Software Maintenance and Evolution (ICSME), Raleigh, NC, USA, 2–7 October 2016; pp. 626–630.

24. Diamantopoulos, T.; Karagiannopoulos, G.; Symeonidis, A. Codecatch: Extracting source code snippets from online sources. In Proceedings of the 2018 IEEE/ACM 6th International Workshop on Realizing Artificial Intelligence Synergies in Software Engineering (RAISE), Gothenburg, Sweden, 28–29 May 2018; pp. 21–27.

25. Freund, Y.; Iyer, R.; Schapire, R.E.; Singer, Y. An efficient boosting algorithm for combining preferences. J. Mach. Learn. Res. 2003, 4, 933–969.

26. Higgins, C.; Robles, J.; Cooper, A.; Williams, S. Colza: Knowledge-Based, Component-Based Models. Softw. Eng. J. 2019, 6.

27. Patel, S.; Kaur, J. A study of component based software system metrics. In Proceedings of the 2016 International Conference on Computing, Communication and Automation (ICCCA), Greater Noida, India, 29–30 April 2016; pp. 824–828.

28. Jifeng, H.; Li, X.; Liu, Z. Component-based software engineering. In Component-Based Software Engineering, Proceedings of the International Colloquium on Theoretical Aspects of Computing, Västeras, Sweden, 29 June–1 July 2006; Springer: Berlin/Heidelberg, Germany, 2005; pp. 70–95.

29. Linstead, E.; Bajracharya, S.; Ngo, T.; Rigor, P.; Lopes, C.; Baldi, P. Sourcerer: Mining and searching internet-scale software repositories. Data Min. Knowl. Discov. 2009, 18, 300–336.

30. Hoffmann, R.; Fogarty, J.; Weld, D.S. Assieme: Finding and leveraging implicit references in a web search interface for programmers. In Proceedings of the 20th annual ACM symposium on User interface software and technology, Newport, RI, USA, 7–10 October 2007; pp. 13–22.

31. Padhy, N.; Panigrahi, R.; Satapathy, S.C. Identifying the Reusable Components from Component-Based System: Proposed Metrics and Model. In Information Systems Design and Intelligent Applications; Springer: Berlin/Heidelberg, Germany, 2019; pp. 89–99.

32. Bajracharya, S.; Ossher, J.; Lopes, C. Sourcerer: An infrastructure for large-scale collection and analysis of open-source code. Sci. Comput. Program. 2014, 79, 241–259.

33. German, D.M.; Di Penta, M.; Gueheneuc, Y.G.; Antoniol, G. Code siblings: Technical and legal implications of copying code between applications. In Proceedings of the 2009 6th IEEE International Working Conference on Mining Software Repositories, Vancouver, BC, Canada, 16–17 May 2009; pp. 81–90.

34. Davies, J.; German, D.M.; Godfrey, M.W.; Hindle, A. Software bertillonage: Finding the provenance of an entity. In Proceedings of the 8th Working Conference on Mining Software Repositories, Honolulu, HI, USA, 21–22 May 2011; pp. 183–192.

35. Kawamitsu, N.; Ishio, T.; Kanda, T.; Kula, R.G.; De Roover, C.; Inoue, K. Identifying source code reuse across repositories using lcs-based source code similarity. In Proceedings of the 2014 IEEE 14th International Working Conference on Source Code Analysis and Manipulation, Victoria, BC, Canada, 28–29 September 2014; pp. 305–314.

36. Huang, S.; Lu, Y.Q.; Xiao, Y.; Wang, W. Mining application repository to recommend XML configuration snippets. In Proceedings of the 2012 34th International Conference on Software Engineering (ICSE), Zurich, Switzerland, 2–9 June 2012; pp. 1451–1452.

37. Diamantopoulos, T.; Thomopoulos, K.; Symeonidis, A. QualBoa: Reusability-aware recommendations of source code components. In Proceedings of the 2016 IEEE/ACM 13th Working Conference on Mining Software Repositories (MSR), Austin, TX, USA, 14–15 May 2016; pp. 488–491.

38. Zhou, J.; Zhang, H. Learning to rank duplicate bug reports. In Proceedings of the 21st ACM International Conference on Information and Knowledge Management, Maui, HI, USA, 29 October–2 November 2012; pp. 852–861.

39. Xuan, J.; Monperrus, M. Learning to combine multiple ranking metrics for fault localization. In Proceedings of the 2014 IEEE International Conference on Software Maintenance and Evolution, Victoria, BC, Canada, 29 September–3 October 2014; pp. 191–200.

40. Binkley, D.; Lawrie, D. Learning to rank improves IR in SE. In Proceedings of the 2014 IEEE International Conference on Software Maintenance and Evolution, Victoria, BC, Canada, 29 September–3 October 2014; pp. 441–445.

41. Cox, R. Regular Expression Matching with a Trigram Index or How Google Code Search Worked. 2012. Available online: https://swtch.com/~rsc/regexp/regexp4.html (accessed on 29 September 2022).

42. Bruntink, M. An initial quality analysis of the ohloh software evolution data. Electron. Commun. EASST 2014, 65.

43. McMillan, C.; Grechanik, M.; Poshyvanyk, D.; Fu, C.; Xie, Q. Exemplar: A source code search engine for finding highly relevant applications. IEEE Trans. Softw. Eng. 2011, 38, 1069–1087.

44. Mishne, A.; Shoham, S.; Yahav, E. Typestate-based semantic code search over partial programs. In Proceedings of the ACM International Conference on Object Oriented Programming Systems Languages and Applications, Tucson, AZ, USA, 19–26 October 2012; pp. 997–1016.

45. Keivanloo, I.; Rilling, J.; Zou, Y. Spotting working code examples. In Proceedings of the 36th International Conference on Software Engineering, Hyderabad, India, 31 May–7 June 2014; pp. 664–675.

46. Liu, T.Y. Learning to Rank for Information Retrieval; Springer: Berlin/Heidelberg, Germany, 2011.

47. Kim, J.; Lee, S.; Hwang, S.w.; Kim, S. Towards an intelligent code search engine. Proc. AAAI 2010, 24, 1358–1363.

48. McMillan, C.; Poshyvanyk, D.; Grechanik, M.; Xie, Q.; Fu, C. Portfolio: Searching for relevant functions and their usages in millions of lines of code. ACM Trans. Softw. Eng. Methodol. (TOSEM) 2013, 22, 1–30.

49. Salton, G.; Wong, A.; Yang, C.S. A vector space model for automatic indexing. Commun. ACM 1975, 18, 613–620.

50. Manning, C.D.; Raghavan, P.; Schütze, H. Scoring, term weighting and the vector space model. Introd. Inf. Retr. 2008, 100, 2–4.

51. Buse, R.P.; Weimer, W.R. Learning a metric for code readability. IEEE Trans. Softw. Eng. 2009, 36, 546–558.

52. Ye, X.; Bunescu, R.; Liu, C. On the naturalness of software. In Proceedings of the IEEE International Conference on Software Engineering, Essen, Germany, 3–7 September 2012.

53. Wang, J.; Dang, Y.; Zhang, H.; Chen, K.; Xie, T.; Zhang, D. Mining succinct and high-coverage API usage patterns from source code. In Proceedings of the 2013 10th Working Conference on Mining Software Repositories (MSR), San Francisco, CA, USA, 18–19 May 2013; pp. 319–328.

54. Grahne, G.; Zhu, J. Efficiently using prefix-trees in mining frequent itemsets. FIMI 2003, 90, 65.

55. Brin, S.; Page, L. The anatomy of a large-scale hypertextual web search engine. Comput. Netw. ISDN Syst. 1998, 30, 107–117.

56. Zhong, H.; Xie, T.; Zhang, L.; Pei, J.; Mei, H. MAPO: Mining and recommending API usage patterns. In Proceedings of the European Conference on Object-Oriented Programming; Springer: Berlin/Heidelberg, Germany, 2009; pp. 318–343.

57. Holmes, R.; Walker, R.J.; Murphy, G.C. Strathcona example recommendation tool. In Proceedings of the 10th European Software Engineering Conference Held Jointly with 13th ACM SIGSOFT International Symposium on Foundations of Software Engineering, Lisbon, Portugal, 5–9 September 2005; pp. 237–240.

58. Jaccard, P. Étude comparative de la distribution florale dans une portion des Alpes et des Jura. Bull. Soc. Vaudoise Sci. Nat. 1901, 37, 547–579.

59. Niu, H.; Keivanloo, I.; Zou, Y. Learning to rank code examples for code search engines. Empir. Softw. Eng. 2017, 22, 259–291.

60. Gu, X.; Zhang, H.; Kim, S. Deep code search. In Proceedings of the 2018 IEEE/ACM 40th International Conference on Software Engineering (ICSE), Gothenburg, Sweden, 27 May–3 June 2018; pp. 933–944.

61. Haldar, R.; Wu, L.; Xiong, J.; Hockenmaier, J. A multi-perspective architecture for semantic code search. arXiv 2020, arXiv:2005.06980.

62. Shuai, J.; Xu, L.; Liu, C.; Yan, M.; Xia, X.; Lei, Y. Improving code search with co-attentive representation learning. In Proceedings of the 28th International Conference on Program Comprehension, Seoul, Republic of Korea, 13–15 July 2020; pp. 196–207.

63. Husain, H.; Wu, H.H.; Gazit, T.; Allamanis, M.; Brockschmidt, M. Codesearchnet challenge: Evaluating the state of semantic code search. arXiv 2019, arXiv:1909.09436.

| Category/Class | Feature | Description | Query Dependency |
|---|---|---|---|
| Similarity | Textual similarity | Likeness between the candidate code example and the query, measured using cosine similarity. | Yes |
| Popularity | Frequency | How frequently a specific method of the code example occurs in the corpus. | No |
| Popularity | Probability | The frequency at which a method calls a code fragment. | No |
| Code Metrics | Line length | Length of the candidate code in lines. | No |
| Code Metrics | Number of identifiers | Average number of identifiers per LOC of a candidate fragment. | No |
| Code Metrics | Length of call sequence | Number of times a method of the candidate code component is called. | No |
| Code Metrics | Code comment ratio | Number of comments relative to the candidate's LOC. | No |
| Code Metrics | Page ranking | A metric of the significance of code snippets. | No |
| Code Metrics | Fan-in | Number of times a specific code snippet is called by other code fragments. | No |
| Code Metrics | Fan-out | Number of times a specific code snippet calls other unique code snippets. | No |
| Code Metrics | Cyclomatic complexity | Number of decision points in a code component (for, while, etc.). | No |
| Context | Context similarity | Jaccard similarity, computed when a query is raised, between the method's body and the code example signature. | Yes |
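The two query-dependent features in the table, cosine similarity and Jaccard similarity, can be sketched as follows. This is a minimal illustration under stated assumptions rather than the framework's actual feature extractor: the helper names are hypothetical, tokens are weighted by raw term frequency (a TF-IDF weighting as in the vector space model [49,50] would be a natural alternative), and the tokenizer simply lowercases and splits on non-alphanumeric characters.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase alphanumeric tokens; camelCase splitting is omitted."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(query, code):
    """Cosine of the angle between term-frequency vectors of query and code."""
    q, c = Counter(tokenize(query)), Counter(tokenize(code))
    dot = sum(q[t] * c[t] for t in q)
    norm = (math.sqrt(sum(v * v for v in q.values()))
            * math.sqrt(sum(v * v for v in c.values())))
    return dot / norm if norm else 0.0


def jaccard_similarity(a, b):
    """Size of the token intersection divided by the size of the token union."""
    sa, sb = set(tokenize(a)), set(tokenize(b))
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0
```

For instance, `jaccard_similarity("read text file", "read file lines")` shares two of four distinct tokens and therefore evaluates to 0.5.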

| Item | Amount |
|---|---|
| Projects | 585 |
| Java Files | 65,491 |
| Code components | 360,162 |
| Code snippets LOC | 3,866,351 |

| Task | Query 1 | Query 2 | Query 3 | Query 4 | Query 5 |
|---|---|---|---|---|---|
| Primary Input 1 | Select Language | Select Language | Select Language | Select Language | Select Language |
| Primary Input 2 | Select function to be retrieved | Select function to be retrieved | Select function to be retrieved | Select function to be retrieved | Select function to be retrieved |
| Response time (sec) | 10 | 12 | 8 | 8 | 9 |
| Download history time (sec) | 6 | 8 | 22 | 4 | 20 |
| Memory utilization (KB) | 2 | 3 | 4 | 1 | 2 |
| Reliability (max) | 90 | 95 | 92 | 93 | 90 |
| Application domain | ANY | ANY | ANY | ANY | ANY |
| Operating systems | Windows | Windows | Windows | Windows | Windows |
| Precision | 0.75 | 0.83 | 0.89 | 0.90 | 0.91 |
| Recall | 0.81 | 0.91 | 0.91 | 0.92 | 0.93 |

| Tool/Approach | MRR | R@10 | NDCG |
|---|---|---|---|
| Google Code Search and Ohloh [41,42] | 57.81 | 66.10 | 69.01 |
| Sourcerer [29] | 66.01 | 69.02 | 68.01 |
| PARSEWeb [4] | 66.01 | 69.02 | 68.01 |
| Exemplar [43] | 62.05 | 71.02 | 69.50 |
| Semantic Code Search [44] | 67.40 | 79.02 | 70.08 |
| Pattern-based Approach [45] | 52.10 | 64.65 | 71.09 |
| QualBoa [37] | 67.00 | 81.50 | 75.09 |
| Our approach | 73.90 | 84.31 | 77.30 |
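To read the comparison table at a glance, the short sketch below finds the strongest baseline for each metric and the margin by which the proposed approach exceeds it. The numbers are copied from the table; the variable names and the choice to report absolute differences in points are illustrative assumptions.

```python
# Scores copied from the comparison table: (MRR, R@10, NDCG).
results = {
    "Google Code Search and Ohloh [41,42]": (57.81, 66.10, 69.01),
    "Sourcerer [29]": (66.01, 69.02, 68.01),
    "PARSEWeb [4]": (66.01, 69.02, 68.01),
    "Exemplar [43]": (62.05, 71.02, 69.50),
    "Semantic Code Search [44]": (67.40, 79.02, 70.08),
    "Pattern-based Approach [45]": (52.10, 64.65, 71.09),
    "QualBoa [37]": (67.00, 81.50, 75.09),
    "Our approach": (73.90, 84.31, 77.30),
}

ours = results["Our approach"]
baselines = {name: s for name, s in results.items() if name != "Our approach"}

margins = {}
for idx, metric in enumerate(("MRR", "R@10", "NDCG")):
    # Strongest baseline for this metric and the absolute gap to it.
    best_name, best_scores = max(baselines.items(), key=lambda kv: kv[1][idx])
    margins[metric] = (best_name, round(ours[idx] - best_scores[idx], 2))

for metric, (name, gap) in margins.items():
    print(f"{metric}: +{gap:.2f} over {name}")
```

On these figures the margins over the best baseline are 6.50 points for MRR (Semantic Code Search), 2.81 for R@10 (QualBoa), and 2.21 for NDCG (QualBoa).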

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bibi, N.; Rana, T.; Maqbool, A.; Afzal, F.; Akgül, A.; De la Sen, M. An Intelligent Platform for Software Component Mining and Retrieval. Sensors 2023, 23, 525. https://doi.org/10.3390/s23010525