LEAN: Real-Time Analysis of Resistance Training Using Wearable Computing

Abstract

1. Introduction

Contributions

- Form classification that does not assume that the first few repetitions during an exercise set are of good form, does not place the inertial sensors on the weight stack, and does not require multiple sensors mounted on the body.

- Integration of the form analysis classification model and the repetition-counting algorithm to improve the computational efficiency of the system.

- An incremental repetition-counting algorithm that uses dynamically sized buffers to count repetitions in real time, without keeping long stretches of motion data in memory.

- A description of how to compute fine-grained exercise metrics, including the ratio of time spent on concentric (muscle-shortening) to eccentric (muscle-lengthening) motions and the range of motion.
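To make the last contribution concrete, the following Python sketch computes the concentric time, eccentric time, and range of motion for a single repetition. It is an illustration under stated assumptions, not the method used in LEAN: the function name `rep_metrics` is hypothetical, the input is assumed to be a joint or wrist angle in degrees that rises during the concentric phase and falls during the eccentric phase, and the repetition is assumed to be already segmented.

```python
from typing import Sequence, Tuple


def rep_metrics(times: Sequence[float], angles: Sequence[float]) -> Tuple[float, float, float]:
    """Return (concentric_s, eccentric_s, range_of_motion_deg) for one repetition.

    Assumption: the angle increases during the concentric phase and
    decreases during the eccentric phase, so the peak angle splits the two.
    """
    if len(times) != len(angles) or len(times) < 3:
        raise ValueError("need at least three samples with matching timestamps")
    # Index of the peak angle, taken as the boundary between the two phases.
    peak = max(range(len(angles)), key=lambda i: angles[i])
    concentric_s = times[peak] - times[0]
    eccentric_s = times[-1] - times[peak]
    # Range of motion as the spread between the smallest and largest angle.
    rom_deg = max(angles) - min(angles)
    return concentric_s, eccentric_s, rom_deg


# Made-up samples for one repetition (seconds, degrees).
times = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
angles = [10.0, 80.0, 160.0, 120.0, 80.0, 40.0, 12.0]
concentric_s, eccentric_s, rom_deg = rep_metrics(times, angles)
ratio = concentric_s / eccentric_s  # concentric-to-eccentric time ratio
```

With these made-up samples the lift takes 1.0 s and the lowering 2.0 s, giving a ratio of 0.5 and a range of motion of 150 degrees.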

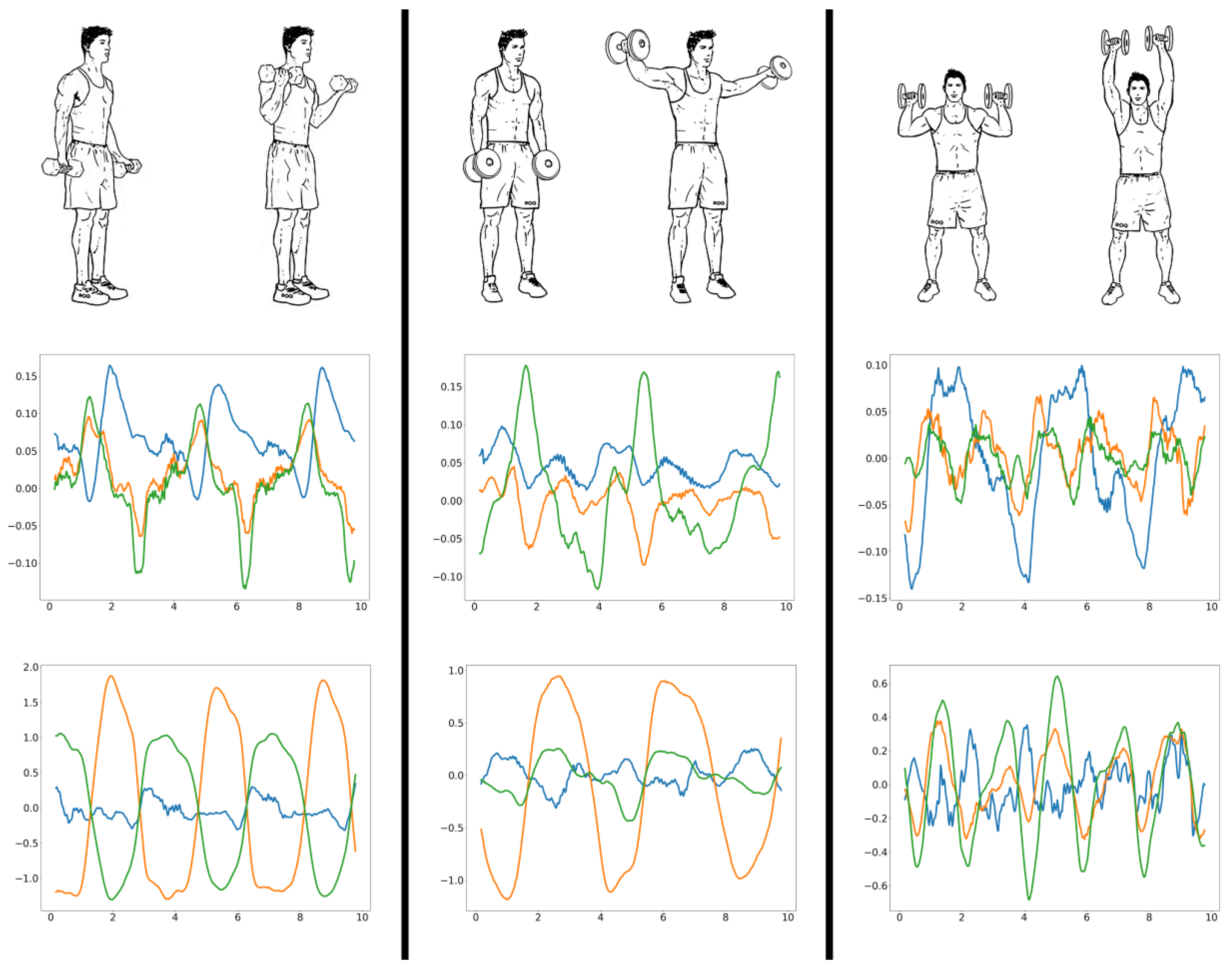

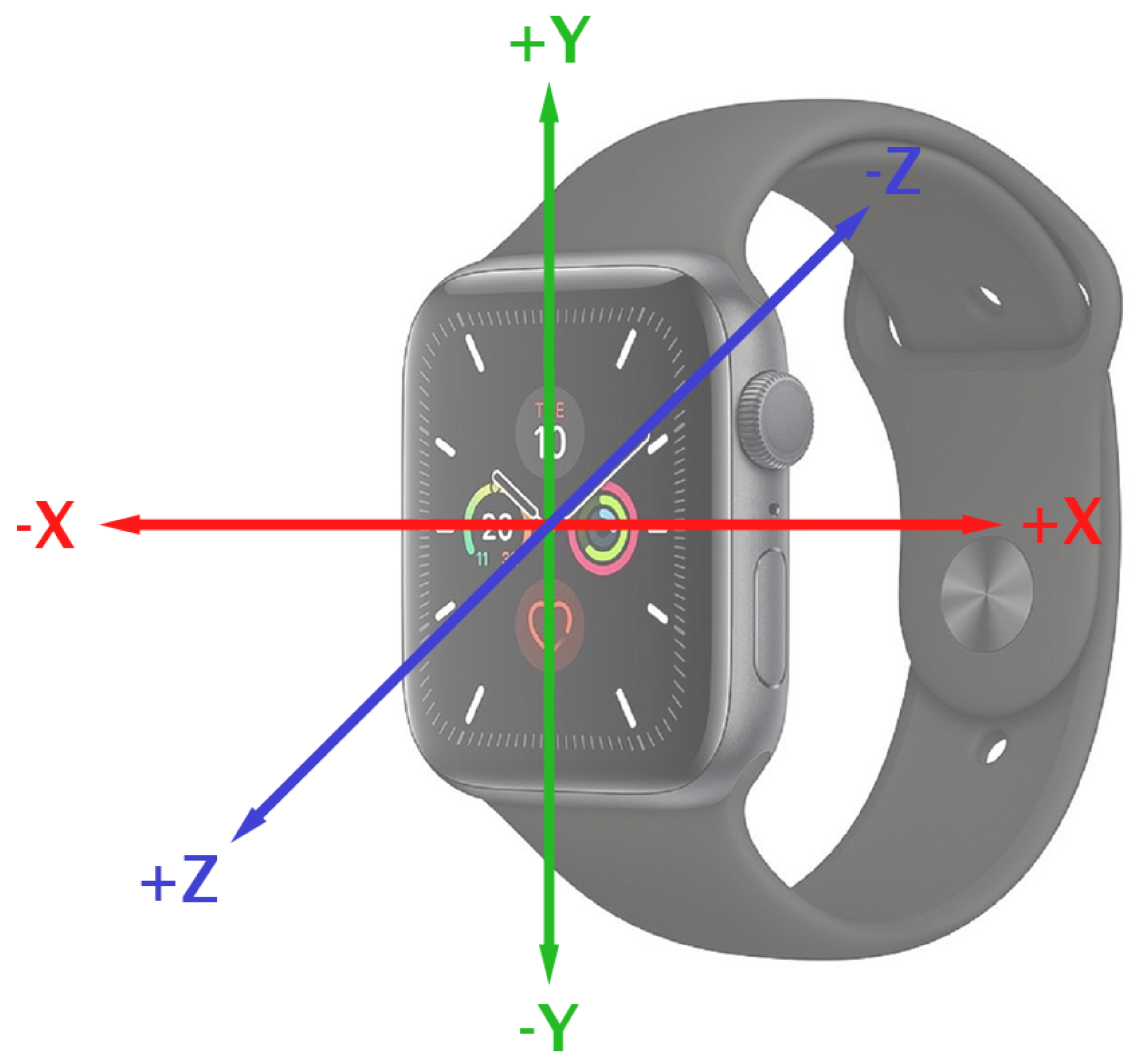

2. Exercise Analysis

2.1. Exercise and Form Classification

2.2. Repetition Counting

Algorithm 1. Repetition counting algorithm

Input: B = Gravity buffer array; TP = Turning points array

Output: C = Repetition count

Initialisation:
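As a rough illustration of incremental, turning-point-based counting with a buffer that is trimmed after every turning point, consider the Python sketch below. It is not Algorithm 1 itself: the class name `StreamingRepCounter`, the `min_swing` threshold, and the rule that a repetition is counted once the signal has passed the far end of the motion and started to return are all assumptions made for this example.

```python
from typing import List


class StreamingRepCounter:
    """Counts repetitions in a streamed 1-D signal (hypothetical sketch)."""

    def __init__(self, min_swing: float) -> None:
        self.min_swing = min_swing      # assumed minimum swing between turning points
        self.buffer: List[float] = []   # samples since the last turning point
        self.direction = 0              # +1 rising, -1 falling, 0 not yet known
        self.outward = 0                # direction of the first movement
        self.count = 0

    def update(self, sample: float) -> int:
        """Feed one sample and return the current repetition count."""
        self.buffer.append(sample)
        hi, lo = max(self.buffer), min(self.buffer)
        if self.direction >= 0 and hi - sample >= self.min_swing:
            # The signal fell far enough below the buffered maximum: a peak.
            self._confirm(self.buffer.index(hi), -1)
        elif self.direction <= 0 and sample - lo >= self.min_swing:
            # The signal rose far enough above the buffered minimum: a valley.
            self._confirm(self.buffer.index(lo), +1)
        return self.count

    def _confirm(self, index: int, new_direction: int) -> None:
        if self.direction == 0:
            self.outward = new_direction   # first movement away from the start
        elif new_direction == -self.outward:
            self.count += 1                # far end passed, signal is returning
        # Drop everything up to the turning point so the buffer stays short.
        self.buffer = self.buffer[index + 1:]
        self.direction = new_direction


# Three synthetic triangular repetitions between 0.0 and 1.0.
cycle = [i / 10 for i in range(10)] + [i / 10 for i in range(10, 0, -1)]
samples = cycle * 3 + [0.0]
counter = StreamingRepCounter(min_swing=0.5)
for value in samples:
    counter.update(value)
reps = counter.count

# Small wiggles below the threshold are ignored.
noise = StreamingRepCounter(min_swing=0.5)
for value in [0.0, 0.1, 0.0, 0.1, 0.0]:
    noise.update(value)
```

Because the buffer is cut back at each confirmed turning point, its length depends on the pace of the movement rather than on the length of the set. The threshold `min_swing` would need tuning per exercise and per signal.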

2.3. Other Exercise Metrics

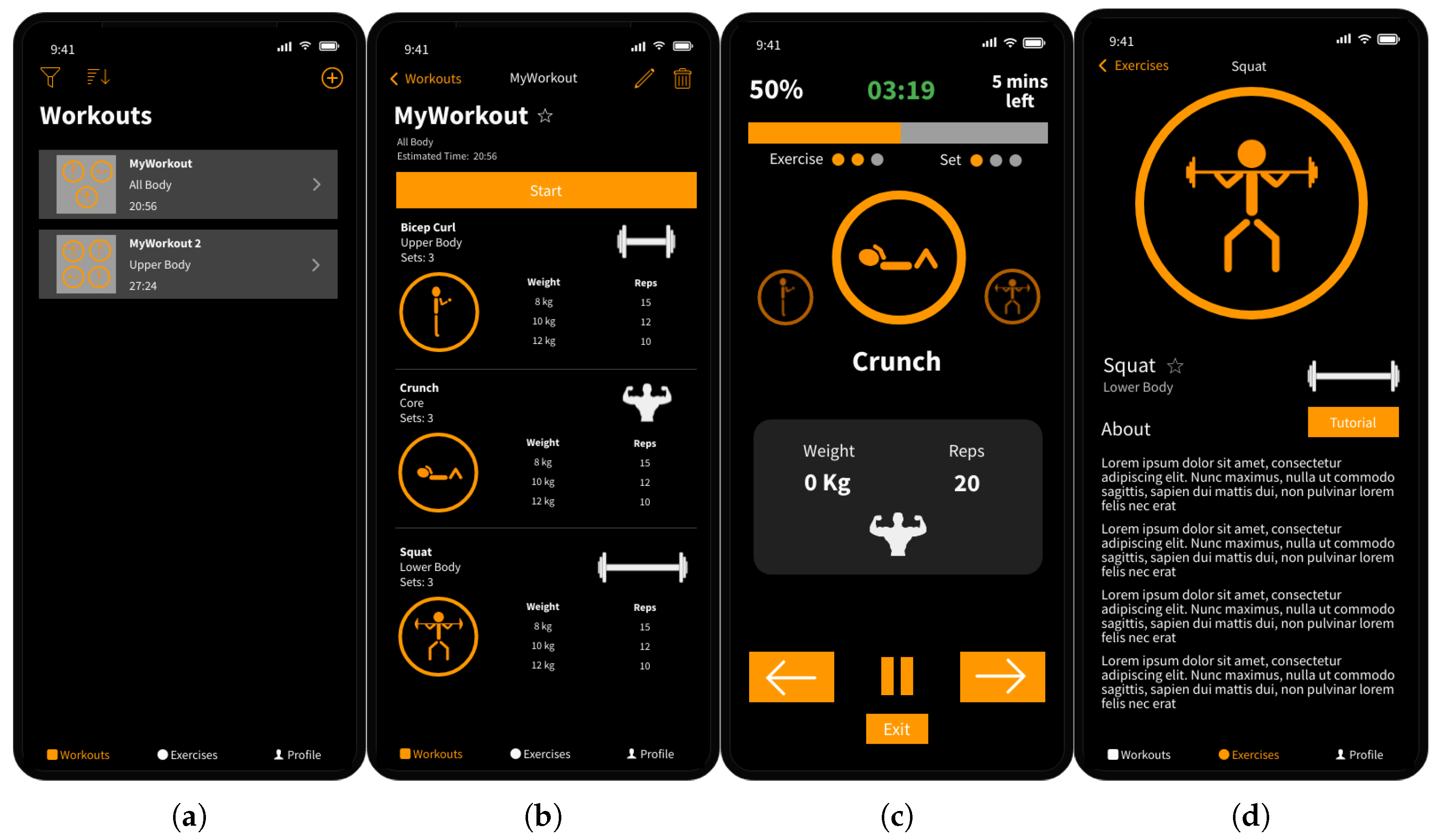

3. App Design

3.1. iPhone App Front-End

3.2. Apple Watch App Front-End

4. Performance Evaluation

4.1. Data Collection

4.2. Exercise and Form Classification

4.3. Repetition Counting

4.4. Other Exercise Metrics

4.5. User Survey

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Exercise | Feature | Axis | Used to Identify |
|---|---|---|---|
| Bicep curl | Maximum acceleration | X | The speed of the repetition. |
| | Gravity height | X | The range of the repetition. |
| | Rotation symmetry | Y, Z | If a bicep curl is occurring. |
| Lateral raise | Maximum rotation | Y | The speed of the repetition. |
| | Gravity height | X | The range of the repetition. |
| | Minimum roll | N/A | Whether the wrist had been by the user’s side. |
| | Roll height | N/A | The range of the repetition. |
| | Gravity/Roll turning points | X, Z | A smooth and repetitive rotation pattern. |

| Exercise | Form | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Bicep curl | Bad range | 1.00 | 1.00 | 1.00 |
| | Good | 1.00 | 1.00 | 1.00 |
| | Other | 1.00 | 1.00 | 1.00 |
| | Too fast | 1.00 | 1.00 | 1.00 |
| | Avg/Total | 1.00 | 1.00 | 1.00 |
| Lateral raise | Bad range | 0.99 | 0.99 | 0.99 |
| | Good | 1.00 | 0.99 | 1.00 |
| | Other | 0.99 | 1.00 | 1.00 |
| | Too fast | 1.00 | 1.00 | 1.00 |
| | Avg/Total | 1.00 | 1.00 | 1.00 |
| Shoulder press | Bad range | 0.89 | 0.92 | 0.90 |
| | Good | 0.91 | 0.88 | 0.90 |
| | Other | 1.00 | 1.00 | 1.00 |
| | Leg momentum | 1.00 | 1.00 | 1.00 |
| | Avg/Total | 0.95 | 0.95 | 0.95 |
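For readers who want to relate the three columns, the F1-score is the harmonic mean of precision and recall. The short Python sketch below applies that standard definition to the shoulder press "Bad range" row; the function name `f1_score` is chosen here for illustration. Because the table reports values rounded to two decimals, recomputing F1 from the rounded precision and recall can differ from the reported F1 in the last digit for some rows.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Shoulder press, "Bad range": precision 0.89, recall 0.92.
shoulder_press_bad_range = round(f1_score(0.89, 0.92), 2)
```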

| Exercise | Reps | Reps (LEAN) | Reps (GWT) |
|---|---|---|---|
| Bicep curl | 8 | 8 | 8 |
| | 10 | 10 | 11 |
| | 12 | 12 | 12 |
| Lateral raise | 8 | 8 | 9 |
| | 10 | 10 | 10 |
| | 12 | 12 | 12 |
| Shoulder press | 8 | 7 | 8 |
| | 10 | 10 | 11 |
| | 12 | 11 | 12 |
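One way to summarise the counts in this table is the mean absolute error per set. The Python sketch below does that arithmetic, assuming the "Reps" column is the number of repetitions actually performed; the helper `mean_absolute_error` and the list names are introduced here for illustration only.

```python
from typing import Sequence

# Rows in table order: bicep curl, lateral raise, shoulder press (8, 10, 12 reps each).
actual = [8, 10, 12, 8, 10, 12, 8, 10, 12]
lean = [8, 10, 12, 8, 10, 12, 7, 10, 11]
gwt = [8, 11, 12, 9, 10, 12, 8, 11, 12]


def mean_absolute_error(truth: Sequence[int], estimate: Sequence[int]) -> float:
    """Average absolute difference between two equally long count lists."""
    if len(truth) != len(estimate) or not truth:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - e) for t, e in zip(truth, estimate)) / len(truth)


mae_lean = mean_absolute_error(actual, lean)  # 2 miscounted reps over 9 sets
mae_gwt = mean_absolute_error(actual, gwt)    # 3 miscounted reps over 9 sets
```

Under that assumption, LEAN is off by one repetition in two of the nine sets (both shoulder press, both undercounts) and GWT in three (all overcounts).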

| Exercise | Video Footage: Average Rep Time (s) | Video Footage: Range of Motion (deg) | LEAN: Average Rep Time (s) | LEAN: Range of Motion (deg) |
|---|---|---|---|---|
| Bicep curl | 3.06 | 155 | 3.02 | 158.18 |
| Lateral raise | 4.12 | 107 | 4.18 | 108.58 |
| Shoulder press | 4.20 | N/A | 4.27 | N/A |

| Exercise | Volunteer 1: Exercise Performance (Form) | Volunteer 1: Exercise Performance (Reps) | Volunteer 1: App Feedback (Form) | Volunteer 1: App Feedback (Reps) | Volunteer 2: Exercise Performance (Form) | Volunteer 2: Exercise Performance (Reps) | Volunteer 2: App Feedback (Form) | Volunteer 2: App Feedback (Reps) | Volunteer 3: Exercise Performance (Form) | Volunteer 3: Exercise Performance (Reps) | Volunteer 3: App Feedback (Form) | Volunteer 3: App Feedback (Reps) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Workout 1 | | | | | | | | | | | | |
| Bicep curl | Good | 10 | Good | 10 | Good | 10 | Good | 10 | Good | 10 | Good | 10 |
| Lateral raise | Good | 10 | Good | 10 | Good | 10 | Good | 10 | Good | 10 | Bad range | 10 |
| Shoulder press | Good | 10 | Good | 9 | Good | 10 | Good | 10 | Good | 10 | Good | 10 |
| Workout 2 | | | | | | | | | | | | |
| Bicep curl | Good | 10 | Good | 10 | Good | 10 | Good | 10 | Good | 10 | Good | 10 |
| Lateral raise | Good | 10 | Good | 10 | Good | 10 | Bad range | 9 | Good | 10 | Bad range | 10 |
| Shoulder press | Good | 10 | Good | 9 | Good | 10 | Good | 10 | Good | 10 | Good | 9 |
| Workout 3 | | | | | | | | | | | | |
| Bicep curl | Bad range | 10 | Bad range | 10 | Too fast | 10 | Too fast | 10 | Too fast | 10 | Too fast | 10 |
| Lateral raise | Bad range | 10 | Bad range | 10 | Bad range | 10 | Bad range | 9 | Too fast | 10 | Too fast | 10 |
| Shoulder press | Bad range | 10 | Bad range | 9 | Bad range | 10 | Good | 10 | Bad range | 10 | Bad range | 10 |

| Statement | Strongly Agree | Agree | Neither Agree Nor Disagree | Disagree | Strongly Disagree |
|---|---|---|---|---|---|
| The iPhone app was easy to understand and navigate. | 2 | 4 | 0 | 0 | 0 |
| The Apple Watch app was easy to understand and navigate. | 3 | 1 | 0 | 0 | 0 |
| The form analysis feature was useful in identifying poor form and how to correct it. | 1 | 2 | 1 | 0 | 0 |
| The form analysis feature was accurate. | 3 | 1 | 0 | 0 | 0 |
| The repetition counting feature was useful. | 1 | 2 | 0 | 1 | 0 |
| The repetition counting feature was accurate. | 0 | 2 | 2 | 0 | 0 |
| The exercise metrics were useful and/or interesting. | 2 | 2 | 0 | 0 | 0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation: Coates, W.; Wahlström, J. LEAN: Real-Time Analysis of Resistance Training Using Wearable Computing. Sensors 2023, 23, 4602. https://doi.org/10.3390/s23104602