1. Introduction

The steadily increasing number of population and economic activities has led to an increase in freeway congestion. Along this line, several dynamic traffic-management measures such as ramp metering [

1,

2], dynamic routing [

3], and real-time travel information systems have been widely used for reducing congestion. One promising strategy is the use of variable speed limit (VSL) control [

4,

5,

6], which not only harmonizes the speed within each lane and across different lanes but also increases the capacity of the freeway. Given the dynamic nature of freeway traffic systems, VSL is typically modeled as a feedback control problem. The controller automatically adjusts the speed limits according to real-time measurements of the freeway traffic state, and the objective is to achieve a desirable controlled variable, i.e., low bottleneck density and high-throughput rate. Göksu et al. [

7] proposed a VSL controller, which utilized saturation feedback to ensure the integral input of traffic state to system stability. Some methods also considered integrating the ramp metering and variable speed limit control together to improve freeway traffic control performance [

8,

9].

One of the main challenges of implementing this type of control is that it needs to accurately predict the effect of the different speed limits of different lanes on traffic flow. This can be difficult to do, as traffic patterns are often complex and can be affected by a wide variety of factors. Additionally, different speed limits may not be effective in all situations and may even cause more congestion in some cases. It is also important to ensure that the different speed limits are able to contribute to congestion mitigation so that they can adjust their driving behavior accordingly.

In the machine learning context, reinforcement learning (RL) has been widely used for achieving feedback control autonomy [

10,

11]. RL has also effectively boosted the development of intelligent transportation system [

12,

13]. RL is a goal-oriented learning tool wherein the agent learns a policy to optimize a long-term reward by interacting with the environment. At each step, an RL agent receives the state feedback of the environment, and acts accordingly. The evaluative feedback about its performance in each step allows it to improve the performance of subsequent actions. In a control engineering context, RL can be viewed as a feedback control algorithm. Given the coherence between RL and VSL, researchers start to investigate reinforcement learning (RL) techniques for VSL control [

14,

15,

16]. Several studies have reported the superior performance of RL techniques over traditional control approaches.

The success of modern RL systems is largely due to their combination with deep neural networks. With deep neural networks, RL agents are able to learn a meaningful state representation [

17]. In general, deep reinforcement learning (DRL) is defined as the utilization of deep learning algorithms within RL. As DRL algorithms have performed amazing feats in recent years, there have been some attempts to apply DRL to VSL control [

15,

18]. Ke et al. [

18] investigated using the transfer learning method to improve the transferability of deep reinforcement learning variable speed limits (VSL) control. For VSL control, DRL exhibit major difference from traditional RL methods. Their controllers are built upon deep neural networks which have the capacity to learn more complex representations of the environments. This expressive power allows for learning more optimal speed limits [

15].

Despite the promising results of DRL, training the deep neural networks of DRL is still not an easy task. The algorithms for training DRL agents are highly sensitive and require a long learning process [

19,

20]. This issue mainly stems from the difficulties in defining a representative reward function for VSL agents. There are some recent works that use adversarial perturbed state observations to obtain the worst case reward in order to improve the robustness of the agent [

21,

22]. However, we need to know how the perturbations are generated. Essentially, the goal of traffic management is to reduce travel time and increase traffic flow capacity. However, in practice, the average travel time and total flow cannot be computed until all the vehicles have completed their routes, which causes the issue of delayed rewards. Handling delayed rewards is one of the most critical challenges in RL research [

23,

24]. The training through one-step agent–environment interaction is ineffective because the definition of which action induces rewards is ambiguous. This problem is often solved by reward engineering so that the reward is more related to the current action of the agent. However, reward engineering is a tedious process, which requires not only intensive parameter tuning but also specific domains. More importantly, the choosing reward may not necessarily lead to improvements of the freeway capacity.

Recent research has shown that evolutionary strategy (ES) algorithms can be used for tasks that are dominated by DRL algorithms [

25]. In DRL algorithms, neural networks explore different actions in each step and these actions return rewards that are used to update the parameters of the network via backpropagation. Instead of using backpropagation, ES uses a “random search” approach to search the optimal neural networks parameters. It involves a number of agents acting in parallel using parameters sampled from a given distribution. Each agent acts in its own environment, and once it finishes a set number of episodes, the cumulative reward is returned to the algorithm as a fitness score. With this score, the parameter distribution moves toward that of the more successful agents. ES algorithms offer an attractive advantage when compared to DRL algorithms on VSL control tasks. The fitness score guiding the learning process is the overall return of the whole control period. As a result, the problem of the delayed reward for training deep neural networks can be readily solved with ES. In other words, we can directly use the total traffic flow or average travel time to train an ES agent [

26,

27].

In essence, evolution strategy (ES) is a nature-inspired direct search and optimization method which uses mutation, recombination, and selection applied to a population of individuals in order to evolve iteratively better and better solutions. Several types of ES algorithms have been proposed in the literature. The difference of these models mainly lies in how they represent the population and how they perform mutation and recombination. For example, Salimans et al. [

25] already showed that deep neural networks learned by a special natural evolution strategy (NES) can achieve competitive results in video games and robotic control. NES updates the search distribution in the direction of higher expected fitness using the gradient induced from fitness score. Afterwards, Such et al. [

28] also showed that the gradient-free approach genetic algorithms (GAs) are also a competitive alternative for training deep neural networks on DRL tasks.

The version of ES we use in this work belongs to the adaptive evolution strategies. We use the covariance matrix adaptation evolution strategy (CMA-ES) [

29], which represents the population by a full-covariance multivariate Gaussian. The recombination process of CMA-ES refers to selecting a new mean value for the multivariate Gaussian. Mutation amounts to adding a random vector with a zero mean. The dependencies between different individuals within the population are represented by a covariance matrix. The covariance matrix adaptation (CMA) is to update the covariance matrix of this distribution. We choose CMA-ES since it is known to converge faster than other ES, and can automatically adapt the learning step and noise distribution. We evaluate the proposed CMA-ES optimized deep neural network controllers on a simulated freeway traffic with recurrent bottlenecks, in which distinctive and dynamic speed limits among lanes are allowed. The main contribution of our paper can be summarized as the following:

We propose a multi-lane differential variable speed limit control framework which can better handle the delayed reward.

We propose the CMA-ES based VSL algorithm to improve the robustness of our control framework, which needs less parameter tuning. The CMA-ES also learns explainable control policy.

Various experimental results show that CMA-ES agent is better than non-evolutionary search (NES) and deep reinforcement learning (DRL) agents in the real-world freeway network with on-ramp and off-ramp roads.

2. Problem Statement

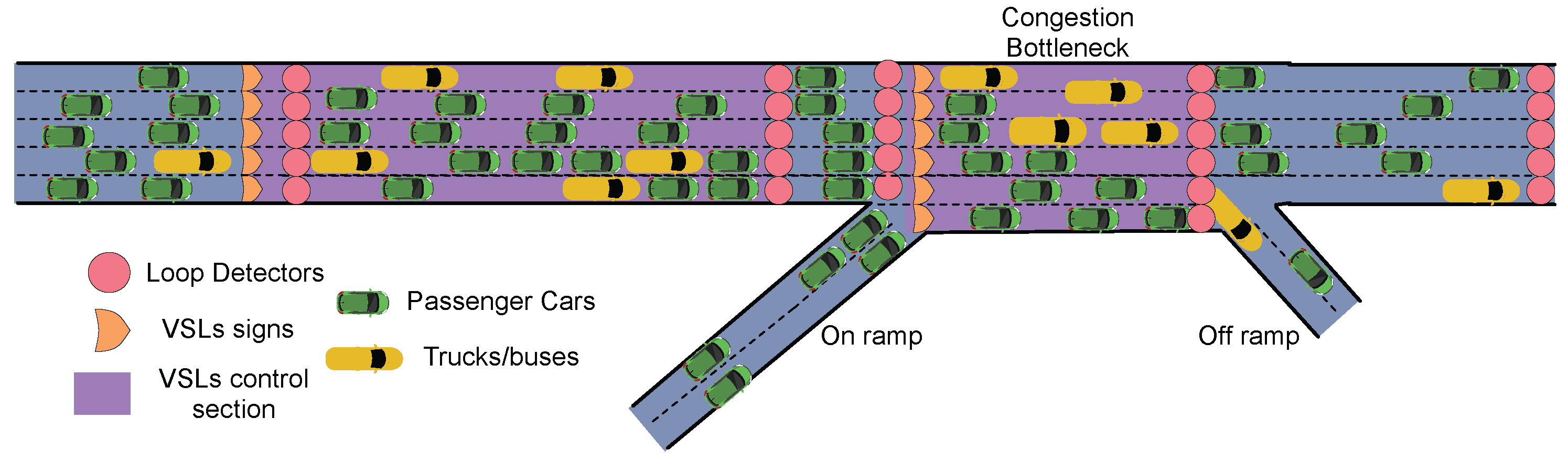

The freeway section considered in this paper is given in

Figure 1. The freeway section in

Figure 1 is composed of multiple on-ramp and an off-ramp lanes.As we can see, the interference between vehicles mainly happens in the merging area between the inflow of on-ramp roads and the outflow of the mainstream. The conflicts cause further speed reductions in the merging area, contributing to the creation of a generalized bottleneck.

Following the framework of our previous work [

15], different speed limits across different lanes in the same control freeway section are allowed in this work. The benefits of differential variable speed limits (DVSL) among lanes are limited by driver compliance. Therefore we assume that the DVSL system is running on a connected and automated vehicle (CAV) environment, in which the infrastructure can send commands to the vehicles, and vehicle speed commands or speed limits are automatically enforced.

The DVSL control system in

Figure 1 includes three sections: (1) the upstream of the bottleneck in which the outflow is controlled by adjusting the posted speed limits (purple); (2) the bottleneck with congestion in which the speed limits can change (purple); (3) a speed modulation section that allowed vehicles to accelerate from low speed adjust its speed between two controlled sections (dark blue). Traditional systems only change the speed of the upstream. We also implement VSL signs in the recurrent bottleneck. The function of upstream speed limit control is to adjust the outflow to the bottleneck and prevent the capacity drop of the downstream bottleneck. The speed limits in the bottleneck control the congestion formation and dissipation. If the congestion is presented in the bottleneck, a lower speed limit would be more desirable as it harmonizes the driving speed of all vehicles. On the other hand, a higher speed limit can also allow the congestion to dissipate faster.

The DVSL control problem is modeled as a temporal decision process. In each time step, the DVSL agent determines the speed limits of each controlled lane according to the occupancy rate reported by the loop detectors. The mapping between the traffic state and speed limits is represented by a policy

parameterized by

. The speed limits

at time point

t can be calculated by

where

,

n is the number of the controlled lanes, and the traffic state observation

at time point

t, m, is the number of the loop detectors in this system.

The goals of VSL control can be various. A main targeted impact of VSL is to enhance traffic safety; other targets include improvement of traffic flow efficiency, such as in the sense of reduced travel times and environmental (noise, pollution) benefits. The main target of this study is the efficiency of the freeway section. The efficiency can be measured by the system travel time. In real-world applications, it is impractical to calculate all the vehicles’ travel time. However, we can use the loop detectors in the entry and exit of the freeway to calculate the discrete representation of system travel time. The discrete-time representation of system travel time (TTT) over the control time horizon T can be calculated by

where

is the total number of vehicles in the network at time

t.

is the time duration.

and

are the received and exit flow, respectively.

in Equation (

2) is independent of the control measures taken in the freeway, hence the minimization of system travel time is equivalent to searching for policy

that maximizes the following quantity

In this study, the number of vehicles

entering the freeway system at each time step from both upstream mainline and on-ramp are recorded. The number of vehicles

leaving the freeway system at downstream mainline and off-ramp is also recorded. We model policy

by a deep neural network, and its parameter

is optimized by CMA-ES [

29], in which the feature is encoded using three-layer neural networks. The neural network structure and optimization method will be discussed in the next section.

Given the large amount of mathematical notation employed in the remainder of this article, we have included a brief summary of the nomenclature in Nomenclatures.

3. Proposed Method

In this section, we will introduce how to integrate the CMA-ES introduced above to optimize deep neural networks to generate variable speed limits. The policy

is built upon deep neural networks parameterized by

. Due to the driver compliance issue, a continuous speed limit is not feasible to post in a variable speed sign. However, it is more convenient to design a deep neural network with continuous outputs. To avoid this contradiction, we first generate a continuous neural networks output of

at time steps

t given observation

by

is a neural network parameterized by

.

is the real-time traffic states reported by

m loop detectors. The value of

is clipped between

.

M is the number of feasible speed limits. Then the discrete action

is obtained by choosing the integer parts of

. Obviously,

. Then the speed limits

is calculated by

.

is the minimum value of the speed limit,

I is the integer multiples, the maximum value of speed limits is

. For example, if the feasible speed limits is [60 km/h, 65 km/h,

120 km/h], then

= 60 km/h,

I = 5 km/h, and

.

Figure 2 gives an illustration of the policy

.

The next step is to obtain the optimal parameters of deep neural networks that maximize the reward . There have been various kinds of DRL algorithms for learning parameters , the common theme is to use backpropagation, which involves a gradient approximation of parameter . However, optimizing alone for high reward by the gradient can cause the model to become stuck in local optima, and as a result, the agent may fail to learn appropriately. This motivates us to explore the gradient-free evolutionary algorithms to optimize . More specifically, in this work we use the covariance matrix adaptation evolution strategy (CMA-ES).

ES is broadly based on the principle of biological evolution, namely the repeated interplay of variation (via recombination and mutation) and selection. In each generation, some individuals are selected to become the parents in the next generation based on their fitness score. For VSL control, the fitness score is the system travel time term

.

represents the bias and weights of the neural networks. The next generation are generated by the variation of the parents. The individuals of the new generation are working on the VSL control environment in parallel. The variation rule of the CMA-ES is given below:

where

is the

k-th parameter of

-th generation,

is the step size,

is the covariance matrix of the noise,

represents the center of mass of the selected individuals of generation. The selection set

is determined by the fitness score

.

, For each evolutionary generation, we generate

K individuals, and evaluate them on the same DVSL task to obtain their fitness score. The

p fit individuals with larger

are selected as members of

.

CMA-ES provides an adaptive mechanism to update covariance matrix

and updates step size

. First, the evolution path

is calculated by

is the update step for evolution path, the column of

represent normalized eigenvectors of

,

is a diagonal matrix whose elements are the square roots of the eigenvalues of

. Then

is updated by

is the update step for covariance matrix.

Next step is to adapt the global step size

, the evolution path

is calculated by

is the update step for

. The length of the evolution path determines the step size for generation

.

where

is the update parameter for

,

is a dumping parameter.

In summary, CMA-ES estimates the variation of neural network individuals through incremental adaption along evolution paths and contains information about the correlation between consecutive updates. With CMA-ES, we can use an adaptive gradient-free approach to update the neural network controller for VSL control task. In this work, we use the open-source package pycma (

https://github.com/CMA-ES/pycma, accessed on 5 September 2021) with distributed workers as the optimization tool for our deep neural networks. Each worker operates independently, and samples a perturbation

from a normal distribution with a mean of 0 and a standard deviation

. Then, it computes the returns based on the throughput

, which is the negative sum of the difference between the inflow and outflow of vehicles for each time step

t. The complete Algorithm 1 is summarized below:

| Algorithm 1 Adaptive Evolutionary Strategy for VSL control |

- 1:

Initialize reward function and neural network’s parameters, noise standard deviation , initial policy parameters - 2:

for Every time step t = 0, 1, 2… do - 3:

for Each worker i = 1, …, n do - 4:

Sample the perturbation - 5:

Compute returns based on the throughput - 6:

end for - 7:

Send all scalar returns to every worker - 8:

for each worker do - 9:

Reconstruct all perturbations for using random seeds - 10:

update based on - 11:

end for - 12:

end for

|

4. Experiments

In this section, we mainly conducted experiments on a simulated freeway section built by SUMO to evaluate the effectiveness of CMA-ES-based DVSL control.We set the initial control time horizon T as 60, the incident time as 0, and the incident length as 0. We selected the batch size as 32. The noise standard deviation is set as 1, we use the

normalized columns initializer for initialization [

30]. The learning rate is set as 0.1. We run the experiment 100,000 times.The terminal is activated when it exceeds the total simulation step (18,000); meanwhile, if all vehicles have left the network or a collision happens, the terminal will also be intrigued.

4.1. The Simulated Freeway Section

We use the open-source software SUMO to implement our experiments. The software supports setting the speed limits for each lane using its API, the Traffic Control Interface (TraCI) package. An 874.51 m freeway section with on- and off-ramps of I405 north bound in California, USA is selected. The original speed limits for the main-lane of this section are 65 mile/h and for the on- and off-ramps are 50 mile/h.

Figure 3 illustrates the topology of the simulated freeway in SUMO. The travel demand of this freeway can be categorized into three routes: (1) from main-lane to main-lane (M2M), (2) from main-lane to off-ramp (M2Off), and (3) from on-ramp to mainline (On2M). We simulate 2 h of traffic for this freeway section. In the first hour, the hourly demand of these three routes is modeled as Poisson distribution with average values of 375 (M2M), 125(M2Off), and 200(On2M), respectively. In the second hour, the traffic turns to congestion, and the average values become 4650, 1550, and 3000, respectively. The depart lane of the vehicles are randomly set according to a uniform distribution. Passenger cars with a length of 3.5 m and truck/bus with a length of 8 m are selected as vehicle types in the simulated traffic stream. The combination of vehicles is generated randomly according to probability [0.85, 0.15].The demand distribution probability can be visualized as in

Figure 4.

Different vehicles exhibit various and distinct driving behaviors in the real world. To account for this heterogeneity, we randomly assigned half of the vehicles with the “Krauss” car-following model, and the other half with the “IDM” car-following model [

31]. The SUMO default “LC2013” model was used as the lane-change model for all vehicles.

We explore two critical characteristics of drivers. The first pertains to their desired speed with respect to speed limits and can be expressed through the “speedFactor” variable, which can also be modeled using a normal distribution. For DVSL agent training, we set the speedFactor to “normc(1,0.1,0.2,2)”, indicating that the speedFactor has a mean of 1 and a standard deviation of 0.1, and ranges from 0.2 to 2.

The second property we investigate is the propensity of vehicles to execute rule-based lane changes to increase their speed. To capture this, we introduce a “speedgen” variable that corresponds to the “lcSpeedGain” attribute. When training VSL agents, we set the “lcSpeedGain” attribute to 1, indicating that the vehicle is more likely to change lanes to achieve higher speeds. A higher “lcSpeedGain” value suggests that the vehicle is more inclined to change lanes to gain speed.

To collect state variables for DVSL control, we place loop detectors in the freeway section. We set up 22 loop detectors around two control areas to monitor the traffic state. The occupancy rate of these 22 detectors, which reflects the congestion level of the corresponding areas, serves as input

for the deep neural networks. The variable speed limits range from 40 to 75 miles per hour and change every five minutes. We also use SUMO simulation software in conjunction with the Handbook Emission Factors for Road Transport (HBEFA)4.1 [

32] to model emissions, obtaining the CO, HC, NOx, and PMx.

4.2. Comparison Results

The total travel time index given in Equation (

2) is used as the fitness score for ES-based algorithms and the reward function for the DVSL-based control agent. The DVSL controller of all the methods is built upon a three-layer deep neural network. The first layer has 60 hidden neurons, and the second layer has 30 hidden neurons. The ReLU function is used as the activation function for the hidden layer, the Sigmoid function is used the activation function for the output layer. For the evolutionary strategy-based model, each generation has 8 individuals, and 1000 episodes are used to optimize the neural networks. For DDPG, we use 1000 episodes to train the DRL-based controller.

We compare CMA-ES based DVSL control with the following baseline methods, which include:

To compare the reward behavior over the learning process for all used learning-based algorithms, we visualized the plot for ES, CMA-ES, and DDPG in the following

Figure 5. We can find that our proposed CMA-ES algorithm can achieve the highest reward compared to the ES and DDPG methods. Meanwhile, it is more stable compared to the DDPG method.

Table 1 compares the best performance of different methods. The results show that CMA-ES outperforms both ES- and DDPG-based methods in terms of average travel time, emission, and safety. CMA-ES can reduce average travel time by 18.12%, CO emission by 12.76%, and HC emission of the freeway by 11.11%. Most importantly, it can reduce emergency brake use by 2%, which indicates an improvement for safety. Both ES and CMA-ES outperform the DDPG-based controller, indicating that evolutionary strategies are more suitable for VSL control tasks compared with the DRL method. All the methods cannot reduce the NOx and PMx emissions. Interestingly, the DDPG-based controller even increases NOx and PMx emissions, though it also improves the efficiencies of the freeway. We can also find that continuous control action space design (i.e., DDPG) will be better than discrete control action space design (i.e., DQN).

Comparative studies between ES and DRL on video games have shown that they exhibit different behavior patterns. ES’s behavior is dominated by the prior action distribution, whereas DRL tends to react to the real-time state changes [

33]. To study the behaviors patterns of DRL and ES on the VSL task,

Figure 6 plots the speed limits given by CMA-ES, ES, and DDPG based algorithms. Obviously, the behaviors of DDPG in

Figure 6 look more random, whereas CMA-ES produces more regular patterns. The behavior of ES seems like an intermediate point between CMA-ES and DDPG. The reason might be that ES uses both gradient information and evolutionary strategies. The behaviors of CMA-ES can be viewed as the combination of static rules and dynamic feedback control. The most left lane of the upstream and bottleneck sections are ruled by the highest speed limits of 75 mph. The CMA-ES are learned to set those lanes as overtaking lanes. This accords with the simple traffic rule as the experiments are running on a right-driving environment. In other lanes, the CMA-ES dynamically switches the speed between the highest 75 mph and the lowest 40 mph according to real-time traffic state. The CMA-ES seldom uses the speed limits between 40 and 75. Compared with the upstream control section, the speed limits of the bottleneck are more often set to the lowest value. Obviously, the speed limits produced by CMA-ES are explainable and easy to understand. Moreover, this type of behavior leads to superior performance compared with the random behavior of DDPG. Additionally, the speed limits produced by the CMA-ES algorithm can be viewed as a combination of static rules and dynamic feedback control, making them more interpretable. For instance, the CMA-ES algorithm learns to set the most left lane of the upstream and bottleneck sections as overtaking lanes with the highest speed limit of 75 mph, which aligns with the simple traffic rules in a right-driving environment. On the other hand, DDPG produces speed limits that do not align with any discernible traffic rules, making them more difficult to interpret.

4.3. Assessing Generalization

For this research, the ES and DRL agents were exclusively trained in a simulation environment. However, a crucial question arises: how can a DVSL agent leverage simulation to perform practical tasks in the real world? Additionally, how can we extend its performance to novel scenarios that differ from the simulation? Traffic simulation presents a challenge, since even the most sophisticated simulators cannot fully replicate real traffic flow dynamics and uncertainty. We can evaluate the agents’ generalization abilities by subjecting them to test environments with varying attributes from the ones they were trained on. This ability to generalize provides us with evidence of the agents’ performance in a different environment, such as a real-world implementation with different attributes from the simulated environment. The simulation environment employed in this study is expected to be equipped with advanced CAV techniques, with CAVs adhering to the recommended speeds. Therefore, we are intrigued by the performance variations of DVSL control concerning critical attribute changes introduced by CAV techniques. The first important attribute is the driver’s minimum time headway. During training, this value is set to 1 s. We evaluate the agents on environments with and .

Table 2 gives the performance of different methods with different

. It shows that highly developed CAV with a very low reaction time is beneficial for freeway traffic. It is obvious that smaller

leads to lower travel time and less emission. DDPG fails to improve the freeway performance when

is lower to 0.25. Both ES and CMA-ES can still reduce the travel time and emission under an environment with lower

. The second change brought by CAV technique might be that the vehicles are more likely to drive under the speed limits. This can be simulated lower down the standard deviation

of “speedFactor”. We evaluate the DVSL models with

and

.

Table 3 gives the results of different methods with different deviation

. The lower deviation will lead to lower throughput and higher emission for the freeway. The higher deviation value makes the driving speed more diverse, which would allow more gaps for merging vehicles, and improves the efficiency of merging area. In this case, the DDPG-based DVSL agent fails to improve the performance of the freeway. ES outperforms CMA-ES when

is lower, though CMA-ES outperforms ES when

. The results show that the efficiency of traffic will be improved by a more diverse driving style. Therefore, it might be desirable to add some random driving mechanism to the CAVs as this will lead to systematic benefits.

In order to further demonstrate the robustness of our model to different flow rates, we analyzed our model’s performance in comparison to non-control performance. We measured the percentage of improvement in average travel time corresponding to different flow rates. As shown in

Figure 7, we can see that in comparison to the non-control framework, our model demonstrates robustness when the flow volume increases on the main lane and ramp lanes. The red histograms represent the main lane while the blue histograms represent the ramp lane. We can observe that when the traffic flow increasea, the percentage of increment of main will decrease; however, we can observe that given an extremely high volume, i.e., 1600, the ramp lane efficiency will decrease. Given a very high traffic volume, the model will learn to sacrifice some travel efficiency on ramp lanes in order to increase system-level efficiency.

5. Conclusions and Discussion

In this paper, we have proposed a gradient-free and adaptive evolutionary strategy for multi-lane differential variable speed limit control. In order to learn the dependence between traffic state and optimal action, the deep neural networks were used to learn the representation of the traffic state. Our solution outperforms the DRL-based solutions in terms of improvements in freeway capacity and emission reduction. The learned speed limits are explainable and can be generalized to environment with different properties.

The proposed method has demonstrated promising results in the task of multi-lane differential variable speed limit control, outperforming deep reinforcement learning-based approaches, traditional evolutionary search methods, and the no-control scenario. However, one limitation of the proposed work is that it requires a significant amount of computational resources, as it relies on a large number of parallel workers to efficiently optimize the policy parameters. Future work could explore ways to reduce the computational cost of the proposed method while maintaining its effectiveness.

Several interesting questions stem from our paper both theoretically and practically, which we plan to study in the future. We aim to extend the approach to large freeway networks and a broader set of dynamic events, such as adverse weather and traffic incidents, in the future. Another interesting direction we plan to study is the incorporation of more advanced traffic-control strategies. In this paper, the most basic neural network architecture and adaptive evolutionary strategy are used. We believe that more systematic research of architectures such as graph convolutional networks [

34] and optimization strategies may provide improvements in control performance. Furthermore, it is also interesting to study the cooperation effect of connected automated vehicles [

35] and the infrastructure.