Abstract

This paper plans to establish a warehouse management system based on an unmanned aerial vehicle (UAV) to scan the QR codes printed on packages. This UAV consists of a positive cross quadcopter drone and a variety of sensors and components, such as flight controllers, single-board computers, optical flow sensors, ultrasonic sensors and cameras, etc. The UAV stabilizes itself by proportional-integral-derivative (PID) control and takes pictures of the package as it reaches ahead of the shelf. Through convolutional neural networks (CNNs), the placement angle of the package can be accurately identified. Some optimization functions are applied to compare system performance. When the angle is 90°, that is, the package is placed normally and correctly, the QR code will be read directly. Otherwise, image processing techniques that include Sobel edge computing, minimum circumscribed rectangle, perspective transformation, and image enhancement is required to assist in reading the QR code. The experimental results showed that the proposed algorithm provided good performance of a recognition rate of 94% for the stochastic gradient descent (SGD) and 95% for Adadelta optimization functions. After that, successful QR code reading was presented.

1. Introduction

Industry 4.0 was proposed at the Hannover Messe in 2011 []. Its main concept is smart manufacturing, which includes artificial intelligence (AI), Internet of Things (IoT), and big data application technology. Driven by the trend of Industry 4.0, smart factories and automatic storage have also become trends, and the latter will also indirectly drive the demand for robots. The design and development of unmanned aerial vehicles (UAVs) or flying robots is growing rapidly. According to a report from Tractica [], the commercial application of UAVs will push commercial-grade drones from 80,000 in 2015 to more than 2.6 million in 2025, and the annual revenue of commercial drone hardware sales will reach nearly 4 billion U.S. dollars. It is clear that commercial drone services (Drone as a Service, DaaS) will provide considerable business opportunities. The main areas of commercial services include shooting, entertainment, surveying and mapping, aerial operations and other services, and a small part includes survey and disaster relief, early warning systems, data collection and analysis, and environmental monitoring, etc.

The warehouse management system (WMS) is generally divided into warehousing, data creation, packaging, shipment, delivery, and purchase. When operators need to purchase and ship goods, they often need to know the quantity of goods in the warehouse. The traditional warehouse inventory is time consuming and labor intensive. In 2018, Worldwide Chain Stores (WCS) planned to let drones enter the warehouse management market and cooperated with a drone scanning technology company to provide retailers with drone warehouse management solutions []. Now, WCS uses the latest drone platform, scanning and communication technologies to integrate WMS and Excel to provide a revolutionary solution for scanning products and pallets in the warehouse, and uses the scanner on the drone to scan the barcode on each pallet, which is 50 times faster than manual. However, the operation needs manual control for UAV.

In addition, in recent years, the rights and interests of workers have been paid more and more attention, but occupational accidents still emerge endlessly every year. The Occupational Safety and Health Administration (OHSA) and the US National Institute for Occupational Safety and Health (NIOSH) published their respective researches on warehousing safety in 2019 [] and 2013 [], all of which mentioned that the improper placement and the offset of the center of gravity of goods are one of the main reasons for storage safety accidents.

At present, many studies on UAV storage inventory are carried out with radio frequency identification (RFID) technology, for example: Fernández-Caramés [], Ong [], Chin [], and Huang []. However, although RFID can easily identify the content of the barcode, it cannot check whether the package has potential risk factors due to improper placement.

Machine vision is one of the most important parts of artificial intelligence applications. Two-dimensional images can only provide plane information of the object, while three-dimensional images can provide three-dimensional information of the object, but both have the property of rotational invariance. To infer the 3D position and rotational angle of an object from a 2D image, the following methods can be applied: scale-invariant feature transform (SIFT) [], Hough transform [], Harris corner detection [], and so on. In the process of establishing the training set, the concept of plane rotation and three-dimensional rotation is used at the same time. Some training data sets and methods for identifying object rotation are available for experiments in Li [], Li [], Diego Marcos [], Asako [], and Shi []. The rotated MNIST dataset, randomly rotated Imagenet dataset, rotated ShapeNet dataset, and multi-view images of objects are used. In addition, recurrent convolutional neural network (RCNN), multi-view convolutional neural network (MVCNN), and image projection are also for recognition of rotational objects.

At present, artificial intelligence (AI) algorithms have been applied to many fields. There are four classifiers, k-nearest neighbor (KNN), support vector machine (SVM), kernel extreme learning machine (KELM), and random forest (RF), used to classify non-severe depression patients and normal subjects by Li et al. []. Zhao et al. [] proposed the elastic net broad learning system and grey wolf optimization algorithms to overcome unsatisfactory prediction and long training time by traditional regression prediction algorithms and achieved a better prediction result of bearing performance. A new bearing fault diagnosis method based on joint distribution adaptive and deep belief network with improved sparrow search algorithm was proposed by Zhao et al. [] to effectively solve the learning problem of an inconsistent distribution of training data and test data. Moreover, many papers [,,,,] have pointed out that convolutional neural networks (CNNs) can solve different application problems in the application of image classification. These papers have discussed that CNNs can achieve the application of image classification and recognition by analyzing images with shape and motion characteristics or directly relying on the self-identification ability of the neural network model. Garbage classification [], cloud classification [], clothing classification [], fruit quality classification [], and housing classification [] are the application examples. In addition, a CNN-based hybrid tracking algorithm was developed to robustly track multiple high-speed moving objects simultaneously in [].

This paper adopts the CNN method and image recognition to classify the placement angles of the packages in the shelf. It cannot only help to identify the QR code, but also can make an assessment and warning of potential risk factors such as improper scanning and placement. This paper will provide some results for advanced study.

- A UAV is designed, which includes a positive cross quadcopter drone and a variety of sensors and components, such as flight controllers, single-board computers, optical flow sensors, ultrasonic sensors and cameras, etc.

- The UAV is successfully stabilized by PID control even when disturbances occurr.

- The placement angle of the package can be accurately classified by CNN. Optimization functions, such as SGD, RMSprop, Adadelta, and Adam, are applied to improve the system performance and show the recognition rates of 94%, 92%, 95%, and 93%, respectively. If the angle is not 90°, a warning will be issued to prompt the management personnel to handle it to avoid accidents or losses. In addition, image processing is required to assist in reading the QR code, including the use of Sobel edge computing, minimum circumscribed rectangle, perspective transformation, and image enhancement. Successful QR code reading is also provided.

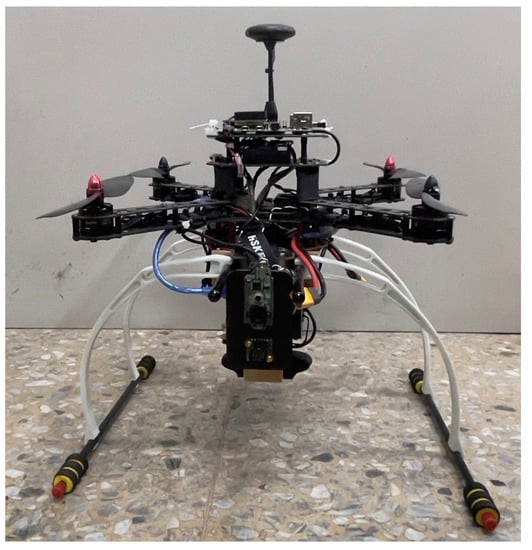

2. Unmanned Aerial Vehicle System

The four-axis quadrature drone is the most commonly used type of UAV. It uses four rotors to generate lift and make the UAV take off and land vertically, hover, and fly. In this paper, a positive fork four-axis UAV with a wheelbase of 38 cm is designed. The flight controller Pixhawk 4 (Holybro Pixhawk 4, https://holybro.com/) and two Raspberry Pi 4 are equipped with an optical flow sensor (Hex Hereflow, https://hexuav.com/), an ultrasonic sensor (MaxBotix XL-MaxSonar MB1260, https://maxbotix.com/), Logitech C920 and C310 cameras, and other components to realize the drone that shoots images before it reaches the shelf autonomously, as shown in Figure 1.

Figure 1.

The picture of the unmanned aerial vehicle.

The control system of the UAV is composed of Raspberry Pi 4-1 and the flight controller, and it communicates with the flight controller through the MAVLink (Micro Air Vehicle Link) protocol in the DroneKit drone development library. Attitude estimation is the key parameter to the control system. Throttle control measures the distance to the ground through the optical flow sensor and maintains it at 1.2~1.5 m through the Alt-Hold flight mode. Through the C920 camera on the gimbal capturing the image of the trajectory line, the offsets of the roll angle and the yaw angle are calculated through image processing and then used by proportional–integral–derivative (PID) control to correct the orientation of the drone.

During the flight, the UAV continuously recognizes the trajectory line at the same time. If the trajectory line has not been recognized and the distance between the ultrasonic measurement and the distance between the UAV and the shelf is greater than 70 cm, the UAV will be given a forward pitch angle. If the trajectory line is recognized and the distance between the drone and the shelf is between 60 and 70 cm, the UAV will enter hovering mode. Before the drone arrives on the shelf, Raspberry Pi 4-1 will send a command to Raspberry Pi 4-2 through a Bluetooth interface. After receiving the command, it will turn on the C310 camera to shoot images via file transfer protocol to transfer the photos to the back-end computer.

PID control is widely used in industrial control []. Nowadays, many people also apply PID control to UAVs, such as Lin [], Yang [], and Zhang []. The PID control is expressed as (1),

where , and represent the proportional gain, integral gain, and derivative gain, respectively.

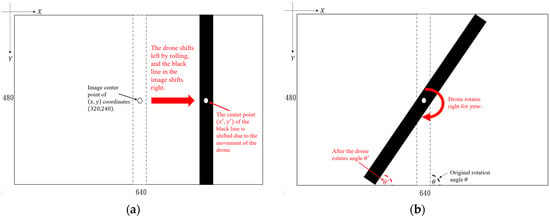

In order to keep the drone moving horizontally, it is also necessary to consider issues such as pitch, roll, and yaw angles under different conditions and time. To carry out the roll and yaw control of the UAV by using a shot image with a size of 640 × 480, so that the center coordinates of the image are set to 320 × 280, the minimum bounding rectangle (MBR) is used to obtain the black line image coordinates and calculate the distance between it and the image center coordinates, which is the roll offset distance. The PID control will correct the roll offset of the drone, as shown in Figure 2a. When the black line rotates an angle of θ′, the yaw offset can be known, as shown in Figure 2b. As the distance between the ultrasonic sensor and the object is about 60~70 cm, the UAV will stop executing the pitch command.

Figure 2.

The roll control (a) and yaw control (b) of the UAV.

3. Convolutional Neural Network

A traditional convolutional neural network (CNN) consists of one or more convolutional layers, pooling layers, and fully connected layers (FCs). A convolutional layer adopts an image as its input and is formed by a plurality of different, generally 3 × 3, filters (called convolution kernels) to conduct convoluting operation and then produce different features []. The feature map is the output to the next layer through a rectified linear unit (ReLU) for the activation function described as

After down sampling, the outputs are inputted to the fully connected layer for classification [,]. The feature map is flattened in the fully connected layer, and the weights are updated in the neural network through backpropagation. The softmax function is used in the output of the fully connected layer and adjust the range of each element in the output vector to locate between 0 and 1, with the sum of all elements being 1. It is described as

The loss function is an important part of the CNN. It is used to measure the inconsistency between the predicted value and the actual label. The robustness of the model increases as the value of the loss function decreases. A cross-entropy algorithm is used to calculate the loss function (4),

where is the number of samples, is the number of classifications, and is the actual label. The parameters are updated by

where l is the number of iterations, is the gradient of the loss function, and is the learning rate.

The expression for calculating the loss function gradient is as follows:

where means all outputs and is one of them.

The regularization strategy is to limit the ability of the model through penalty, which adds a norm constraint to the loss function []. L1 regularization refers to the sum of the absolute values of each element in the weight vector w. Its expression is as follows,

where L is a function with absolute value sign, λ is the regularization coefficient and η is the learning rate. L2 regularization is the squared root of the sum of squares of the various parameters of the model. Its expression is as follows,

Compared with the L2 constraint, the L1 constraint can produce a sparser model. Under a smaller w, it will be reduced to 0, thereby achieving the function of feature selection. That is, L1 has sparsity and can be used for feature selection, while L2 can improve computational efficiency and has an analytical expression, so both can prevent model overfitting.

4. Experimental Results

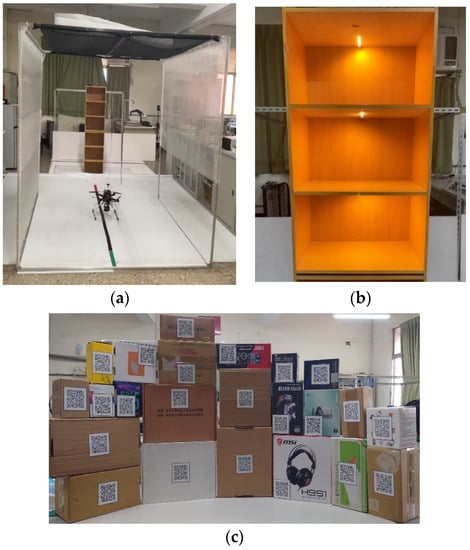

In order to explore how to use UAVs to take pictures of packages on the shelves, read the QR code information on the packages, and realize the function of warehouse management, the experimental environment was set up by laying a white canvas with a length of 300 cm and a width of 350 cm on the indoor floor and pasting a black line with a length of 300 cm and a width of 4.5 cm and a red line with a length of 30 cm in the middle of the canvas as the end point, as shown in Figure 3a. In order to ensure the safety of indoor flight, a flight frame was built, and the top and left and right sides of the frame were covered with black gauze and white gauze. It consisted of two identical three-layer cabinets, 160 cm high, 40 cm wide, and 30 cm deep. A yellow light strip was installed in the cabinet to provide supplementary light in the cabinet, as shown in Figure 3b. Different packages are shown in Figure 3c. The size of the QR code on the package was 7 cm in length and width, an outer frame for identification was added on the outside, and the information in the QR code was the website of Southern Taiwan University of Science and Technology and the website of a random shopping website.

Figure 3.

Experimental environment and package placement angle. (a) UAV flight field and frame, (b) fill light in the cabinet, and (c) package type.

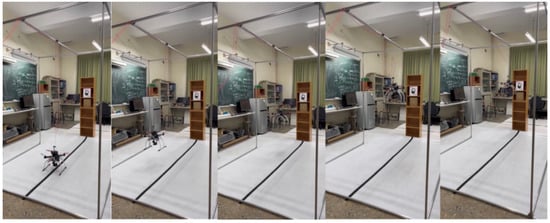

Figure 4 shows the five segmented screens of the video of the experiment process during the actual flight. Firstly, the drone was placed on the black line on the ground and prepares to take off, then the drone started to take off and increase its altitude. The optical sensor mounted on the UAV was continuously detecting the height with the ground until the height of the drone was raised to 1.2 to 1.5 m. In addition, the UAV kept moving forward during these processes and detected the distance from the shelf by the ultrasonic sensor. Finally, the flight controller stopped advancing command and kept hovering.

Figure 4.

UAV flight process.

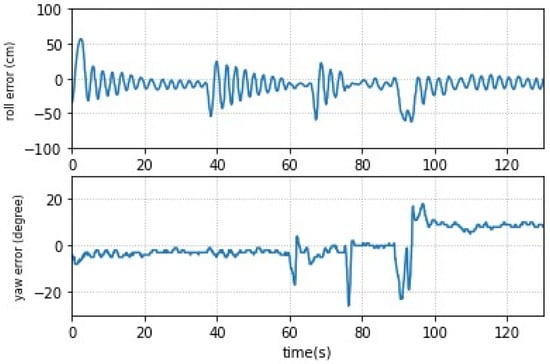

The experimental results of the UAV flight by PID control are shown in Figure 5. The UAV was placed randomly on the black line on the ground in the beginning, so there would be errors initially. The UAV successfully corrected back to the predetermined trajectory within 20 s after takeoff. In order to test the roll correction ability, the external force disturbance was applied to the UAV during the flight at the instances of 40, 70, and 90 s. There was a deviation due to disturbance, but it was successfully corrected later. Similarly, the external force for yaw control was applied to the UAV during the flight at the instances of 60, 75, and 90 s.

Figure 5.

UAV roll and yaw control by PID control.

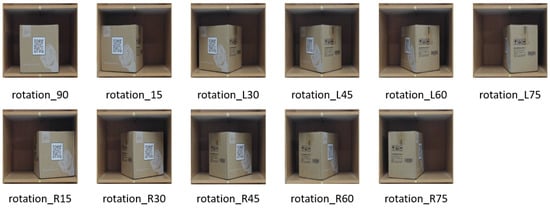

In order to establish the image of the placement angle of the package, the shelf mezzanine was used as the baseline, the middle of the baseline as the origin, and a protractor was used to assist in drawing 90°, left 15, left 30, left 45, left 60, and left 75. The same was true for the right side of the base line. When creating training and test data for the package placement angle, the package needs to be placed based on the base line and the origin, as shown in Figure 6.

Figure 6.

Various rotated packages.

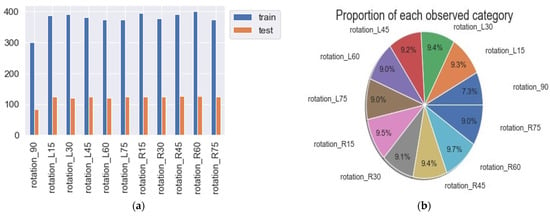

Next, a method that can identify the placement angle of the package is needed. CNN generally uses multiple convolutional layers to extract object features, but the angle of the package has no identifiable features in the image. Therefore, the difference in surface size and outline when the package is placed at different angles may define the package placement angle. Through different shooting heights, distances, offsets, rotations, lighting, contrast, and cropping, more training data can be generated for CNN. In order to make the similarity between the training image and the test image not too high, random deletion is considered, and then 20% of the images are selected as the validation set, and the rest are used as the training set. Figure 7a shows the number of training and validation data for training CNN, and Figure 7b shows the data ratio of images from each angle.

Figure 7.

Data volume and distribution. (a) Training and testing data volume; (b) data volume distribution.

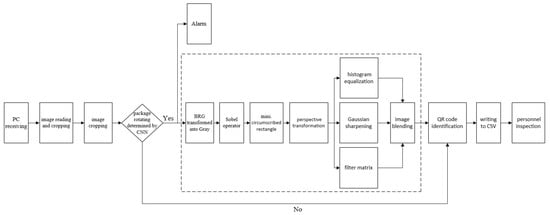

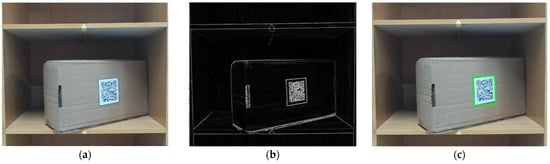

After creating a package image, the QR recognition will then be processed, as shown in Figure 8. After the back-end computer receives the image, it will crop the environment image directly and then predict whether the package is rotated through CNN. If there is no rotation, the pyzbar decoder is used to read the package information directly from the image. Otherwise, the QR code image will be corrected back to the forward image through perspective transformation. Then, histogram equalization and other technologies are used to strengthen the outline of the QR code profile, so that the pyzbar decoder can read the package message from it.

Figure 8.

Identification process of a QR code.

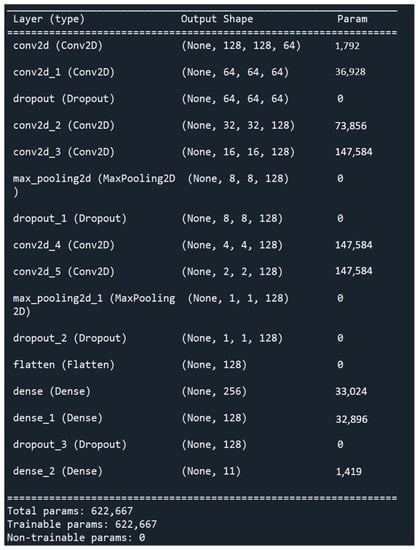

The computer specifications used in the experiment are: Intel Core i9-9900K 3.6 GHz, GeForce RTX 3080 10G, CUDA (Compute Unified Device Architecture), and the number of training data is 8704. The CNN architecture used in this paper, as shown in Figure 9, contains 6 convolutional layers, 2 pooling layers, 4 Dropout layers, 2 fully connected layers, and 1 SoftMax layer. The Dropout layer is a regularization method that can effectively prevent over-fitting effects. It randomly discards the hidden layer neurons with the probability we set (25%), so that the model does not rely too much on specific neurons, so as to increase the generalization ability of the model, thereby achieving the purpose of preventing overfitting. We add a layer of Dropout before the SoftMax layer because Dropout uses the Bernoulli function to randomly give 0 and 1, so we use 10% Dropout to verify the robustness of the system and then classify 11 kinds of package placement angles through the SoftMax layer. In order to optimize the results of the model, hyperparameters are adjusted and optimized for better performance.

Figure 9.

CNN architecture used in this paper.

Hyperparameters refer to parameters that are not learned by the model itself, such as the number of hidden layers of the model, optimizer, number of discarded layers, learning rate, regularization coefficient, batch size, training cycle, etc. These parameters can affect the performance and behavior of the model and can be divided into grid search and random search. The grid search will adjust the learning rate, batch size, and regularization coefficient of the model, and different optimization functions are applied for comparison, such as SGD, RMSprop, Adadelta, Adam, Adamax, and Adagrad. Then, the adjusted model is subjected to 10-fold cross-validation to confirm performance and accuracy. K-fold cross-validation divides the original data set into K non-overlapping subsets, and then each subset takes turns as the test set, and the remaining K-1 subsets are used as the training set. The model is trained K times and tested K times, and finally, the average value of the K test results are obtained as an estimate of the model performance. The results are shown in Table 1. Regularization coefficients L1 and L2 of 0.001 reduce the problem of overfitting. The accuracy of various optimization functions in the table is about 90%, and only Adamax has the probability of the accuracy rate less than 10%. For Adagrad, its convergence speed is too slow, and the accuracy rate is lower than other methods. So, these two methods can be excluded.

Table 1.

The accuracy of various optimization functions confirmed by 10-fold cross-validation.

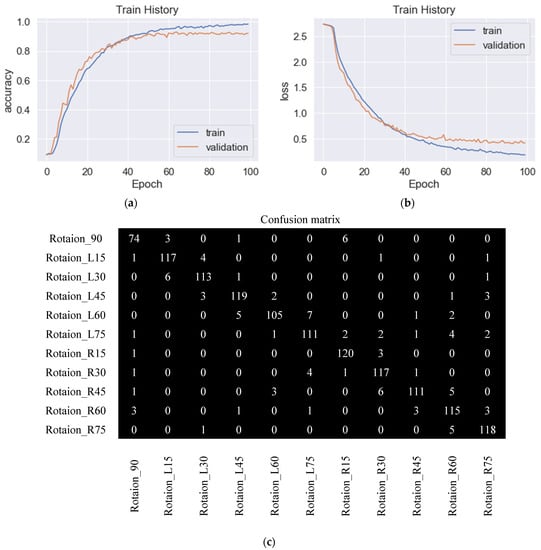

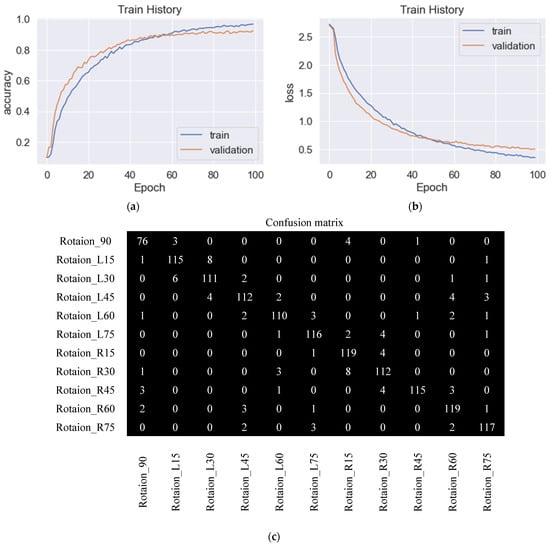

The model trained by SDG had an accuracy rate of 92.0% in identification, and the parameters were set as learning rate 0.01, learning rate decay rate 0.000001 after each parameter update, movement amount 0.9, and Nesterov momentum included. The SDG training process is shown in Figure 10a. The figure describes the accuracy rate during the training and verification process. Figure 10b shows the loss during SDG training, and the accuracy rate did not change much at Epoch 70. Figure 10c is the confusion matrix in which we can find that each angle had mutual confusion.

Figure 10.

Training and validation of the proposed CNN, performed using SDG. (a) Training and validation accuracy with 92.0% accuracy, (b) training and validation loss, and (c) confusion matrix.

Table 2 displays the test results of the trained model with 20 test photos for various angles. It was found that three photos were wrongly identified in the recognition of L45° and L60°. The time required to identify a single image of the package excluded the image pre-processing time was about 0.023 s. So, the recognition of the trained model with 20 test photos for one displacement angle needs 0.478 s, about 20 times of 0.023 s, in Table 2. We used time.time() in the python time module to calculate time.

Table 2.

Rotation recognition results of 20 packages tested using the SGD method.

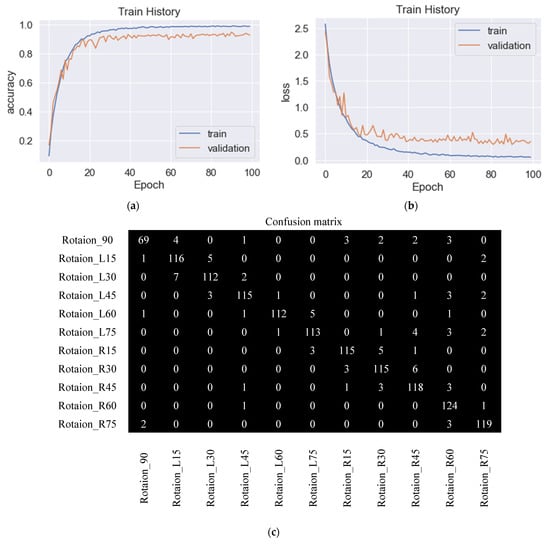

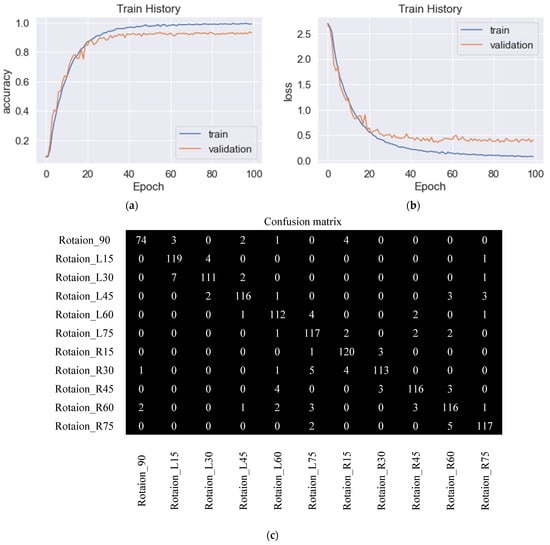

For various optimization functions, their results of accuracy and loss are compared. With the parameters set as learning rate 0.001, the moving average of gradient squared decay rate 0.9, and positive learning rate decay value after each parameter update, Figure 11a shows an identification accuracy rate of 92.8% by RMSprop. Figure 11b shows the loss in process by RMSprop, and there were still slight shocks at the 70th and 90th training stages. Figure 11c shows the confusion matrix. There were situations where various angles were confused with each other, especially at 90° as the most obvious, and a total of 15 images were misidentified for L15°, L45°, R15°, R30°, R45°, and R60°. Figure 12a shows the training process of Adadelta, with an identification accuracy rate of 92.9%. The parameters were set to learning rate 1.0, the moving average of the gradient squared decay rate 0.95, and positive learning rate decay after each parameter update. Figure 12b shows the loss values, and the accuracy rate did not change much from the 50th training stage. The confusion matrix is shown in Figure 12c; there were mutual confusions in various angles, especially at R60° most obviously, and 12 images were misrecognized, which were 90°, L45°, L60°, L75°, R45°, and R75°, respectively. The accuracy rate of 92.3% by Adam is shown in Figure 13a. From the 100th training stage, the accuracy rate did not change much. The parameters were set as the learning rate 0.001, the exponential decay rates of the first-order momentum and the second-order momentum β1, β2 0.9 and 0.999, respectively, and the positive learning rate decay value after each parameter update was also required. The loss process is shown in Figure 13b. The confusion matrix is shown in Figure 13c, and there were various angles in the confusion matrix that still confused each other, especially at L45°. A total of 13 images were wrongly identified as L30°, L60°, R60°, and R75°. It was found that Adam has the slowest convergent rate.

Figure 11.

Training and validation of the proposed CNN, performed using RMSprop. (a) Training and validation accuracy with 92.9% accuracy, (b) training and validation loss, and (c) confusion matrix.

Figure 12.

Training and validation of the proposed CNN, performed using Adadelta. (a) Training and validation accuracy with 92.9% accuracy, (b) training and validation loss, and (c) confusion matrix.

Figure 13.

Training and validation of the proposed CNN, performed using Adam. (a) Training and validation accuracy with 92.3% accuracy, (b) training and validation loss, and (c) confusion matrix.

Next, 20 test photos for each performance of the model trained by the above-mentioned various optimization functions is shown in Table 3. The recognition on L60° had a higher error rate, followed by L15°.

Table 3.

Recognition ability of different optimization functions from various angles.

From the above, CNN can be used to identify the placement angle of the package. Here, we can apply the angle identification by CNN in two ways:

First: When CNN recognizes that the package is rotated (that is, the placement angle is not 90°), it means that the package may not be placed properly. At this time, the system sends out a warning message to warn the management personnel to arrange it to prevent the package falling from the shelf and causing accidental or unnecessary damage.

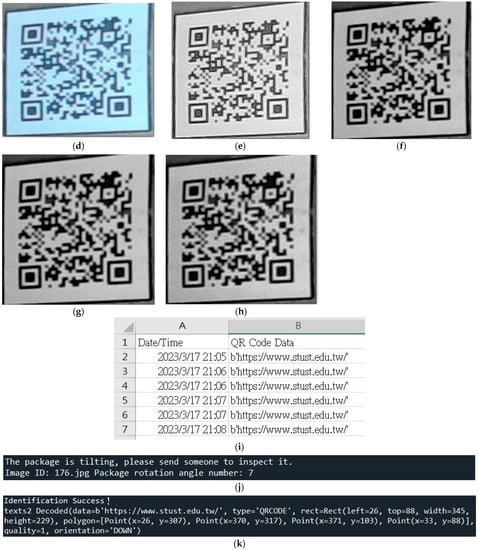

Second: Since the QR code is printed on the interested surface of the package, it can be inferred that when the package is rotated, that is, the QR code image must also produce a rotation of the same angle. In order to improve the efficiency of the overall work and reduce unnecessary collisions that may be caused by drones looking for the best shooting position of the QR code in the region of interest, it can be used as a decision whether to process the image again. If the CNN recognizes that it is 90°, it means that the package and the QR code image are not rotated, and the UAV can directly read the QR code at this time. Otherwise, the image will be processed to assist in reading the QR code (Figure 14a). The processing process is shown as follows. Firstly, the Sobel edge operation is performed on the original image (Figure 14b) to make the QR code image obvious, and then the QR code image is framed by the minimum circumscribed rectangle (Figure 14c). After that, the skewed QR code image is pulled back to the forward image by perspective transformation for easy reading (Figure 14d). In order to improve the recognition rate, the pictures after the perspective transformation are subjected to various image enhancements, such as filter matrix (Figure 14e), Gaussian sharpening (Figure 14f), histogram equalization (Figure 14g), and image blending (Figure 14h), as shown in Figure 14. Figure 14i shows writing the read information to CSV; predicting the package is not 90° with a rotation and listing a warning is shown in Figure 14j; and Figure 14k shows the success message of QR code reading.

Figure 14.

QR code image correction and reading. (a) Original image, (b) Sobel operator, (c) QR code framed by the minimum circumscribed rectangle, (d) perspective transformation, (e) mask matrix, (f) Gaussian blur (sharpening), (g) histogram equalization, (h) image overlay, (i) write the read information to CSV, (j) predict that the package is not 90° with a rotation and list a warning, and (k) success message.

5. Conclusions

This study intends to develop a warehouse management system based on UAVs to scan the QR codes printed on the packages. The UAV includes a positive cross quadcopter drone with a wheelbase of 38 cm and a variety of sensors and components, such as flight controllers, single-board computers, optical flow sensors, ultrasonic sensors and cameras, etc. The UAV is stabilized by PID control and takes pictures of the package as it reaches ahead of the shelf autonomously. Through convolutional neural networks (CNN), the placement angle of the package can be accurately identified. Optimization functions, such as SGD, RMSprop, Adadelta, and Adam, are applied to improve the system performance. In terms of the results, two cases should be considered. If the angle is 90°, the QR code can be read directly. Otherwise, image processing is required to assist in reading the QR code, including the use of Sobel edge computing, minimum circumscribed rectangle, perspective transformation, image enhancement, and other steps. Furthermore, the system will issue a warning to prompt the management personnel to arrange the package to avoid accidents or losses and use the placement angle for the QR code map to improve the drone’s work efficiency and reduce unnecessary collisions between the drone and package.

The UAV system can also be applied in monitoring the safety of the warehouse interior and conducting inventory counts. In addition, through the combination of the UAV system and IoT technology, real-time monitoring and management of the entire logistics process can be realized, thereby improving the operational efficiency and quality of logistics.

Author Contributions

Conceptualization, M.-S.W.; methodology, S.-Y.Y.; software, S.-Y.Y. and H.-C.J.; validation, M.-S.W. and S.-Y.Y.; formal analysis, H.-C.J.; investigation, M.-S.W.; resources, S.-Y.Y.; data curation, H.-C.J.; writing—original draft preparation, S.-Y.Y.; writing—review and editing, M.-S.W., S.-Y.Y. and C.-Y.C.; visualization, C.-Y.C.; supervision, M.-S.W.; project administration, S.-Y.Y. and C.-Y.C.; funding acquisition, M.-S.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Higher Education Sprout Project of Ministry of Education, Taiwan and National Science and Technology Council, grant number MOST 111-2221-E-218-024-.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lin, H.-T. Implementation of Industry 4.0 Production Line for Intelligent Manufacture-A Case Study on Production Test Line of Notebooks. Master’s Thesis, Tunghai University, Taichung City, Taiwan, 2015. [Google Scholar]

- Diversified Development of UAV Applications. Available online: https://www.moea.gov.tw/MNS/ietc/bulletin/Bulletin.aspx?kind=51&html=1&menu_id=33594&bull_id=9494 (accessed on 29 March 2023). (In Chinese)

- Tsai, Y.-S. Intelligent Warehouse with Mobile Vehicle for Maker Education. Master’s Thesis, Cheng Shiu University, Kaohsiung, Taiwan, 2021. [Google Scholar]

- Occupational Safety and Health Administration (OSHA). Warehouse Safety: A Practical Guide to Preventing Warehouse Incidents and Injuries; Occupational Safety and Health Administration (OSHA): Washington, DC, USA, 2019.

- National Institute for Occupational Safety and Health (NIOSH). Preventing Injuries and Deaths of Workers Who Operate or Work Near Forklifts; National Institute for Occupational Safety and Health (NIOSH): Washington, DC, USA, 2013.

- Fernández-Caramés, T.M. Towards an Autonomous Industry 4.0 Warehouse: A UAV and Blockchain-Based System for Inventory and Traceability Applications in Big Data-Driven Supply Chain Management. Sensors 2019, 19, 2394. [Google Scholar] [CrossRef] [PubMed]

- Ong, J.H.; Sanchez, A.; Williams, J. Multi-UAV System for Inventory Automation. In Proceedings of the 2007 1st Annual RFID Eurasia, Istanbul, Turkey, 5–6 September 2007; pp. 1–6. [Google Scholar]

- Chin, J.-Y. Research and Design of UAV Implement Automated RFID Inventory System. Master’s Thesis, National Chin-Yi University of Technology, Taichung City, Taiwan, 2017. [Google Scholar]

- Huang, C.-Y. Designing of a RFID-enabled Drone system for Autonomous Stock Counting Processes. Master’s Thesis, National Chin-Yi University of Technology, Taichung City, Taiwan, 2017. [Google Scholar]

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Hough, P.V.C. Method and Means for Recognizing Complex Patterns. U.S. Patent No 3,069,654, 18 December 1962. [Google Scholar]

- Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; pp. 10–5244. [Google Scholar]

- Li, S. Rotation invariance neural network. arXiv 2017, arXiv:1706.05534. [Google Scholar]

- Li, J.; Yang, Z.; Liu, H.; Cai, D. Deep rotation equivariant network. Neurocomputing 2018, 290, 26–33. [Google Scholar] [CrossRef]

- Marcos, D.; Volpi, M.; Komodakis, N.; Tuia, D. Rotation equivariant vector field networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5048–5057. [Google Scholar]

- Kanezaki, A.; Matsushita, Y.; Nishida, Y. Rotationnet: Joint object categorization and pose estimation using multiviews from unsupervised viewpoints. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5010–5019. [Google Scholar]

- Shi, B.; Bai, S.; Zhou, Z.; Bai, X. Deeppano: Deep panoramic representation for 3-d shape recognition. IEEE Signal. Process. Lett. 2015, 22, 2339–2343. [Google Scholar] [CrossRef]

- Li, M.; Zhang, J.; Song, J.; Li, Z.; Lu, S. A Clinical-Oriented Non-Severe Depression Diagnosis Method Based on Cognitive Behavior of Emotional Conflict. IEEE Trans. Comput. Soc. Syst. 2023, 10, 131–141. [Google Scholar] [CrossRef]

- Zhao, H.; Zhang, P.; Zhang, R.; Yao, R.; Wu, D. A novel performance trend prediction approach using ENBLS with GWO. Meas. Sci. Technol. 2022, 34, 025018. [Google Scholar] [CrossRef]

- Zhao, H.; Yang, X.; Chen, B.; Chen, H.; Wu, D. Bearing fault diagnosis using transfer learning and optimized deep belief network. Meas. Sci. Technol. 2022, 33, 1239–1253. [Google Scholar] [CrossRef]

- Pei, Y.; Huang, Y.; Zou, Q.; Zhang, X.; Wang, S. Effects of image degradation and degradation removal to CNN-based image classification. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1239–1253. [Google Scholar] [CrossRef] [PubMed]

- Suganthi, M.; Sathiaseelan, J.G.R. An exploratory of hybrid techniques on deep learning for image classification. In Proceedings of the 2020 4th International Conference on Computer, Communication and Signal Processing (ICCCSP), Chennai, India, 28–29 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–4. [Google Scholar]

- Hasan, M.S. An application of pre-trained CNN for image classification. In Proceedings of the 2017 20th International Conference of Computer and Information Technology (ICCIT), Dhaka, Bangladesh, 22–24 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6. [Google Scholar]

- Jalal, A.; Kamal, S.; Kim, D. Shape and motion features approach for activity tracking and recognition from kinect video camera. In Proceedings of the 2015 IEEE 29th International Conference on Advanced Information Networking and Applications Workshops, Gwangju, Republic of Korea, 24–27 March 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 445–450. [Google Scholar]

- Chen, D.; Song, Z.; Guo, J.; Yang, S.; Yao, H. CNN-based image small-angle rotation angle estimation. In Proceedings of the 2022 IEEE 24th International Workshop on Multimedia Signal Processing (MMSP), Shanghai, China, 26–28 September 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–5. [Google Scholar]

- Sultana, R.; Adams, R.D.; Yan, Y.; Yanik, P.M.; Tanaka, M.L. Trash and recycled material identification using convolutional neural networks (CNN). In Proceedings of the 2020 SoutheastCon, Raleigh, NC, USA, 28–29 March 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–8. [Google Scholar]

- Lai, C.; Liu, T.; Mei, R.; Wang, H.; Hu, S. The cloud images classification based on convolutional neural network. In Proceedings of the 2019 International Conference on Meteorology Observations (ICMO), Chengdu, China, 28–31 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–4. [Google Scholar]

- Wang, D. A comparative research on clothing images classification based on neural network models. In Proceedings of the 2020 IEEE 2nd International Conference on Civil Aviation Safety and Information Technology (ICCASIT), Weihai, China, 14–16 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 495–499. [Google Scholar]

- Ibrahim, M.S.; Hamid, S.A.; Muhammad, Z.; Leh, N.A.M.; Abdullah, S.; Bakar, S.J.A.; Osman, M.K.; Fadhlullah, S.Y. CNN Comparative Study for Apple Quality Classification. In Proceedings of the 2022 IEEE 12th International Conference on Control System, Computing and Engineering (ICCSCE), Penang, Malaysia, 21–22 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 53–58. [Google Scholar]

- Viratkapan, V.; Mruetusatorn, S. Classification of House Categories Using Convolutional Neural Networks (CNN). In Proceedings of the 2022 7th International Conference on Business and Industrial Research (ICBIR), Bangkok, Thailand, 19–20 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 509–514. [Google Scholar]

- Li, Q.; Hu, S.; Shimasaki, K.; Ishii, I. An Active Multi-Object Ultrafast Tracking System with CNN-Based Hybrid Object Detection. Sensors 2023, 23, 4150. [Google Scholar] [CrossRef] [PubMed]

- Lin, J.-B. Design of the Fuzzy Autonomous Flight Control for Hexacopter. Master’s Thesis, Department of Electrical Engineering, National Ilan University, Yilan City, Taiwan, 2021. [Google Scholar]

- Lin, T.-L. Unmanned Aerial Vehicle Position and Sensor Fusion. Master’s Thesis, Department of Mechanical Engineering, National Yang Ming Chiao Tung University, Hsinchu City, Taiwan, 2018. [Google Scholar]

- Yue, J.; Zhang, Q. Research Progress and Key Technologies of Micro Quad-Rotor UAVs. Electron. Opt. Amp. Control. 2012, 17, 46–52. [Google Scholar]

- Wan, J.; Ai, J.-L. Design and Simulation of Fuzzy Control System of UAV Formation Flight. J. Syst. Simul. 2009, 21, 4183–4189. [Google Scholar]

- Chen, P.-J.; Yang, S.-Y.; Wang, C.-S.; Muslikhin, M.; Wang, M.-S. Development of a Chinese chess robotic system for the elderly using convolutional neural networks. Sustainability 2020, 12, 3980. [Google Scholar] [CrossRef]

- Gui, W.; Jun, T. Chinese chess recognition algorithm based on computer vision. In Proceedings of the 26th Chinese Control and Decision Conference, Changsha, China, 31 May–2 June 2014. [Google Scholar]

- Rosenblatt, F. The Perceptron: A Probabilistic Model for Information Storage and Organization in the Brain. Psychol. Rev. 1958, 65, 386–408. [Google Scholar] [CrossRef] [PubMed]

- Easy to Understand the Principle of Regularization L1 and L2. Available online: https://zhuanlan.zhihu.com/p/309373613 (accessed on 29 March 2023). (In Chinese).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).