Efficient Detection and Tracking of Human Using 3D LiDAR Sensor

Abstract

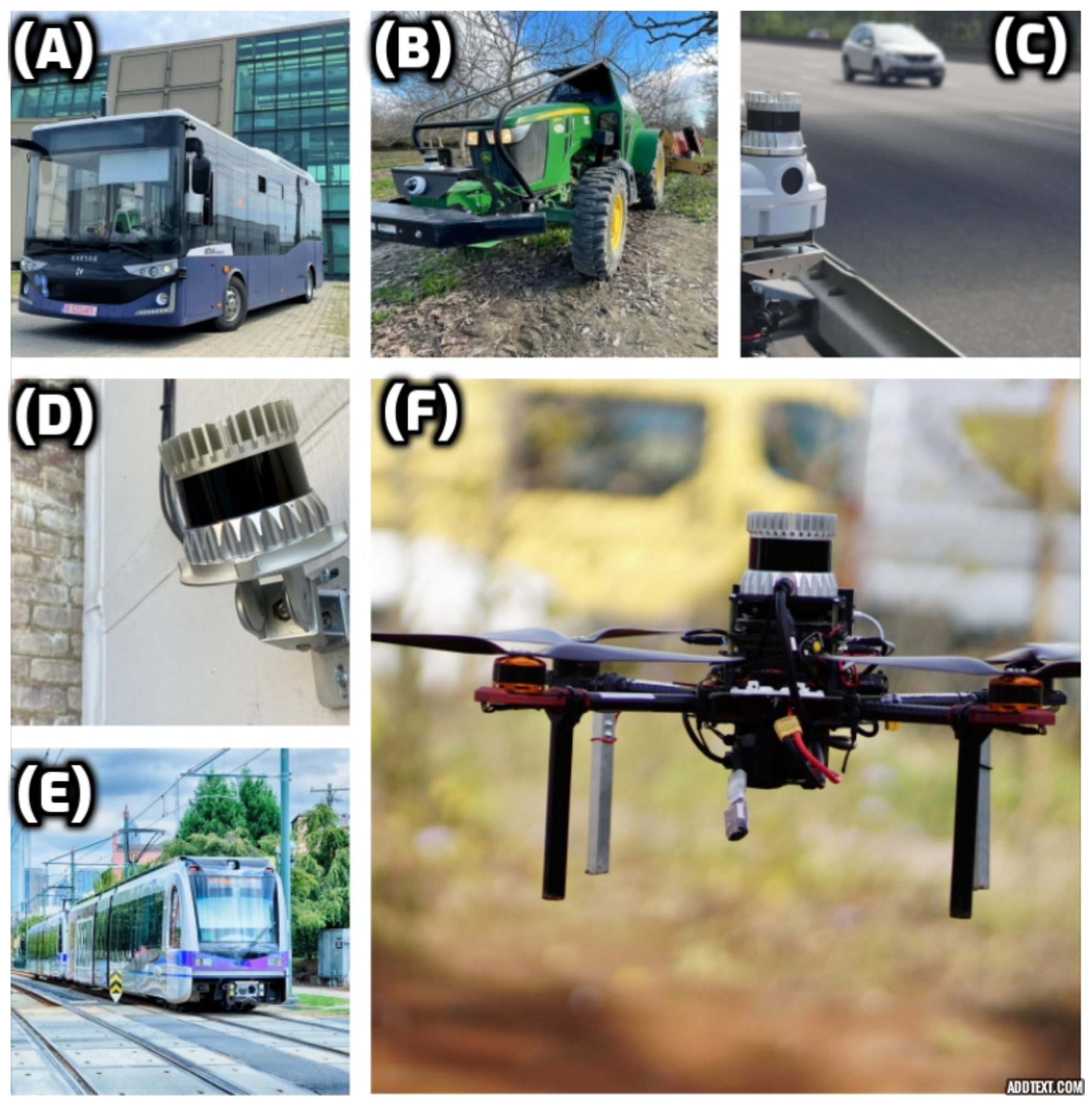

1. Introduction

2. Implementation and Challenges

2.1. Restricted Vertical Field of View

2.2. Extreme Pose Change and Occlusion

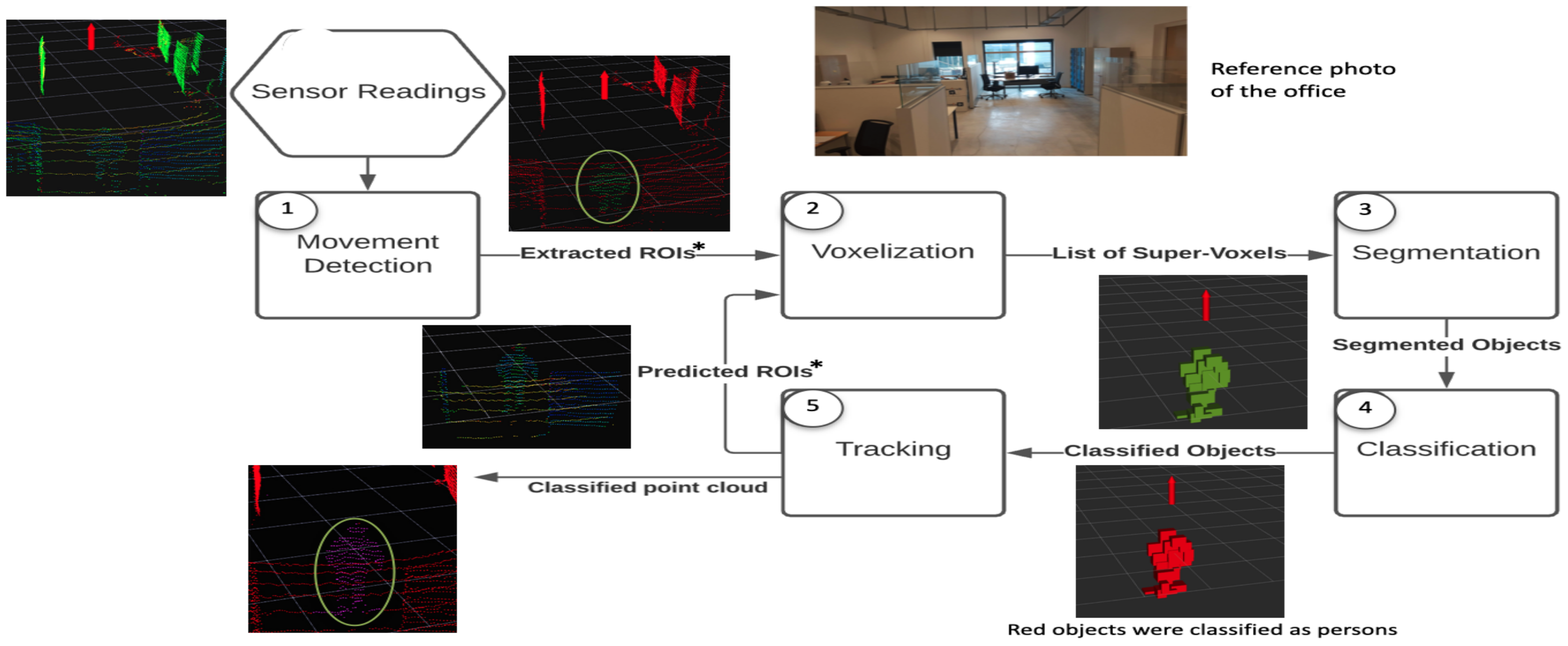

3. Architecture and Implementation

3.1. Movement Detection

3.2. Voxelization and Segmentation

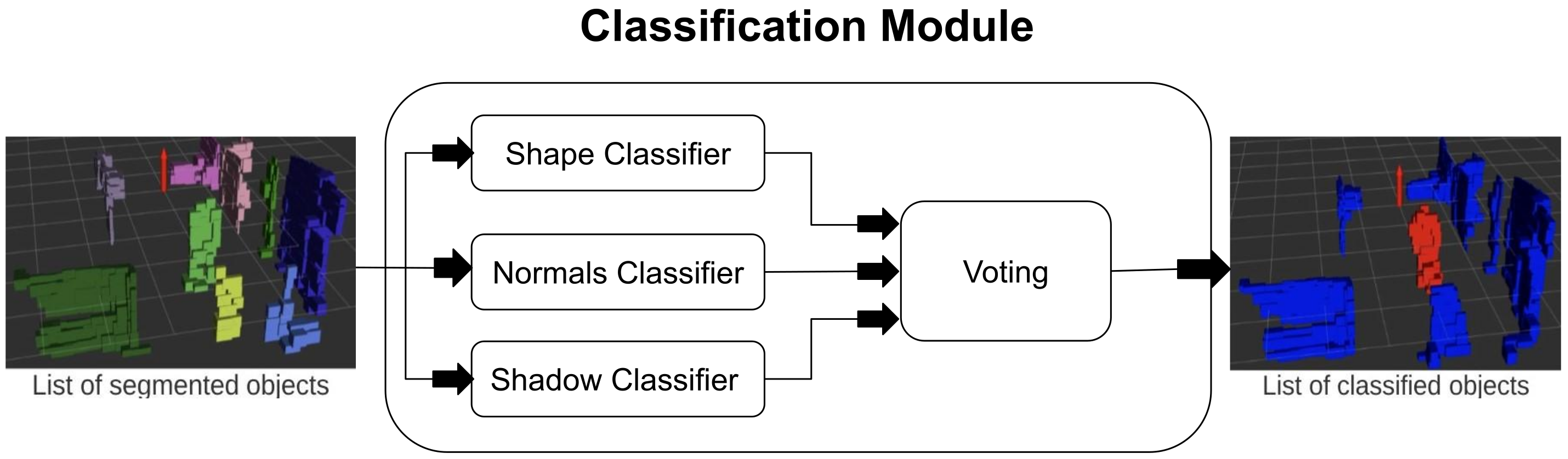
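As a generic illustration of voxel-based segmentation, each point can be mapped to the cubic cell of a uniform grid that contains it, and each group of touching occupied cells can then be treated as one segment. The following Python code is a minimal sketch, not the authors' implementation. The function names `voxelize` and `segment`, the uniform cubic grid, the voxel size and the 26-neighbour connectivity rule are all illustrative assumptions.

```python
import math
from collections import defaultdict, deque
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def voxelize(points: Sequence[Point], voxel_size: float) -> Dict[Voxel, List[int]]:
    """Assign each point index to the integer voxel (uniform cubic grid, assumed) that contains it."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    grid: Dict[Voxel, List[int]] = defaultdict(list)
    for i, (x, y, z) in enumerate(points):
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        grid[key].append(i)
    return dict(grid)


def segment(grid: Dict[Voxel, List[int]]) -> List[List[int]]:
    """Group occupied voxels into 26-connected components (assumed rule); return point indices per segment."""
    offsets = [(dx, dy, dz)
               for dx in (-1, 0, 1) for dy in (-1, 0, 1) for dz in (-1, 0, 1)
               if (dx, dy, dz) != (0, 0, 0)]
    seen = set()
    segments: List[List[int]] = []
    for start in grid:
        if start in seen:
            continue
        # Breadth-first search over neighbouring occupied voxels.
        seen.add(start)
        queue = deque([start])
        members: List[int] = []
        while queue:
            vx, vy, vz = queue.popleft()
            members.extend(grid[(vx, vy, vz)])
            for dx, dy, dz in offsets:
                neighbour = (vx + dx, vy + dy, vz + dz)
                if neighbour in grid and neighbour not in seen:
                    seen.add(neighbour)
                    queue.append(neighbour)
        segments.append(sorted(members))
    return segments
```

With a 0.5 m grid, three points stacked along the z axis near the origin fall into a single segment, while two points near (3, 3, 0) form a second one. Coarser voxels merge nearby people; finer voxels split one person into several segments.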

3.3. Classification

3.3.1. Shape Classifier

3.3.2. Normals Classifier

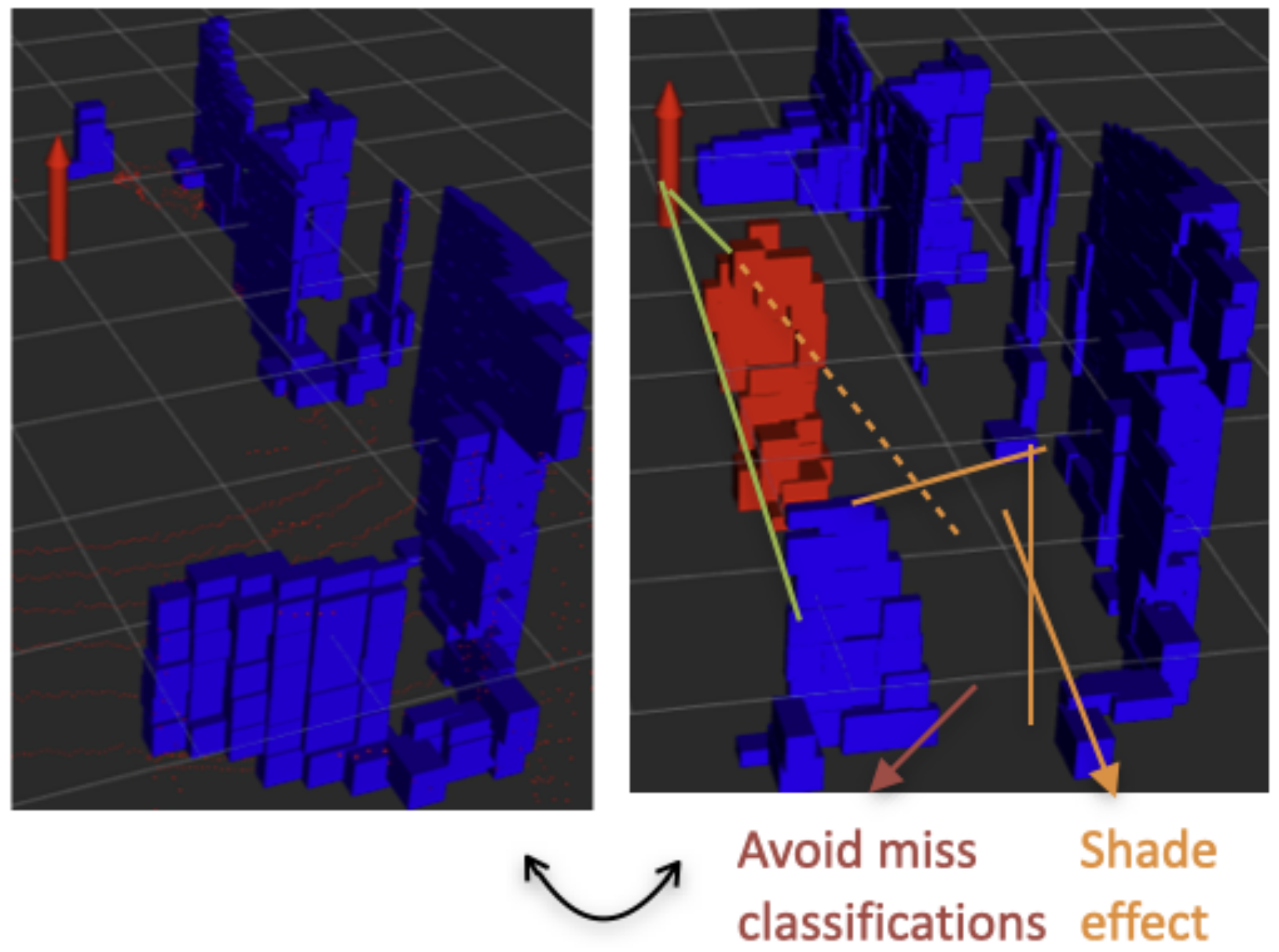
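Surface normals are commonly estimated per point by principal component analysis of a small neighbourhood: the normal is the eigenvector of the neighbourhood covariance matrix with the smallest eigenvalue. The following Python code is a minimal sketch of that standard technique, assuming NumPy and an already-selected neighbourhood. It is not necessarily the feature computation used by the normals classifier here, and the function name `estimate_normal` is hypothetical.

```python
import numpy as np


def estimate_normal(neighbors: np.ndarray) -> np.ndarray:
    """Estimate a unit surface normal from an (N, 3) array of neighbouring points via PCA."""
    if neighbors.ndim != 2 or neighbors.shape[1] != 3 or neighbors.shape[0] < 3:
        raise ValueError("need an (N, 3) array with N >= 3")
    centered = neighbors - neighbors.mean(axis=0)
    cov = centered.T @ centered / len(neighbors)
    # eigh returns eigenvalues of a symmetric matrix in ascending order,
    # so column 0 is the direction of least variance, i.e. the normal.
    _, eigenvectors = np.linalg.eigh(cov)
    normal = eigenvectors[:, 0]
    return normal / np.linalg.norm(normal)
```

For points on a horizontal floor patch the result is close to (0, 0, ±1). The sign is arbitrary, so features built on it typically use the absolute value of a component.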

3.3.3. Shade Classifier

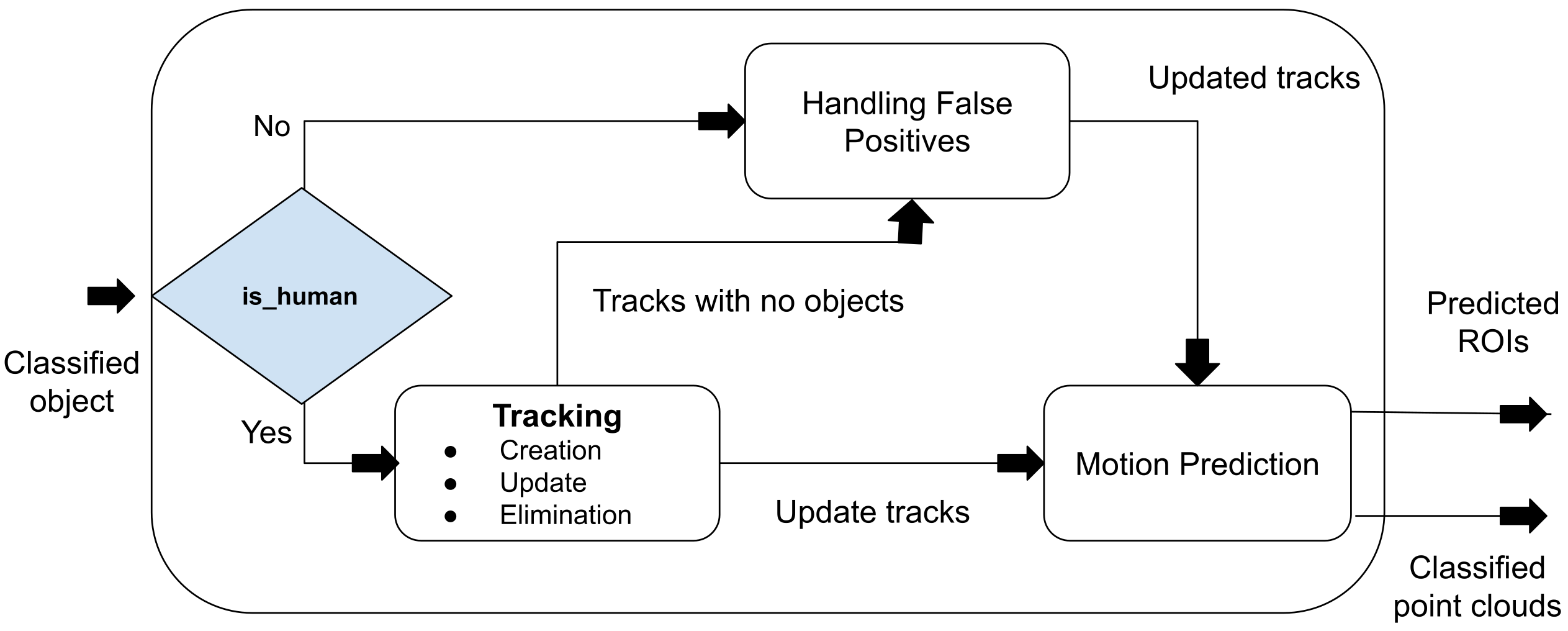

3.4. Tracking

3.4.1. Track Creation and Elimination

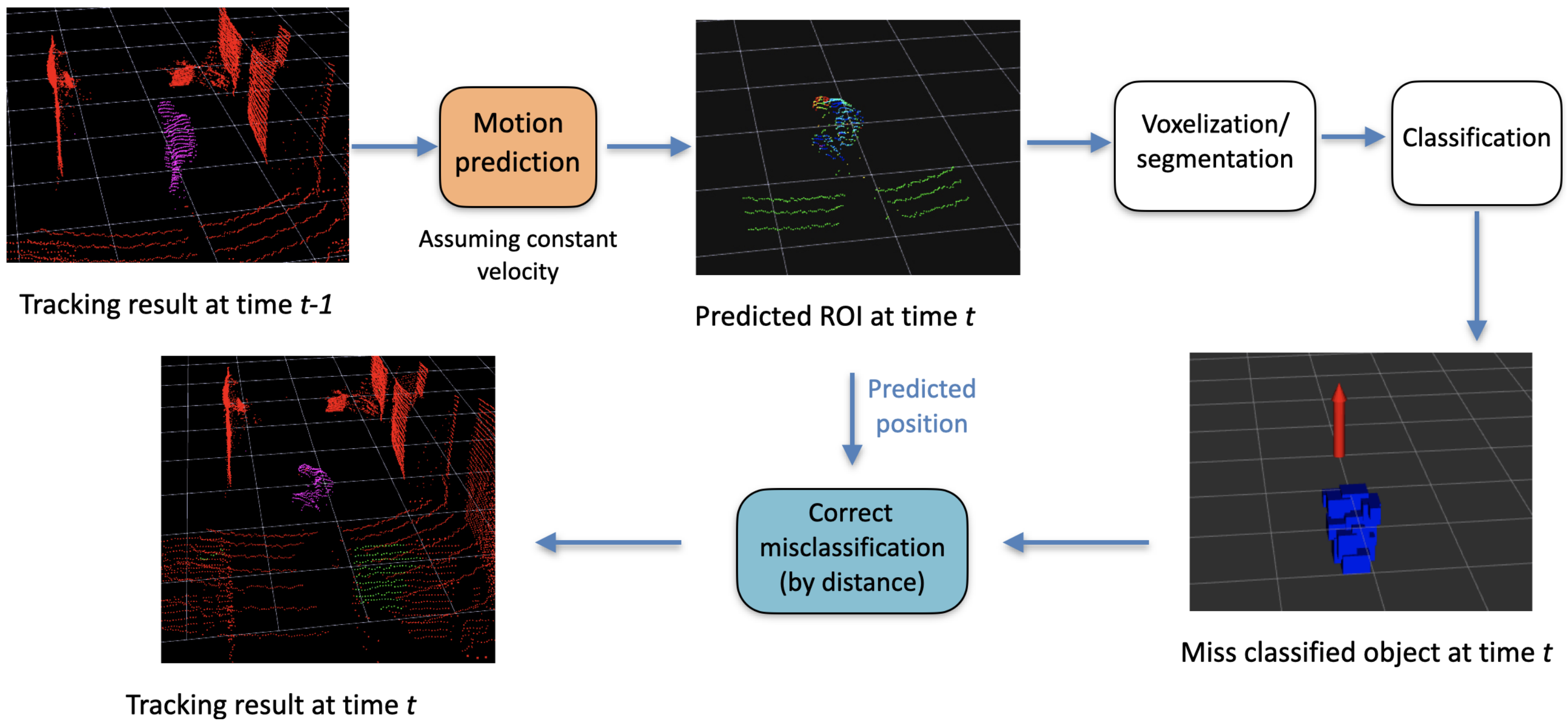

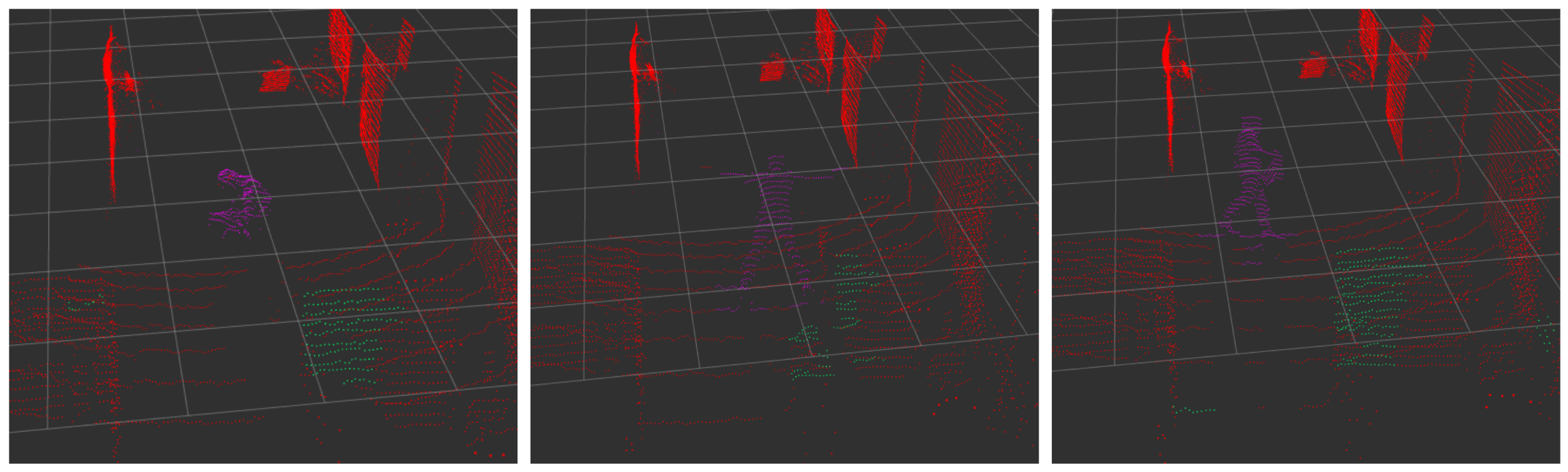

3.4.2. Motion Prediction
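A constant-velocity Kalman filter is a common choice for predicting where a tracked person will be in the next LiDAR frame. The following Python code is a generic sketch under that assumption, with a planar state [px, py, vx, vy], a frame interval `dt`, and a hypothetical process-noise scale `q` (white-acceleration model). It is not necessarily the predictor used in this framework.

```python
import numpy as np


def constant_velocity_predict(x: np.ndarray, P: np.ndarray, dt: float, q: float = 0.1):
    """Kalman prediction step for state [px, py, vx, vy] under an assumed constant-velocity model."""
    # State transition: position advances by velocity * dt, velocity is unchanged.
    F = np.array([[1.0, 0.0, dt, 0.0],
                  [0.0, 1.0, 0.0, dt],
                  [0.0, 0.0, 1.0, 0.0],
                  [0.0, 0.0, 0.0, 1.0]])
    # Discretised white-acceleration process noise, scaled by q.
    G = np.array([[0.5 * dt ** 2, 0.0],
                  [0.0, 0.5 * dt ** 2],
                  [dt, 0.0],
                  [0.0, dt]])
    Q = q * G @ G.T
    x_pred = F @ x
    P_pred = F @ P @ F.T + Q
    return x_pred, P_pred
```

For example, a person at the origin moving at (1.0, 0.5) m/s is predicted at (0.1, 0.05) m after a 0.1 s frame. The covariance grows, which widens the gate used to match the next detection.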

3.4.3. Track Update
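If motion prediction uses a constant-velocity Kalman filter (an assumption, see the state [px, py, vx, vy]), a track can then be corrected with the position of the detection associated with it. The following Python code is a minimal, generic Kalman update sketch. The position-only measurement model, the noise scale `r` and the function name `position_update` are illustrative assumptions, not details confirmed for this framework.

```python
import numpy as np


def position_update(x_pred: np.ndarray, P_pred: np.ndarray, z: np.ndarray, r: float = 0.05):
    """Kalman update of state [px, py, vx, vy] with a 2D position measurement z (assumed model)."""
    H = np.array([[1.0, 0.0, 0.0, 0.0],
                  [0.0, 1.0, 0.0, 0.0]])  # only position is observed
    R = r * np.eye(2)
    y = z - H @ x_pred                     # innovation
    S = H @ P_pred @ H.T + R               # innovation covariance
    # Kalman gain K = P H^T S^-1, computed with solve() instead of an explicit inverse.
    K = np.linalg.solve(S, H @ P_pred).T
    x = x_pred + K @ y
    P = (np.eye(4) - K @ H) @ P_pred
    return x, P
```

With equal prediction and measurement uncertainty, the updated position lands halfway between the prediction and the detection. A larger `r` keeps the track closer to the prediction, which can help through brief occlusions.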

4. Validation and Results

5. Conclusion and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. Pierzchała, M.; Giguère, P.; Astrup, R. Mapping forests using an unmanned ground vehicle with 3D LiDAR and graph-SLAM. Comput. Electron. Agric. 2018, 145, 217–225.

2. Zhu, Q.; Chen, L.; Li, Q.; Li, M.; Nüchter, A.; Wang, J. 3D LIDAR point cloud based intersection recognition for autonomous driving. In Proceedings of the 2012 IEEE Intelligent Vehicles Symposium, Madrid, Spain, 3–7 June 2012; pp. 456–461.

3. VanVoorst, B.R.; Hackett, M.; Strayhorn, C.; Norfleet, J.; Honold, E.; Walczak, N.; Schewe, J. Fusion of LIDAR and video cameras to augment medical training and assessment. In Proceedings of the 2015 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), San Diego, CA, USA, 14–16 September 2015; pp. 345–350.

4. Torres, P.; Marques, H.; Marques, P. Pedestrian Detection with LiDAR Technology in Smart-City Deployments–Challenges and Recommendations. Computers 2023, 12, 65.

5. Wang, Z.; Menenti, M. Challenges and Opportunities in Lidar Remote Sensing. Front. Earth Sci. 2021, 9, 713129.

6. Ibrahim, M.; Akhtar, N.; Jalwana, M.A.A.K.; Wise, M.; Mian, A. High Definition LiDAR mapping of Perth CBD. In Proceedings of the 2021 Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, Australia, 29 November–1 December 2021; pp. 1–8.

7. Lou, L.; Li, Y.; Zhang, Q.; Wei, H. SLAM and 3D Semantic Reconstruction Based on the Fusion of Lidar and Monocular Vision. Sensors 2023, 23, 1502.

8. Zou, Q.; Sun, Q.; Chen, L.; Nie, B.; Li, Q. A Comparative Analysis of LiDAR SLAM-Based Indoor Navigation for Autonomous Vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 6907–6921.

9. Gritti, A.P.; Tarabini, O.; Guzzi, J.; Di Caro, G.A.; Caglioti, V.; Gambardella, L.M.; Giusti, A. Kinect-based people detection and tracking from small-footprint ground robots. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 4096–4103.

10. Liu, J.; Liu, Y.; Zhang, G.; Zhu, P.; Chen, Y.Q. Detecting and tracking people in real time with RGB-D camera. Pattern Recognit. Lett. 2015, 53, 16–23.

11. Jafari, O.H.; Mitzel, D.; Leibe, B. Real-time RGB-D based people detection and tracking for mobile robots and head-worn cameras. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 5636–5643.

12. Wang, B.; Rodríguez Florez, S.A.; Frémont, V. Multiple obstacle detection and tracking using stereo vision: Application and analysis. In Proceedings of the 2014 13th International Conference on Control Automation Robotics Vision (ICARCV), Singapore, 10–12 December 2014; pp. 1074–1079.

13. Yan, Z.; Duckett, T.; Bellotto, N. Online learning for 3D LiDAR-based human detection: Experimental analysis of point cloud clustering and classification methods. Auton. Robot. 2020, 44, 147–164.

14. Imad, M.; Doukhi, O.; Lee, D.J. Transfer Learning Based Semantic Segmentation for 3D Object Detection from Point Cloud. Sensors 2021, 21, 3964.

15. Azim, A.; Aycard, O. Detection, classification and tracking of moving objects in a 3D environment. In Proceedings of the 2012 IEEE Intelligent Vehicles Symposium, Madrid, Spain, 3–7 June 2012; pp. 802–807.

16. Ravi Kiran, B.; Roldao, L.; Irastorza, B.; Verastegui, R.; Suss, S.; Yogamani, S.; Talpaert, V.; Lepoutre, A.; Trehard, G. Real-time Dynamic Object Detection for Autonomous Driving using Prior 3D-Maps. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

17. Vu, T.D.; Aycard, O. Laser-based detection and tracking moving objects using data-driven Markov chain Monte Carlo. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation (ICRA '09), Kobe, Japan, 12–17 May 2009; pp. 3800–3806.

18. Brščić, D.; Kanda, T.; Ikeda, T.; Miyashita, T. Person Tracking in Large Public Spaces Using 3-D Range Sensors. IEEE Trans. Hum.-Mach. Syst. 2013, 43, 522–534.

19. Chavez-Garcia, R.O.; Aycard, O. Multiple Sensor Fusion and Classification for Moving Object Detection and Tracking. IEEE Trans. Intell. Transp. Syst. 2016, 17, 525–534.

20. Schöler, F.; Behley, J.; Steinhage, V.; Schulz, D.; Cremers, A.B. Person tracking in three-dimensional laser range data with explicit occlusion adaption. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 1297–1303.

21. Spinello, L.; Luber, M.; Arras, K.O. Tracking people in 3D using a bottom-up top-down detector. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 1304–1310.

22. Vaquero, V.; del Pino, I.; Moreno-Noguer, F.; Solà, J.; Sanfeliu, A.; Andrade-Cetto, J. Dual-Branch CNNs for Vehicle Detection and Tracking on LiDAR Data. IEEE Trans. Intell. Transp. Syst. 2021, 22, 6942–6953.

23. Aijazi, A.K.; Checchin, P.; Trassoudaine, L. Segmentation et classification de points 3D obtenus à partir de relevés laser terrestres: Une approche par super-voxels. In Proceedings of the RFIA 2012 (Reconnaissance des Formes et Intelligence Artificielle), Lyon, France, 24–27 January 2012.

24. Zhou, Y.; Tuzel, O. VoxelNet: End-to-end learning for point cloud based 3D object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4490–4499.

25. Shi, S.; Jiang, L.; Deng, J.; Wang, Z.; Guo, C.; Shi, J.; Wang, X.; Li, H. PV-RCNN++: Point-Voxel Feature Set Abstraction With Local Vector Representation for 3D Object Detection. Int. J. Comput. Vis. 2023, 131, 531–551.

26. Li, Z.; Wang, F.; Wang, N. LiDAR R-CNN: An efficient and universal 3D object detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 5160–5169.

27. Ye, M.; Xu, S.; Cao, T. HVNet: Hybrid voxel network for LiDAR based 3D object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1526–1535.

28. Yang, Z.; Zhou, Y.; Chen, Z.; Ngiam, J. 3D-MAN: 3D multi-frame attention network for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 10240–10249.

29. Xu, Y.; Tong, X.; Stilla, U. Voxel-based representation of 3D point clouds: Methods, applications, and its potential use in the construction industry. Autom. Constr. 2021, 126, 103675.

30. Häselich, M.; Jöbgen, B.; Wojke, N.; Hedrich, J.; Paulus, D. Confidence-based pedestrian tracking in unstructured environments using 3D laser distance measurements. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 4118–4123.

31. Yan, Z.; Duckett, T.; Bellotto, N. Online learning for human classification in 3D LiDAR-based tracking. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, Canada, 24–28 September 2017; pp. 864–871.

| Case | Description |
|---|---|
| Case 1 | A single person walks in front of the camera. |
| Case 2 | A single person walks in front of the camera with occlusion. |
| Case 3 | A single person performs different, complex poses. |
| Case 4 | Two persons walk in front of the camera. |
| Case 5 | Three persons walk in front of the camera with occlusion and perform complex poses. |
| Case 6 | Two persons walk in front of the camera, and the tracker follows only one of them. |

| Case | Precision (%) | Recall (%) | F1 Score (%) | Frequency |
|---|---|---|---|---|
| Case 1 | 95.37 | 95.12 | 95.25 | 9.01 |
| Case 2 | 90.18 | 72.24 | 80.22 | 7.84 |
| Case 3 | 94.88 | 94.49 | 94.68 | 8.61 |
| Case 4 | 93.65 | 95.16 | 94.40 | 6.80 |
| Case 5 with normal walk | 96.41 | 78.83 | 86.74 | 8.11 |
| Case 5 with complex poses | 97.51 | 91.35 | 94.33 | 7.71 |
| Case 5 with occlusion | 91.23 | 87.93 | 89.55 | 7.37 |
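The F1 score in each row is the harmonic mean of precision P and recall R, F1 = 2PR / (P + R). As a consistency check, the following Python code recomputes it from the tabulated precision and recall. Every row agrees with the reported F1 to within 0.01, which is consistent with rounding. The dictionary `RESULTS` and the function name `f1_score` are ours; the numbers are copied from the table.

```python
# (precision %, recall %, reported F1 %) copied from the per-case results table.
RESULTS = {
    "Case 1": (95.37, 95.12, 95.25),
    "Case 2": (90.18, 72.24, 80.22),
    "Case 3": (94.88, 94.49, 94.68),
    "Case 4": (93.65, 95.16, 94.40),
    "Case 5 with normal walk": (96.41, 78.83, 86.74),
    "Case 5 with complex poses": (97.51, 91.35, 94.33),
    "Case 5 with occlusion": (91.23, 87.93, 89.55),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; defined as 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


for case, (p, r, reported) in RESULTS.items():
    print(f"{case}: computed {f1_score(p, r):.2f}, reported {reported:.2f}")
```

The harmonic mean is dominated by the smaller of the two values, which is why Case 2 (recall 72.24%) has a markedly lower F1 than its precision alone would suggest.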

| Method | Precision (%) | Recall (%) | F-Score (%) |
|---|---|---|---|
| Online Learning [31] | 73.4 | 96.50 | 83.01 |
| Proposed Framework | 93.7 | 87.3 | 90.24 |
| AC + Proposed Framework [13] | 63.12 | 96.50 | 76.23 |

| Method | Average Bandwidth (MB/s) | Mean Bandwidth (MB) | Min Bandwidth (MB) | Max Bandwidth (MB) |
|---|---|---|---|---|
| Online Learning [31] | 16.59 | 1.69 | 1.67 | 1.72 |
| Proposed Framework | 9.06 | 0.90 | 0.90 | 0.91 |
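The relative savings implied by the bandwidth table can be made explicit: the proposed framework's average bandwidth is about 45% lower than that of [31], and its mean value about 47% lower. The following Python code sketches that arithmetic using only the tabulated numbers. The function name `relative_reduction` and the dictionary keys are ours.

```python
# Values copied from the bandwidth table.
BASELINE = {"average_mb_per_s": 16.59, "mean_mb": 1.69}  # Online Learning [31]
PROPOSED = {"average_mb_per_s": 9.06, "mean_mb": 0.90}   # Proposed Framework


def relative_reduction(baseline: float, proposed: float) -> float:
    """Fraction by which `proposed` is smaller than `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - proposed) / baseline


for key in BASELINE:
    pct = 100 * relative_reduction(BASELINE[key], PROPOSED[key])
    print(f"{key}: {pct:.1f}% lower")
```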

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gómez, J.; Aycard, O.; Baber, J. Efficient Detection and Tracking of Human Using 3D LiDAR Sensor. Sensors 2023, 23, 4720. https://doi.org/10.3390/s23104720
