Spatial–Temporal Self-Attention Enhanced Graph Convolutional Networks for Fitness Yoga Action Recognition

Abstract

1. Introduction

- (1) Given RGB videos, the 2D coordinates of human joints in the video frames are estimated by pose estimation algorithms to obtain the human skeleton data. The RGB videos can be collected from video websites or RGB cameras.

- (2) The 3D coordinates of human joints can be captured directly by depth sensors to obtain the human skeleton data.
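As a rough illustration of the two acquisition routes above, skeleton data can be held as a sequence of frames, each frame a list of joints, each joint a coordinate tuple with two values (pose estimation on RGB) or three (depth sensor). The type names, the helper `skeleton_shape`, and the toy coordinates below are assumptions made for this sketch and do not come from the paper.

```python
from typing import List, Tuple

Joint = Tuple[float, ...]             # (x, y) or (x, y, z)
Frame = List[Joint]                   # one entry per joint
SkeletonSequence = List[Frame]        # one entry per video frame


def skeleton_shape(seq: SkeletonSequence) -> Tuple[int, int, int]:
    """Return (frames, joints, channels) after checking the layout is regular."""
    if not seq or not seq[0]:
        raise ValueError("empty skeleton sequence")
    num_joints = len(seq[0])
    channels = len(seq[0][0])
    for frame in seq:
        if len(frame) != num_joints:
            raise ValueError("frames have differing joint counts")
        for joint in frame:
            if len(joint) != channels:
                raise ValueError("joints have differing coordinate counts")
    return len(seq), num_joints, channels


# Route (1): 2D joints from a pose estimator run on RGB frames (toy values).
seq_2d = [
    [(0.50, 0.20), (0.48, 0.35), (0.52, 0.35)],
    [(0.51, 0.21), (0.47, 0.36), (0.53, 0.36)],
]

# Route (2): 3D joints read directly from a depth sensor (toy values).
seq_3d = [
    [(0.10, 1.60, 2.00), (0.05, 1.40, 2.00), (0.15, 1.40, 2.00)],
]

shape_2d = skeleton_shape(seq_2d)     # two frames, three joints, two channels
shape_3d = skeleton_shape(seq_3d)     # one frame, three joints, three channels
```

Either route ends in the same frames-by-joints-by-channels layout, so a downstream model only needs to know the channel count.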

- (1)

- A new skeleton-based action recognition method for fitness yoga, the spatial–temporal self-attention enhanced graph convolutional network (STSAE-GCN) is proposed to better recognize fitness yoga actions.

- (2)

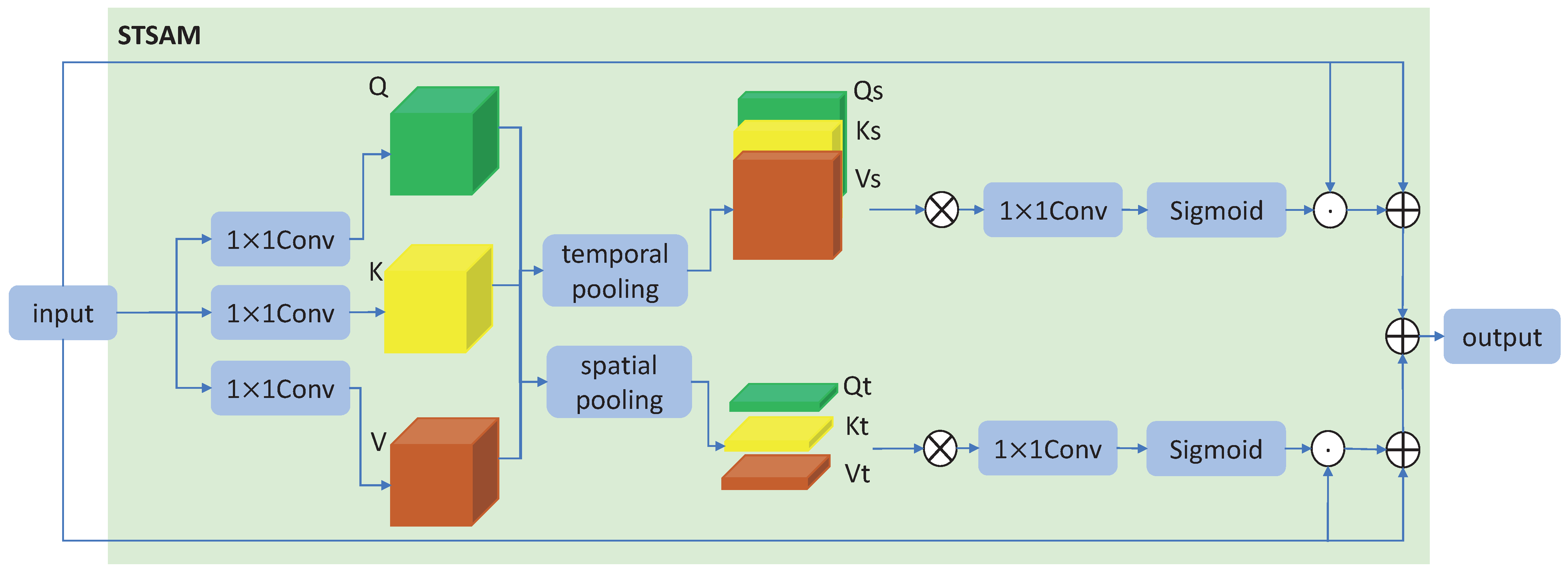

- The spatial–temporal self-attention module (STSAM) that can improve the spatial–temporal expression ability of the model is presented. The STSAM has the characteristics of plug-and-play and can be applied in other skeleton-based action recognition methods.

- (3)

- A dataset Yoga10 of 960 videos is built. The STSAE-GCN proposed in this research achieves 93.83% recognition accuracy on Yoga10, and outperforms state-of-the-art methods. The Yoga10 dataset can provide a unified verification basis for future fitness yoga action recognition.
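To make the idea of attending along the spatial and temporal axes of skeleton features concrete, here is a minimal sketch of generic scaled dot-product self-attention applied either across the joints of each frame or across the frames of each joint. It is not the authors' STSAM: the identity query/key/value projections, the function names, and the choice to leave the two branches uncombined are all assumptions made for illustration.

```python
import math
from typing import List

Vector = List[float]
Features = List[List[Vector]]   # features[t][v] is the feature vector of joint v in frame t


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: List[Vector]) -> List[Vector]:
    """Scaled dot-product self-attention with identity Q/K/V (an assumption)."""
    dim = len(tokens[0])
    out = []
    for query in tokens:
        scores = [
            sum(a * b for a, b in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        out.append(
            [sum(w * tok[i] for w, tok in zip(weights, tokens)) for i in range(dim)]
        )
    return out


def spatial_attention(x: Features) -> Features:
    """Within each frame, every joint attends to all joints of that frame."""
    return [self_attention(frame) for frame in x]


def temporal_attention(x: Features) -> Features:
    """For each joint, every frame attends to all frames of that joint."""
    num_frames, num_joints = len(x), len(x[0])
    per_joint = [
        self_attention([x[t][v] for t in range(num_frames)])
        for v in range(num_joints)
    ]
    # Transpose back to the frames-first layout.
    return [[per_joint[v][t] for v in range(num_joints)] for t in range(num_frames)]


# Toy input: 2 frames, 1 joint, 2 feature channels.
toy = [[[1.0, 0.0]], [[0.0, 1.0]]]
spatial_out = spatial_attention(toy)     # one joint per frame, so nothing is mixed
temporal_out = temporal_attention(toy)   # the two frames of the joint are mixed
```

Whether the two branches are applied one after the other or combined in some other way is a separate design choice that this sketch deliberately leaves open.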

2. Related Work

2.1. Skeleton-Based Action Recognition

2.2. Attention Mechanism

2.3. Yoga Pose Detection

3. Method

3.1. Adaptive Graph Convolutional Networks

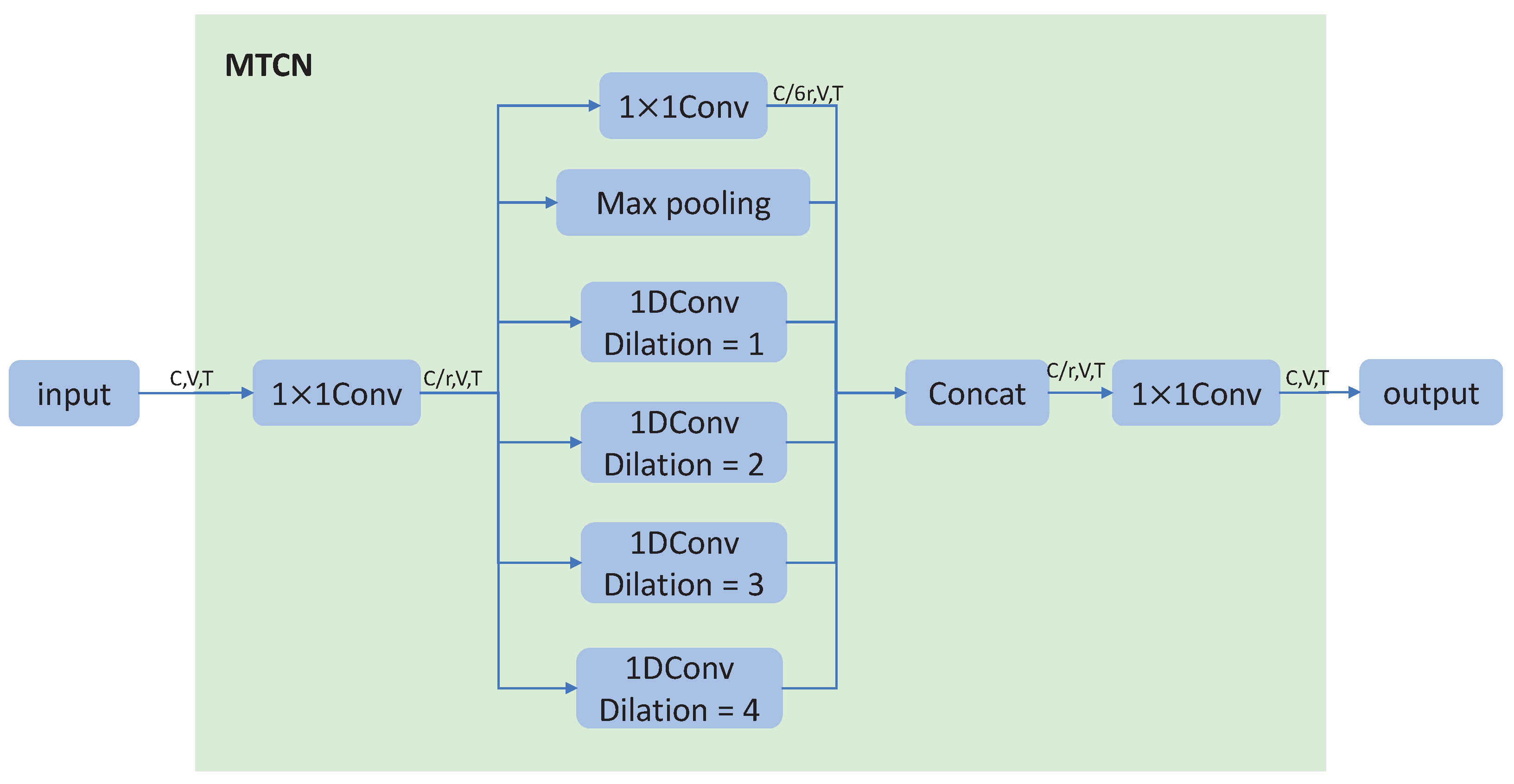

3.2. Multi-Branch Temporal Convolutional Networks

3.3. Spatial–Temporal Self-Attention Module

4. Experiments and Discussion

4.1. Dataset

4.2. Ablation Study

4.3. Comparison with State-of-the-Art Methods

4.4. Plug-and-Play Spatial–Temporal Self-Attention Module

4.5. Discussion

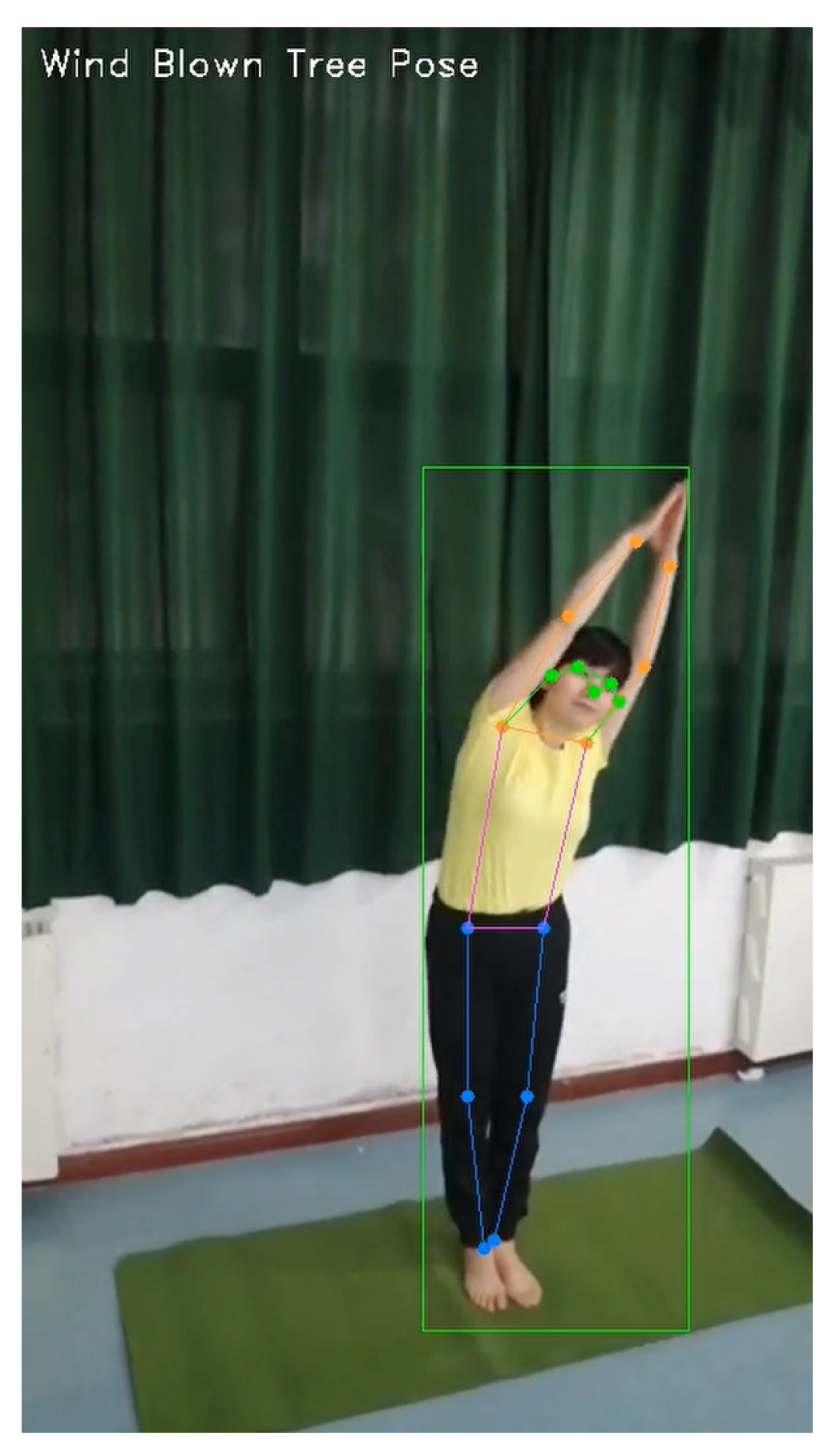

4.6. Practical Application of Model

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Poppe, R. A survey on vision-based human action recognition. Image Vis. Comput. 2010, 28, 976–990.

2. Weinland, D.; Ronfard, R.; Boyer, E. A survey of vision-based methods for action representation, segmentation and recognition. Comput. Vis. Image Underst. 2011, 115, 224–241.

3. Ladjailia, A.; Bouchrika, I.; Merouani, H.F.; Harrati, N.; Mahfouf, Z. Human activity recognition via optical flow: Decomposing activities into basic actions. Neural Comput. Appl. 2020, 32, 16387–16400.

4. Lin, J.; Gan, C.; Han, S. TSM: Temporal shift module for efficient video understanding. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 7083–7093.

5. Li, Y.; Ji, B.; Shi, X.; Zhang, J.; Kang, B.; Wang, L. TEA: Temporal excitation and aggregation for action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 909–918.

6. Wang, Z.; She, Q.; Smolic, A. Action-net: Multipath excitation for action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13214–13223.

7. Feichtenhofer, C.; Fan, H.; Malik, J.; He, K. Slowfast networks for video recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 6202–6211.

8. Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. Adv. Neural Inf. Process. Syst. 2014, 27, 381.

9. Feichtenhofer, C.; Pinz, A.; Zisserman, A. Convolutional two-stream network fusion for video action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1933–1941.

10. Zhu, W.; Lan, C.; Xing, J.; Zeng, W.; Li, Y.; Shen, L.; Xie, X. Co-occurrence feature learning for skeleton based action recognition using regularized deep LSTM networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

11. Li, M.; Chen, S.; Chen, X.; Zhang, Y.; Wang, Y.; Tian, Q. Actional-structural graph convolutional networks for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3595–3603.

12. Shi, L.; Zhang, Y.; Cheng, J.; Lu, H. Two-stream adaptive graph convolutional networks for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12026–12035.

13. Li, B.; Dai, Y.; Cheng, X.; Chen, H.; Lin, Y.; He, M. Skeleton based action recognition using translation-scale invariant image mapping and multi-scale deep CNN. In Proceedings of the 2017 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Hong Kong, China, 10–14 July 2017; pp. 601–604.

14. Yan, S.; Xiong, Y.; Lin, D. Spatial temporal graph convolutional networks for skeleton-based action recognition. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

15. Zhang, P.; Lan, C.; Xing, J.; Zeng, W.; Xue, J.; Zheng, N. View adaptive neural networks for high performance skeleton-based human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1963–1978.

16. Lee, I.; Kim, D.; Kang, S.; Lee, S. Ensemble deep learning for skeleton-based action recognition using temporal sliding LSTM networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1012–1020.

17. Wang, L.; Zhao, X.; Liu, Y. Skeleton feature fusion based on multi-stream LSTM for action recognition. IEEE Access 2018, 6, 50788–50800.

18. Chen, Y.; Zhang, Z.; Yuan, C.; Li, B.; Deng, Y.; Hu, W. Channel-wise topology refinement graph convolution for skeleton-based action recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 13359–13368.

19. Shi, L.; Zhang, Y.; Cheng, J.; Lu, H. Skeleton-based action recognition with multi-stream adaptive graph convolutional networks. IEEE Trans. Image Process. 2020, 29, 9532–9545.

20. Liu, Z.; Zhang, H.; Chen, Z.; Wang, Z.; Ouyang, W. Disentangling and unifying graph convolutions for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 143–152.

21. Duan, H.; Zhao, Y.; Chen, K.; Lin, D.; Dai, B. Revisiting skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2969–2978.

22. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

23. Strudel, R.; Garcia, R.; Laptev, I.; Schmid, C. Segmenter: Transformer for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 7262–7272.

24. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 213–229.

25. Malik, N.u.R.; Sheikh, U.U.; Abu-Bakar, S.A.R.; Channa, A. Multi-View Human Action Recognition Using Skeleton Based-FineKNN with Extraneous Frame Scrapping Technique. Sensors 2023, 23, 2745.

26. Duan, H.; Wang, J.; Chen, K.; Lin, D. PYSKL: Towards Good Practices for Skeleton Action Recognition. arXiv 2022, arXiv:2205.09443.

27. Rajendran, A.K.; Sethuraman, S.C. A Survey on Yogic Posture Recognition. IEEE Access 2023, 11, 11183–11223.

28. Rector, K.; Vilardaga, R.; Lansky, L.; Lu, K.; Bennett, C.L.; Ladner, R.E.; Kientz, J.A. Design and real-world evaluation of Eyes-Free yoga: An Exergame for blind and Low-Vision exercise. ACM Trans. Access. Comput. (TACCESS) 2017, 9, 1–25.

29. Wanjun, Y.; Chong, C.; Rui, C. Yoga action recognition based on STF-ResNet. In Proceedings of the 2023 IEEE 3rd International Conference on Power, Electronics and Computer Applications (ICPECA), Shenyang, China, 29–31 January 2023; pp. 556–560.

30. Chen, H.T.; He, Y.Z.; Hsu, C.C. Computer-assisted yoga training system. Multimed. Tools Appl. 2018, 77, 23969–23991.

31. Trejo, E.W.; Yuan, P. Recognition of Yoga poses through an interactive system with Kinect device. In Proceedings of the 2018 2nd International Conference on Robotics and Automation Sciences (ICRAS), Wuhan, China, 23–25 June 2018; pp. 1–5.

32. Jin, X.; Yao, Y.; Jiang, Q.; Huang, X.; Zhang, J.; Zhang, X.; Zhang, K. Virtual personal trainer via the Kinect sensor. In Proceedings of the 2015 IEEE 16th International Conference on Communication Technology (ICCT), Hangzhou, China, 18–21 October 2015; pp. 460–463.

33. Chen, H.T.; He, Y.Z.; Hsu, C.C.; Chou, C.L.; Lee, S.Y.; Lin, B.S.P. Yoga posture recognition for self-training. In Proceedings of the International Conference on Multimedia Modeling, Dublin, Ireland, 6–10 January 2014; pp. 496–505.

34. Chen, H.T.; He, Y.Z.; Chou, C.L.; Lee, S.Y.; Lin, B.S.P.; Yu, J.Y. Computer-assisted self-training system for sports exercise using Kinects. In Proceedings of the 2013 IEEE International Conference on Multimedia and Expo Workshops (ICMEW), San Jose, CA, USA, 15–19 July 2013; pp. 1–4.

35. Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5693–5703.

36. Si, C.; Chen, W.; Wang, W.; Wang, L.; Tan, T. An attention enhanced graph convolutional LSTM network for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1227–1236.

37. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

| Model | 1 | 2 | 3 | 4 | 5 | Average |
|---|---|---|---|---|---|---|
| baseline | 89.51 | 87.65 | 90.49 | 90.62 | 90.99 | 89.85 |
| SSAM | 88.15 | 92.47 | 91.85 | 92.10 | 91.73 | 91.26 |
| TSAM | 91.48 | 92.59 | 90.99 | 90.49 | 89.14 | 90.94 |
| STSAM | 93.58 | 93.46 | 92.47 | 93.83 | 92.22 | 93.11 |
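The "Average" column of the table above can be reproduced as the arithmetic mean of columns 1 to 5, rounded to two decimals. The sketch below does this check; the dictionary name `ablation_runs` is an illustrative assumption, and the numbers are copied from the table without interpreting what the five columns denote.

```python
from statistics import mean

# Accuracy values (%) copied from columns 1-5 of the table above.
ablation_runs = {
    "baseline": [89.51, 87.65, 90.49, 90.62, 90.99],
    "SSAM": [88.15, 92.47, 91.85, 92.10, 91.73],
    "TSAM": [91.48, 92.59, 90.99, 90.49, 89.14],
    "STSAM": [93.58, 93.46, 92.47, 93.83, 92.22],
}

# Mean over the five columns, rounded to two decimals like the table.
averages = {name: round(mean(values), 2) for name, values in ablation_runs.items()}

# Gain of each variant over the baseline, in percentage points.
gains = {
    name: round(avg - averages["baseline"], 2)
    for name, avg in averages.items()
    if name != "baseline"
}

for name, avg in averages.items():
    print(f"{name:>8}: {avg:.2f}")
```

Computed this way, the averages match the table, and the STSAM row sits 3.26 percentage points above the baseline row.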

| Model | 1 | 2 | 3 | 4 | 5 | Average |
|---|---|---|---|---|---|---|
| S-T | 91.48 | 92.84 | 87.53 | 92.72 | 90.62 | 91.04 |
| T-S | 88.89 | 91.23 | 91.98 | 89.88 | 92.10 | 90.82 |
| STSAM | 93.58 | 93.46 | 92.47 | 93.83 | 92.22 | 93.11 |

| Model | 1 | 2 | 3 | 4 | 5 | Average |
|---|---|---|---|---|---|---|
| AAGCN [19] | 92.10 | 90.37 | 88.27 | 88.40 | 86.30 | 89.09 |
| MSG3D [20] | 91.60 | 90.62 | 92.22 | 90.62 | 92.22 | 91.46 |
| CTRGCN [18] | 84.69 | 87.78 | 87.28 | 90.25 | 89.51 | 87.90 |
| ST-GCN++ [26] | 89.51 | 87.65 | 90.49 | 90.62 | 90.99 | 89.85 |
| STSAE-GCN (ours) | 93.58 | 93.46 | 92.47 | 93.83 | 92.22 | 93.11 |

| Model | Top-1 | Top-5 |
|---|---|---|
| CTRGCN [18] | 90.25 | 98.89 |
| CTRGCN+ | 92.47 | 99.38 |
| AAGCN [19] | 92.10 | 98.77 |
| AAGCN+ | 93.03 | 99.26 |
| MSG3D [20] | 92.22 | 99.26 |
| MSG3D+ | 93.33 | 99.38 |

| View | Top-1 | Top-5 |
|---|---|---|
| view-1 | 96.30 | 99.63 |
| view-2 | 98.15 | 99.63 |
| view-3 | 87.04 | 96.30 |
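The Top-1 and Top-5 columns above follow the usual top-k convention: a sample counts as correct when its true class is among the k highest-scoring classes. A minimal sketch of that metric is given below; the function name `top_k_accuracy` and the toy scores and labels are assumptions for illustration, not data from the paper.

```python
from typing import List, Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int) -> float:
    """Percentage of samples whose true label is among the k best-scoring classes."""
    if len(scores) != len(labels) or not labels:
        raise ValueError("scores and labels must be non-empty and the same length")
    correct = 0
    for row, label in zip(scores, labels):
        # Class indices ordered from highest to lowest score.
        ranked: List[int] = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label in ranked[:k]:
            correct += 1
    return 100.0 * correct / len(labels)


# Toy example: 3 samples, 4 classes (values are made up).
toy_scores = [
    [0.10, 0.70, 0.20, 0.00],   # best class: 1
    [0.50, 0.30, 0.10, 0.10],   # best class: 0, runner-up: 1
    [0.30, 0.15, 0.50, 0.05],   # best class: 2, runner-up: 0
]
toy_labels = [1, 1, 0]

top1 = top_k_accuracy(toy_scores, toy_labels, k=1)   # only the first sample is correct
top2 = top_k_accuracy(toy_scores, toy_labels, k=2)   # all three samples are correct
```

Because the set of top-k classes grows with k, Top-5 can never be lower than Top-1 for the same model, which is consistent with every row of the two tables above.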

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wei, G.; Zhou, H.; Zhang, L.; Wang, J. Spatial–Temporal Self-Attention Enhanced Graph Convolutional Networks for Fitness Yoga Action Recognition. Sensors 2023, 23, 4741. https://doi.org/10.3390/s23104741
