1. Introduction

In process industries such as sintering, process monitoring relies mainly on conventional sensors that measure temperature, pressure, flow and other process data. Quality indicators are generally measured by offline testing, and online analysis is difficult to achieve. At present, some online sensors that meet these needs have been developed and applied to processes with relatively simple reaction mechanisms.

The most important quality index of sinter is its FeO content, which reflects the reducibility and strength of the sinter. The reducibility of the iron ore raw material is an important index for subsequent blast furnace ironmaking, as it determines the furnace conditions and the adjustment of various parameters.

As FeO content increases, the output of molten iron in the blast furnace decreases. However, low FeO content cannot simply be pursued, because FeO content also determines the strength of the sinter. A low FeO content reduces the strength of the sinter, damages its morphology and increases the proportion of powder. This hinders the upward gas flow in the blast furnace shaft, impairs the permeability of the material column, makes smooth furnace operation difficult and reduces output [1].

The common detection method is offline laboratory testing. The operator takes a sinter sample from the conveyor belt and sends it to the chemical composition analysis room. Potassium dichromate titration is often used in offline laboratories, and the operation is cumbersome. The method requires grinding the sample, and if the sample is difficult to dissolve, the analysis results will be poor. In general, however, the method has high accuracy and is the common chemical analysis method for FeO in sinter at iron and steel enterprises [2]. Another test method is X-ray diffraction, which is also an offline detection method. The ore sample to be measured and the accompanying internal standard reagents need to be dried in an oven at 105 °C for 2 h and cooled to room temperature before they can be used. The powder must then be carefully ground to a uniform and consistent fineness; otherwise, errors may be introduced [3].

The above offline tests are relatively accurate; however, they are time-consuming, often taking three or four hours to produce a result, and their labor and material costs are high. Because the sintering process takes only about one hour from batching to completion, test results obtained three or four hours later lag significantly behind production. If the test results do not meet the process requirements, only the current operating parameter settings can be adjusted to compensate. This is a great challenge for line operators whose control objective is consistently good sinter quality.

Therefore, there is an urgent need to develop online measurement of sintering quality indicators. Moreover, sophisticated online measurement is the basis for building an automatic control system for sinter production. At present, online measurement means mainly include magnetic analyzers and online measurement devices. Shougang Group introduced a magnetic analyzer from a Belgian company, which detects FeO through the change in magnetic field current caused when passing iron ore cuts the magnetic induction lines [4]. However, this method places strict requirements on the samples and needs regular calibration by the instrument supplier. Shougang Group reported that the measurement accuracy of the magnetic induction coil was poor because the particles of the measured sinter samples were too small and contained too much powder, and the equipment manufacturer’s personnel were unable to solve the problem.

As automated systems in factories record more and more industrial data, data-driven soft measurement methods are emerging as viable solutions for industrial measurements. Li et al. used LSTM neural networks with autoencoders to construct a prediction model for FeO content [5,6]. Yan et al. proposed a denoising autoencoder framework for predicting sintering endpoints [7]. Yang et al. used a hidden variable model to model the sinter quality index [8].

With the development of industrial cameras and image algorithms in recent years, image-based online soft sensors for industrial inspection have gradually gained the attention of academia and industry [9]. Usantiaga proposed a temperature measurement system for the sinter cooling process based on infrared thermal imaging technology [10]. Jiang combined mechanism analysis with image characteristics acquired by an infrared imager and proposed a polymorphic measurement method for FeO content in sinter based on the heterogeneous characteristics of infrared thermal images [11]. However, this method uses fuzzy classification labels obtained through mechanism analysis, and the label accuracy is insufficient for regression analysis. In addition, that study used BP neural networks as regressors, which makes it difficult to capture time-series information. Moreover, the shallow feature extractor used in that study cannot obtain deep feature information from the image. In recent years, deep learning methods have been increasingly applied in the image field, with deep convolutional network models such as ResNet [12,13] achieving better results than shallow networks. This is also one of the motivations for the research in this paper.

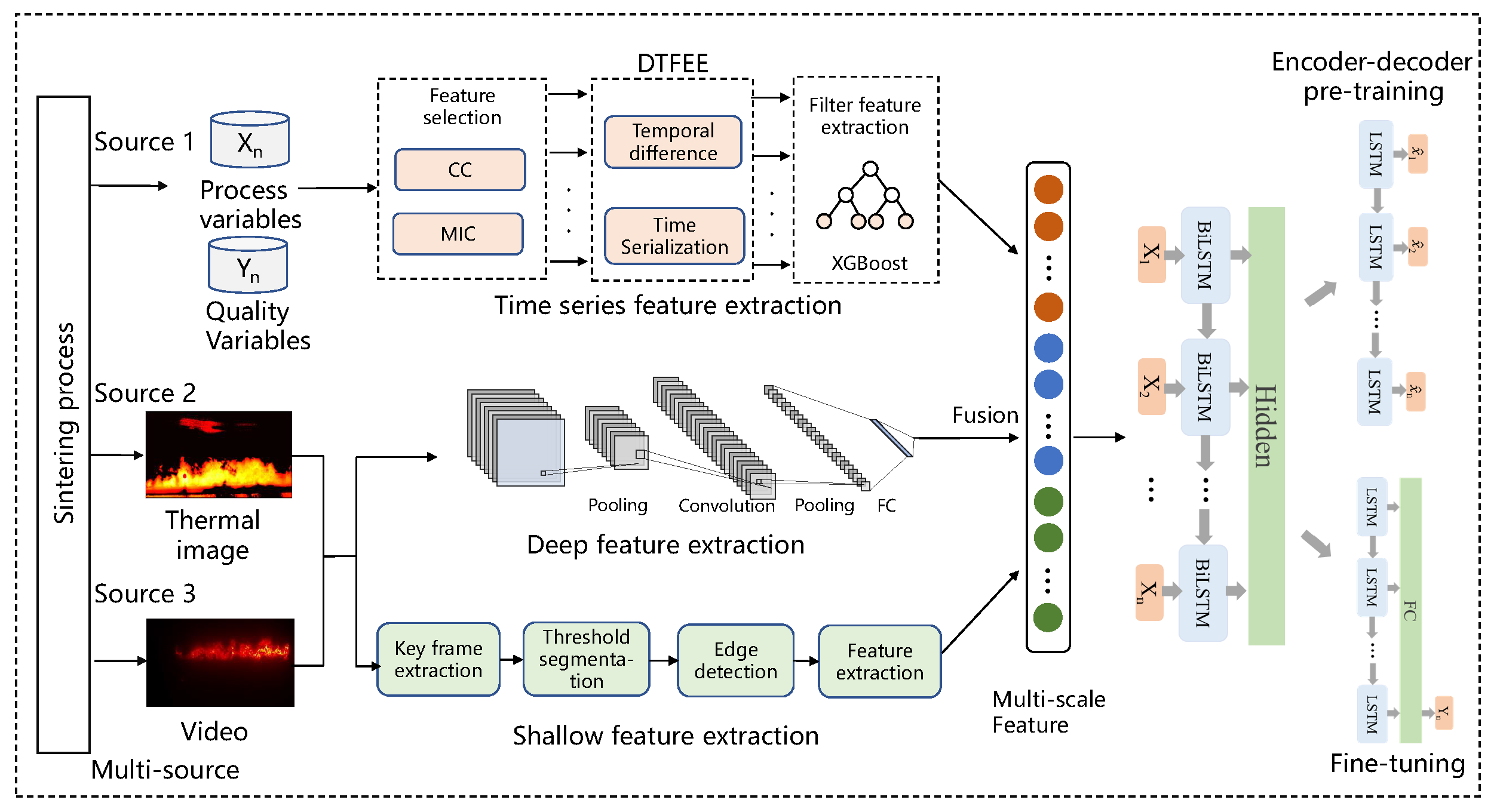

This paper combines the low-resolution images of a thermal imaging camera with the high-resolution images of an industrial camera to capture critical information more accurately. Because assay labels are too scarce to support real-time detection, additional quality variable labels are needed. This work therefore relies on experts to label a batch of data, which yields a better database. On this basis, a sintering quality prediction model based on multi-source data fusion is proposed, and video data collected by industrial cameras and thermal imagers are introduced. First, keyframes of the video of the sintering machine tail are obtained with a keyframe extraction method based on the feature height. Second, image features are extracted at both the shallow and deep levels, using a shallow feature construction method based on sinter layering and a deep feature extraction method based on ResNet. Then, the industrial time series data are fused with these features, and a sintering quality prediction model based on multi-source data fusion is designed to fully exploit the heterogeneous data from multiple sources. Finally, the method is applied in a practical industrial case. The experimental results show that the model effectively improves the accuracy of sinter quality prediction.
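The fusion step described above can be illustrated with a minimal sketch. It is not the model proposed in this paper; it only shows one plausible way to arrange multi-source features for a sequence model. It assumes that shallow image features, deep image features and process variables have already been aligned to the same time steps (one row per keyframe), and the function name `build_fused_windows` and all array sizes are hypothetical.

```python
import numpy as np


def build_fused_windows(shallow, deep, process, window):
    """Concatenate time-aligned feature sources and cut overlapping windows.

    shallow: (T, Ds) shallow image features (hypothetical layout)
    deep:    (T, Dd) deep image features, e.g. from a CNN backbone
    process: (T, Dp) process variables resampled to the same time steps
    Returns an array of shape (T - window + 1, window, Ds + Dd + Dp).
    """
    sources = [np.asarray(a, dtype=float) for a in (shallow, deep, process)]
    if len({a.shape[0] for a in sources}) != 1:
        raise ValueError("all sources must have the same number of time steps")

    # Feature-level fusion: place the three sources side by side.
    fused = np.concatenate(sources, axis=1)

    # Standardize each column so that no source dominates by scale.
    # Assumption: in practice the statistics would come from training data only.
    mean = fused.mean(axis=0)
    std = fused.std(axis=0)
    std[std == 0] = 1.0
    fused = (fused - mean) / std

    n_windows = fused.shape[0] - window + 1
    if n_windows < 1:
        raise ValueError("window is longer than the available sequence")

    # Each window is one input sequence for a recurrent model such as an LSTM.
    return np.stack([fused[i:i + window] for i in range(n_windows)])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    windows = build_fused_windows(
        rng.normal(size=(10, 3)),   # 3 shallow features per keyframe
        rng.normal(size=(10, 8)),   # 8 deep features per keyframe
        rng.normal(size=(10, 4)),   # 4 process variables per keyframe
        window=4,
    )
    print(windows.shape)
```

Concatenation is the simplest fusion choice; it keeps each source identifiable by column range, which makes it easy to test the contribution of a single source by dropping its columns.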

The main contributions of this paper are as follows:

Obtaining shallow features based on the industrial mechanism from thermal and visible images.

Extracting deep features based on the ResNet model and constructing a multi-scale feature extraction model.

Fusing time series data to construct a novel soft measurement model based on multi-source data fusion for FeO content of sintered ore.

The remainder of this paper is organized as follows. In Section 2, the characteristics of the sintering process and quality variables are analyzed. The method and model proposed in this paper are introduced in Section 3. Then, the proposed method is verified with actual production process data in Section 4. Section 5 summarizes the paper and outlines directions for future work.

2. Characteristics of Multi-Source Data in Sintering

2.1. Description of the Sintering Process and Test Data

A sintering process is shown in Figure 1. Before the sinter is fed into the blast furnace, production passes through several stages: proportioning, mixing, sintering, crushing, screening and cooling. Many types of raw materials are used for sinter production. They are stored in more than 10 different bins and consist mainly of iron ore fines, fuels, fluxes and some additional raw materials. A reasonable material ratio should be developed based on the compositions of the raw materials, the quality requirements of the sinter and the quality requirements of blast furnace ironmaking. The mixing process requires thorough mixing of the components to obtain a mixture with a uniform and stable chemical composition, while water is added to obtain good granularity and the necessary material temperature and to improve permeability. The mixture is fed into the belt sintering machine for production, and after sintering is completed, crushing, screening and cooling are required. The finished sinter is sent to the ironmaking plant as raw material for blast furnace ironmaking. The sintering data collected contain operational variables, condition variables and quality variables. The quality variables depend on manual testing.

2.2. Sintering Image Data Acquisition

Due to the scarcity of laboratory data, the sintering process information is not perfect. To better obtain information on the sintering process, an industrial camera and a thermal camera were set up at the observation port at the end of the sintering machine. A schematic diagram of the sintering machine image acquisition is shown in

Figure 1. The industrial visible light camera has a resolution of 1920 × 1080 and the captured images are shown in

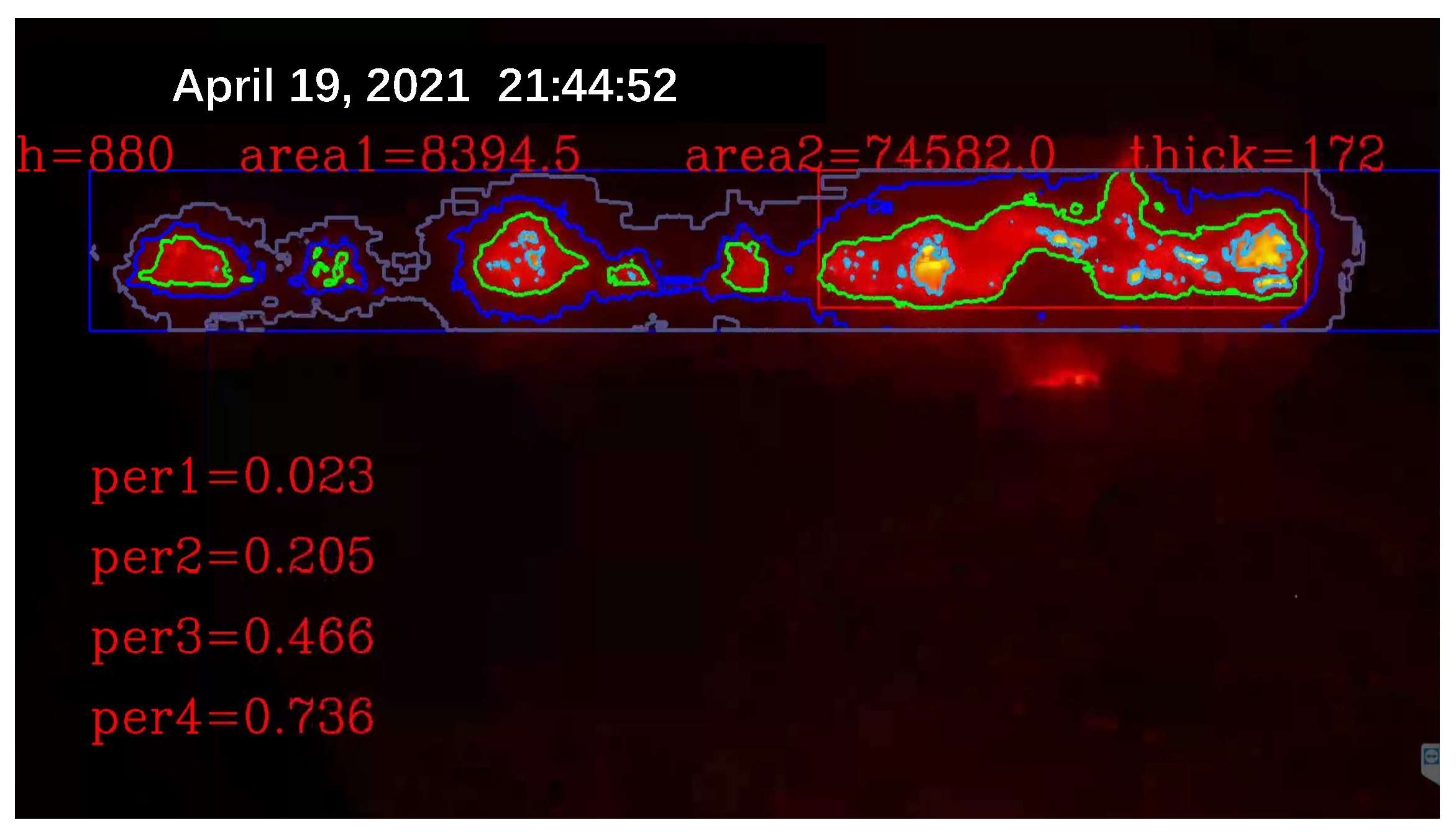

Figure 2. The thermal imager has a resolution of 640 × 480 and the captured image is shown in

Figure 3.

The captured information is uploaded to the database for storage via the industrial fieldbus. Live video is displayed on a screen in the central control room for operators to view, and it can also be downloaded to a cloud server via a remote connection.

2.3. Analysis of Sintering Image Data

At present, most of the quality indexes of sinter are obtained via laboratory analysis. Because laboratory analysis lags behind production, judging the actual working conditions often depends on expert experience. During the sintering process, the internal state of the sinter is not visible and only the topmost surface is exposed. However, the topmost part is ignited and sintered first. Therefore, over most of the 90 m length of the sintering machine, the visible surface is already in a sintered and finished state. Only the flatness of the laid ore can be observed from the top, and the key sintering state information cannot be obtained. Fortunately, once sintering is complete, the sintered ore reaches the end of the trolley and falls naturally into the collection bin. After one piece of sintered ore has fallen and before the next piece falls, the flame information in the sinter section can be observed. There is a flame viewing port at the end of the sintering machine. Experts with rich production experience can estimate the current range of the FeO quality index of the sinter by observing the flame red layer at the end of the sintering machine. Such manual judgment makes it possible to rely on expert experience to help judge and control the process in the absence of sufficient test indicators. However, this method still has significant limitations: the subjective errors of manual judgement are high, and observation is subject to the harsh conditions of the industrial site. In harsh conditions such as high temperatures, loud noises, vibrations and dust, it is difficult for workers to carry out observations for long periods of time, so fire observation, as an auxiliary means, can hardly replace conventional testing. Therefore, in the actual production process, it is necessary to develop stable measurement means to replace expert manual observation.

Since experts can make judgements by observing flame images, a camera is set up in this experiment to capture these images, and it is feasible for a soft measurement model to learn the experts’ experience and knowledge from them.

Because raw materials at different positions are in different sintering states and at different temperatures, the flame colors they present also differ. The black part is the surface that has finished sintering and cooled to below 300 °C, so it appears dim and is hard to observe. The red fire layer is the burning part of the sinter. It is generally located in the lower part of the sinter, appears mainly red, and has a temperature of about 600 °C. The stomatal layer is the brightest part of the image. Because its temperature exceeds 800 °C, it appears yellow and white in the image. The sinter falls periodically at the tail of the sintering machine. When a batch of sintered ore reaches the last wind box at the end of the sintering machine, it loses the support of the trolley, breaks off from the ore still on the trolley and falls. At the moment of falling, the flame information of the fracture section is very clear. Shortly afterwards, the falling ore raises a large amount of dust and causes vibration, making the image captured by the camera blurred and shaky. It is difficult to obtain accurate flame information from the image at this time. Therefore, the system needs to screen out the clear images at the moment of falling as the analysis sample set. Experienced workers can judge the FeO content of the sinter at this time by observing the section.
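The layering described above can be made concrete with a small sketch that splits a thermal image into the three layers and reports the area fraction of each. It is an illustration only, not the shallow feature construction used in this paper. It assumes the thermal image has already been converted to a temperature map in °C. The 300 °C and 800 °C limits come from the text; assigning every pixel between them to the red fire layer is an assumption, since the text only states that this layer is at about 600 °C. The function name `layer_fractions` is hypothetical.

```python
import numpy as np

BLACK_MAX_C = 300.0     # from the text: the black part is below 300 °C
STOMATAL_MIN_C = 800.0  # from the text: the stomatal layer exceeds 800 °C


def layer_fractions(temp_c):
    """Return the area fraction of each layer in a temperature map (°C).

    Assumption: pixels between the two thresholds are counted as the
    red fire layer, which the text places at about 600 °C.
    """
    temp_c = np.asarray(temp_c, dtype=float)
    if temp_c.size == 0:
        raise ValueError("temperature map is empty")

    black = temp_c < BLACK_MAX_C
    stomatal = temp_c > STOMATAL_MIN_C
    red = ~(black | stomatal)

    n = temp_c.size
    return {
        "black": float(black.sum()) / n,
        "red": float(red.sum()) / n,
        "stomatal": float(stomatal.sum()) / n,
    }


if __name__ == "__main__":
    # Tiny synthetic map: two pixels per layer.
    demo = np.array([[100.0, 250.0, 600.0],
                     [650.0, 900.0, 850.0]])
    print(layer_fractions(demo))
```

In the synthetic map, each layer covers one third of the pixels. On real data, fractions like these could serve as simple, interpretable descriptors of a keyframe, alongside whatever features the actual method constructs.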

5. Conclusions

This paper presents a method to detect FeO content in sinter based on multi-source information fusion. First, video data of the red layer at the end of the sintering machine are collected with an industrial camera. Second, a keyframe extraction algorithm based on feature height and a shallow feature construction method based on sinter layering are designed according to the actual process. Then, deep features of the sinter tail red layer images are extracted from the keyframes by a ResNet model. Finally, combined with the process parameters of the production process, an online real-time prediction model of FeO content in sinter is established using an LSTM network.

The model addresses the poor timeliness and high cost of existing technologies by extracting multi-scale information from industrial camera video data and integrating the process parameters. It has practical significance for guiding the sinter production process and provides technical support for energy conservation, emission reduction, and quality and efficiency improvement in iron and steel enterprises. Other quality variables in the sintering process are also related to the images of the fracture section, such as the total iron content and the tumbler index. The method can be extended to predict these variables as long as suitable labeled image data are available. However, the system requires labeled data before deployment, and the quality of the labeled image data has a direct impact on system accuracy. To reduce manual effort and improve deployment efficiency, a possible future direction is self-supervised learning on images, which would reduce the workload of expert labeling.