Design and Evaluation of an Alternative Control for a Quad-Rotor Drone Using Hand-Gesture Recognition

Abstract

1. Introduction

1.1. Background

1.2. Existing Methods

1.2.1. Data-Acquisition Medium

1.2.2. Gesture Description

1.2.3. Gesture Identifiers

1.2.4. Gesture Classifiers

1.3. Contribution of the Paper

2. Methods

2.1. Methodology Structure

2.1.1. Overview

2.1.2. Defining Simplifications

- Components selected prior to the current stage of the investigation could not be changed. Implementations were considered only if they were compatible with the previously selected components, and selected components were not to be changed to accommodate the needs of a newly proposed approach. For example, the data-acquisition method selected in Stage Two could not be changed to accommodate the requirements of a gesture-identification component proposed in Stage Three.

- The selection of each component was to be made without consideration for future components. The selection of each component was to be based on the applicable governing criteria, selection of previous components, and the relevant validation results.

- The gesture-description and data-acquisition components were selected from the reviewed literature, without any new quantitative or qualitative analysis being performed.

2.1.3. Governing Criteria

1. Reliability in issuing the intended command: This criterion graded both the number of unique commands the algorithm could issue and the algorithm’s ability to distinguish between those commands.

2. Reproducibility of the intended command: This criterion graded the algorithm’s ability to robustly reproduce the same action when presented with the same user input.

3. Physically non-restrictive equipment or instrumentation: This criterion graded how restrictive the algorithm’s control interface was, covering both restrictions caused by elements physically placed upon the user’s body and elements that required the user to operate within a restricted space or in a restricted manner.

4. Ease of operation and shorter user learning cycle: This criterion graded how complex or difficult to learn the control interface was, considering factors such as the complexity of inputs, the complexity of the input structure, and the physical difficulty of forming inputs.

5. Computationally inexpensive: This criterion graded the computational requirements of the algorithm’s operation and, inversely, the speed at which the algorithm could operate if given an abundance of computational resources.

6. Monetarily inexpensive: This criterion graded the general cost needed for the algorithm to function, including the cost of the computational hardware required to run the algorithm and of the sensory hardware required to acquire data.

2.2. Stage One: Selection of Gesture-Description Model

2.2.1. Overview

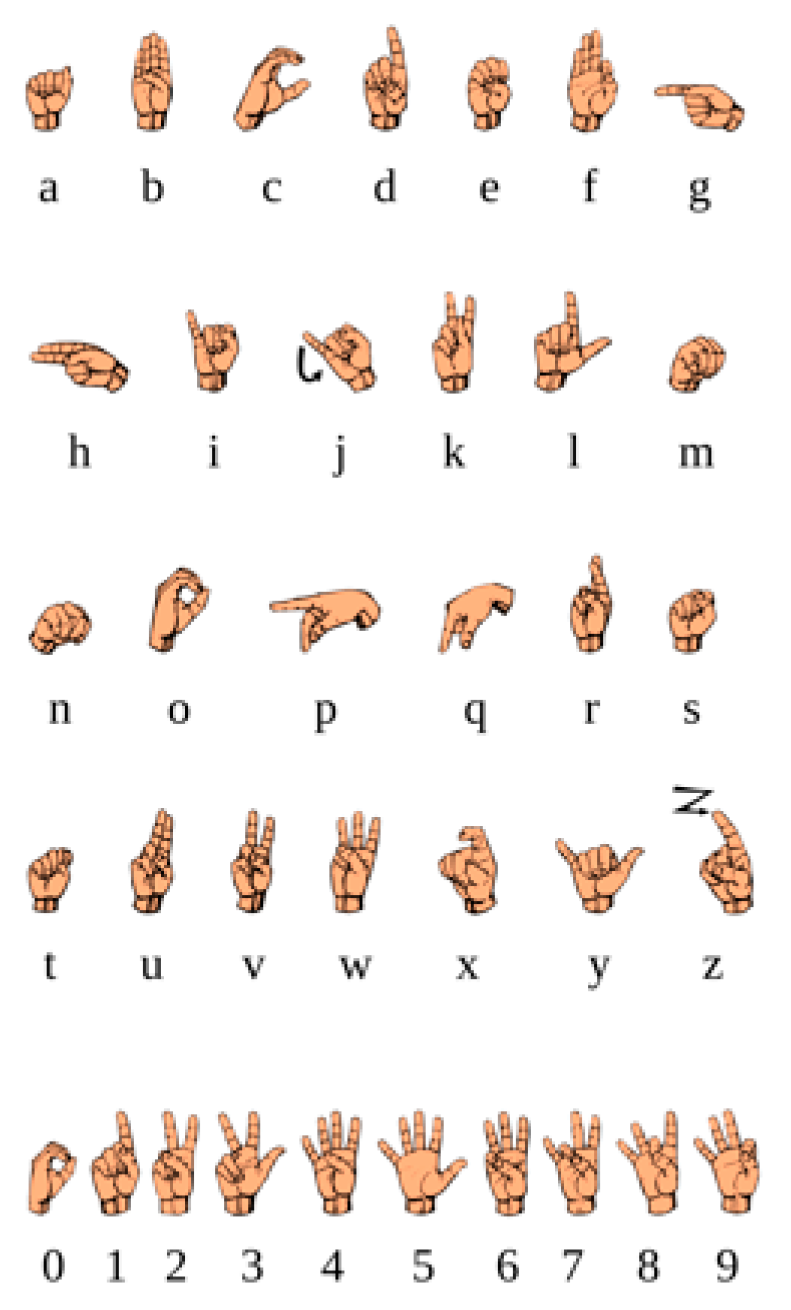

2.2.2. Gesture-Type Selection Justification

2.2.3. Gesture Model Selection Justification

2.2.4. Gesture Information Justification

2.3. Stage Two: Selection of Data-Acquisition Method

2.4. Stage Three: Selection of Gesture-Identification Algorithm

- Identifier Implementation: The aim of this subtest was to implement a baseline variant of each of the three algorithms. Each baseline variant should be capable of observing a single human hand and printing the angles of its 15 primary joints to the terminal while also displaying the 3D-skeleton model on screen. The method used to calculate these joint angles is described in Section 2.5. The purpose of this subtest was three-fold. Firstly, it served to assess the operational readiness of the algorithms. Secondly, it assessed the computational requirements necessary to implement the algorithms. Finally, it produced an operational version of each algorithm upon which future testing would be performed. The key independent variable of this test was the algorithm under test. All algorithms were run on the same 2017 MacBook Pro with a 3.5 GHz dual-core Intel Core i7 CPU, Intel Iris Plus Graphics 650 (1536 MB) graphics, 16 GB of 2133 MHz LPDDR3 RAM, and 250.69 GB of storage.

- Qualitative Analysis: The aim of this subtest was to qualitatively observe the implemented algorithms’ localisation accuracy. This subtest was the first step towards ensuring that the selected algorithm conformed to Criteria 1 and 2 and minimised the drawbacks of the selected data-acquisition method. It aimed to observe each algorithm’s accuracy in cases of self-occlusion, rotation, and translation using the operational version derived in the first subtest. The method for this observation was relatively simple. First, a user’s hand was held in a constant position in front of the camera, and the displayed three-dimensional model was recorded. From this position, the hand was then rotated and translated around the camera’s viewport while the displayed model was constantly observed, to determine whether the algorithm could maintain its localisation accuracy despite the movement. Finally, the hand was turned so that parts of it became occluded from the camera’s point of view, to determine whether the algorithm could still produce a model despite the occlusion of hand features.
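The joint-angle output described for the baseline variants can be illustrated with a short sketch. It assumes the 21-landmark hand layout used by MediaPipe Hands (index 0 for the wrist, then four landmarks per finger) and takes each of the 15 angles as the angle between the two bone vectors meeting at a joint. The triplet table and function names are illustrative rather than the authors' code, and the calculation used in the paper may differ.

```python
import math

# Assumed landmark layout (MediaPipe Hands convention): 0 = wrist,
# 1-4 thumb, 5-8 index, 9-12 middle, 13-16 ring, 17-20 pinkie.
FINGER_BASES = {"thumb": 1, "index": 5, "middle": 9, "ring": 13, "pinkie": 17}


def joint_triplets():
    """Return (finger, joint, (a, b, c)) for the 15 joints; b is the joint."""
    triplets = []
    for finger, base in FINGER_BASES.items():
        chain = [0, base, base + 1, base + 2, base + 3]  # wrist -> fingertip
        for j in range(3):
            triplets.append((finger, f"J{j + 1}", tuple(chain[j:j + 3])))
    return triplets


def angle_deg(a, b, c):
    """Angle in degrees at point b, formed by the segments b->a and b->c."""
    v1 = [p - q for p, q in zip(a, b)]
    v2 = [p - q for p, q in zip(c, b)]
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    if n1 == 0 or n2 == 0:
        raise ValueError("degenerate joint: coincident landmarks")
    cos_t = sum(x * y for x, y in zip(v1, v2)) / (n1 * n2)
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_t))))


def joint_angles(landmarks):
    """Map 21 landmark coordinates to a dict of 15 joint angles."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 landmarks")
    return {
        (finger, joint): angle_deg(landmarks[a], landmarks[b], landmarks[c])
        for finger, joint, (a, b, c) in joint_triplets()
    }
```

Because `angle_deg` works on coordinates of any length, passing (x, y) pairs instead of (x, y, z) triples yields a 2D variant of the same angles; a fully straight joint evaluates to 180°.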

2.5. Stage Four: Validation of Selected Gesture-Identification Algorithm

- Hand pose: The first of these variables was the pose of the hand. In total, three positions were investigated: a fully closed position, a partially closed position, and a fully open position. These three positions were selected because they are stable, repeatable, and easy to hold, and because they were the positions advised by our clinical reference, Jayden Balestra [25]. Furthermore, these three positions were used to cover the hand’s full range of motion, as it was important to validate the accuracy of the model across that range. Examples of the three poses are shown in Appendix A and Appendix B.

- Hand orientation with respect to camera: The second independent variable altered over the course of this analysis was the incident angle between the camera’s point of view and the observed hand. By changing this angle of orientation, the algorithm’s robustness against rotation and self-occlusion (the drawbacks of single-camera RGB solutions) could be quantitatively observed. For each of the three hand poses defined above, four photos were taken: one from directly in front of the hand, one from a 45° offset, one from a 90° offset, and one from a 180° offset. Examples of the four viewpoints are shown in Appendix A and Appendix B.

To ensure a high level of accuracy within the test itself, a range of controls was put in place so that each stage of the analysis was repeatable and accurate.

- Lighting: To avoid lighting variance, all tests were conducted in a well-illuminated environment, with the specific aim that no shadowing be present on the observed hand.

- Background: Background variation is known to affect the MPH modelling process. As this factor was not being analysed, a white backdrop was used for all tests to ensure a high level of contrast between the hand and the background and so aid the feature-extraction process.

- Pose stability and body position: To ensure that the same position and viewpoint angles were observed for each participant, two controls were put in place to manage body position and hand stability. First, participants knelt in a comfortable position with their forearm braced against the test bench. Second, the test bench carried a set of marks indicating the appropriate positions for the background and the participant’s forearm.

- Pitch, roll, and yaw of the camera: To ensure that the viewpoint orientation was maintained across all participants, and only varied by the desired amounts between tests, the Halide camera application was used [26].

- General hand size/distance from camera: Whilst changes in participant hand size were unavoidable, a fixed camera distance was used for all participants to avoid exacerbating these variations. This was achieved by providing fixed mounting points for the camera on the test bench at the correct location and orientation for each of the desired viewpoint angles.

2.6. Stage Five: Selection of Gesture-Classification Algorithm

- If the Stage 4 results show that the selected gesture-identification algorithm can accurately and robustly produce a model that reflects the user’s hand, then a low-dimensionality classifier built around the 15 single-dimension joint angles should be investigated. The final selected algorithm is the one that favours Criterion 5 over Criteria 1 and 2. The algorithms to be investigated are decision trees, KNNs, and linear regression [27].

- If the Stage 4 results show a less-than-ideal model accuracy, then a higher-dimensionality classifier that uses the original 21 three-dimensional landmark coordinates (63 total dimensions) would be investigated. The final selected algorithm is the one that favours Criteria 1 and 2 over Criterion 5. Specifically, the algorithms to be investigated are ANNs, SVM, linear regression, and a non-machine-learning bounds-based approach [27,28].

- Test data set: A custom data set was to be made for the selected classifiers. Ideally, after the initial group of prospective classifiers had been defined, a common set of ten gestures would be identified between the algorithms. Once this common gesture set had been defined, ten images were created for each gesture and converted into three-dimensional models using MPH. These 100 models formed the test data set for this stage of the investigation. Note that, to ensure that Criteria 1 and 2 were assessed correctly, the hands present in the ten selected images varied in scale, orientation, and pose. By introducing these variations into the common data set, the algorithms’ accuracy was tested more robustly, as they were not being tested in a ‘best-case scenario’.

- Computation power provided to each algorithm: To ensure that no one algorithm is favoured during this analysis, all testing should be performed on the same device, with no background processes running. When performing classification-time testing, only the time taken by the ‘prediction stage’ of the classifier should be counted. Specifically, this time value should exclude the time taken to initialize/train the classifier, to load the MPH model, and to create the confusion matrices.
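The timing rule in the last control can be sketched as follows. This is a minimal illustration rather than the authors' test harness: `time_prediction` and `toy_predict` are hypothetical names, and the stand-in classifier simply labels a 63-value feature vector (21 landmarks × 3 coordinates). The point is only that the timer wraps the prediction calls and nothing else.

```python
import time


def time_prediction(predict, samples, repeats=5):
    """Return (predictions, best seconds per sample) for the prediction stage.

    Only calls to `predict` are timed; training, model loading, and
    confusion-matrix construction must happen outside this function.
    """
    if not samples:
        raise ValueError("samples must not be empty")
    best = float("inf")
    predictions = []
    for _ in range(repeats):
        start = time.perf_counter()
        predictions = [predict(s) for s in samples]
        elapsed = time.perf_counter() - start
        best = min(best, elapsed)  # keep the least-disturbed run
    return predictions, best / len(samples)


# Hypothetical stand-in classifier: labels a feature vector by the sign
# of its mean. A real classifier would be trained before timing starts.
def toy_predict(features):
    return 1 if sum(features) / len(features) >= 0 else 0


samples = [[0.1] * 63, [-0.2] * 63]
labels, seconds_per_sample = time_prediction(toy_predict, samples)
```

Taking the best of several repeats is one common way to reduce the influence of background activity; averaging the runs would be an equally defensible choice.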

2.7. Stage Six: Gesture Mapping and Tuning

3. Results

3.1. Gesture-Identification Selection

3.1.1. Implementation Results

3.1.2. Qualitative Analysis Results

Resistance to Translation

Resistance to Rotation

Resistance to Self-Occlusion

3.1.3. Final Selection

3.2. Gesture-Identification Validation Results

3.2.1. Measured Joint Angles

3.2.2. Calculated Joint Angles

3.2.3. Final Accuracy Percentages

3.3. Gesture-Classifier Selection

3.3.1. Scope Definition

- ANN classifier: Conventional machine-learning classifier built to classify Indian sign language, sourced from [32].

- Linear-regression classifier: Conventional machine-learning classifier built to classify Russian sign language, sourced from [33].

- SVM classifier: Conventional machine-learning classifier built to classify Indian sign language, sourced from [34].

- Bounds-based classifier: Non-machine-learning, statically defined classifier built to classify ASLAN counting gestures; an altered version of the original sourced from [31].
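To make the idea of a statically defined, bounds-based classifier concrete, the sketch below assigns a gesture when every finger's angle falls inside fixed limits. It is not the classifier from [31]: the gesture names, the use of a single flexion angle per finger, and all numeric bounds are hypothetical values chosen for illustration.

```python
# Hypothetical bounds (degrees) on one flexion angle per finger, ordered
# thumb, index, middle, ring, pinkie. Values are illustrative only.
EXTENDED = (150.0, 180.0)
FLEXED = (0.0, 120.0)

GESTURE_BOUNDS = {
    "one": (FLEXED, EXTENDED, FLEXED, FLEXED, FLEXED),
    "two": (FLEXED, EXTENDED, EXTENDED, FLEXED, FLEXED),
    "five": (EXTENDED, EXTENDED, EXTENDED, EXTENDED, EXTENDED),
}


def classify(angles, bounds=GESTURE_BOUNDS):
    """Return the first gesture whose bounds contain every angle, else None."""
    if len(angles) != 5:
        raise ValueError("expected one angle per finger")
    for name, limits in bounds.items():
        if all(lo <= a <= hi for a, (lo, hi) in zip(angles, limits)):
            return name
    return None  # no template matched; the caller can ignore the frame
```

A classifier of this kind needs no training step, and returning `None` for ambiguous poses lets a controller skip frames rather than issue a wrong command.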

3.3.2. Accuracy Results

3.3.3. Computational Performance Results

3.3.4. Final Classifier Selection

3.4. Gesture-Mapping Selection and Tuning

3.5. Performance Overview

1. Reliability in issuing the intended command: From the results of Stage 5, the final solution proved capable of accurately distinguishing between the intended commands within the command set. When combined with the mapping medium developed in Stage 6, the solution was able to reliably transmit the intended commands to the chosen application medium. Hence, this criterion is satisfied.

2. Reproducibility of the intended command: From the results of Stage 4, the initial confidence in the final solution’s conformity to this criterion was challenged by MPH’s lack of rotational robustness. However, through the implementation of a bounds-based classifier, the final solution was able to recognise commands robustly and repeatably despite viewpoint and scale variations. Hence, this criterion is satisfied.

3. Physically non-restrictive equipment or instrumentation: Given the selection made in Stage 2, the use of a single RGB camera ensured the final solution’s conformity to this criterion. Furthermore, as per the results of Stage 5, the final HGR algorithm is capable of recognising gestures from multiple viewpoints, meaning the operator does not have to maintain a perfect position in front of the camera. Hence, this criterion is satisfied.

4. Ease of operation and shorter user learning cycle: Given the selection made in Stage 1, the use of single-hand, static, sign-language gestures ensured that the commands were simple and easy to learn. When combined with the finely tuned, one-to-one gesture-mapping component developed in Stage 6, natural and accessible drone control was facilitated. Hence, this criterion is satisfied.

5. Computationally inexpensive: Through the computational analysis performed in Stage 5, and the selection of MPH justified in Stage 3, the final solution was specifically selected to be as computationally lightweight as possible. Hence, this criterion is satisfied.

6. Monetarily inexpensive: Given Stage 2’s selection of an inexpensive, non-specialised data-acquisition method, and the low computational requirements of the final algorithm, the final solution is monetarily efficient. Hence, this criterion is satisfied.

4. Discussion

4.1. Principal Findings

4.2. Results Analysis

4.2.1. Gesture-Identifier Selection Analysis

4.2.2. Gesture-Identifier Validation Analysis

4.2.3. Gesture-Classifier Selection Analysis

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Stage 4—Viewpoint and Pose Example

| Pose | 0° (Front) | 45° (Forty-Five) | 90° (Side) | 180° (Back) |

|---|---|---|---|---|

| Open |  |  |  |  |

| Partial |  |  |  |  |

| Closed |  |  |  |  |

Appendix B. Stage 5—Classification Analysis Data Set

| Gesture Identifier | Gesture Reference Image | Models |

| 1 |  |  |

| 2 |  |  |

| 3 |  |  |

| 4 |  |  |

| 5 |  |  |

| 6 |  |  |

| 7 |  |  |

| 8 |  |  |

Appendix C. Stage 5—Classification Raw Confusion Data

| Linear | |||||||

| 4 | 1 | 1 | 2 | 0 | 0 | 2 | 0 |

| 0 | 10 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 6 | 4 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 10 | 0 | 0 | 0 | 0 |

| 0 | 1 | 0 | 1 | 7 | 0 | 0 | 1 |

| 0 | 0 | 0 | 7 | 0 | 3 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 0 | 10 | 0 |

| 1 | 0 | 3 | 0 | 0 | 0 | 0 | 6 |

| ANN | |||||||

| 10 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 5 | 0 | 0 | 0 | 5 | 0 | 0 |

| 1 | 0 | 5 | 1 | 0 | 0 | 3 | 0 |

| 0 | 0 | 0 | 10 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 10 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 10 | 0 | 0 |

| 0 | 0 | 0 | 0 | 4 | 0 | 6 | 0 |

| 0 | 4 | 0 | 0 | 0 | 0 | 0 | 6 |

| SVM | |||||||

| 10 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 10 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2 | 0 | 6 | 1 | 0 | 1 | 0 | 0 |

| 0 | 0 | 0 | 10 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 9 | 1 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 10 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 0 | 2 | 8 |

| 0 | 2 | 0 | 0 | 0 | 0 | 0 | 8 |

| Bounds-Based | |||||||

| 10 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 10 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 10 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 10 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 10 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 10 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 0 | 10 | 0 |

| 2 | 0 | 0 | 0 | 1 | 0 | 0 | 7 |
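Overall accuracy can be read off the raw confusion data above as the diagonal total divided by the grand total. The sketch below transcribes the four 8 × 8 matrices as listed. Treating rows as the presented gesture and columns as the predicted gesture is an assumption (each row sums to 10), although the overall figure does not depend on it.

```python
CONFUSION = {
    "Linear": [
        [4, 1, 1, 2, 0, 0, 2, 0],
        [0, 10, 0, 0, 0, 0, 0, 0],
        [0, 0, 6, 4, 0, 0, 0, 0],
        [0, 0, 0, 10, 0, 0, 0, 0],
        [0, 1, 0, 1, 7, 0, 0, 1],
        [0, 0, 0, 7, 0, 3, 0, 0],
        [0, 0, 0, 0, 0, 0, 10, 0],
        [1, 0, 3, 0, 0, 0, 0, 6],
    ],
    "ANN": [
        [10, 0, 0, 0, 0, 0, 0, 0],
        [0, 5, 0, 0, 0, 5, 0, 0],
        [1, 0, 5, 1, 0, 0, 3, 0],
        [0, 0, 0, 10, 0, 0, 0, 0],
        [0, 0, 0, 0, 10, 0, 0, 0],
        [0, 0, 0, 0, 0, 10, 0, 0],
        [0, 0, 0, 0, 4, 0, 6, 0],
        [0, 4, 0, 0, 0, 0, 0, 6],
    ],
    "SVM": [
        [10, 0, 0, 0, 0, 0, 0, 0],
        [0, 10, 0, 0, 0, 0, 0, 0],
        [2, 0, 6, 1, 0, 1, 0, 0],
        [0, 0, 0, 10, 0, 0, 0, 0],
        [0, 0, 0, 0, 9, 1, 0, 0],
        [0, 0, 0, 0, 0, 10, 0, 0],
        [0, 0, 0, 0, 0, 0, 2, 8],
        [0, 2, 0, 0, 0, 0, 0, 8],
    ],
    "Bounds-Based": [
        [10, 0, 0, 0, 0, 0, 0, 0],
        [0, 10, 0, 0, 0, 0, 0, 0],
        [0, 0, 10, 0, 0, 0, 0, 0],
        [0, 0, 0, 10, 0, 0, 0, 0],
        [0, 0, 0, 0, 10, 0, 0, 0],
        [0, 0, 0, 0, 0, 10, 0, 0],
        [0, 0, 0, 0, 0, 0, 10, 0],
        [2, 0, 0, 0, 1, 0, 0, 7],
    ],
}


def overall_accuracy(matrix):
    """Fraction of samples on the diagonal of a square confusion matrix."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


ACCURACY = {name: overall_accuracy(m) for name, m in CONFUSION.items()}
```

With the values as listed, this gives 70.0% (Linear), 77.5% (ANN), 81.25% (SVM), and 96.25% (Bounds-Based).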

Appendix D. Code Methods

Appendix E

| Category | Specification | Value |

|---|---|---|

| Aircraft | Flight Weight | 80 g |

| Aircraft | Dimensions | 98 × 92 × 41 mm |

| Aircraft | Propeller Diameter | 76.2 mm |

| Aircraft | Built-in Functions | Range Finder, Barometer, LED, Vision System, 2.4 GHz 802.11n Wi-Fi |

| Aircraft | Electrical Interface | Micro USB Charging Port |

| Flight Performance | Maximum Flight Range | 100 m |

| Flight Performance | Maximum Flight Time | 13 min |

| Flight Performance | Maximum Speed | 8 m/s |

| Flight Performance | Maximum Height | 30 m |

| Battery | Detachable Battery | 3.8 V–1.1 Ah |

| Camera | Photo | 5 MP (2592 × 1936) |

| Camera | FOV | 82.6° |

| Camera | Video | HD720P30 |

| Camera | Format | JPG (Photo), MP4 (Video) |

| Camera | EIS | Yes |

References

1. Mahmood, M.; Rizwan, M.F.; Sultana, M.; Habib, M.; Imam, M.H. Design of a Low-Cost Hand Gesture Controlled Automated Wheelchair. In Proceedings of the 2020 IEEE Region 10 Symposium (TENSYMP), Dhaka, Bangladesh, 5–7 June 2020; pp. 1379–1382.

2. Posada-Gomez, R.; Sanchez-Medel, L.H.; Hernandez, G.A.; Martinez-Sibaja, A.; Aguilar-Laserre, A.; Leija-Salas, L. A Hands Gesture System of Control for an Intelligent Wheelchair. In Proceedings of the 2007 4th International Conference on Electrical and Electronics Engineering, Bursa, Turkey, 5–7 September 2007; pp. 68–71.

3. Hu, B.; Wang, J. Deep Learning Based Hand Gesture Recognition and UAV Flight Controls. Int. J. Autom. Comput. 2020, 17, 17–29.

4. Lavanya, K.N.; Shree, D.R.; Nischitha, B.R.; Asha, T.; Gururaj, C. Gesture Controlled Robot. In Proceedings of the 2017 International Conference on Electrical, Electronics, Communication, Computer, and Optimization Techniques (ICEECCOT), Mysore, India, 15–16 December 2017; pp. 465–469.

5. Premaratne, P.; Nguyen, Q.; Premaratne, M. Human Computer Interaction Using Hand Gestures. In Advanced Intelligent Computing Theories and Applications; Springer: Berlin/Heidelberg, Germany, 2010; pp. 381–386.

6. Mitra, S.; Acharya, T. Gesture Recognition: A Survey. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2007, 37, 311–324.

7. Guo, L.; Lu, Z.; Yao, L. Human-machine interaction sensing technology based on hand gesture recognition: A review. IEEE Trans. Hum.-Mach. Syst. 2021, 51, 300–309.

8. Liu, H.; Wang, L. Gesture recognition for human-robot collaboration: A review. Int. J. Ind. Ergon. 2018, 68, 355–367.

9. Damaneh, M.M.; Mohanna, F.; Jafari, P. Static hand gesture recognition in sign language based on convolutional neural network with feature extraction method using ORB descriptor and Gabor filter. Expert Syst. Appl. 2023, 211, 118559.

10. Ma, Y.; Liu, Y.; Jin, R.; Yuan, X.; Sekha, R.; Wilson, S.; Vaidyanathan, R. Hand Gesture Recognition with Convolutional Neural Networks for the Multimodal UAV Control. In Proceedings of the 2019 Workshop on Research, Education and Development of Unmanned Aerial Systems (RED-UAS), Cranfield, UK, 25–27 November 2019; pp. 198–203.

11. Yoo, M.; Na, Y.; Song, H.; Kim, G.; Yun, J.; Kim, S.; Moon, C.; Jo, K. Motion Estimation and Hand Gesture Recognition-Based Human–UAV Interaction Approach in Real Time. Sensors 2022, 22, 2513.

12. Yeh, Y.-P.; Cheng, S.-J.; Shen, C.-H. Research on Intuitive Gesture Recognition Control and Navigation System of UAV. In Proceedings of the 2022 IEEE 5th International Conference on Knowledge Innovation and Invention (ICKII), Hualien, Taiwan, 22–24 July 2022; pp. 5–8.

13. Tsai, C.-C.; Kuo, C.-C.; Chen, Y.-L. 3D Hand Gesture Recognition for Drone Control in Unity. In Proceedings of the 2020 IEEE 16th International Conference on Automation Science and Engineering (CASE), Hong Kong, China, 20–21 August 2020; pp. 985–988.

14. Lee, J.-W.; Yu, K.-H. Wearable Drone Controller: Machine Learning-Based Hand Gesture Recognition and Vibrotactile Feedback. Sensors 2023, 23, 2666.

15. Jiang, S.; Kang, P.; Song, X.; Lo, B.P.L.; Shull, P.B. Emerging wearable interfaces and algorithms for hand gesture recognition: A survey. IEEE Rev. Biomed. Eng. 2022, 15, 85–102.

16. Rautaray, S.S.; Agrawal, A. Vision Based Hand Gesture Recognition for Human-Computer Interaction: A survey. Artif. Intell. Rev. 2015, 43, 1–54.

17. Aggarwal, J.K.; Ryoo, M.S. Human Activity Analysis: A Review. ACM Comput. Surv. 2011, 43, 16:1–16:43.

18. Zhang, F.; Bazarevsky, V.; Vakunov, A.; Tkachenka, A.; Sung, G.; Chang, C.L.; Grundmann, M. MediaPipe Hands: On-device real-time hand tracking. arXiv 2020, arXiv:2006.10204.

19. Moon, G.; Yu, S.-I.; Wen, H.; Shiratori, T.; Lee, K.M. InterHand2.6M: A dataset and baseline for 3D interacting hand pose estimation from a single RGB image. In Computer Vision-ECCV 2020 (Lecture Notes in Computer Science); Springer: Berlin/Heidelberg, Germany, 2020; pp. 548–564.

20. Ge, L.; Ren, Z.; Li, Y.; Xue, Z.; Wang, Y.; Cai, J.; Yuan, J. 3D hand shape and pose estimation from a single RGB image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10833–10842.

21. Jindal, M.; Bajal, E.; Sharma, S. A Comparative Analysis of Established Techniques and Their Applications in the Field of Gesture Detection. In Machine Learning Algorithms and Applications in Engineering; CRC Press: Boca Raton, FL, USA, 2023; p. 73.

22. Yasen, M.; Jusoh, S. A systematic review on hand gesture recognition techniques, challenges and applications. PeerJ Comput. Sci. 2019, 5, e218.

23. American Sign Language. Wikipedia. Available online: https://en.wikipedia.org/wiki/American_Sign_Language (accessed on 3 April 2023).

24. Oudah, M.; Al-Naji, A.; Chahl, J. Hand Gesture Recognition Based on Computer Vision: A Review of Techniques. J. Imaging 2020, 6, 73.

25. Balestra, J.; (Climbit Physio, Belmont, WA, Australia). Personal communication, 2022.

26. Xu, X.; Zhang, X.; Fu, H.; Chen, L.; Zhang, H.; Fu, X. Robust Passive Autofocus System for Mobile Phone Camera Applications. Comput. Electr. Eng. 2014, 40, 1353–1362.

27. Bhushan, S.; Alshehri, M.; Keshta, I.; Chakraverti, A.K.; Rajpurohit, J.; Abugabah, A. An Experimental Analysis of Various Machine Learning Algorithms for Hand Gesture Recognition. Electronics 2022, 11, 968.

28. Gadekallu, T.R.; Srivastava, G.; Liyanage, M.; Iyapparaja, M.; Chowdhary, C.L.; Koppu, S.; Maddikunta, P.K.R. Hand Gesture Recognition Based on a Harris Hawks Optimized Convolution Neural Network. Comput. Electr. Eng. 2022, 100, 107836.

29. Katsuki, Y.; Yamakawa, Y.; Ishikawa, M. High-speed human/robot hand interaction system. In Proceedings of the HRIACM/IEEE International Conference on Human-Robot Interaction System, Portland, OR, USA, 2–5 March 2015; pp. 117–118.

30. MediaPipe. MediaPipeHands [SourceCode]. Available online: https://github.com/google/mediapipe/tree/master/mediapipe/python/solutions (accessed on 21 April 2022).

31. CVZone. HandTrackingModule [SourceCode]. Available online: https://github.com/cvzone/cvzone/blob/master/cvzone/HandTrackingModule.py (accessed on 23 May 2022).

32. Soumotanu Mazumdar. Sign-Language-Detection [SourceCode]. Available online: https://github.com/FortunateSpy5/sign-language-detection (accessed on 21 August 2022).

33. Dmitry Manoshin. Gesture_Recognition [SourceCode]. Available online: https://github.com/manosh7n/gesture_recognition (accessed on 9 August 2022).

34. Halder, A.; Tayade, A. Real-time vernacular sign language recognition using mediapipe and machine learning. Int. J. Res. Publ. Rev. 2021, 2, 9–17. Available online: https://scholar.google.com/scholar?as_q=Real-time+vernacular+sign+language+recognition+using+mediapipe+and+machine+learning&as_occt=title&hl=en&as_sdt=0%2C31 (accessed on 16 August 2022).

35. Damia F Escote. DJITelloPy [SourceCode]. Available online: https://github.com/damiafuentes/DJITelloPy (accessed on 2 April 2022).

36. Hamilton, G.F.; Lachenbruch, P.A. Reliability of Goniometers in Assessing Finger Joint Angle. Phys. Ther. 1969, 49, 465–469.

37. TELLO SPECS. RYZE. Available online: https://www.ryzerobotics.com/tello/specs (accessed on 25 May 2023).

38. Tello. Wikipedia. Available online: https://de.wikipedia.org/wiki/Tello_(Drohne) (accessed on 25 May 2023).

| Joint Number | Thumb | Index | Middle | Ring | Pinkie |

|---|---|---|---|---|---|

| J1 | 162 | 178 | 175 | 178 | 178 |

| J2 | 177 | 172 | 172 | 170 | 172 |

| J3 | 180 | 177 | 180 | 180 | 180 |

| Joint Number | Thumb | Index | Middle | Ring | Pinkie |

|---|---|---|---|---|---|

| J1 | 153 | 168 | 168 | 170 | 173 |

| J2 | 157 | 93 | 92 | 87 | 97 |

| J3 | 118 | 113 | 107 | 117 | 113 |

| Joint Number | Thumb | Index | Middle | Ring | Pinkie |

|---|---|---|---|---|---|

| J1 | 148 | 98 | 98 | 107 | 107 |

| J2 | 143 | 82 | 83 | 82 | 90 |

| J3 | 117 | 110 | 108 | 108 | 112 |

| Joint Number | Thumb 3D | Thumb 2D | Index 3D | Index 2D | Middle 3D | Middle 2D | Ring 3D | Ring 2D | Pinkie 3D | Pinkie 2D |

|---|---|---|---|---|---|---|---|---|---|---|

| J1 | 151 | 156 | 142 | 142 | 144 | 144 | 142 | 140 | 132 | 128 |

| J2 | 151 | 151 | 53 | 54 | 47 | 48 | 34 | 35 | 40 | 41 |

| J3 | 105 | 100 | 164 | 161 | 127 | 115 | 136 | 128 | 147 | 143 |

| Joint Number | Thumb 3D | Thumb 2D | Index 3D | Index 2D | Middle 3D | Middle 2D | Ring 3D | Ring 2D | Pinkie 3D | Pinkie 2D |

|---|---|---|---|---|---|---|---|---|---|---|

| J1 | 140 | 138 | 133 | 157 | 123 | 133 | 112 | 112 | 91 | 85 |

| J2 | 144 | 150 | 78 | 63 | 73 | 63 | 66 | 67 | 83 | 89 |

| J3 | 147 | 148 | 133 | 132 | 120 | 123 | 120 | 120 | 118 | 111 |

| Joint Number | Thumb 3D | Thumb 2D | Index 3D | Index 2D | Middle 3D | Middle 2D | Ring 3D | Ring 2D | Pinkie 3D | Pinkie 2D |

|---|---|---|---|---|---|---|---|---|---|---|

| J1 | 143 | 149 | 74 | 76 | 73 | 73 | 76 | 75 | 83 | 82 |

| J2 | 154 | 163 | 107 | 103 | 95 | 94 | 92 | 93 | 95 | 95 |

| J3 | 163 | 163 | 113 | 108 | 113 | 113 | 114 | 113 | 113 | 110 |

| Joint Number | Thumb 3D | Thumb 2D | Index 3D | Index 2D | Middle 3D | Middle 2D | Ring 3D | Ring 2D | Pinkie 3D | Pinkie 2D |

|---|---|---|---|---|---|---|---|---|---|---|

| J1 | 159 | 161 | 125 | 135 | 121 | 156 | 116 | 148 | 108 | 118 |

| J2 | 154 | 154 | 75 | 25 | 90 | 20 | 97 | 37 | 94 | 54 |

| J3 | 105 | 105 | 163 | 170 | 155 | 173 | 143 | 170 | 157 | 163 |

| Joint Number | Thumb 3D | Thumb 2D | Index 3D | Index 2D | Middle 3D | Middle 2D | Ring 3D | Ring 2D | Pinkie 3D | Pinkie 2D |

|---|---|---|---|---|---|---|---|---|---|---|

| J1 | 152 | 154 | 145 | 156 | 154 | 164 | 161 | 176 | 156 | 160 |

| J2 | 161 | 161 | 119 | 97 | 99 | 60 | 103 | 75 | 110 | 94 |

| J3 | 108 | 105 | 124 | 98 | 138 | 132 | 126 | 116 | 122 | 115 |

| Joint Number | Thumb 3D | Thumb 2D | Index 3D | Index 2D | Middle 3D | Middle 2D | Ring 3D | Ring 2D | Pinkie 3D | Pinkie 2D |

|---|---|---|---|---|---|---|---|---|---|---|

| J1 | 141 | 142 | 145 | 166 | 158 | 171 | 165 | 170 | 151 | 151 |

| J2 | 164 | 167 | 100 | 87 | 85 | 76 | 78 | 74 | 92 | 88 |

| J3 | 132 | 132 | 129 | 126 | 127 | 124 | 127 | 128 | 134 | 135 |

| Joint Number | Thumb 3D | Thumb 2D | Index 3D | Index 2D | Middle 3D | Middle 2D | Ring 3D | Ring 2D | Pinkie 3D | Pinkie 2D |

|---|---|---|---|---|---|---|---|---|---|---|

| J1 | 145 | 134 | 111 | 121 | 113 | 114 | 116 | 115 | 110 | 115 |

| J2 | 142 | 146 | 113 | 113 | 107 | 106 | 104 | 104 | 121 | 122 |

| J3 | 161 | 160 | 139 | 132 | 133 | 132 | 135 | 134 | 145 | 146 |

| Joint Number | Thumb 3D | Thumb 2D | Index 3D | Index 2D | Middle 3D | Middle 2D | Ring 3D | Ring 2D | Pinkie 3D | Pinkie 2D |

|---|---|---|---|---|---|---|---|---|---|---|

| J1 | 160 | 163 | 151 | 159 | 162 | 169 | 153 | 159 | 144 | 150 |

| J2 | 153 | 153 | 100 | 89 | 84 | 38 | 91 | 63 | 102 | 85 |

| J3 | 118 | 116 | 151 | 98 | 156 | 153 | 154 | 135 | 146 | 121 |

| Joint Number | Thumb 3D | Thumb 2D | Index 3D | Index 2D | Middle 3D | Middle 2D | Ring 3D | Ring 2D | Pinkie 3D | Pinkie 2D |

|---|---|---|---|---|---|---|---|---|---|---|

| J1 | 159 | 165 | 163 | 166 | 168 | 172 | 171 | 179 | 166 | 170 |

| J2 | 175 | 175 | 168 | 175 | 169 | 178 | 171 | 173 | 171 | 171 |

| J3 | 166 | 166 | 177 | 178 | 178 | 179 | 174 | 177 | 172 | 174 |

| Joint Number | Thumb 3D | Thumb 2D | Index 3D | Index 2D | Middle 3D | Middle 2D | Ring 3D | Ring 2D | Pinkie 3D | Pinkie 2D |

|---|---|---|---|---|---|---|---|---|---|---|

| J1 | 149 | 149 | 151 | 167 | 164 | 171 | 171 | 172 | 168 | 169 |

| J2 | 171 | 176 | 165 | 173 | 163 | 171 | 163 | 164 | 157 | 157 |

| J3 | 169 | 170 | 175 | 175 | 175 | 175 | 175 | 175 | 172 | 172 |

| Joint Number | Thumb 3D | Thumb 2D | Index 3D | Index 2D | Middle 3D | Middle 2D | Ring 3D | Ring 2D | Pinkie 3D | Pinkie 2D |

|---|---|---|---|---|---|---|---|---|---|---|

| J1 | 163 | 159 | 140 | 164 | 147 | 168 | 163 | 171 | 161 | 168 |

| J2 | 169 | 174 | 165 | 169 | 157 | 169 | 161 | 171 | 169 | 172 |

| J3 | 174 | 168 | 167 | 177 | 176 | 178 | 172 | 174 | 170 | 172 |

| Joint Number | Thumb 3D | Thumb 2D | Index 3D | Index 2D | Middle 3D | Middle 2D | Ring 3D | Ring 2D | Pinkie 3D | Pinkie 2D |

|---|---|---|---|---|---|---|---|---|---|---|

| J1 | 159 | 164 | 161 | 161 | 172 | 177 | 162 | 164 | 152 | 154 |

| J2 | 176 | 177 | 174 | 176 | 171 | 177 | 175 | 179 | 168 | 175 |

| J3 | 168 | 169 | 174 | 177 | 177 | 178 | 175 | 176 | 175 | 176 |

| Hand Position | Thumb Avg | Thumb Min | Index Avg | Index Min | Middle Avg | Middle Min | Ring Avg | Ring Min | Pinkie Avg | Pinkie Min |

|---|---|---|---|---|---|---|---|---|---|---|

| Open | 96.2% | 92.0% | 93.8% | 78.7% | 95.7% | 84.0% | 95.7% | 91.0% | 94.4% | 85.4% |

| Partial | 92.2% | 63.6% | 82.0% | 66.1% | 82.6% | 54.2% | 86.2% | 68.2% | 82.6% | 63.6% |

| Closed | 89.6% | 60.7% | 72.3% | 50.9% | 77.0% | 53.1% | 78.5% | 41.5% | 82.3% | 44.4% |

| Hand Position | Thumb Avg | Thumb Min | Index Avg | Index Min | Middle Avg | Middle Min | Ring Avg | Ring Min | Pinkie Avg | Pinkie Min |

|---|---|---|---|---|---|---|---|---|---|---|

| Open | 96.2% | 92.0% | 95.6% | 84.3% | 96.8% | 89.1% | 96.2% | 92.1% | 95.4% | 86.5% |

| Partial | 91.2% | 64.4% | 88.9% | 72.0% | 77.6% | 41.3% | 86.8% | 67.6% | 85.4% | 66.5% |

| Closed | 88.5% | 60.7% | 57% | 9.8% | 59.7% | 16.9% | 66.4% | 22.0% | 76.6% | 38.9% |

| Hand Position | Front Avg | Front Min | Forty-Five Avg | Forty-Five Min | Side Avg | Side Min | Back Avg | Back Min |

|---|---|---|---|---|---|---|---|---|

| Open | 96.8% | 91.6% | 94.5% | 84.8% | 93.2% | 78.7% | 96.0% | 85.4% |

| Partial | 88.6% | 71.0% | 90.0% | 81.3% | 76.1% | 63.6% | 85.7% | 54.2% |

| Closed | 67.8% | 41.5% | 86.3% | 64.3% | 84.8% | 60.7% | 80.7% | 51.8% |

| Hand Position | Front Avg | Front Min | Forty-Five Avg | Forty-Five Min | Side Avg | Side Min | Back Avg | Back Min |

|---|---|---|---|---|---|---|---|---|

| Open | 97.6% | 92.2% | 96.3% | 91.2% | 94.1% | 84.3% | 96.2% | 86.5% |

| Partial | 91.3% | 65.2% | 90.3% | 80.5% | 76.9% | 64.4% | 85.6% | 41.3% |

| Closed | 53.2% | 9.8% | 81.9% | 39.8% | 84.9% | 60.7% | 58.0% | 24.1% |

| Gesture Identifier | Command |

|---|---|

| 1 | Move along z axis (forward velocity) |

| 2 | Move along −z axis (backward velocity) |

| 3 | Move along y axis (upward velocity) |

| 4 | Move along −y axis (downward velocity) |

| 5 | Move along x axis (bank right) |

| 6 | Move along −x axis (bank left) |

| 7 | Set required movement along the x, y, and z axes to zero (stop) |

| 8 | Take-off or land (depending on whether in flight, or landed) |

| Gesture Identifier | Command |

|---|---|

| 1 | Increase velocity along z axis (forward velocity) |

| 2 | Increase velocity along −z axis (backward velocity) |

| 3 | Increase velocity along y axis (upward velocity) |

| 4 | Increase velocity along −y axis (downward velocity) |

| 5 | Increase velocity along x axis (bank right) |

| 6 | Increase velocity along −x axis (bank left) |

| 7 | Set velocity along the x, y, and z axes to zero (stop) |

| 8 | Take-off or land (depending on whether in flight or landed) |
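The velocity-increment mapping listed above can be sketched as a small state update. This is an illustration under stated assumptions, not the authors' controller: the step size of 10, the symmetric limit of 100, the `DroneState` type, and the choice to ignore velocity gestures while landed are all hypothetical.

```python
from dataclasses import dataclass

STEP = 10    # hypothetical velocity increment per recognised gesture
LIMIT = 100  # assumed symmetric velocity limit

# Gesture identifier -> (dx, dy, dz) velocity increment, following the
# mapping table: z forward/backward, y up/down, x right/left.
INCREMENTS = {
    1: (0, 0, STEP), 2: (0, 0, -STEP),
    3: (0, STEP, 0), 4: (0, -STEP, 0),
    5: (STEP, 0, 0), 6: (-STEP, 0, 0),
}


@dataclass
class DroneState:
    vx: int = 0
    vy: int = 0
    vz: int = 0
    in_flight: bool = False


def clamp(value, limit=LIMIT):
    """Restrict a velocity to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


def apply_gesture(state, gesture_id):
    """Return the new state after one recognised gesture (1-8)."""
    if gesture_id not in range(1, 9):
        raise ValueError(f"unknown gesture identifier: {gesture_id}")
    if gesture_id == 8:  # take-off or land, depending on flight status
        return DroneState(0, 0, 0, not state.in_flight)
    if not state.in_flight:
        return state  # assumption: ignore velocity commands while landed
    if gesture_id == 7:  # stop
        return DroneState(0, 0, 0, True)
    dx, dy, dz = INCREMENTS[gesture_id]
    return DroneState(clamp(state.vx + dx), clamp(state.vy + dy),
                      clamp(state.vz + dz), True)
```

Keeping the update as a pure function of the previous state makes the mapping easy to test without a drone attached; the resulting velocities would then be forwarded to whatever flight interface is in use.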

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Khaksar, S.; Checker, L.; Borazjan, B.; Murray, I. Design and Evaluation of an Alternative Control for a Quad-Rotor Drone Using Hand-Gesture Recognition. Sensors 2023, 23, 5462. https://doi.org/10.3390/s23125462