1. Introduction

Integral imaging is a well-known technique for visualizing and recognizing three-dimensional objects. Since Lippmann proposed integral imaging technology in 1908 [

1], it has been actively studied in various fields, including 3D image recording, 3D visualization, and 3D object recognition [

2,

3,

4,

5,

6,

7,

8,

9,

10,

11,

12,

13,

14,

15]. Specifically, computational integral imaging exhibits several advantages over traditional 3D imaging techniques, such as the benefit of a full parallax with white light and continuous viewpoints, without the need to wear eyeglasses. It provides a more immersive and realistic viewing experience in 3D visualization and virtual reality applications.

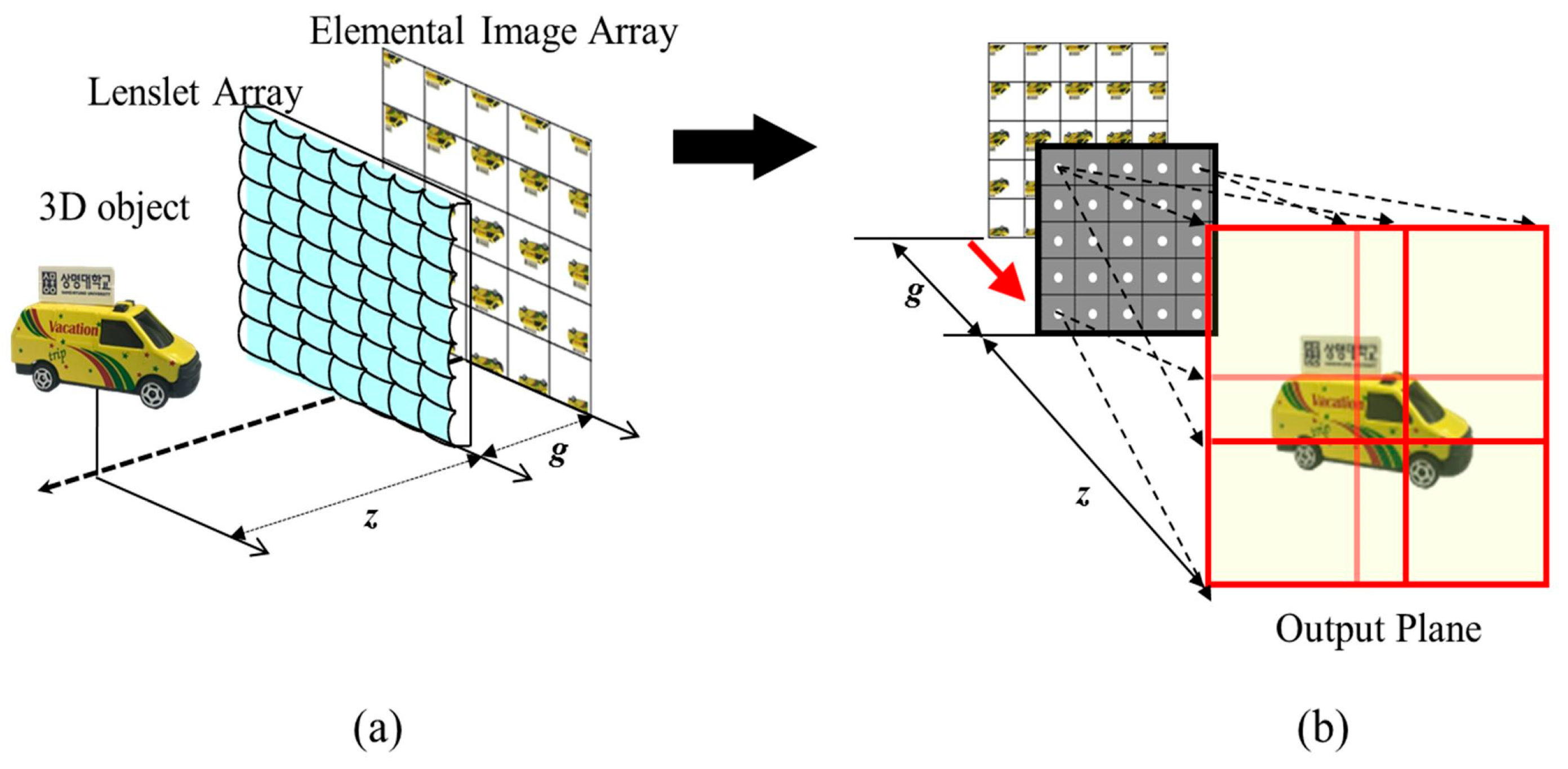

The computational integral imaging system is composed of a pickup process and a reconstruction process, as shown in

Figure 1. In the pickup process, an image camera captures rays coming from a three-dimensional object and passing through a lenslet array. These recorded rays are known as an elemental image array (EIA). The reconstruction process generates 3D images from the EIA by employing a computational integral imaging reconstruction (CIIR) method. This CIIR method overcomes optical limitations such as lens aberrations and barrel distortion, producing 3D volume images for recognizing 3D objects and estimating their depths.

Generally, computational integral imaging reconstruction (CIIR) methods are based on back projection [

16,

17,

18,

19,

20,

21,

22,

23,

24,

25,

26,

27,

28,

29,

30,

31,

32,

33,

34,

35,

36,

37,

38,

39,

40]. The principle of back projection involves projecting 2D elemental images onto a 3D space and overlapping each projected image at a reconstruction image plane [

16,

17,

18,

19]. Back projection-based CIIR methods, owing to their straightforward model for ray optics, have been extensively researched for the improvement of 3D imagery [

16,

17,

18,

19,

20,

21,

22,

23,

24,

25,

26,

27,

28,

29,

30,

31,

32,

33,

34,

35,

36,

37,

38,

39,

40]. These methods can be categorized into pixel-mapping-based projection, windowing-based projection, and convolution-based projection. Pixel mapping methods project each pixel of an elemental image array into 3D space through a pinhole array [

20,

21,

22,

23,

24,

25], reducing computational costs and improving the visual quality of the reconstructed images. Windowing methods project weighted elemental images into a 3D space, with windowing functions being defined from a signal model of CIIR. The signal model enhances the visual quality of the reconstructed images by eliminating blurring and lens array artifacts [

26,

27]. Recently, CIIR methods utilizing convolution and the delta function have been introduced to acquire depth information, offering improvements in reconstructed image quality and control over depth resolution [

28,

29,

30,

31,

32,

33,

34,

35]. In addition, CIIR methods using a tilted elemental image array have been proposed to enhance image quality [

36,

37]. A depth-controlled computational reconstruction using sub-images or continuously non-uniform shifting pixels was proposed to achieve improved depth resolution and image quality [

38,

39], and a deep learning-based integral imaging system that uses a pre-trained Mask R-CNN was suggested to avoid blurry areas that are out of focus [

40].

Existing methods of computational integral imaging reconstruction (CIIR) typically involve magnification, overlapping, and normalization processes. However, to reduce computational costs, magnification can be replaced with shifting processes. The overlapping process is necessary and cannot be eliminated. The normalization process is also necessary to correct for uneven overlapping artifacts. However, this process demands a significant amount of memory and computing time, equivalent to that of the overlapping process. As the size of each elemental image increases, memory usage and processing time also increase, making this method unsuitable for real-time applications.

In this paper, we present a novel method for computational integral imaging reconstruction (CIIR) using elemental image blending. Our proposed technique eliminates the need for the normalization process to compensate for uneven overlapping artifacts, resulting in decreased memory usage and computational time compared to those of existing methods. We conducted an analysis of the impact of elemental image blending on a CIIR method using windowing techniques. Our model and analysis show that the proposed method is theoretically superior to the standard CIIR method. Additionally, we performed computer simulations and optical experiments to evaluate the proposed method. The experimental results indicate that compared to existing CIIR methods, our method exhibits approximately half the memory usage, along with and improved processing speed. Moreover, the proposed method enhances the image quality over that of the standard CIIR method.

2. Conventional Computational Integral Imaging Reconstruction

The conventional CIIR method is depicted in

Figure 2. The model is expanded by the window model to explain existing techniques using the same model. Each elemental image is subjected to a window function, moved according to the shift factor, and then overlapped. The number of overlaps within the reconstructed image plane may be inconsistent, prompting the execution of a normalization process to remove the uneven overlapping artifact. By repeatedly carrying out these operations along the

z-axis, we can achieve a volumetric reconstruction of a 3D object.

However, the standard CIIR method exhibits some artifacts that lead to a decline in visual quality. During the overlapping process, uneven overlapping artifacts—also known as granular noise—emerge in the reconstructed images [

26]. Furthermore, blurring artifacts appear in the out-of-focus areas, and lenslet artifacts arise due to the lenslet’s shape. As the lenslet shape is rectangular, the reconstructed images appear blocky. The window-based CIIR method has been proposed as a means to enhance the quality of reconstructed images by eliminating these artifacts [

26,

27]. This CIIR approach incorporates a window function in the overlapping process, using a signal model.

The standard CIIR method can be viewed as a CIIR approach that employs a rectangular window. In this case, the rectangular window can be substituted with a different window, such as a triangular or cubic one. Switching the rectangular window to a triangular window reduces blurring artifacts and lenslet artifacts, enhancing the image quality of the reconstructed image [

27]. The primary cause of these artifacts is the discontinuity in the windows. Thus, to minimize artifacts, a smooth and continuous window should be implemented to eliminate discontinuity and produce seamlessly reconstructed signals.

To explain the signal model of integral imaging, we introduce our previously proposed signal model [

26].

Figure 3a is an optical model using a 1D pinhole array of an integral imaging system.

Figure 3b is a model that abstracts the integral imaging system by introducing a rectangular window function. Here,

fz(

x) is the intensity signal of an object located at the distance z from the lens array, and

rz(

x) is the reconstructed signal at the distance z obtained by the back-projecting and overlapping of the picked-up EIA passing through the virtual pinhole array. The EIA pickup process is described as a procedure that windows, inverts, and downscales the

fz(

x) signal. On the contrary, the reconstruction process is explained as a process that re-inverts, upscales, and overlaps these elemental images. This means that the inversion and scaling effects disappear, leaving only the windowing and overlapping effects. It thus allows for the reconstruction model to be described as a simplified model in

Figure 3b.

Based on the model in

Figure 3b, the relationship between the original signal

fz(

x) and its reconstructed signal

rz(

x) is written as

where

πi(

x/w)

= π0((

x-i⍺)/

w), ⍺ represents the size of each elemental image,

N represents the number of elemental images, and

w represents the size of the window

π0(

x). Here, the shifted window function (SWF)

πi(

x) is a shifted version of the window function

π0(

x), where the function

π0(

x) = 1, for 0 ≤

x ≤ 1, and zero otherwise. In SWF, the shifting factor

s is a multiple of the elemental signal length ⍺; that is,

s =

i·⍺. The sum of the shifted windows is

Sπ(

x), and from Equation (1), the original signal

fz(

x) is obtained as

fz(

x) =

rz(

x)/

Sπ(

x). Here, the normalization process is considered by the division of a reconstructed image by the summation of SWFs in the window-based CIIR method.

For example,

Figure 4a shows nine shifted window functions (SWFs) using a rectangular window function, while

Figure 5a displays nine SWFs derived from a triangular window function. The sum of these SWFs, represented as

Sπ(

x), is highlighted in red at the bottom of each figure. The function

πNi(

x) in

Figure 4b represents the normalized window function of

πi(

x), achieved by dividing each rectangular window function

πi(

x) by

Sπ(

x); hence,

πNi(

x) =

πi(

x)/

Sπ(

x). As seen in the lower section of

Figure 4b, the sum of these normalized SWFs equals one.

Figure 5b echoes this concept, presenting normalized SWFs for the triangular window function. It can be observed that the window functions in

Figure 5a are simply translated versions of

πi(

x); however, their normalized window functions can be different due to the shape of the function

Sπ(

x), as illustrated in

Figure 5b. The differences are particularly noticeable in

πN0(

x) and

πN8(

x). In conclusion, applying normalized window functions to the EIA eliminates the need for the normalization process of the CIIR. Therefore, the development of a method using normalized window functions is important.

3. Proposed CIIR Model via Elemental Image Blending

A computational reconstruction method for integral imaging systems is proposed by using elemental image blending. Conventional CIIR methods require a compensation process to normalize the reconstructed images due to uneven overlapping. The normalization process is performed by dividing the reconstructed images by the summation of the SWFs. This process requires additional memory because it needs to record the overlapping numbers for all pixels of the reconstructed images. To eliminate the normalization process, we introduce elemental image blending. Here, the overlapping process is modified with elemental image blending; thus, the normalization process can be canceled out. It turns out that our method saves approximately half of the memory, consequently improving the computation speed. In this section, we describe the proposed method and provide a mathematical analysis of the impact of the elemental image blending technique on CIIR using the window signal model.

Figure 6 depicts the proposed CIIR method based on elemental image blending. The flow of the proposed method is as follows: First, two elemental images,

and

, are obtained, and then overlapping is performed using elemental image blending. The resulting image is then overwritten onto the reconstruction buffer. This process is repeated for all the elemental images of the EIA. In the proposed method, the reconstruction buffer is a temporal memory that stores the overlapped image

of the two elemental images,

and

. The elemental image

is extracted from the reconstruction buffer, while

is obtained from

elemental image in EIA. As depicted in

Figure 6a, the proposed method consists of the overlapping process using image blending, the extraction process of the elemental image

, and the overwriting process of

, as explained in the following paragraphs.

Figure 6b describes the process of overlapping two images,

and

, using image blending. In the overlapping process, there are areas where the two input images overlap and areas where they do not. The area where two images overlap, with blending, is referred to as the blending area. Let the parameter

be the width of the input images, and the parameter

be the shift factor. The overlapping range in

is from

to

, while the overlapping range in

is from 0 to

. Thus, the blending area and the non-blending area are separable. The blending area is blended by the well-known alpha-blending for elemental image blending. We choose the alpha-blending as the elemental image blending due to its simplicity and effectiveness. The blended image is stored in

from

to

. The non-blending areas of

and

are copied in

from 0 to

and in

from

to

, respectively. Thus, the images of each area are merged to output

Figure 6c illustrates the process of extracting

from the reconstruction buffer. The overlapping results are stored in the reconstruction buffer, and thus the extracted image of size

from this buffer is different from the elemental image. In the initial reconstruction buffer, the elemental image located at the leftmost position in EIA is written as

The initial queue pointer,

, points to the 0th column of the reconstruction buffer. The image of size

, extracted from

, is defined as

. As the overlapping and overwriting process repeats, the queue point is updated.

can be obtained by extracting an image of

in size from

.

Figure 6d illustrates the process of overwriting

in the reconstruction buffer.

, the overlapping image of

and

, is overwritten onto the buffer, starting from

. Here

is the position obtained by moving

by

. As the overlapping and overwriting processes are repeated, the queue pointer is updated as

When is input in the overwriting process, the queue pointer is updated, and is overwritten onto the buffer starting from .

We also provide the flowchart of the proposed method, as shown in

Figure 7. The proposed method employs horizontal overlapping for each row of the EIA, as shown in

Figure 7a. Vertical overlapping is applied to the resulting images after horizontal overlapping, which is achieved by the use of horizontal overlapping of the resulting transposed images.

Figure 7b depicts the flowchart of the overlapping process. The first elemental image,

, is written in the reconstruction buffer. Subsequently,

is extracted from the reconstruction buffer, and

is fetched from the EIA for image blending. This process is repeated until the final elemental image is blended in the image blending step.

The proposed method of the overlapping and overwriting processes, as described above, can eliminate the normalization process. Here, we mathematically explain how the normalization process can be eliminated and how our elemental image blending affects the image quality of the reconstructed image.

To analyze the overall CIIR model using elemental image blending, elemental image blending is represented by windowing an elemental image. Here, alpha-blending was used as an example. When two elemental image signals are alpha-blended, two weighted signals,

and

, are represented as two windowing functions for two elemental images, which are written as

As the elemental images overlap, the weights of the signals change due to overlapping, as shown in

Figure 8. Accordingly, the weights after overlapping can be represented in different windowing functions. Let the weight of the first elemental image signal be

. It is then defined as the product of the shifted function of

,

Similarly, the weight of the

signal,

, is defined as

which is the product of

and the shifted function of

when

is between 2 and

. As shown in

Figure 8, the

signal is required for image blending with the next elemental image; thus, its overlap decreases as

increases from

to the end.

According to the formulas, the alpha blending signal for each elemental image can be represented in shifted window functions, as shown in

Figure 9. Since the summation of window functions is a unit, the original signal can be reconstructed without compensation processes, such as normalization. Note that the form of each window in

Figure 9 is a continuous and smooth window. According to the window theory mentioned in

Section 2, a continuous window is excellent for removing lenslet artifacts, and it reduces blurring artifacts that occur in the standard CIIR method, which utilizes the rectangular window. Therefore, the proposed method using elemental image blending provides better image quality than does the standard CIIR, while also and requiring less memory and computing time.

4. Experiment Results and Discussion

To demonstrate the usefulness of our proposed method, we conducted optical and computational experiments.

Figure 10 illustrates the optical experimental setup consisting of lenslets and a CCD camera, along with the acquired EIAs in that environment. The size and focal length of each lenslet is 1.08 mm and 5.2 mm, respectively. The CCD camera in use is the Canon EOS 800D model, equipped with an APS-C size 1.6× crop sensor and an effective pixel count of 24.2 million pixels. Two 3D objects, green and yellow cars, were used in the experiment. The EIA for the green car comprises 32 × 32 elemental images, with each elemental image consisting of 32 × 32 pixels. The EIA for the yellow car consists of 45 × 34 elemental images, and each elemental image has a resolution of 58 × 58 pixels. The resolution of the elemental images can be controlled by adjusting the distance between the camera and the lens array. The location of the 3D objects,

, is around 20 mm. We reconstructed the 3D objects using existing CIIRs with rectangular and triangular windows and our proposed CIIR.

Figure 11 shows the reconstructed images of the green car with the output plane locations set at 20 mm and 30 mm. The images in

Figure 11a are reconstructed by the standard CIIR with a rectangular window. In the reconstructed image at 20 mm, there is a blocky area due to lenslet artifacts. Similarly, the reconstructed image at 30 mm also shows blocky areas, and the object boundary is defocused and blurry, as highlighted by the dotted ellipses areas. The images in

Figure 11c are reconstructed by the proposed CIIR. In the reconstructed image at 20 mm, there are no blocky areas, and the object boundary is clean, compared with those of the standard method. The reconstructed image at 30 mm also shows no blocky areas, and the object boundary is relatively sharp. The images in

Figure 11b are reconstructed by the window-based CIIR with a triangular window. The images suffer from less lenslet and blurring artifacts, compared to those obtained by using the CIIR with a rectangular window. Also, they show similar quality to those of the reconstructed images using the proposed CIIR.

Figure 12 shows the reconstructed images of the yellow car with the same locations of the output plane as those in the previous experiment.

Figure 12a shows the images reconstructed by the window-based CIIR with a rectangular window. As can be seen from the enlarged images, the star and text are blurry due to blurring artifacts, and there are blocky areas due to the lenslet artifacts. On the other hand, the image reconstructed by the proposed CIIR using alpha blending, indicated in

Figure 12c, shows reduced blurring artifacts and significantly fewer lenslet artifacts. The image quality of the proposed method is similar to that of the image reconstructed by the window-based CIIR with a triangular window, as shown in

Figure 12b.

We conducted another experiment using the public light field dataset provided by the Heidelberg Collaboratory for Image Processing (HCI) [

41].

Figure 13a,b displays one of the HCI datasets used in our experiment, called ‘bicycle’. As the HCI data are provided in a set of 81 files, we concatenate these into a 2D array to form an EIA suitable for CIIR. Consequently, the newly prepared EIA comprises 9 × 9 elemental images, each of which has a size of 512 × 512 pixels.

Figure 13a presents a magnified section of this EIA.

Note that the characteristics of the HCI data contrast, to some extent, with those of an optical EIA. In general, an EIA directly picked up through a planar lens array exhibits a positive disparity for near objects. The disparity approaches zero as the distance to the objects increases. On the other hand, the disparity of the two nearest elemental images from the HCI data shows positive values for near objects and negative values for distant examples. Moreover, the disparity values present in these HCI images do not exceed 1. These observations can be easily inferred from the elemental image difference, as illustrated in

Figure 13c.

To apply the HCI images to CIIR, an EIA from these HCI images should be prepared. Given that the disparity value is less than one, each elemental image is magnified by image interpolation. For addressing negative disparity values, each elemental image is inverted by image flipping. Subsequently, these elemental images are concatenated into a 2D array to construct the EIA. Once prepared, this EIA could be applied to the CIIR methods, including our proposed method, allowing us to evaluate the experimental results.

Figure 14 illustrates the reconstructed images of the ‘bicycle’ dataset, focusing on near and far objects, respectively. For near focus, the shift factor for CIIR is set to 1 pixel. As performed for the distant focus, elemental images were similarly flipped and magnified twice, and they were then applied to the CIIRs, with a shift factor of 1 pixel.

Figure 14a,b depicts the images reconstructed from the CIIR methods, based on rectangular and triangular window functions, respectively.

Figure 14c shows the images from the proposed method. The areas marked as ① and ③ in

Figure 14 represent the images of objects located at a depth in focus. All three methods show clear objects. Areas marked as ② and ④ in

Figure 14 represent the images of objects positioned at a depth out of focus.

As depicted in

Figure 14c, the images reconstructed from the experiment utilizing the HCI data also show that our method yields relatively sharper images compared to those captured using conventional methods. Notably, this experimental result indicates that our approach significantly broadens the depth of focus relative to the existing methods. Furthermore, the blurring phenomenon in the areas out of focus is reduced in the proposed method, yielding smoother image quality compared to that of the conventional methods.

We repeated a time–memory measurement experiment to demonstrate the usefulness of the proposed method in terms of memory and time efficiency. The experiment was performed by implementing CIIRs with elemental image blending, a rectangular window, a triangular window, and a cubic window in MATLAB R2022a. The experiment used an EIA of 10 × 10 elemental images, with 512 × 512 pixels as the size of each elemental image. The computer used for time measurement was equipped with an Intel® Core™ i7-10700KF CPU @ 3.80 GHz processor and 64 GB RAM.

In this experiment, we conducted CIIRs without magnification in order to minimize memory usage. The window-based CIIR methods require memory for normalization, in which the amount of required memory is the same size as the reconstructed image. In contrast, the proposed CIIR requires only the memory for a reconstructed image. The experiment employed a fixed size for both the elemental image and the number of elemental images. For each CIIR method, the shifting factors were varied with values of 8, 16, 32, and 64.

Figure 15 shows the simulation results as a scatterplot. Typically, memory requirements increase as the shifting parameter increases in CIIR. Moreover, the computational time required follows the order of the rectangular, triangular, and cubic windows, in accordance with the complexity of CIIR. As shown in

Figure 15, the proposed CIIR using alpha blending requires the least time and memory, when compared to the other methods.

A notable point to compare is that the proposed method requires less time and memory than the method using a triangular window, which provides a similar image quality to that of the proposed method in the optical experiment conducted above. The proposed method requires less time than the method using a rectangular window due to the influence of memory access time. Note that the proposed method demands less time and memory than the CIIR employing a triangular window, which achieves similar image quality to that of the proposed method in the above optical experiment.

Therefore, the experimental results show that the proposed CIIR provides reconstructed images with better subjective image quality than the standard CIIR. It also provides reconstructed images with similar image quality to that of the window-based CIIR using a triangular window. In addition, from an objective evaluation perspective, the proposed CIIR method requires less processing time due to less memory usage compared to that of the window-based CIIRs.