Wireless Local Area Networks Threat Detection Using 1D-CNN

Abstract

1. Introduction

- a framework for threat detection in WLANs based on 1D-CNN has been developed, removing the requirement for manual selection of detection features and the creation of a model to profile normal behavior;

- a feature selection method based on the F-value metric was proposed, which indicates which feature contains the most information about the origin of the frame;

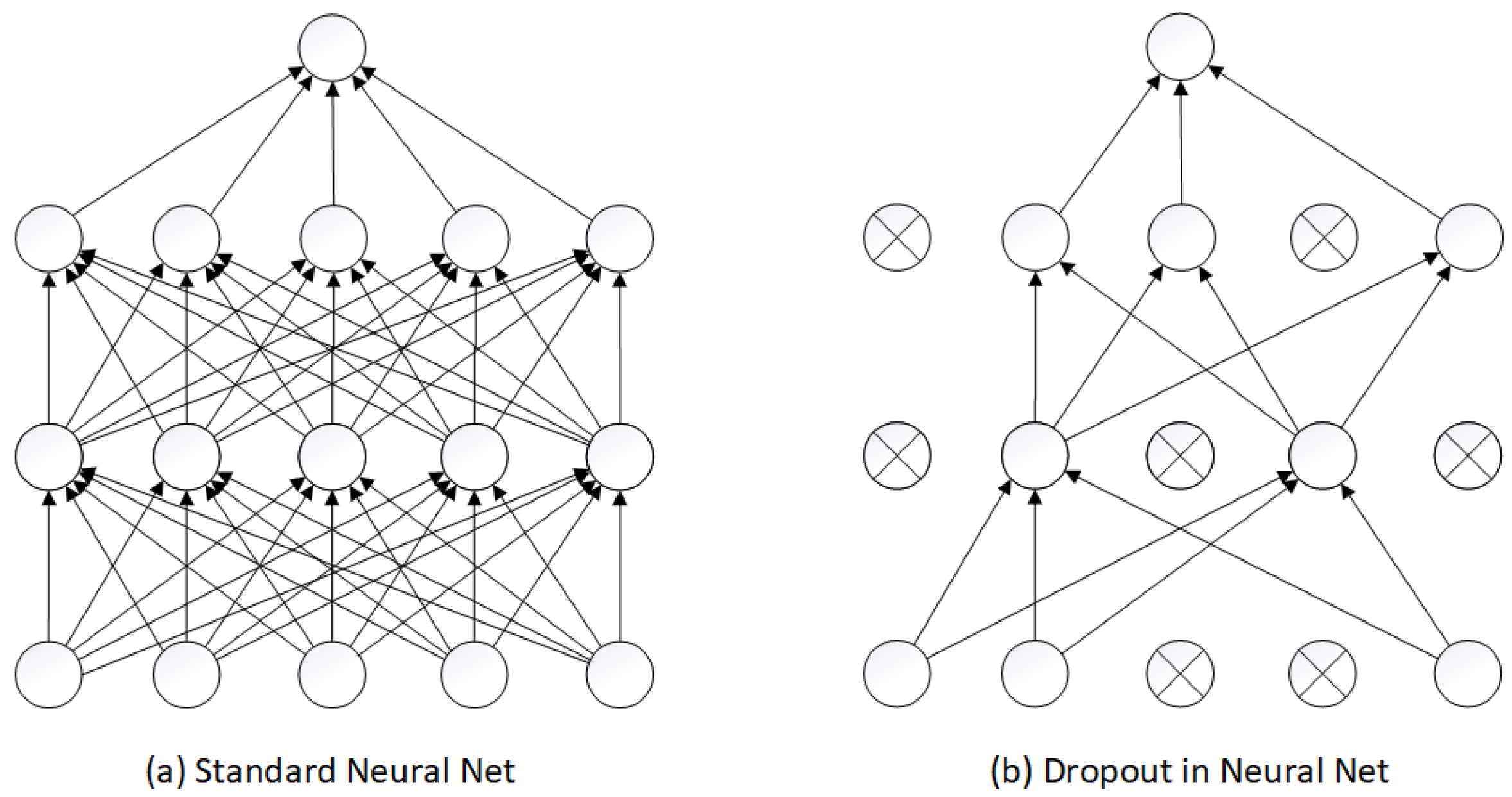

- the dropout regularization technique is used to prevent overfitting during training by increasing the randomness of the parameters, which helps ensure accurate predictions on unseen samples;

- the proposed 1D-CNN model is relatively simple and fast, and thus consumes little energy, as shown by the performance results;

- the model was trained on data drawn from the complete dataset, which was not considered in other works; to the best of our knowledge, this is the first paper to train a model on such a large dataset.

2. State of the Art

3. Possible IEEE 802.11 MAC Layer Threats

3.1. Flooding

- Deauthentication flood: involves sending a large number of deauthentication management frames with a specific destination MAC address. This disconnects the client with that MAC address or, if the broadcast address is used, all clients that receive the frame. The work in [34] shows that even the IEEE 802.11w extension does not completely solve the problems with this attack.

- Disassociation flood: similar to a deauthentication flood, but uses disassociation management frames instead. The result is the same, with clients losing their connection to the network.

- Block Acknowledge flood: effective against IEEE 802.11 networks that use the Block Acknowledge feature. The attacker sends a fake ADDBA message with high sequence numbers on behalf of a real client, causing the AP to reject frames from the STA until the sequence numbers in the ADDBA message are reached. The authors of [35] exploit this vulnerability.

- Authentication request flood: involves sending a large number of authentication request frames, which overloads the AP and can cause it to shut down and drop the wireless network [36].

- Fake Power Saving flood: takes advantage of the Power Saving mechanism by sending a null frame on behalf of the victim with the power saving bit set to 1. The AP starts buffering frames for that client and announces this in the ATIM field of the beacon frame. Because the client is not actually in a sleep state, it ignores all ATIM messages. The AP therefore keeps buffering frames for the victim, which can result in dropped frames [37].

- Clear-to-Send flood: relies on the Request-to-Send (RTS)/Clear-to-Send (CTS) mechanism and involves sending a large number of CTS frames with the duration set to its maximum value. This causes STAs to wait for a transmission that never occurs, preventing other clients from accessing the medium [38].

- Request-to-Send flood: similar to a CTS flood, but involves sending a large number of RTS frames, which also prevents other clients from accessing the medium [38].

- Beacon flood: involves sending multiple beacon frames with different SSIDs, which can confuse end users attempting to connect to the correct network [39].

- Probe Request flood: aims to drain the resources of the AP by sending a large number of probe request frames [40].

- Probe Response flood: involves flooding a victim with a large number of probe response frames after receiving a probe request frame, making it difficult for the victim to connect to the correct network.
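To give a sense of scale for the CTS and RTS floods described above, the following sketch estimates how few frames are needed to keep the medium reserved, which is why even a low frame rate may matter to a detector. It assumes the 15-bit Duration field of IEEE 802.11, whose largest value is 32,767 µs; the function name is illustrative, and frame airtime and contention are ignored.

```python
import math

# Largest value of the 15-bit Duration field, in microseconds (assumption
# stated in the text above; not taken from the paper).
MAX_DURATION_US = 32_767


def frames_per_second_to_hold_medium(duration_us=MAX_DURATION_US):
    """Minimum number of frames per second whose reservations cover a full second."""
    return math.ceil(1_000_000 / duration_us)


rate = frames_per_second_to_hold_medium()
print(rate)
```

Under these simplifying assumptions, a few dozen frames per second are enough, so the volume of such traffic can be small compared with ordinary data traffic.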

3.2. Impersonation

- Honeypot: a wireless network created by an attacker and designed to attract unsuspecting victims. The victim is typically unaware that they are connecting to a network created by the attacker, who then has access to all the security keys and can monitor all the traffic on the network [41].

- Evil Twin: a wireless network created by an attacker that is an exact replica of an existing network used by the victim. The attacker may attempt to lure clients from the legitimate network to their own, and then launch further attacks on the newly associated devices [42].

- Caffe Latte: a method of attacking wireless networks in which direct access to the access point is not necessary. The attacker only needs to be in proximity to a device that has already authenticated to the target network. The attacker creates an identical copy of the network and tricks the device into connecting to it, and then uses ARP Request injection to collect enough initialization vectors (IVs) to crack the WEP key.

- Hirte: an extension of the Caffe Latte attack in which ARP packets are fragmented to collect even more IVs from the connected device, making it easier to crack the WEP key.

3.3. Injection

- ARP Injection: involves injecting a fake ARP Request into the wireless network. The targeted STA, whose IP address matches the request, responds with an ARP response, thereby producing more IVs that can be used to crack the WEP key.

- Fragmentation: the attacker first performs a fake authentication with the Access Point (AP) and then receives at least one frame. Since the Logical Link Control (LLC) header has many known fields, the attacker can guess the first 8 bytes of the keystream. The attacker then constructs a frame with a known payload, breaks it into fragments, and sends them to the AP with the broadcast address as the destination. The AP processes these fragments, reassembles them, and sends the result to all STAs. Since the content of these fragments is known, the attacker can retrieve the keystream produced by the WEP pseudo-random generation algorithm, which can later be used for various injection attacks.

- Chop-Chop: based on dropping the last byte of an encrypted frame and then guessing a valid Integrity Check Value (ICV) for the truncated frame with the help of the AP. When the attacker injects a truncated frame into the network, the response of the AP reveals whether or not the ICV is valid. Once the attacker has found a valid ICV, they are able to retrieve one byte of the keystream.
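The reason known header fields leak keystream bytes, as mentioned for the fragmentation attack above, is that a stream cipher XORs the plaintext with the keystream. The toy sketch below illustrates only that property: the keystream is random stand-in data rather than real RC4 output, the helper names are hypothetical, and the 8-byte LLC/SNAP header shown is the one that precedes an IPv4 payload.

```python
import os

# LLC/SNAP header preceding an IPv4 payload (8 bytes with well-known values).
LLC_SNAP_IPV4 = bytes.fromhex("aaaa030000000800")


def xor_bytes(a, b):
    """XOR two byte strings up to the length of the shorter one."""
    return bytes(x ^ y for x, y in zip(a, b))


def recover_keystream_prefix(ciphertext, known_plaintext=LLC_SNAP_IPV4):
    """Keystream prefix = ciphertext XOR known plaintext."""
    return xor_bytes(ciphertext[: len(known_plaintext)], known_plaintext)


# Toy demonstration with a random stand-in keystream (not real RC4 output).
keystream = os.urandom(16)
plaintext = LLC_SNAP_IPV4 + b"payload!"
ciphertext = xor_bytes(plaintext, keystream)
recovered = recover_keystream_prefix(ciphertext)
print(recovered == keystream[:8])
```

This also suggests why fields such as `wlan.wep.iv` and `wlan.wep.icv` can carry information about injection traffic.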

4. Dataset Description

4.1. General Description

4.2. Preprocessing Steps

- Every column containing duplicate information or being empty was removed.

- Every column containing non-numeric values, such as SSID, was removed.

- Every column related to the test environment in which the dataset was recorded, such as MAC addresses and SSID names, was removed.

- Not all frames contain all the fields described in the dataset. In such cases, the missing values were replaced with −1: all present fields have positive values, so a negative value indicates the absence of a field.

- The dataset was balanced. Excess normal records were dropped to obtain an approximately 1:1 ratio with the number of attack records.

- Every field was converted to a decimal floating point number.

- The 36 best features were selected using the ANOVA F-value algorithm. ANOVA, or Analysis of Variance, is a statistical technique used to compare the means of different groups. It is often used to test for significant differences between the means of two or more groups. The F-value, also known as the F-ratio, measures the ratio of the variance between groups to the variance within groups. In ANOVA, the F-value is used to determine whether the means of the groups are significantly different from each other. To select features using ANOVA-F, an F-test is performed on each feature, and the features with the highest F-values are selected (see Table 4). This approach is based on the idea that the features with the highest F-values are the ones that are most likely to be important for distinguishing between different groups.

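- The dataset was scaled using MinMaxScaler, which is described by the following equations:

  $$X_{std} = \frac{X - X_{min}}{X_{max} - X_{min}}, \qquad X_{scaled} = X_{std} \cdot (max - min) + min,$$

  where min, max = feature range.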

- Classes were encoded using One-Hot Encoding [43]. Each class was replaced by a vector of the same length as the number of classes, which contained only zeroes. For each class, a zero in its respective vector was replaced with a one, so that each class was assigned a unique vector. For example, three classes A, B, and C would be encoded as [1, 0, 0], [0, 1, 0], and [0, 0, 1].
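Several of the preprocessing steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' pipeline: the helper names, the random down-sampling strategy, the handling of constant columns, and the default feature range of [0, 1] are all hypothetical choices.

```python
import random

MISSING = -1.0  # sentinel for absent fields, as described in the list above


def to_float(value):
    """Convert a raw field to float; empty or absent fields become -1."""
    if value is None or value == "":
        return MISSING
    return float(value)


def balance(records, labels, normal_label="normal", seed=0):
    """Randomly drop normal records so they do not outnumber attack records."""
    rng = random.Random(seed)
    normal = [i for i, y in enumerate(labels) if y == normal_label]
    attack = [i for i, y in enumerate(labels) if y != normal_label]
    if len(normal) > len(attack):
        normal = rng.sample(normal, len(attack))
    keep = sorted(normal + attack)
    return [records[i] for i in keep], [labels[i] for i in keep]


def min_max_scale(column, lo=0.0, hi=1.0):
    """Scale one feature column to the range [lo, hi]."""
    c_min, c_max = min(column), max(column)
    span = c_max - c_min
    if span == 0:  # constant column: map everything to lo (a design choice)
        return [lo for _ in column]
    return [(x - c_min) / span * (hi - lo) + lo for x in column]


def one_hot(labels):
    """Return the sorted class list and one vector per label."""
    classes = sorted(set(labels))
    index = {c: i for i, c in enumerate(classes)}
    vectors = [[1 if index[y] == j else 0 for j in range(len(classes))]
               for y in labels]
    return classes, vectors


print(min_max_scale([to_float(""), to_float("0"), to_float("3")]))
print(one_hot(["normal", "flooding", "normal"]))
```

Note that with this scheme the −1 sentinel becomes the minimum of any column that has missing values, so after scaling it maps to the lower end of the feature range.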

| Feature Name | F-Value | Feature Name | F-Value |
|---|---|---|---|
| wlan.fc.pwrmgt | 653,236.4 | radiotap.channel.type.cck | 83,735.6 |
| radiotap.channel.type.ofdm | 83,234.3 | wlan.wep.icv | 71,694.1 |
| radiotap.datarate | 66,488.8 | wlan.fc.protected | 55,439.5 |
| wlan.duration | 52,483.1 | wlan.wep.key | 46,696.8 |
| wlan.fc.type_subtype | 42,291.6 | wlan.qos.ack | 36,927.5 |
| wlan.qos.amsdupresent | 36,834.8 | wlan.fc.ds | 34,711.1 |
| wlan.qos.tid | 33,638.9 | frame.len | 25,735.4 |
| wlan.qos.eosp | 24,979.2 | data.len | 24,374.6 |
| wlan.frag | 22,569.9 | wlan.seq | 14,606.9 |
| wlan_mgt.fixed.beacon | 14,334.8 | wlan.wep.iv | 12,185.2 |
| wlan.fc.retry | 8792.1 | wlan.fc.frag | 5998.5 |
| wlan.qos.bit4 | 5339.3 | wlan.qos.txop_dur_req | 5339.3 |
| wlan_mgt.fixed.capabilities.cfpoll.ap | 4863.4 | wlan_mgt.tim.bmapctl.multicast | 3528.0 |
| wlan.tkip.extiv | 3300.0 | wlan_mgt.fixed.capabilities.apsd | 3036.0 |
| wlan_mgt.fixed.capabilities.radio_measurement | 3035.8 | wlan_mgt.fixed.capabilities.agility | 3031.4 |
| wlan_mgt.fixed.capabilities.del_blk_ack | 3026.6 | wlan_mgt.fixed.capabilities.pbcc | 3026.1 |
| wlan_mgt.fixed.capabilities.ibss | 3025.2 | wlan_mgt.fixed.capabilities.dsss_ofdm | 3025.2 |
| wlan_mgt.fixed.capabilities.imm_blk_ack | 3025.1 | wlan_mgt.fixed.capabilities.spec_man | 3024.1 |
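The F-values in the table above are one-way ANOVA statistics: the between-class mean square divided by the within-class mean square, computed per feature. The sketch below computes that statistic directly and ranks features by it. The function names and toy data are hypothetical, and the source does not state which implementation was used; libraries such as scikit-learn expose the same statistic through `f_classif` and `SelectKBest`.

```python
def anova_f_value(values, labels):
    """One-way ANOVA F-value of a single feature with respect to class labels.

    Raises ZeroDivisionError if the feature has no within-class variance.
    """
    groups = {}
    for v, y in zip(values, labels):
        groups.setdefault(y, []).append(v)
    n, k = len(values), len(groups)
    grand_mean = sum(values) / n
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2
                     for g in groups.values())
    ss_within = sum((v - sum(g) / len(g)) ** 2
                    for g in groups.values() for v in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def select_top_features(columns, labels, k):
    """Rank named feature columns by F-value and keep the k highest."""
    scores = {name: anova_f_value(col, labels) for name, col in columns.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Toy data: feature "a" separates the two classes well, feature "b" does not.
labels = [0, 0, 0, 1, 1, 1]
columns = {"a": [1, 2, 3, 4, 5, 6], "b": [1, 5, 2, 6, 3, 4]}
print(select_top_features(columns, labels, k=1))
```

In the paper's setting, `k` would be 36 and the columns would be the numeric frame fields remaining after the earlier preprocessing steps.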

4.3. Dataset Division

5. Deep Learning Models

- Multilayer Perceptron (MLP): a model composed of fully connected layers, used, e.g., in regression, classification, and dimensionality reduction.

- CNN: a model composed of convolutional layers, used, e.g., in image processing and classification, and in signal filtering and analysis.

- Long Short-Term Memory (LSTM): a deep learning model with feedback connections that allow it to process whole sequences of data, used, e.g., in handwriting recognition and speech recognition.

- Autoencoder: a deep learning model that consists of two submodels, an encoder and a decoder. Its main goal is to reduce the input to a lower dimension using the encoder and then reconstruct the input data from it using the decoder. It is used, e.g., in dimensionality reduction, data compression and decompression, and signal denoising.

5.1. Convolutional Neural Networks

5.2. Regularization

6. Proposed 1D-CNN Model

7. Model Training and Evaluation

7.1. Environment Overview

- Ubuntu 22.04.2 LTS x86_64

- Python 3.9.12

- numpy 1.20.3

- pandas 1.3.4

- scikit_learn 1.2.1

- tensorflow 2.11.0

- wandb 0.13.10
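When trying to reproduce this environment, it can help to compare the installed package versions against the list above. The following sketch does so with the standard-library `importlib.metadata` module; the distribution names follow PyPI spelling (for example `scikit-learn`), which is an assumption, and the helper name is hypothetical.

```python
from importlib import metadata

# Versions listed above; distribution names follow PyPI spelling (assumption).
EXPECTED = {
    "numpy": "1.20.3",
    "pandas": "1.3.4",
    "scikit-learn": "1.2.1",
    "tensorflow": "2.11.0",
    "wandb": "0.13.10",
}


def check_environment(expected=EXPECTED):
    """Map each package to (expected, installed); installed is None if absent."""
    report = {}
    for name, wanted in expected.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        report[name] = (wanted, installed)
    return report


for name, (wanted, installed) in check_environment().items():
    status = "ok" if installed == wanted else "differs"
    print(f"{name}: expected {wanted}, found {installed} ({status})")
```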

7.2. Obtained Results

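- Precision = $\frac{TP}{TP + FP}$

- Recall = $\frac{TP}{TP + FN}$

- F1 score = $\frac{2 \cdot Precision \cdot Recall}{Precision + Recall}$

- AUC = area under the receiver operating characteristic (ROC) curve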
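Precision, recall, and the F1 score follow the usual definitions in terms of true positives (TP), false positives (FP), and false negatives (FN). A minimal sketch of these definitions is given below; the counts are hypothetical and chosen only to illustrate the arithmetic, and AUC is omitted because it requires the ranked scores of the classifier rather than counts.

```python
def precision(tp, fp):
    """Fraction of predicted positives that are truly positive."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Fraction of actual positives that were detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall."""
    p, r = precision(tp, fp), recall(tp, fn)
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Hypothetical counts, chosen only to illustrate the formulas.
tp, fp, fn = 90, 10, 30
print(precision(tp, fp), recall(tp, fn), f1_score(tp, fp, fn))
```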

8. Discussion

- wlan_mgt.fixed.timestamp,

- wlan_mgt.fixed.capabilities.imm_blk_ack,

- wlan_mgt.fixed.capabilities.del_blk_ack.

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 1D-CNN | one-dimensional Convolutional Neural Network |
| 2D-CNN | two-dimensional Convolutional Neural Network |
| AP | Access Point |
| ARP | Address Resolution Protocol |
| ATIM | Announcement Traffic Indication Message |
| AWID | Aegean Wi-Fi Intrusion Dataset |
| CAE | Contractive Auto-Encoder |
| CDBN | Conditional Deep Belief Network |
| CNN | Convolutional Neural Networks |
| CTS | Clear to Send |
| DDQN | Double Deep Q-Network |
| DRL | Deep Reinforcement Learning |
| FN | False Negative |
| FP | False Positive |
| GPU | Graphics Processing Unit |
| ICV | Integrity Check Value |
| IDSs | Intrusion Detection Systems |
| IoT | Internet of Things |
| IV | Initialization Vector |
| IP | Internet Protocol |
| LLC | Logical Link Control |
| LSTM | Long Short-Term Memory |
| MAC | Medium Access Control |
| MLP | Multilayer Perceptron |
| PHY | Physical |
| RBF | Radial Basis Function |
| RBFNN | Radial Basis Function Neural Network |
| RF | Random Forest |
| RTS | Request to Send |
| SMOTE | Synthetic Minority Over-Sampling TEchnique |
| SSID | Service Set Identifier |
| SSDDQN | Semi-Supervised Double Deep Q-Network |
| SVM | Support Vector Machine |
| TP | True Positive |
| WEP | Wired Equivalent Privacy |
| WLANs | Wireless Local Area Networks |
| WPA | Wi-Fi Protected Access |

References

1. 802.11-2020; IEEE Standard for Information Technology–Telecommunications and Information Exchange between Systems-Local and Metropolitan Area Networks–Specific Requirements-Part 11: Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications. IEEE: New York, NY, USA, 2021.

2. IEEE Std 802.11ba-2021 (Amendment to IEEE Std 802.11-2020 as Amendment by IEEE Std 802.11ax-2021, and IEEE Std 802.11ay-2021); IEEE Standard for Information Technology–Telecommunications and Information Exchange between Systems–Local and Metropolitan Area Networks-Specific Requirements–Part 11: Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications-Amendment 3: Wake-Up Radio Operation. IEEE: New York, NY, USA, 2021; pp. 1–180.

3. Natkaniec, M.; Bieryt, N. An Analysis of the Mixed IEEE 802.11ax Wireless Networks in the 5 GHz Band. Sensors 2023, 23, 4964.

4. Fang, W.; Li, F.; Sun, Y.; Shan, L.; Chen, S.; Chen, C.; Li, M. Information Security of PHY Layer in Wireless Networks. J. Sensors 2016, 2016, 1230387.

5. Vanhoef, M.; Piessens, F. Advanced Wi-Fi attacks using commodity hardware. In Proceedings of the 30th Annual Computer Security Applications Conference, New Orleans, LA, USA, 8–12 December 2014; pp. 256–265.

6. Uszko, K.; Kasprzyk, M.; Natkaniec, M.; Chołda, P. Rule-Based System with Machine Learning Support for Detecting Anomalies in 5G WLANs. Electronics 2023, 12, 2355.

7. Otoum, Y.; Wan, Y.; Nayak, A. Transfer Learning-Driven Intrusion Detection for Internet of Vehicles (IoV). In Proceedings of the 2022 International Wireless Communications and Mobile Computing (IWCMC), Dubrovnik, Croatia, 30 May–3 June 2022; pp. 342–347.

8. Zaza, A.M.; Kharroub, S.K.; Abualsaud, K. Lightweight IoT Malware Detection Solution Using CNN Classification. In Proceedings of the 2020 IEEE 3rd 5G World Forum (5GWF), Bangalore, India, 10–12 September 2020; pp. 212–217.

9. Stryczek, S.; Natkaniec, M. Internet Threat Detection in Smart Grids Based on Network Traffic Analysis Using LSTM, IF, and SVM. Energies 2023, 16, 329.

10. Kolias, C.; Kambourakis, G.; Stavrou, A.; Gritzalis, S. Intrusion detection in 802.11 networks: Empirical evaluation of threats and a public dataset. IEEE Commun. Surv. Tutor. 2016, 18, 184–208.

11. The AWID2 Dataset. Available online: https://icsdweb.aegean.gr/awid/awid2 (accessed on 28 May 2023).

12. Perera Miriya Thanthrige, U.S.K.; Samarabandu, J.; Wang, X. Machine learning techniques for intrusion detection on public dataset. In Proceedings of the 2016 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), Vancouver, BC, Canada, 14–18 May 2016; pp. 1–4.

13. Yang, L.; Li, J.; Yin, L.; Sun, Z.; Zhao, Y.; Li, Z. Real-Time Intrusion Detection in Wireless Network: A Deep Learning-Based Intelligent Mechanism. IEEE Access 2020, 8, 170128–170139.

14. Dong, S.; Xia, Y.; Peng, T. Network Abnormal Traffic Detection Model Based on Semi-Supervised Deep Reinforcement Learning. IEEE Trans. Netw. Serv. Manag. 2021, 18, 4197–4212.

15. Duan, Q.; Wei, X.; Fan, J.; Yu, L.; Hu, Y. CNN-based Intrusion Classification for IEEE 802.11 Wireless Networks. In Proceedings of the 2020 IEEE 6th International Conference on Computer and Communications (ICCC), Chengdu, China, 11–14 December 2020; pp. 830–833.

16. Chen, J.; Yang, T.; He, B.; He, L. An analysis and research on wireless network security dataset. In Proceedings of the 2021 International Conference on Big Data Analysis and Computer Science (BDACS), Kunming, China, 25–27 June 2021; pp. 80–83.

17. Lee, S.J.; Yoo, P.D.; Asyhari, A.T.; Jhi, Y.; Chermak, L.; Yeun, C.Y.; Taha, K. IMPACT: Impersonation Attack Detection via Edge Computing Using Deep Autoencoder and Feature Abstraction. IEEE Access 2020, 8, 65520–65529.

18. Lopez-Martin, M.; Sanchez-Esguevillas, A.; Arribas, J.I.; Carro, B. Network Intrusion Detection Based on Extended RBF Neural Network With Offline Reinforcement Learning. IEEE Access 2021, 9, 153153–153170.

19. Ran, J.; Ji, Y.; Tang, B. A Semi-Supervised Learning Approach to IEEE 802.11 Network Anomaly Detection. In Proceedings of the 2019 IEEE 89th Vehicular Technology Conference (VTC2019-Spring), Kuala Lumpur, Malaysia, 28 April–1 May 2019; pp. 1–5.

20. Alotaibi, B.; Elleithy, K. A majority voting technique for Wireless Intrusion Detection Systems. In Proceedings of the 2016 IEEE Long Island Systems, Applications and Technology Conference (LISAT), Farmingdale, NY, USA, 29 April 2016; pp. 1–6.

21. Feng, G.; Li, B.; Yang, M.; Yan, Z. V-CNN: Data Visualizing based Convolutional Neural Network. In Proceedings of the 2018 IEEE International Conference on Signal Processing, Communications and Computing (ICSPCC), Qingdao, China, 14–16 September 2018; pp. 1–6.

22. Abdulhammed, R.; Faezipour, M.; Abuzneid, A.; Alessa, A. Enhancing Wireless Intrusion Detection Using Machine Learning Classification with Reduced Attribute Sets. In Proceedings of the 2018 14th International Wireless Communications & Mobile Computing Conference (IWCMC), Limassol, Cyprus, 25–29 June 2018; pp. 524–529.

23. Vaca, F.D.; Niyaz, Q. An Ensemble Learning Based Wi-Fi Network Intrusion Detection System (WNIDS). In Proceedings of the 2018 IEEE 17th International Symposium on Network Computing and Applications (NCA), Cambridge, MA, USA, 1–3 November 2018; pp. 1–5.

24. Chatzoglou, E.; Kambourakis, G.; Kolias, C.; Smiliotopoulos, C. Pick Quality Over Quantity: Expert Feature Selection and Data Preprocessing for 802.11 Intrusion Detection Systems. IEEE Access 2022, 10, 64761–64784.

25. The AWID3 Dataset. Available online: https://icsdweb.aegean.gr/awid/awid3 (accessed on 28 May 2023).

26. Chatzoglou, E.; Kambourakis, G.; Kolias, C. Empirical Evaluation of Attacks Against IEEE 802.11 Enterprise Networks: The AWID3 Dataset. IEEE Access 2021, 9, 34188–34205.

27. Zhou, Y.; Cheng, G.; Jiang, S.; Dai, M. Building an efficient intrusion detection system based on feature selection and ensemble classifier. Comput. Netw. 2020, 174, 107247.

28. Asaduzzaman, M.; Rahman, M.M. An Adversarial Approach for Intrusion Detection Using Hybrid Deep Learning Model. In Proceedings of the 2022 International Conference on Information Technology Research and Innovation (ICITRI), Jakarta, Indonesia, 10 November 2022; pp. 18–23.

29. Misbha, D.S. Detection of Attacks using Attention-based Conv-LSTM and Bi-LSTM in Industrial Internet of Things. In Proceedings of the 2022 International Conference on Automation, Computing and Renewable Systems (ICACRS), Pudukkottai, India, 13–15 December 2022; pp. 402–407.

30. García, S.; Grill, M.; Stiborek, J.; Zunino, A. An empirical comparison of botnet detection methods. Comput. Secur. 2014, 45, 100–123.

31. Vanhoef, M. Fragment and Forge: Breaking Wi-Fi Through Frame Aggregation and Fragmentation. In Proceedings of the 30th USENIX Security Symposium; USENIX Association: Berkeley, CA, USA, 2021.

32. Vanhoef, M.; Ronen, E. Dragonblood: Analyzing the Dragonfly Handshake of WPA3 and EAP-pwd. In Proceedings of the 2020 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 18–21 May 2020; pp. 517–533.

33. Chatzoglou, E.; Kambourakis, G.; Kolias, C. How is your Wi-Fi connection today? DoS attacks on WPA3-SAE. J. Inf. Secur. Appl. 2022, 64, 103058.

34. Schepers, D.; Ranganathan, A.; Vanhoef, M. On the Robustness of Wi-Fi Deauthentication Countermeasures; WiSec ’22; Association for Computing Machinery: New York, NY, USA, 2022; pp. 245–256.

35. Gal, Y.; Ghahramani, Z. Bayesian Convolutional Neural Networks with Bernoulli Approximate Variational Inference. arXiv 2015, arXiv:1506.02158.

36. Liu, C.; Yu, J.; Brewster, G. Empirical studies and queuing modeling of denial of service attacks against 802.11 WLANs. In Proceedings of the 2010 IEEE International Symposium on “A World of Wireless, Mobile and Multimedia Networks” (WoWMoM), Montreal, QC, Canada, 14–17 June 2010; pp. 1–9.

37. Meiners, L.F. But…my station is awake! In Power Save Denial of Service in 802.11 Networks; IEEE: New York, NY, USA, 2009.

38. Sawwashere, S.S.; Nimbhorkar, S.U. Survey of RTS-CTS Attacks in Wireless Network. In Proceedings of the 2014 Fourth International Conference on Communication Systems and Network Technologies, Bhopal, India, 7–9 April 2014; pp. 752–755.

39. Martínez, A.; Zurutuza, U.; Uribeetxeberria, R.; Fernández, M.; Lizarraga, J.; Serna, A.; Vélez, I. Beacon Frame Spoofing Attack Detection in IEEE 802.11 Networks. In Proceedings of the 2008 Third International Conference on Availability, Reliability and Security, Barcelona, Spain, 4–7 March 2008; pp. 520–525.

40. Ferreri, F.; Bernaschi, M.; Valcamonici, L. Access points vulnerabilities to DoS attacks in 802.11 networks. In Proceedings of the 2004 IEEE Wireless Communications and Networking Conference (IEEE Cat. No. 04TH8733), Atlanta, GA, USA, 21–25 March 2004; Volume 1, pp. 634–638.

41. Al-Gharabally, N.; El-Sayed, N.; Al-Mulla, S.; Ahmad, I. Wireless honeypots: Survey and assessment. In Proceedings of the 2009 Conference on Information Science, Technology and Applications, Wuhan, China, 4–5 July 2009; pp. 45–52.

42. Song, Y.; Yang, C.; Gu, G. Who is peeping at your passwords at Starbucks? - To catch an evil twin access point. In Proceedings of the 2010 IEEE/IFIP International Conference on Dependable Systems & Networks (DSN), Chicago, IL, USA, 28 June–1 July 2010; pp. 323–332.

43. Hancock, J.T.; Khoshgoftaar, T.M. Survey on categorical data for neural networks. J. Big Data 2020, 7, 28.

44. Rapacz, S.; Chołda, P.; Natkaniec, M. A Method for Fast Selection of Machine-Learning Classifiers for Spam Filtering. Electronics 2021, 10, 2083.

45. Liu, X.; Han, Y.; Du, Y. IoT Device Identification Using Directional Packet Length Sequences and 1D-CNN. Sensors 2022, 22, 8337.

46. Osman, R.A.; Saleh, S.N.; Saleh, Y.N.M. A Novel Interference Avoidance Based on a Distributed Deep Learning Model for 5G-Enabled IoT. Sensors 2021, 21, 6555.

47. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

48. The Proposed CNN Models. Available online: https://github.com/marcinele/awid-ml-models (accessed on 28 May 2023).

| Paper | Model(s) | Dataset(s) | Flooding Detection | Impersonation Detection | Injection Detection | Number of Features | Feature Selection Method | Limitations | Year |
|---|---|---|---|---|---|---|---|---|---|
| [12] | OneR, J48, RF 1, RT 2, AB 3 | AWID2 | + | + | + | 9 | Information Gain and Chi-Squared statistics | Interpretation of the features is missing | 2016 |
| [20] | Bagging, RF, Extra Trees | AWID2 | + | + | + | 20 | Extra Trees | Only a few metrics are considered | 2016 |
| [21] | V-CNN | AWID2 | + | + | + | 71 | N/A | Only a few metrics are considered | 2018 |
| [22] | OneR, J48, RF, NB 4, Bagging, Simple Logistic, ML Perceptron CS | AWID2 | only as Attack | only as Attack | only as Attack | 7 | Correlation Feature Selection with Best First Search | The laboratory-related features are considered | 2018 |
| [23] | Bagging, RF, Extra Trees, XGBoost | AWID2 | + | + | + | 18 | Manual | The laboratory-related features are considered | 2018 |
| [19] | Semi-Supervised Learning | AWID2 | + | + | + | 95 | N/A | Dataset preparation phase is questionable | 2019 |
| [13] | CDBN 5 | AWID2, LITNET | + | − | + | 20 | Stacked Contractive Auto-Encoder | The laboratory-related features are considered | 2020 |
| [15] | CNN 6 | AWID2 | + | + | + | 45 | Manual | Only a few metrics are considered | 2020 |
| [17] | C4.8 | AWID2 | − | + | − | 5 | Stacked Auto-Encoder | One of the top features is based on frame transmission time | 2020 |
| [27] | C4.5, RF, Forest PA | NSL-KDD, AWID2, CIC-IDS2017 | + | + | + | 8 | Correlation-based feature selection Bat algorithm | One of the top features is based on frame transmission time | 2020 |
| [14] | DDQN 7 | AWID2, NSL-KDD | + | + | + | 49 | N/A | Interpretation of the features is missing | 2021 |
| [18] | RBFNN 8, DRL 9+RBFNN | AWID2, NSL-KDD, UNSW-NB15, CICIDS2017, CICDDOS2019 | + | + | + | 58 | Manual | High complexity of deep learning models | 2021 |
| [24] | Light GBM 10 | AWID2, AWID3 | + | + | + | 16 | Stacked Auto-Encoder | Unidentified | 2022 |
| [28] | LSTM 11, CNN | AWID2 | only as Attack | only as Attack | only as Attack | 12 | SHapley Additive exPlanations | One of the top features is based on frame transmission time | 2022 |
| [29] | Conv-LSTM, Bi-LSTM | AWID2, CTU-13 | + | + | + | 50 | N/A | Dataset preprocessing description is missing | 2022 |
| Proposed model | 1D-CNN | AWID2 | + | + | + | 36 | ANOVA F-value | Unidentified | 2023 |

Full Set

| AWID-ATK-F-Trn | Records | AWID-ATK-F-Tst | Records | AWID-CLS-F-Trn | Records | AWID-CLS-F-Tst | Records |
|---|---|---|---|---|---|---|---|
| amok | 12,416 | amok | 3856 | flooding | 1,211,459 | flooding | 197,933 |
| arp | 1,529,284 | arp | 500,823 | impersonation | 1,884,378 | impersonation | 477,514 |
| auth_request | 93,011 | auth_request | 34,833 | injection | 1,530,373 | injection | 523,942 |
| beacon | 170,826 | beacon | 5498 | normal | 157,749,037 | normal | 47,325,477 |
| cafe_latte | 1,860,780 | cafe_latte | 16,719 | | | | |
| deauthentication | 817,954 | chop_chop | 22,879 | | | | |
| evil_twin | 23,598 | cts | 38,359 | | | | |
| fragmentation | 1098 | deauthentication | 33,870 | | | | |
| normal | 157,749,037 | disassociation | 34,871 | | | | |
| probe_response | 117,252 | evil_twin | 27,045 | | | | |
| | | fragmentation | 240 | | | | |
| | | hirte | 433,750 | | | | |
| | | normal | 47,325,477 | | | | |
| | | power_saving | 13,551 | | | | |
| | | probe_request | 10,981 | | | | |
| | | probe_response | 8578 | | | | |
| | | rts | 13,536 | | | | |

Reduced Set

| AWID-ATK-R-Trn | Records | AWID-ATK-R-Tst | Records | AWID-CLS-R-Trn | Records | AWID-CLS-R-Tst | Records |
|---|---|---|---|---|---|---|---|
| amok | 31,180 | amok | 477 | flooding | 48,484 | flooding | 8097 |
| arp | 64,608 | arp | 13,644 | impersonation | 48,522 | impersonation | 20,079 |
| authentication_request | 3500 | beacon | 599 | injection | 65,379 | injection | 16,682 |
| beacon | 1799 | cafe_latte | 379 | normal | 1,633,190 | normal | 530,785 |
| cafe_latte | 45,889 | chop_chop | 2871 | | | | |
| deauthentication | 10,447 | cts | 1759 | | | | |
| evil_twin | 2633 | deauthentication | 4445 | | | | |
| fragmentation | 770 | disassociation | 84 | | | | |
| normal | 1,633,190 | evil_twin | 611 | | | | |
| probe_response | 1558 | fragmentation | 167 | | | | |
| | | hirte | 19,089 | | | | |
| | | normal | 530,785 | | | | |
| | | power_saving | 165 | | | | |
| | | probe_request | 369 | | | | |
| | | rts | 199 | | | | |

| Layers |
|---|
| Input Layer |
| Convolutional 1D (num_of_filters = 128, kernel_size = 1, strides = 1, activation = ReLU, padding = same) |
| Dropout (dropout_probability = 0.5) |
| Convolutional 1D (num_of_filters = 64, kernel_size = 1, strides = 1, activation = ReLU, padding = same) |
| Dropout (dropout_probability = 0.5) |
| Convolutional 1D (num_of_filters = 32, kernel_size = 1, strides = 1, activation = ReLU, padding = same) |
| Dropout (dropout_probability = 0.5) |
| Flatten |
| Dense (units = 100, activation = ReLU, kernel_regularizer = L2 (factor = 0.1)) |
| Output Layer (activation = softmax) |
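To make the claim that the model is relatively simple more concrete, the sketch below counts the trainable parameters of the layer stack listed above. The input shape (36 selected features, one channel) and the number of output units are assumptions, since the table does not state them; the helper names are hypothetical.

```python
def conv1d_params(in_channels, filters, kernel_size=1):
    """Weights plus biases of a 1D convolution."""
    return in_channels * kernel_size * filters + filters


def dense_params(inputs, units):
    """Weights plus biases of a fully connected layer."""
    return inputs * units + units


def count_parameters(seq_len=36, in_channels=1, num_classes=4):
    """Trainable parameters of the layer stack listed above.

    seq_len, in_channels, and num_classes are assumptions: the table does
    not state the input shape or the number of output units.
    """
    total, channels = 0, in_channels
    for filters in (128, 64, 32):
        total += conv1d_params(channels, filters)
        channels = filters  # dropout layers add no parameters
    flat = seq_len * channels  # padding = same and strides = 1 keep the length
    total += dense_params(flat, 100)
    total += dense_params(100, num_classes)
    return total


print(count_parameters())               # four output classes
print(count_parameters(num_classes=2))  # two output classes
```

Under these assumptions, most parameters sit in the dense layer that follows the flatten step, not in the convolutional layers.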

| Hyperparameter | Value |
|---|---|
| Optimizer | Adam |
| Learning rate | $10^{-7}$ |
| Batch size | 50 |
| Number of epochs | 15 |

| Hyperparameter | Value |
|---|---|
| Optimizer | Adam |
| Learning rate | $10^{-6}$ |
| Batch size | 50 |
| Number of epochs | 15 |

| Metric | Value |
|---|---|
| Accuracy | 0.946 |
| Precision | 0.946 |
| Recall | 0.951 |
| F1-score | 0.946 |
| AUC | 0.972 |

| Metric | Value |
|---|---|
| Accuracy | 0.955 |
| Precision | 0.972 |
| Recall | 0.934 |
| F1-score | 0.951 |
| AUC | 0.99 |

| Class | Number of Records | Predicted Records | % Predicted |
|---|---|---|---|
| Normal | 163,319 | 145,881 | 89.32% |
| Attack | 162,385 | 162,333 | 99.96% |

| Class | Number of Records | Predicted Records | % Predicted |
|---|---|---|---|
| Normal | 163,319 | 150,044 | 91.87% |
| Flooding | 48,484 | 48,421 | 99.87% |
| Injection | 65,379 | 65,379 | 100% |
| Impersonation | 48,522 | 47,187 | 97.25% |
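As a consistency check, the overall accuracy can be recomputed from the per-class counts in the two tables above. The sketch assumes that "Predicted Records" means correctly classified records, which matches the "% Predicted" column; the function name is hypothetical.

```python
def overall_accuracy(per_class):
    """per_class maps class name -> (number of records, correctly predicted records)."""
    total = sum(n for n, _ in per_class.values())
    correct = sum(c for _, c in per_class.values())
    return correct / total


binary = {"Normal": (163_319, 145_881), "Attack": (162_385, 162_333)}
multi_class = {
    "Normal": (163_319, 150_044),
    "Flooding": (48_484, 48_421),
    "Injection": (65_379, 65_379),
    "Impersonation": (48_522, 47_187),
}

print(round(overall_accuracy(binary), 3))
print(round(overall_accuracy(multi_class), 3))
```

The results, 0.946 and 0.955, agree with the accuracy values reported for the binary and multi-class models.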

| Model | Accuracy | Precision | Recall | F1-Score | AUC |
|---|---|---|---|---|---|
| 1D-CNN-binary (proposed) | 0.946 | 0.946 | 0.951 | 0.946 | 0.972 |
| 1D-CNN-multi-class (proposed) | 0.955 | 0.972 | 0.934 | 0.951 | 0.99 |
| Random Forest [12] | 0.9512 | 0.91 | - | - | 0.704 |
| SamSelect+SCAE+CDBN [13] | 0.974 | - | 0.976 | 0.971 | 0.978 |
| Double Deep Q-Network [14] | 0.9899 | 0.9699 | - | 0.9325 | - |
| CNN [15] | 0.9984 | - | - | - | - |
| Support Vector Machines (SVM) [17] | 0.9822 | - | 0.9764 | 0.9821 | - |
| DRL+RBFNN [18] | 0.955 | 0.914 | 0.955 | 0.934 | - |
| Random Forest [23] | 0.99096 | 0.96 | 0.96 | 0.95 | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Natkaniec, M.; Bednarz, M. Wireless Local Area Networks Threat Detection Using 1D-CNN. Sensors 2023, 23, 5507. https://doi.org/10.3390/s23125507
