360° Map Establishment and Real-Time Simultaneous Localization and Mapping Based on Equirectangular Projection for Autonomous Driving Vehicles

Abstract

1. Introduction

2. Related Work

2.1. Existing SLAM Designs

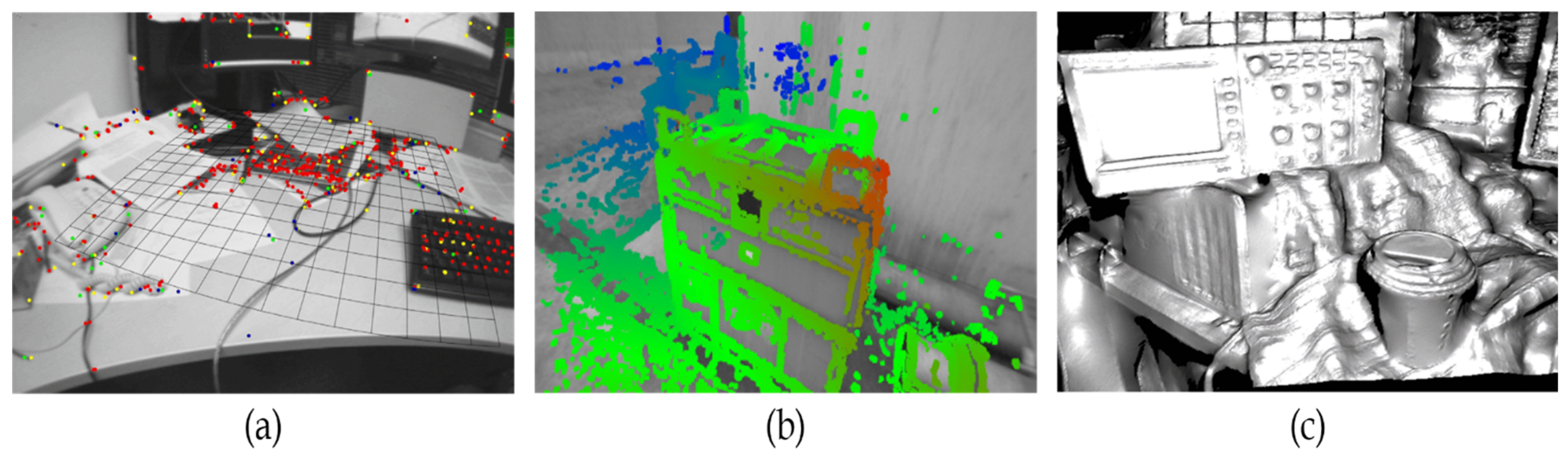

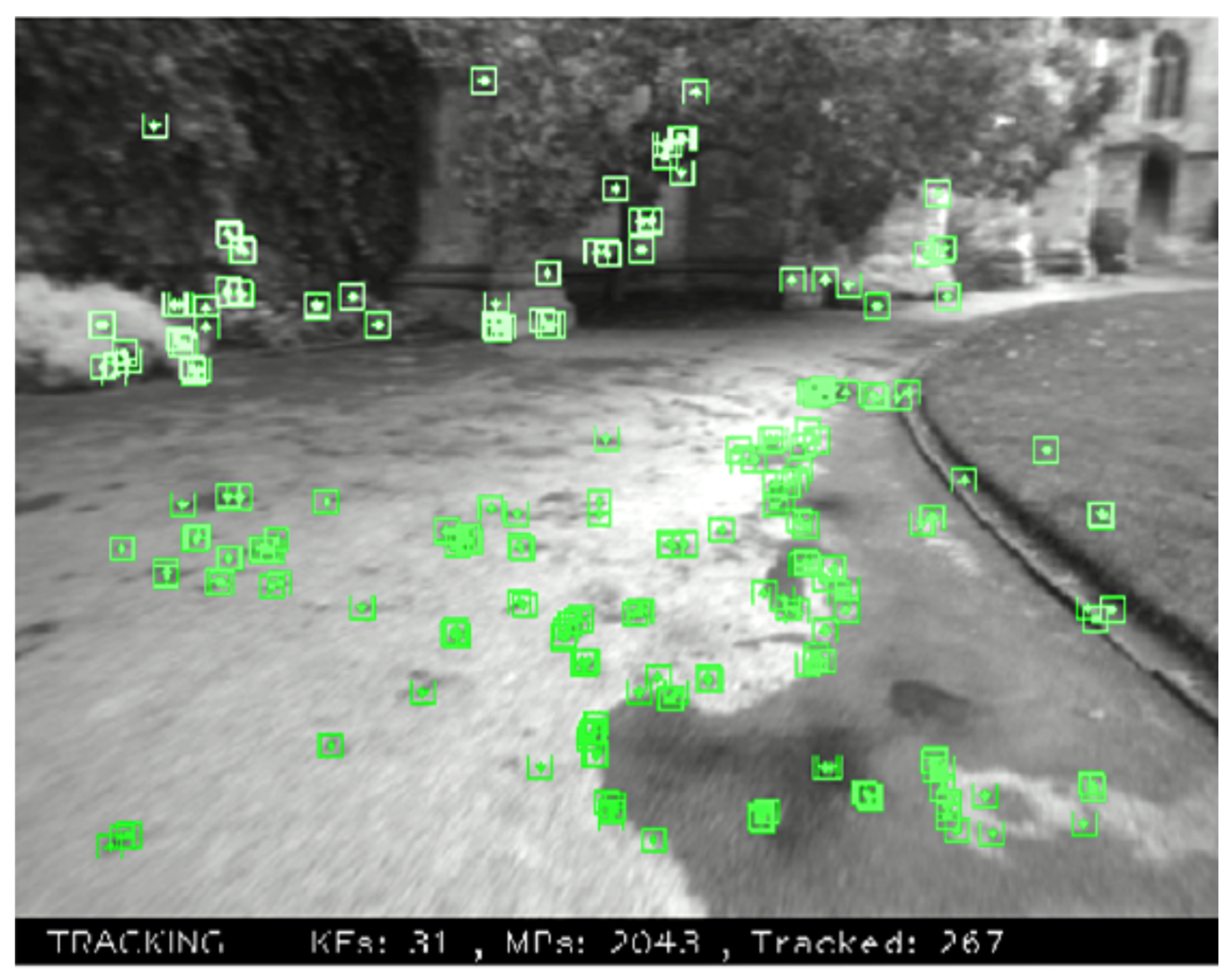

2.1.1. Feature-Based Methods

- (i) The five-point algorithm [19] is used for map initialization.

- (ii) Camera poses are estimated from correspondences between features in the input image and map points.

- (iii) The 3D positions of feature points are estimated by triangulation and refined by bundle adjustment (BA).

- (iv) A randomized tree-based search [20] is used to recover tracking after it has been lost.
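Step (iii) can be illustrated with standard linear (DLT) triangulation of one point seen in two views. The sketch below assumes known 3 × 4 projection matrices and noise-free pixel observations; the functions `project` and `triangulate_dlt` are hypothetical names, and in a real feature-based pipeline the result would only be an initial estimate that BA then refines.

```python
import numpy as np

def project(P, X):
    """Project a 3D point X (shape (3,)) with a 3x4 camera matrix P to pixel coordinates."""
    h = P @ np.append(X, 1.0)
    return h[:2] / h[2]

def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation from two views.

    Each observation (u, v) gives two linear constraints u*p3.X - p1.X = 0 and
    v*p3.X - p2.X = 0 on the homogeneous point X; the solution is the null vector of A.
    """
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    _, _, Vt = np.linalg.svd(A)
    X_h = Vt[-1]                 # right singular vector of the smallest singular value
    return X_h[:3] / X_h[3]      # dehomogenize

# Example: identical intrinsics, second camera shifted 1 unit along x (hypothetical values).
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])
X_true = np.array([0.5, 0.2, 4.0])
X_est = triangulate_dlt(P1, P2, project(P1, X_true), project(P2, X_true))
```

With noisy matches the DLT estimate is no longer exact, which is one reason the triangulated points are subsequently optimized jointly with the camera poses.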

2.1.2. Direct Methods

- (i) Stereo measurement is used to perform map initialization.

- (ii) Camera motion is estimated by comparing the input image with synthetic images generated from the reconstructed map.

- (iii) The depth of every pixel is estimated using multi-baseline stereo and optimized by enforcing spatial continuity.
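Step (iii) relies on multi-baseline stereo, whose key idea is to sum the matching cost over several baselines as a function of inverse depth, so that all views vote for one consistent depth. The sketch below is a simplified 1D illustration, assuming rectified cameras displaced along x, integer disparities, and an SSD window; the function `sssd_inverse_depth` and its parameters are hypothetical and do not reproduce the implementation of any particular system.

```python
import numpy as np

def sssd_inverse_depth(ref, others, baselines, focal, candidates, lo, hi):
    """Sum of SSD over all baselines for each candidate inverse depth.

    ref, others: 1D intensity rows from rectified cameras displaced along x.
    A point at inverse depth zeta appears shifted by focal * B_i * zeta pixels in image i.
    Only reference pixels in [lo, hi) are compared (the matching window).
    """
    xs = np.arange(lo, hi)
    costs = []
    for zeta in candidates:
        total = 0.0
        for img, B in zip(others, baselines):
            d = int(round(focal * B * zeta))   # integer disparity for this baseline
            total += float(np.sum((ref[xs] - img[xs + d]) ** 2))
        costs.append(total)
    return np.array(costs)

# Synthetic example: true inverse depth 2, three baselines (hypothetical values).
rng = np.random.default_rng(0)
ref = rng.random(100)
baselines = [1.0, 2.0, 3.0]
others = [np.roll(ref, int(2 * B)) for B in baselines]  # image i is ref shifted by 2*B_i
candidates = np.arange(0, 6)
costs = sssd_inverse_depth(ref, others, baselines, 1.0, candidates, 20, 60)
best = candidates[np.argmin(costs)]
```

Because the disparity grows with the baseline while the inverse depth is shared, a wrong candidate rarely matches well in every view at once, which reduces the ambiguity a single baseline would have with repetitive texture.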

- (i) The proposed method applies the perspective transformation at an arbitrary yaw angle, which overcomes a drawback of previous methods when dealing with large panoramic images.

- (ii) Because the proposed method applies the perspective transformation at an arbitrary yaw angle, feature extraction and feature matching are faster than in state-of-the-art methods. However, during localization, the proposed SLAM algorithm performs the perspective transformation only on a specific region of the panorama. Other regions, which may also contain meaningful details usable for camera pose estimation, are not covered by the proposed algorithm.
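A perspective transformation at an arbitrary yaw angle can be sketched as a pinhole (gnomonic) reprojection: each pixel of the virtual perspective view defines a ray, the ray is rotated about the vertical axis by the yaw angle, and its longitude and latitude index the equirectangular panorama. The defaults (720 × 480 output, 130° FOV) follow this paper's parameter table; the zero pitch and roll, the axis convention (yaw 0 at the panorama centre, positive yaw turning right), nearest-neighbour sampling, and the function name `perspective_from_equirect` are assumptions for illustration only.

```python
import numpy as np

def perspective_from_equirect(pano, yaw_deg, fov_deg=130.0, out_w=720, out_h=480):
    """Sample a pinhole view at a given yaw from an equirectangular panorama.

    Assumptions (hypothetical, for illustration): zero pitch and roll, yaw 0 looks at
    the panorama's centre column, positive yaw turns right, nearest-neighbour sampling.
    """
    pano_h, pano_w = pano.shape[:2]
    # Focal length in pixels from the horizontal field of view.
    f = (out_w / 2.0) / np.tan(np.radians(fov_deg) / 2.0)
    # Pixel offsets from the image centre: x to the right, y downward.
    x, y = np.meshgrid(np.arange(out_w) - (out_w - 1) / 2.0,
                       np.arange(out_h) - (out_h - 1) / 2.0)
    z = np.full_like(x, f)  # every ray points forward along +z before rotation
    # Rotate the rays about the vertical axis by the yaw angle.
    yaw = np.radians(yaw_deg)
    xr = x * np.cos(yaw) + z * np.sin(yaw)
    zr = -x * np.sin(yaw) + z * np.cos(yaw)
    # Spherical angles: longitude in [-pi, pi], latitude in [-pi/2, pi/2] (positive = down).
    lon = np.arctan2(xr, zr)
    lat = np.arctan2(y, np.hypot(xr, zr))
    # Map the angles to panorama pixel indices; columns wrap around 360 degrees.
    px = ((lon / (2.0 * np.pi) + 0.5) * pano_w).astype(int) % pano_w
    py = np.clip(((lat / np.pi + 0.5) * pano_h).astype(int), 0, pano_h - 1)
    return pano[py, px]
```

Because the column index wraps modulo the panorama width, any yaw angle, including views that straddle the left and right edges of the panorama, is handled without special cases. A production version would more likely use bilinear interpolation and precompute the lookup maps once per yaw angle.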

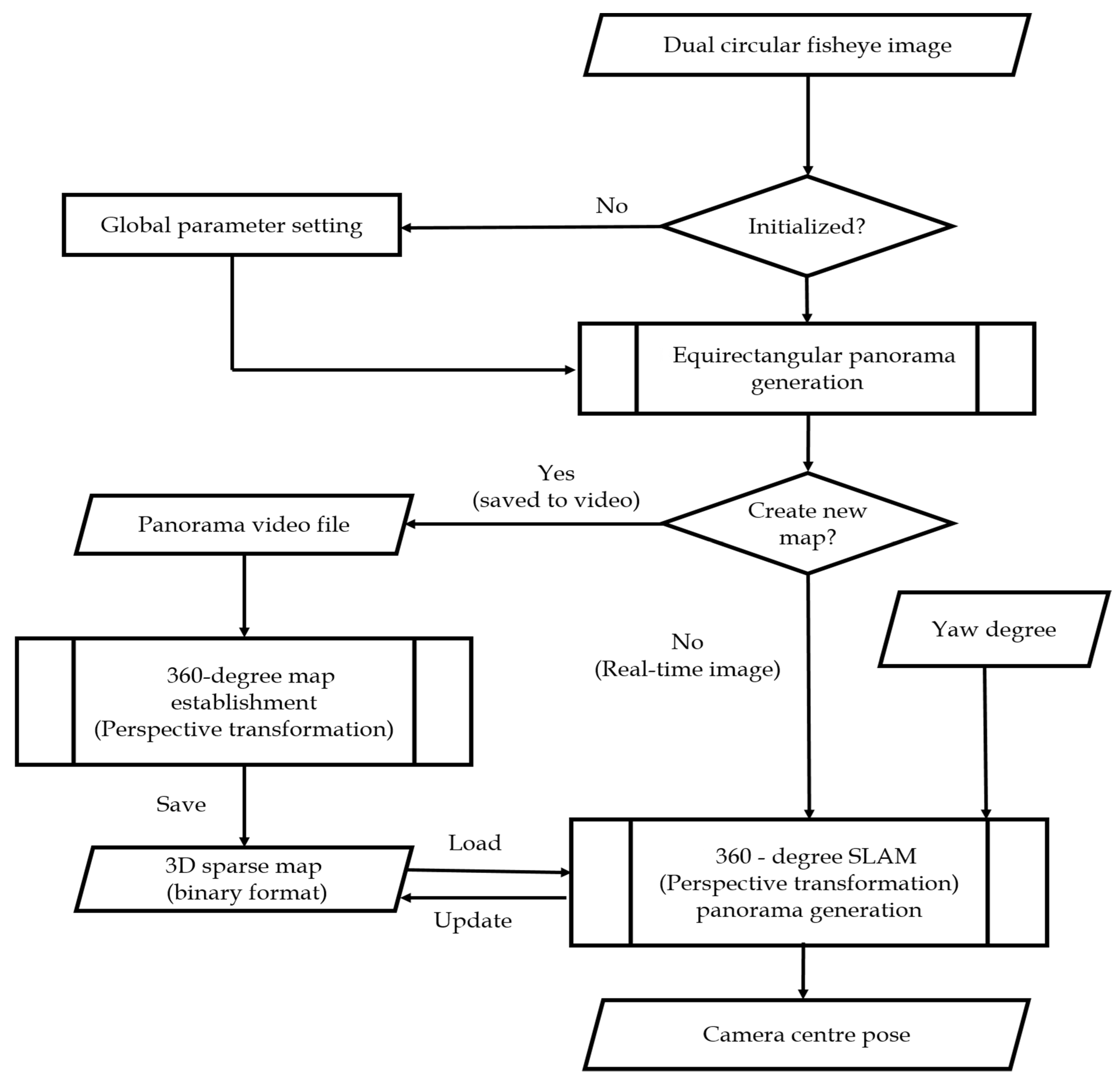

3. Proposed Model

3.1. Global Parameter Setting

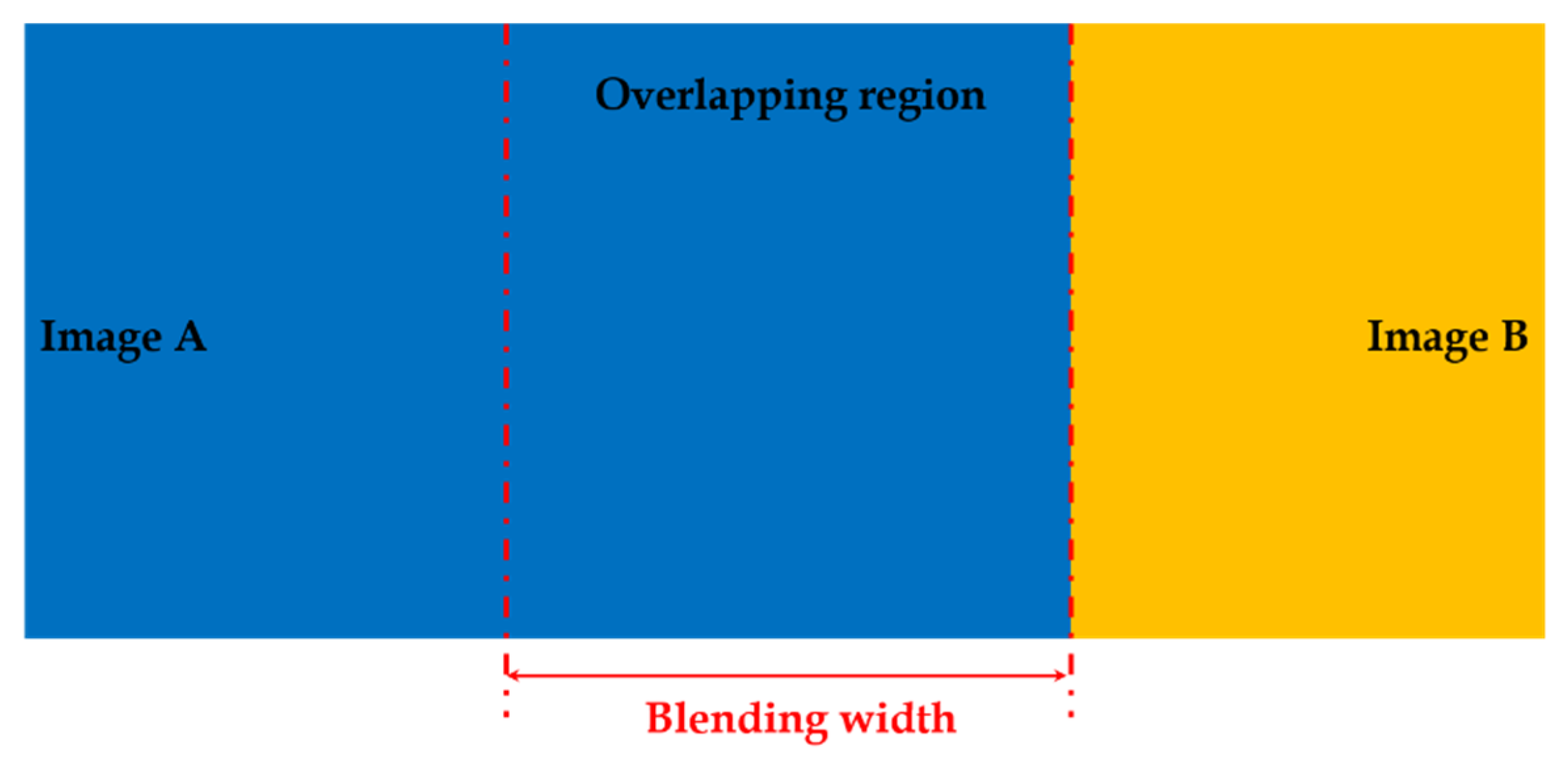

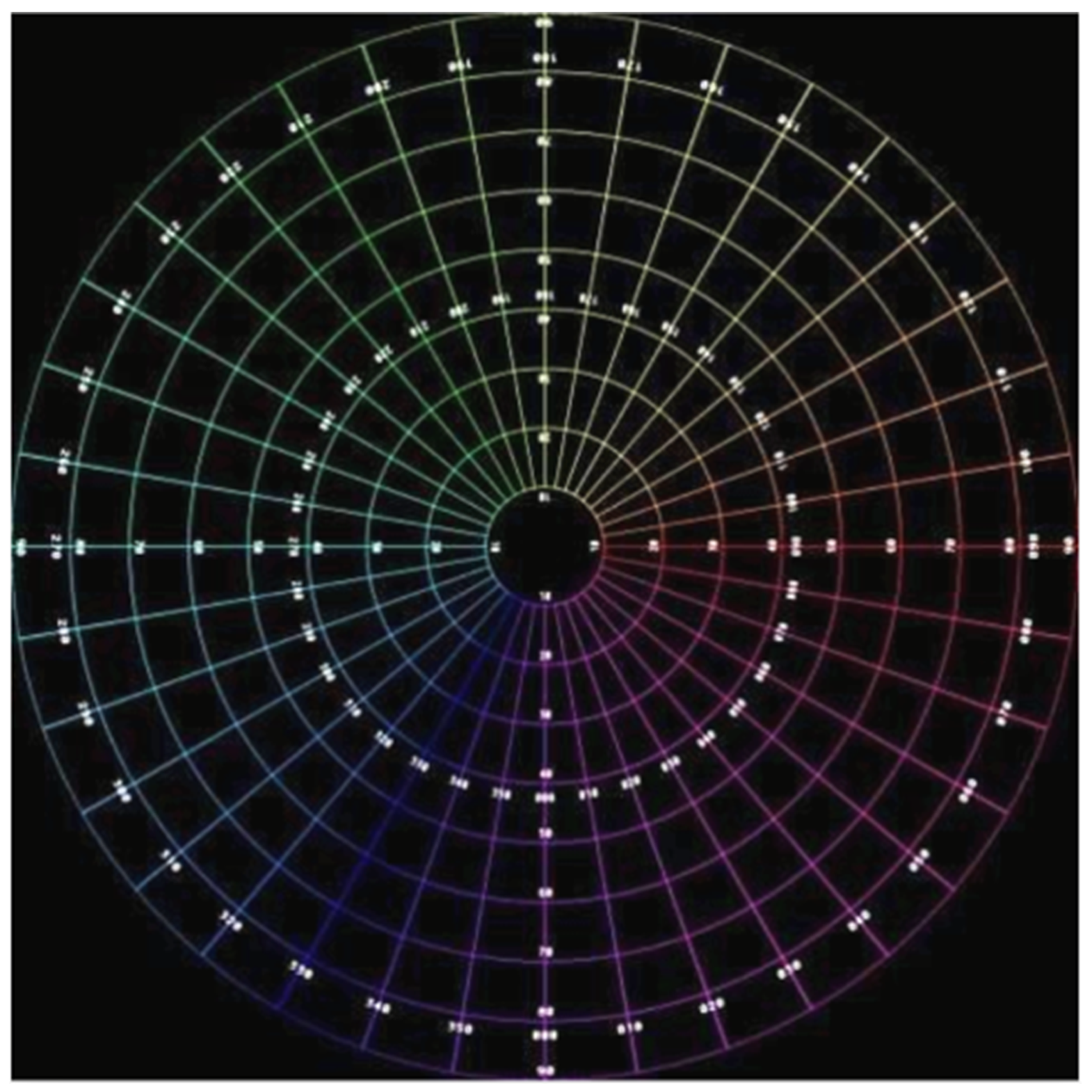

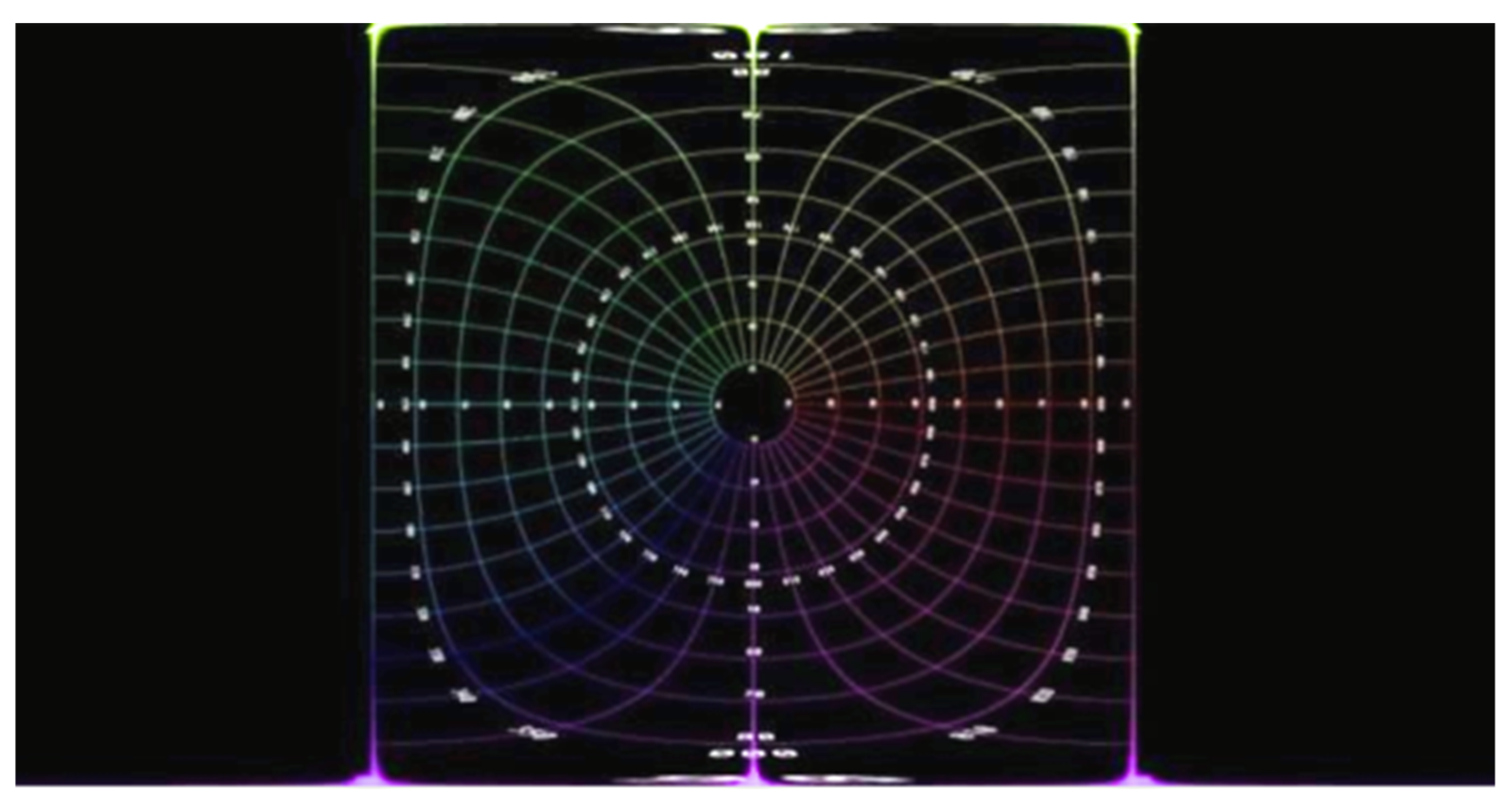

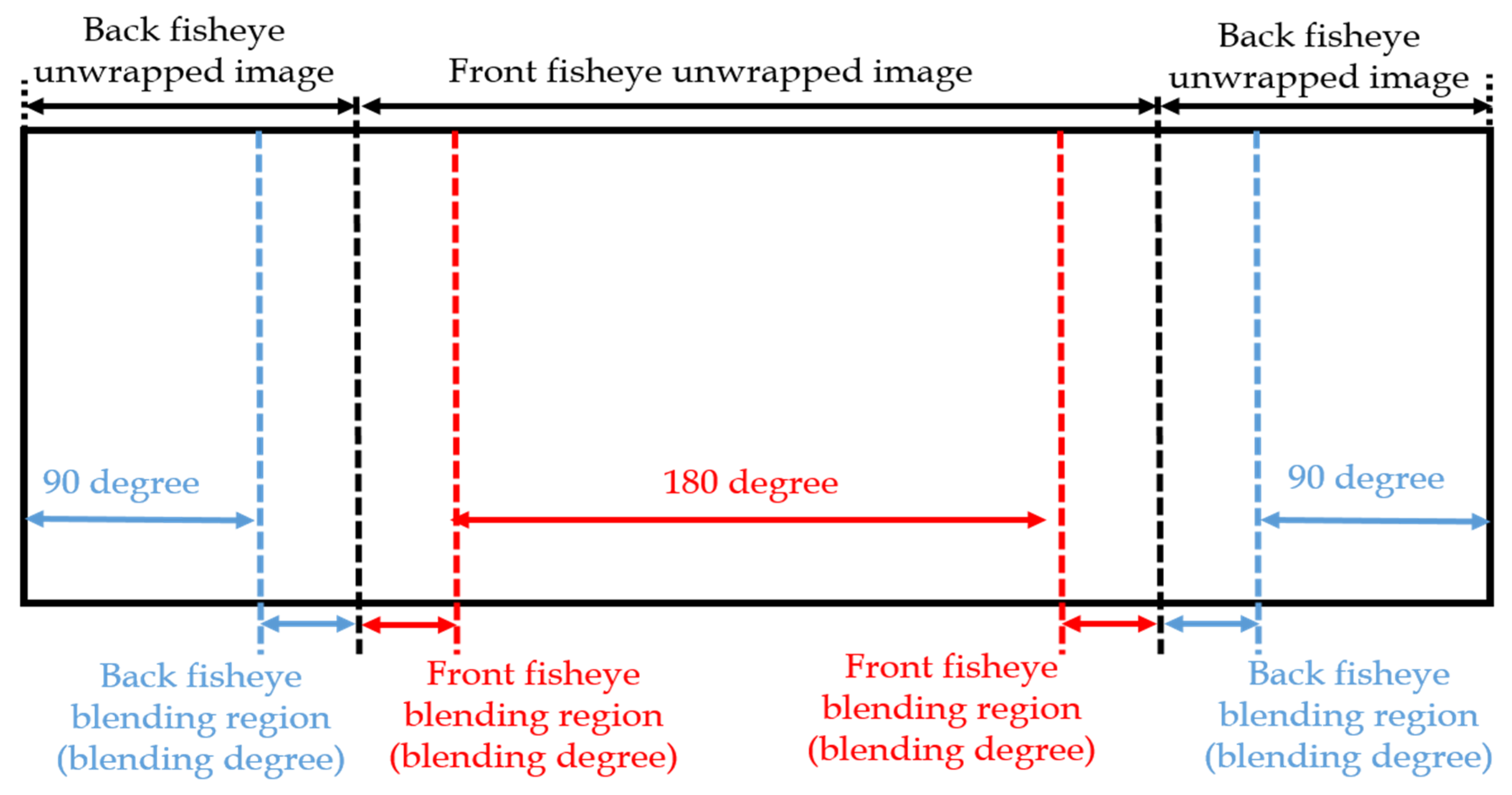

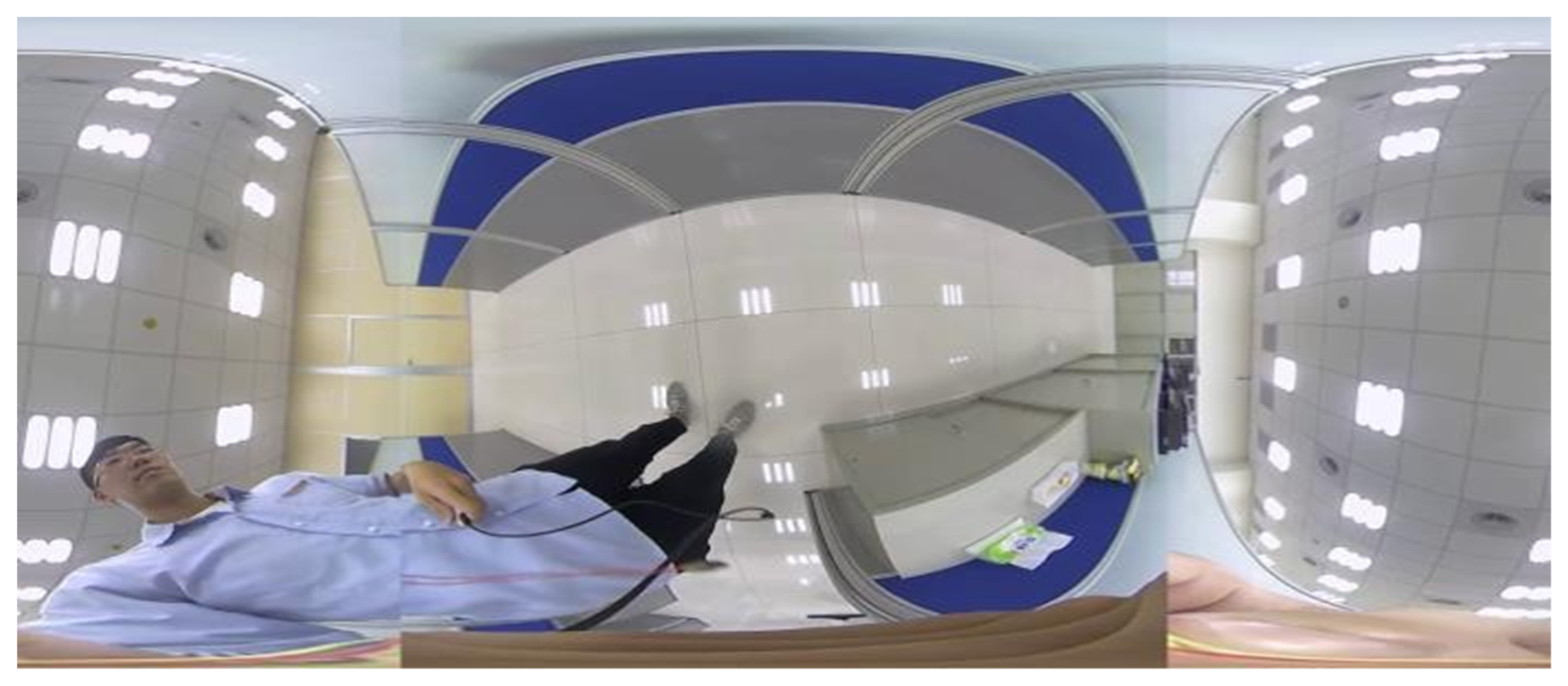

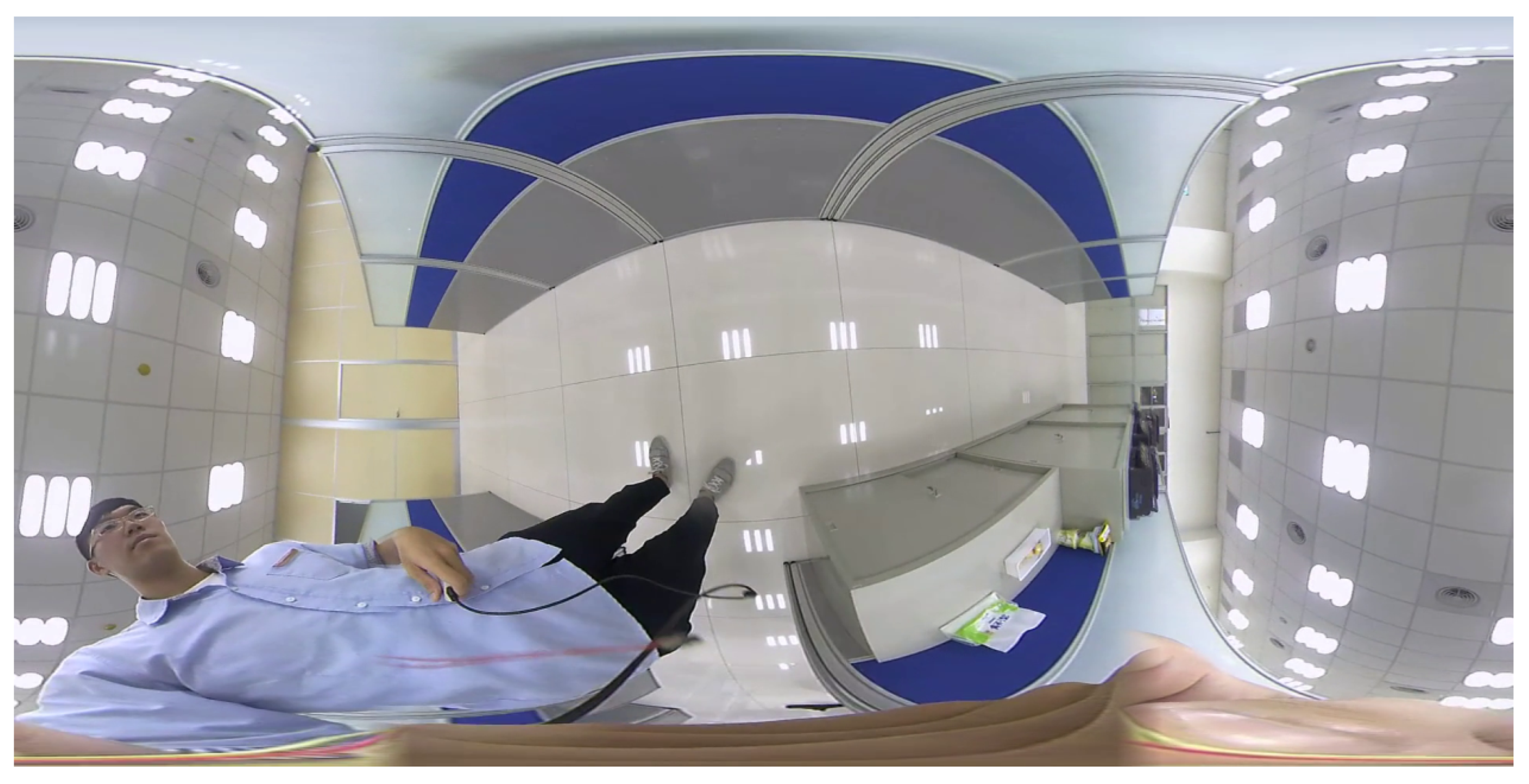

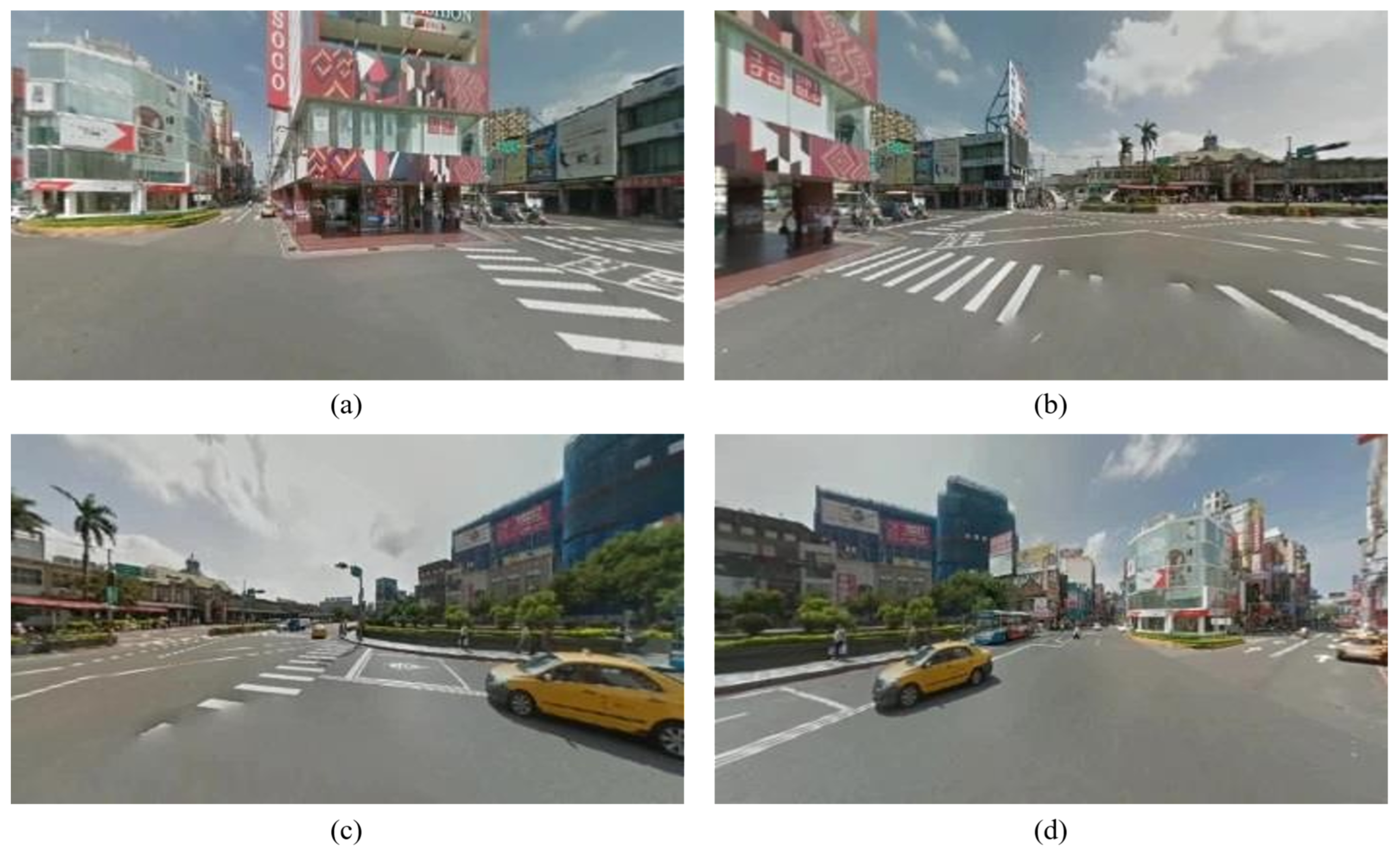

3.2. Equirectangular Panorama Generation

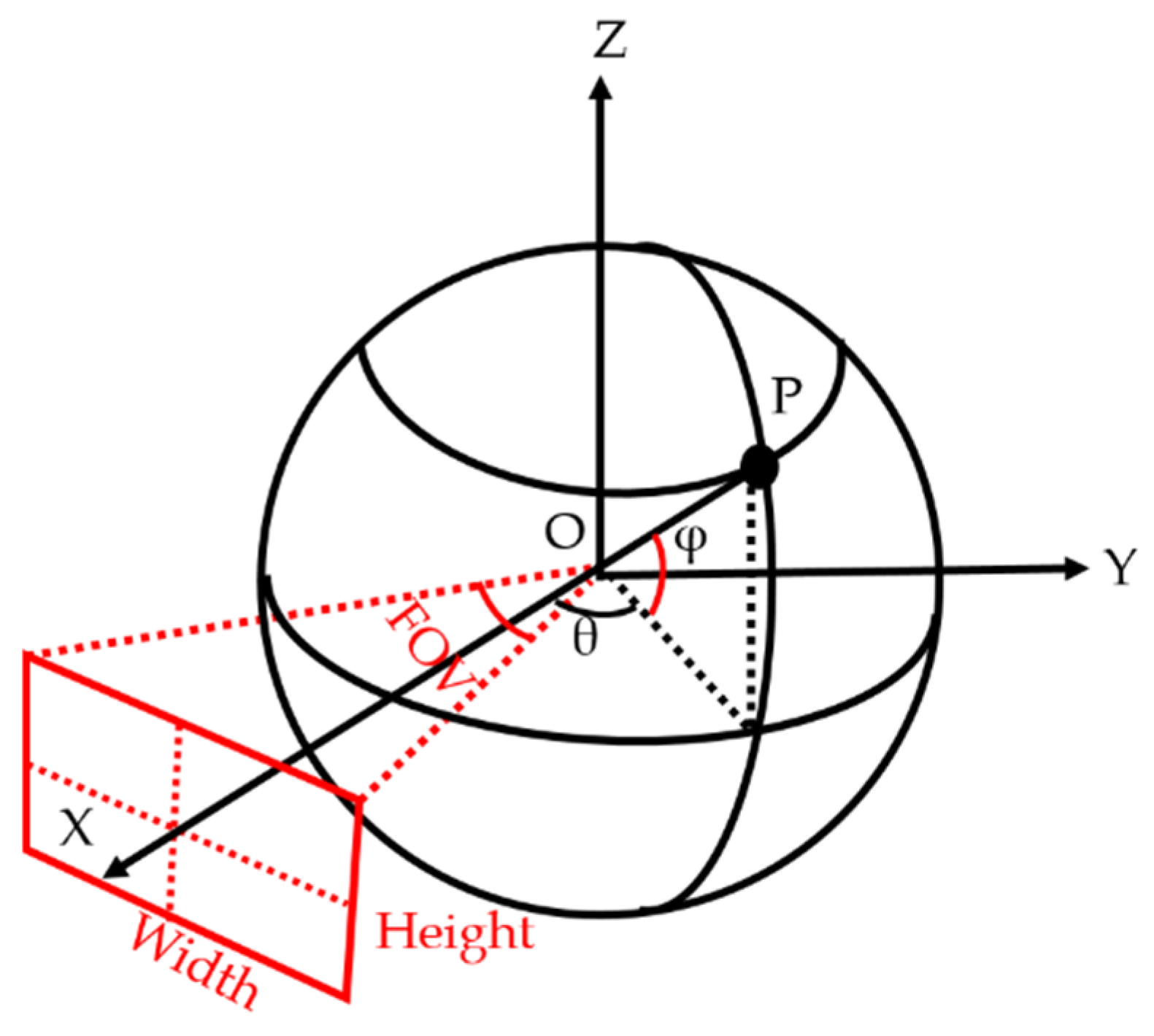

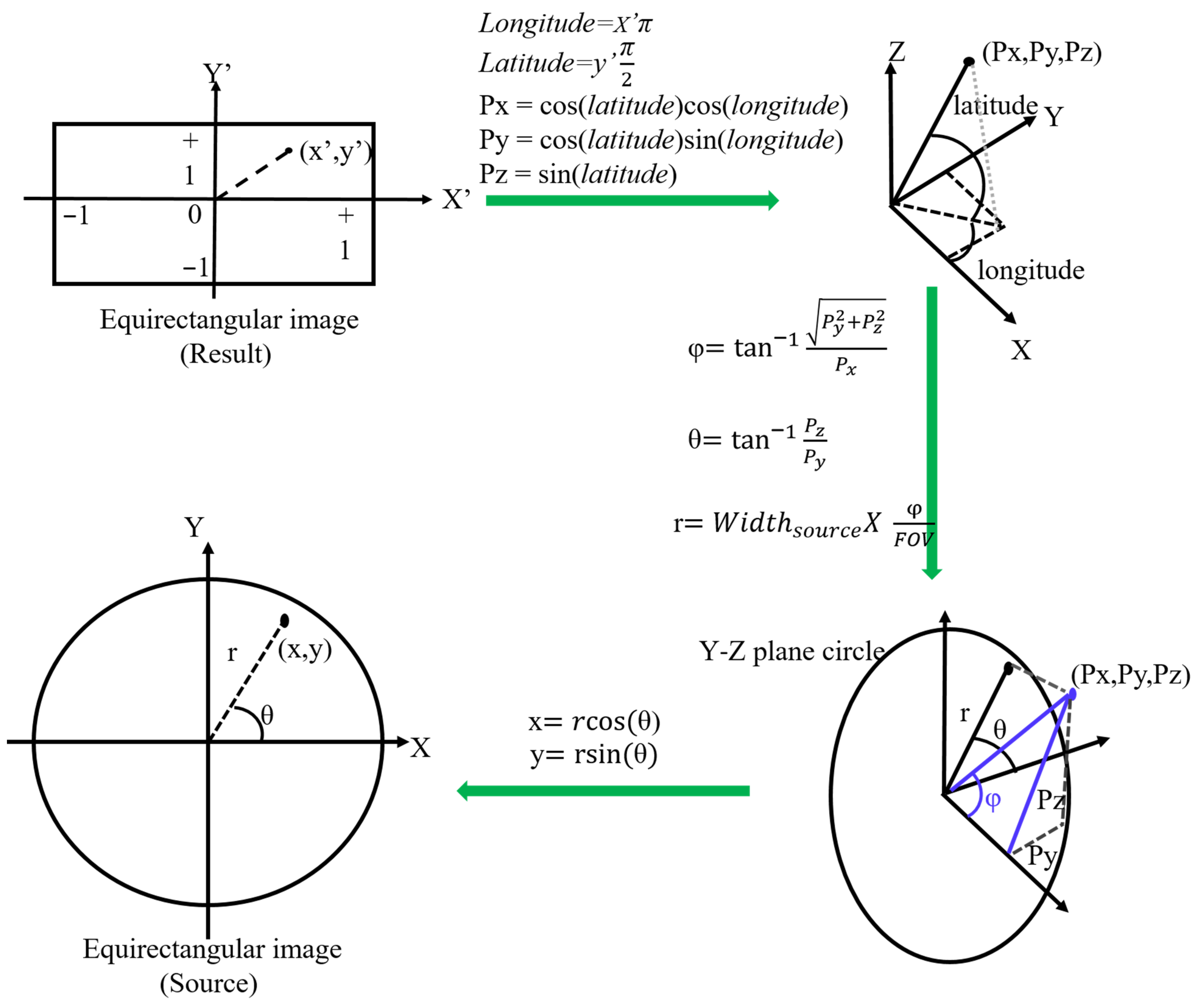

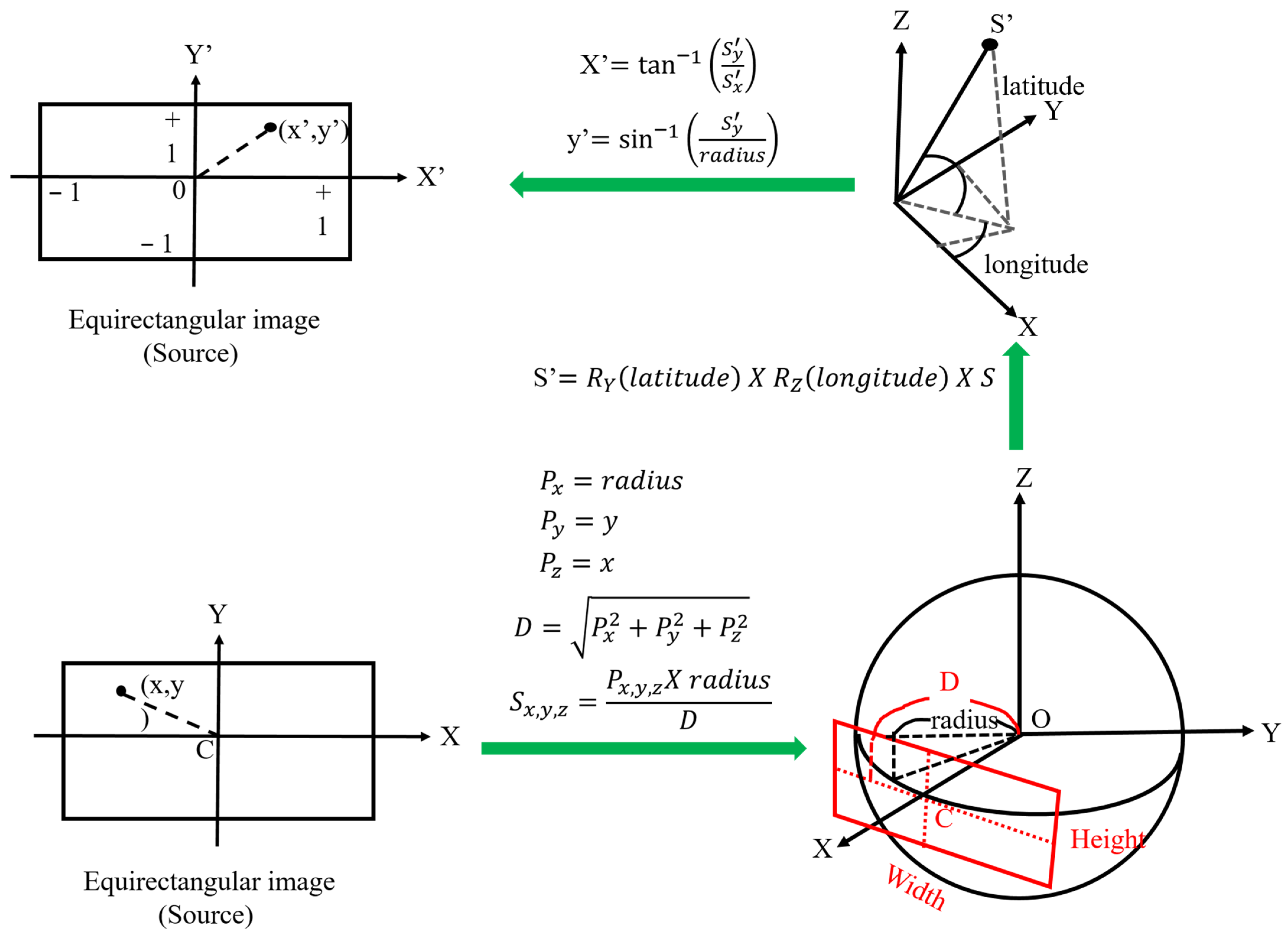

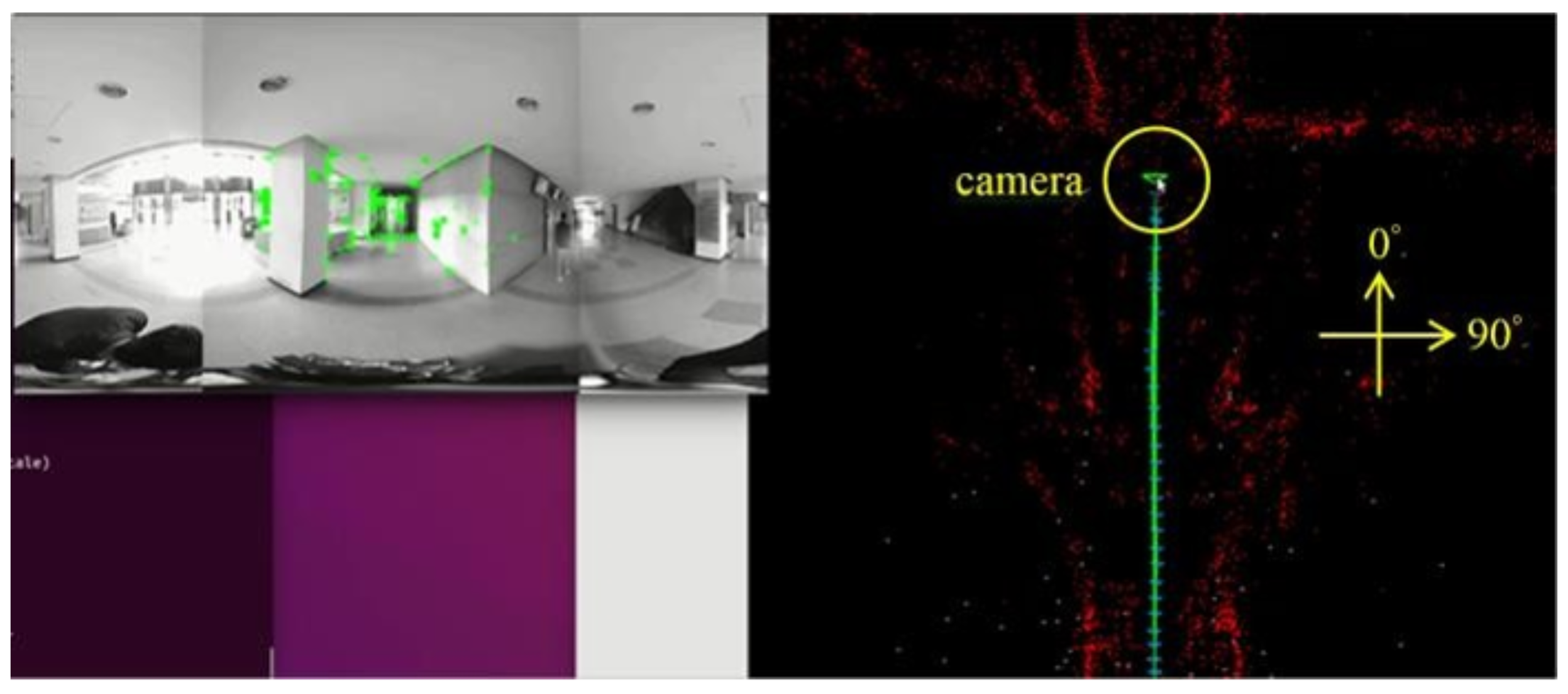

3.3. Perspective Transformation

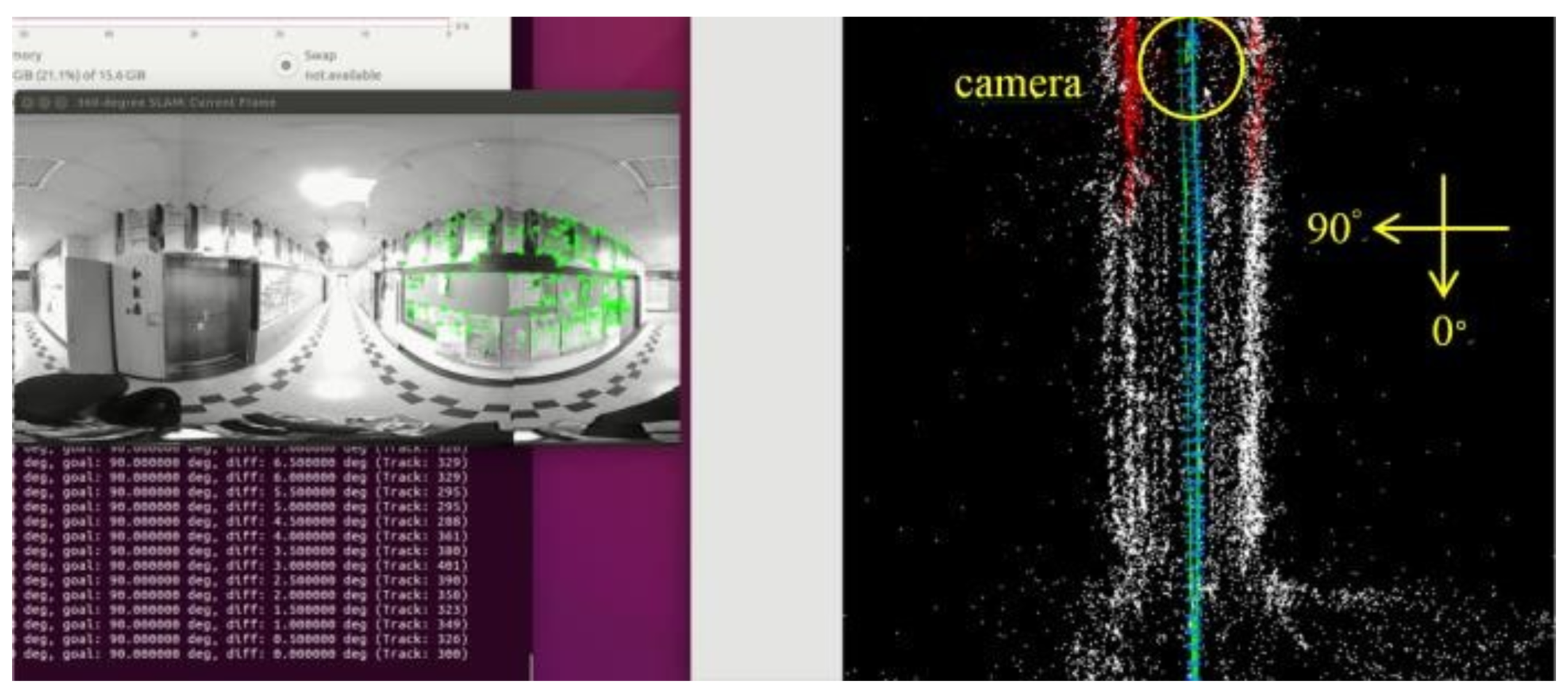

3.4. 360° Map Establishment and Automatic Yaw Degree Rotation

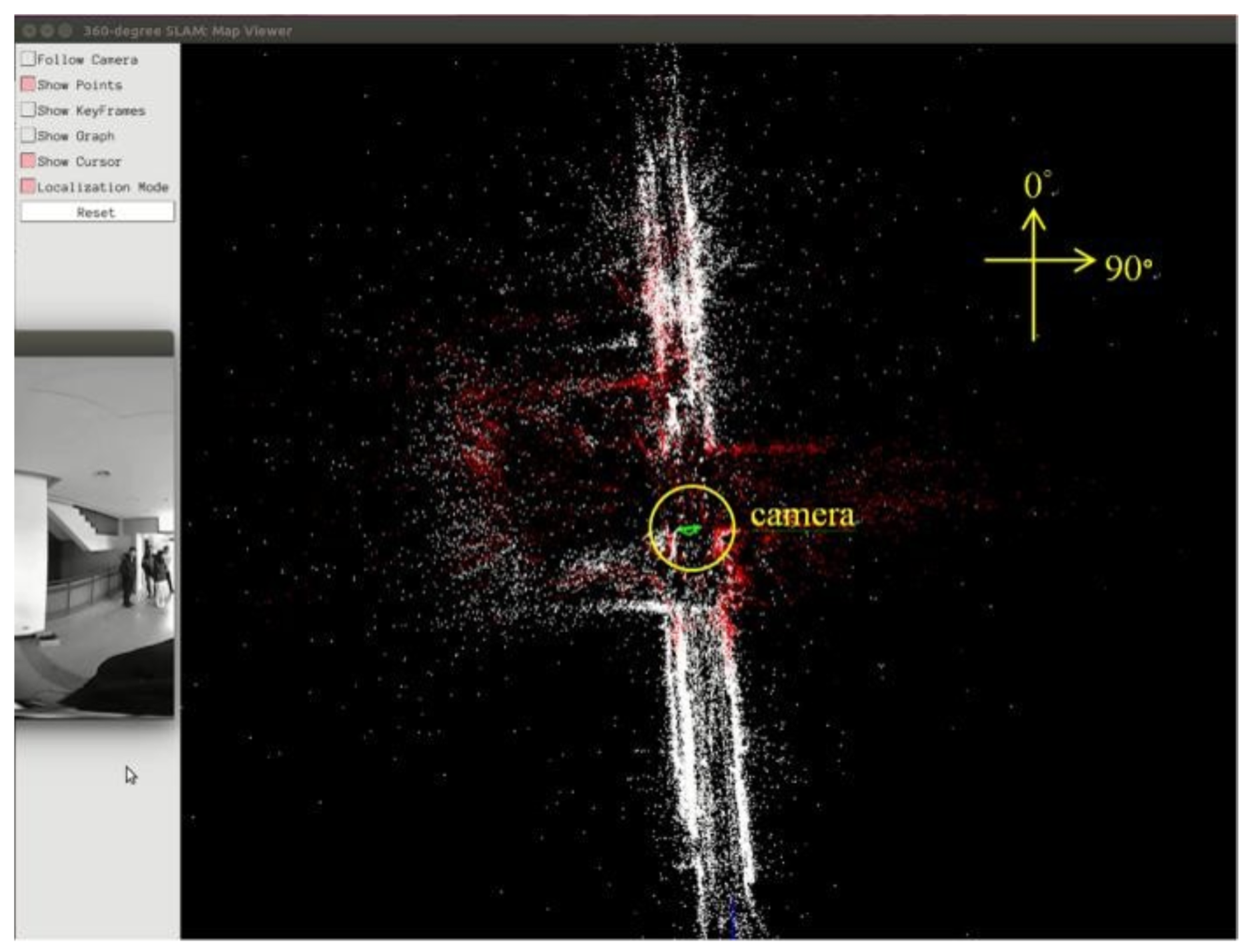

3.5. Map Saving, Loading, and Online Updating Function

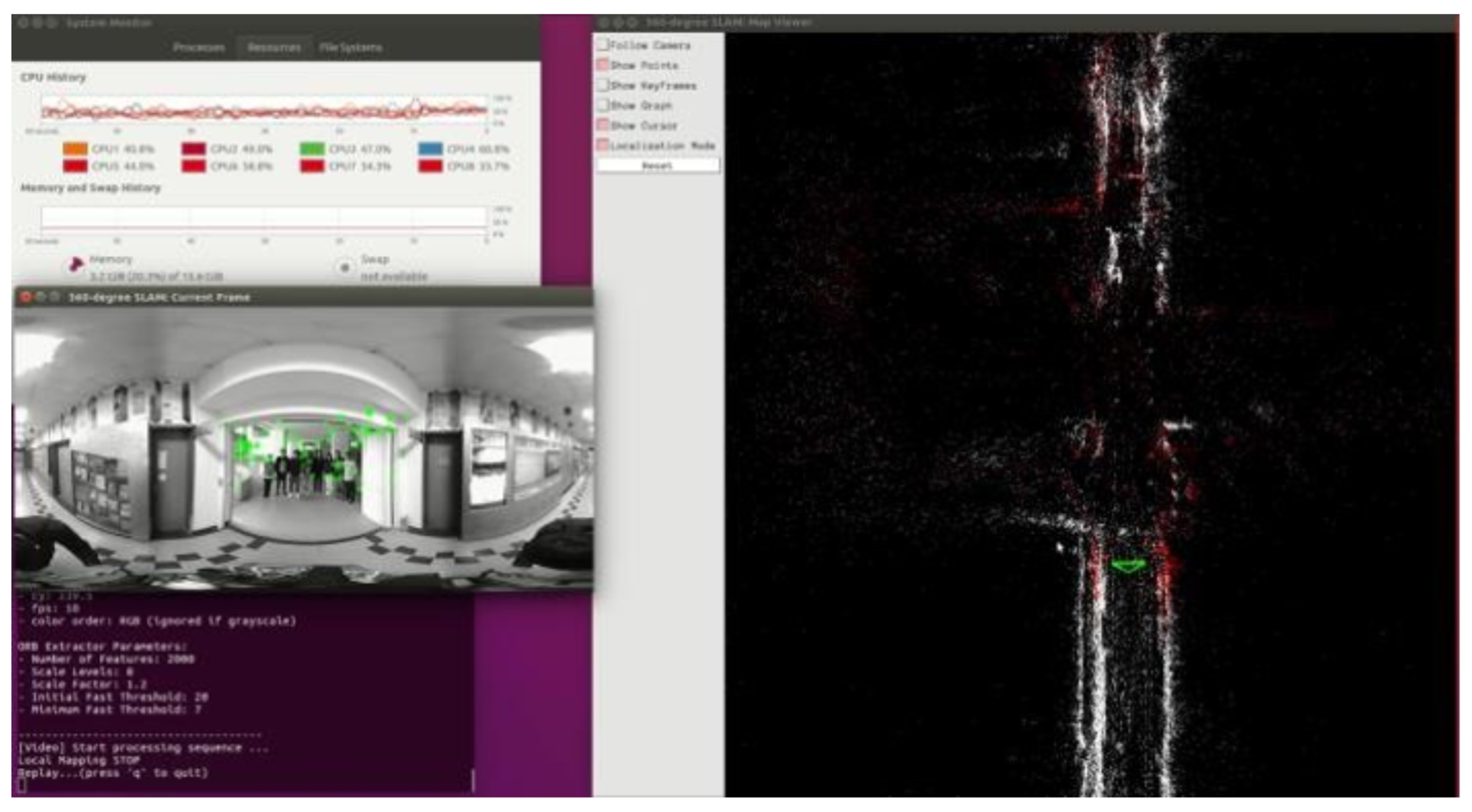

4. Implementation and Experimental Results

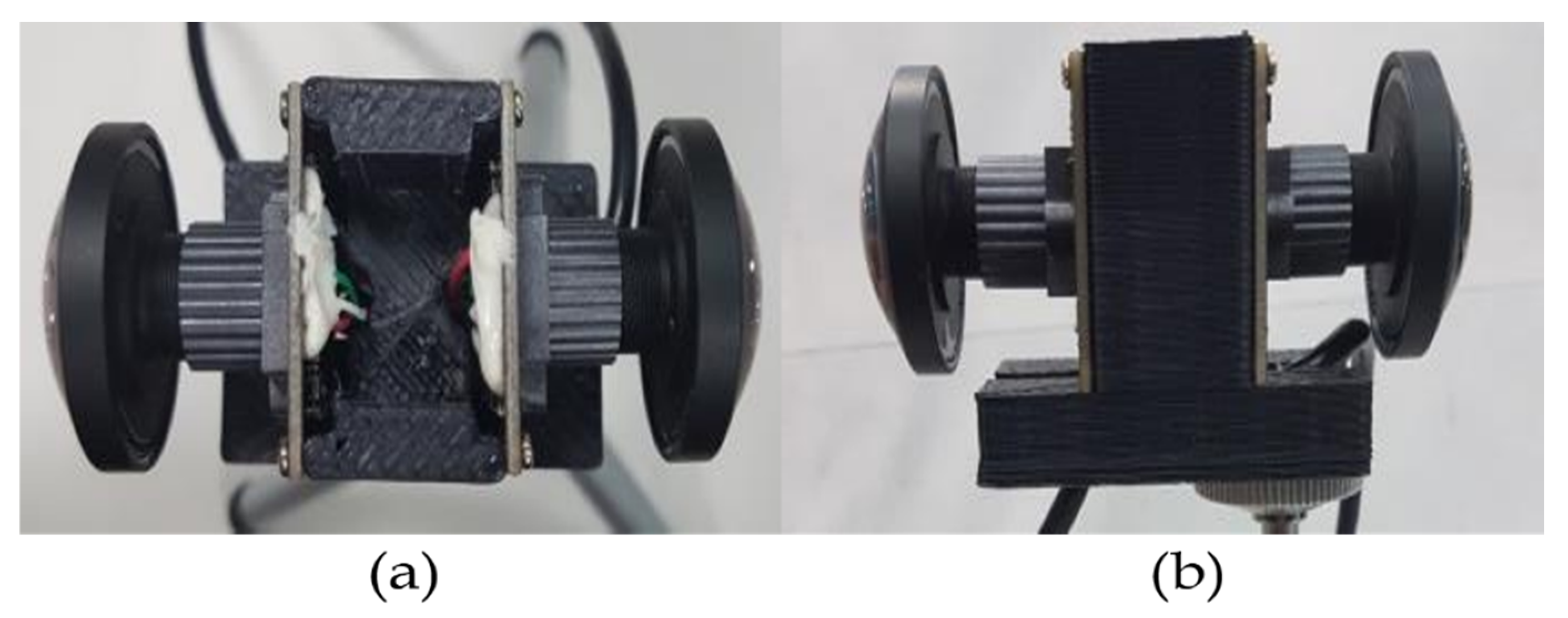

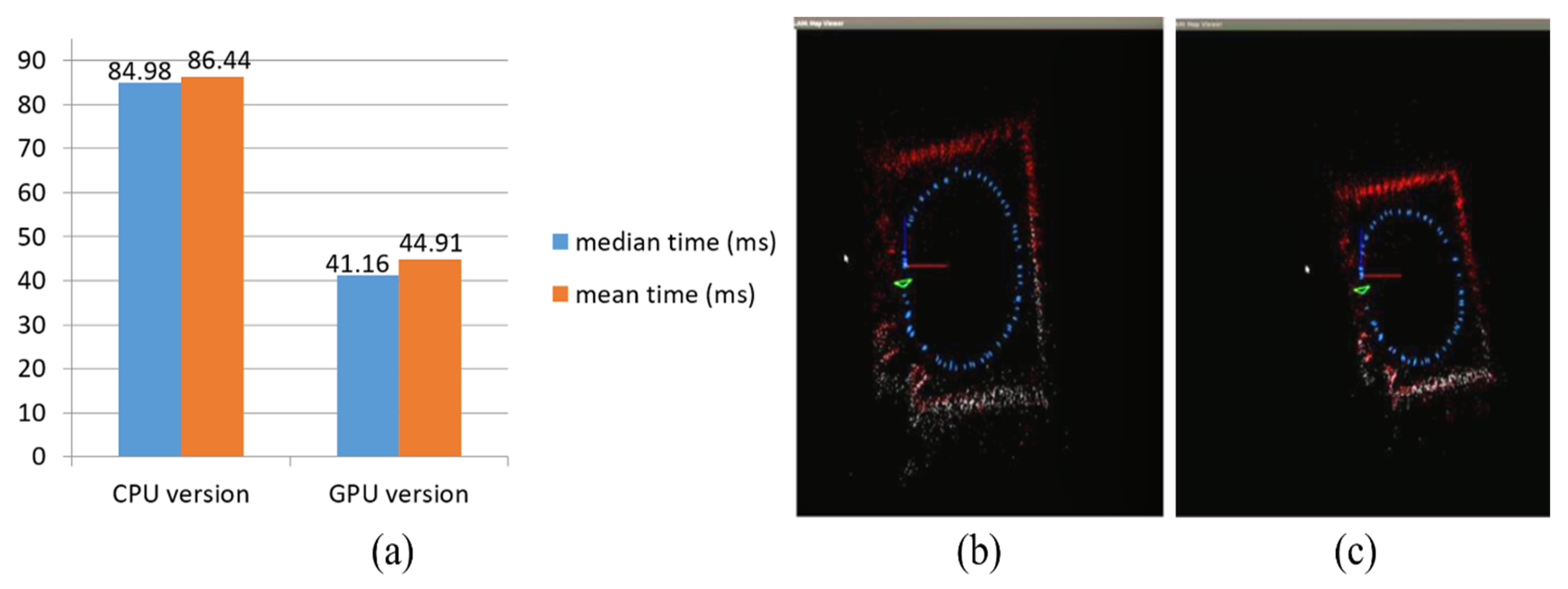

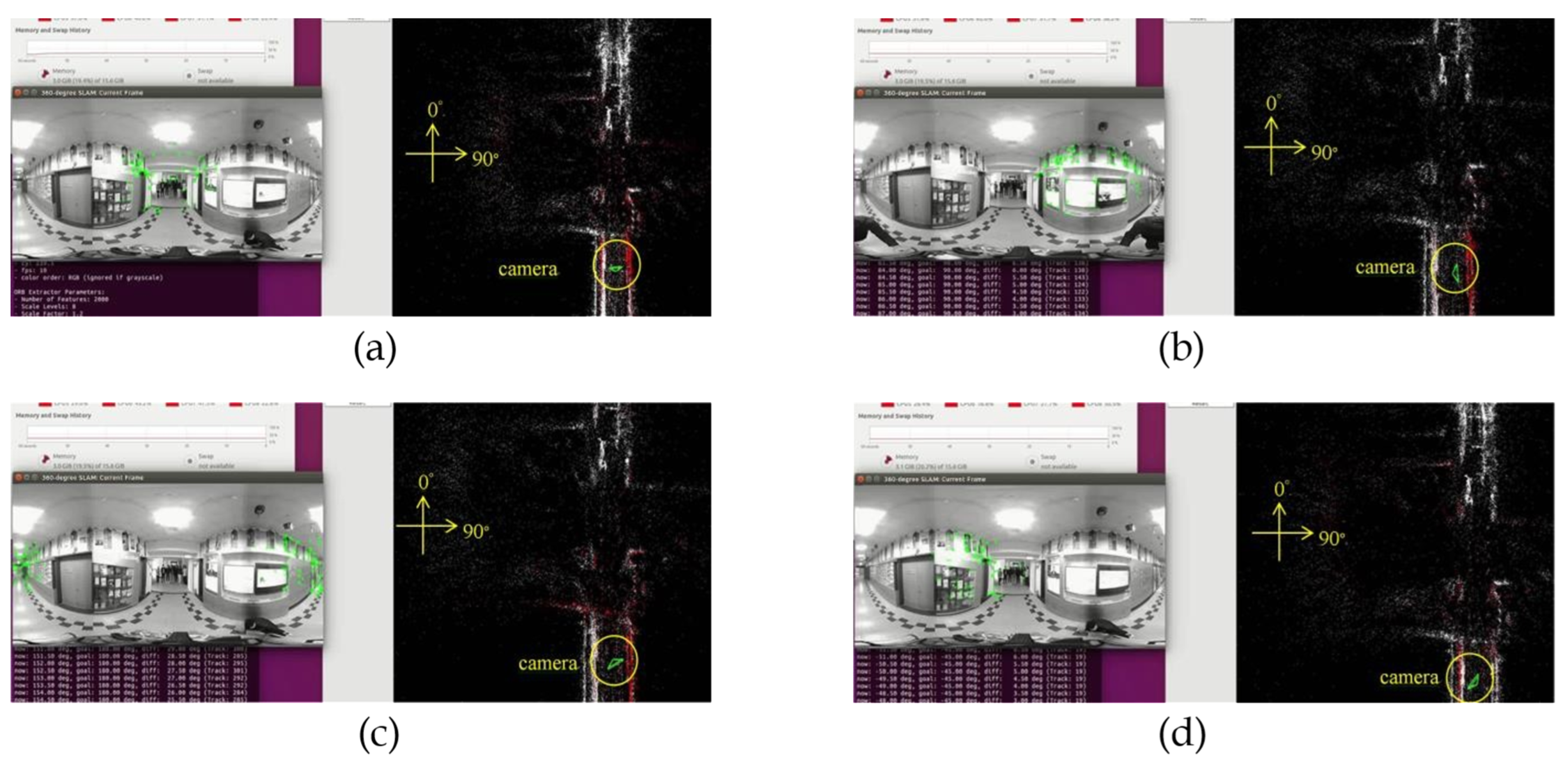

4.1. Implementation

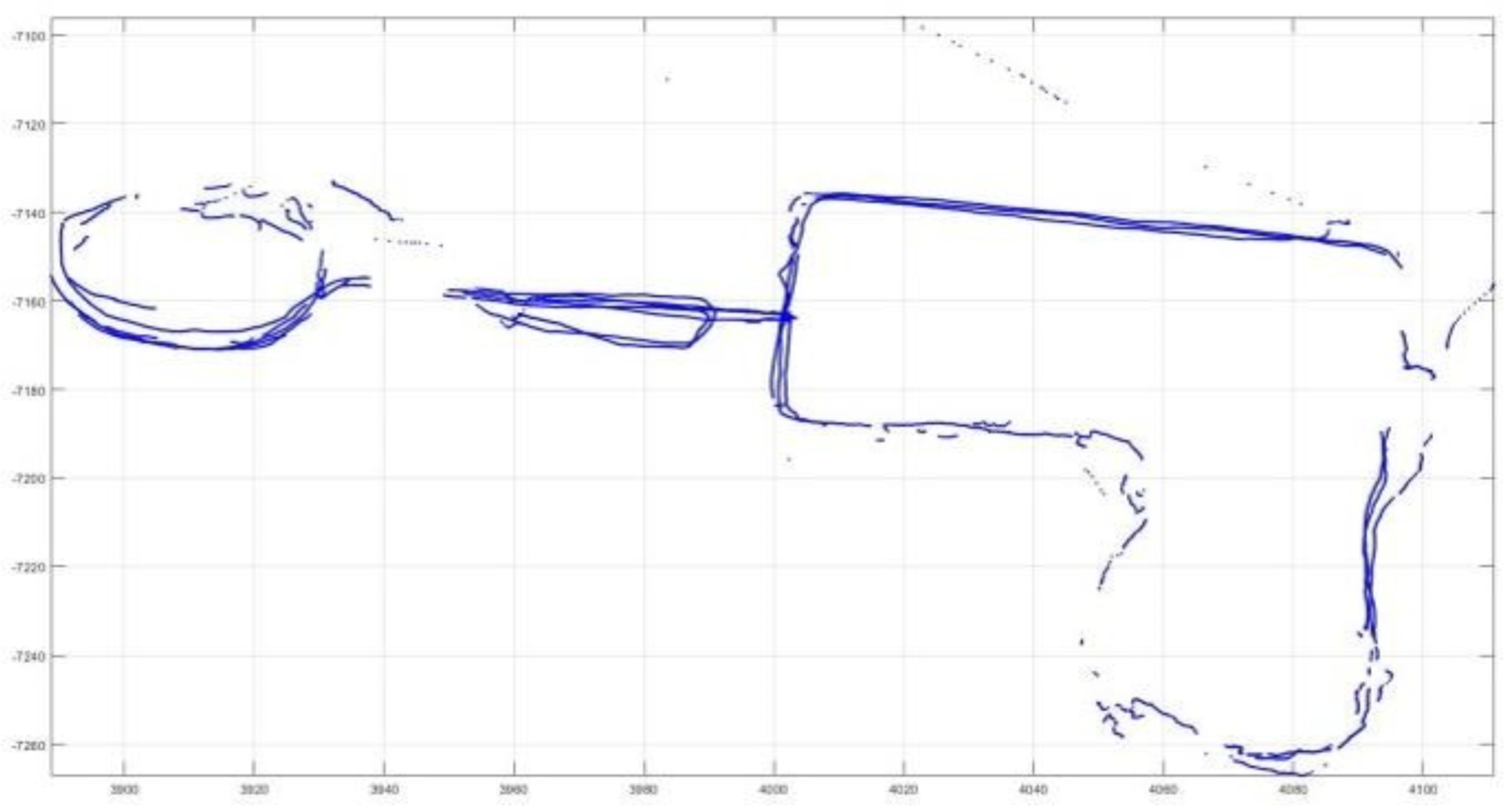

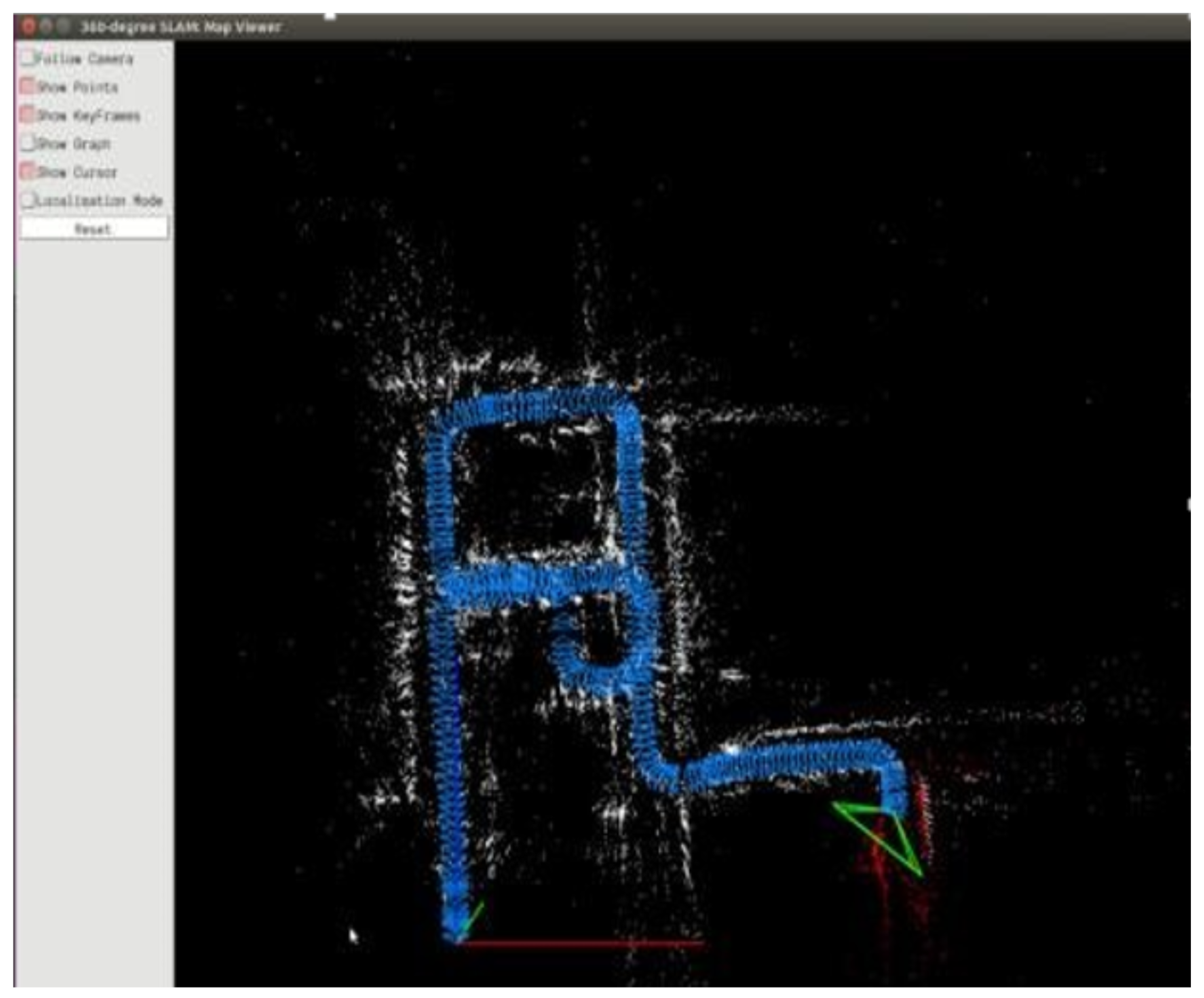

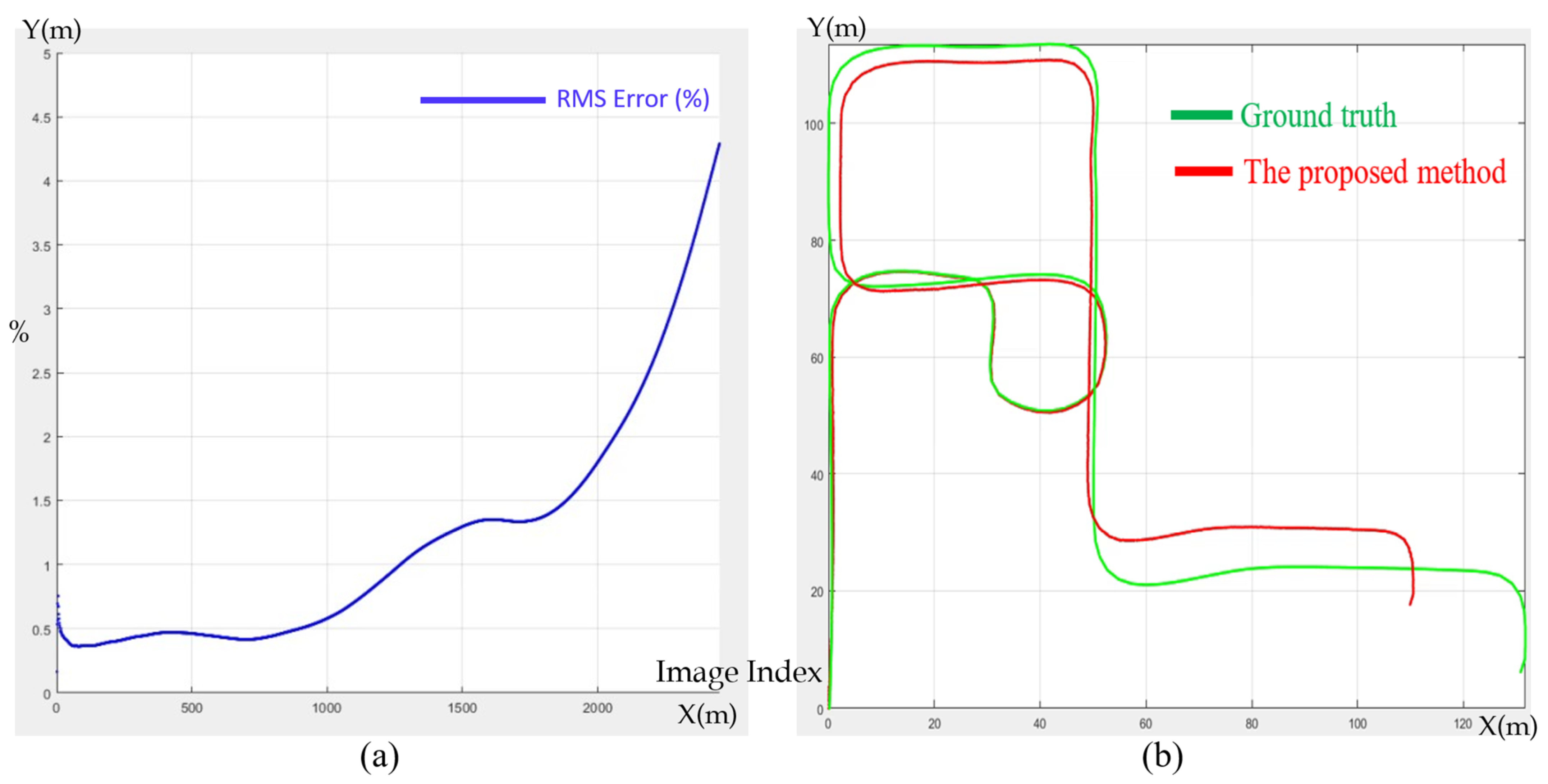

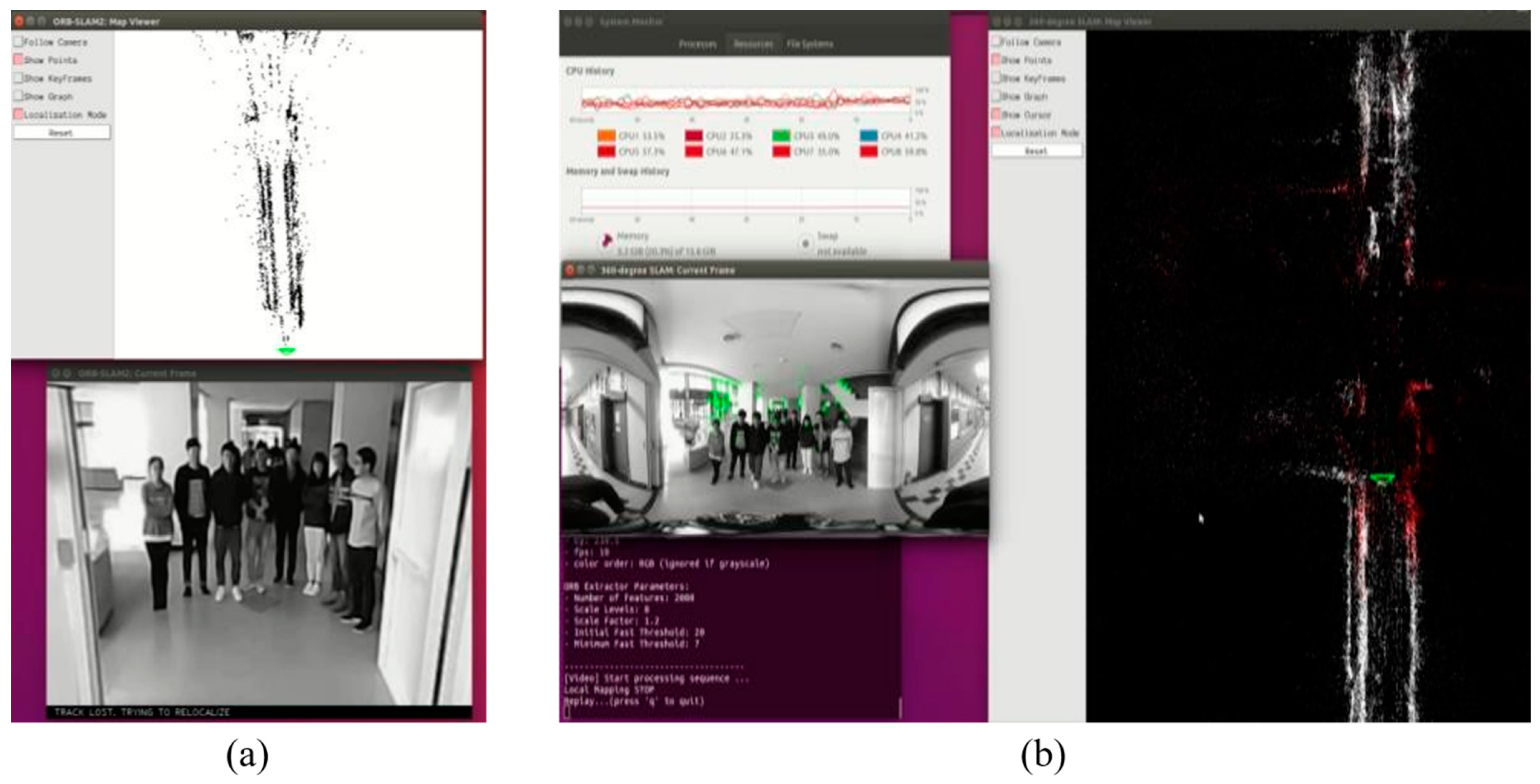

4.2. Evaluation

360° SLAM Ground Truth

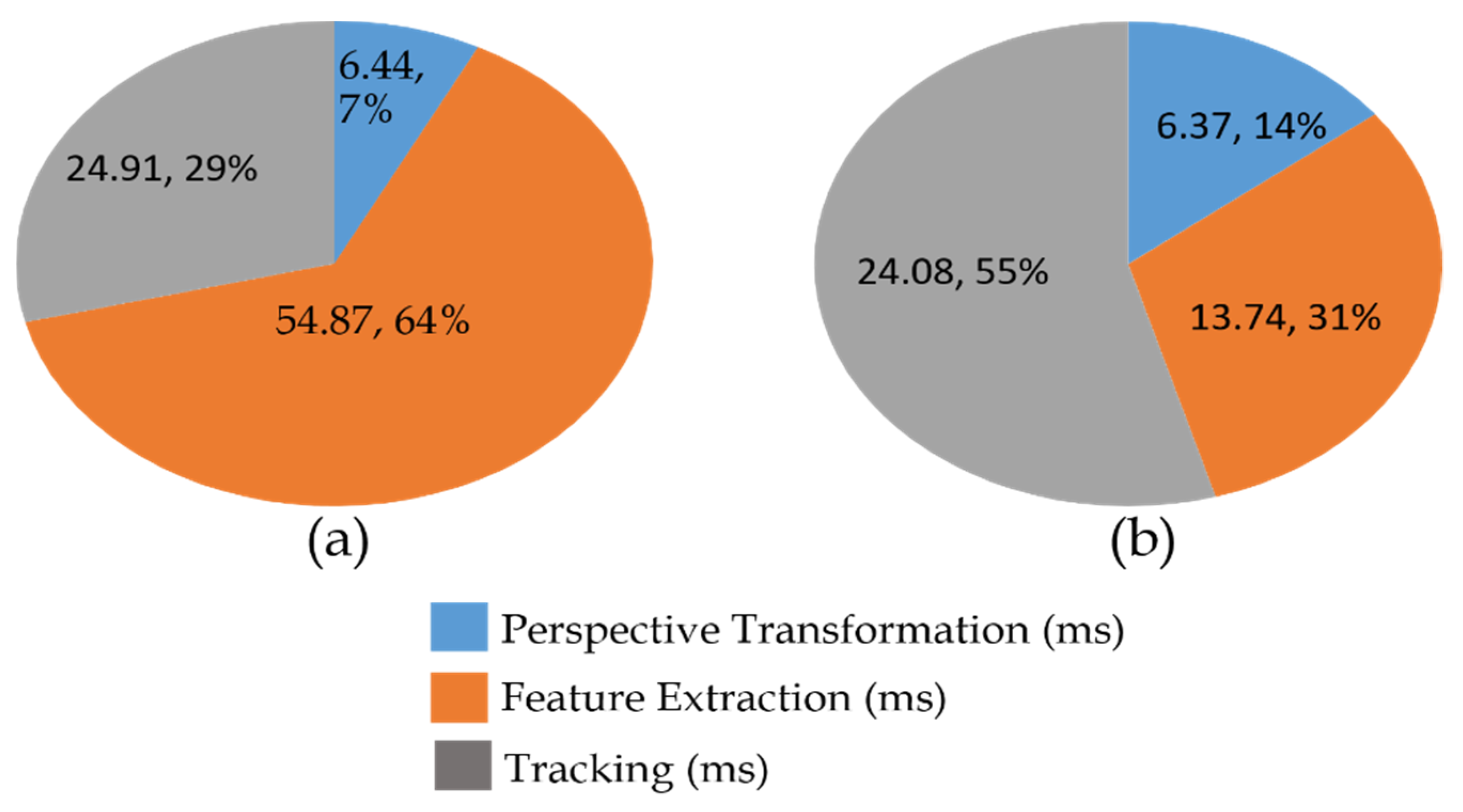

4.3. Experimental Results

4.4. Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Durrant-Whyte, H.; Bailey, T. Simultaneous localization and mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–110.

2. Bailey, T.; Durrant-Whyte, H. Simultaneous localization and mapping (SLAM): Part II. IEEE Robot. Autom. Mag. 2006, 13, 108–117.

3. Stachniss, C.; Leonard, J.J.; Thrun, S. Simultaneous localization and mapping. In Springer Handbook of Robotics; Springer: Cham, Switzerland, 2016; pp. 1153–1176.

4. Aulinas, J.; Petillot, Y.R.; Salvi, J.; Lladó, X. The SLAM Problem: A Survey; IOS Press: Amsterdam, The Netherlands, 2008; Volume 184, pp. 363–371.

5. Billinghurst, M.; Clark, A.; Lee, G. A survey of augmented reality. Found. Trends® Hum. Comput. Interact. 2015, 8, 73–272.

6. Engel, J.; Sturm, J.; Cremers, D. Camera-based navigation of a low-cost quadrocopter. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 2815–2821.

7. Klein, G.; Murray, D. Parallel tracking and mapping for small AR workspaces. In Proceedings of the 6th IEEE and ACM International Symposium on Mixed and Augmented Reality (ISMAR 2007), Nara, Japan, 13–16 November 2007; pp. 225–234.

8. Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 834–849.

9. Newcombe, R.A.; Lovegrove, S.J.; Davison, A.J. DTAM: Dense tracking and mapping in real-time. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2320–2327.

10. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

11. Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded up robust features. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417.

12. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

13. Rosten, E.; Drummond, T. Machine learning for high-speed corner detection. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 430–443.

14. Chatila, R.; Laumond, J.P. Position referencing and consistent world modeling for mobile robots. In Proceedings of the IEEE International Conference on Robotics and Automation, St. Louis, MO, USA, 25–28 March 1985; Volume 2, pp. 138–145.

15. Mur-Artal, R.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163.

16. Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle adjustment—A modern synthesis. In Proceedings of the International Workshop on Vision Algorithms, Corfu, Greece, 21–22 September 1999; Springer: Berlin/Heidelberg, Germany, 1999; pp. 298–372.

17. Davison, A.J. Real-time simultaneous localisation and mapping with a single camera. In Proceedings of the IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; Volume 2, p. 1403.

18. Davison, A.J.; Reid, I.D.; Molton, N.D.; Stasse, O. MonoSLAM: Real-time single camera SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1052–1067.

19. Nistér, D. An efficient solution to the five-point relative pose problem. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 756–770.

20. Williams, B.; Klein, G.; Reid, I. Real-time SLAM relocalisation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV 2007), Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8.

21. Okutomi, M.; Kanade, T. A multiple-baseline stereo. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 353–363.

22. Rudin, L.I.; Osher, S.; Fatemi, E. Nonlinear total variation based noise removal algorithms. Phys. D Nonlinear Phenom. 1992, 60, 259–268.

23. Dignadice, S.J.; Red, J.R.; Bautista, A.J.; Perol, A.; Ollanda, A.; Santos, R. Application of Simultaneous Localization and Mapping in the Development of an Autonomous Robot. In Proceedings of the 2022 8th International Conference on Control, Automation and Robotics (ICCAR), Xiamen, China, 8–10 April 2022; pp. 77–80.

24. Gobhinath, S.; Anandapoorani, K.; Anitha, K.; Sri, D.D.; DivyaDharshini, R. Simultaneous Localization and Mapping [SLAM] of Robotic Operating System for Mobile Robots. In Proceedings of the 2021 7th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 19–20 March 2021; pp. 577–580.

25. Chen, C.H.; Wang, C.C.; Lin, S.F. A Navigation Aid for Blind People Based on Visual Simultaneous Localization and Mapping. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics-Taiwan (ICCE-Taiwan), Taoyuan, Taiwan, 28–30 September 2020; pp. 1–2.

26. Reynolds, S.; Fan, D.; Taha, T.M.; DeMange, A.; Jenkins, T. An Implementation of Simultaneous Localization and Mapping Using Dynamic Field Theory. In Proceedings of the NAECON 2021-IEEE National Aerospace and Electronics Conference, Dayton, OH, USA, 16–19 August 2021; pp. 80–83.

27. Caracciolo, M.; Casciotti, O.; Lloyd, C.; Sola-Thomas, E.; Weaver, M.; Bielby, K.; Sarker, M.A.B.; Imtiaz, M.H. Autonomous Navigation System from Simultaneous Localization and Mapping. In Proceedings of the 2022 IEEE 31st Microelectronics Design & Test Symposium (MDTS), Albany, NY, USA, 23–26 May 2022; pp. 1–4.

28. Zhao, X.; Hu, Q.; Zhang, X.; Wang, H. An ORB-SLAM3 Autonomous Positioning and Orientation Approach using 360-degree Panoramic Video. In Proceedings of the 2022 29th International Conference on Geoinformatics, Beijing, China, 15–18 August 2022; pp. 1–7.

29. Roil, M.K.; Prakash, A. Exploring Possible Applications of ORB SLAM 2 in Education, Healthcare, and Industry: Insights into the Challenges, Features, and Effects. In Proceedings of the 2023 IEEE 8th International Conference for Convergence in Technology (I2CT), Lonavla, India, 7–9 April 2023; pp. 1–7.

30. Smith, M.; Baldwin, I.; Churchill, W.; Paul, R.; Newman, P. The new college vision and laser data set. Int. J. Robot. Res. 2009, 28, 595–599.

31. Zhang, Z.; Rebecq, H.; Forster, C.; Scaramuzza, D. Benefit of large field-of-view cameras for visual odometry. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 801–808.

| | Saving the Map | Not Saving the Map |
|---|---|---|
| Map existing | Normal mode (with online updating) | Localization mode |
| Map not found | Map establishment mode | SLAM mode |
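The table above selects one of four operating modes from two conditions: whether a saved map already exists and whether the map should be saved. A minimal sketch of that decision follows; the `Mode` enum and the `select_mode` function are hypothetical names that simply mirror the table's labels.

```python
from enum import Enum

class Mode(Enum):
    NORMAL = "Normal mode (with online updating)"
    LOCALIZATION = "Localization mode"
    MAP_ESTABLISHMENT = "Map establishment mode"
    SLAM = "SLAM mode"

def select_mode(map_exists: bool, save_map: bool) -> Mode:
    """Pick the operating mode from the two conditions in the mode table."""
    if map_exists:
        # An existing map is either updated online and saved, or only used for localization.
        return Mode.NORMAL if save_map else Mode.LOCALIZATION
    # Without a map, the system either builds one to save or runs plain SLAM.
    return Mode.MAP_ESTABLISHMENT if save_map else Mode.SLAM
```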

| Parameter | Value |
|---|---|
| Number of cameras | 2 |
| Circular fisheye FOV | 200° (each camera) |
| Camera input resolution | 1024 × 768 (each camera) |
| Equirectangular panorama resolution | 1416 × 708 |
| Blending width | 40 pixels |
| Perspective transformation parameters | 720 × 480 with 130° FOV |
| ORB features extracted per frame | 2000 |
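The parameters above allow a quick consistency check on the stitching setup. Assuming the two 200° lenses face opposite directions and each covers 200° of longitude, together they cover 400°, so the 40° excess is split over two seams of (2 × 200° − 360°)/2 = 20° each, or about 20 × 1416/360 ≈ 78.7 panorama columns; the 40-pixel blending band therefore fits inside each overlap. The sketch computes this and builds per-column weights for a linear (feather) blend centred on seams at ±90° longitude. The feather blend, the seam placement, and all function names are assumptions for illustration, not the paper's stated blending method.

```python
import numpy as np

PANO_W = 1416          # equirectangular panorama width in pixels (from the table)
LENS_FOV_DEG = 200.0   # circular fisheye FOV of each camera (from the table)
BLEND_WIDTH = 40       # blending width in pixels (from the table)

def overlap_px_per_seam(pano_w=PANO_W, lens_fov=LENS_FOV_DEG):
    """Columns seen by both lenses at each of the two seams (back-to-back lenses assumed)."""
    overlap_deg = (2 * lens_fov - 360.0) / 2
    return overlap_deg * pano_w / 360.0

def front_weights(pano_w=PANO_W, blend=BLEND_WIDTH):
    """Per-column weight of the front lens (centred at 0 deg); the back lens gets 1 - w.

    Hypothetical linear feather of width `blend` pixels centred on the seams at +/-90 deg.
    """
    lon = (np.arange(pano_w) + 0.5) * 360.0 / pano_w - 180.0  # column-centre longitude
    dist_px = (90.0 - np.abs(lon)) * pano_w / 360.0            # >0 on the front side of a seam
    return np.clip(0.5 + dist_px / blend, 0.0, 1.0)

def blend_columns(front, back, w):
    """Blend two single-channel H x W equirectangular projections column-wise."""
    return w[None, :] * front + (1.0 - w[None, :]) * back
```

Columns with weights strictly between 0 and 1 form two bands of 40 columns each, one per seam, matching the blending width in the table.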

| Component | Specification |
|---|---|
| Processor | Intel i7-3770 @ 3.40 GHz |
| GPU | None |
| Memory | 16 GB RAM |
| Video In | File input |
| Operating System | Ubuntu 14.04 |
| Performance | 20 fps |

| Component | Specification |
|---|---|
| Processor | ARM Cortex-A57 (quad-core) @ 2 GHz + NVIDIA Denver2 (dual-core) @ 2 GHz |
| GPU | 256-core Pascal @ 1300 MHz |
| Memory | 8 GB 128-bit LPDDR4 @ 1866 MHz |
| Video In | File input |
| Operating System | Ubuntu 16.04.5 LTS |
| Performance | 20 fps |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lin, B.-H.; Shivanna, V.M.; Chen, J.-S.; Guo, J.-I. 360° Map Establishment and Real-Time Simultaneous Localization and Mapping Based on Equirectangular Projection for Autonomous Driving Vehicles. Sensors 2023, 23, 5560. https://doi.org/10.3390/s23125560
