Artistic Robotic Arm: Drawing Portraits on Physical Canvas under 80 Seconds

Abstract

1. Introduction

- Drawing with variable stroke widths utilizing a Chinese calligraphy pen.

- Style transfer to variable-width black and white pen drawings using CycleGAN.

- Effectively balancing drawing time against portrait quality.

- High level of satisfaction from the public demonstration volunteers.

2. Related Work

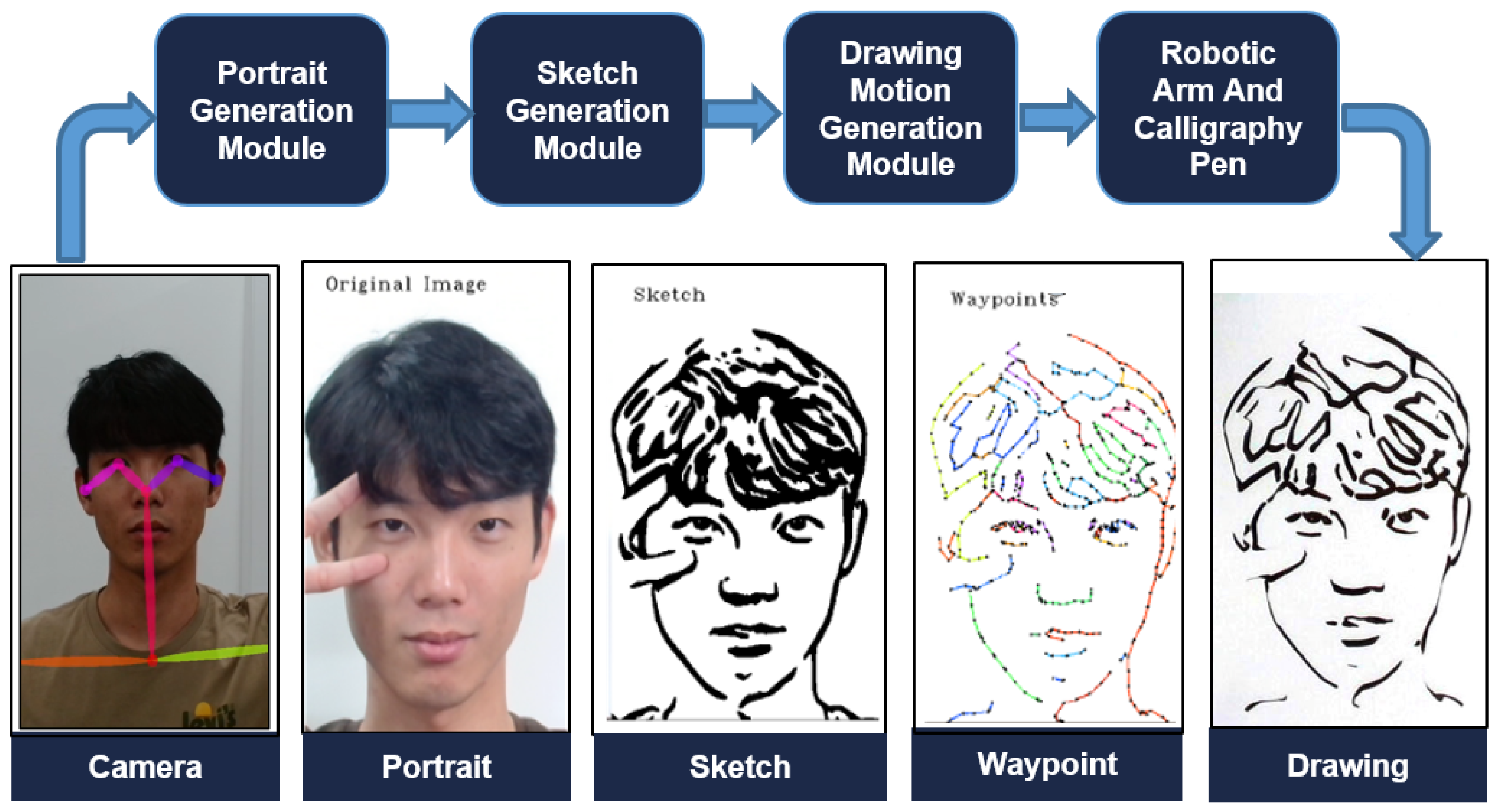

3. Proposed Methods

3.1. Portrait Generation Module

3.2. Sketch Generation Module

3.3. Drawing Motion Generation Module

3.3.1. Skeleton Extraction

3.3.2. Lines Extraction

3.3.3. Lines Clustering and Waypoints Generation

| Algorithm 1: Extract Line: Pixel to Pixel |

3.3.4. Eye Handling

3.4. Robot Motion Control Module

4. Hardware Setup

4.1. Robotic Arm

4.2. Pen Gripper and Canvas Pad

4.3. Calligraphy Pen

5. Experimental Results

5.1. Lab Experiment

5.2. Public Demonstration

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. Almeida, D.; Karayiannidis, Y. A Lyapunov-Based Approach to Exploit Asymmetries in Robotic Dual-Arm Task Resolution. In Proceedings of the 2019 IEEE 58th Conference on Decision and Control (CDC), Nice, France, 11–13 December 2019.

2. Chancharoen, R.; Chaiprabha, K.; Wuttisittikulkij, L.; Asdornwised, W.; Saadi, M.; Phanomchoeng, G. Digital Twin for a Collaborative Painting Robot. Sensors 2023, 23, 17.

3. Twomey, R. Three Stage Drawing Transfer. Available online: https://roberttwomey.com/three-stage-drawing-transfer/ (accessed on 10 May 2023).

4. Putra, R.Y.; Kautsar, S.; Adhitya, R.; Syai’in, M.; Rinanto, N.; Munadhif, I.; Sarena, S.; Endrasmono, J.; Soeprijanto, A. Neural Network Implementation for Invers Kinematic Model of Arm Drawing Robot. In Proceedings of the 2016 International Symposium on Electronics and Smart Devices (ISESD), Bandung, Indonesia, 29–30 November 2016.

5. Baccaglini-Frank, A.E.; Santi, G.; Del Zozzo, A.; Frank, E. Teachers’ Perspectives on the Intertwining of Tangible and Digital Modes of Activity with a Drawing Robot for Geometry. Educ. Sci. 2020, 10, 387.

6. Hámori, A.; Lengyel, J.; Reskó, B. 3DOF drawing robot using LEGO-NXT. In Proceedings of the 2011 15th IEEE International Conference on Intelligent Engineering Systems, Poprad, High Tatras, Slovakia, 23–25 June 2011; pp. 293–295.

7. Junyou, Y.; Guilin, Q.; Le, M.; Dianchun, B.; Xu, H. Behavior-based control of brush drawing robot. In Proceedings of the 2011 International Conference on Transportation, Mechanical, and Electrical Engineering (TMEE), Changchun, China, 16–18 December 2011; pp. 1148–1151.

8. Hsu, C.F.; Kao, W.H.; Chen, W.Y.; Wong, K.Y. Motion planning and control of a picture-based drawing robot system. In Proceedings of the 2017 Joint 17th World Congress of International Fuzzy Systems Association and 9th International Conference on Soft Computing and Intelligent Systems (IFSA-SCIS), Otsu, Japan, 27–30 June 2017; pp. 1–5.

9. Dziemian, S.; Abbott, W.W.; Faisal, A.A. Gaze-based teleprosthetic enables intuitive continuous control of complex robot arm use: Writing & drawing. In Proceedings of the 2016 6th IEEE International Conference on Biomedical Robotics and Biomechatronics (BioRob), Singapore, 26–29 June 2016; pp. 1277–1282.

10. Sylvain, C.; Epiney, J.; Aude, B. A Humanoid Robot Drawing Human Portraits. In Proceedings of the 2005 5th IEEE-RAS International Conference on Humanoid Robots, Tsukuba, Japan, 5–7 December 2005; pp. 161–166.

11. Salameen, L.; Estatieh, A.; Darbisi, S.; Tutunji, T.A.; Rawashdeh, N.A. Interfacing Computing Platforms for Dynamic Control and Identification of an Industrial KUKA Robot Arm. In Proceedings of the 2020 21st International Conference on Research and Education in Mechatronics (REM), Cracow, Poland, 9–11 December 2020; pp. 1–5.

12. Cammarata, A.; Maddío, P.D. A system-based reduction method for spatial deformable multibody systems using global flexible modes. J. Sound Vib. 2021, 504, 116118.

13. Cammarata, A.; Sinatra, R.; Maddío, P.D. A two-step algorithm for the dynamic reduction of flexible mechanisms. Mech. Mach. Sci. 2019, 66, 25–32.

14. Yang, G. Asymptotic tracking with novel integral robust schemes for mismatched uncertain nonlinear systems. Int. J. Robust Nonlinear Control. 2023, 33, 1988–2002.

15. Yang, G.; Yao, J.; Dong, Z. Neuroadaptive learning algorithm for constrained nonlinear systems with disturbance rejection. Int. J. Robust Nonlinear Control. 2022, 32, 6127–6147.

16. Beltramello, A.; Scalera, L.; Seriani, S.; Gallina, P. Artistic Robotic Painting Using the Palette Knife Technique. Robotics 2020, 9, 15.

17. Karimov, A.; Kopets, E.; Kolev, G.; Leonov, S.; Scalera, L.; Butusov, D. Image Preprocessing for Artistic Robotic Painting. Inventions 2021, 6, 19.

18. Karimov, A.; Kopets, E.; Leonov, S.; Scalera, L.; Butusov, D. A Robot for Artistic Painting in Authentic Colors. J. Intell. Robot. Syst. 2023, 107, 34.

19. Guo, C.; Bai, T.; Wang, X.; Zhang, X.; Lu, Y.; Dai, X.; Wang, F.Y. ShadowPainter: Active Learning Enabled Robotic Painting through Visual Measurement and Reproduction of the Artistic Creation Process. J. Intell. Robot. Syst. 2022, 105, 61.

20. Lee, G.; Kim, M.; Lee, M.; Zhang, B.T. From Scratch to Sketch: Deep Decoupled Hierarchical Reinforcement Learning for Robotic Sketching Agent. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 5553–5559.

21. Scalera, L.; Seriani, S.; Gasparetto, A.; Gallina, P. Non-Photorealistic Rendering Techniques for Artistic Robotic Painting. Robotics 2019, 8, 10.

22. Aguilar, C.; Lipson, H. A robotic system for interpreting images into painted artwork. In Proceedings of the GA2008, 11th Generative Art Conference, Milan, Italy, 16–18 December 2008; pp. 372–387.

23. Lindemeier, T. Hardware-Based Non-Photorealistic Rendering Using a Painting Robot. Comput. Graph. Forum 2015, 34, 311–323.

24. Luo, R.C.; Liu, Y.J. Robot Artist Performs Cartoon Style Facial Portrait Painting. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 7683–7688.

25. Wang, T.; Toh, W.Q.; Zhang, H.; Sui, X.; Li, S.; Liu, Y.; Jing, W. RoboCoDraw: Robotic Avatar Drawing with GAN-Based Style Transfer and Time-Efficient Path Optimization. Proc. AAAI Conf. Artif. Intell. 2020, 34, 10402–10409.

26. Dong, L.; Li, W.; Xin, N.; Zhang, L.; Lu, Y. Stylized Portrait Generation and Intelligent Drawing of Portrait Rendering Robot. In Proceedings of the 2018 International Conference on Mechanical, Electronic and Information Technology, Shanghai, China, 15–16 April 2018.

27. Patrick, T.; Frederic, F.L. Sketches by Paul the Robot. In Proceedings of the Eighth Annual Symposium on Computational Aesthetics in Graphics, Visualization, and Imaging, Annecy, France, 4–6 June 2012.

28. Wu, P.L.; Hung, Y.C.; Shaw, J.S. Artistic robotic pencil sketching using closed-loop force control. Proc. Inst. Mech. Eng. Part J. Mech. Eng. Sci. 2022, 236, 9753–9762.

29. Gao, F.; Zhu, J.; Yu, Z.; Li, P.; Wang, T. Making Robots Draw A Vivid Portrait In Two Minutes. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 9585–9591.

30. Gao, Q.; Chen, H.; Yu, R.; Yang, J.; Duan, X. A Robot Portraits Pencil Sketching Algorithm Based on Face Component and Texture Segmentation. In Proceedings of the 2019 IEEE International Conference on Industrial Technology (ICIT), Melbourne, Australia, 13–15 February 2019; pp. 48–53.

31. Lin, C.Y.; Chuang, L.W.; Mac, T.T. Human portrait generation system for robot arm drawing. In Proceedings of the 2009 IEEE/ASME International Conference on Advanced Intelligent Mechatronics, Singapore, 14–17 July 2009; pp. 1757–1762.

32. Xue, T.; Liu, Y. Robot portrait rendering based on multi-features fusion method inspired by human painting. In Proceedings of the 2017 IEEE International Conference on Robotics and Biomimetics (ROBIO), Macau, China, 5–8 December 2017; pp. 2413–2418.

33. Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.E.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 172–186.

34. Yi, R.; Liu, Y.J.; Lai, Y.K.; Rosin, P.L. Unpaired Portrait Drawing Generation via Asymmetric Cycle Mapping. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 9–13 June 2020; pp. 8214–8222.

35. ZEUS Zero 6-DOF Robotic Arm. Available online: http://zero.globalzeus.com/en/6axis/ (accessed on 10 May 2023).

36. RoboWorld ZEUS Competition. 2022. Available online: http://www.globalzeus.com/kr/sub/ir/mediaView.asp?bid=2&b_idx=182 (accessed on 10 May 2023).

| Work | [25] | [29] | [10] | [26] | [30] | [31] | [32] | [27] |
|---|---|---|---|---|---|---|---|---|
| Drawing Time | 43.2 s | 2 min | 2 min | 4 min | 4–6 min | 4–6 min | Not Discussed | Not Discussed |
| Robotic Arm | UR5 6 DOF | 3 DOF | 4 DOF | 2 DOF | 3 DOF | Pica 7 DOF | Not Discussed | Not Discussed |
| Drawing Tool | Marker | Pencil | Pen | Pencil | Pencil | Pencil | Pencil | Pen |

| Work | [28] | [23] | [24] | Ours |
|---|---|---|---|---|
| Drawing Time | 30 min | 17 h | 1 to 2 h | 80 s |
| Robotic Arm | YASKAWA GP7 6 DOF | Reis Robotics RV20-6 6 DOF | 7 DOF | ZEUS 6 DOF |
| Drawing Tool | Chinese calligraphy pen | Paint brush | Paint brush | Chinese calligraphy pen |

| CPU | GPU | RAM |
|---|---|---|
| Intel Core i7-8750H | NVIDIA RTX 2080 8 GB | 16 GB |

| Module | Time |
|---|---|
| Portrait Generation | 25 ms |
| Sketch Generation | 7 s |
| Drawing Motion Generation | 55 ms |
| Robot Motion Control | 80 s |
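As a rough check on the timing breakdown above, the following sketch sums the four module times and reports how much of the total each one takes. It assumes the modules run strictly one after another with no overlap, which the table does not state; the variable and function names are hypothetical and chosen only for illustration.

```python
# Per-module times taken from the timing table, converted to seconds.
# Assumption: the modules run sequentially, so their times simply add up.
module_times_s = {
    "Portrait Generation": 0.025,        # 25 ms
    "Sketch Generation": 7.0,            # 7 s
    "Drawing Motion Generation": 0.055,  # 55 ms
    "Robot Motion Control": 80.0,        # 80 s
}


def total_time_s(times):
    """Return the end-to-end time as the sum of all module times."""
    return sum(times.values())


def share_of_total(times, name):
    """Return the fraction of the end-to-end time spent in one module."""
    return times[name] / total_time_s(times)


if __name__ == "__main__":
    print(f"End-to-end time: {total_time_s(module_times_s):.2f} s")
    for name in module_times_s:
        print(f"{name}: {share_of_total(module_times_s, name):.1%}")
```

Under the sequential assumption the total comes to 87.08 s, with the physical drawing step (Robot Motion Control) taking over 90% of it, so the 80 s figure in the title corresponds to the drawing step rather than to the whole pipeline.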

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nasrat, S.; Kang, T.; Park, J.; Kim, J.; Yi, S.-J. Artistic Robotic Arm: Drawing Portraits on Physical Canvas under 80 Seconds. Sensors 2023, 23, 5589. https://doi.org/10.3390/s23125589
