CACTUS: Content-Aware Compression and Transmission Using Semantics for Automotive LiDAR Data

Abstract

1. Introduction

- Semantic information allows the bit rate to be dynamically allocated to different parts of the PC and information transmission to be scheduled in a content-aware manner (while discarding useless data).

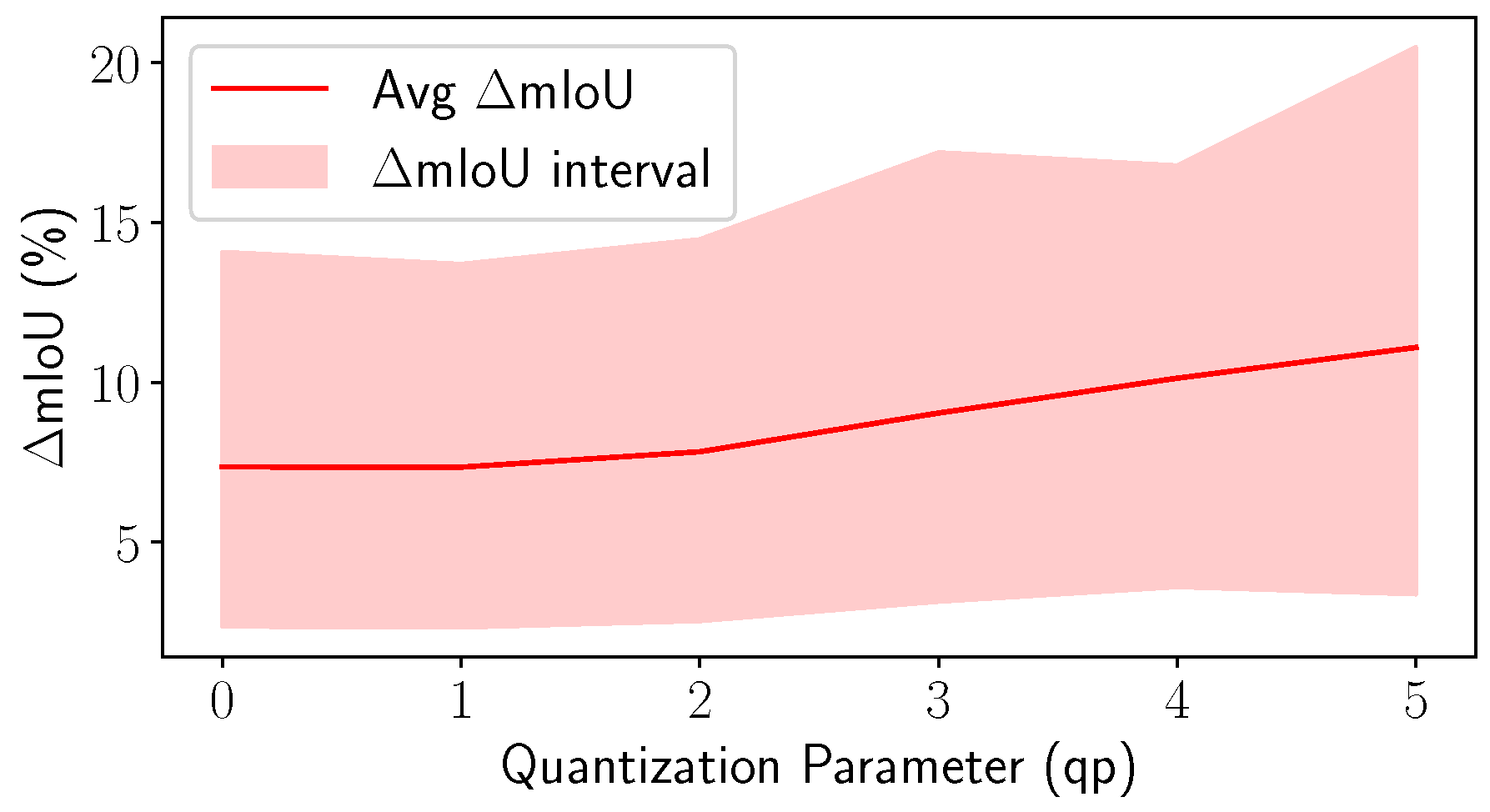

- The distortion introduced by coding operations could prevent correct classification when data are reconstructed and segmented at the receiver (see Section 4); a cognitive source coding approach instead signals to the receiver the semantic information obtained from the originally acquired data.

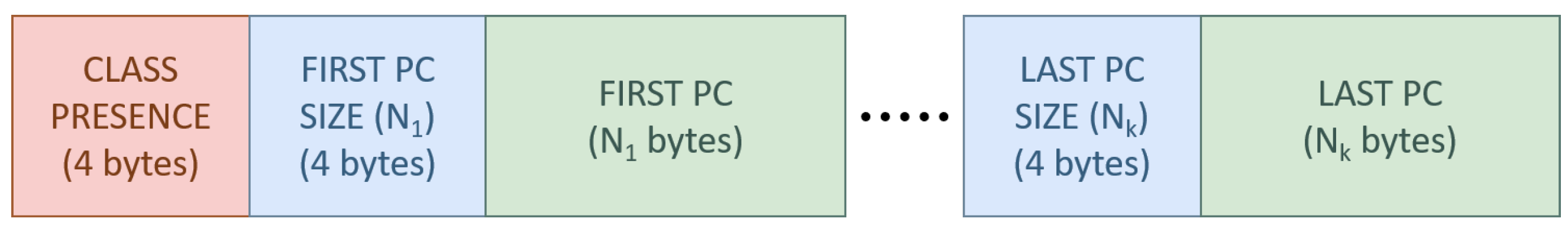

- Semantic information can be transmitted with a very limited overhead (see Section 3), sparing the segmentation task at the decoder side.

- Transmitting labels proves to be more accurate than performing SS at the decoder on the reconstructed PC (because of compression noise).

- The bitrate can be flexibly allocated by choosing the quality level or skipping some parts in a content-aware manner according to the network conditions or user preferences.

- The execution time required to compress the PC and the semantic information is lower with CACTUS than with the reference codecs. This result is obtained without considering the time required to compute the semantic information, because this step must be performed regardless of the coding procedure.
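The idea summarized in the list above (partition the PC by semantic class, code each partition independently, and pick a quality level or skip a class entirely) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: uniform quantization followed by `zlib` stands in for a real geometry codec such as TMC13 or Draco, and the function names, class ids, and quantization steps are all hypothetical.

```python
import struct
import zlib
from collections import defaultdict


def partition_by_label(points, labels):
    """Group points into one list per semantic class."""
    parts = defaultdict(list)
    for point, label in zip(points, labels):
        parts[label].append(point)
    return dict(parts)


def encode_partition(points, step):
    """Stand-in geometry codec (assumption): uniform quantization + zlib."""
    raw = b"".join(
        struct.pack("<3i", *(round(v / step) for v in p)) for p in points
    )
    return zlib.compress(raw)


def decode_partition(payload, step):
    """Invert encode_partition up to the quantization error."""
    raw = zlib.decompress(payload)
    return [
        tuple(v * step for v in struct.unpack_from("<3i", raw, offset))
        for offset in range(0, len(raw), 12)
    ]


def encode_scene(points, labels, steps):
    """Encode each class with its own step; classes missing from `steps` are skipped."""
    stream = {}
    for label, part in partition_by_label(points, labels).items():
        if label in steps:
            stream[label] = encode_partition(part, steps[label])
    return stream


# Hypothetical class ids: 0 = unimportant (skipped), 1 = fine step, 2 = coarse step.
points = [(1.0, 2.0, 0.5), (1.2, 2.1, 0.4), (10.0, -3.0, 0.0), (30.0, 5.0, 2.0)]
labels = [1, 1, 2, 0]
steps = {1: 0.05, 2: 0.5}

stream = encode_scene(points, labels, steps)
decoded = {c: decode_partition(b, steps[c]) for c, b in stream.items()}
```

Because each sub-stream is keyed by its class, the receiver recovers the label of every decoded point without running SS again; the only label overhead in this sketch is one class id per sub-stream.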

2. Related Work

2.1. Collaborative Strategies for LiDAR Point Clouds

2.2. An Overview of Compression Strategies for LiDAR Point Clouds

3. Methodology

3.1. Adopted Segmentation and Coding Solutions in the CACTUS Framework

3.2. Content-Aware Compression for Automotive LiDAR Point Clouds

3.3. Learned Point Cloud Coding Methods

4. Experimental Results

4.1. Experiments

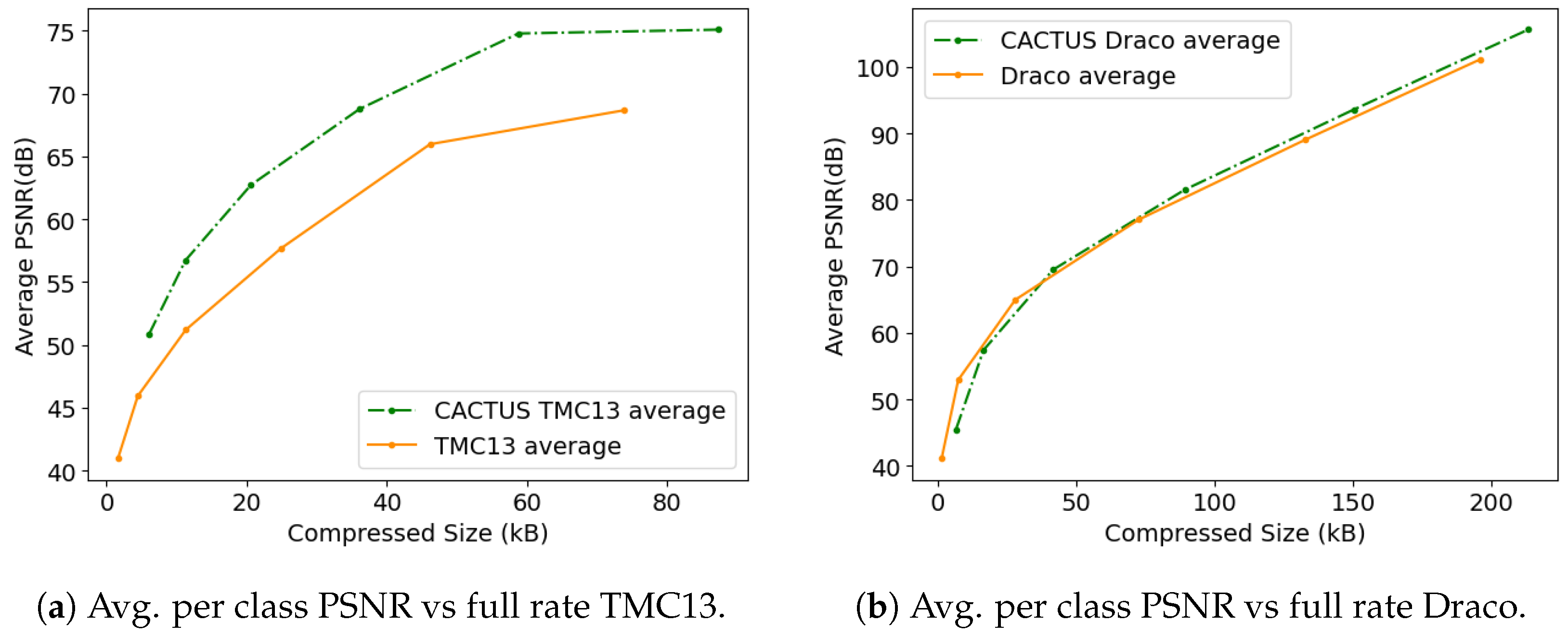

4.2. Results on TMC13 and Draco

- is the output predicted by the NN by processing the geometry of a PC compressed with factor .

- are the labels obtained by assigning to each compressed point the label predicted by RandLA-Net for its nearest neighbor in the original geometry (recoloring in TMC13).

- is the PC recolored using the original ground truth instead of the RandLA-Net prediction.
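The nearest-neighbor label assignment described in the second item above can be sketched as follows. This is a brute-force illustration in plain Python, assuming points are 3-tuples; the function name and the sample data are hypothetical, and it is not the TMC13 recoloring routine itself.

```python
def transfer_labels(original_points, original_labels, decoded_points):
    """Give each decoded point the label of its nearest original point."""

    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    transferred = []
    for q in decoded_points:
        nearest = min(
            range(len(original_points)),
            key=lambda i: sq_dist(original_points[i], q),
        )
        transferred.append(original_labels[nearest])
    return transferred


original = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 5.0, 0.0)]
predicted = ["road", "road", "car"]          # e.g. labels predicted on the original PC
decoded = [(0.1, 0.0, 0.0), (4.8, 5.1, 0.0)]  # geometry after lossy coding

print(transfer_labels(original, predicted, decoded))  # ['road', 'car']
```

The search here costs one distance evaluation per pair of points; for full LiDAR scans a spatial index such as a k-d tree would normally replace the inner loop.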

4.3. Results with Neural Codecs

- Increase: e.g., if the class of each point is assigned at random, then the total number of voxels is going to increase by a factor of n after partitioning.

- Remain constant: if the PC can be perfectly divided into n regions with no overlap, then the total number of voxels will not change.

- Decrease: there are some configurations, although rare, where there are empty regions in the PC that can be ignored after partitioning because of a better estimation of the bounds of the PC, which can lead to a lower number of voxels being fed to the codec.
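The three cases above can be reproduced on a toy example. The sketch below assumes that the codec receives a dense voxel grid spanning the axis-aligned bounding box of whatever it is asked to encode; that assumption, the helper names, and the one-dimensional sample data are illustrative and not taken from the paper.

```python
def bbox_voxels(voxels):
    """Cells in the axis-aligned bounding box of integer voxel coordinates."""
    count = 1
    for axis in range(3):
        values = [v[axis] for v in voxels]
        count *= max(values) - min(values) + 1
    return count


def total_after_partition(voxels, labels):
    """Sum of bounding-box cells over the per-class partitions."""
    parts = {}
    for voxel, label in zip(voxels, labels):
        parts.setdefault(label, []).append(voxel)
    return sum(bbox_voxels(part) for part in parts.values())


line = [(x, 0, 0) for x in range(8)]                  # 8 occupied voxels in a row
gapped = [(x, 0, 0) for x in (0, 1, 2, 6, 7)]         # same extent, empty middle

print(bbox_voxels(line))                                        # 8 (no partitioning)
print(total_after_partition(line, [0, 1] * 4))                  # 14: interleaved classes, increase
print(total_after_partition(line, [0] * 4 + [1] * 4))           # 8: disjoint halves, constant
print(bbox_voxels(gapped))                                      # 8 (no partitioning)
print(total_after_partition(gapped, [0, 0, 0, 1, 1]))           # 5: tighter bounds, decrease
```

With interleaved classes every partition spans almost the whole scene, so the total approaches n times the original count, which matches the random-assignment case described above.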

5. Discussion

- Across all the proposed codecs (see Figure 3a,b and Figure 7), when semantic information needs to be transmitted, it is more effective to use CACTUS than the default codecs. This is likely due to the fact that standard attribute coding procedures are designed for continuous values (such as color and normals), and they are not suited for categorical labels, leading to sub-optimal performance. This is solved by CACTUS, which independently encodes the geometry of different semantic classes, allowing the transmission of this type of information almost for free.

- From the analysis in Appendix A and the empirical results in Figure 4, it is possible to see that when the points are encoded without changing their representation, and when the algorithm complexity is super-linear (which is almost always the case), independently compressing partitions of the PC leads to lower encoding and decoding time. This is especially useful in the automotive scenario, where working in real time is a very important requirement. This does not always apply to neural codecs, since most of them process voxelized representations. Additionally, since parallelization is often exploited in those cases, the execution time depends heavily on the specific PC being processed and on the class distribution.

- As can be seen from Figure 5, independently transmitting semantically disjoint partitions helps improve the quality of the class information at the receiver side for two reasons. First, encoding the whole PC with a standard codec can lead to the removal of duplicate points, which is non-optimal, since important information (such as the presence of a pedestrian) could be removed from the scene. Second, the performance of current SS strategies is hindered by compression noise, as shown by the analysis carried out in this paper.

- This type of approach has much greater flexibility than simply using a standard codec, since it allows developing rate allocation algorithms that can choose different coding parameters for each semantic class depending on the requirements of the application.

- There are some use cases where CACTUS is sub-optimal. In particular, if all points are equally important for the end task and if the receiver does not care about semantic information, then using the base codec allows achieving better RD performance. This can be easily seen from Figure 3a,b and Figure 7, where the geometry RD curves of TMC13, Draco and DSAE are above the corresponding CACTUS ones. The main reason for this is that the latter further sparsifies the PC, making it hard to fully exploit the spatial correlation in the data.

- Many LPCGCs struggle when reconstructing sparse PCs, and the issue is further amplified by CACTUS. This makes it non-trivial to seamlessly use them in the framework.
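The timing claim in the second item above can be illustrated with a simple cost model. The model is an assumption made here for illustration and is not the derivation of Appendix A: suppose a codec needs $T(N) = cN^{\alpha}$ time on $N$ points with $\alpha > 1$, and the PC is split into $K$ partitions of sizes $N_1, \dots, N_K$ with $\sum_k N_k = N$, processed one after the other. Since $x^{\alpha} \le x$ for $x \in [0, 1]$,

$$
\sum_{k=1}^{K} T(N_k) \;=\; cN^{\alpha} \sum_{k=1}^{K} \left(\frac{N_k}{N}\right)^{\alpha} \;\le\; cN^{\alpha} \sum_{k=1}^{K} \frac{N_k}{N} \;=\; T(N),
$$

with equality only when a single partition contains all the points. For $K$ partitions of equal size the ratio is $K^{1-\alpha}$; for example, with $\alpha = 2$ and $K = 4$ the partitioned encoding takes one quarter of the time under this model.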

6. Conclusions and Future Works

- The codecs that are currently proposed in the literature do not use semantic information when performing compression; therefore, the performance of CACTUS might be considerably improved with an ad hoc codec that is conditioned on the class of the considered partition. Another possibility would be to make the codec auto-regressive, i.e., the decoder could exploit the already decoded parts of the PC to infer some information about the parts that it has not yet reconstructed. This usually leads to bitrate savings, which could mitigate the fact that independently decoding the partitions makes it harder for the system to exploit spatial redundancy.

- The effect of other SS schemes on the framework should be explored to assess their impact on the overall coding performance.

- Furthermore, this work shows that compression noise can considerably hinder the performance of scene understanding algorithms. This should strongly motivate researchers to study new techniques to make their models more robust to this and other types of noise. For example, training the NNs on compressed data might make them much more robust to the modified data distribution.

- It might be possible to train the whole system (semantic segmentation model and codec) in an end-to-end fashion to achieve better representational capabilities and faster inference due to the features shared by the two blocks.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| CACTUS | Content-Aware Compression and Transmission Using Semantics |
| DL | Deep Learning |
| DSAE | Distributed Source Auto-Encoder |
| GPCC | Geometry-based Point Cloud Coding |
| LiDAR | Light Detection and Ranging |
| LPCGC | Learned Point Cloud Geometry Codec |
| mIoU | mean Intersection over Union |
| NN | Neural Network |
| PC | Point Cloud |
| PCC | Point Cloud Coding |
| PSNR | Peak Signal-to-Noise Ratio |
| QP | Quantization Parameter |
| RAHT | Region Adaptive Hierarchical Transform |
| RD | Rate Distortion |
| SS | Semantic Segmentation |

Appendix A


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mari, D.; Camuffo, E.; Milani, S. CACTUS: Content-Aware Compression and Transmission Using Semantics for Automotive LiDAR Data. Sensors 2023, 23, 5611. https://doi.org/10.3390/s23125611
