Remaining Useful-Life Prediction of the Milling Cutting Tool Using Time–Frequency-Based Features and Deep Learning Models

Abstract

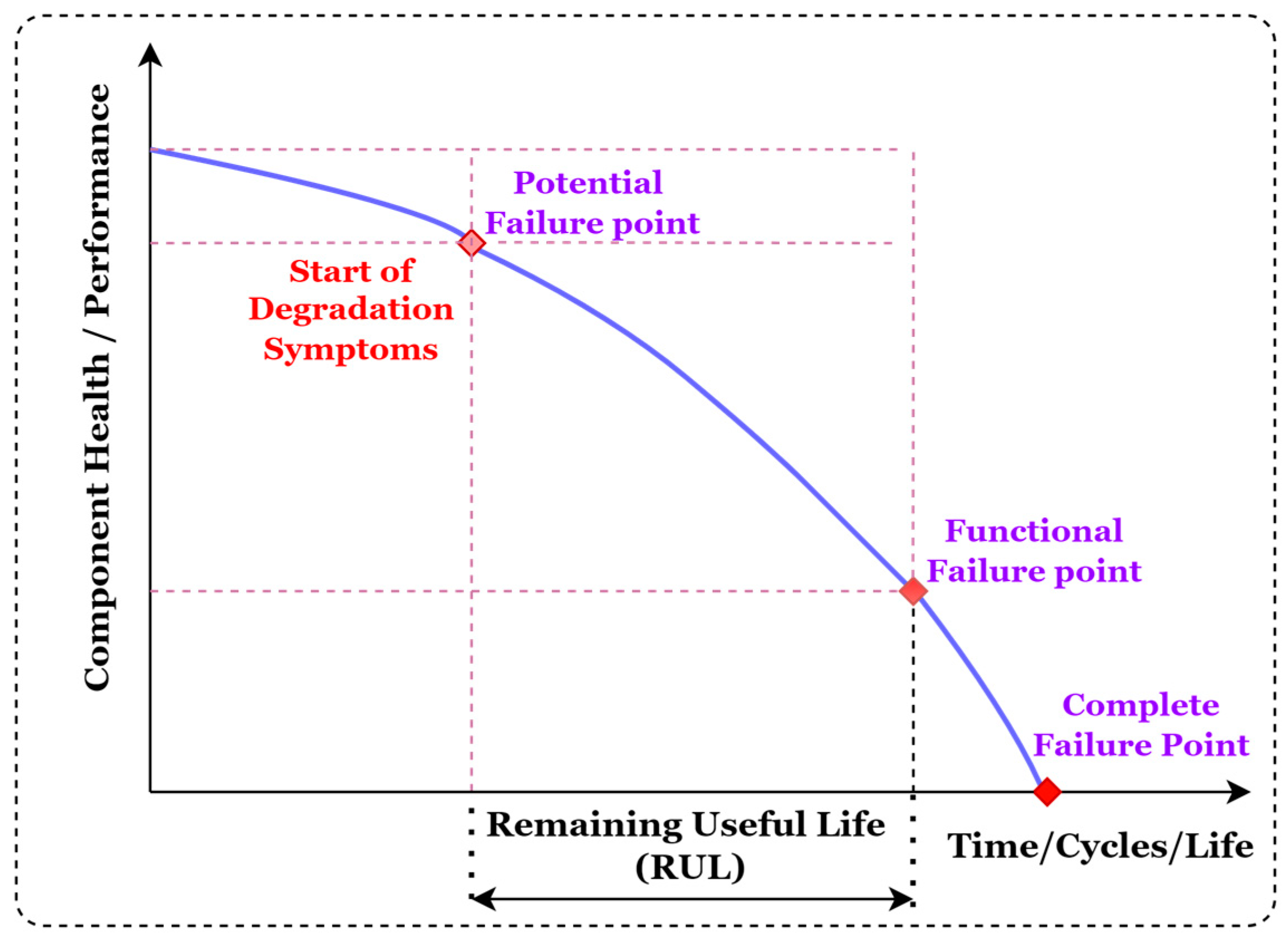

1. Introduction

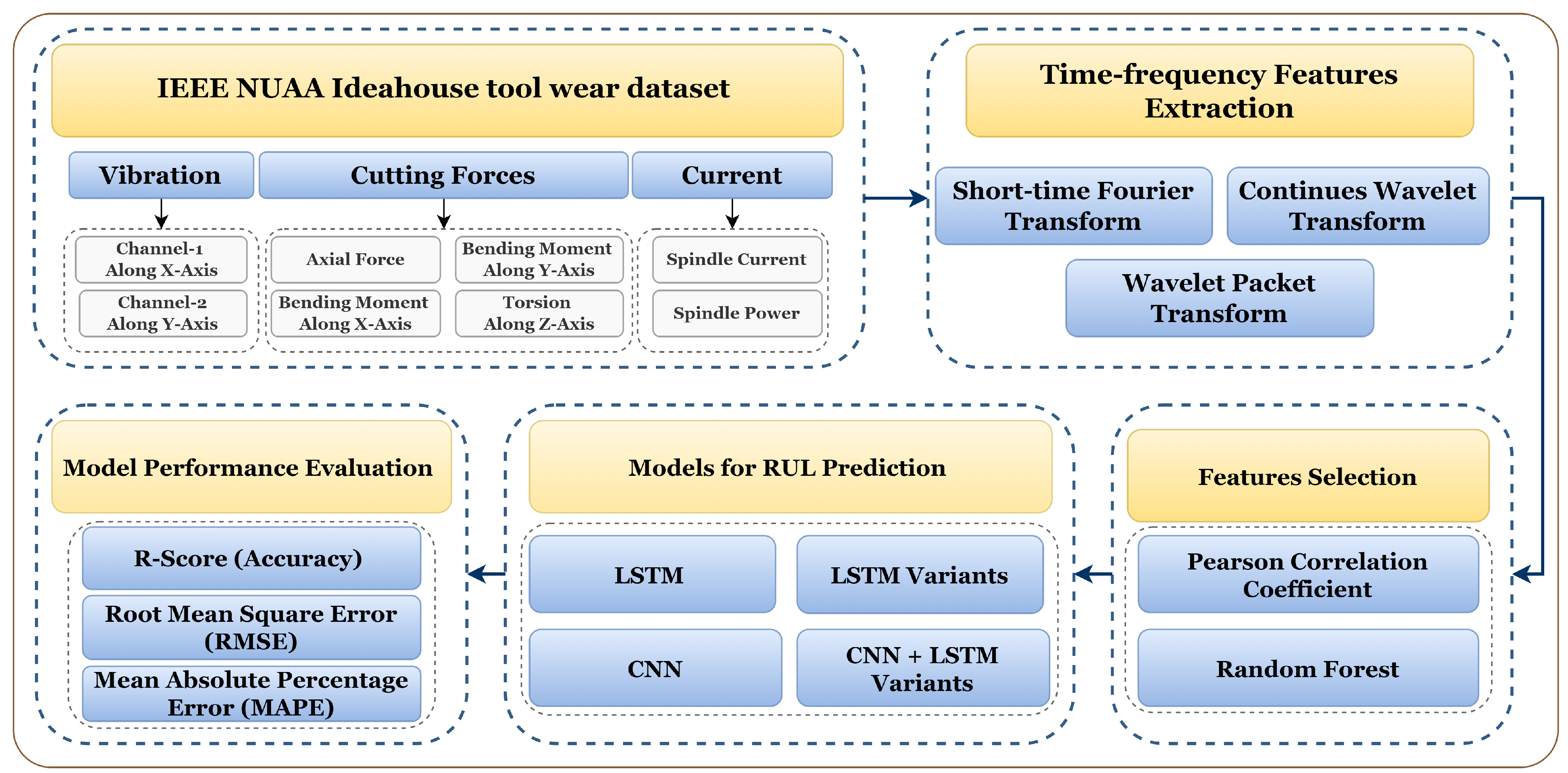

- To estimate the remaining useful life (RUL) of the milling cutting tool using the NUAA_Ideahouse dataset [8];

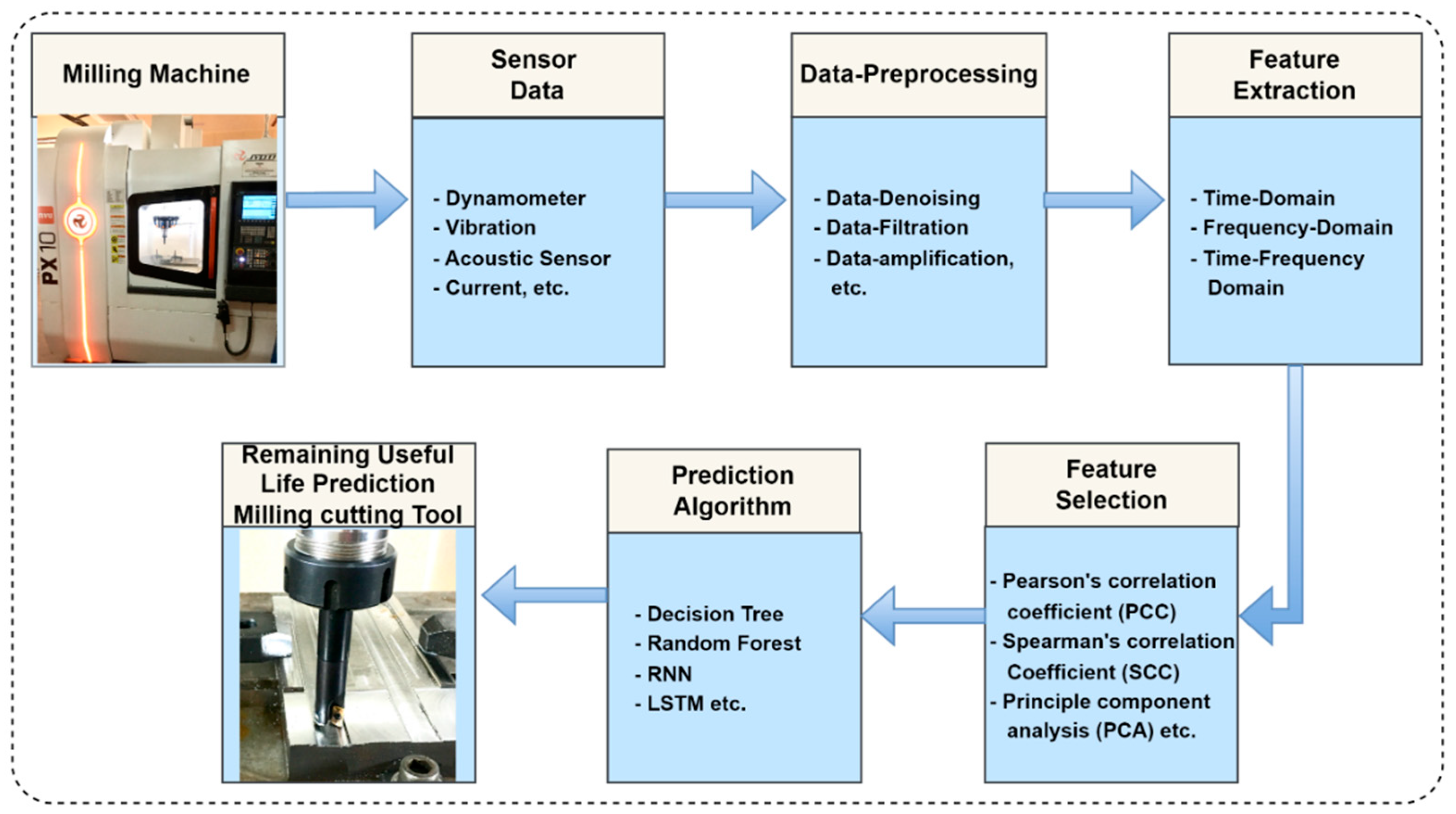

- To apply time–frequency feature extraction techniques, namely the short-time Fourier transform (STFT), continuous wavelet transform (CWT), and wavelet packet transform (WPT), to obtain informative features from the data at reduced dimensionality;

- To apply several machine learning and deep learning models to cutting tool RUL prediction and compare the performance of each model using multiple evaluation parameters.

2. Related Work

2.1. Physics-Based Model

2.2. Data-Driven Model

2.3. Feature Extraction and Selection

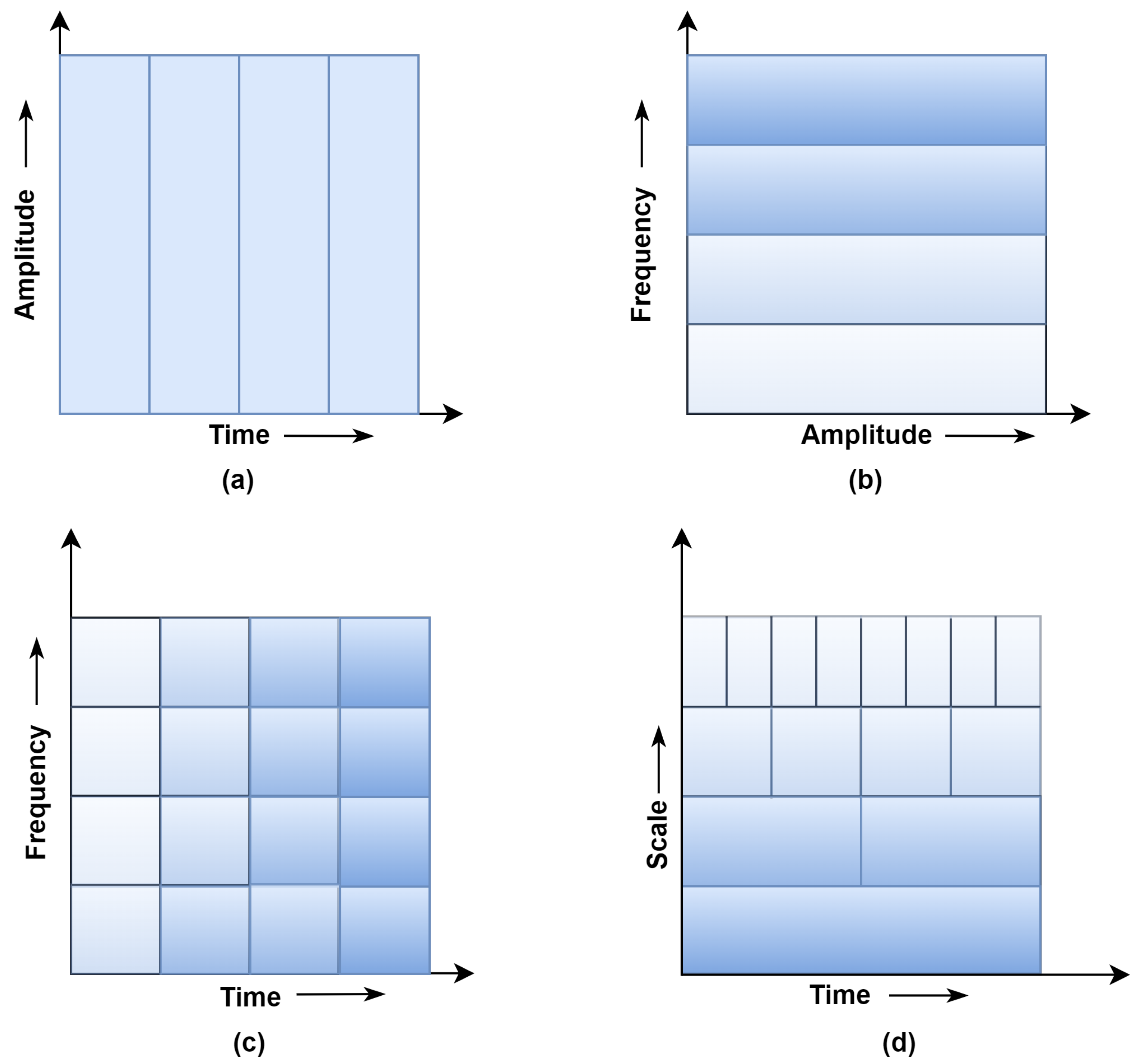

3. Time–Frequency Domain Feature Extraction

3.1. Short-Time Fourier-Transform (STFT)

3.2. Wavelet Transforms (WT)

3.2.1. Continuous Wavelet Transform (CWT)

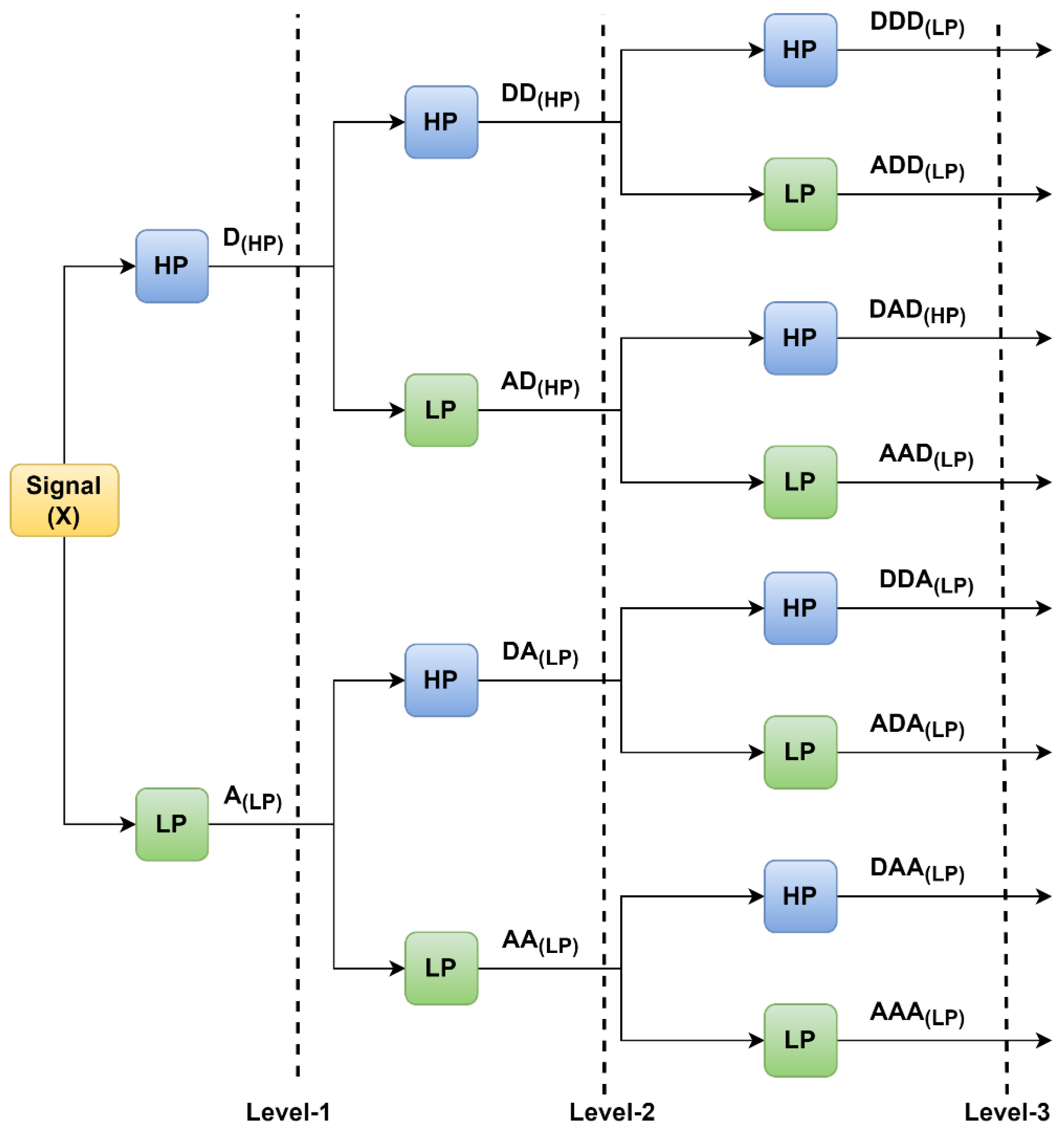

3.2.2. Wavelet Packet Transform (WPT)

4. Proposed Methodology

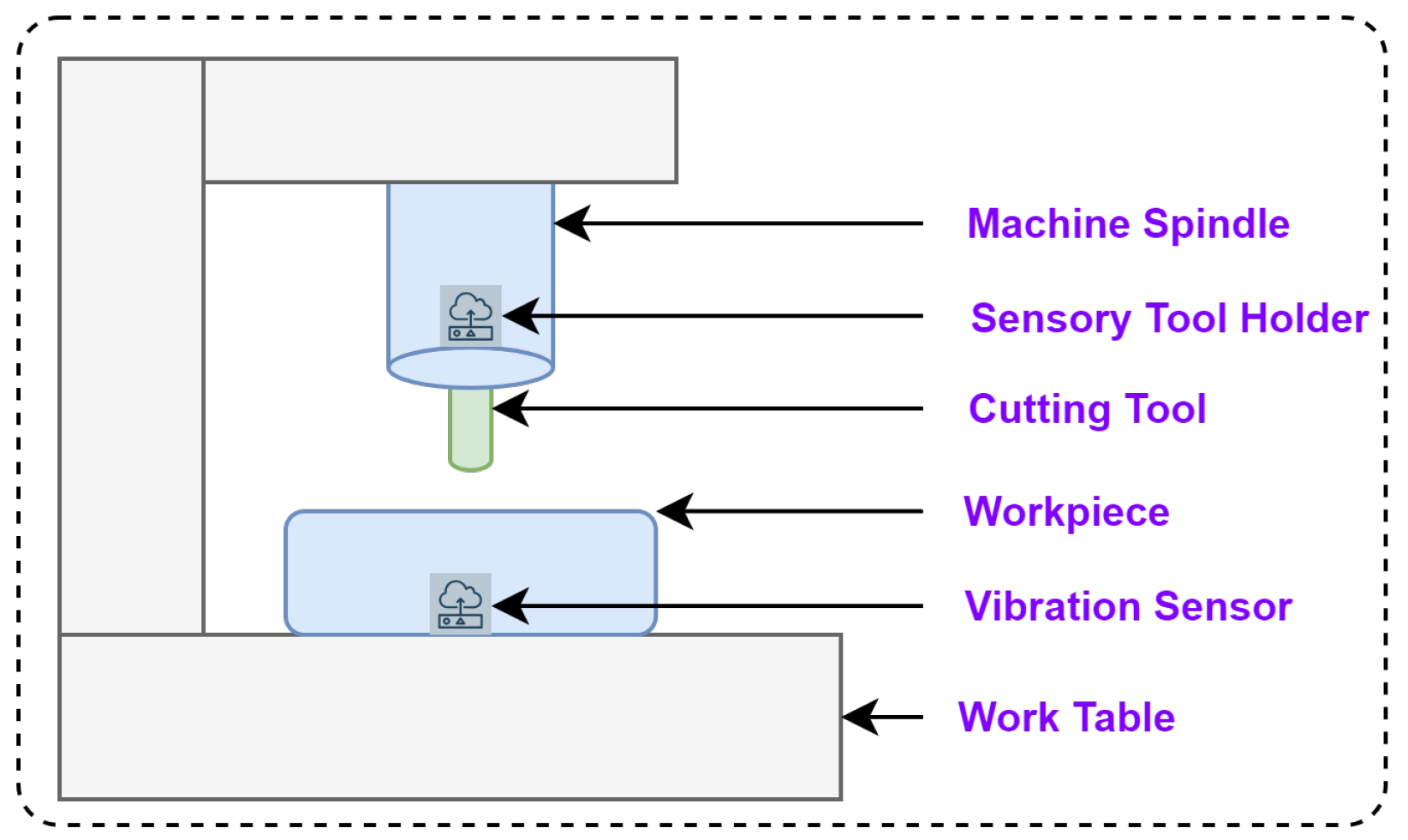

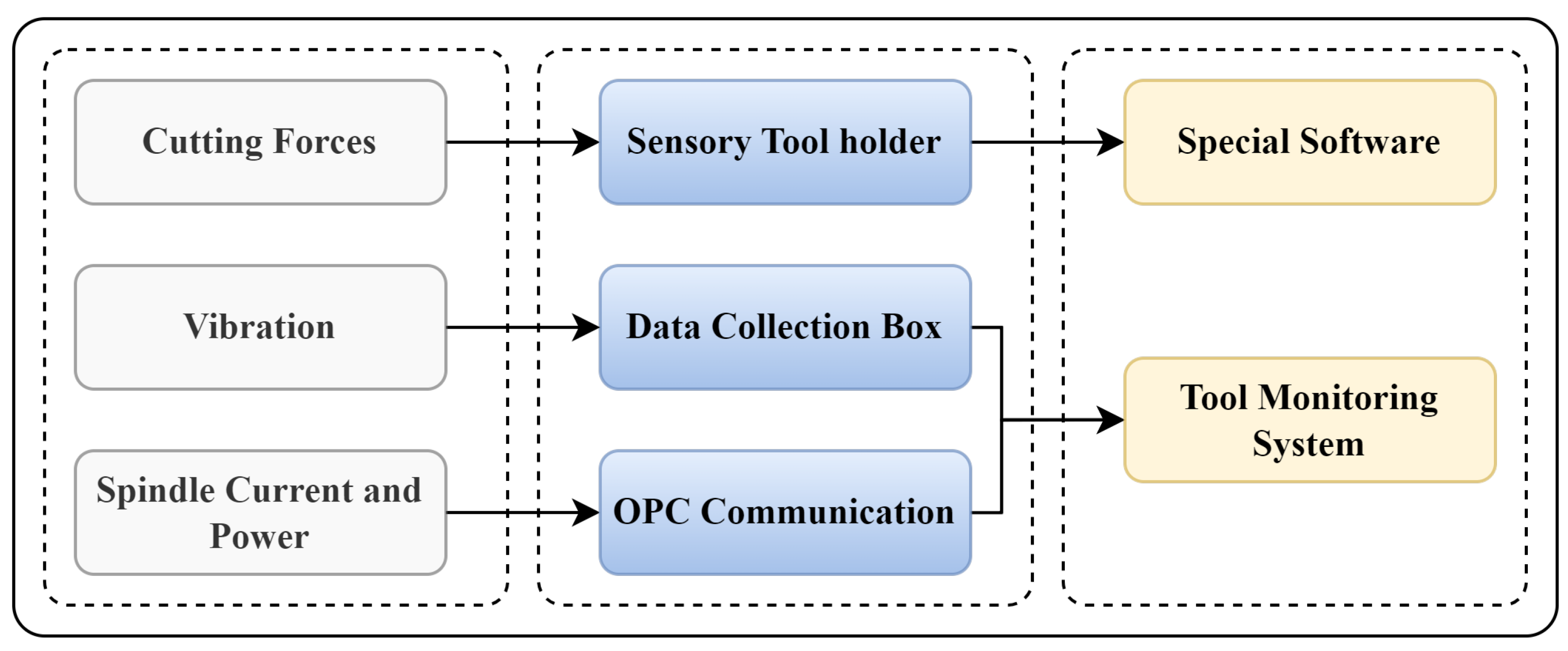

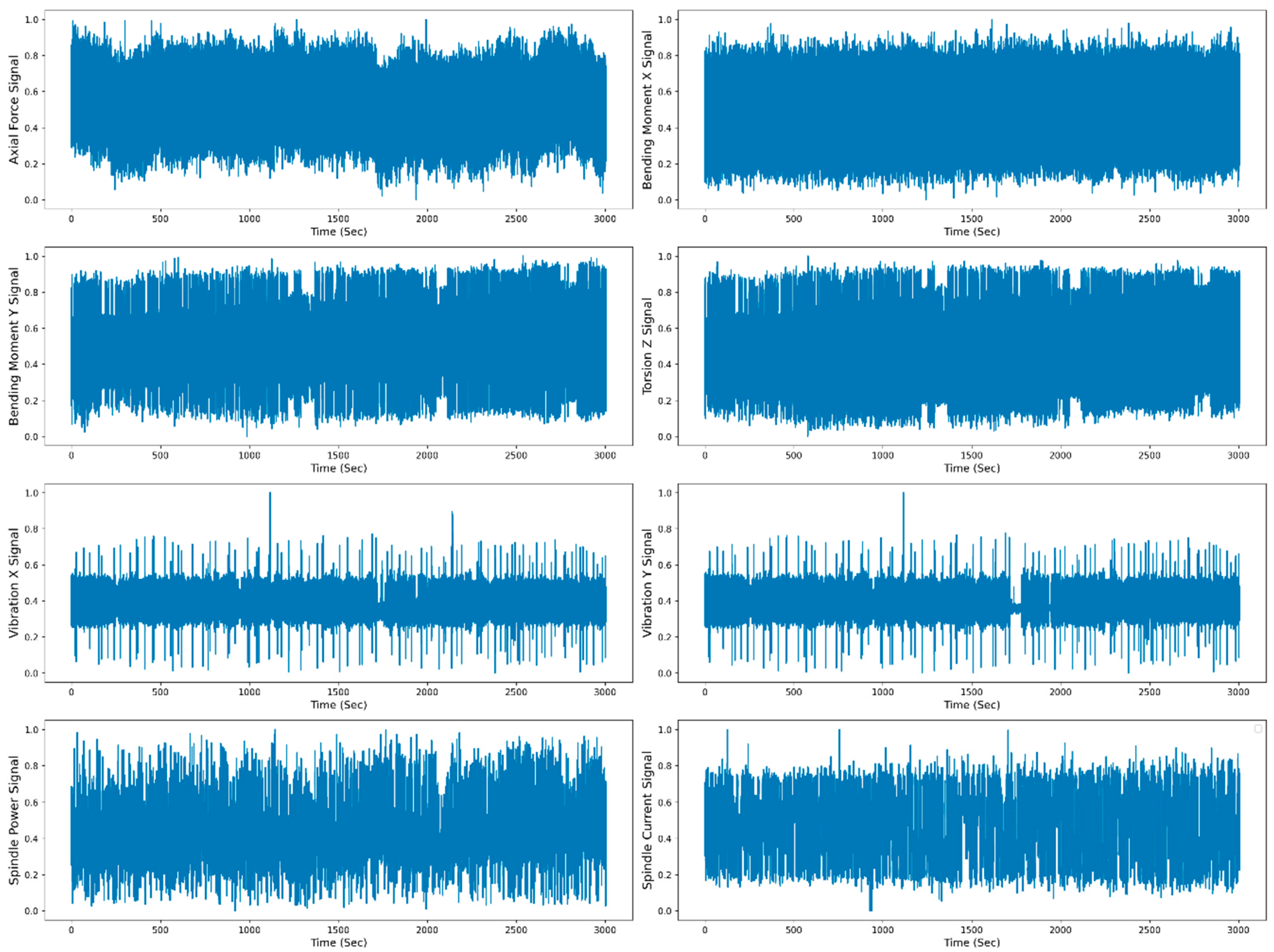

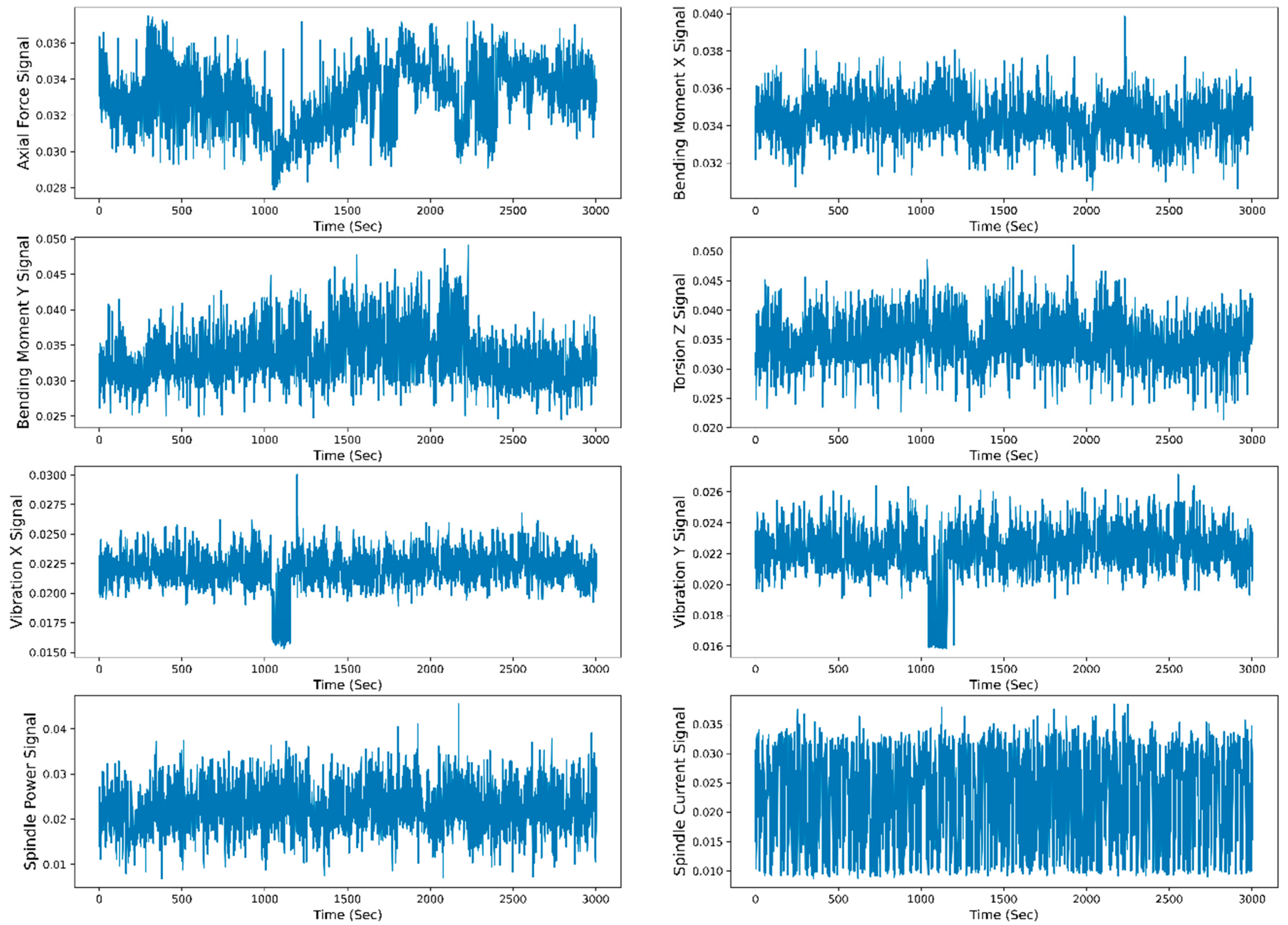

4.1. Dataset Description

4.2. Feature Extraction and Selection

4.3. Models for RUL Prediction

4.4. Performance Evaluation Parameters

5. Results and Discussion

- Feature extraction based on the time–frequency domain (TFD) techniques CWT, STFT, and WPT, and feature selection using the PCC and RFR methods, are discussed;

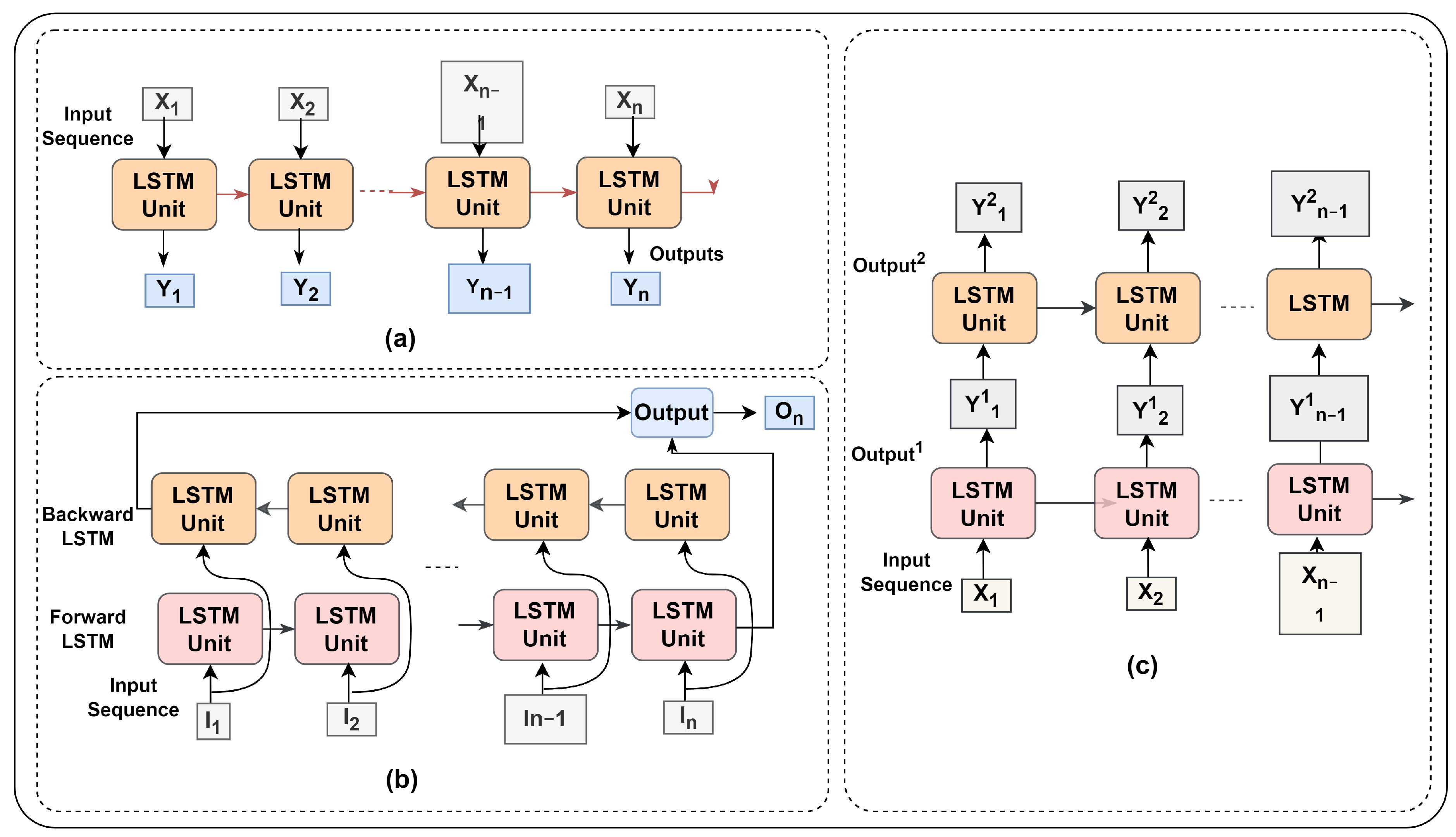

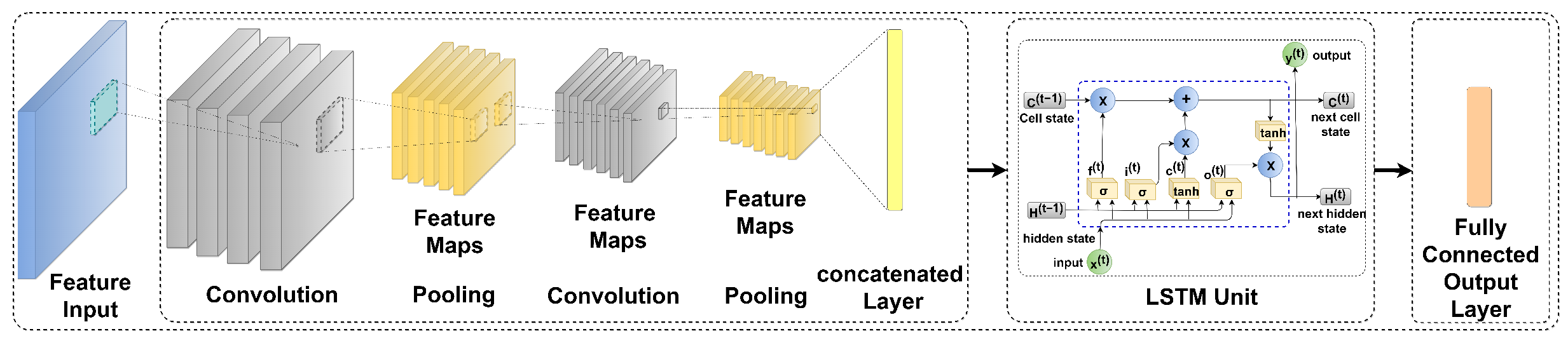

- For each TFD feature set, selected with the PCC and RF feature selection techniques, the performance of the ML models (SVR, RFR, and GBR) and the DL models (LSTM, LSTM variants, CNN, and hybrid models combining CNN with LSTM variants) is evaluated;

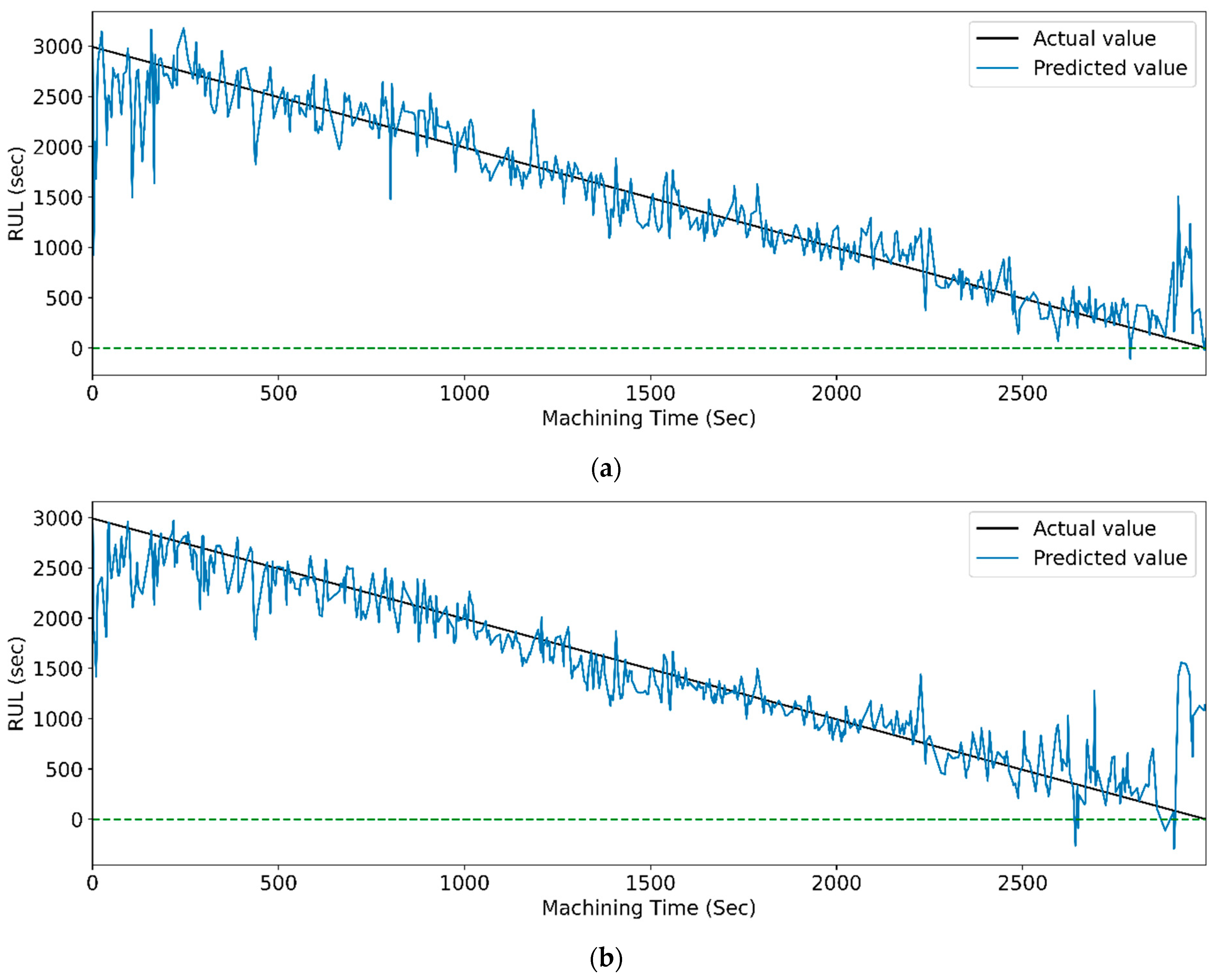

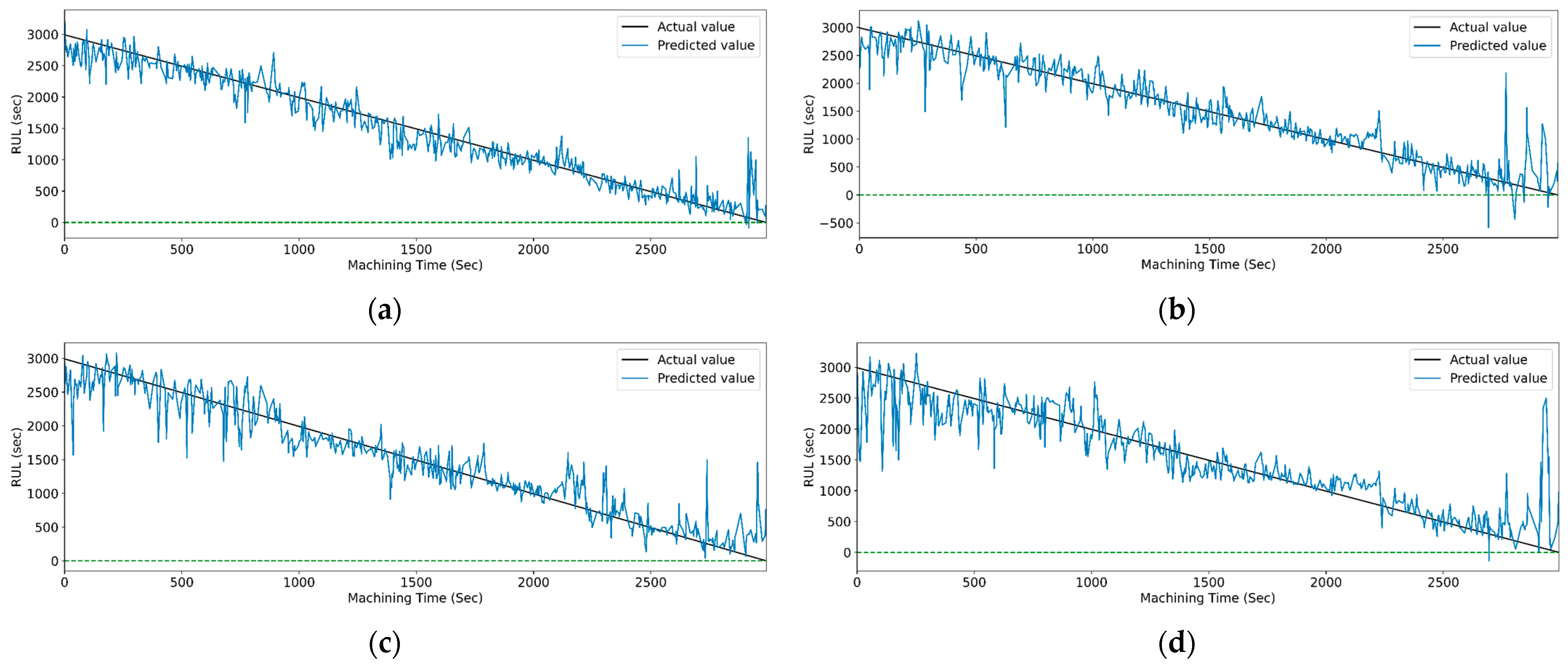

- Finally, graphs of the actual and predicted RUL of the cutting tool versus the actual machining time are plotted for each condition, and a summary of all the results is discussed.

5.1. Feature Extraction and Selection

5.2. Machine Learning Models Performance

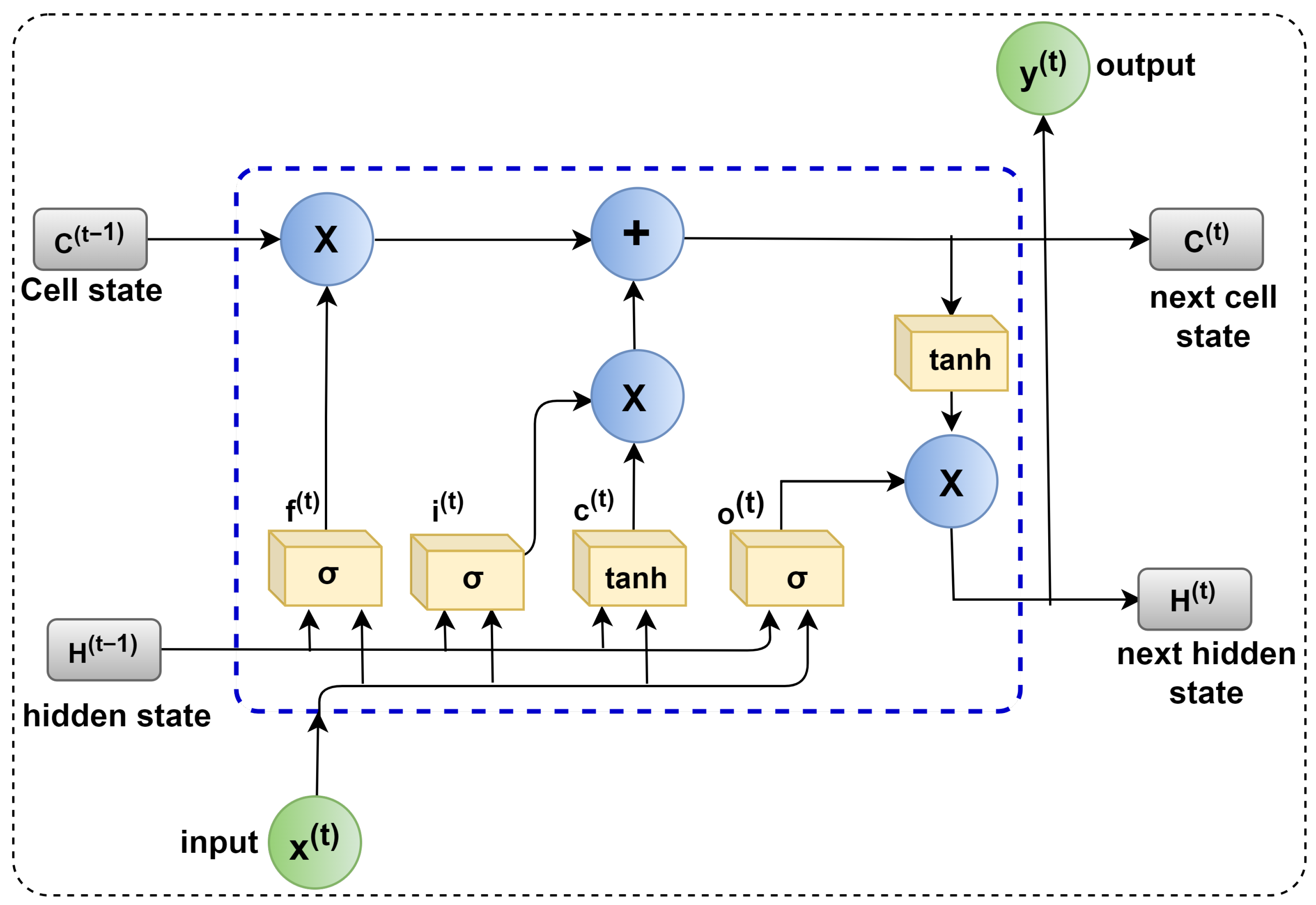

5.3. Deep Learning Model Performance

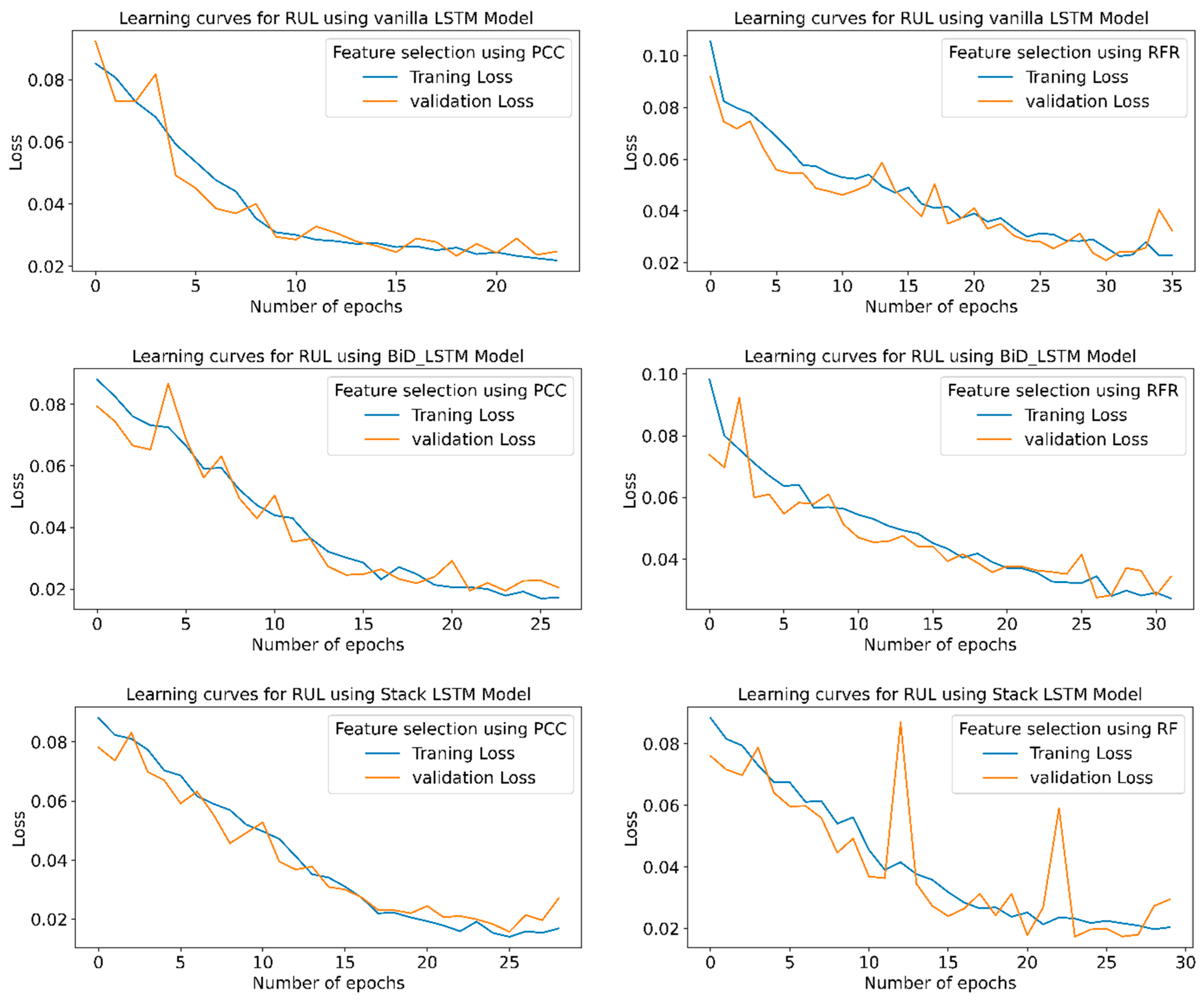

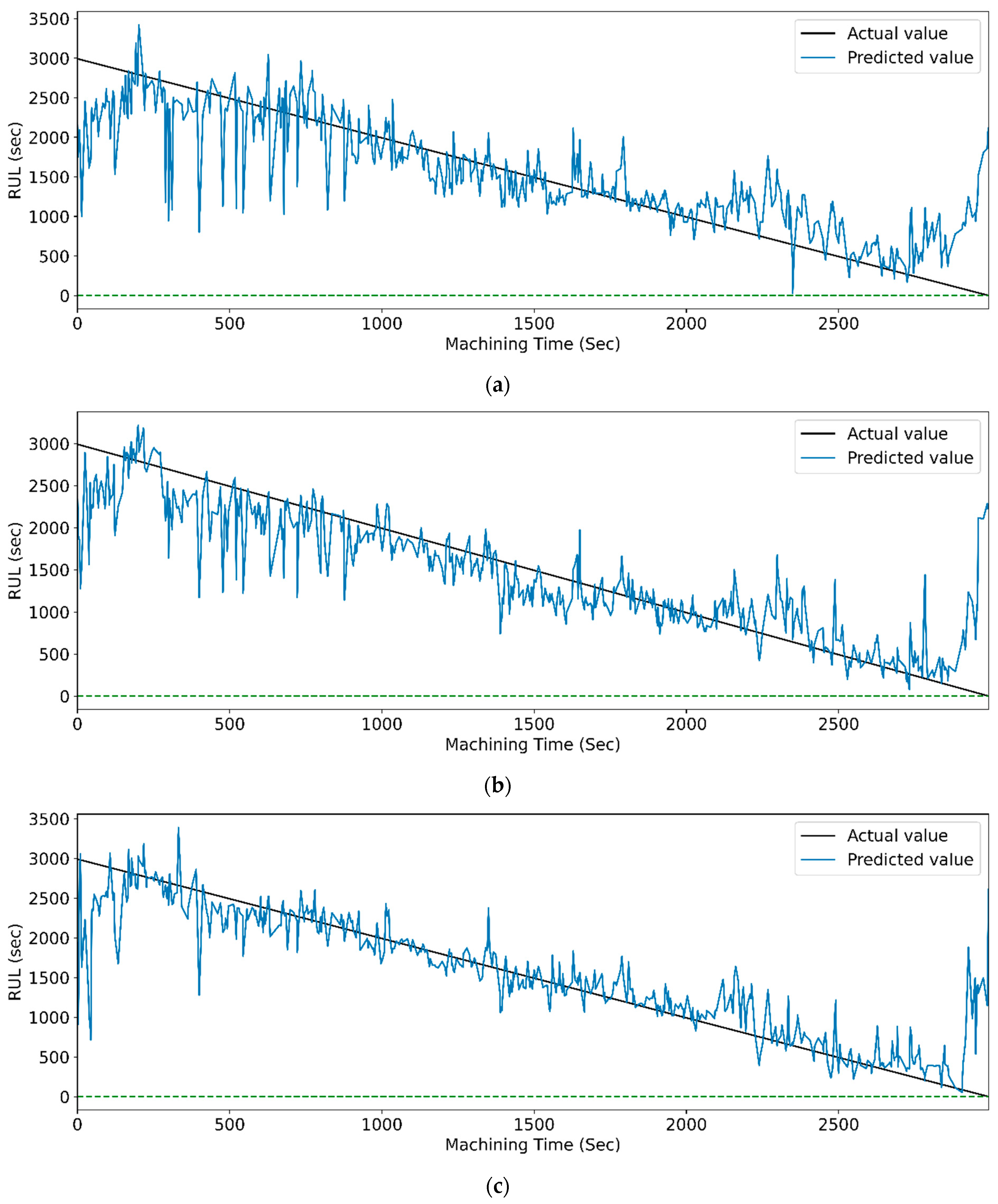

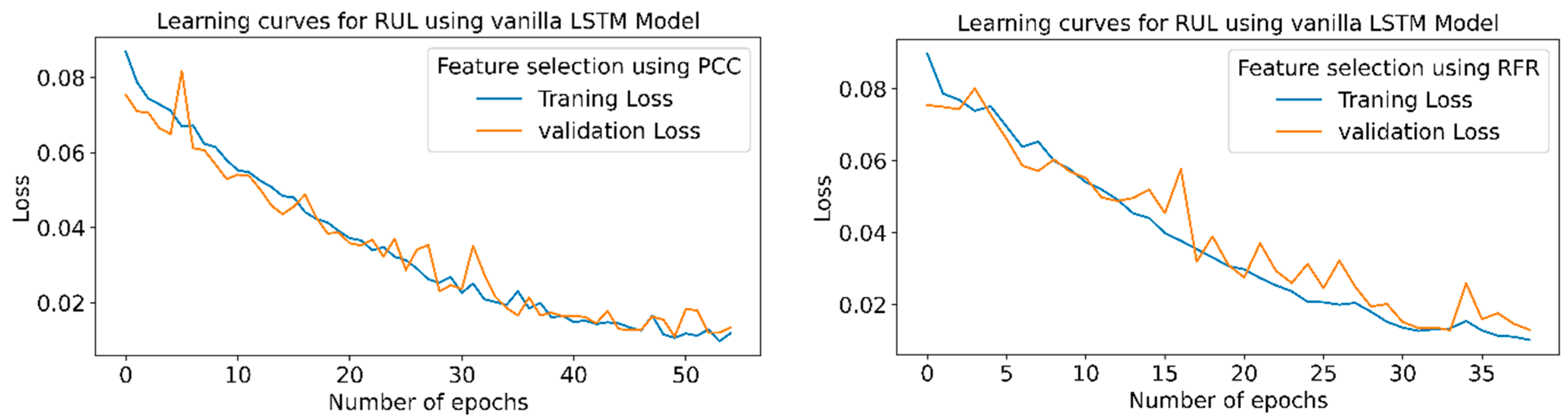

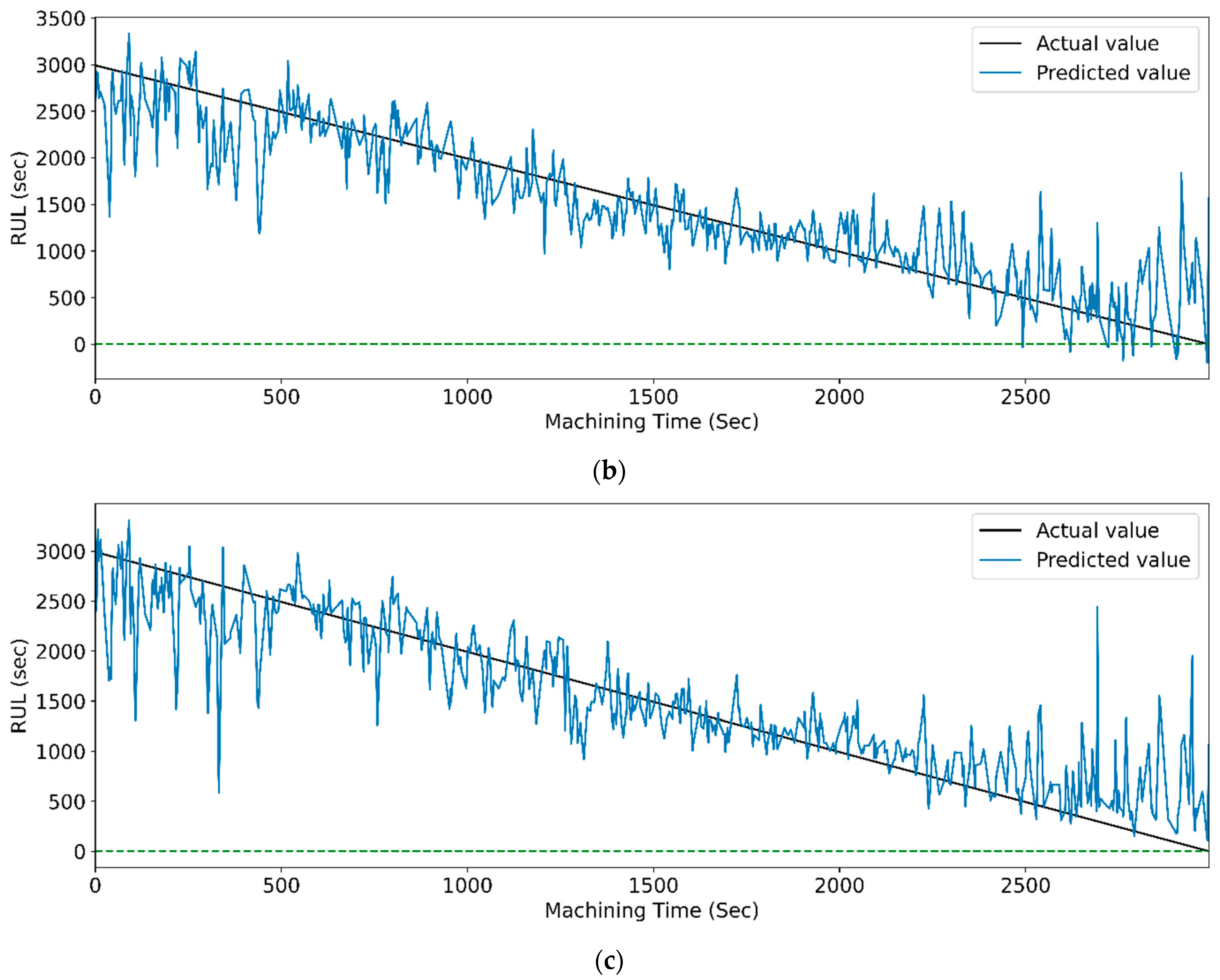

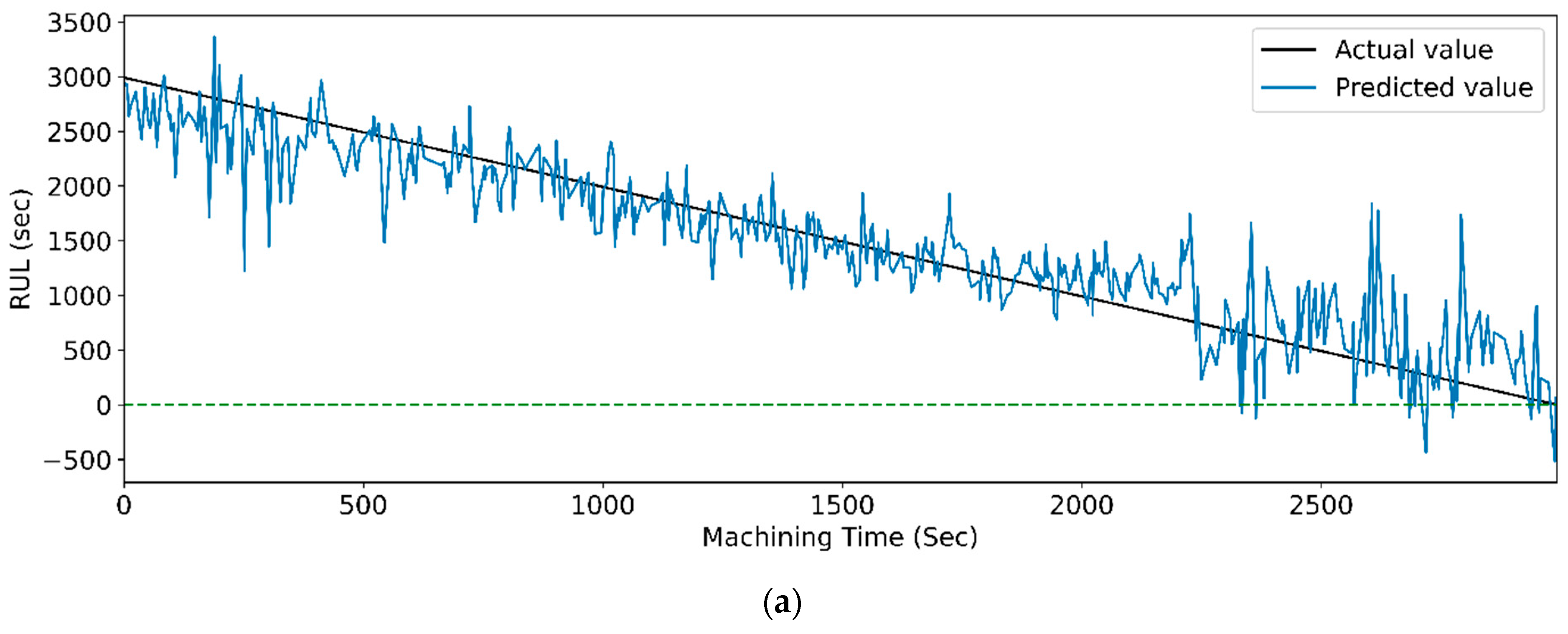

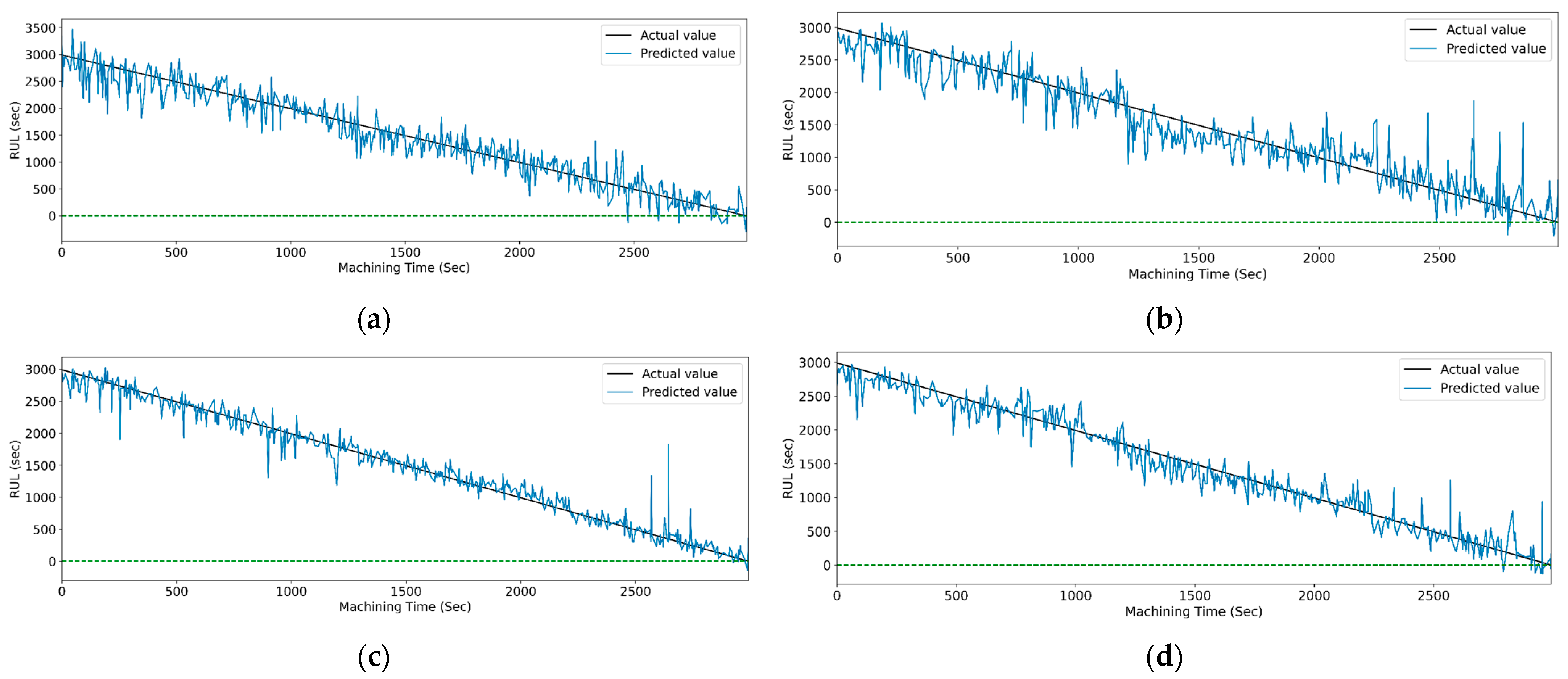

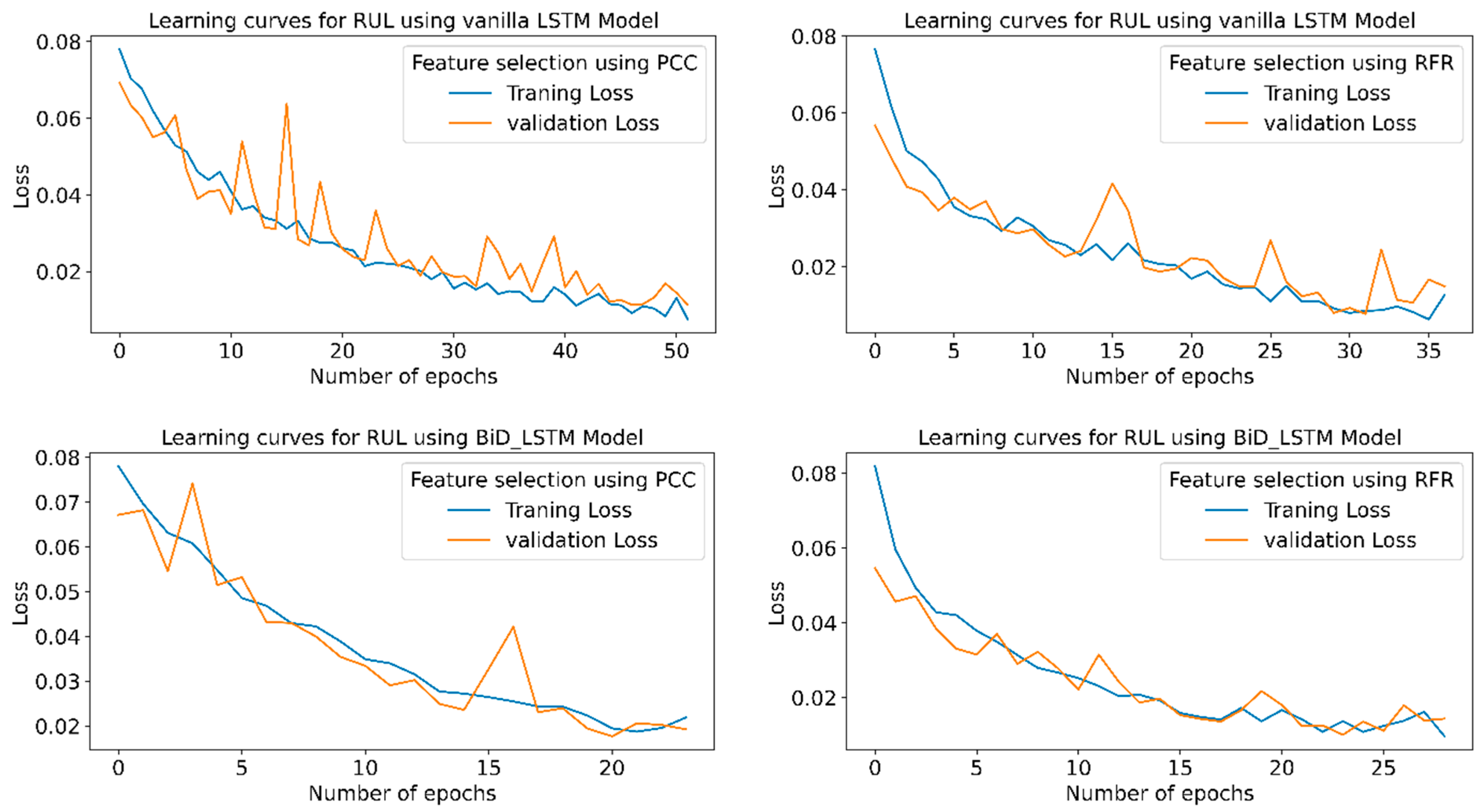

5.3.1. RUL Prediction Using STFT Feature Extraction Technique

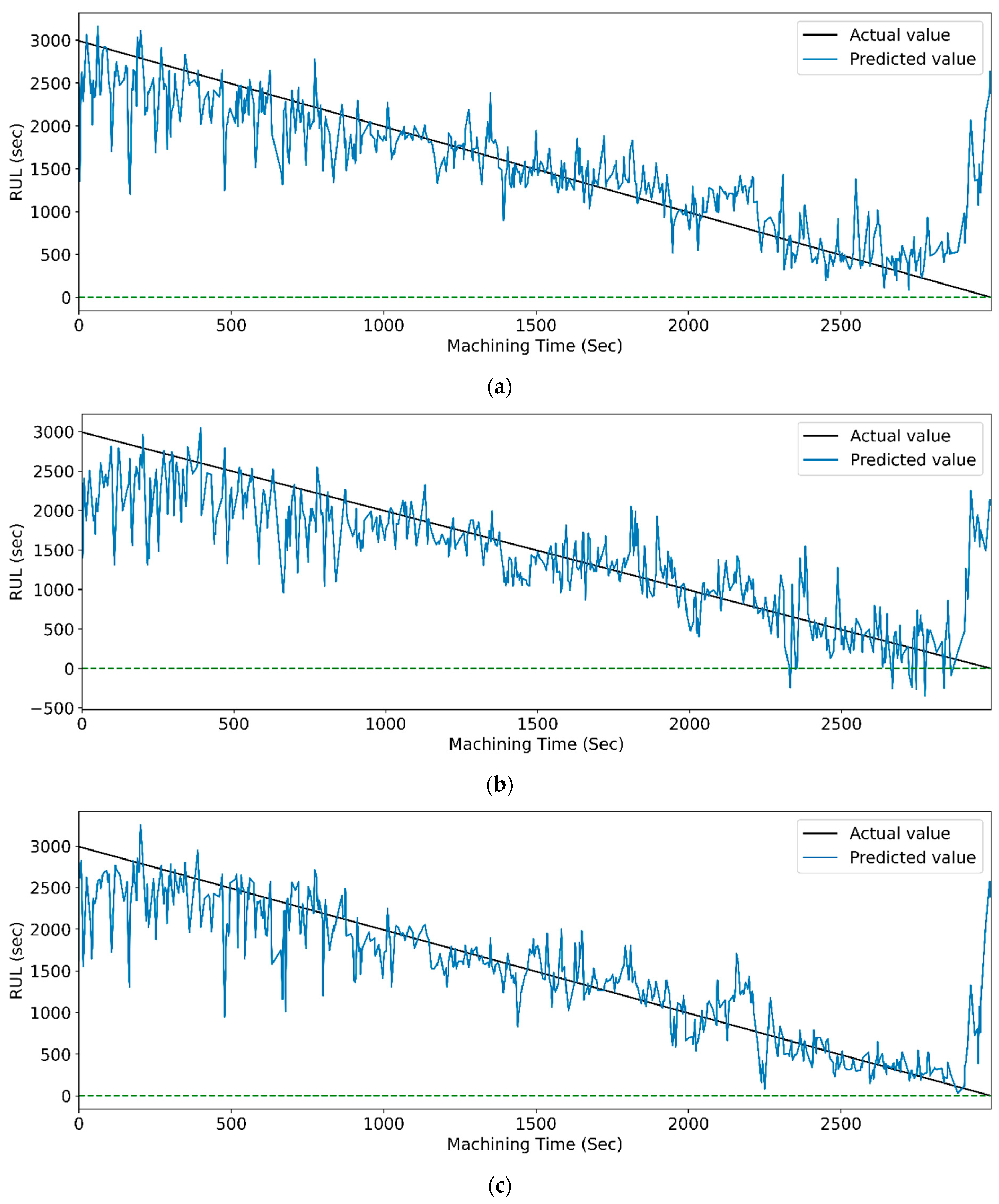

5.3.2. RUL Prediction Using CWT Feature Extraction Technique

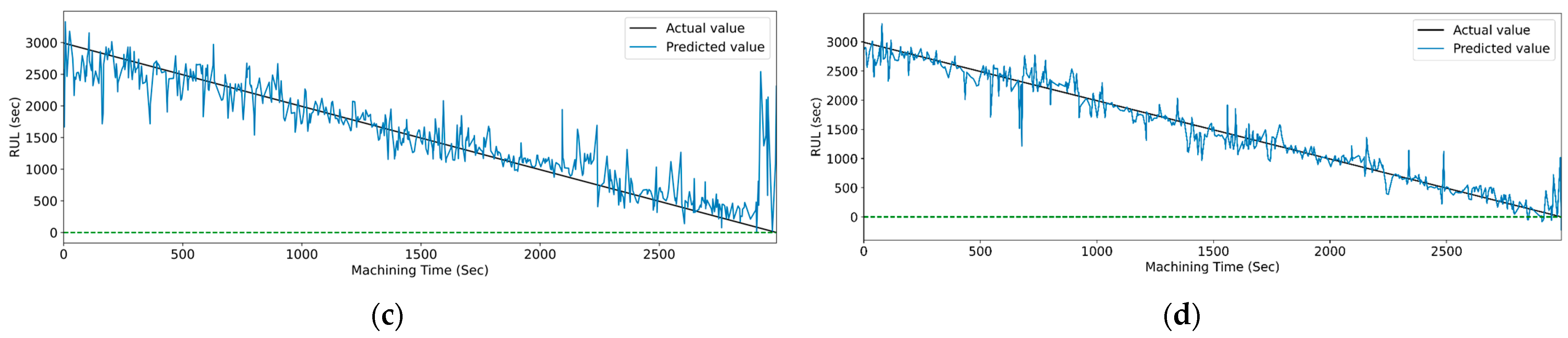

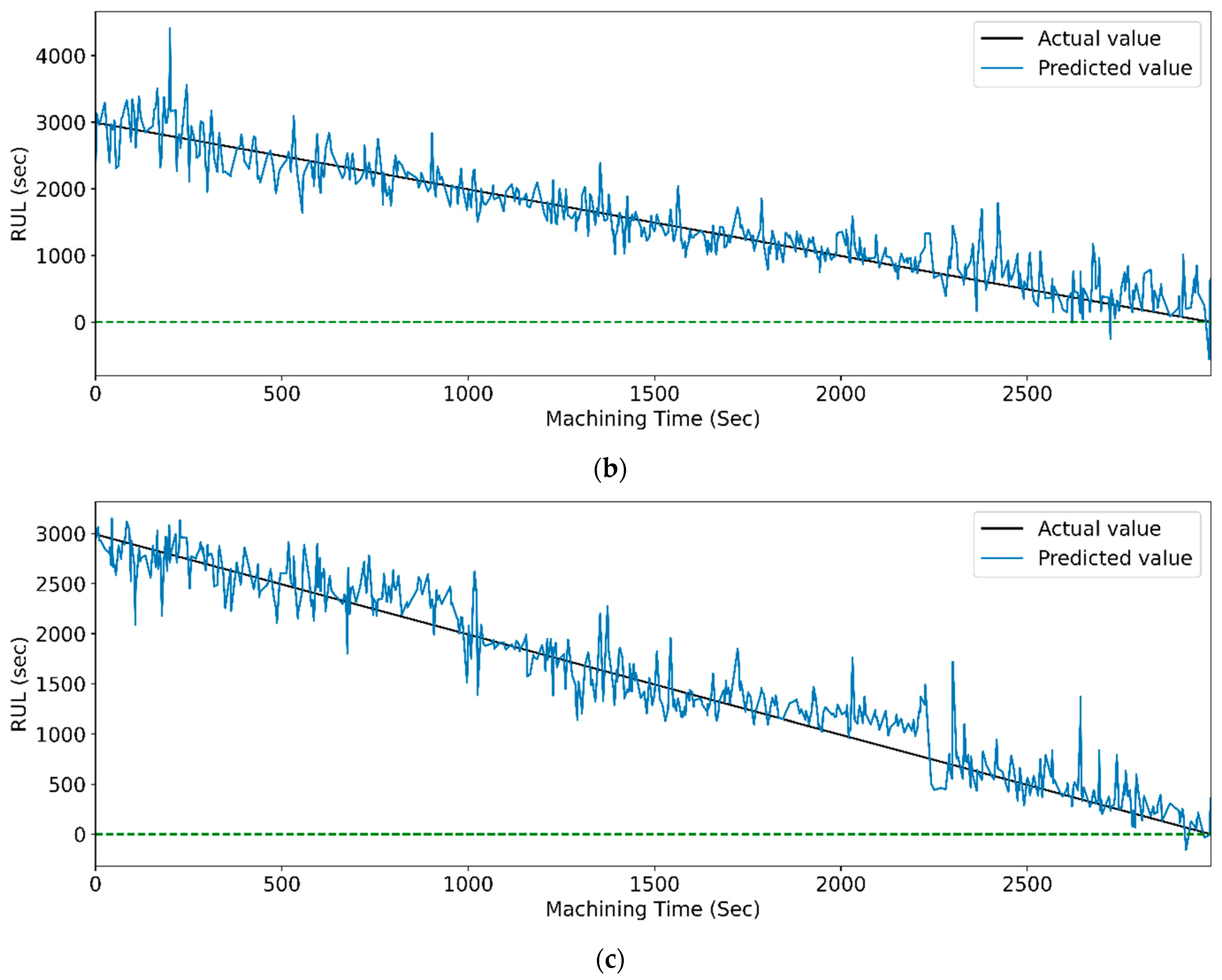

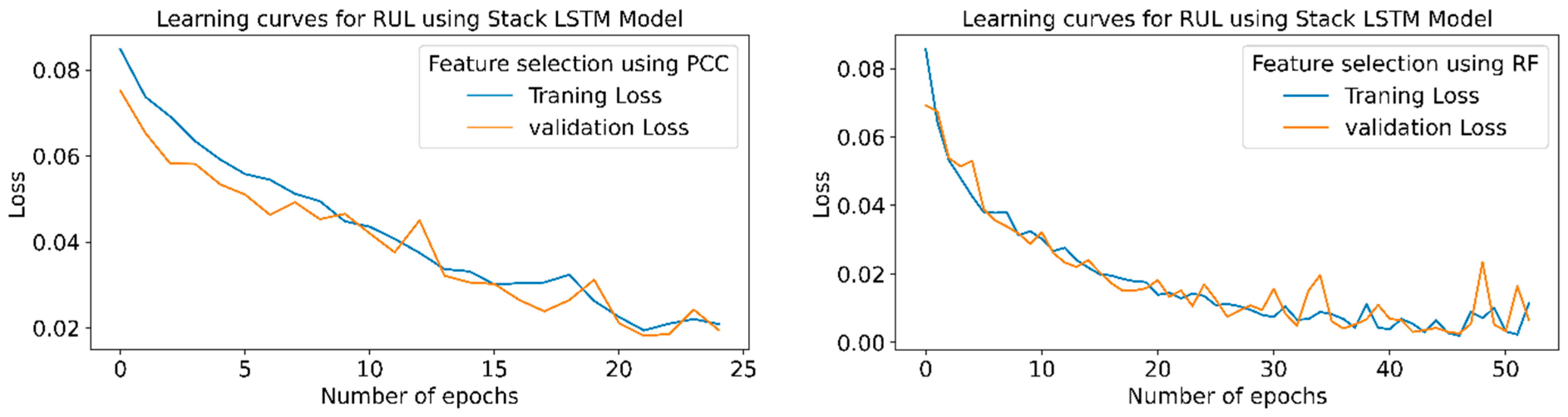

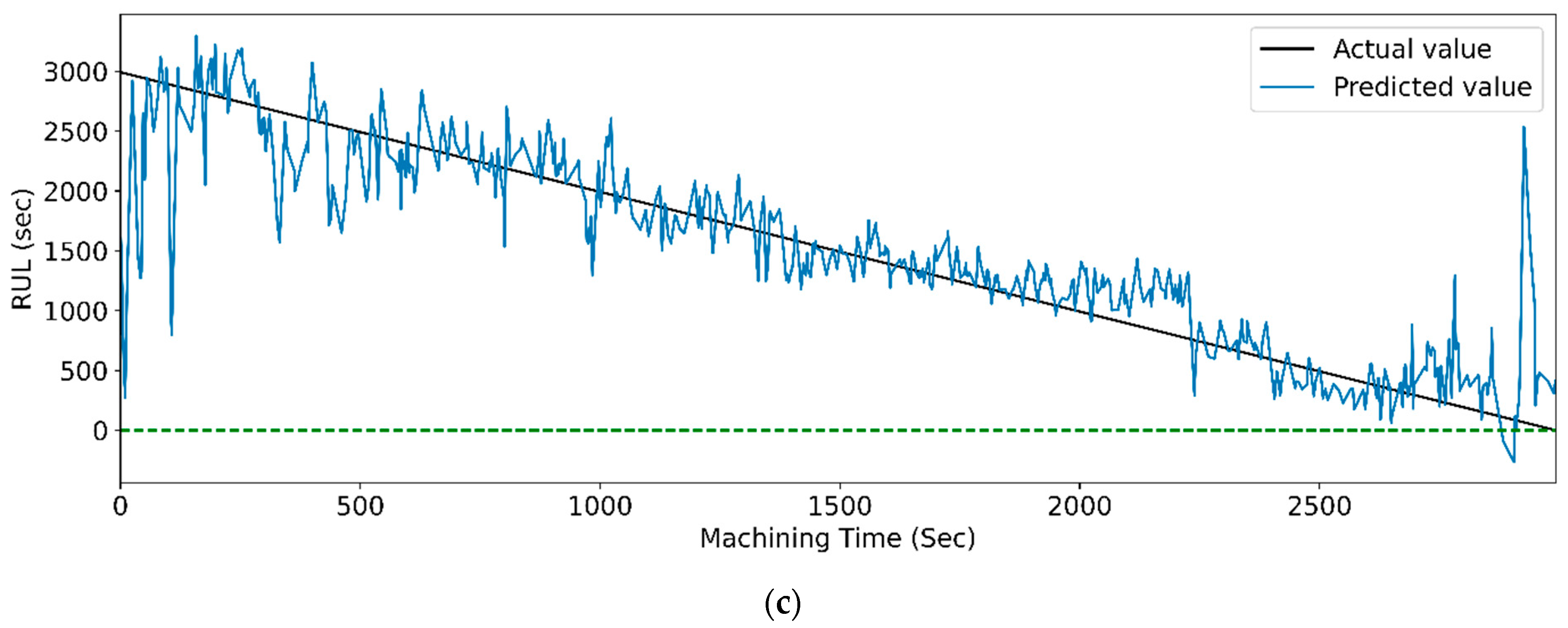

5.3.3. RUL Prediction Using WPT Feature Extraction Technique

6. Conclusions

- The RF feature selection technique performs slightly better than the PCC-based feature selection technique. The RF feature selection technique gives better results for non-linear relationships and complex models;

- The DL models such as LSTM, LSTM variants, CNN, and CNN with LSTM variants provide better prediction accuracies than ML models, as these models are effective for the time-series and complex non-linear cutting tool data for RUL estimation;

- For all three feature extraction techniques (STFT, CWT, and WPT), the highest R2 score exceeds 0.95 with the LSTM variants and the hybrid (CNN with LSTM variants) prediction models;

- The results show that TFD feature extraction is effective for RUL prediction with deep learning models such as LSTM, LSTM variants, CNN, and hybrid models combining CNN with LSTM variants.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Tong, X.; Liu, Q.; Pi, S.; Xiao, Y. Real-time machining data application and service based on IMT digital twin. J. Intell. Manuf. 2019, 31, 1113–1132.

- Javed, K.; Gouriveau, R.; Li, X.; Zerhouni, N. Tool wear monitoring and prognostics challenges: A comparison of connectionist methods toward an adaptive ensemble model. J. Intell. Manuf. 2016, 29, 1873–1890.

- Zonta, T.; da Costa, C.A.; Righi, R.D.R.; de Lima, M.J.; da Trindade, E.S.; Li, G.P. Predictive maintenance in the Industry 4.0: A systematic literature review. Comput. Ind. Eng. 2020, 150, 106889.

- Sayyad, S.; Kumar, S.; Bongale, A.; Kamat, P.; Patil, S.; Kotecha, K. Data-Driven Remaining Useful Life Estimation for Milling Process: Sensors, Algorithms, Datasets, and Future Directions. IEEE Access 2021, 9, 110255–110286.

- Liu, Y.C.; Chang, Y.J.; Liu, S.L.; Chen, S.P. Data-driven prognostics of remaining useful life for milling machine cutting tools. In Proceedings of the 2019 IEEE International Conference on Prognostics and Health Management, ICPHM 2019, San Francisco, CA, USA, 17–20 June 2019; pp. 1–5.

- Tian, Z. An artificial neural network method for remaining useful life prediction of equipment subject to condition monitoring. J. Intell. Manuf. 2009, 23, 227–237.

- Wang, Y.; Zhao, Y.; Addepalli, S. Remaining Useful Life Prediction using Deep Learning Approaches: A Review. Procedia Manuf. 2020, 49, 81–88.

- Li, Y.; Liu, C.; Li, D.; Hua, J.; Wan, P. Documentation of Tool Wear Dataset of NUAA_Ideahouse. IEEE Dataport. 2021. Available online: https://ieee-dataport.org/open-access/tool-wear-dataset-nuaaideahouse (accessed on 6 January 2023).

- Hanachi, H.; Yu, W.; Kim, I.Y.; Liu, J.; Mechefske, C.K. Hybrid data-driven physics-based model fusion framework for tool wear prediction. Int. J. Adv. Manuf. Technol. 2018, 101, 2861–2872.

- Liang, Y.; Wang, S.; Li, W.; Lu, X. Data-Driven Anomaly Diagnosis for Machining Processes. Engineering 2019, 5, 646–652.

- Wu, D.; Jennings, C.; Terpenny, J.; Gao, R.; Kumara, S. Data-Driven Prognostics Using Random Forests: Prediction of Tool Wear. 2017. Available online: http://proceedings.asmedigitalcollection.asme.org/pdfaccess.ashx?url=/data/conferences/asmep/93280/ (accessed on 6 January 2023).

- Dimla, D.E. Sensor signals for tool-wear monitoring in metal cutting operations—A review of methods. Int. J. Mach. Tools Manuf. 2000, 40, 1073–1098.

- Sick, B. Online and indirect tool wear monitoring in turning with artificial neural networks: A review of more than a decade of research. Mech. Syst. Signal Process. 2002, 16, 487–546.

- Sayyad, S.; Kumar, S.; Bongale, A.; Bongale, A.M.; Patil, S. Estimating Remaining Useful Life in Machines Using Artificial Intelligence: A Scoping Review. Libr. Philos. Pract. 2021, 2021, 1–26.

- Zhou, Y.; Liu, C.; Yu, X.; Liu, B.; Quan, Y. Tool wear mechanism, monitoring and remaining useful life (RUL) technology based on big data: A review. SN Appl. Sci. 2022, 4, 232.

- Tran, V.T.; Pham, H.T.; Yang, B.-S.; Nguyen, T.T. Machine performance degradation assessment and remaining useful life prediction using proportional hazard model and support vector machine. Mech. Syst. Signal Process. 2012, 32, 320–330.

- Liu, M.; Yao, X.; Zhang, J.; Chen, W.; Jing, X.; Wang, K. Multi-Sensor Data Fusion for Remaining Useful Life Prediction of Machining Tools by iabc-bpnn in Dry Milling Operations. Sensors 2020, 20, 4657.

- Zhang, C.; Yao, X.; Zhang, J.; Jin, H. Tool Condition Monitoring and Remaining Useful Life Prognostic Based on a Wireless Sensor in Dry Milling Operations. Sensors 2016, 16, 795.

- Zhou, Y.; Xue, W. A Multi-sensor Fusion Method for Tool Condition Monitoring in Milling. Sensors 2018, 18, 3866.

- Thirukkumaran, K.; Mukhopadhyay, C.K. Analysis of Acoustic Emission Signal to Characterization the Damage Mechanism During Drilling of Al-5%SiC Metal Matrix Composite. Silicon 2020, 13, 309–325.

- da Costa, C.; Kashiwagi, M.; Mathias, M.H. Rotor failure detection of induction motors by wavelet transform and Fourier transform in non-stationary condition. Case Stud. Mech. Syst. Signal Process. 2015, 1, 15–26.

- Delsy, T.T.M.; Nandhitha, N.M.; Rani, B.S. RETRACTED ARTICLE: Feasibility of spectral domain techniques for the classification of non-stationary signals. J. Ambient. Intell. Humaniz. Comput. 2020, 12, 6347–6354.

- Zhu, K.; Wong, Y.S.; Hong, G.S. Wavelet analysis of sensor signals for tool condition monitoring: A review and some new results. Int. J. Mach. Tools Manuf. 2009, 49, 537–553.

- Hong, Y.-S.; Yoon, H.-S.; Moon, J.-S.; Cho, Y.-M.; Ahn, S.-H. Tool-wear monitoring during micro-end milling using wavelet packet transform and Fisher’s linear discriminant. Int. J. Precis. Eng. Manuf. 2016, 17, 845–855.

- Segreto, T.; D’addona, D.; Teti, R. Tool wear estimation in turning of Inconel 718 based on wavelet sensor signal analysis and machine learning paradigms. Prod. Eng. 2020, 14, 693–705.

- Rafezi, H.; Akbari, J.; Behzad, M. Tool Condition Monitoring based on sound and vibration analysis and wavelet packet decomposition. In Proceedings of the 2012 8th International Symposium on Mechatronics and Its Applications, Sharjah, United Arab Emirates, 10–12 April 2012; pp. 1–4.

- Xiang, Z.; Feng, X. Tool Wear State Monitoring Based on Long-Term and Short-Term Memory Neural Network; Springer: Singapore, 2020; Volume 593.

- Ganesan, R.; Das, T.K.; Venkataraman, V. Wavelet-based multiscale statistical process monitoring: A literature review. IIE Trans. Inst. Ind. Eng. 2004, 36, 787–806.

- Strackeljan, J.; Lahdelma, S. Smart Adaptive Monitoring and Diagnostic Systems. In Proceedings of the 2nd International Seminar on Maintenance, Condition Monitoring and Diagnostics, Oulu, Finland, 28–29 September 2005.

- Wang, L.; Gao, R. Condition Monitoring and Control for Intelligent Manufacturing; Springer: Berlin/Heidelberg, Germany, 2006; Available online: https://www.springer.com/gp/book/9781846282683 (accessed on 6 January 2023).

- Burrus, C.S.; Gopinath, R.A.; Guo, H. Introduction to Wavelets and Wavelet Transform—A Primer; Prentice Hall: Upper Saddle River, NJ, USA, 1997.

- Hosameldin, A.; Asoke, N. Condition Monitoring with Vibration Signals; Wiley-IEEE Press: Piscataway, NJ, USA, 2020.

- Liu, M.-K.; Tseng, Y.-H.; Tran, M.-Q. Tool wear monitoring and prediction based on sound signal. Int. J. Adv. Manuf. Technol. 2019, 103, 3361–3373.

- Li, X.; Lim, B.S.; Zhou, J.H.; Huang, S.; Phua, S.J.; Shaw, K.C.; Er, M.J. Fuzzy neural network modelling for tool wear estimation in dry milling operation. In Proceedings of the Annual Conference of the Prognostics and Health Management Society, PHM 2009, San Diego, CA, USA, 27 September–1 October 2009; pp. 1–11.

- Nettleton, D. Selection of Variables and Factor Derivation. In Commercial Data Mining; Morgan Kaufmann: Boston, MA, USA, 2014; pp. 79–104.

- Sayyad, S.; Kumar, S.; Bongale, A.; Kotecha, K.; Selvachandran, G.; Suganthan, P.N. Tool wear prediction using long short-term memory variants and hybrid feature selection techniques. Int. J. Adv. Manuf. Technol. 2022, 121, 6611–6633.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Chan, Y.-W.; Kang, T.-C.; Yang, C.-T.; Chang, C.-H.; Huang, S.-M.; Tsai, Y.-T. Tool wear prediction using convolutional bidirectional LSTM networks. J. Supercomput. 2021, 78, 810–832.

- Lindemann, B.; Maschler, B.; Sahlab, N.; Weyrich, M. A survey on anomaly detection for technical systems using LSTM networks. Comput. Ind. 2021, 131, 103498.

- An, Q.; Tao, Z.; Xu, X.; El Mansori, M.; Chen, M. A data-driven model for milling tool remaining useful life prediction with convolutional and stacked LSTM network. Measurement 2019, 154, 107461.

- Wu, Z.; Christofides, P.D. Economic Machine-Learning-Based Predictive Control of Non-linear Systems. Mathematics 2019, 7, 494.

- Chen, C.-W.; Tseng, S.-P.; Kuan, T.-W.; Wang, J.-F. Outpatient Text Classification Using Attention-Based Bidirectional LSTM for Robot-Assisted Servicing in Hospital. Information 2020, 11, 106.

- Le, X.H.; Ho, H.V.; Lee, G.; Jung, S. Application of Long Short-Term Memory (LSTM) Neural Network for Flood Forecasting. Water 2019, 11, 1387.

- Chatterjee, A.; Gerdes, M.W.; Martinez, S.G. Statistical Explorations and Univariate Timeseries Analysis on COVID-19 Datasets to Understand the Trend of Disease Spreading and Death. Sensors 2020, 20, 3089.

- Chandra, R.; Goyal, S.; Gupta, R. Evaluation of Deep Learning Models for Multi-Step Ahead Time Series Prediction. IEEE Access 2021, 9, 83105–83123.

- Zhao, R.; Yan, R.; Wang, J.; Mao, K. Learning to Monitor Machine Health with Convolutional Bi-Directional LSTM Networks. Sensors 2017, 17, 273.

- Kumar, S.; Kolekar, T.; Kotecha, K.; Patil, S.; Bongale, A. Performance evaluation for tool wear prediction based on Bi-directional, Encoder–Decoder and Hybrid Long Short-Term Memory models. Int. J. Qual. Reliab. Manag. 2022, 39, 1551–1576.

- Zhang, X.; Lu, X.; Li, W.; Wang, S. Prediction of the remaining useful life of cutting tool using the Hurst exponent and CNN-LSTM. Int. J. Adv. Manuf. Technol. 2021, 112, 2277–2299.

- Agga, A.; Abbou, A.; Labbadi, M.; El Houm, Y.; Ali, I.H.O. CNN-LSTM: An efficient hybrid deep learning architecture for predicting short-term photovoltaic power production. Electr. Power Syst. Res. 2022, 208, 107908.

- Lin, Y.-C.; Wu, K.-D.; Shih, W.-C.; Hsu, P.-K.; Hung, J.-P. Prediction of Surface Roughness Based on Cutting Parameters and Machining Vibration in End Milling Using Regression Method and Artificial Neural Network. Appl. Sci. 2020, 10, 3941.

| Signal Category | Acquisition Equipment | Sample Frequency (Hz) |
|---|---|---|
| Spindle current and power | PLC | 300 |
| Vibration | PCB™-W356B11 | 400 |
| Cutting force | Spike™ sensory tool holder | 600 |

| Case | Feed Per Tooth (mm/r) | Spindle Speed (r/min) | Axial Cutting Depth (mm) | Tool Material | Workpiece Material |
|---|---|---|---|---|---|
| W1 | 0.045 | 1750 | 2.5 | Solid carbide | TC4 |
| W2 | 0.045 | 1800 | 3 | Solid carbide | TC4 |
| W3 | 0.045 | 1850 | 3.5 | Solid carbide | TC4 |
| W4 | 0.05 | 1750 | 3 | Solid carbide | TC4 |
| W5 | 0.05 | 1800 | 3.5 | Solid carbide | TC4 |
| W6 | 0.05 | 1850 | 2.5 | Solid carbide | TC4 |
| W7 | 0.055 | 1750 | 3.5 | Solid carbide | TC4 |
| W8 | 0.055 | 1800 | 2.5 | Solid carbide | TC4 |
| W9 | 0.055 | 1850 | 3 | Solid carbide | TC4 |

| Sr. No. | Flank Wear Flute-1 (mm) | Flank Wear Flute-2 (mm) | Flank Wear Flute-3 (mm) | Flank Wear Flute-4 (mm) | Sr. No. | Flank Wear Flute-1 (mm) | Flank Wear Flute-2 (mm) | Flank Wear Flute-3 (mm) | Flank Wear Flute-4 (mm) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.05 | 0.12 | 0.1 | 0.05 | 16 | 0.17 | 0.23 | 0.21 | 0.14 |
| 2 | 0.1 | 0.14 | 0.1 | 0.05 | 17 | 0.18 | 0.23 | 0.21 | 0.14 |
| 3 | 0.12 | 0.14 | 0.11 | 0.09 | 18 | 0.18 | 0.23 | 0.21 | 0.15 |
| 4 | 0.12 | 0.15 | 0.13 | 0.1 | 19 | 0.18 | 0.23 | 0.21 | 0.15 |
| 5 | 0.13 | 0.16 | 0.15 | 0.1 | 20 | 0.19 | 0.23 | 0.21 | 0.15 |
| 6 | 0.13 | 0.18 | 0.16 | 0.1 | 21 | 0.19 | 0.24 | 0.22 | 0.15 |
| 7 | 0.14 | 0.18 | 0.16 | 0.1 | 22 | 0.19 | 0.24 | 0.22 | 0.15 |
| 8 | 0.15 | 0.18 | 0.16 | 0.12 | 23 | 0.19 | 0.24 | 0.22 | 0.15 |
| 9 | 0.16 | 0.19 | 0.17 | 0.12 | 24 | 0.19 | 0.24 | 0.22 | 0.15 |
| 10 | 0.16 | 0.2 | 0.18 | 0.12 | 25 | 0.19 | 0.25 | 0.24 | 0.15 |
| 11 | 0.16 | 0.21 | 0.18 | 0.12 | 26 | 0.19 | 0.25 | 0.25 | 0.15 |
| 12 | 0.17 | 0.21 | 0.19 | 0.13 | 27 | 0.2 | 0.25 | 0.25 | 0.15 |
| 13 | 0.17 | 0.22 | 0.2 | 0.13 | 28 | 0.2 | 0.26 | 0.26 | 0.15 |
| 14 | 0.17 | 0.22 | 0.21 | 0.13 | 29 | 0.2 | 0.26 | 0.26 | 0.15 |
| 15 | 0.17 | 0.22 | 0.21 | 0.14 | 30 | 0.2 | 0.27 | 0.26 | 0.15 |

| Sr. No. | Statistical Features | Formula |

|---|---|---|

| 1 | Mean | |

| 2 | Standard deviation | |

| 3 | Variance | |

| 4 | Kurtosis | |

| 5 | Skewness | |

| 6 | Root mean square | |

| 7 | Peak to Peak | |

| 8 | Peak amplitude |
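The eight statistical features listed above can be sketched in plain Python as follows. The formula column did not survive in this copy, so the conventions used here (divide-by-n moments, non-excess kurtosis, peak amplitude as the largest absolute value) are assumptions rather than the authors' exact definitions, and the function name `statistical_features` is hypothetical.

```python
import math

def statistical_features(x):
    """Compute the eight statistical features for a 1-D sequence of floats."""
    n = len(x)
    mean = sum(x) / n
    # Population (divide-by-n) variance: an assumed convention.
    var = sum((v - mean) ** 2 for v in x) / n
    std = math.sqrt(var)
    # Standardized third and fourth moments; kurtosis is not excess-corrected.
    skew = sum((v - mean) ** 3 for v in x) / (n * std ** 3) if std > 0 else 0.0
    kurt = sum((v - mean) ** 4 for v in x) / (n * std ** 4) if std > 0 else 0.0
    rms = math.sqrt(sum(v * v for v in x) / n)
    return {
        "mean": mean,
        "std": std,
        "var": var,
        "kurtosis": kurt,
        "skew": skew,
        "rms": rms,
        "p2p": max(x) - min(x),               # peak to peak
        "peak_amp": max(abs(v) for v in x),   # peak amplitude
    }

# Toy input: a square-like sequence alternating between +1 and -1.
features = statistical_features([1.0, -1.0, 1.0, -1.0])
```

In a pipeline of this kind, such a function would typically be applied to the coefficients produced by each time–frequency transform for each sensor channel, yielding one feature per statistic and channel.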

| Sr. No. | Performance Parameters | Formula |

|---|---|---|

| 1 | R-squared (R2) | |

| 2 | RMSE | |

| 3 | MAPE |
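As a minimal sketch, the three performance parameters can be computed with their standard textbook definitions. The formula column is empty in this copy, so it is an assumption that the authors use exactly these forms; in particular, MAPE is reported here in percent, which is consistent with the magnitudes in the result tables but is not confirmed by the source.

```python
import math

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

def rmse(y_true, y_pred):
    """Root mean square error."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)

def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent.

    Undefined when any true value is zero (for example, an RUL of 0 at end of life).
    """
    n = len(y_true)
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / n

# Toy values for illustration only; these are not data from the paper.
y_true = [1.0, 2.0, 4.0]
y_pred = [1.0, 2.0, 3.0]
scores = (r2_score(y_true, y_pred), rmse(y_true, y_pred), mape(y_true, y_pred))
```

Higher R2 and lower RMSE and MAPE indicate a better fit, which is how the result tables in this article are read.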

| Feature Extraction Method | Feature Count |

|---|---|

| STFT | 64 |

| CWT | 64 |

| WPT | 128 |
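The feature counts above are consistent with eight statistics computed over eight sensor channels (8 × 8 = 64), with WPT doubling the count through two coefficient sets. The sketch below reproduces that arithmetic using the naming pattern visible in the feature selection tables. The channel list is inferred from those feature names, and reading the `a` and `d` prefixes as approximation and detail coefficients is an assumption; neither is stated explicitly in this copy.

```python
from itertools import product

# Statistic tokens as they appear in the feature names of the selection tables.
STATS = ["mean", "std", "var", "kurtosis", "skew", "rms", "p2p", "peak_amp"]

# Channel names inferred from the same tables (an assumption about the full set).
CHANNELS = [
    "Spindle_current", "Spindle_power", "vib_x", "vib_y",
    "Axial_Force", "Bending_Moment_X", "Bending_Moment_Y", "Torsion_Z",
]

def feature_names(prefixes):
    """Build names of the form <prefix>_<statistic>_<channel>."""
    return [f"{p}_{s}_{c}" for p, s, c in product(prefixes, STATS, CHANNELS)]

stft_names = feature_names(["stft"])
cwt_names = feature_names(["cwt"])
# Two prefixes for WPT, presumably approximation (a) and detail (d) coefficients.
wpt_names = feature_names(["a", "d"])

counts = {"STFT": len(stft_names), "CWT": len(cwt_names), "WPT": len(wpt_names)}
```

Under these assumptions, `counts` matches the table: 64 features each for STFT and CWT, and 128 for WPT.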

| Feature Count | Feature | PCC | Feature Count | Feature | PCC |

|---|---|---|---|---|---|

| 1 | stft_rms_Bending_Moment_Y | 0.563 | 12 | stft_p2p_vib_x | 0.290 |

| 2 | stft_std_Bending_Moment_Y | 0.500 | 13 | stft_peak_amp_vib_x | 0.290 |

| 3 | stft_mean_Bending_Moment_Y | 0.499 | 14 | stft_skew_Bending_Moment_Y | 0.282 |

| 4 | stft_var_Bending_Moment_Y | 0.498 | 15 | stft_rms_vib_x | 0.274 |

| 5 | stft_p2p_Bending_Moment_Y | 0.493 | 16 | stft_kurtosis_Bending_Moment_Y | 0.270 |

| 6 | stft_peak_amp_Bending_Moment_Y | 0.493 | 17 | stft_std_Bending_Moment_X | 0.256 |

| 7 | stft_mean_Torsion_Z | 0.348 | 18 | stft_var_Bending_Moment_X | 0.255 |

| 8 | stft_skew_Torsion_Z | 0.327 | 19 | stft_peak_amp_Bending_Moment_X | 0.253 |

| 9 | stft_kurtosis_Torsion_Z | 0.317 | 20 | stft_p2p_Bending_Moment_X | 0.253 |

| 10 | stft_var_vib_x | 0.300 | 21 | stft_rms_Bending_Moment_X | 0.243 |

| 11 | stft_std_vib_x | 0.297 |
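A ranking like the one above can be produced by computing Pearson's correlation coefficient between each feature and the target and keeping the features above a cut-off. The sketch below is illustrative only: the 0.2 threshold is an assumption suggested by the smallest values retained in the PCC tables, the use of the absolute value is also an assumption, and `select_by_pcc` and the toy feature names are hypothetical.

```python
import math

def pearson(x, y):
    """Pearson's correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    # A constant sequence has no defined correlation; treat it as 0.
    return cov / (sx * sy) if sx > 0 and sy > 0 else 0.0

def select_by_pcc(features, target, threshold=0.2):
    """Return (name, |PCC|) pairs at or above the threshold, strongest first."""
    scores = {name: abs(pearson(values, target)) for name, values in features.items()}
    kept = [(name, score) for name, score in scores.items() if score >= threshold]
    return sorted(kept, key=lambda item: item[1], reverse=True)

# Toy data for illustration only; these are not values from the dataset.
target = [4.0, 3.0, 2.0, 1.0]
features = {
    "feat_a": [1.0, 2.0, 3.0, 4.0],    # perfectly anti-correlated with the target
    "feat_b": [1.0, -1.0, -1.0, 1.0],  # uncorrelated with the target
    "feat_c": [1.0, 1.0, 1.0, 1.0],    # constant, so it carries no information
}
selected = select_by_pcc(features, target)
```

PCC only captures linear association, which is one reason a tree-based importance measure such as the RF weights can rank the same features differently.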

| Feature Count | Features | Weight | Feature Count | Features | Weight |

|---|---|---|---|---|---|

| 1 | stft_rms_Bending_Moment_Y | 32.87 | 17 | stft_peak_amp_Torsion_Z | 1.43 |

| 2 | stft_mean_Torsion_Z | 7.13 | 18 | stft_var_vib_x | 1.39 |

| 3 | stft_skew_Torsion_Z | 4.37 | 19 | stft_var_vib_y | 1.25 |

| 4 | stft_peak_amp_vib_x | 4.15 | 20 | stft_mean_Axial_Force | 1.15 |

| 5 | stft_p2p_vib_x | 4.01 | 21 | stft_var_Torsion_Z | 0.80 |

| 6 | stft_mean_Bending_Moment_Y | 3.83 | 22 | stft_std_Torsion_Z | 0.74 |

| 7 | stft_std_vib_x | 3.20 | 23 | stft_var_Bending_Moment_Y | 0.66 |

| 8 | stft_var_Axial_Force | 2.78 | 24 | stft_kurtosis_Bending_Moment_Y | 0.65 |

| 9 | stft_rms_Axial_Force | 2.76 | 25 | stft_skew_Bending_Moment_Y | 0.62 |

| 10 | stft_std_Axial_Force | 2.70 | 26 | stft_kurtosis_Torsion_Z | 0.61 |

| 11 | stft_rms_vib_x | 2.51 | 27 | stft_mean_vib_y | 0.59 |

| 12 | stft_peak_amp_Axial_Force | 2.39 | 28 | stft_rms_vib_y | 0.58 |

| 13 | stft_p2p_vib_y | 2.00 | 29 | stft_std_Bending_Moment_Y | 0.57 |

| 14 | stft_p2p_Torsion_Z | 1.75 | 30 | stft_std_vib_y | 0.55 |

| 15 | stft_p2p_Axial_Force | 1.72 | 31 | stft_rms_Torsion_Z | 0.54 |

| 16 | stft_peak_amp_vib_y | 1.59 |

| Feature Count | Features | PCC | Feature Count | Features | PCC |

|---|---|---|---|---|---|

| 1 | cwt_rms_Bending_Moment_Y | 0.372 | 7 | cwt_p2p_Torsion_Z | 0.326 |

| 2 | cwt_std_Bending_Moment_Y | 0.372 | 8 | cwt_var_Torsion_Z | 0.322 |

| 3 | cwt_var_Bending_Moment_Y | 0.370 | 9 | cwt_peak_amp_Bending_Moment_Y | 0.306 |

| 4 | cwt_std_Torsion_Z | 0.333 | 10 | cwt_p2p_Bending_Moment_Y | 0.306 |

| 5 | cwt_rms_Torsion_Z | 0.333 | 11 | cwt_skew_Torsion_Z | 0.201 |

| 6 | cwt_peak_amp_Torsion_Z | 0.326 |

| Feature Count | Features | Weight | Feature Count | Features | Weight |

|---|---|---|---|---|---|

| 1 | cwt_mean_Bending_Moment_Y | 23.21 | 23 | cwt_kurtosis_Spindle_current | 0.93 |

| 2 | cwt_mean_Torsion_Z | 12.49 | 24 | cwt_skew_Axial_Force | 0.92 |

| 3 | cwt_mean_Bending_Moment_X | 7.28 | 25 | cwt_kurtosis_Spindle_power | 0.89 |

| 4 | cwt_rms_Torsion_Z | 5.06 | 26 | cwt_peak_amp_Bending_Moment_X | 0.89 |

| 5 | cwt_rms_Bending_Moment_Y | 3.40 | 27 | cwt_std_Bending_Moment_X | 0.87 |

| 6 | cwt_skew_Bending_Moment_Y | 2.64 | 28 | cwt_kurtosis_Axial_Force | 0.82 |

| 7 | cwt_rms_Bending_Moment_X | 2.16 | 29 | cwt_rms_Axial_Force | 0.82 |

| 8 | cwt_skew_Torsion_Z | 1.95 | 30 | cwt_skew_Spindle_power | 0.82 |

| 9 | cwt_kurtosis_Bending_Moment_X | 1.76 | 31 | cwt_var_Bending_Moment_X | 0.80 |

| 10 | cwt_var_Bending_Moment_Y | 1.62 | 32 | cwt_skew_Spindle_current | 0.73 |

| 11 | cwt_std_Torsion_Z | 1.57 | 33 | cwt_p2p_Bending_Moment_X | 0.73 |

| 12 | cwt_std_Bending_Moment_Y | 1.53 | 34 | cwt_kurtosis_vib_x | 0.71 |

| 13 | cwt_var_Torsion_Z | 1.47 | 35 | cwt_peak_amp_Axial_Force | 0.69 |

| 14 | cwt_mean_Axial_Force | 1.37 | 36 | cwt_std_Axial_Force | 0.69 |

| 15 | cwt_kurtosis_Torsion_Z | 1.30 | 37 | cwt_var_Axial_Force | 0.69 |

| 16 | cwt_kurtosis_Bending_Moment_Y | 1.27 | 38 | cwt_skew_vib_y | 0.67 |

| 17 | cwt_skew_Bending_Moment_X | 1.16 | 39 | cwt_mean_Spindle_current | 0.67 |

| 18 | cwt_peak_amp_Bending_Moment_Y | 1.05 | 40 | cwt_mean_Spindle_power | 0.66 |

| 19 | cwt_p2p_Torsion_Z | 1.04 | 41 | cwt_p2p_Axial_Force | 0.66 |

| 20 | cwt_skew_vib_x | 1.02 | 42 | cwt_mean_vib_y | 0.59 |

| 21 | cwt_peak_amp_Torsion_Z | 0.94 | 43 | cwt_p2p_Spindle_current | 0.51 |

| 22 | cwt_p2p_Bending_Moment_Y | 0.93 |

| Feature Count | Features | PCC | Feature Count | Features | PCC |

|---|---|---|---|---|---|

| 1 | a_rms_Bending_Moment_Y | 0.66 | 14 | a_peak_amp_Bending_Moment_Y | 0.36 |

| 2 | a_skew_Torsion_Z | 0.60 | 15 | a_p2p_Bending_Moment_Y | 0.36 |

| 3 | a_mean_Bending_Moment_Y | 0.57 | 16 | d_p2p_Torsion_Z | 0.34 |

| 4 | a_std_Bending_Moment_Y | 0.50 | 17 | d_peak_amp_Torsion_Z | 0.34 |

| 5 | a_var_Bending_Moment_Y | 0.50 | 18 | d_rms_Torsion_Z | 0.34 |

| 6 | d_rms_Bending_Moment_Y | 0.47 | 19 | d_std_Torsion_Z | 0.34 |

| 7 | d_std_Bending_Moment_Y | 0.47 | 20 | d_var_Torsion_Z | 0.34 |

| 8 | d_var_Bending_Moment_Y | 0.47 | 21 | a_kurtosis_vib_x | 0.31 |

| 9 | d_peak_amp_Bending_Moment_Y | 0.40 | 22 | a_kurtosis_vib_y | 0.29 |

| 10 | d_p2p_Bending_Moment_Y | 0.40 | 23 | a_peak_amp_Torsion_Z | 0.27 |

| 11 | a_std_Torsion_Z | 0.37 | 24 | a_p2p_Torsion_Z | 0.27 |

| 12 | a_var_Torsion_Z | 0.37 | 25 | a_mean_Bending_Moment_X | 0.25 |

| 13 | a_skew_Bending_Moment_Y | 0.36 | 26 | a_rms_Bending_Moment_X | 0.25 |

| Feature Count | Feature | Weight | Feature Count | Feature | Weight |

|---|---|---|---|---|---|

| 1 | a_rms_Bending_Moment_Y | 32.88 | 11 | a_mean_vib_y | 1.35 |

| 2 | a_skew_Bending_Moment_Y | 23.38 | 12 | a_p2p_Torsion_Z | 1.30 |

| 3 | a_skew_Torsion_Z | 4.60 | 13 | a_peak_amp_Torsion_Z | 1.26 |

| 4 | a_rms_vib_x | 4.58 | 14 | a_p2p_Bending_Moment_Y | 0.74 |

| 5 | a_mean_vib_x | 3.36 | 15 | a_mean_Torsion_Z | 0.72 |

| 6 | a_rms_Axial_Force | 3.22 | 16 | a_var_Bending_Moment_Y | 0.67 |

| 7 | a_kurtosis_Torsion_Z | 3.18 | 17 | a_peak_amp_Bending_Moment_Y | 0.65 |

| 8 | a_mean_Axial_Force | 2.48 | 18 | a_mean_Bending_Moment_Y | 0.61 |

| 9 | a_kurtosis_Bending_Moment_Y | 2.18 | 19 | a_std_Bending_Moment_Y | 0.59 |

| 10 | a_rms_vib_y | 1.36 |

| Feature Extraction Technique | Prediction Model | Testing R2 | Testing RMSE | Testing MAPE |
|---|---|---|---|---|
| STFT | SVR | 0.235 | 0.249 | 17.983 |
| STFT | RFR | 0.363 | 0.227 | 16.001 |
| STFT | GBR | 0.316 | 0.235 | 17.871 |
| CWT | SVR | 0.062 | 0.288 | 22.631 |
| CWT | RFR | 0.100 | 0.270 | 20.900 |
| CWT | GBR | 0.084 | 0.273 | 21.922 |
| WPT | SVR | 0.216 | 0.252 | 18.344 |
| WPT | RFR | 0.366 | 0.227 | 15.933 |
| WPT | GBR | 0.320 | 0.234 | 17.510 |

| Feature Extraction Technique | Prediction Model | Testing R2 | Testing RMSE | Testing MAPE |
|---|---|---|---|---|
| STFT | SVR | 0.241 | 0.248 | 18.447 |
| STFT | RFR | 0.383 | 0.224 | 16.001 |
| STFT | GBR | 0.346 | 0.230 | 17.406 |
| CWT | SVR | 0.081 | 0.289 | 22.820 |
| CWT | RFR | 0.102 | 0.270 | 21.111 |
| CWT | GBR | 0.111 | 0.269 | 21.856 |
| WPT | SVR | 0.347 | 0.230 | 16.045 |
| WPT | RFR | 0.496 | 0.2026 | 13.495 |
| WPT | GBR | 0.452 | 0.211 | 15.194 |

| Feature Selection Technique | Prediction Model | Training R2 | Training RMSE | Training MAPE | Testing R2 | Testing RMSE | Testing MAPE |
|---|---|---|---|---|---|---|---|
| Pearson’s Correlation Coefficient (PCC) | Vanilla LSTM | 0.741 | 0.147 | 10.163 | 0.706 | 0.152 | 10.523 |
| Pearson’s Correlation Coefficient (PCC) | Bi-direction LSTM | 0.800 | 0.129 | 08.807 | 0.755 | 0.139 | 09.175 |
| Pearson’s Correlation Coefficient (PCC) | Stack LSTM | 0.865 | 0.106 | 06.743 | 0.802 | 0.125 | 07.372 |
| Random Forest Regressor (RFR) | Vanilla LSTM | 0.780 | 0.135 | 08.998 | 0.737 | 0.144 | 09.510 |
| Random Forest Regressor (RFR) | Bi-direction LSTM | 0.704 | 0.157 | 10.715 | 0.654 | 0.165 | 11.461 |
| Random Forest Regressor (RFR) | Stack LSTM | 0.809 | 0.126 | 08.138 | 0.782 | 0.131 | 08.520 |

| Feature Selection Technique | Prediction Model | Training R2 | Training RMSE | Training MAPE | Testing R2 | Testing RMSE | Testing MAPE |
|---|---|---|---|---|---|---|---|
| Pearson’s Correlation Coefficient (PCC) | CNN | 0.878 | 0.101 | 07.057 | 0.775 | 0.133 | 08.946 |
| Pearson’s Correlation Coefficient (PCC) | CNN-LSTM | 0.934 | 0.074 | 05.426 | 0.881 | 0.097 | 06.877 |
| Pearson’s Correlation Coefficient (PCC) | CNN-Bi-LSTM | 0.788 | 0.133 | 08.605 | 0.753 | 0.140 | 09.090 |
| Pearson’s Correlation Coefficient (PCC) | CNN-Stack-LSTM | 0.870 | 0.104 | 06.842 | 0.833 | 0.115 | 07.421 |
| Random Forest Regressor (RFR) | CNN | 0.972 | 0.048 | 03.570 | 0.930 | 0.074 | 05.499 |
| Random Forest Regressor (RFR) | CNN-LSTM | 0.829 | 0.119 | 08.067 | 0.774 | 0.134 | 08.728 |
| Random Forest Regressor (RFR) | CNN-Bi-LSTM | 0.906 | 0.088 | 05.891 | 0.838 | 0.113 | 07.090 |
| Random Forest Regressor (RFR) | CNN-Stack-LSTM | 0.964 | 0.054 | 03.681 | 0.951 | 0.062 | 04.161 |

| Feature Selection Technique | Prediction Model | Training R2 | Training RMSE | Training MAPE | Testing R2 | Testing RMSE | Testing MAPE |
|---|---|---|---|---|---|---|---|
| Pearson’s Correlation Coefficient (PCC) | Vanilla LSTM | 0.907 | 0.087 | 06.503 | 0.851 | 0.104 | 07.359 |
| Pearson’s Correlation Coefficient (PCC) | Bi-direction LSTM | 0.864 | 0.106 | 07.505 | 0.818 | 0.120 | 08.446 |
| Pearson’s Correlation Coefficient (PCC) | Stack LSTM | 0.861 | 0.107 | 07.506 | 0.793 | 0.128 | 08.577 |
| Random Forest Regressor (RFR) | Vanilla LSTM | 0.882 | 0.099 | 07.176 | 0.838 | 0.113 | 08.369 |
| Random Forest Regressor (RFR) | Bi-direction LSTM | 0.909 | 0.087 | 06.582 | 0.881 | 0.097 | 07.274 |
| Random Forest Regressor (RFR) | Stack LSTM | 0.953 | 0.062 | 04.789 | 0.927 | 0.075 | 05.781 |

| Feature Selection Technique | Prediction Model | Training R2 | Training RMSE | Training MAPE | Testing R2 | Testing RMSE | Testing MAPE |
|---|---|---|---|---|---|---|---|
| Pearson’s Correlation Coefficient (PCC) | CNN | 0.981 | 0.039 | 03.157 | 0.934 | 0.072 | 05.301 |
| Pearson’s Correlation Coefficient (PCC) | CNN-LSTM | 0.937 | 0.072 | 05.236 | 0.858 | 0.106 | 06.922 |
| Pearson’s Correlation Coefficient (PCC) | CNN-Bi-LSTM | 0.987 | 0.0317 | 02.447 | 0.960 | 0.051 | 03.576 |
| Pearson’s Correlation Coefficient (PCC) | CNN-Stack-LSTM | 0.900 | 0.091 | 06.516 | 0.828 | 0.117 | 07.814 |
| Random Forest Regressor (RFR) | CNN | 0.962 | 0.055 | 04.415 | 0.919 | 0.080 | 06.177 |
| Random Forest Regressor (RFR) | CNN-LSTM | 0.935 | 0.075 | 05.729 | 0.890 | 0.093 | 06.880 |
| Random Forest Regressor (RFR) | CNN-Bi-LSTM | 0.992 | 0.025 | 01.95 | 0.971 | 0.048 | 03.428 |
| Random Forest Regressor (RFR) | CNN-Stack-LSTM | 0.976 | 0.044 | 03.418 | 0.953 | 0.059 | 04.354 |

| Feature Selection Technique | Prediction Model | Training R2 | Training RMSE | Training MAPE | Testing R2 | Testing RMSE | Testing MAPE |
|---|---|---|---|---|---|---|---|
| Pearson’s Correlation Coefficient (PCC) | Vanilla LSTM | 0.922 | 0.080 | 06.021 | 0.857 | 0.102 | 07.140 |
| Pearson’s Correlation Coefficient (PCC) | Bi-direction LSTM | 0.814 | 0.124 | 08.576 | 0.778 | 0.132 | 09.087 |
| Pearson’s Correlation Coefficient (PCC) | Stack LSTM | 0.828 | 0.119 | 07.698 | 0.771 | 0.134 | 08.134 |
| Random Forest Regressor (RFR) | Vanilla LSTM | 0.937 | 0.072 | 04.914 | 0.901 | 0.088 | 05.721 |
| Random Forest Regressor (RFR) | Bi-direction LSTM | 0.897 | 0.092 | 05.966 | 0.873 | 0.100 | 06.533 |
| Random Forest Regressor (RFR) | Stack LSTM | 0.978 | 0.042 | 03.043 | 0.964 | 0.051 | 03.676 |

| Feature Selection Technique | Prediction Model | Training R2 | Training RMSE | Training MAPE | Testing R2 | Testing RMSE | Testing MAPE |
|---|---|---|---|---|---|---|---|
| Pearson’s Correlation Coefficient (PCC) | CNN | 0.979 | 0.041 | 03.192 | 0.941 | 0.068 | 04.943 |
| Pearson’s Correlation Coefficient (PCC) | CNN-LSTM | 0.946 | 0.066 | 05.023 | 0.903 | 0.087 | 05.925 |
| Pearson’s Correlation Coefficient (PCC) | CNN-Bi-LSTM | 0.950 | 0.064 | 04.700 | 0.908 | 0.086 | 05.905 |
| Pearson’s Correlation Coefficient (PCC) | CNN-Stack-LSTM | 0.878 | 0.100 | 06.915 | 0.827 | 0.117 | 07.630 |
| Random Forest Regressor (RFR) | CNN | 0.966 | 0.052 | 03.869 | 0.926 | 0.076 | 05.522 |
| Random Forest Regressor (RFR) | CNN-LSTM | 0.630 | 0.175 | 11.452 | 0.946 | 0.051 | 05.522 |
| Random Forest Regressor (RFR) | CNN-Bi-LSTM | 0.979 | 0.041 | 03.211 | 0.948 | 0.064 | 04.647 |
| Random Forest Regressor (RFR) | CNN-Stack-LSTM | 0.977 | 0.043 | 0.030 | 0.955 | 0.058 | 03.590 |

| Feature Extraction Technique | Prediction Model | PCC R2 | PCC RMSE | PCC MAPE | RF R2 | RF RMSE | RF MAPE |
|---|---|---|---|---|---|---|---|
| STFT | Vanilla LSTM | 0.706 | 0.152 | 10.523 | 0.737 | 0.144 | 09.510 |
| STFT | Bi-direction LSTM | 0.755 | 0.139 | 09.175 | 0.654 | 0.165 | 11.461 |
| STFT | Stack LSTM | 0.802 | 0.125 | 07.372 | 0.782 | 0.131 | 08.520 |
| STFT | CNN | 0.775 | 0.133 | 08.946 | 0.930 | 0.074 | 05.499 |
| STFT | CNN-LSTM | 0.881 | 0.097 | 06.877 | 0.774 | 0.134 | 08.728 |
| STFT | CNN-Bi-LSTM | 0.753 | 0.140 | 09.090 | 0.838 | 0.113 | 07.090 |
| STFT | CNN-Stack-LSTM | 0.833 | 0.115 | 07.421 | 0.951 | 0.062 | 04.161 |
| CWT | Vanilla LSTM | 0.851 | 0.104 | 07.359 | 0.838 | 0.113 | 08.369 |
| CWT | Bi-direction LSTM | 0.818 | 0.120 | 08.446 | 0.881 | 0.097 | 07.274 |
| CWT | Stack LSTM | 0.793 | 0.128 | 08.577 | 0.927 | 0.075 | 05.781 |
| CWT | CNN | 0.934 | 0.072 | 05.301 | 0.919 | 0.080 | 06.177 |
| CWT | CNN-LSTM | 0.858 | 0.106 | 06.922 | 0.890 | 0.093 | 06.880 |
| CWT | CNN-Bi-LSTM | 0.960 | 0.051 | 03.576 | 0.971 | 0.048 | 03.428 |
| CWT | CNN-Stack-LSTM | 0.828 | 0.117 | 07.814 | 0.953 | 0.059 | 04.354 |
| WPT | Vanilla LSTM | 0.857 | 0.102 | 07.140 | 0.901 | 0.088 | 05.721 |
| WPT | Bi-direction LSTM | 0.778 | 0.132 | 09.087 | 0.873 | 0.100 | 06.533 |
| WPT | Stack LSTM | 0.771 | 0.134 | 08.134 | 0.964 | 0.051 | 03.676 |
| WPT | CNN | 0.941 | 0.068 | 04.943 | 0.926 | 0.076 | 05.522 |
| WPT | CNN-LSTM | 0.903 | 0.087 | 05.925 | 0.946 | 0.065 | 04.448 |
| WPT | CNN-Bi-LSTM | 0.908 | 0.086 | 05.905 | 0.948 | 0.064 | 04.647 |
| WPT | CNN-Stack-LSTM | 0.827 | 0.117 | 07.630 | 0.955 | 0.058 | 03.590 |

| Feature Extraction Technique | Prediction Model | PCC R2 | PCC RMSE | PCC MAPE | RFR R2 | RFR RMSE | RFR MAPE |
|---|---|---|---|---|---|---|---|
| STFT | Vanilla LSTM | 0.949 | 0.064 | 04.647 | 0.946 | 0.066 | 04.780 |
| STFT | Bi-direction LSTM | 0.954 | 0.062 | 04.652 | 0.926 | 0.079 | 05.767 |
| STFT | Stack LSTM | 0.832 | 0.120 | 07.369 | 0.940 | 0.071 | 04.866 |
| STFT | CNN | 0.962 | 0.057 | 03.972 | 0.956 | 0.061 | 04.367 |
| STFT | CNN-LSTM | 0.898 | 0.094 | 05.803 | 0.891 | 0.096 | 06.967 |
| STFT | CNN-Bi-LSTM | 0.904 | 0.087 | 05.975 | 0.967 | 0.044 | 03.256 |
| STFT | CNN-Stack-LSTM | 0.812 | 0.127 | 08.412 | 0.886 | 0.099 | 06.263 |
| CWT | Vanilla LSTM | 0.883 | 0.100 | 06.506 | 0.965 | 0.054 | 03.867 |
| CWT | Bi-direction LSTM | 0.788 | 0.135 | 09.306 | 0.900 | 0.926 | 05.950 |
| CWT | Stack LSTM | 0.793 | 0.128 | 0.085 | 0.949 | 0.066 | 04.197 |
| CWT | CNN | 0.927 | 0.079 | 05.264 | 0.929 | 0.078 | 05.103 |
| CWT | CNN-LSTM | 0.928 | 0.078 | 05.684 | 0.810 | 0.128 | 08.679 |
| CWT | CNN-Bi-LSTM | 0.972 | 0.048 | 03.123 | 0.979 | 0.042 | 02.965 |
| CWT | CNN-Stack-LSTM | 0.963 | 0.056 | 03.984 | 0.910 | 0.087 | 04.969 |
| WPT | Vanilla LSTM | 0.979 | 0.0421 | 03.195 | 0.977 | 0.044 | 03.243 |
| WPT | Bi-direction LSTM | 0.971 | 0.048 | 03.649 | 0.824 | 0.118 | 08.063 |
| WPT | Stack LSTM | 0.965 | 0.054 | 04.043 | 0.985 | 0.034 | 02.728 |
| WPT | CNN | 0.971 | 0.049 | 03.672 | 0.950 | 0.064 | 04.700 |
| WPT | CNN-LSTM | 0.975 | 0.045 | 03.478 | 0.977 | 0.043 | 03.012 |
| WPT | CNN-Bi-LSTM | 0.878 | 0.100 | 06.915 | 0.970 | 0.044 | 03.032 |
| WPT | CNN-Stack-LSTM | 0.827 | 0.117 | 07.630 | 0.955 | 0.059 | 03.590 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sayyad, S.; Kumar, S.; Bongale, A.; Kotecha, K.; Abraham, A. Remaining Useful-Life Prediction of the Milling Cutting Tool Using Time–Frequency-Based Features and Deep Learning Models. Sensors 2023, 23, 5659. https://doi.org/10.3390/s23125659
