Human Activity Recognition Using Attention-Mechanism-Based Deep Learning Feature Combination

Abstract

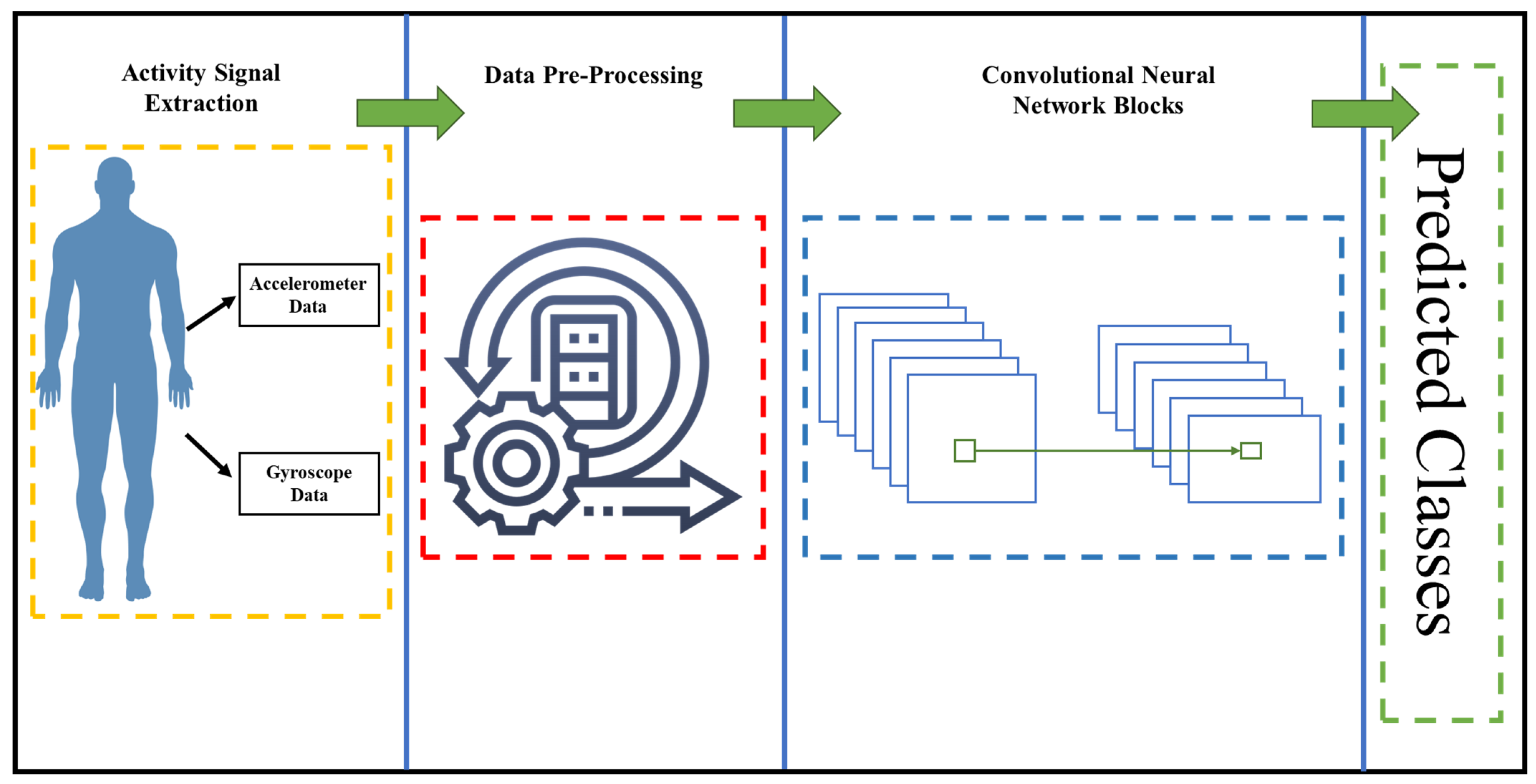

1. Introduction

- Developing an accurate, lightweight 2D CNN model that requires minimal pre-processing and works across multiple datasets with minimal tuning.

- Achieving higher accuracy on the primary dataset and comparing it with the results of existing models.

- Testing the robustness of the proposed model on two other benchmark datasets and comparing the results with those of existing methods.

2. Methodology

2.1. Constructing Spectrograms from HAR Samples
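As a rough illustration only, and not necessarily the procedure used in this paper, a spectrogram can be built from each sensor channel of a HAR sample with a short-time Fourier transform (STFT). The sketch below uses `scipy.signal.spectrogram`; the 100 Hz sampling rate, the (time steps × channels) input layout, the window length and overlap, and the log scaling are all assumptions chosen for the example, and `sample_to_spectrograms` is a hypothetical helper.

```python
import numpy as np
from scipy.signal import spectrogram


def sample_to_spectrograms(sample, fs=100.0, nperseg=32, noverlap=24):
    """Turn one multichannel HAR sample into a stack of log-power spectrograms.

    sample: array of shape (n_timesteps, n_channels), e.g. three accelerometer
    and three gyroscope axes (assumed layout).
    Returns an array of shape (n_channels, n_freqs, n_frames) that a 2D CNN
    can treat as a multichannel image.
    """
    sample = np.asarray(sample, dtype=float)
    channel_specs = []
    for ch in range(sample.shape[1]):
        # STFT power spectral density of a single sensor axis
        _freqs, _times, sxx = spectrogram(
            sample[:, ch], fs=fs, nperseg=nperseg, noverlap=noverlap
        )
        # Log scaling compresses the dynamic range; epsilon avoids log(0)
        channel_specs.append(np.log(sxx + 1e-10))
    return np.stack(channel_specs)
```

Each channel yields `nperseg // 2 + 1` frequency bins, and stacking the channels along the first axis keeps the per-axis spectrograms aligned in time and frequency, which is one simple way to present all sensor axes to a 2D convolutional network at once.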

2.2. Proposed Model Architecture

3. Experimental Procedure and Results

3.1. Dataset Description

3.1.1. KU-HAR Dataset

3.1.2. UCI-HAR Dataset

3.1.3. WISDM Dataset

3.2. Implementation Details

3.3. Results on KU-HAR Dataset

3.4. Results on UCI-HAR and WISDM Dataset

3.5. Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Class Name | Class ID | Performed Activity | Duration or Repetitions per Sample |
|---|---|---|---|
| Stand | 0 | Standing still on the floor | 1 min |
| Sit | 1 | Sitting still on a chair | 1 min |
| Talk–sit | 2 | Talking with hand movements while sitting on a chair | 1 min |
| Talk–stand | 3 | Talking with hand movements while standing up or sometimes walking around within a small area | 1 min |
| Stand–sit | 4 | Repeatedly standing up and sitting down | 5 times |
| Lay | 5 | Lying still on a flat surface (a table) | 1 min |
| Lay–stand | 6 | Repeatedly standing up and lying down | 5 times |
| Pick | 7 | Picking up an object from the floor by bending down | 10 times |
| Jump | 8 | Jumping repeatedly on the spot | 10 times |
| Push-up | 9 | Performing full push-ups with a wide hand position | 5 times |
| Sit-up | 10 | Performing sit-ups with straight legs on a flat surface | 5 times |
| Walk | 11 | Walking 20 m at a normal pace | ≈12 s |
| Walk backward | 12 | Walking backward for 20 m at a normal pace | ≈20 s |
| Walk-circle | 13 | Walking at a normal pace along a circular path | ≈20 s |
| Run | 14 | Running 20 m at high speed | ≈7 s |
| Stair-up | 15 | Ascending a set of stairs at a normal pace | ≈1 min |
| Stair-down | 16 | Descending a set of stairs at a normal pace | ≈50 s |
| Table tennis | 17 | Playing table tennis | 1 min |
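The KU-HAR class table doubles as the label map for an 18-way classifier. A minimal sketch, assuming integer labels that follow the Class ID column and one-hot targets for a softmax output layer (the one-hot encoding is an assumption, not something the table states); `KU_HAR_CLASSES`, `NAME_TO_ID`, and `one_hot` are hypothetical names.

```python
import numpy as np

# Class names in Class ID order (list index == Class ID), copied from the KU-HAR table
KU_HAR_CLASSES = [
    "Stand", "Sit", "Talk–sit", "Talk–stand", "Stand–sit", "Lay",
    "Lay–stand", "Pick", "Jump", "Push-up", "Sit-up", "Walk",
    "Walk backward", "Walk-circle", "Run", "Stair-up", "Stair-down",
    "Table tennis",
]

# Reverse lookup: class name -> Class ID
NAME_TO_ID = {name: idx for idx, name in enumerate(KU_HAR_CLASSES)}


def one_hot(class_ids, num_classes=len(KU_HAR_CLASSES)):
    """Encode integer Class IDs as one-hot rows for a softmax classifier."""
    class_ids = np.asarray(class_ids, dtype=int).ravel()
    encoded = np.zeros((class_ids.size, num_classes), dtype=np.float32)
    encoded[np.arange(class_ids.size), class_ids] = 1.0
    return encoded
```

Keeping the names in a list indexed by Class ID means a predicted index can be turned back into a readable label with `KU_HAR_CLASSES[pred]`, without a separate mapping.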

| Activity | Description | No. of Samples |
|---|---|---|
| Walking | Participant walks forward in a straight line on level ground | 1722 |
| Walking (Upstairs) | Participant walks upstairs | 1544 |
| Walking (Downstairs) | Participant walks downstairs | 1406 |
| Sitting | Participant sits on a chair | 1777 |
| Standing | Participant stands still | 1906 |
| Laying | Participant lies down | 1944 |
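The sample counts in the UCI-HAR activity table make it easy to check how balanced the dataset is. A minimal sketch: it totals the counts, computes each class's share and the ratio of the largest to the smallest class, and derives inverse-frequency class weights with the total / (number of classes × class count) heuristic, the same formula scikit-learn uses for `class_weight="balanced"`. Whether the authors weighted classes is not stated here; the weighting is shown only as an option.

```python
# Per-class sample counts from the UCI-HAR activity table
UCI_HAR_COUNTS = {
    "Walking": 1722,
    "Walking (Upstairs)": 1544,
    "Walking (Downstairs)": 1406,
    "Sitting": 1777,
    "Standing": 1906,
    "Laying": 1944,
}

total = sum(UCI_HAR_COUNTS.values())
num_classes = len(UCI_HAR_COUNTS)

# Share of the dataset held by each class
shares = {name: n / total for name, n in UCI_HAR_COUNTS.items()}

# Largest-to-smallest class ratio as a quick imbalance measure
imbalance_ratio = max(UCI_HAR_COUNTS.values()) / min(UCI_HAR_COUNTS.values())

# Inverse-frequency ("balanced") weights: total / (num_classes * count)
class_weights = {name: total / (num_classes * n) for name, n in UCI_HAR_COUNTS.items()}

for name in UCI_HAR_COUNTS:
    print(f"{name:22s} {shares[name]:6.2%}  weight={class_weights[name]:.3f}")
print(f"total={total}, max/min ratio={imbalance_ratio:.2f}")
```

The counts total 10,299 samples, and the largest class (Laying) is only about 1.38 times the size of the smallest (Walking (Downstairs)), so the weights stay close to 1 and class imbalance is mild for this dataset.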

| | Raw Data | Transformed Data |
|---|---|---|
| Samples | 1,098,207 | 5424 |
| Attributes | 6 | 46 |
| Class distribution | | |
| Walking | 38.60% | 38.40% |
| Jogging | 31.20% | 30.00% |
| Upstairs | 11.20% | 11.70% |
| Downstairs | 9.10% | 9.80% |
| Sitting | 5.50% | 5.70% |
| Standing | 4.40% | 4.60% |
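Dividing the two WISDM sample counts gives roughly 202 raw readings per transformed example, which is consistent with each transformed example summarising a fixed-length window of accelerometer readings. A minimal sketch of such non-overlapping windowing follows; the 20 Hz sampling rate and the 200-reading (10 s) window are assumptions for illustration, not values stated in the table, and `segment` is a hypothetical helper.

```python
import numpy as np

RAW_READINGS = 1_098_207     # raw samples, from the WISDM table
TRANSFORMED_EXAMPLES = 5424  # transformed samples, from the WISDM table

# Roughly 202.5 raw readings per transformed example
readings_per_example = RAW_READINGS / TRANSFORMED_EXAMPLES

FS_HZ = 20                   # assumed accelerometer sampling rate
WINDOW_S = 10                # assumed window length in seconds
WINDOW = FS_HZ * WINDOW_S    # 200 readings per window


def segment(signal, window=WINDOW):
    """Split a (n_readings, n_axes) stream into non-overlapping windows.

    The incomplete tail that does not fill a whole window is dropped.
    Returns an array of shape (n_windows, window, n_axes).
    """
    signal = np.asarray(signal)
    n_windows = len(signal) // window
    return signal[: n_windows * window].reshape(n_windows, window, signal.shape[1])
```

In practice, windows would be cut separately for each user and activity so that no window mixes labels; that bookkeeping is omitted here to keep the sketch short.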

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Akter, M.; Ansary, S.; Khan, M.A.-M.; Kim, D. Human Activity Recognition Using Attention-Mechanism-Based Deep Learning Feature Combination. Sensors 2023, 23, 5715. https://doi.org/10.3390/s23125715