In-Water Fish Body-Length Measurement System Based on Stereo Vision

Abstract

1. Introduction

2. Materials and Methods

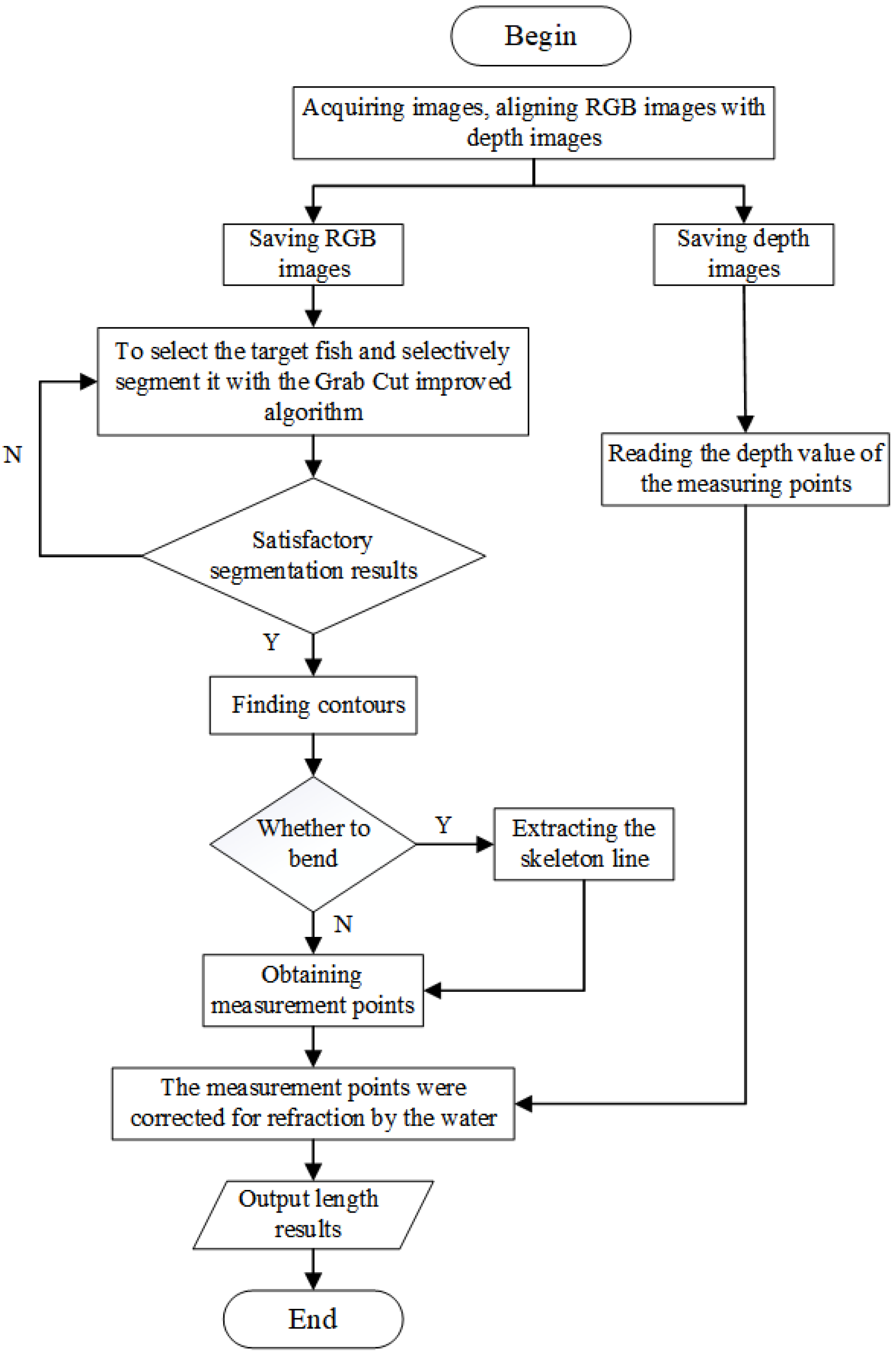

- In this study, RGB and depth images were acquired with a RealSense D435 binocular stereo camera. The distance between the camera and the fish could be adjusted freely, which makes the system more convenient for practical measurements.

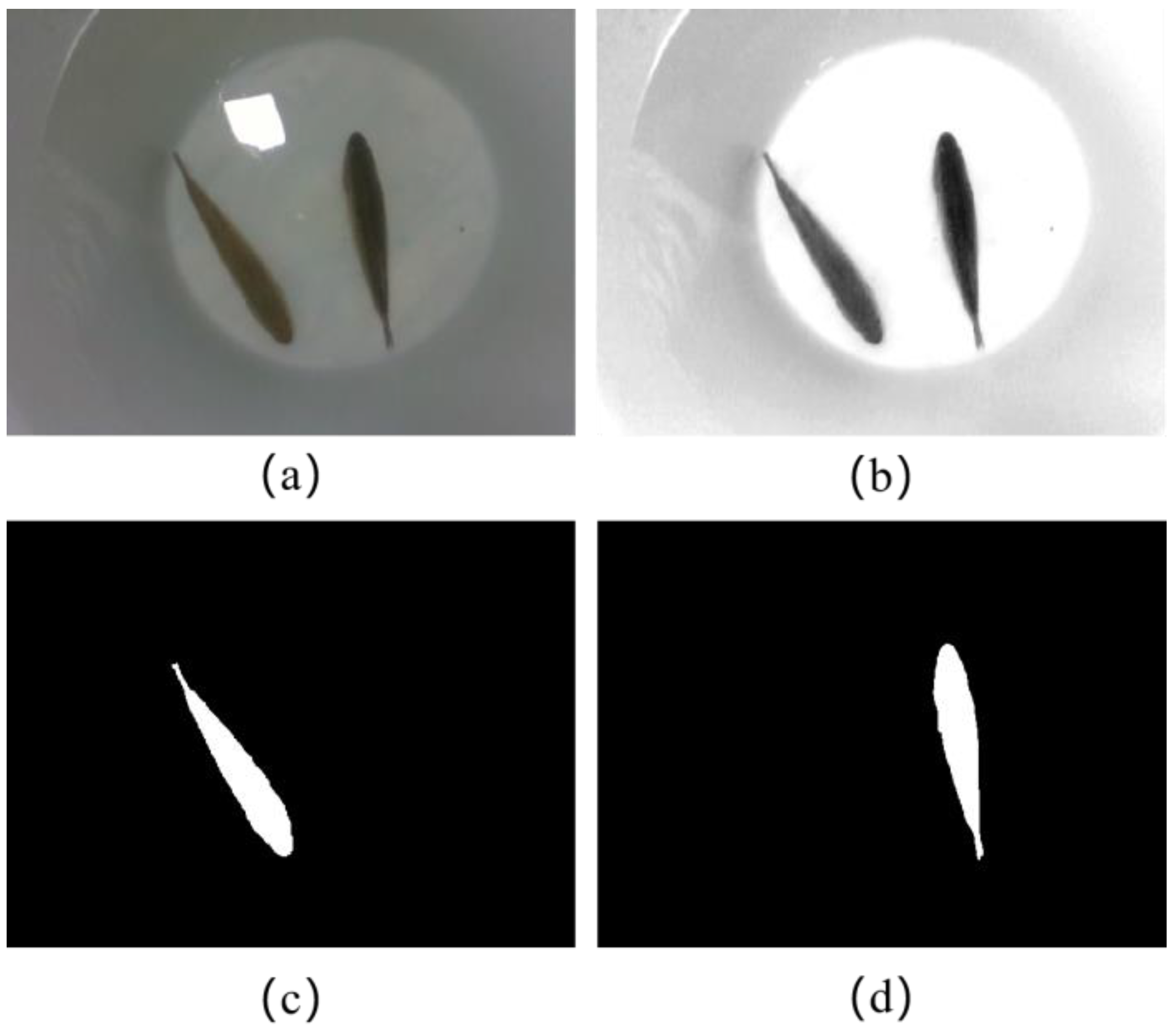

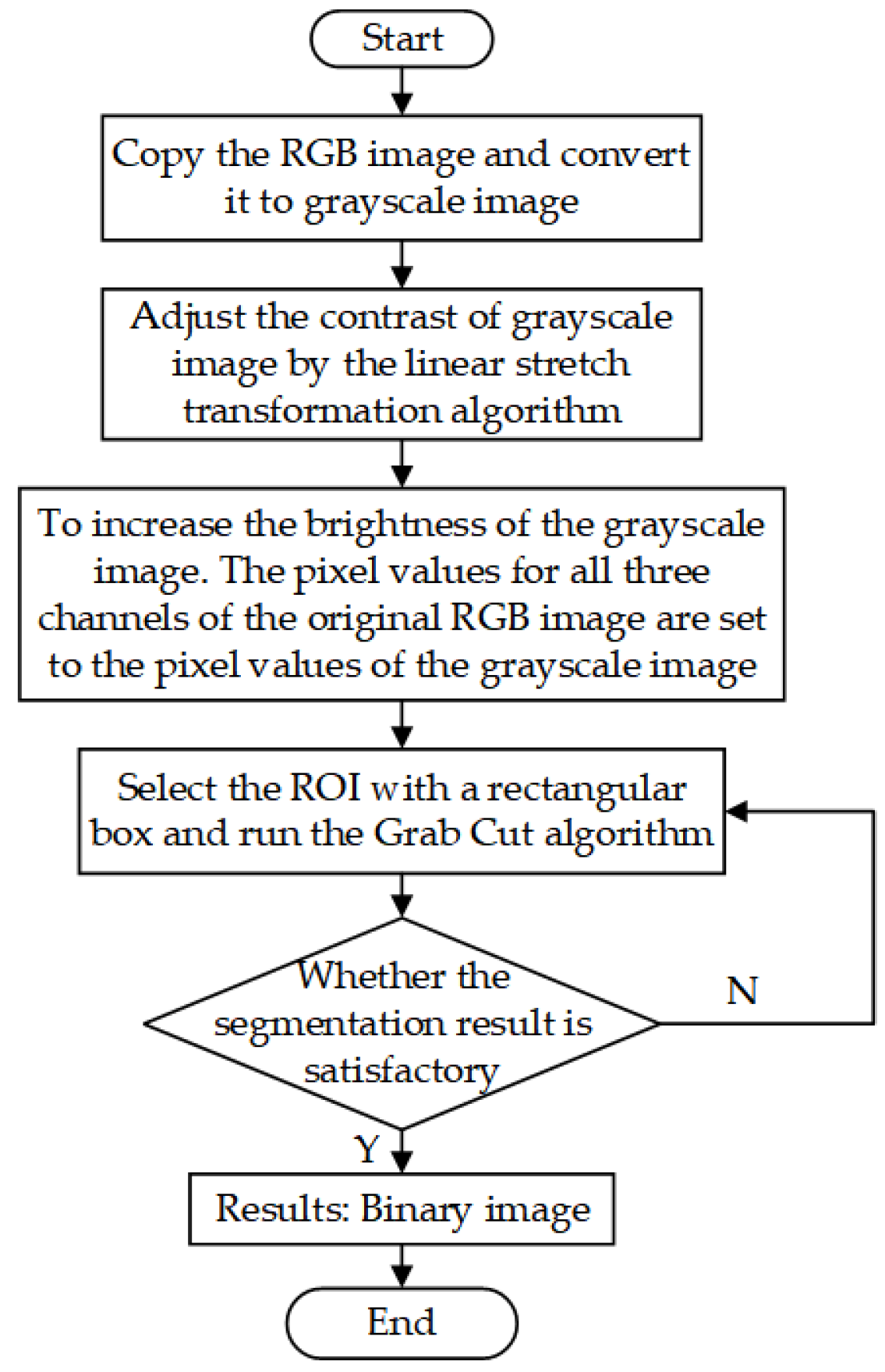

- This study used a high-precision interactive segmentation algorithm that segments the target fish according to the user's input. The algorithm copes well with complex scenes and produces refined segmentation results. If a result is unsatisfactory, the segmentation can simply be repeated.

- This paper presents an algorithm that determines the degree of bending of a fish and thereby distinguishes curved fish from straight ones. A different key-point extraction method is applied to each type to preserve accuracy. The measurement key points are then projected into 3D space to obtain their spatial coordinates, and the distance between points is computed directly from these 3D coordinates, which yields a more precise measurement.
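The 3D step can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes an undistorted pinhole camera with hypothetical intrinsics `fx, fy, cx, cy`, depth in millimetres along the optical axis, and an ordered list of key points running from head to tail. Straight fish are measured as the head-to-tail chord and curved fish as the summed segment lengths along the key points. The paper's curvature criterion is not given in the text, so `is_curved` uses a chord-to-arc ratio with an arbitrary 0.97 threshold purely for illustration.

```python
import math
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]


def deproject(u: float, v: float, depth_mm: float,
              fx: float, fy: float, cx: float, cy: float) -> Point3D:
    """Back-project pixel (u, v) with depth Z into camera coordinates (pinhole, no distortion)."""
    x = (u - cx) * depth_mm / fx
    y = (v - cy) * depth_mm / fy
    return (x, y, depth_mm)


def polyline_length(points: Sequence[Point3D]) -> float:
    """Sum of Euclidean distances between consecutive 3D key points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def is_curved(points: Sequence[Point3D], threshold: float = 0.97) -> bool:
    """Hypothetical bend test: the fish counts as curved when chord / arc < threshold."""
    arc = polyline_length(points)
    chord = math.dist(points[0], points[-1])
    return arc > 0 and chord / arc < threshold


def body_length(points: Sequence[Point3D]) -> float:
    """Head-to-tail chord for straight fish, summed midline segments for curved fish."""
    if is_curved(points):
        return polyline_length(points)
    return math.dist(points[0], points[-1])


if __name__ == "__main__":
    fx = fy = 600.0
    cx, cy = 320.0, 240.0
    # Head, mid-body and tail pixels, all at 600 mm depth (illustrative values).
    bent: List[Point3D] = [deproject(u, v, 600.0, fx, fy, cx, cy)
                           for u, v in ((170.0, 240.0), (320.0, 300.0), (470.0, 240.0))]
    print(is_curved(bent), round(body_length(bent), 1))
```

With the RealSense SDK, `pyrealsense2.rs2_deproject_pixel_to_point` performs the same back-projection and also applies the stream's distortion model; in practice the key points would come from the segmentation mask (for example, its skeleton for curved fish).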

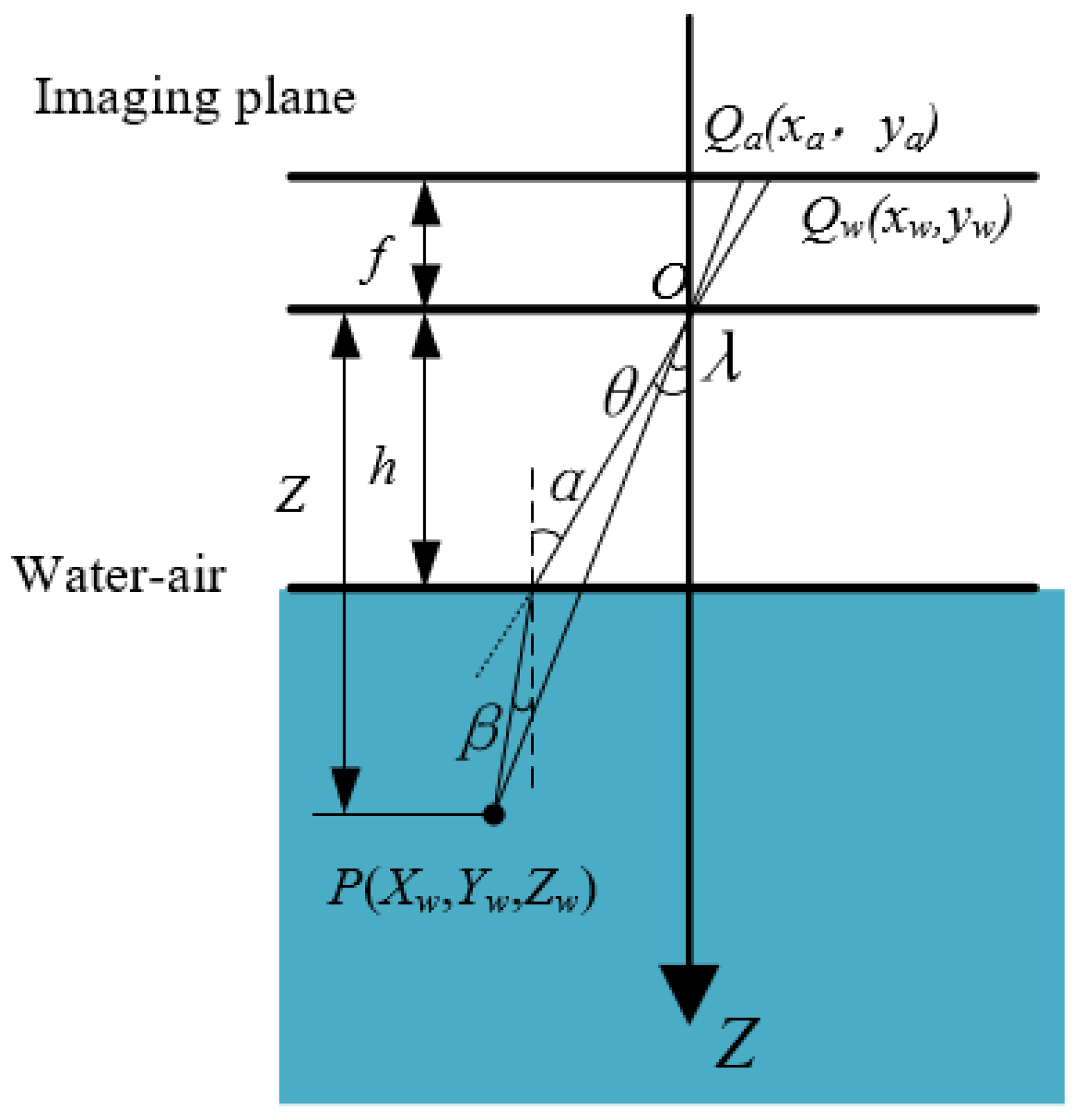

- This study analyzed how water refraction affects the measurement and introduced a correction to reduce this effect.
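The text does not give the correction model itself. One common approach, shown here only as a sketch under stated assumptions, traces each pixel's viewing ray through a flat air-water surface with the vector form of Snell's law. Assumptions not taken from the paper: the camera looks straight down at a flat, still surface; `camera_height` is measured from the camera to the bucket bottom, so the air gap is `camera_height - water_depth`; the fish lies at a known depth `target_depth` below the surface; and water has a refractive index of 1.333. All function names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

N_AIR = 1.0
N_WATER = 1.333  # assumed refractive index of fresh water


def refract(d: Vec3, n: Vec3, n1: float, n2: float) -> Vec3:
    """Vector Snell's law: `d` is the unit incident direction, `n` the unit normal facing the incident side."""
    eta = n1 / n2
    cos_i = -sum(di * ni for di, ni in zip(d, n))
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        raise ValueError("total internal reflection")
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * di + (eta * cos_i - cos_t) * ni for di, ni in zip(d, n))


def pixel_to_underwater_point(u: float, v: float, fx: float, fy: float, cx: float, cy: float,
                              camera_height: float, water_depth: float,
                              target_depth: float) -> Vec3:
    """Trace pixel (u, v) through the water surface to a point `target_depth` below it.

    Camera frame: Z points down along the optical axis, perpendicular to the surface.
    """
    air_gap = camera_height - water_depth  # hypothetical: height measured to the bucket bottom
    # Unit viewing ray through the pixel (pinhole model, no distortion).
    rx, ry = (u - cx) / fx, (v - cy) / fy
    norm = math.sqrt(rx * rx + ry * ry + 1.0)
    d = (rx / norm, ry / norm, 1.0 / norm)
    # Intersection of the ray with the water surface plane Z = air_gap.
    s = air_gap / d[2]
    hit = (d[0] * s, d[1] * s, air_gap)
    # Refract at the surface; the normal points up, towards the camera.
    t = refract(d, (0.0, 0.0, -1.0), N_AIR, N_WATER)
    # Continue inside the water until the ray is `target_depth` below the surface.
    s2 = target_depth / t[2]
    return (hit[0] + t[0] * s2, hit[1] + t[1] * s2, air_gap + target_depth)
```

Passing the head and tail pixels through `pixel_to_underwater_point` and taking `math.dist` of the two results would give a refraction-corrected length under these assumptions. If the ray were instead extended in a straight line to the same depth, off-axis points would be placed further from the optical axis, which inflates lateral distances.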

2.1. Experimental Setup

2.1.1. Experimental Platform

2.1.2. Image Acquisition

2.2. Image Segmentation

2.3. Measurement Method

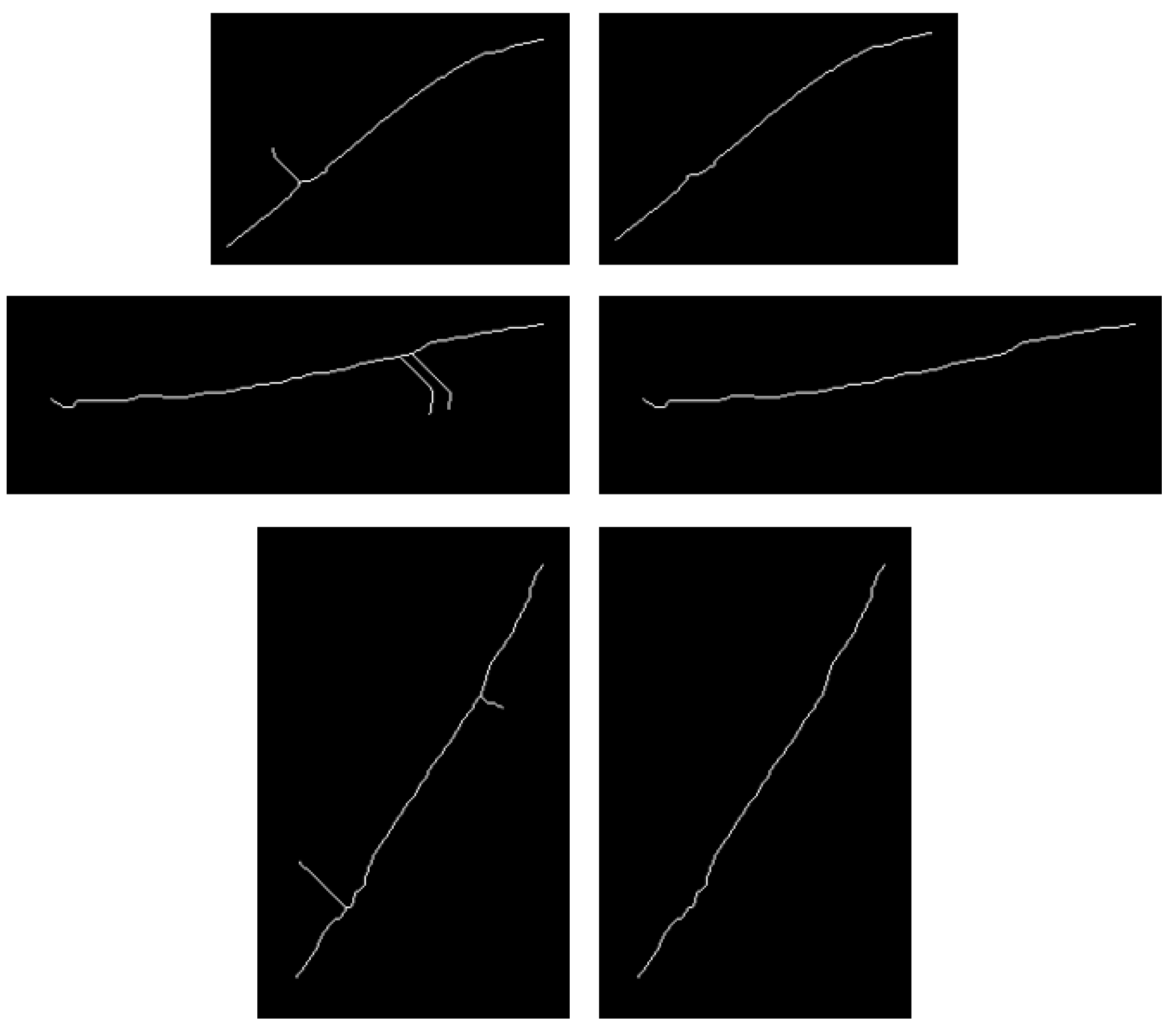

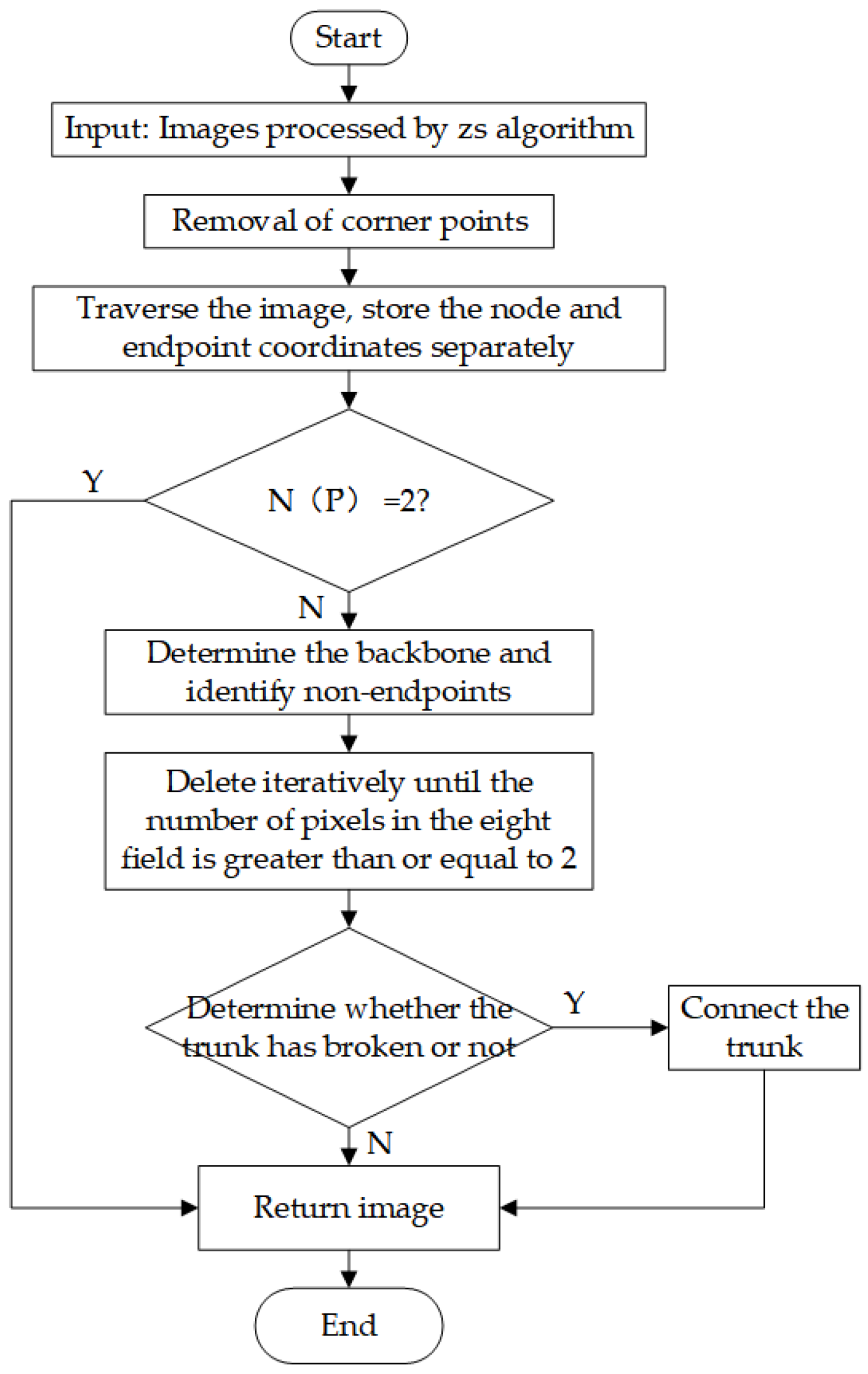

2.3.1. Linear Measurement

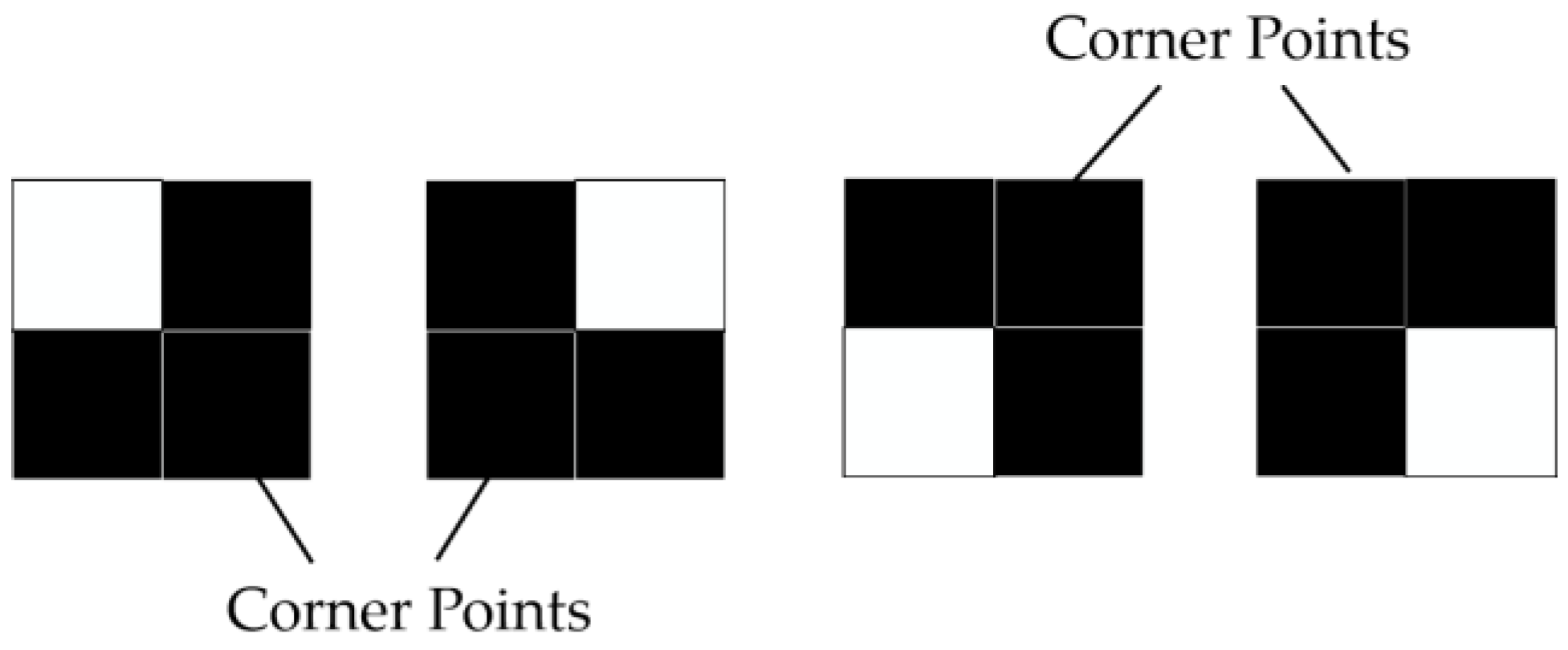

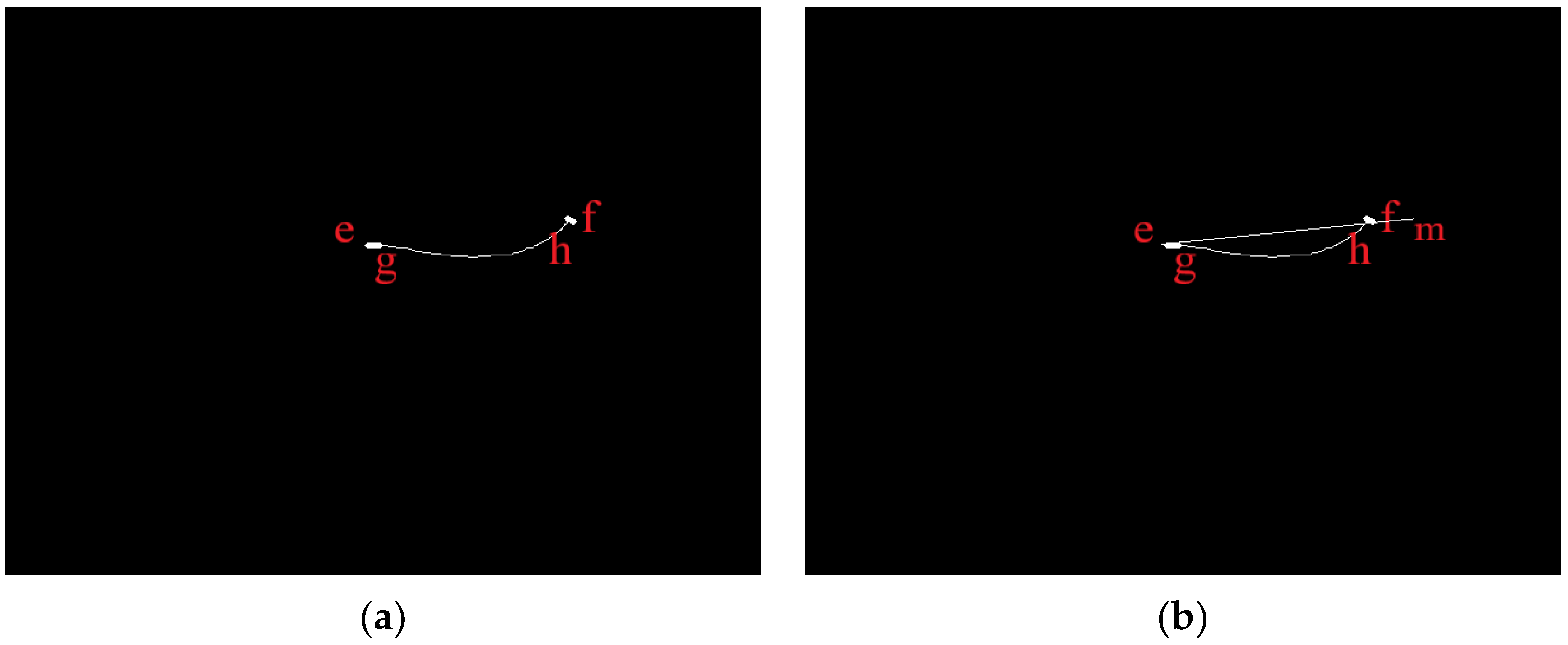

2.3.2. Bend Measurement

2.4. Correction of Water Refraction

2.5. Perch Length Measurement Software

- Data input. To account for water refraction, this module requires the user to enter the fixed camera height and the water depth in the breeding bucket. If these values are not entered, the results are computed without correcting for water refraction.

- Video presentation. This module lets the user start and stop the video and obtain the RGB and depth data streams. Clicking the Save Image button saves the RGB image and displays it in the image processing area, while the depth image is saved in the backend.

- Image processing. This module implements the image segmentation described above. The user first adjusts the image contrast and selects the region of interest (ROI) by drawing a rectangle around the fish, then clicks the segmentation button to obtain the segmentation result. If the result is unsatisfactory, the original image can be restored and the previous steps repeated. Once the segmentation is satisfactory, clicking the Calculate button computes the length of the fish and displays it in the result area.

- Result display. This module shows the coordinates and depths of the measurement points, together with the bending state and length of the target fish.

3. Results

4. Discussion

4.1. Convenience of the Method

4.2. Effectiveness of the Method

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


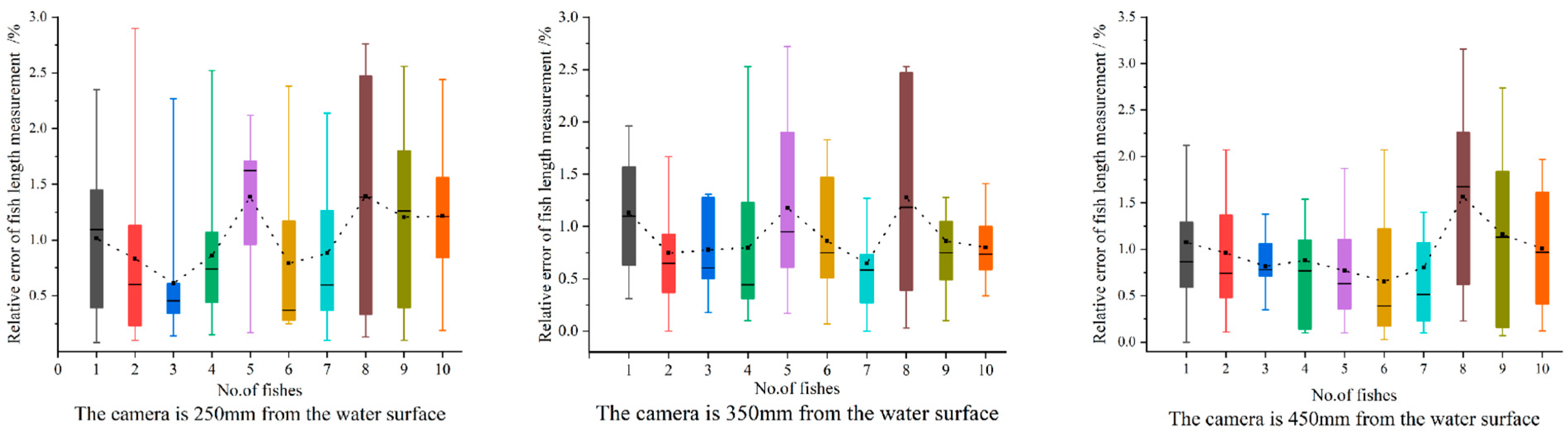

| No. | ML (mm) | Group 1 (250 mm) MRPE (CR) | Group 1 (250 mm) MRPE (IR) | Group 2 (350 mm) MRPE (CR) | Group 2 (350 mm) MRPE (IR) | Group 3 (450 mm) MRPE (CR) | Group 3 (450 mm) MRPE (IR) |
|---|---|---|---|---|---|---|---|
| 1 | 255 | 1.02% | 1.17% | 1.13% | 2.07% | 1.07% | 1.68% |
| 2 | 300 | 0.83% | 1% | 0.65% | 0.8% | 0.8% | 0.99% |
| 3 | 295 | 0.61% | 0.77% | 0.86% | 0.96% | 0.65% | 0.83% |
| 4 | 270 | 0.86% | 0.92% | 0.74% | 1.44% | 0.96% | 1.92% |
| 5 | 292 | 1.38% | 1.71% | 0.79% | 0.97% | 0.88% | 1.76% |
| 6 | 282 | 0.79% | 1.06% | 0.77% | 0.89% | 0.82% | 0.97% |
| 7 | 294 | 0.87% | 1.2% | 1.12% | 1.27% | 0.77% | 1.36% |
| 8 | 305 | 1.27% | 1.31% | 0.82% | 1.03% | 0.82% | 1.3% |
| 9 | 320 | 1.21% | 1.57% | 0.76% | 1.63% | 1% | 1.76% |
| 10 | 304 | 0.9% | 1.13% | 0.96% | 1.27% | 1.33% | 1.78% |
| Total | | 0.97% | 1.18% | 0.86% | 1.23% | 0.91% | 1.43% |
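The total row of the table is consistent with the arithmetic mean of the ten per-fish values in each column, to within two-decimal rounding. The check below copies the per-fish values from the table. The `mrpe` helper uses the usual definition of mean relative percentage error, mean(|L - ML| / ML) × 100 over repeated measurements of one fish; that definition is an assumption, since the text does not spell it out.

```python
from statistics import mean

# Per-fish MRPE values (%), copied from the table, fish 1-10 in order.
PER_FISH = {
    ("Group 1", "CR"): [1.02, 0.83, 0.61, 0.86, 1.38, 0.79, 0.87, 1.27, 1.21, 0.90],
    ("Group 1", "IR"): [1.17, 1.00, 0.77, 0.92, 1.71, 1.06, 1.20, 1.31, 1.57, 1.13],
    ("Group 2", "CR"): [1.13, 0.65, 0.86, 0.74, 0.79, 0.77, 1.12, 0.82, 0.76, 0.96],
    ("Group 2", "IR"): [2.07, 0.80, 0.96, 1.44, 0.97, 0.89, 1.27, 1.03, 1.63, 1.27],
    ("Group 3", "CR"): [1.07, 0.80, 0.65, 0.96, 0.88, 0.82, 0.77, 0.82, 1.00, 1.33],
    ("Group 3", "IR"): [1.68, 0.99, 0.83, 1.92, 1.76, 0.97, 1.36, 1.30, 1.76, 1.78],
}
# The total row as printed in the table (%).
REPORTED_TOTAL = {
    ("Group 1", "CR"): 0.97, ("Group 1", "IR"): 1.18,
    ("Group 2", "CR"): 0.86, ("Group 2", "IR"): 1.23,
    ("Group 3", "CR"): 0.91, ("Group 3", "IR"): 1.43,
}


def mrpe(measured, manual_length):
    """Assumed definition: mean of |L - ML| / ML over repeated measurements, in percent."""
    return 100.0 * mean(abs(m - manual_length) / manual_length for m in measured)


def column_means():
    """Mean of the ten per-fish values in each (group, CR/IR) column."""
    return {key: mean(values) for key, values in PER_FISH.items()}


if __name__ == "__main__":
    for key, avg in column_means().items():
        print(key, f"computed {avg:.3f}%", f"reported {REPORTED_TOTAL[key]:.2f}%")
```

Every computed column mean lies within 0.005 percentage points of the reported total; the largest gap (Group 3, IR: 1.435% computed against 1.43% reported) is a rounding effect.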

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, M.; Shen, P.; Zhu, H.; Shen, Y. In-Water Fish Body-Length Measurement System Based on Stereo Vision. Sensors 2023, 23, 6325. https://doi.org/10.3390/s23146325

Zhou M, Shen P, Zhu H, Shen Y. In-Water Fish Body-Length Measurement System Based on Stereo Vision. Sensors. 2023; 23(14):6325. https://doi.org/10.3390/s23146325

Chicago/Turabian Style: Zhou, Minggang, Pingfeng Shen, Hao Zhu, and Yang Shen. 2023. "In-Water Fish Body-Length Measurement System Based on Stereo Vision" Sensors 23, no. 14: 6325. https://doi.org/10.3390/s23146325

APA Style: Zhou, M., Shen, P., Zhu, H., & Shen, Y. (2023). In-Water Fish Body-Length Measurement System Based on Stereo Vision. Sensors, 23(14), 6325. https://doi.org/10.3390/s23146325