Abstract

This study compares data acquired with three LiDAR sensors from different manufacturers: YellowScan Mapper (YSM), AlphaAir 450 Airborne LiDAR System by CHC Navigation (CHC) and DJI Zenmuse L1 (L1). The same area was surveyed with each sensor mounted on a DJI Matrice 300 RTK UAV platform. For the comparison, a diverse test area located in the north-western part of the Lublin Province in eastern Poland was selected: a gully system with high vegetation cover. The UAV data were compared with reference LiDAR data collected within the ISOK project, which covers the whole of Poland. To examine the differences between the acquired data, both the classified point clouds and the DTMs calculated from the point clouds of the individual sensors were compared. The analyses showed that the largest average height differences between the terrain models were recorded between the CHC sensor and the reference data, exceeding 2.5 m. The smallest differences were recorded between the L1 sensor and the ISOK data, with an RMSE of 0.31 m. UAV-based acquisition of very high resolution data is suitable only for local applications and requires very stringent landing site preparation procedures, as well as careful processing of the DTM and its derivatives.

1. Introduction

The development of advanced measurement technologies in recent decades has created new perspectives in terms of spatial data acquisition capabilities. This is particularly true for acquisition techniques based on remote sensing solutions related to the retrieval of reliable information about physical objects and their surroundings, by means of registration, measurement and interpretation of images or their digital representations obtained through sensors that are not in direct contact with these objects [,].

Remote sensing may be divided according to different criteria []. Based on the distance from the studied object, remote sensing is divided into: (i) satellite-based; (ii) medium-altitude (airborne and unmanned aerial vehicle—UAV) and (iii) ground-based. Remote sensing data recording systems are either passive (when they record solar radiation reflected from objects or emitted by an object), or active (when they emit their own electromagnetic radiation which, after contact with an object, is recorded by a sensor). Remote sensing systems are also characterised by different resolutions: (a) spatial, defining the size of the smallest object distinguishable in the image recorded by the sensor, (b) spectral, referring to the specific wavelength range of electromagnetic radiation recorded by the sensor, (c) radiometric, which specifies the number of distinguishable radiation levels, and (d) temporal, defining the frequency of information acquisition.

Studies aimed at acquiring high-precision field data use sensors that emit electromagnetic radiation of different wavelengths. Among light detection and ranging (LiDAR) techniques, sensors using laser radiation are the most commonly used to survey the physical characteristics of environmental features. The most common survey types in this group are airborne laser scanning (ALS) and terrestrial laser scanning (TLS). These are 3D imaging technologies that use laser beams to scan and measure physical objects in the environment []. By emitting laser pulses and measuring the parameters of the reflected signal, ALS and TLS systems determine distances and angles to points in space. Technically, the time of flight of the laser pulse is measured; from this time, the range between the emitter (scanner) and the object generating the backscattered echo can be calculated. Information about the geometry of the active surface can thus be obtained [,]. These data can be used to create a 3D model of the environment and provide detailed information about a given area. Laser scanners are widely used in fields such as engineering and architecture, archaeology, forestry and topographic mapping []. TLS enables high-precision, high-resolution data acquisition, but for technological reasons it is suited only to spatially limited, local applications (on the order of 1 km²). Traditional ALS, based on aircraft and helicopter platforms, is much more versatile and offers many times greater spatial coverage [,], but incurs high data acquisition costs.
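The time-of-flight relation described above amounts to R = c·t/2, because the pulse covers the emitter-target distance twice. A minimal Python sketch, assuming propagation at the vacuum speed of light (the small atmospheric correction applied in practice is ignored here):

```python
# Speed of light in vacuum, m/s (exact SI value).
SPEED_OF_LIGHT = 299_792_458.0


def range_from_time_of_flight(round_trip_time_s: float) -> float:
    """Return the emitter-to-target range in metres.

    The pulse travels to the target and back, so the one-way range is
    half of the total path length c * t. Atmospheric effects are ignored.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


if __name__ == "__main__":
    # An echo arriving about 0.534 microseconds after emission corresponds
    # to roughly 80 m, close to the flight height above ground in this study.
    print(f"{range_from_time_of_flight(0.534e-6):.2f} m")
```

The same relation explains why timing precision matters: a timing error of 1 ns alone shifts the computed range by about 15 cm.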

Medium- and low-altitude remote sensing solutions based on active LiDAR sensors carried by a UAV platform have become increasingly common in recent years []. Such systems combine the advantages of other solutions while reducing their disadvantages.

LiDAR-UAV data are characterised by high or very high spatial resolution. Accurate ground surface data can also be acquired in terrain with limited accessibility and/or dense vegetation cover []. Compared to satellite data, they offer much higher resolution, and their acquisition is independent of cloud cover []. Brede et al. [] showed that UAV-borne laser scanning produces data of comparable quality to TLS. Alternatively, high-resolution aerial RGB imagery may be acquired, which is much cheaper than LiDAR and easier to process into a point cloud []. Photogrammetric techniques can be a valuable alternative for vegetation surveys [], but for ground surface analyses their use is limited by the type and density of vegetation cover []. LiDAR sensors can be mounted on aircraft, but a significant barrier to their widespread use is the high cost of equipment and platform maintenance, as well as the need to plan data acquisition in advance, taking weather conditions into account []. LiDAR-UAV offers advantages comparable to ALS while being more cost-effective, accessible and easier to plan. The relatively high cost of the system nevertheless remains a limitation of LiDAR-UAV [], and alternatives have been proposed [,].

LiDAR-UAVs are used in many applications, including 3D mapping [], forestry [], agriculture [] and infrastructure monitoring []. They are also used in settings where TLS is applied []; however, LiDAR-UAV can scan a larger area in much less time than ground-based methods []. High-resolution digital terrain models (DTMs) can be created from LiDAR-UAV survey data. These can form the basis of various geomorphometric analyses [], landform measurements and land surface change analyses []. LiDAR-derived DTMs are currently used in many scientific fields [,] and industrial and technical activities [,,]. They provide information that is crucial, or even indispensable, for environmental management in the broadest sense []. They can be used to model complex hydrological phenomena, for example to determine rainwater surface runoff, delineate catchment boundaries and drainage networks, estimate soil moisture, and support hydraulic modelling for flood risk assessment [,,,]. Other examples include modelling geohazards, such as avalanche hazards or forest fire hazards in mountainous areas [,]. Geomorphological applications include qualitative and quantitative descriptions of landforms [,,,]. DTMs are also increasingly used in geological studies [,]. Land surface modelling is particularly relevant for assessing water erosion risk []. Other applications worth mentioning include modelling the spread of industrial pollutants or of contamination associated with, for example, transport accidents, as well as forestry and agriculture [,].

In recent years, the range of LiDAR-UAV solutions on the market has expanded significantly. According to manufacturers' specifications, LiDAR sensors dedicated to UAVs offer very high measurement accuracy and repeatability. On the other hand, Pilarska et al. [] point out that particular LiDAR-UAV solutions can generate systematic errors, mainly due to imperfections of the inertial measurement unit (IMU), which records the system's attitude angles (roll, pitch, yaw); these errors can, however, be estimated. For technological reasons, measurements made with different sensors should also be expected to differ from each other. In the last few years, studies have directly compared specific laser scanners with each other []. Data acquired with LiDAR-UAV techniques have also been validated against products acquired with other techniques [,]. However, few studies actually illustrate product differences between specific platforms. The present work aims to fill this gap.

The aim of this study is to directly compare elevation data from different LiDAR-UAV systems with each other and with data acquired from aircraft, to determine whether the results are sufficiently accurate for the stated purpose and whether each platform can reliably measure a given environmental element. Products derived from three popular LiDAR-UAV systems were compared with airborne laser scanning data to better characterise the variation between systems. The authors assumed the role of an end user, relying on off-the-shelf systems currently available on the market.

2. Materials and Methods

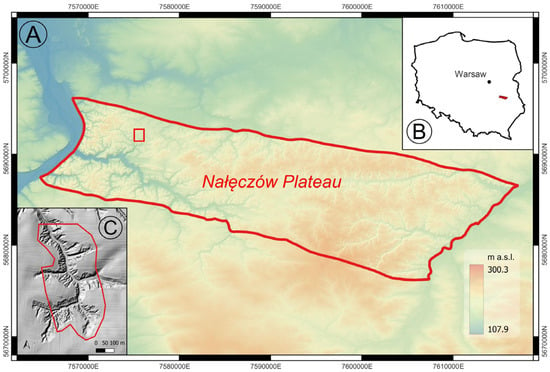

To compare the effectiveness of LiDAR-UAV sensors for topographic texture analyses, a small, dynamically developing gully system forming the southern branch of the Potok Stocki valley (Nałęczów Plateau, Lublin Upland, SE Poland) was selected (Figure 1). The sub-basin of the loess gully has an area of 1.3 km² []. The gully lies within a strongly dissected loess plateau, where the loess cover reaches 30 m in thickness [].

Figure 1.

Location of the study area: (A) Nałęczów Plateau subregion with the boundary of the area of interest (AoI, red rectangle); (B) location of the investigated area in Poland; (C) area of interest. Boundaries of physical-geographic regions according to Solon et al. [].

Three LiDAR survey systems were used for the flights: YellowScan Mapper (YSM), CHC AlphaAir 450 (CHC) and DJI Zenmuse L1 (L1). The basic parameters of each system are summarised in Table 1.

Table 1.

Technical specifications of the LiDAR-UAV systems; data from manufacturers’ technical specifications.

Because of the substantial forest cover of the gully system, the surveys were carried out before the start of the growing season (leaf-off conditions), in three separate flights: YSM in April 2021, and CHC and L1 in April 2022. Given the land use of the area (existing perennial forest cover), any changes in the geomorphology of the study area between the surveys were assumed to be of minor importance. In each case, the flight altitude was set at 75–80 m above ground level (AGL), and a constant height of the UAV above the topographic surface was maintained by terrain following in the mission planning software. The total area covered by a single flight was approximately 1 km². The YellowScan Mapper acquired the densest point cloud (Table 2).

Table 2.

Characteristics of the analysed point clouds. Basic statistics of the LiDAR-UAV point clouds refer to the 1 m grid cells created from the ALS reference data.

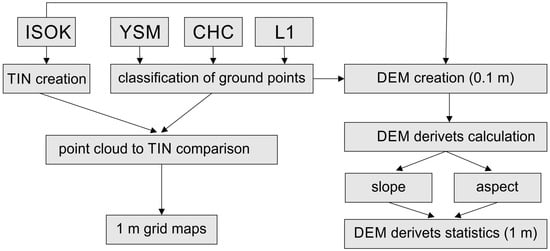

To compare the quality and relevance of the acquired data, a multi-stage framework was used to select ground points and to derive high-resolution digital terrain models (DTMs) from them; these DTMs were compared with the reference model and with each other. The data processing and benchmarking stages are shown in Figure 2.

Figure 2.

Flow chart of data processing and calculation of the comparison indicators.

The raw data were first pre-processed in each manufacturer's dedicated software following the recommended procedure, and then classified in LP360 7.0 software (©GeoCue, 520 6th Street, Madison, AL, USA) to extract the ground points. The data were transformed into a common spatial reference system (EPSG:2178, PL-KRON86-NH). The area of interest (AoI) was then defined as the largest area common to all three flights, so that the datasets could be compared statistically and directly with each other (Figure 1C).

In parallel, reference datasets were prepared. Airborne laser scanning (ALS) data from the resources of the Central Office of Geodesy and Cartography (GUGiK), acquired within the IT System of the Country's Protection against Extreme Hazards (ISOK) project, were adopted as the reference for all three LiDAR-UAV datasets. The data were obtained as point clouds classified according to the American Society for Photogrammetry and Remote Sensing (ASPRS) standard. They are publicly available free of charge via the Geoportal platform (geoportal.gov.pl (accessed on 4 November 2022)). The ALS point cloud density in the analysed area ranges from 4 to 12 pts/m². A TIN model was created from the reference point cloud by Delaunay triangulation (2.5D, best-fitting plane), yielding a total of 1,399,244 triangles; interpolation was limited to 3 m (a value determined empirically). Each LiDAR-UAV point cloud was then analysed against this reference TIN model on a 1 m grid. For each grid cell, the number of LiDAR-UAV ground points was counted, and the height differences between the LiDAR-UAV points and the reference TIN model were summarised statistically: mean difference, median difference, standard deviation and variance.
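The per-cell comparison can be sketched as follows. This is an illustration under stated assumptions, not the LP360 workflow used in the study: the reference TIN is represented by a hypothetical callable `reference_height(x, y)`, cells are indexed by flooring coordinates to a 1 m grid, and population (rather than sample) standard deviation and variance are used so that single-point cells are still summarised.

```python
import math
import statistics
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) of a ground-classified point
Cell = Tuple[int, int]              # (column, row) index of a grid cell


def per_cell_difference_stats(
    ground_points: Iterable[Point],
    reference_height: Callable[[float, float], float],  # hypothetical TIN stand-in
    cell_size: float = 1.0,
) -> Dict[Cell, Dict[str, float]]:
    """Group LiDAR-UAV ground points into square cells and summarise the
    height differences (point z minus reference surface) in each cell."""
    diffs_by_cell: Dict[Cell, List[float]] = defaultdict(list)
    for x, y, z in ground_points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        # Positive difference: the LiDAR-UAV point lies above the reference.
        diffs_by_cell[cell].append(z - reference_height(x, y))

    stats: Dict[Cell, Dict[str, float]] = {}
    for cell, diffs in diffs_by_cell.items():
        stats[cell] = {
            "count": len(diffs),
            "mean": statistics.fmean(diffs),
            "median": statistics.median(diffs),
            "std": statistics.pstdev(diffs),        # population std (assumption)
            "variance": statistics.pvariance(diffs),
        }
    return stats


if __name__ == "__main__":
    # Hypothetical planar reference surface and four points in two cells.
    def plane(x: float, y: float) -> float:
        return 200.0 + 0.1 * x

    points = [(0.2, 0.5, 200.07), (0.8, 0.1, 200.13),
              (1.5, 0.5, 200.35), (1.9, 0.9, 200.39)]
    for cell, s in sorted(per_cell_difference_stats(points, plane).items()):
        print(cell, s["count"], round(s["mean"], 3))
```

Cells without any ground point simply do not appear in the result, which mirrors the fact that dense vegetation can leave grid cells with no ground returns.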

To investigate the influence of the different sensors on the derived digital terrain models, DTMs with a resolution of 10 cm/pixel were produced in LP360 software from the data of each sensor. In addition, slope and aspect were calculated for each derived model in QGIS 3.16 software. Using a 1 m resolution grid, the results were then compared using the root mean square error (RMSE) and relative root mean square error (RRMSE) statistics.
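Slope and aspect are first derivatives of the DTM. The sketch below computes them for the centre cell of a 3 × 3 elevation window using Horn's (1981) finite-difference weights, a widely used formulation; it is an illustration, not a reproduction of the QGIS implementation. It assumes a north-up grid (row 0 is the northern row), square cells, and aspect given as the compass bearing, clockwise from north, of the downslope direction.

```python
import math
from typing import Optional, Sequence, Tuple


def horn_slope_aspect(
    window: Sequence[Sequence[float]], cell_size: float
) -> Tuple[float, Optional[float]]:
    """Slope (degrees) and aspect (degrees clockwise from north) for the
    centre cell of a 3 x 3 elevation window, using Horn's weights.

    window[0] is the northern row and window[r][0] the western column.
    Aspect is the direction the slope faces (downslope); None is returned
    for a perfectly flat window, where aspect is undefined.
    """
    (a, b, c), (d, _e, f), (g, h, i) = window
    # Rate of elevation change towards the east and towards the north.
    dz_east = ((c + 2 * f + i) - (a + 2 * d + g)) / (8 * cell_size)
    dz_north = ((a + 2 * b + c) - (g + 2 * h + i)) / (8 * cell_size)

    slope = math.degrees(math.atan(math.hypot(dz_east, dz_north)))
    if dz_east == 0 and dz_north == 0:
        return slope, None
    # Downslope direction is minus the gradient; atan2(east, north) yields
    # a bearing measured clockwise from north, wrapped into [0, 360).
    aspect = math.degrees(math.atan2(-dz_east, -dz_north)) % 360.0
    return slope, aspect


if __name__ == "__main__":
    # Hypothetical window on a surface rising 1 m per cell towards the east:
    # a 45 degree slope facing west.
    print(horn_slope_aspect([[0, 1, 2], [0, 1, 2], [0, 1, 2]], 1.0))
```

For the 10 cm/pixel DTMs described here, `cell_size` would be 0.1 (m). Because the gradient divides elevation differences by the cell width, the same elevation noise produces larger slope and aspect fluctuations on a finer grid.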

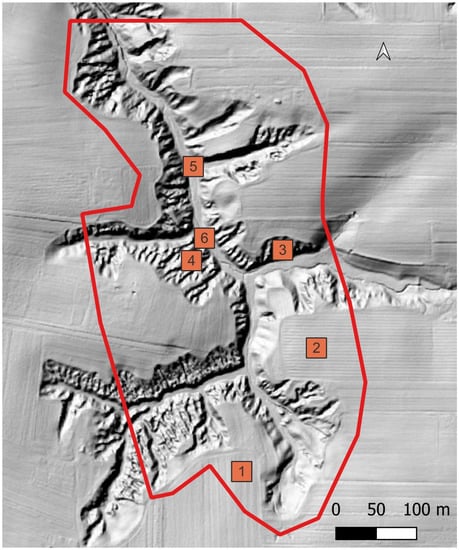

In addition, test fields of ca. 500 m² with a relatively uniform ground surface structure (cultivated field, gully slope, gully bottom) were delineated within the primary area of interest (Figure 3). The data from the individual sensors were compared by land use type and statistically collated.

Figure 3.

Location of test fields (525 m²). 1 and 2: arable fields; 3 and 4: locations on the slopes of the gully; 5 and 6: locations in the bottom of the gully. The area of interest is marked with a red line.

The acquired data were additionally validated by direct measurement on the ground, carried out using the GNSS-RTK method, at 24 points randomly distributed in the study area.
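Checkpoint validation of this kind is commonly summarised by the mean error (bias), the standard deviation and the RMSE of the height residuals. The sketch below assumes the DTM height has already been sampled at each GNSS-RTK point; the function name and the input layout are illustrative assumptions, not the procedure reported in the study.

```python
import math
import statistics
from typing import Dict, Sequence


def checkpoint_statistics(
    dtm_heights: Sequence[float], gnss_heights: Sequence[float]
) -> Dict[str, float]:
    """Summarise height residuals (DTM minus GNSS-RTK) at checkpoints."""
    if len(dtm_heights) != len(gnss_heights) or not dtm_heights:
        raise ValueError("need two non-empty sequences of equal length")
    residuals = [m - r for m, r in zip(dtm_heights, gnss_heights)]
    return {
        "n": len(residuals),
        "mean_error": statistics.fmean(residuals),  # systematic offset (bias)
        "std": statistics.pstdev(residuals),        # scatter around the bias
        "rmse": math.sqrt(statistics.fmean(e * e for e in residuals)),
        "max_abs": max(abs(e) for e in residuals),
    }
```

Because RMSE² equals the squared mean error plus the squared (population) standard deviation, an RMSE dominated by the mean error points to a systematic offset rather than to random noise.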

3. Results

Comparison of Point Clouds to the Reference Model

Differences were found for all sensors when comparing the acquired point clouds directly with the reference model created from the ALS data. A statistical summary is presented in Table 3.

Table 3.

Overview of the differences between the point clouds acquired with the individual sensors and the reference model. Values in m.

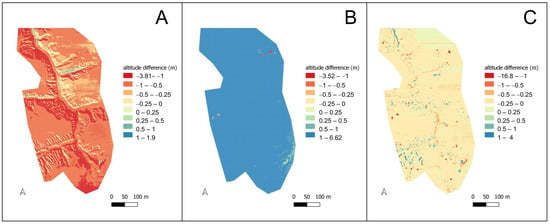

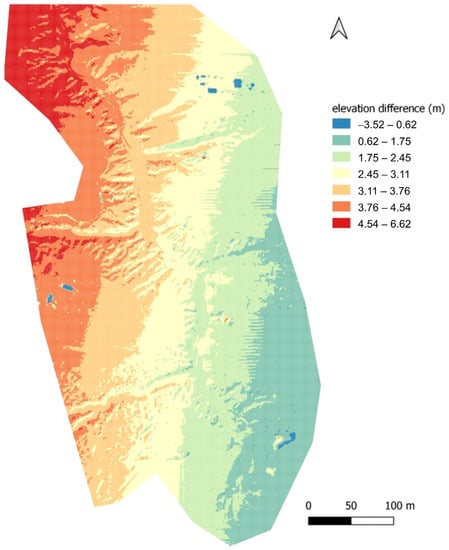

For the YSM sensor, large differences from the reference model were recorded over the entire study area, with the registered elevations being higher almost everywhere (Figure 4A). Notably, the differences correspond very clearly to the relief, and their visualisation resembles the DTM. In the forested gully the differences exceeded 1 m; within the cultivated fields they ranged from 0.5 to 1 m.

Figure 4.

Differences in recorded altitude between LiDAR-UAV and the reference model. (A) YSM, (B) CHC, (C) L1.

Significant differences were also found for the CHC AlphaAir. In this case, the recorded ground elevations were significantly lower than those of the reference model. The difference was systematic and very large over the whole area, showing only a slight relationship with the relief. High positive values occur in isolated patches associated with the built-up area in the north-eastern part of the study area (Figure 4B).

For the DJI Zenmuse L1, the average difference between the recorded points and the height values read from the model was 13 cm, with a median of 12 cm. The high maximum and minimum differences are noteworthy. High positive differences (higher values recorded by LiDAR-UAV), reaching more than 20 m, occur throughout the area, mostly as isolated, scattered points (Figure 4C).

Pronounced negative differences (lower values recorded by LiDAR-UAV) are confined to specific areas. Elevations more than 2 m lower than the reference model were recorded at two locations: in the northern part of the area, the bottom of the gully; at the southern end, the edge of the gully immediately adjacent to the boundary of the arable field (Figure 4C).

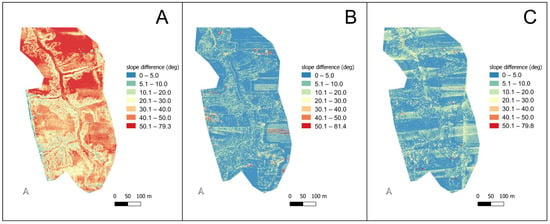

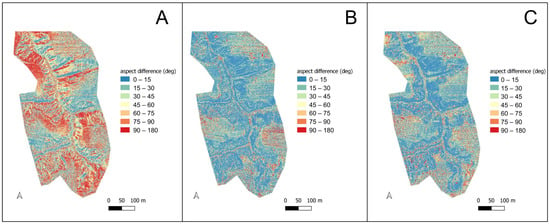

The errors between the values determined from the LiDAR-UAV data and the reference model are summarised in Table 4. For the L1 scanner, the RMSE of height was 0.31 m, whereas the largest error, over 3 m, was found for the CHC. For slope, the highest error was found for the YSM, exceeding 40°; for the other two sensors it was around 10°. For aspect, the YSM again showed a large error, exceeding 70°, while for the L1 and CHC it was also high, at around 50°.
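The RMSE and RRMSE values compare co-registered grid cells. The text does not give the RRMSE formula, so the sketch below assumes the common definition (RMSE divided by the mean reference value, in percent); the flattened-list grid representation, with None marking no-data cells, is likewise an illustrative assumption.

```python
import math
from typing import Optional, Sequence, Tuple


def rmse_and_rrmse(
    predicted: Sequence[Optional[float]], reference: Sequence[Optional[float]]
) -> Tuple[float, float]:
    """RMSE and relative RMSE (percent of the mean reference value) over
    the cells where both grids hold data (None marks no-data)."""
    pairs = [(p, r) for p, r in zip(predicted, reference)
             if p is not None and r is not None]
    if not pairs:
        raise ValueError("no overlapping valid cells")
    rmse = math.sqrt(sum((p - r) ** 2 for p, r in pairs) / len(pairs))
    mean_ref = sum(r for _, r in pairs) / len(pairs)
    if mean_ref == 0:
        raise ValueError("RRMSE undefined for a zero mean reference value")
    # Assumed RRMSE definition: RMSE normalised by the mean reference value.
    rrmse = 100.0 * rmse / mean_ref
    return rmse, rrmse
```

When RRMSE is computed for absolute elevations, the denominator is the mean terrain height above the vertical datum, so even large absolute errors translate into small relative values.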

Table 4.

RMSE for individual sensors, determined in relation to the reference model.

Significant differences were recorded for the derivatives of the digital terrain model, namely slope and aspect. For the YSM scanner, differences in slope were high throughout the study area, locally reaching nearly 80° (Figure 5A). Characteristically, smaller differences were found in areas with more varied relief than in areas of relatively uniform relief. The CHC sensor data also varied over a wide range, locally exceeding 80°; nevertheless, the mean difference was much lower. High discrepancies were found within the cultivated fields, clearly following the direction of cultivation, as well as in the partially built-up area in the north-eastern part of the site and in parts of the gully system (Figure 5B). For L1, significant differences occurred in isolated patches, mainly related to anthropogenic objects (buildings), and the direction of cultivation was also reflected, but the overall variation was relatively low (Figure 5C).

Figure 5.

Difference in recorded slopes between LiDAR-UAV and the reference model. (A) YSM, (B) CHC, (C) L1.

For aspect, the differences reached 180° in all cases. For YSM, they were high throughout the area (Figure 6A), both in the strongly sculptured terrain and in locations with less variation in relief. Much smaller divergences were found for CHC (Figure 6B), with the most significant differences occurring in the cultivated field and following the direction of cultivation. L1 also showed isolated differences of 180°, mainly in locations associated with human activity; for this sensor, the divergence of aspect from the reference model was the lowest (Figure 6C).
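Because aspect is a circular quantity, the difference between two aspect values has to be wrapped: 350° and 10° differ by 20°, not 340°, so the largest possible difference is 180°, which is consistent with the ceiling reached in all three comparisons. The paper does not state how aspect differences were computed; the sketch below assumes the usual smallest-angle convention.

```python
def aspect_difference(aspect_a: float, aspect_b: float) -> float:
    """Smallest absolute angular difference between two aspects, in degrees.

    Aspects are compass bearings; the result always lies in [0, 180].
    """
    diff = abs(aspect_a - aspect_b) % 360.0
    return 360.0 - diff if diff > 180.0 else diff


if __name__ == "__main__":
    # North-facing slopes on either side of 0/360 degrees differ only slightly.
    print(aspect_difference(350.0, 10.0))
```

On nearly flat ground, such as the cultivated fields, small elevation changes can flip the downslope direction completely, which is why differences close to 180° can appear even where slope differences are small.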

Figure 6.

Difference in recorded aspects between LiDAR-UAV and the reference model. (A) YSM, (B) CHC, (C) L1.

Within the additional test plots (cultivated field, gully slope, gully bottom), differences were determined in the same way as for the entire site. The differences varied between the selected parameters. For absolute height, the L1 cloud agreed very closely with the reference model in each land use type. For the CHC sensor, considerably higher differences were found at the bottom of the gully; in the cultivated field they were lower, although still large. The opposite relationship was found for YSM: in the arable field, the differences were half as high as at the bottom of the gully (Table 5).

Table 5.

Differences in selected parameters within the selected test fields.

4. Discussion

This study analyses the errors that can arise during the acquisition and processing of LiDAR data using a UAV platform. The large number of works using LiDAR-UAV data in recent years allows us to conclude that this type of data is widespread and used in many ways. However, despite the widespread use of this technology, a critical approach to both data acquisition and processing methods is required.

The large positive differences for the L1 scanner are mainly due to errors in point cloud classification: points on individual trees were assigned to class 2 (ground). A similar situation occurred for buildings in the eastern part of the study area, where roofs were also classified as ground. An important factor may be the configuration of the point cloud classification software, which, for a site such as the one analysed here, must take into account the varying relief and land use of the scanned area. Differences can occur in arable fields where, despite the relatively gentle slopes, the whole surface is subject to seasonal variations in elevation due to tillage. The edges of the gully, its steep slopes and its flat bottom, in turn, are subject to erosion and deposition, and seasonal differences here can reach several to tens of centimetres. Areas with substantial tall vegetation cover, parts of buildings, etc., are also difficult to classify. The classification of ground points in forested areas has been the subject of many studies [,], but it is beyond the scope of this paper.

It is worth highlighting that large discrepancies occur essentially only in the forested area with well-developed vegetation cover, the exception being the built-up area mentioned above. The density of the reference cloud is an important factor: its average density is low, and in areas with developed vegetation the number of ground points is further reduced by the classification process.

Height interpolation carried out on such a sparse cloud may not capture small landforms, which generates apparent differences between the LiDAR-UAV data and the reference model.

Regarding the large negative differences, it should be borne in mind that the ALS data were acquired in 2011; a gap of more than a decade for an active gully is reflected in the recorded height differences (mainly along the gully axes). This points to a potentially important use of LiDAR-UAV data: monitoring geomorphological change on a local scale by comparison with data acquired a long time earlier.

For the YSM cloud, no relevant differences attributable to classification errors (points from other classes misclassified as ground) were found. However, the spatial distribution of the differences is interesting: there is a clear relationship with slope aspect, with the highest values recorded on slopes facing north and north-east.

For CHC, the differences from the reference model showed no relationship with position relative to the relief. Errors related to point cloud misclassification occurred in isolated patches, where the classification algorithm assigned the wrong class to parts of buildings. However, this dataset shows a large systematic error, evident when a custom scale is used for data visualisation (Figure 7).

Figure 7.

Differences in recorded altitude between the LiDAR-UAV (CHC) data and the reference model. A custom scale was used.

The clear, unambiguous meridional (north-south) pattern of values may indicate erroneous IMU readings during data acquisition: the flight lines ran north-south, so the error may be related to incorrect readings of roll angle deviations. Similar conclusions were reached by Huising and Pereira [], who analysed data from different sensors.
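Why a roll error produces stripes parallel to the flight lines can be illustrated with a simplified geometry, offered here as a textbook approximation rather than a model of the CHC system: a scanner at height H above flat ground scans across-track at angle θ from nadir, and a constant roll bias δ is added to the measured angle. The reconstructed height error is then H(1 − cos δ) + H·tan θ·sin δ, i.e. approximately H·tan θ·δ: it grows linearly with across-track distance from the flight line and changes sign on either side of it. Flight height and bias values below are hypothetical.

```python
import math


def roll_bias_height_error(
    flying_height_m: float, scan_angle_deg: float, roll_bias_deg: float
) -> float:
    """Height error (m) on flat ground caused by a constant roll bias.

    The true range to flat ground is H / cos(theta); the point is then
    reconstructed with the biased angle theta + delta. Flat terrain and no
    other error sources are assumed.
    """
    theta = math.radians(scan_angle_deg)
    delta = math.radians(roll_bias_deg)
    true_range = flying_height_m / math.cos(theta)
    return flying_height_m - true_range * math.cos(theta + delta)


if __name__ == "__main__":
    # Hypothetical: 80 m above ground, 0.1 degree roll bias.
    for angle in (-30, -15, 0, 15, 30):
        err = roll_bias_height_error(80.0, angle, 0.1)
        offset = 80.0 * math.tan(math.radians(angle))  # across-track distance
        print(f"offset {offset:7.1f} m   height error {err:+.3f} m")
```

Because the error term is proportional to H, the same model also suggests why discrepancies may increase with flight altitude.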

Differences in recorded terrain elevation relative to a specific reference model can be a subject of discussion in themselves. The accuracy values stated by the manufacturers of the individual systems may suggest high data reliability; however, the verification carried out here shows significant discrepancies. Kamp et al. [] presented a summary of possible errors in LiDAR-based ground surface measurements. They also compared LiDAR-UAV data directly with ALS data; however, the ALS and LiDAR-UAV data were matched using selected flat surfaces, and the ALS cloud was used for additional height adjustment. Differences between models developed from LiDAR-UAV and other sources were also reported by de Magalhães and Moura [], who conducted their study in an urbanised area. The recording of incorrect values may be related to errors in the UAV's IMU or to incorrect determination of the UAV's flight trajectory []. Differences between the recorded and actual values may increase with flight altitude [], which is consistent with the results obtained here; a flight altitude of 75 m is relatively high for LiDAR-UAV acquisition.

In light of these results, it is essential to check the data against direct GNSS measurements on the ground and to apply any necessary elevation corrections in each survey. Room et al. [] report that GCPs are necessary also for LiDAR-UAV, and that the quality of the resulting model improves with the number of points used. Using control points, Bakuła et al. [] achieved high agreement between LiDAR-UAV data and the reference model, using the same reference data source (ALS). In the present study, GCPs were used as checkpoints rather than control points, to verify absolute heights. The providers of the particular systems, however, do not require the use of GCPs.
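Where checkpoints reveal a systematic vertical offset, the simplest elevation correction is a constant shift equal to the mean residual. A minimal sketch, assuming the error is a pure vertical bias: it cannot remove tilts or stripe patterns such as the roll-related error discussed above, and the function name and values are hypothetical. In practice, the points used to estimate the shift should be kept separate from those used to assess the corrected model.

```python
import statistics
from typing import List, Sequence, Tuple


def constant_vertical_correction(
    dtm_at_gcps: Sequence[float],
    gnss_at_gcps: Sequence[float],
    dtm_heights: Sequence[float],
) -> Tuple[float, List[float]]:
    """Estimate a constant height offset from control points and remove it.

    Returns the estimated offset (DTM minus GNSS, metres) and the corrected
    heights. Only a uniform bias is modelled; tilts or strip-wise patterns
    remain in the data.
    """
    if len(dtm_at_gcps) != len(gnss_at_gcps) or not dtm_at_gcps:
        raise ValueError("need two non-empty sequences of equal length")
    offset = statistics.fmean(m - r for m, r in zip(dtm_at_gcps, gnss_at_gcps))
    return offset, [h - offset for h in dtm_heights]
```

After such a shift, the remaining RMSE at the control points equals the standard deviation of the residuals, so any error left over is the non-constant part that a simple correction cannot address.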

When using LiDAR-UAV data for local studies, the recorded differences may not be of great importance, as the differences in relative heights remain at a similar, acceptable level. LiDAR-UAVs have been used in recent years for crop height estimation [], where estimating the height difference over a specific time interval is crucial; in such studies, absolute height is less important.

Bartholomeus et al. [] undertook a similar study, comparing data acquired with three different systems. The study was conducted in a forested area, but with low crown density. Even so, they recorded height differences of up to several tens of centimetres between the systems. We agree with their conclusion that in areas of greater complexity the differences can be much higher, which was confirmed in the present study. However, similar comparative studies are still lacking in the literature; technological developments can be expected to prompt analogous research in the near future.

Comparative studies conducted in terrain with highly varied relief, such as the Nałęczów Plateau, are a novelty and need to be confirmed in other areas. The combined influence of the relief and the presence of dense thickets and trees means that data from such an area require much more attention, and the use of the latest classification algorithms, than data from flat areas covered only with low vegetation. Further research is therefore needed to improve both mission design in such terrain and classification algorithms.

5. Conclusions

- A comparison of elevation data acquired with LiDAR-UAV systems shows large discrepancies between the systems, exceeding the values quoted by the manufacturers. The maximum differences reached 3.80 m for the YSM scanner, 6.62 m for CHC and 16.8 m for L1. The high differences were mainly due to misclassification of the point clouds;

- For proper data acquisition, it is necessary to use reference data, acquired by traditional techniques (ground-based GNSS surveys), to ensure the quality of the final product;

- In the strongly sculptured and forested area, the differences in registered elevation for the CHC scanner were much higher than in the less diverse area (3.3 vs. 2.0 m). For YSM, the relationship was the opposite, but the differences were smaller (about 0.2 m). For L1, the differences between the survey fields were insignificant;

- The use of data with different resolutions introduces errors related to the relief of the terrain and to the random location of height points in the LiDAR-UAV data;

- Due to technical considerations, relatively small areas can be subjected to LiDAR-UAV analyses compared to ALS data;

- Future research should expand the range of sensors used; further comparative studies of different market solutions are advisable.

Author Contributions

Conceptualization, P.B. and M.S.; methodology, M.S. and P.B.; software, M.S.; validation, M.S. and P.B.; formal analysis, W.K.; investigation, P.B., M.S. and W.K.; resources, M.S. and P.B.; data curation, M.S. and P.B.; writing—original draft preparation, P.B. and M.S.; writing—review and editing, P.B. and M.S.; visualization, M.S. and P.B.; supervision, W.K.; project administration, P.B.; funding acquisition, W.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to data volume size and technical repository limitations.

Acknowledgments

The authors would like to thank Arkadiusz Piwowarczyk, UAV Remote Sensing Specialist at NaviGate Sp. z o.o., for providing the YellowScan Mapper and CHC sensors for field testing, and Marcin Maciejewski from the Institute of Archaeology at Maria Curie-Skłodowska University for providing the DJI Zenmuse L1 sensor for field testing. The DJI Zenmuse L1 sensor was purchased under the project DARIAH-PL Digital Research Infrastructure for Arts and Humanities POIR.04.02.00-00-D006/20-00.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wezyk, P.; Hawryło, P.; Zięba-Kulawik, K. Post-Hurricane Forest Mapping in Bory Tucholskie (Northern Poland) Using Random Forest Based up-Scaling Approach of ALS and Photogrammetry-Based CHM to KOMPSAT-3 and PlanetScope Imagery. In Trends in Earth Observation: Earth Observation Advancements in a Changing World; Chirici, D.G., Gianinetto, M., Eds.; Associazione Italiana di Telerilevamento: Roma, Italy, 2019.

- De Jong, S.M.; van der Meer, F.D. Remote Sensing Image Analysis: Including the Spatial Domain; Kluwer Academic: Dordrecht, The Netherlands, 2004; ISBN 978-1-4020-2559-4. [Google Scholar]

- Turner, W. Sensing Biodiversity. Science 2014, 346, 301–302. [Google Scholar] [CrossRef] [PubMed]

- Paris, C.; Kelbe, D.; van Aardt, J.; Bruzzone, L. A Novel Automatic Method for the Fusion of ALS and TLS LiDAR Data for Robust Assessment of Tree Crown Structure. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3679–3693. [Google Scholar] [CrossRef]

- Wagner, W.; Ullrich, A.; Ducic, V.; Melzer, T.; Studnicka, N. Gaussian Decomposition and Calibration of a Novel Small-Footprint Full-Waveform Digitising Airborne Laser Scanner. ISPRS J. Photogramm. Remote Sens. 2006, 60, 100–112. [Google Scholar] [CrossRef]

- Yin, T.; Lauret, N.; Gastellu-Etchegorry, J.-P. Simulation of Satellite, Airborne and Terrestrial LiDAR with DART (II): ALS and TLS Multi-Pulse Acquisitions, Photon Counting, and Solar Noise. Remote Sens. Environ. 2016, 184, 454–468. [Google Scholar] [CrossRef]

- Domazetović, F.; Šiljeg, A.; Marić, I.; Panđa, L. A New Systematic Framework for Optimization of Multi-Temporal Terrestrial LiDAR Surveys over Complex Gully Morphology. Remote Sens. 2022, 14, 3366.

- Heritage, G.; Hetherington, D. Towards a Protocol for Laser Scanning in Fluvial Geomorphology. Earth Surf. Process. Landf. 2007, 32, 66–74.

- Migoń, P.; Jancewicz, K.; Kasprzak, M. Inherited Periglacial Geomorphology of a Basalt Hill in the Sudetes, Central Europe: Insights from LiDAR-Aided Landform Mapping. Permafr. Periglac. Process. 2020, 31, 587–597.

- Polat, N.; Uysal, M. Investigating Performance of Airborne LiDAR Data Filtering Algorithms for DTM Generation. Measurement 2015, 63, 61–68.

- Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry Applications of UAVs in Europe: A Review. Int. J. Remote Sens. 2017, 38, 2427–2447.

- Esin, A.İ.; Akgul, M.; Akay, A.O.; Yurtseven, H. Comparison of LiDAR-Based Morphometric Analysis of a Drainage Basin with Results Obtained from UAV, TOPO, ASTER and SRTM-Based DEMs. Arab. J. Geosci. 2021, 14, 340.

- Brede, B.; Lau, A.; Bartholomeus, H.M.; Kooistra, L. Comparing RIEGL RiCOPTER UAV LiDAR Derived Canopy Height and DBH with Terrestrial LiDAR. Sensors 2017, 17, 2371.

- Gao, M.; Yang, F.; Wei, H.; Liu, X. Individual Maize Location and Height Estimation in Field from UAV-Borne LiDAR and RGB Images. Remote Sens. 2022, 14, 2292.

- Thiel, C.; Schmullius, C. Comparison of UAV Photograph-Based and Airborne Lidar-Based Point Clouds over Forest from a Forestry Application Perspective. Int. J. Remote Sens. 2017, 38, 2411–2426.

- Ressl, C.; Brockmann, H.; Mandlburger, G.; Pfeifer, N. Dense Image Matching vs. Airborne Laser Scanning—Comparison of Two Methods for Deriving Terrain Models. Photogramm. Fernerkund. Geoinf. 2016, 2, 57–73.

- Trepekli, K.; Balstrøm, T.; Friborg, T.; Fog, B.; Allotey, A.N.; Kofie, R.Y.; Møller-Jensen, L. UAV-Borne, LiDAR-Based Elevation Modelling: A Method for Improving Local-Scale Urban Flood Risk Assessment. Nat. Hazards 2022, 113, 423–451.

- Štroner, M.; Urban, R.; Línková, L. A New Method for UAV Lidar Precision Testing Used for the Evaluation of an Affordable DJI ZENMUSE L1 Scanner. Remote Sens. 2021, 13, 4811.

- Yan, K.; Di Baldassarre, G.; Solomatine, D.P.; Schumann, G.J.-P. A Review of Low-Cost Space-Borne Data for Flood Modelling: Topography, Flood Extent and Water Level. Hydrol. Process. 2015, 29, 3368–3387.

- Zhou, T.; Hasheminasab, S.M.; Habib, A. Tightly-Coupled Camera/LiDAR Integration for Point Cloud Generation from GNSS/INS-Assisted UAV Mapping Systems. ISPRS J. Photogramm. Remote Sens. 2021, 180, 336–356.

- Scheeres, J.; de Jong, J.; Brede, B.; Brancalion, P.H.S.; Broadbent, E.N.; Zambrano, A.M.A.; Gorgens, E.B.; Silva, C.A.; Valbuena, R.; Molin, P.; et al. Distinguishing Forest Types in Restored Tropical Landscapes with UAV-Borne LIDAR. Remote Sens. Environ. 2023, 290, 113533.

- Revenga, J.C.; Trepekli, K.; Oehmcke, S.; Jensen, R.; Li, L.; Igel, C.; Gieseke, F.C.; Friborg, T. Above-Ground Biomass Prediction for Croplands at a Sub-Meter Resolution Using UAV–LiDAR and Machine Learning Methods. Remote Sens. 2022, 14, 3912.

- Gaspari, F.; Ioli, F.; Barbieri, F.; Belcore, E.; Pinto, L. Integration of UAV-LiDAR and UAV-Photogrammetry for Infrastructure Monitoring and Bridge Assessment. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 43, 995–1002.

- Zhao, X.; Su, Y.; Hu, T.; Cao, M.; Liu, X.; Yang, Q.; Guan, H.; Liu, L.; Guo, Q. Analysis of UAV Lidar Information Loss and Its Influence on the Estimation Accuracy of Structural and Functional Traits in a Meadow Steppe. Ecol. Indic. 2022, 135, 108515.

- Levick, S.R.; Whiteside, T.; Loewensteiner, D.A.; Rudge, M.; Bartolo, R. Leveraging TLS as a Calibration and Validation Tool for MLS and ULS Mapping of Savanna Structure and Biomass at Landscape-Scales. Remote Sens. 2021, 13, 257.

- Niculiță, M. Geomorphometric Methods for Burial Mound Recognition and Extraction from High-Resolution LiDAR DEMs. Sensors 2020, 20, 1192.

- Mitasova, H.; Hardin, E.; Starek, M.J.; Harmon, R.S.; Overton, M.F. Landscape Dynamics from LiDAR Data Time Series. In Geomorphometry; Geomorphometry.org: Redlands, CA, USA, 2011; Volume 2011, pp. 3–6.

- Singh, S.; Vinod Kumar, K.; Jagannadha Rao, M. Utilization of LiDAR DTM for Systematic Improvement in Mapping and Classification of Coastal Micro-Geomorphology. J. Indian Soc. Remote Sens. 2020, 48, 805–816.

- Migoń, P.; Kasprzak, M.; Traczyk, A. How High-Resolution DEM Based on Airborne LiDAR Helped to Reinterpret Landforms—Examples from the Sudetes, SW Poland. Landf. Anal. 2013, 22, 89–101.

- Munir, N.; Awrangjeb, M.; Stantic, B. Power Line Extraction and Reconstruction Methods from Laser Scanning Data: A Literature Review. Remote Sens. 2023, 15, 973.

- Yin, H.; Lin, Z.; Yeoh, J.K.W. Semantic Localization on BIM-Generated Maps Using a 3D LiDAR Sensor. Autom. Constr. 2023, 146, 104641.

- Catbas, N.; Avci, O. A Review of Latest Trends in Bridge Health Monitoring. In Proceedings of the Institution of Civil Engineers—Bridge Engineering; ICE Publishing: London, UK, 2023; Volume 176, pp. 76–91.

- Ceccherini, G.; Girardello, M.; Beck, P.S.A.; Migliavacca, M.; Duveiller, G.; Dubois, G.; Avitabile, V.; Battistella, L.; Barredo, J.I.; Cescatti, A. Spaceborne LiDAR Reveals the Effectiveness of European Protected Areas in Conserving Forest Height and Vertical Structure. Commun. Earth Environ. 2023, 4, 97.

- García-López, S.; Vélez-Nicolás, M.; Zarandona-Palacio, P.; Curcio, A.C.; Ruiz-Ortiz, V.; Barbero, L. UAV-Borne LiDAR Revolutionizing Groundwater Level Mapping. Sci. Total Environ. 2023, 859, 160272.

- Pereira, L.G.; Fernandez, P.; Mourato, S.; Matos, J.; Mayer, C.; Marques, F. Quality Control of Outsourced LiDAR Data Acquired with a UAV: A Case Study. Remote Sens. 2021, 13, 419.

- Bryndal, T.; Kroczak, R. Reconstruction and Characterization of the Surface Drainage System Functioning during Extreme Rainfall: The Analysis with Use of the ALS-LIDAR Data—The Case Study in Two Small Flysch Catchments (Outer Carpathian, Poland). Environ. Earth Sci. 2019, 78, 215.

- Escobar Villanueva, J.R.; Iglesias Martínez, L.; Pérez Montiel, J.I. DEM Generation from Fixed-Wing UAV Imaging and LiDAR-Derived Ground Control Points for Flood Estimations. Sensors 2019, 19, 3205.

- Pellicani, R.; Argentiero, I.; Manzari, P.; Spilotro, G.; Marzo, C.; Ermini, R.; Apollonio, C. UAV and Airborne LiDAR Data for Interpreting Kinematic Evolution of Landslide Movements: The Case Study of the Montescaglioso Landslide (Southern Italy). Geosciences 2019, 9, 248.

- Viedma, O.; Almeida, D.R.A.; Moreno, J.M. Postfire Tree Structure from High-Resolution LiDAR and RBR Sentinel 2A Fire Severity Metrics in a Pinus Halepensis-Dominated Burned Stand. Remote Sens. 2020, 12, 3554.

- Szypuła, B. Quality Assessment of DEM Derived from Topographic Maps for Geomorphometric Purposes. Open Geosci. 2019, 11, 843–865.

- Shaw, L.; Helmholz, P.; Belton, D.; Addy, N. Comparison of UAV LiDAR and Imagery for Beach Monitoring. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2-W13, 589–596.

- Różycka, M.; Migoń, P.; Michniewicz, A. Topographic Wetness Index and Terrain Ruggedness Index in Geomorphic Characterisation of Landslide Terrains, on Examples from the Sudetes, SW Poland. Z. Geomorphol. Suppl. Issues 2017, 61, 61–80.

- Lin, M.-L.; Chen, Y.-C.; Tseng, Y.-H.; Chang, K.-J.; Wang, K.-L. Investigation of Geological Structures Using UAV Lidar and Its Effects on the Failure Mechanism of Deep-Seated Landslide in Lantai Area, Taiwan. Appl. Sci. 2021, 11, 10052.

- Furtado, C.P.Q.; Medeiros, W.E.; Borges, S.V.; Lopes, J.A.G.; Bezerra, F.H.R.; Lima-Filho, F.P.; Maia, R.P.; Bertotti, G.; Auler, A.S.; Teixeira, W.L.E. The Influence of Subseismic-Scale Fracture Interconnectivity on Fluid Flow in Fracture Corridors of the Brejões Carbonate Karst System, Brazil. Mar. Pet. Geol. 2022, 141, 105689.

- Hout, R.; Maleval, V.; Mahe, G.; Rouvellac, E.; Crouzevialle, R.; Cerbelaud, F. UAV and LiDAR Data in the Service of Bank Gully Erosion Measurement in Rambla de Algeciras Lakeshore. Water 2020, 12, 2748.

- Prata, G.A.; Broadbent, E.N.; de Almeida, D.R.A.; St. Peter, J.; Drake, J.; Medley, P.; Corte, A.P.D.; Vogel, J.; Sharma, A.; Silva, C.A.; et al. Single-Pass UAV-Borne GatorEye LiDAR Sampling as a Rapid Assessment Method for Surveying Forest Structure. Remote Sens. 2020, 12, 4111.

- Pilarska, M.; Ostrowski, W.; Bakuła, K.; Górski, K.; Kurczyński, Z. The Potential of Light Laser Scanners Developed for Unmanned Aerial Vehicles—The Review and Accuracy. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLII-2-W2, 87–95.

- Hu, T.; Sun, X.; Su, Y.; Guan, H.; Sun, Q.; Kelly, M.; Guo, Q. Development and Performance Evaluation of a Very Low-Cost UAV-Lidar System for Forestry Applications. Remote Sens. 2021, 13, 77.

- Salach, A.; Bakuła, K.; Pilarska, M.; Ostrowski, W.; Górski, K.; Kurczyński, Z. Accuracy Assessment of Point Clouds from LiDAR and Dense Image Matching Acquired Using the UAV Platform for DTM Creation. ISPRS Int. J. Geo-Inf. 2018, 7, 342.

- Rodzik, J.; Furtak, T.; Zglobicki, W. The Impact of Snowmelt and Heavy Rainfall Runoff on Erosion Rates in a Gully System, Lublin Upland, Poland. Earth Surf. Process. Landf. 2009, 34, 1938–1950.

- Solon, J.; Borzyszkowski, J.; Bidłasik, M.; Richling, A.; Badora, K.; Balon, J.; Brzezińska-Wójcik, T.; Chabudziński, Ł.; Dobrowolski, R.; Grzegorczyk, I.; et al. Physico-Geographical Mesoregions of Poland: Verification and Adjustment of Boundaries on the Basis of Contemporary Spatial Data. Geogr. Pol. 2018, 91, 143–170.

- Li, B.; Lu, H.; Wang, H.; Qi, J.; Yang, G.; Pang, Y.; Dong, H.; Lian, Y. Terrain-Net: A Highly-Efficient, Parameter-Free, and Easy-to-Use Deep Neural Network for Ground Filtering of UAV LiDAR Data in Forested Environments. Remote Sens. 2022, 14, 5798.

- Jakovljevic, G.; Govedarica, M.; Alvarez-Taboada, F.; Pajic, V. Accuracy Assessment of Deep Learning Based Classification of LiDAR and UAV Points Clouds for DTM Creation and Flood Risk Mapping. Geosciences 2019, 9, 323.

- Huising, E.J.; Gomes Pereira, L.M. Errors and Accuracy Estimates of Laser Data Acquired by Various Laser Scanning Systems for Topographic Applications. ISPRS J. Photogramm. Remote Sens. 1998, 53, 245–261.

- Kamp, N.; Krenn, P.; Avian, M.; Sass, O. Comparability of Multi-Temporal DTMs Derived from Different LiDAR Platforms: Error Sources and Uncertainties in the Application of Geomorphic Impact Studies. Earth Surf. Process. Landf. 2023, 48, 1152–1175.

- Magalhães, D.M.; Moura, A.C.M. Análise da Morfologia de Modelos Digitais de Superfície Gerados por VANT [Analysis of the Morphology of Digital Surface Models Generated by UAV]. Rev. Bras. Cartogr. 2021, 73, 707–722.

- Bakuła, K.; Ostrowski, W.; Pilarska, M.; Szender, M.; Kurczyński, Z. Evaluation and Calibration of Fixed-Wing Multisensor UAV Mobile Mapping System: Improved Results. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2-W13, 189–195.

- Fuad, N.A.; Ismail, Z.; Majid, Z.; Darwin, N.; Ariff, M.F.M.; Idris, K.M.; Yusoff, A.R. Accuracy Evaluation of Digital Terrain Model Based on Different Flying Altitudes and Conditional of Terrain Using UAV LiDAR Technology. IOP Conf. Ser. Earth Environ. Sci. 2018, 169, 012100.

- Room, M.H.M.; Ahmad, A.; Rosly, M.A. Assessment of Different Unmanned Aerial Vehicle System for Production of Photogrammerty Products. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-4-W16, 549–554.

- Bartholomeus, H.; Calders, K.; Whiteside, T.; Terryn, L.; Krishna Moorthy, S.M.; Levick, S.R.; Bartolo, R.; Verbeeck, H. Evaluating Data Inter-Operability of Multiple UAV–LiDAR Systems for Measuring the 3D Structure of Savanna Woodland. Remote Sens. 2022, 14, 5992.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).