Efficient-Lightweight YOLO: Improving Small Object Detection in YOLO for Aerial Images

Abstract

1. Introduction

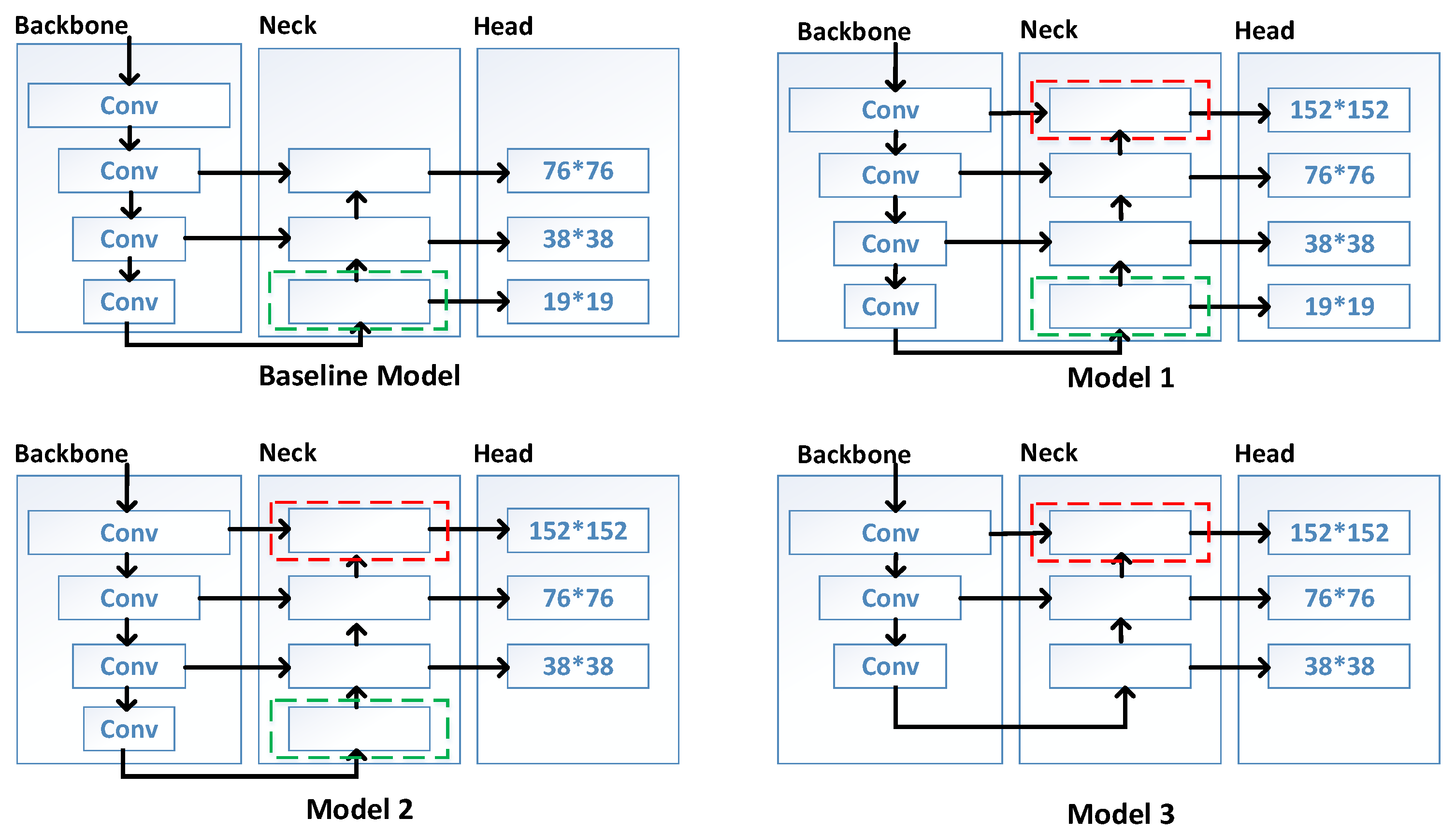

- We modified the YOLOv5 architecture to introduce high-resolution, low-level feature maps. Over several rounds of experiments we evaluated three candidate architectures, analyzed which architectural characteristics explained their stronger or weaker performance, and selected the best-performing one. This architecture gave the largest gain in small-object detection precision while adding only a marginal amount of computational overhead.

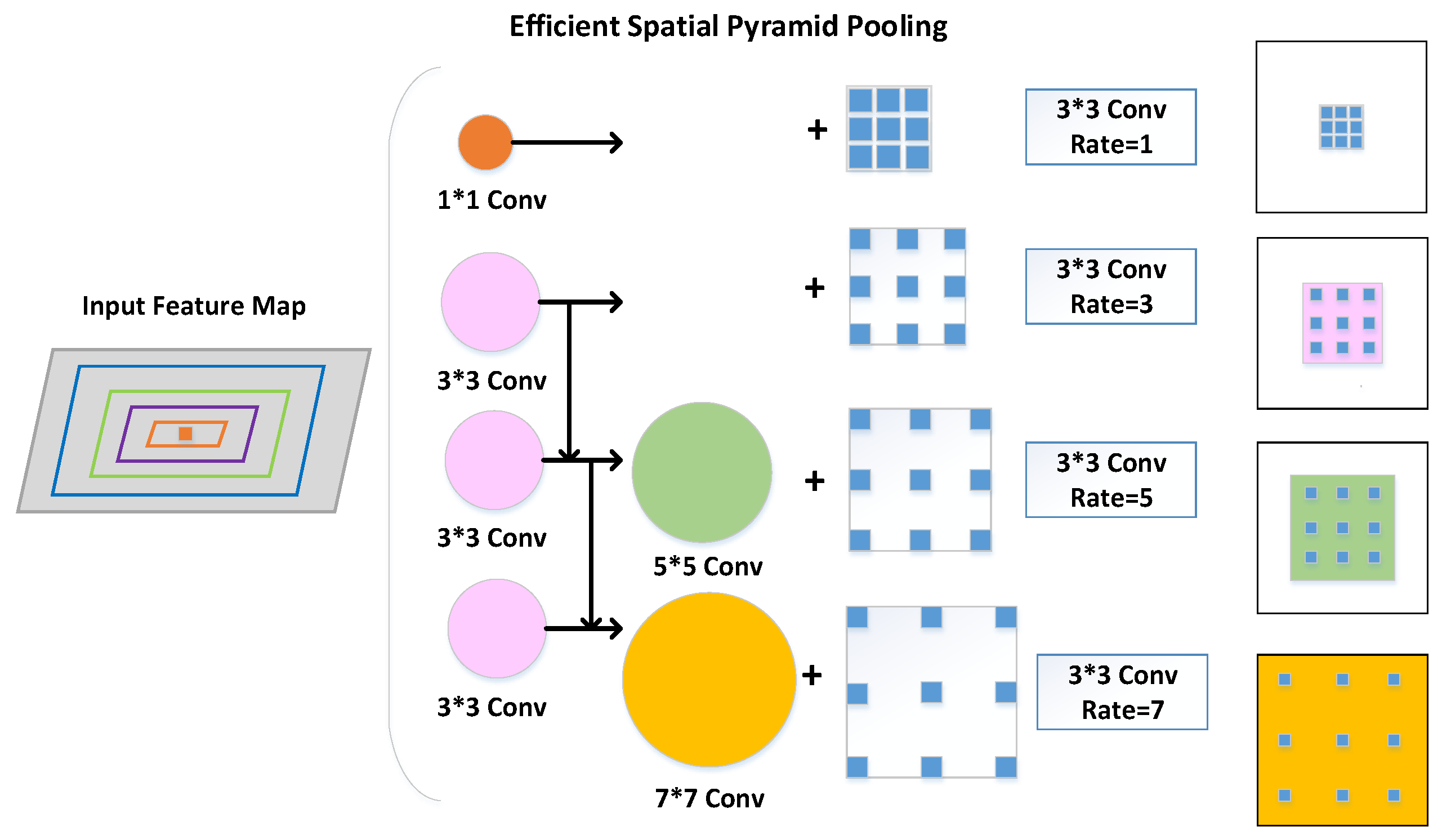

- We designed ESPP, a pooling module inspired by the human visual perception system, to replace the original SPP module; it strengthens the model's ability to extract features from small objects.

- We replaced the detector's original localization loss with α-CIoU. By tuning its power parameter α, α-CIoU mitigates the imbalance between positive and negative samples in the bounding-box regression task, allowing the detector to locate small objects faster and more precisely.

- Our proposed embeddable S-scale model, EL-YOLOv5s, attained an APS of 10.8% on the DIOR dataset and 10.7% on the VisDrone dataset. To our knowledge, this is the highest small-object accuracy achieved to date among the available lightweight models, demonstrating the effectiveness of our approach.
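The α-CIoU contribution above is described only in words. As a minimal sketch, assuming axis-aligned boxes given as (x1, y1, x2, y2) with positive width and height, the function below evaluates the power form of CIoU described by He et al. (Alpha-IoU, in the reference list): 1 − IoU^α + (ρ²/c²)^α + (βv)^α, where ρ is the distance between the box centres, c is the diagonal of the smallest enclosing box, v is the aspect-ratio consistency term and β is its weight. The function name and the default α = 3 are illustrative choices, not values taken from this paper.

```python
import math


def alpha_ciou_loss(pred, target, alpha=3.0, eps=1e-9):
    """Illustrative alpha-CIoU loss for two boxes (x1, y1, x2, y2).

    Power form: 1 - IoU^alpha + (rho^2 / c^2)^alpha + (beta * v)^alpha.
    The default alpha is an assumption, not the value used by EL-YOLOv5.
    """
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target

    # Intersection, union and IoU
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Squared distance between the box centres (rho^2)
    rho2 = ((px1 + px2 - tx1 - tx2) ** 2 + (py1 + py2 - ty1 - ty2) ** 2) / 4.0

    # Squared diagonal of the smallest enclosing box (c^2)
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency term v and its trade-off weight beta
    v = (4 / math.pi ** 2) * (math.atan(tw / th) - math.atan(pw / ph)) ** 2
    beta = v / ((1 - iou) + v + eps)

    return 1 - iou ** alpha + (rho2 / c2) ** alpha + (beta * v) ** alpha


if __name__ == "__main__":
    print(alpha_ciou_loss((0, 0, 2, 2), (1, 1, 3, 3), alpha=1.0))
    print(alpha_ciou_loss((0, 0, 2, 2), (1, 1, 3, 3), alpha=3.0))
```

With α = 1 the expression reduces to the ordinary CIoU loss; other values of α change how strongly the IoU, distance and aspect-ratio terms are weighted relative to one another.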

2. Related Work

2.1. General Object Detection

2.2. Aerial Image Object Detection

3. Materials and Methods

3.1. Fundamental Models

3.1.1. Baseline Model

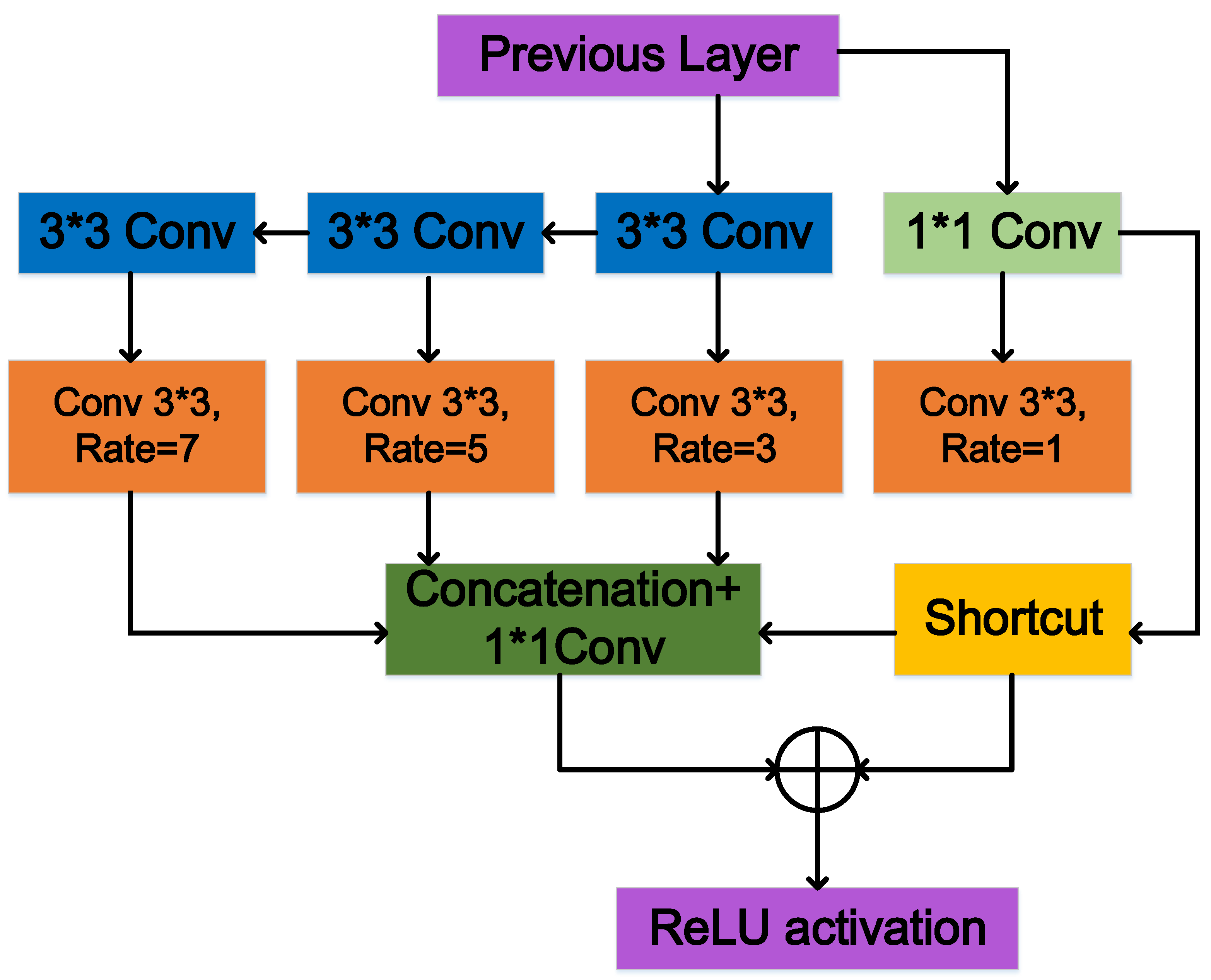

3.1.2. Receptive Field Block

3.2. Proposed Model

3.2.1. Model Architecture

3.2.2. Efficient Spatial Pyramid Pooling

| Algorithm 1 Efficient Spatial Pyramid Pooling (ESPP) |
|---|
| Input: the input feature layer, x; the number of input channels of x, Cin; the number of output channels, Cout; the parameter that controls the size of the model, a. |
| Output: the output feature layer, out. |
| 1: Control the number of output feature channels of each convolution with a: |
| 2: Cout1 = Cout / a. // In general, a is set to 4 or 8, which gives good control over the number of model parameters. |
| 3: Apply an ordinary 1×1 convolution to x to obtain out1. |
| 4: Apply an ordinary 3×3 convolution to x to obtain out2. |
| 5: Stacking two 3×3 convolutions gives the same receptive field as one 5×5 convolution: |
| 6: Apply an ordinary 3×3 convolution to out2 to obtain out3. |
| 7: Stacking three 3×3 convolutions gives the same receptive field as one 7×7 convolution: |
| 8: Apply an ordinary 3×3 convolution to out3 to obtain out4. |
| 9: Apply a 3×3 atrous convolution with an atrous rate of 1 to out1 to obtain the first branch output, out5. |
| 10: Apply a 3×3 atrous convolution with an atrous rate of 3 to out2 to obtain the second branch output, out6. |
| 11: Apply a 3×3 atrous convolution with an atrous rate of 5 to out3 to obtain the third branch output, out7. |
| 12: Apply a 3×3 atrous convolution with an atrous rate of 7 to out4 to obtain the fourth branch output, out8. |
| 13: Unify the four branch outputs into the same dimension: |
| 14: out9 = Concat([out5, out6, out7, out8], dimension). |
| 15: Integrate feature information: |
| 16: Apply an ordinary 1×1 convolution to out1 to obtain the shortcut. |
| 17: net = out9 * 0.8 + shortcut. |
| 18: Get the final output: |
| 19: out = ReLU(net). // Adds non-linearity. |
| 20: return out. |
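Algorithm 1 relies on stacked 3×3 convolutions followed by atrous convolutions with growing rates. The sketch below, assuming stride-1 convolutions and the kernel and dilation sequence exactly as listed in steps 3 to 12, computes each branch's receptive field with the standard relation k_eff = d(k − 1) + 1, the per-branch channel width of step 2, and the fusion of steps 17 to 19. The helper names are hypothetical, feature maps are reduced to flat lists of numbers, and the 0.8 scale is taken from step 17; this is an illustration, not the authors' implementation.

```python
def effective_kernel(k: int, dilation: int) -> int:
    """Spatial span of a k x k kernel with the given atrous (dilation) rate."""
    return dilation * (k - 1) + 1


def receptive_field(layers) -> int:
    """Receptive field of a stack of stride-1 convolutions given as (k, dilation)."""
    rf = 1
    for k, d in layers:
        rf += effective_kernel(k, d) - 1
    return rf


# Branch layouts as read from Algorithm 1 (steps 3-12).
ESPP_BRANCHES = {
    "branch1": [(1, 1), (3, 1)],                  # 1x1 conv -> 3x3 atrous, rate 1
    "branch2": [(3, 1), (3, 3)],                  # 3x3 conv -> 3x3 atrous, rate 3
    "branch3": [(3, 1), (3, 1), (3, 5)],          # two 3x3 convs -> 3x3 atrous, rate 5
    "branch4": [(3, 1), (3, 1), (3, 1), (3, 7)],  # three 3x3 convs -> 3x3 atrous, rate 7
}


def branch_channels(c_out: int, a: int) -> int:
    """Per-branch width Cout1 = Cout / a (step 2); requires exact division."""
    if c_out % a:
        raise ValueError("Cout should be divisible by a")
    return c_out // a


def fuse(concat_feat, shortcut, scale=0.8):
    """Steps 17-19 on flat lists: out = ReLU(scale * out9 + shortcut)."""
    return [max(0.0, scale * c + s) for c, s in zip(concat_feat, shortcut)]


if __name__ == "__main__":
    for name, layers in ESPP_BRANCHES.items():
        print(name, receptive_field(layers))
    print(branch_channels(512, 4), branch_channels(512, 8))
```

Under these assumptions the four branches cover receptive fields of 3, 9, 15 and 21 pixels, so the module mixes fine local context with progressively wider context at little extra cost, which is the intent of combining stacked small kernels with atrous convolution.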

3.2.3. Loss Function

4. Experiments

4.1. Experimental Setup

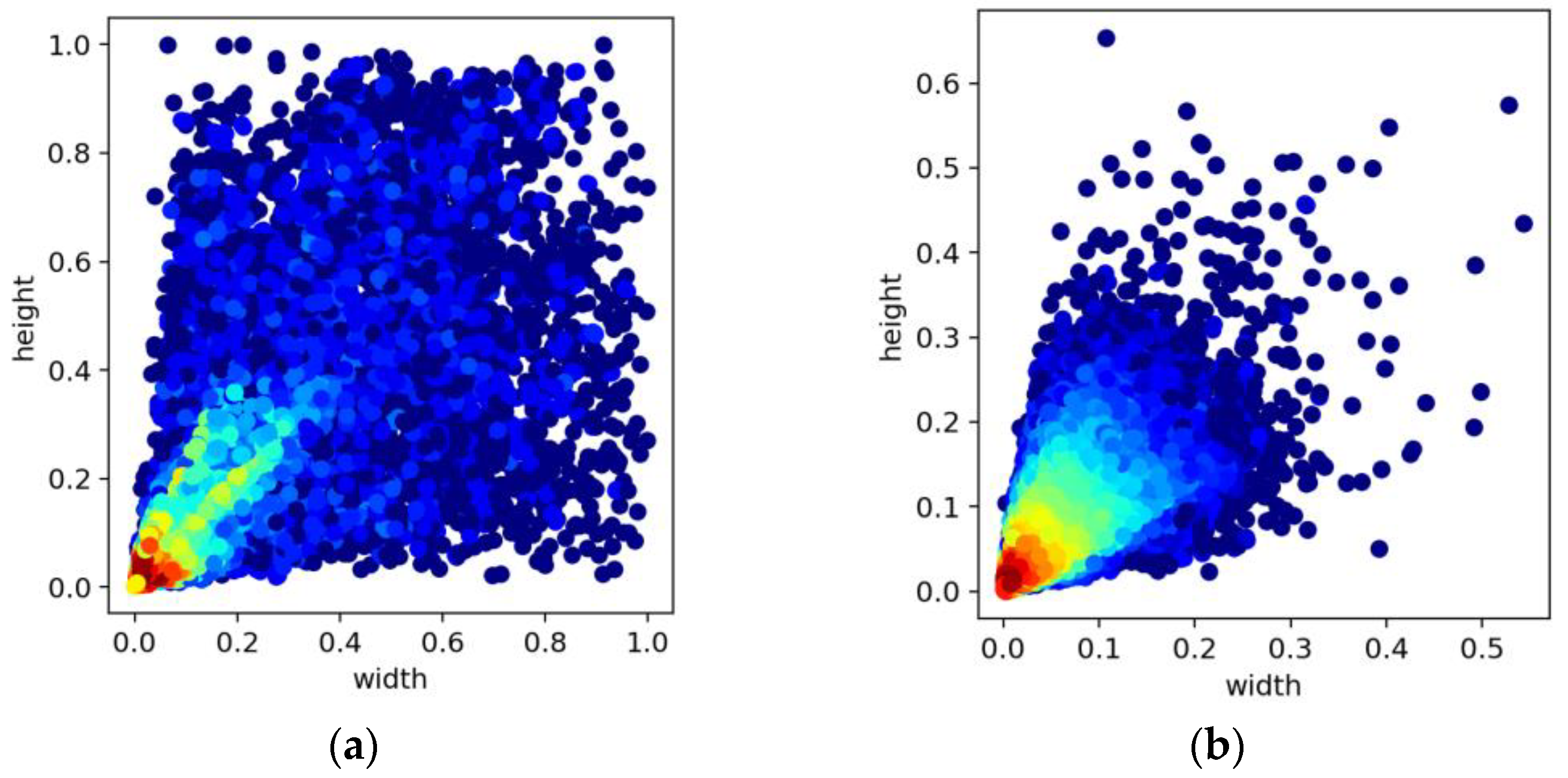

4.1.1. Datasets and Evaluation Metrics

4.1.2. Implementation Details

4.2. Experimental Results

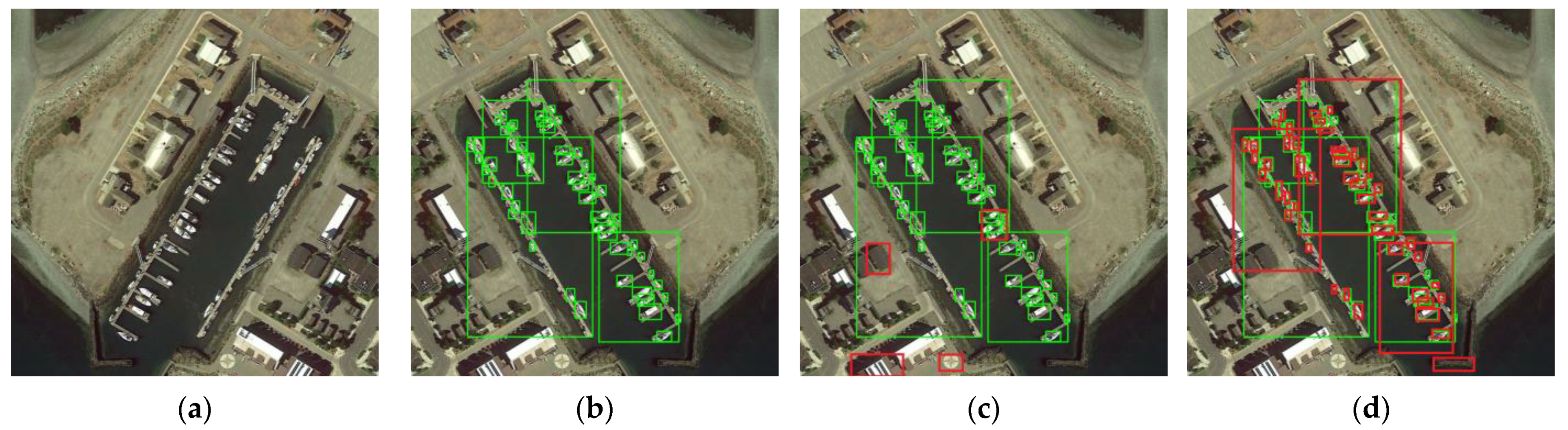

4.2.1. Experimental Results of the Model Architecture

4.2.2. Experimental Results of ESPP

4.2.3. Experimental Results of EL-YOLOv5

4.3. Ablation Experiments

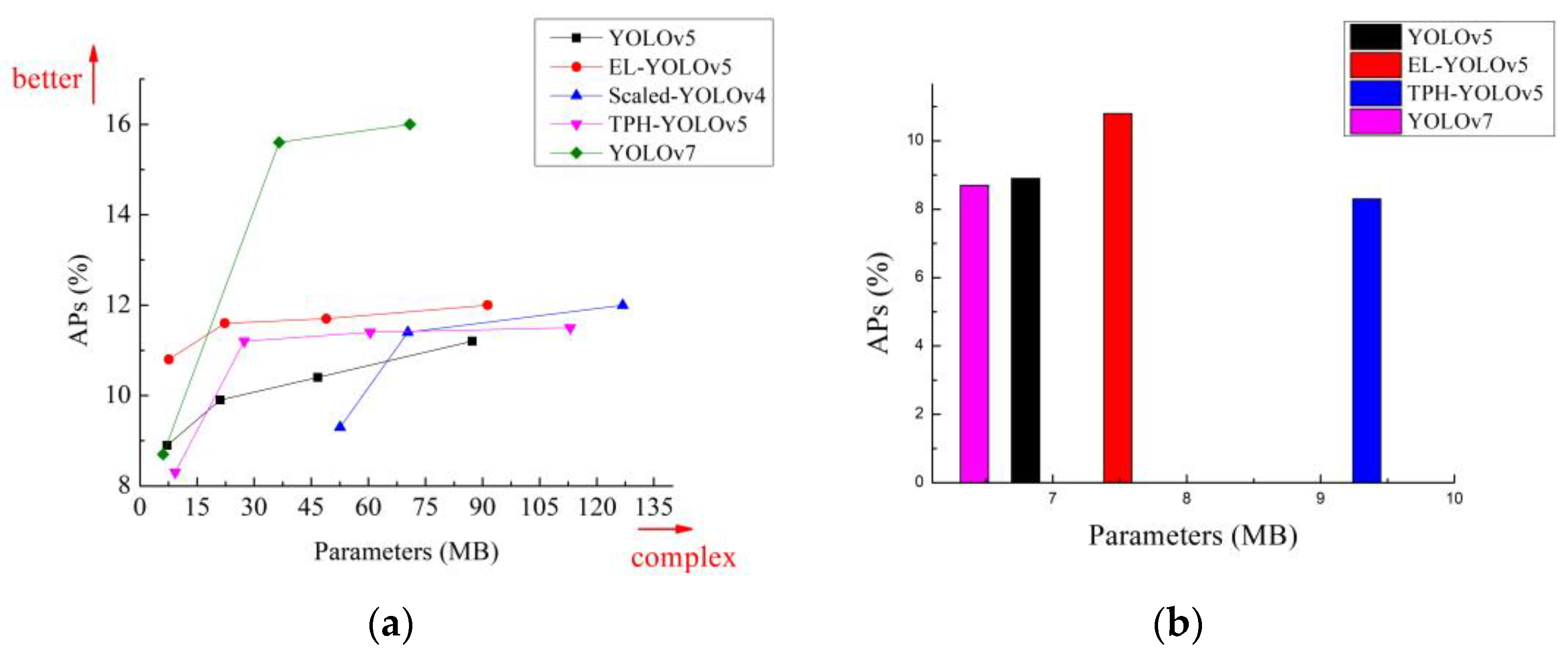

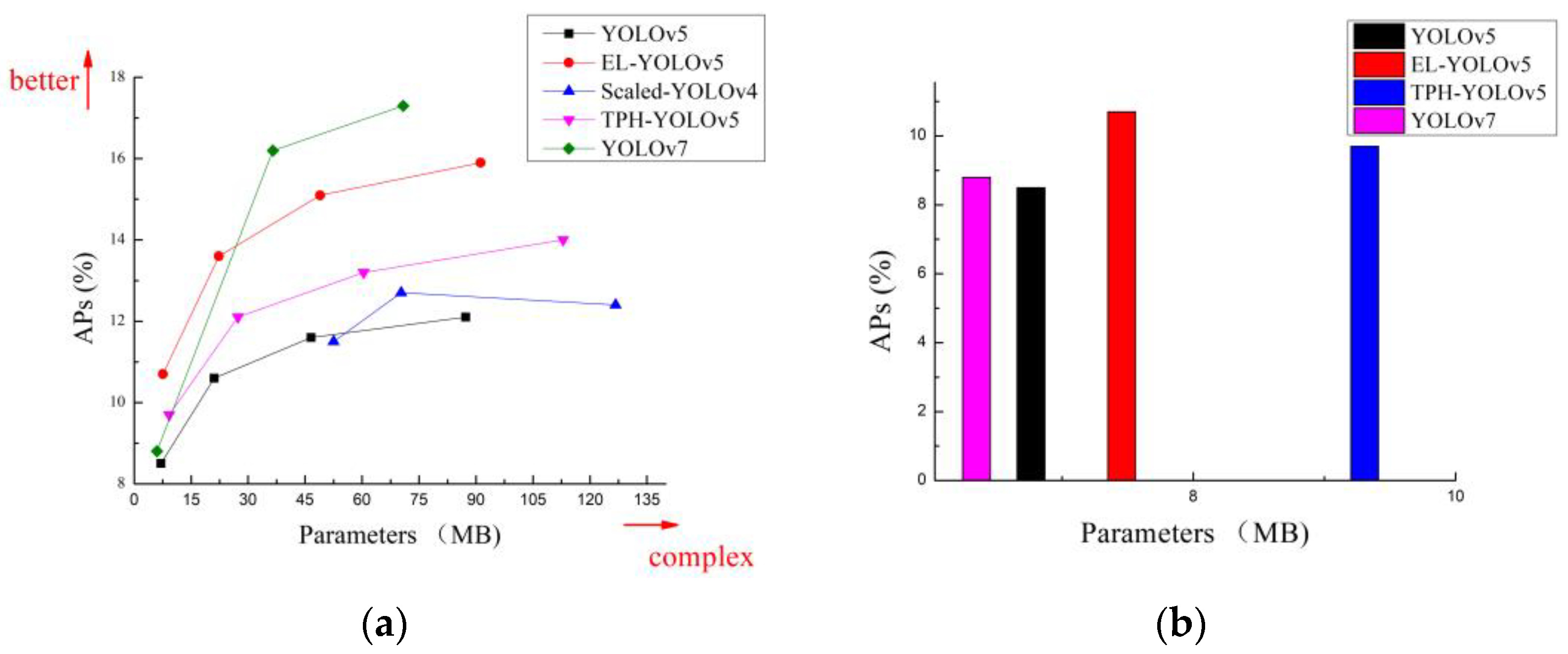

4.4. Comparisons with Other SOTA Detectors

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

- Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

- Ma, W.; Guo, Q.; Wu, Y.; Zhao, W.; Zhang, X.; Jiao, L. A Novel Multi-Model Decision Fusion Network for Object Detection in Remote Sensing Images. Remote Sens. 2019, 11, 737.

- Xie, W.Y.; Yang, J.; Lei, J.; Li, Y.; Du, Q.; He, G. SRUN: Spectral Regularized Unsupervised Networks for Hyperspectral Target Detection. IEEE Trans. Geosci. Remote Sens. 2020, 58, 1463–1474.

- Zhu, D.J.; Xia, S.X.; Zhao, J.Q.; Zhou, Y.; Jian, M.; Niu, Q.; Yao, R.; Chen, Y. Diverse sample generation with multi-branch conditional generative adversarial network for remote sensing objects detection. Neurocomputing 2020, 381, 40–51.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Everingham, M.; Eslami, S.M.A.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes Challenge: A Retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 99, 2999–3007.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; Volume 2017, pp. 6517–6525.

- Farhadi, A.; Redmon, J. YOLOv3: An Incremental Improvement. In Proceedings of the Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Jocher, G.; Stoken, A.; Borovec, J.; NanoCode012; Chaurasia, A.; Xie, T.; Liu, C.; Abhiram, V.; Laughing; Tkianai; et al. Ultralytics/yolov5, v5.5-YOLOv5-P6 1280 Models, AWS, Supervisely and YouTube Integrations; Version 5.5; CERN Data Centre & Invenio: Prévessin-Moëns, France, 2022.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50× fewer parameters and <0.5MB model size. arXiv 2016, arXiv:1602.07360.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 8691, 346–361.

- Zheng, Z.; Wang, P.; Ren, D.; Liu, W.; Ye, R.; Hu, Q.; Zuo, W. Enhancing Geometric Factors in Model Learning and Inference for Object Detection and Instance Segmentation. IEEE Trans. Cybern. 2021, 52, 8574–8586.

- He, J.; Erfani, S.; Ma, X.; Bailey, J.; Chi, Y.; Hua, X.S. Alpha-IoU: A Family of Power Intersection over Union Losses for Bounding Box Regression. arXiv 2022, arXiv:2110.13675.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into High Quality Object Detection. arXiv 2017, arXiv:1712.00726.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector; Springer: Cham, Switzerland, 2016; Volume 9905, pp. 21–37.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Wang, C.Y.; Liao, H.Y.M.; Yeh, I.H.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W. CSPNet: A New Backbone that can Enhance Learning Capability of CNN. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 1571–1580.

- Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. RepVGG: Making VGG-style ConvNets Great Again. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13728–13737.

- Wang, D.; Liu, Z.; Gu, X.; Wu, W.; Chen, Y.; Wang, L. Automatic Detection of Pothole Distress in Asphalt Pavement Using Improved Convolutional Neural Networks. Remote Sens. 2022, 14, 3892.

- Kim, M.; Jeong, J.; Kim, S. ECAP-YOLO: Efficient Channel Attention Pyramid YOLO for Small Object Detection in Aerial Image. Remote Sens. 2021, 13, 4851.

- Liu, Z.; Gu, X.; Chen, J.; Wang, D.; Chen, Y.; Wang, L. Automatic recognition of pavement cracks from combined GPR B-scan and C-scan images using multiscale feature fusion deep neural networks. Autom. Constr. 2023, 146, 104698.

- Wu, J.; Shen, T.; Wang, Q.; Tao, Z.; Zeng, K.; Song, J. Local Adaptive Illumination-Driven Input-Level Fusion for Infrared and Visible Object Detection. Remote Sens. 2023, 15, 660.

- Cao, Y.; He, Z.; Wang, L.; Wang, W.; Yuan, Y.; Zhang, D.; Zhang, J.; Zhu, P.; Van Gool, L.; Han, J.; et al. VisDrone-DET2021: The Vision Meets Drone Object Detection Challenge Results. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, BC, Canada, 11–17 October 2021; pp. 2847–2854.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5987–5995.

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-captured Scenarios. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, BC, Canada, 11–17 October 2021; pp. 2778–2788.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the ECCV 2018, 15th European Conference, Munich, Germany, 8–14 September 2018.

- Wan, J.; Zhang, B.; Zhao, Y.; Du, Y.; Tong, Z. VistrongerDet: Stronger Visual Information for Object Detection in VisDrone Images. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, BC, Canada, 11–17 October 2021; pp. 2820–2829.

- Liu, S.; Huang, D.; Wang, Y. Receptive Field Block Net for Accurate and Fast Object Detection. arXiv 2018, arXiv:1711.07767.

- Yu, J.H.; Jiang, Y.N.; Wang, Z.Y.; Cao, Z.M.; Huang, T. UnitBox: An Advanced Object Detection Network. In Proceedings of the 24th ACM International Conference on Multimedia, New York, NY, USA, 15–19 October 2016.

- Chen, Z.; Zhang, F.; Liu, H.; Wang, L.; Zhang, Q.; Guo, L. Real-time detection algorithm of helmet and reflective vest based on improved YOLOv5. J. Real-Time Image Process. 2023, 20, 4.

- Du, D.; Wen, L.; Zhu, P.; Fan, H.; Hu, Q.; Ling, H.; Shah, M.; Pan, J.; Al-Ali, A.; Mohamed, A.; et al. VisDrone-CC2020: The Vision Meets Drone Crowd Counting Challenge Results. arXiv 2021, arXiv:2107.08766.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. Scaled-YOLOv4: Scaling Cross Stage Partial Network. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13024–13033.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

| Dataset | < 32² pixels | 32²–96² pixels | > 96² pixels |
|---|---|---|---|
| VisDrone | 164,627 | 94,124 | 16,241 |
| DIOR | 12,792 | 7972 | 5966 |
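The table above buckets objects by pixel area at 32² and 96², the thresholds used by the COCO small/medium/large convention behind APS, APM and APL. As a minimal sketch, the hypothetical helpers below assign a box to a bucket and compute the share of small objects from the counts in the table; how an area exactly equal to a threshold is assigned is an assumption here, since conventions differ between tools.

```python
SMALL_MAX = 32 ** 2   # 1024 px^2
MEDIUM_MAX = 96 ** 2  # 9216 px^2


def size_bucket(width: float, height: float) -> str:
    """Return 'small', 'medium' or 'large' for a box size in pixels (hypothetical helper)."""
    area = width * height
    if area < SMALL_MAX:
        return "small"
    if area <= MEDIUM_MAX:
        return "medium"
    return "large"


def small_share(counts: dict) -> float:
    """Fraction of all objects that fall in the small bucket."""
    return counts["small"] / sum(counts.values())


# Instance counts copied from the table above.
OBJECT_COUNTS = {
    "VisDrone": {"small": 164_627, "medium": 94_124, "large": 16_241},
    "DIOR": {"small": 12_792, "medium": 7_972, "large": 5_966},
}

if __name__ == "__main__":
    for name, counts in OBJECT_COUNTS.items():
        print(f"{name}: {small_share(counts):.1%} small objects")
```

From these counts, roughly 59.9% of VisDrone objects and 47.9% of DIOR objects are small, which is why APS is the headline metric for both datasets.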

| Method | AP50 (%) | AP75 (%) | AP50:95 (%) | APS (%) | Parameters (M) |
|---|---|---|---|---|---|
| Baseline Model | 79.4 | 61.8 | 57.1 | 8.9 | 7.11 |
| Model 1 | 78.7 | 59.0 | 54.7 | 11.1 | 7.25 |
| Model 2 | 77.5 | 58.4 | 53.9 | 10.5 | 5.44 |
| Model 3 | 65.2 | 44.4 | 42.3 | 8.7 | 1.77 |

| Method | AP50 (%) | AP75 (%) | AP50:95 (%) | APS (%) | Parameters (M) |
|---|---|---|---|---|---|
| Baseline Model | 27.4 | 14.2 | 14.9 | 8.5 | 7.08 |
| Model 1 | 31.6 | 17.2 | 17.8 | 10.6 | 7.22 |
| Model 2 | 31.3 | 16.4 | 17.3 | 10.6 | 5.43 |
| Model 3 | 30.9 | 15.5 | 16.6 | 10.3 | 1.75 |

| Method | AP50 (%) | AP75 (%) | AP50:95 (%) | APS (%) | Parameters (M) |
|---|---|---|---|---|---|
| YOLOv5s + SPP | 79.4 | 61.8 | 57.1 | 8.9 | 7.11 |
| YOLOv5s + SPPF | 79.2 | 61.7 | 57.1 | 9.1 | 7.11 |
| YOLOv5s + SimSPPF | 79.4 | 61.7 | 57.1 | 8.8 | 7.11 |
| YOLOv5s + SPPCSPC | 79.1 | 61.4 | 56.6 | 8.7 | 10.04 |
| YOLOv5s + ASPP | 79.2 | 61.4 | 56.7 | 9.0 | 15.36 |
| YOLOv5s + RFB | 78.8 | 62.1 | 57.2 | 8.3 | 7.77 |
| YOLOv5s + ESPP | 79.6 | 62.9 | 57.8 | 9.7 | 7.44 |

| Method | AP50 (%) | AP75 (%) | AP50:95 (%) | APS (%) | Parameters (M) |
|---|---|---|---|---|---|
| YOLOv5s + SPP | 27.4 | 14.2 | 14.9 | 8.5 | 7.08 |
| YOLOv5s + SPPF | 27.2 | 14.2 | 14.9 | 8.5 | 7.08 |
| YOLOv5s + SimSPPF | 27.7 | 14.3 | 15.1 | 8.7 | 7.08 |
| YOLOv5s + SPPCSPC | 27.2 | 14.1 | 14.9 | 8.4 | 10.01 |
| YOLOv5s + ASPP | 27.1 | 14.1 | 14.9 | 8.3 | 15.34 |
| YOLOv5s + RFB | 27.1 | 14.4 | 15.0 | 8.5 | 7.74 |
| YOLOv5s + ESPP | 28.4 | 15.9 | 16.1 | 9.2 | 7.42 |

| Method | Scale | P (%) | R (%) | AP50:95 (%) | APS (%) | APM (%) | APL (%) | F1 Score | Parameters (M) | Inference Time (ms) |
|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5 | S | 88.0 | 76.1 | 57.1 | 8.9 | 38.4 | 69.4 | 0.82 | 7.11 | 14.6 |
| YOLOv5 | M | 88.8 | 77.6 | 60.0 | 9.9 | 39.0 | 72.7 | 0.83 | 21.11 | 22.1 |
| YOLOv5 | L | 90.2 | 77.5 | 61.8 | 10.4 | 40.1 | 74.6 | 0.83 | 46.70 | 25.2 |
| YOLOv5 | X | 90.3 | 79.1 | 63.1 | 11.2 | 41.8 | 76.5 | 0.84 | 87.33 | 29.7 |
| EL-YOLOv5 | S | 83.6 | 74.2 | 55.5 | 10.8 | 36.4 | 67.1 | 0.79 | 7.59 | 18.2 |
| EL-YOLOv5 | M | 84.8 | 76.8 | 58.5 | 11.6 | 38.2 | 70.7 | 0.81 | 22.31 | 26.7 |
| EL-YOLOv5 | L | 85.8 | 77.4 | 60.5 | 11.7 | 40.6 | 72.8 | 0.81 | 49.04 | 30.9 |
| EL-YOLOv5 | X | 87.8 | 77.7 | 61.8 | 12.0 | 39.1 | 74.3 | 0.82 | 91.29 | 37.8 |
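The F1 Score column in the table above is the harmonic mean of precision P and recall R, F1 = 2PR / (P + R). As a quick consistency check, the short sketch below recomputes F1 from the P and R columns of that table (percentages converted to fractions); the names are illustrative only.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall given in percent; returns a value in [0, 1]."""
    p, r = precision / 100, recall / 100
    return 2 * p * r / (p + r)


# (method, scale) -> (P %, R %, reported F1), copied from the table above.
ROWS = {
    ("YOLOv5", "S"): (88.0, 76.1, 0.82),
    ("YOLOv5", "M"): (88.8, 77.6, 0.83),
    ("YOLOv5", "L"): (90.2, 77.5, 0.83),
    ("YOLOv5", "X"): (90.3, 79.1, 0.84),
    ("EL-YOLOv5", "S"): (83.6, 74.2, 0.79),
    ("EL-YOLOv5", "M"): (84.8, 76.8, 0.81),
    ("EL-YOLOv5", "L"): (85.8, 77.4, 0.81),
    ("EL-YOLOv5", "X"): (87.8, 77.7, 0.82),
}

if __name__ == "__main__":
    for (method, scale), (p, r, reported) in ROWS.items():
        print(method, scale, round(f1_score(p, r), 2), reported)
```

For every row, the recomputed value agrees with the reported F1 to two decimal places.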

| Method | Scale | P (%) | R (%) | AP50:95 (%) | APS (%) | APM (%) | APL (%) | F1 Score | Parameters (M) | Inference Time (ms) |
|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5 | S | 46.8 | 36.1 | 14.9 | 8.5 | 22.4 | 30.2 | 0.41 | 7.08 | 19.7 |
| YOLOv5 | M | 53.9 | 38.2 | 17.9 | 10.6 | 26.5 | 32.1 | 0.45 | 21.07 | 24.0 |
| YOLOv5 | L | 55.4 | 39.9 | 19.4 | 11.6 | 28.6 | 40.7 | 0.46 | 46.65 | 28.1 |
| YOLOv5 | X | 56.9 | 40.9 | 20.0 | 12.1 | 29.5 | 39.0 | 0.48 | 87.26 | 31.8 |
| EL-YOLOv5 | S | 50.9 | 39.7 | 18.4 | 10.7 | 27.1 | 37.9 | 0.45 | 7.56 | 34.0 |
| EL-YOLOv5 | M | 54.1 | 44.5 | 21.4 | 13.6 | 30.8 | 40.7 | 0.49 | 22.27 | 37.1 |
| EL-YOLOv5 | L | 57.9 | 45.8 | 22.9 | 15.1 | 32.3 | 42.0 | 0.51 | 48.98 | 41.0 |
| EL-YOLOv5 | X | 56.0 | 48.4 | 23.7 | 15.9 | 33.2 | 44.7 | 0.52 | 91.21 | 47.3 |

| Category | YOLOv5s P (%) | YOLOv5s R (%) | YOLOv5s mAP50:95 (%) | EL-YOLOv5s P (%) | EL-YOLOv5s R (%) | EL-YOLOv5s mAP50:95 (%) |
|---|---|---|---|---|---|---|
| pedestrian | 50.7 | 39.3 | 15.4 | 61.6 | 41.1 | 18.7 ↑3.3 |
| people | 46.2 | 34.9 | 10.1 | 49.4 | 31.7 | 10.5 ↑0.4 |
| bicycle | 28.0 | 15.9 | 3.76 | 30.5 | 15.9 | 5.32 ↑1.56 |
| car | 63.7 | 74.2 | 47.1 | 74.4 | 79.1 | 53.6 ↑6.5 |
| van | 48.6 | 38.0 | 24.1 | 45.7 | 45.7 | 28.1 ↑4.0 |
| truck | 52.0 | 33.7 | 18.0 | 51.4 | 37.2 | 22.9 ↑4.9 |
| tricycle | 42.6 | 24.8 | 9.68 | 44.3 | 29.4 | 13.5 ↑3.82 |
| awning-tricycle | 26.9 | 13.5 | 5.74 | 25.6 | 22.2 | 8.36 ↑2.62 |
| bus | 60.2 | 42.2 | 26.1 | 51.4 | 53.1 | 37.6 ↑11.5 |
| motor | 49.2 | 38.7 | 14.7 | 42.9 | 44.4 | 18.0 ↑3.3 |

| Method | P (%) | R (%) | AP50:95 (%) | APS (%) | APM (%) | APL (%) | F1 Score | Parameters (M) |
|---|---|---|---|---|---|---|---|---|
| YOLOv5s | 88.0 | 76.1 | 57.1 | 8.9 | 38.4 | 69.4 | 0.82 | 7.11 |
| YOLOv5s + Model 1 | 84.1 | 75.8 | 54.7 | 11.1 | 37.2 | 66.2 | 0.80 | 7.25 |
| YOLOv5s + ESPP | 88.9 | 76.1 | 57.8 | 9.7 | 37.4 | 70.3 | 0.82 | 7.44 |
| YOLOv5s + α-CIoU | 87.0 | 75.8 | 57.5 | 9.8 | 38.5 | 69.8 | 0.81 | 7.11 |
| YOLOv5s + ESPP + α-CIoU | 89.1 | 76.9 | 58.2 | 10.2 | 38.8 | 70.5 | 0.83 | 7.44 |
| EL-YOLOv5s | 83.6 | 74.2 | 55.5 | 10.8 | 36.4 | 67.1 | 0.79 | 7.59 |

| Method | P (%) | R (%) | AP50:95 (%) | APS (%) | APM (%) | APL (%) | F1 Score | Parameters (M) |
|---|---|---|---|---|---|---|---|---|
| YOLOv5s | 46.8 | 36.1 | 14.9 | 8.5 | 22.4 | 30.2 | 0.41 | 7.08 |
| YOLOv5s + Model 1 | 51.8 | 39.2 | 17.8 | 10.6 | 25.8 | 34.1 | 0.45 | 7.22 |
| YOLOv5s + ESPP | 46.8 | 36.9 | 16.1 | 9.2 | 24.5 | 34.4 | 0.41 | 7.42 |
| YOLOv5s + α-CIoU | 50.3 | 34.0 | 16.2 | 9.5 | 24.1 | 31.3 | 0.41 | 7.08 |
| YOLOv5s + ESPP + α-CIoU | 50.8 | 36.5 | 16.5 | 9.9 | 24.2 | 35.3 | 0.43 | 7.42 |
| EL-YOLOv5s | 50.9 | 39.7 | 18.4 | 10.7 | 27.1 | 37.9 | 0.45 | 7.56 |
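The Introduction claims that the chosen architecture adds only a marginal computational overhead. Parameter count is one rough proxy for that overhead, so the sketch below computes the relative parameter increase and the absolute APS gain of EL-YOLOv5s over YOLOv5s, using only the values in the two ablation tables above. The labels and helper name are illustrative.

```python
def relative_increase(base: float, new: float) -> float:
    """Relative change from base to new, e.g. 0.05 for +5%."""
    return (new - base) / base


# (baseline params M, EL-YOLOv5s params M, baseline APS %, EL-YOLOv5s APS %)
ABLATION = {
    "first ablation table": (7.11, 7.59, 8.9, 10.8),
    "second ablation table": (7.08, 7.56, 8.5, 10.7),
}

if __name__ == "__main__":
    for name, (p0, p1, s0, s1) in ABLATION.items():
        print(f"{name}: params +{relative_increase(p0, p1):.1%}, APS +{s1 - s0:.1f} pp")
```

Both cases show roughly a 6.8% increase in parameters for an APS gain of 1.9 and 2.2 percentage points, respectively. Parameters do not capture latency: the scale comparison tables report inference time rising from 14.6 ms to 18.2 ms and from 19.7 ms to 34.0 ms at the S scale.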

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hu, M.; Li, Z.; Yu, J.; Wan, X.; Tan, H.; Lin, Z. Efficient-Lightweight YOLO: Improving Small Object Detection in YOLO for Aerial Images. Sensors 2023, 23, 6423. https://doi.org/10.3390/s23146423