1. Introduction

Pest control research has long been one of the top priorities in agriculture. Grooming behavior has important physiological significance and social functions in insects [1]. The study of insect grooming behavior offers a deeper understanding of insect habits and has important theoretical implications for pest control, crop yield improvement, and vegetation protection.

Grooming is an important behavior that many animals use to stay healthy. It accounts for a large proportion of an animal's total activity, occupying 30–50% of its waking time [2]. A vital function of grooming is the removal of foreign bodies from the body surface [3]. Insect grooming is a robust innate behavior that coordinates multiple independent movements [4]. Despite the diversity of insect species, the main functions of grooming behavior are strikingly similar [5]. These functions are primarily to reduce pathogen exposure [6], clean the cuticle, remove body secretions and epidermal lipids [7,8], collect fallen pollen as food [9], and eliminate potential immune threats from the body surface [10]. Grooming also prevents dust or debris from covering important body structures and interfering with essential physiological functions (e.g., vision, reproductive behavior, or flight) [11]. It has also been suggested that grooming may be an important mechanism of resistance to hostile organisms [12]. Therefore, studying grooming behavior is valuable for understanding insect habits, for providing insights into the motor sequences of organisms [13], and for establishing the pharmacological basis of insect grooming behavior [5].

As an oligophagous pest, Bactrocera minax lays its eggs in the young fruits of almost all citrus crops [14,15,16,17]. The infested fruits gradually rot and deteriorate, making them inedible or unsaleable. Data show that the economic loss caused by Bactrocera minax reaches up to 300 million yuan annually. Rotting citrus also harms human health [18]; thus, this pest has become a significant concern in the citrus-growing areas of China [19]. Studying the characteristics of its grooming behavior is an effective route to controlling Bactrocera minax [20] and aids the development of safe and efficient control strategies [21].

In recent years, artificial intelligence techniques have made significant progress. Compared with traditional techniques, they are highly sought after in many fields for their convenience and efficiency and for the time, labor, and cost they save [22]. Computer vision technology [23] combined with artificial intelligence has been widely used to reduce manual labor intensity or even to partially replace manual labor [24,25,26,27,28,29]. Compared with manual recognition, object detection algorithms [30] such as YOLO [31], Faster R-CNN [32], SSD [33], and Transformer [34] have improved both recognition accuracy and recognition efficiency. In insect behavior research, manual frame-by-frame annotation remains the prevalent method for statistical analysis. However, factors such as rapid behavior switching and annotator fatigue make it difficult to obtain reliable results. To address these challenges, quantifying insect behavior with computer vision techniques is gaining prominence. By applying these tools, entomologists can uncover new insights and answer crucial questions [35].

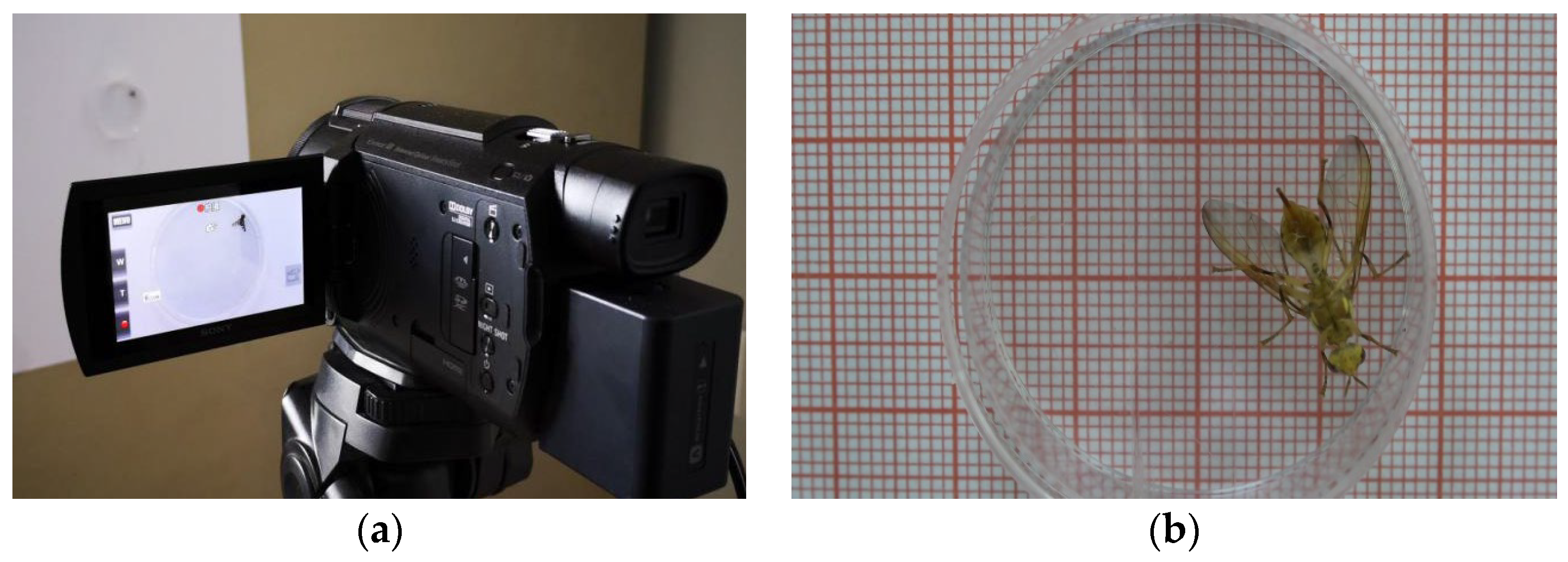

Ji [36] first proposed using 3D convolutional neural networks for human behavior recognition. 3D convolutional neural networks have a simple network structure and can be trained end-to-end [37]. The subsequent C3D (Convolutional 3D Network) model [38] achieved further improvements in human behavior recognition. Hence, this study extends C3D to creatures that have smaller bodies and move more frequently than humans, such as Bactrocera minax. We propose a method that combines target region localization with a 3D convolutional neural network to recognize the grooming behavior of Bactrocera minax while allowing it to move freely. The YOLOv3 algorithm is used to obtain the bounding box of the body region of Bactrocera minax in each frame of the input video. The distance between the center points of the bounding boxes in adjacent frames is then compared with a set threshold, so that the regions of interest (ROIs) cropped during grooming behavior (head grooming, foreleg grooming, fore-mid leg grooming, hind leg grooming, mid-hind leg grooming, and wing grooming) keep the same size and location across frames. The consecutive ROI frames are stacked and fed into the 3D convolutional neural network model to recognize grooming behavior in Bactrocera minax videos. This approach enables automatic detection and statistical analysis of insect grooming behavior over a specific period, offering a new and more efficient method for insect behavior research.
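The ROI-locking step can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the authors' implementation: YOLOv3 detections are taken as given `(x_min, y_min, x_max, y_max)` boxes, the ROI is a fixed-size window (the 200 × 200 ROI and 1920 × 1080 frame sizes are hypothetical), and each new box center is compared with the center on which the previous frame's ROI was placed, so the crop is re-centered only when that distance exceeds the threshold.

```python
import math
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels
Point = Tuple[float, float]


def box_center(box: Box) -> Point:
    x0, y0, x1, y1 = box
    return ((x0 + x1) / 2.0, (y0 + y1) / 2.0)


def window_at(c: Point, roi_w: float, roi_h: float,
              frame_w: float, frame_h: float) -> Box:
    """Fixed-size window centered on c, shifted if needed to stay inside the frame."""
    cx, cy = c
    x0 = min(max(cx - roi_w / 2.0, 0.0), frame_w - roi_w)
    y0 = min(max(cy - roi_h / 2.0, 0.0), frame_h - roi_h)
    return (x0, y0, x0 + roi_w, y0 + roi_h)


def lock_rois(boxes: List[Box], threshold: float = 80.0,
              roi_w: float = 200.0, roi_h: float = 200.0,
              frame_w: float = 1920.0, frame_h: float = 1080.0) -> List[Box]:
    """Return one ROI per frame; the ROI moves only when the body center jumps > threshold."""
    rois: List[Box] = []
    anchor: Optional[Point] = None  # center the previous frame's ROI was placed on
    for box in boxes:
        c = box_center(box)
        if anchor is None or math.dist(c, anchor) > threshold:
            anchor = c  # re-center the crop on the current detection
        rois.append(window_at(anchor, roi_w, roi_h, frame_w, frame_h))
    return rois


if __name__ == "__main__":
    detections = [(100, 100, 140, 140), (105, 102, 145, 142), (300, 100, 340, 140)]
    # The first two frames share one ROI; the 200-pixel jump re-centers the third.
    for roi in lock_rois(detections):
        print(roi)
```

Because the window moves only on large jumps, small body shifts during grooming leave the crop unchanged, which is the property the stacked 16-frame clips rely on.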

3. Results

3.1. Input Data Pre-Processing

ROI feature videos of seven behavior types were obtained using the dataset production method described in Section 2.2.4. First, the ROI feature videos were converted frame by frame into images. The converted dataset was split into training and test sets at a 4:1 ratio, and all frame images were resized to a standard size. To improve model accuracy and stability, the frames were then randomly cropped to a smaller fixed pixel region, and a 3D sliding window selected 16 consecutive frames from this region as one network input. Before being input into the network, each clip was augmented with a horizontal flip applied with a probability of 0.5, and the mean was subtracted from each of the three RGB channels of the frame images to obtain the final network input.
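The clip preprocessing described above can be sketched with NumPy as follows. The crop size, channel means, and window stride are left as parameters because their exact values are not given here; applying one crop offset and one flip decision to all 16 frames of a clip is an assumption (common in C3D-style pipelines) that keeps the clip temporally consistent. The output is laid out as `(channels, frames, height, width)`, the per-sample order expected by, for example, PyTorch's `Conv3d`.

```python
import numpy as np

CLIP_LEN = 16  # consecutive frames per network input


def sliding_windows(num_frames, clip_len=CLIP_LEN, stride=CLIP_LEN):
    """Return the frame indices of each clip_len-frame window (stride is an assumption)."""
    return [list(range(s, s + clip_len))
            for s in range(0, num_frames - clip_len + 1, stride)]


def preprocess_clip(frames, crop_h, crop_w, mean_rgb, rng):
    """Randomly crop, randomly flip, and mean-subtract one clip.

    frames: uint8 array of shape (T, H, W, 3) holding RGB frames.
    Returns a float32 array of shape (3, T, crop_h, crop_w).
    """
    _, h, w, _ = frames.shape
    # One crop offset shared by every frame in the clip.
    top = int(rng.integers(0, h - crop_h + 1))
    left = int(rng.integers(0, w - crop_w + 1))
    clip = frames[:, top:top + crop_h, left:left + crop_w, :].astype(np.float32)
    # Horizontal flip (along the width axis) with probability 0.5.
    if rng.random() < 0.5:
        clip = clip[:, :, ::-1, :]
    # Per-channel mean subtraction; broadcasts over the last (RGB) axis.
    clip = clip - np.asarray(mean_rgb, dtype=np.float32)
    # Reorder to (channels, frames, height, width).
    return np.ascontiguousarray(np.transpose(clip, (3, 0, 1, 2)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    video = np.full((40, 60, 80, 3), 120, dtype=np.uint8)  # toy 40-frame video
    for idx in sliding_windows(len(video)):
        x = preprocess_clip(video[idx], crop_h=48, crop_w=64,
                            mean_rgb=(110.0, 100.0, 95.0), rng=rng)
        print(idx[0], x.shape)
```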

3.2. Three-Dimensional Neural Network Training Parameter Setting

In this experiment, the 3D neural network was trained for 20 epochs with a batch size of 8. The SGD optimizer was chosen because it trains efficiently on large-scale datasets. The initial learning rate was set to 0.003 and was reduced to one-tenth of its current value every 4 epochs. The dropout rate of the dropout layer following the fully connected layer was set to 0.5, so that neural network units were temporarily dropped from the network with this probability during training, which weakens the co-adaptation between neurons and improves generalization.
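The learning-rate schedule amounts to a step decay, lr(e) = 0.003 × 0.1^⌊e/4⌋ for a 0-based epoch e. The plain-Python sketch below (the function name `step_lr` is hypothetical) prints the rate used in each of the 20 epochs. In PyTorch the same schedule corresponds to `torch.optim.lr_scheduler.StepLR(optimizer, step_size=4, gamma=0.1)` stepped once per epoch, although the source does not state which framework was used.

```python
def step_lr(epoch: int, base_lr: float = 0.003, step_size: int = 4,
            gamma: float = 0.1) -> float:
    """Learning rate for a 0-based epoch under step decay."""
    return base_lr * gamma ** (epoch // step_size)


if __name__ == "__main__":
    # Epochs 1-4 use 3.0e-03, epochs 5-8 use 3.0e-04, and so on down to 3.0e-07.
    for epoch in range(20):
        print(epoch + 1, f"{step_lr(epoch):.1e}")
```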

3.3. Continuous Frame Target Region Interception

Extracting the insect's body region (ROI) from consecutive frames is a crucial step in this experiment. The stability of the ROI across consecutive frames significantly affects the accuracy of grooming behavior prediction. Therefore, an appropriate threshold must be established.

If the threshold is too small, the cropped ROI jitters frequently across successive frames. A larger threshold keeps the ROI stable between frames, even during walking, but the insect's movement can then carry its body partly outside the ROI, leading to inaccurate judgment of walking behavior and lowering its detection accuracy in this experiment. Therefore, a threshold is needed that keeps the ROI position stable across successive frames during grooming behavior while keeping the body completely within the ROI.

For the threshold selection experiments, 20 videos were randomly selected from each of the 7 categories of uncropped behavioral videos (including walking behavior). Threshold values ranging from 10 to 200 were tested for ROI acquisition, and the threshold best suited to the experiment was selected by combining the ROI stability rate during grooming behavior with the torso coverage rate during walking behavior. The stability rate was determined from the frequency of ROI changes between frames during grooming behavior (Equation (5)), and the ROI coverage rate during walking behavior was calculated using Equation (6). The experimental results are shown in Table 2.

The results in Table 2 show that threshold values between 60 and 80 balance the ROI stability rate during grooming behavior against the torso coverage rate during walking behavior. In this experiment, 80 was chosen as the final threshold because a larger threshold keeps the size and location of the cropped ROI more stable during walking.
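The trade-off behind the threshold choice can be illustrated with the sketch below. Equations (5) and (6) are not reproduced in this section, so the two rates here are assumed stand-ins: the stability rate is taken as the fraction of adjacent frame pairs in which the ROI did not move, and the coverage rate as the fraction of walking frames whose body box lies entirely inside a square ROI. The 10-pixel sweep step, the ROI size, and the toy trajectories are likewise hypothetical.

```python
import math


def track_anchors(centers, threshold):
    """ROI center per frame: kept until the body center jumps more than threshold."""
    anchors, anchor = [], None
    for c in centers:
        if anchor is None or math.dist(c, anchor) > threshold:
            anchor = c
        anchors.append(anchor)
    return anchors


def stability_rate(centers, threshold):
    """Assumed stand-in for Eq. (5): share of adjacent frame pairs with an unchanged ROI."""
    anchors = track_anchors(centers, threshold)
    if len(anchors) < 2:
        return 1.0
    unchanged = sum(a == b for a, b in zip(anchors, anchors[1:]))
    return unchanged / (len(anchors) - 1)


def coverage_rate(boxes, threshold, roi_size):
    """Assumed stand-in for Eq. (6): share of frames whose body box lies inside the ROI."""
    centers = [((x0 + x1) / 2, (y0 + y1) / 2) for x0, y0, x1, y1 in boxes]
    half = roi_size / 2
    inside = 0
    for (x0, y0, x1, y1), (ax, ay) in zip(boxes, track_anchors(centers, threshold)):
        if ax - half <= x0 and x1 <= ax + half and ay - half <= y0 and y1 <= ay + half:
            inside += 1
    return inside / len(boxes)


def sweep(grooming_centers, walking_boxes, roi_size, thresholds=range(10, 201, 10)):
    """Map each threshold to (grooming stability rate, walking coverage rate)."""
    return {t: (stability_rate(grooming_centers, t),
                coverage_rate(walking_boxes, t, roi_size)) for t in thresholds}


if __name__ == "__main__":
    groom = [(100, 100), (103, 99), (98, 101), (101, 100)]  # small jitter in place
    walk = [(100, 100, 120, 120), (130, 100, 150, 120), (160, 100, 180, 120)]
    for t, (s, c) in sweep(groom, walk, roi_size=100).items():
        print(t, round(s, 2), round(c, 2))
```

On this toy data, a 2-pixel threshold would move the grooming ROI at every frame (stability 0), whereas a threshold of 80 leaves the walking body outside the ROI in one of three frames; real trajectories are needed to reproduce the 60–80 range reported in Table 2.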

During video recording, we found that Bactrocera minax often exhibited walking behaviors accompanied by fore-mid leg, hind leg, and body movements. Because Bactrocera minax does not move during grooming, the method proposed in this study detects grooming behavior more efficiently and accurately by cropping the ROI. However, inconsistency in the size and location of the cropped ROI between frames during walking could not be avoided. To better recognize walking behavior, two schemes were compared in this experiment. The first scheme does not apply ROI cropping to the walking behavior videos during dataset creation; instead, the original-size walking behavior dataset is used directly for model training. Because the features of walking behavior are easier to learn than those of grooming behavior, this smoother original-size training set may tolerate the inter-frame ROI inconsistency in the videos to be detected and generalize to some extent. The second scheme uses the cropped ROI dataset (with possible inconsistencies in ROI size and location across consecutive frames) as the training set for the walking behavior of Bactrocera minax. The test set accuracy of the models trained with these two schemes is shown in Table 3.

3.4. Statistical Results of Grooming Behavior Identification and Detection of Bactrocera minax

In this experiment, preprocessing 1896 feature videos yielded 29,253 behavioral intervals, each consisting of 16 frames. These were divided into a training set of 22,315 intervals and a test set of 6938 intervals. The network was trained for 20 epochs, with a total training time of about 40 h. The accuracy on the training and test sets during training is shown in Figure 9a. The accuracy curves leveled off after nine epochs, indicating that the model had converged, and the test set accuracy stabilized at about 93.45%.

Figure 9b shows the loss curves during model training. The test set loss fluctuated somewhat between epochs 9 and 11, probably because of noise or unusual poses in the test data. Overall, the loss decreased and then leveled off, indicating that training was effective.

We selected another 700 pre-processed behavioral videos to evaluate the behavior classification performance of the model. Each video lasted 8–10 s. The model was evaluated using accuracy, precision, recall, and F1 scores [39], which were calculated as shown below.

Accuracy is the simplest and most intuitive evaluation metric for classification problems. It is the proportion of all predictions that the model gets right:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively. However, when the classes are unbalanced, the class with the largest share of samples can dominate the accuracy.

Precision is the proportion of true positives among the samples predicted to be positive, reflecting how accurately the model classifies a given class:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

Recall is the proportion of true positives among the samples that are actually positive, reflecting the ability of the algorithm to find all positive samples:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

The F score combines Precision and Recall. When Precision and Recall are considered equally important, it is called the F1 score, which is calculated as follows:

$$F_1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

The experiments yielded an overall model accuracy of 93.46%. The classification results for each behavior category are given in Table 4.

The true positive and false positive rates for each behavior category are shown in the confusion matrix in Figure 10.
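The evaluation metrics can be computed from a confusion matrix such as the one in Figure 10. The sketch below assumes rows are true classes and columns are predicted classes; in the multi-class case, overall accuracy is the diagonal sum divided by the total, and precision, recall, and F1 are computed one class at a time (one-vs-rest). The function name and the 2 × 2 matrix in the usage lines are illustrative only, not data from this study.

```python
from typing import List, Sequence, Tuple


def classification_metrics(cm: Sequence[Sequence[int]]) -> Tuple[float, List[Tuple[float, float, float]]]:
    """Return overall accuracy and per-class (precision, recall, F1).

    cm[i][j] counts samples of true class i predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n)) / total
    per_class = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, actually another class
        fn = sum(cm[k]) - tp                        # actually k, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        per_class.append((precision, recall, f1))
    return accuracy, per_class


if __name__ == "__main__":
    acc, per_class = classification_metrics([[8, 2], [1, 9]])  # toy matrix
    print(f"accuracy = {acc:.2f}")
    for k, (p, r, f) in enumerate(per_class):
        print(k, f"P={p:.3f} R={r:.3f} F1={f:.3f}")
```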

As can be seen, the model performed well on all behavior classes except mid-hind leg grooming and wing grooming. After examining the original videos of the misclassified behaviors, we speculate that Bactrocera minax changed its behavior within the 16-frame time window or performed multiple behaviors at once, causing recognition to fail.

To verify the usability of our method, we selected 15 unedited videos of Bactrocera minax. Professionals first manually counted the type and duration of the grooming behaviors, and the model was then applied to the same videos. The results of the two methods were compared.

The start and end times of each behavior were recorded through manual observation and statistical analysis. When different behavior types were recorded for the same period and the differing interval lasted more than 25 frames, the two results were considered to differ. The comparison method is outlined in Figure 11, and the calculated difference degrees are presented in Table 5. The average difference degree between this method and manual recognition is approximately 12%. However, the method is 3–5 times faster than manual observation, which demonstrates its practicality.
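One way to operationalize the comparison rule is sketched below, under explicit assumptions: both the manual record and the model output are expanded to one label per frame, a run of consecutive disagreeing frames counts as a difference only if it lasts more than 25 frames, and the difference degree is the fraction of frames covered by such runs. The exact formula behind Figure 11 and Table 5 is not given in this section, so this definition and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def disagreement_runs(manual: Sequence[str], predicted: Sequence[str]) -> List[Tuple[int, int]]:
    """Half-open [start, end) frame ranges where the two label sequences differ."""
    runs, start = [], None
    for i, (m, p) in enumerate(zip(manual, predicted)):
        if m != p and start is None:
            start = i
        elif m == p and start is not None:
            runs.append((start, i))
            start = None
    if start is not None:
        runs.append((start, len(manual)))
    return runs


def difference_degree(manual: Sequence[str], predicted: Sequence[str],
                      min_frames: int = 25) -> float:
    """Hypothetical difference degree: share of frames in disagreements longer than min_frames."""
    if len(manual) != len(predicted):
        raise ValueError("label sequences must cover the same frames")
    long_runs = [(s, e) for s, e in disagreement_runs(manual, predicted)
                 if e - s > min_frames]
    return sum(e - s for s, e in long_runs) / len(manual)


if __name__ == "__main__":
    manual = ["head"] * 100
    model = ["head"] * 40 + ["foreleg"] * 30 + ["head"] * 20 + ["wing"] * 10
    # The 30-frame disagreement counts; the 10-frame one is below the limit.
    print(difference_degree(manual, model))
```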

3.5. Comparison between Methods

Five grooming behavior detection methods were compared on the test set: Zhang's method [21], based on spatiotemporal context; Zou's method [20], which uses keypoint recognition with DeepLabCut; two methods that apply the C3D network [40] directly with different data processing approaches; and the method proposed in this study. The results indicate that the proposed method achieves real-time detection and analysis of grooming behavior in Bactrocera minax videos while maintaining accuracy. The experimental results of the different methods are presented in Table 6.

4. Discussion

The study of insect behavior has both theoretical and practical significance for the utilization of beneficial insects and for pest control [41]. As insect behavior analysis advances, statistical analysis of insect behavior categories over a specific timeframe has become integral to fundamental research. To improve the efficiency and accuracy of insect behavior identification, this study proposes a method that combines computer vision technology and deep learning; specifically, a three-dimensional convolutional neural network is used to recognize insect grooming behavior. During the experiment, the research team collected several insect species, including Bactrocera minax, Bactrocera cucurbitae (Coquillett), Procecidochares utilis, and Bactrocera dorsalis Hendel, from Jingzhou in Hubei Province, Kunming in Yunnan Province, and Haikou in Hainan Province. Comparing the behavioral characteristics of these insects, we found that Bactrocera minax is more active, grooms more frequently, and shows more easily recognizable behavior. Therefore, Bactrocera minax was used as the main experimental subject.

In recent years, bioinformatics analysis, which combines biological experimental data with computer technology, has been widely applied in entomology [42], including deep learning methods for automatic insect counting [43] and various pest detection techniques [44,45,46]. Unlike traditional image processing methods, these approaches use deep learning to build neural networks that generalize better and extract features more effectively across different environments. Automatic insect counting and pest detection focus mainly on spatial information and often use two-dimensional convolution kernels to extract the required features. In behavior recognition, however, the temporal information across successive frames is particularly important, so this study chose three-dimensional convolution. Denis S. Willett [47] classified insect feeding patterns by automatically monitoring voltage changes in the feeder circuit of the insects' food source, an advance that greatly reduced the time and effort required for analysis. However, this approach requires placing equipment on the insect's body. Our team instead chose to identify and detect insect behavior non-invasively, reducing the interference of equipment with the insects and lowering operational costs.

Few methods are available for detecting insect grooming behavior from videos. Zhang [21] proposed a method that combines spatiotemporal context with convolutional neural networks and achieved promising results; it fuses temporal and spatial features into spatiotemporal feature images and classifies behavior from these images. Another method, presented by Nath [48], uses keypoint tracking (DeepLabCut) to estimate animal posture. DeepLabCut performs robustly even with small training sets and challenging backgrounds, such as cluttered scenes and uneven illumination. Zou [20] applied body keypoint tracking to Bactrocera minax. However, the keypoint tracking was disturbed because the body of Bactrocera minax is relatively small in the video and its body parts occlude one another during behaviors.

Convolutional neural networks (CNNs) have advanced behavior recognition, and 3D convolution extends them to the spatiotemporal domain [40]. Inspired by the success of C3D in human behavior recognition, our team sought to apply it to insects. Initial attempts using the C3D structure with raw-size video data yielded unsatisfactory results: because Bactrocera minax occupies only a small part of each frame (7% of the field of view), scaling and random cropping of the frame images caused significant feature loss and poor detection. To address this, our team proposed cropping the region containing the insect's body, and subsequent experiments validated the feasibility of this approach.

After the final optimization of the network, the overall accuracy of the proposed method in recognizing the grooming behavior of Bactrocera minax was 93.46%, and the discrepancy with the manual recognition results was about 12%. In the difference experiments, we found that most discrepancies occurred when Bactrocera minax performed behaviors of very short duration that human observers could overlook, whereas the method used in this study was able to recognize these behaviors. For example, when Bactrocera minax switched rapidly and repeatedly between foreleg grooming and head grooming, manual observation might record only one behavior, and such rapid switching is common in the dataset of this experiment.

The next step is to improve accuracy while maintaining detection speed. We recommend three directions. The first is to expand the training set by correcting incorrectly labeled data identified through manual comparison and adding it to the training set. The second is to optimize the network structure; for example, a deeper 3D CNN may extract behavioral features better. Hara [49] proposed that the deep 3D ResNet is applicable to behavior recognition, and its 3D residual structure can effectively mitigate the exploding and vanishing gradients of deep networks. This structure could be incorporated into the network used in this experiment to extract deeper behavioral features. The third direction concerns how the prediction result is determined. For example, the frame input could be split into two branches: one branch determines results frame by frame using the method described in this article, while the other uses frame skipping, which better reflects the motion trend. The final prediction would then combine the two results, allowing the model to learn the entire motion process.
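The proposed two-branch determination could look like the following sketch. The skip step of 2, the equal-weight averaging of class probabilities, and all function names are assumptions made for illustration; the 3D CNN itself is not shown, and each branch's class probabilities are passed in directly.

```python
from typing import List, Sequence, Tuple


def dense_indices(start: int, clip_len: int = 16) -> List[int]:
    """16 consecutive frames, as in the current frame-by-frame branch."""
    return list(range(start, start + clip_len))


def skip_indices(start: int, clip_len: int = 16, step: int = 2) -> List[int]:
    """16 frames taken every `step` frames, spanning a longer stretch of motion."""
    return list(range(start, start + clip_len * step, step))


def fuse(p_dense: Sequence[float], p_skip: Sequence[float],
         w: float = 0.5) -> Tuple[int, List[float]]:
    """Weighted average of both branches' class probabilities; returns (class index, fused)."""
    if len(p_dense) != len(p_skip):
        raise ValueError("both branches must score the same classes")
    fused = [w * a + (1.0 - w) * b for a, b in zip(p_dense, p_skip)]
    best = max(range(len(fused)), key=fused.__getitem__)
    return best, fused


if __name__ == "__main__":
    print(dense_indices(0)[-1], skip_indices(0)[-1])  # last frame used by each branch
    label, probs = fuse([0.6, 0.3, 0.1], [0.2, 0.7, 0.1])
    print(label, [round(p, 2) for p in probs])
```

A learned weight or a small fusion layer could replace the fixed average; the fixed 0.5 weight is used here only to keep the sketch minimal.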