LSD-YOLOv5: A Steel Strip Surface Defect Detection Algorithm Based on Lightweight Network and Enhanced Feature Fusion Mode

Abstract

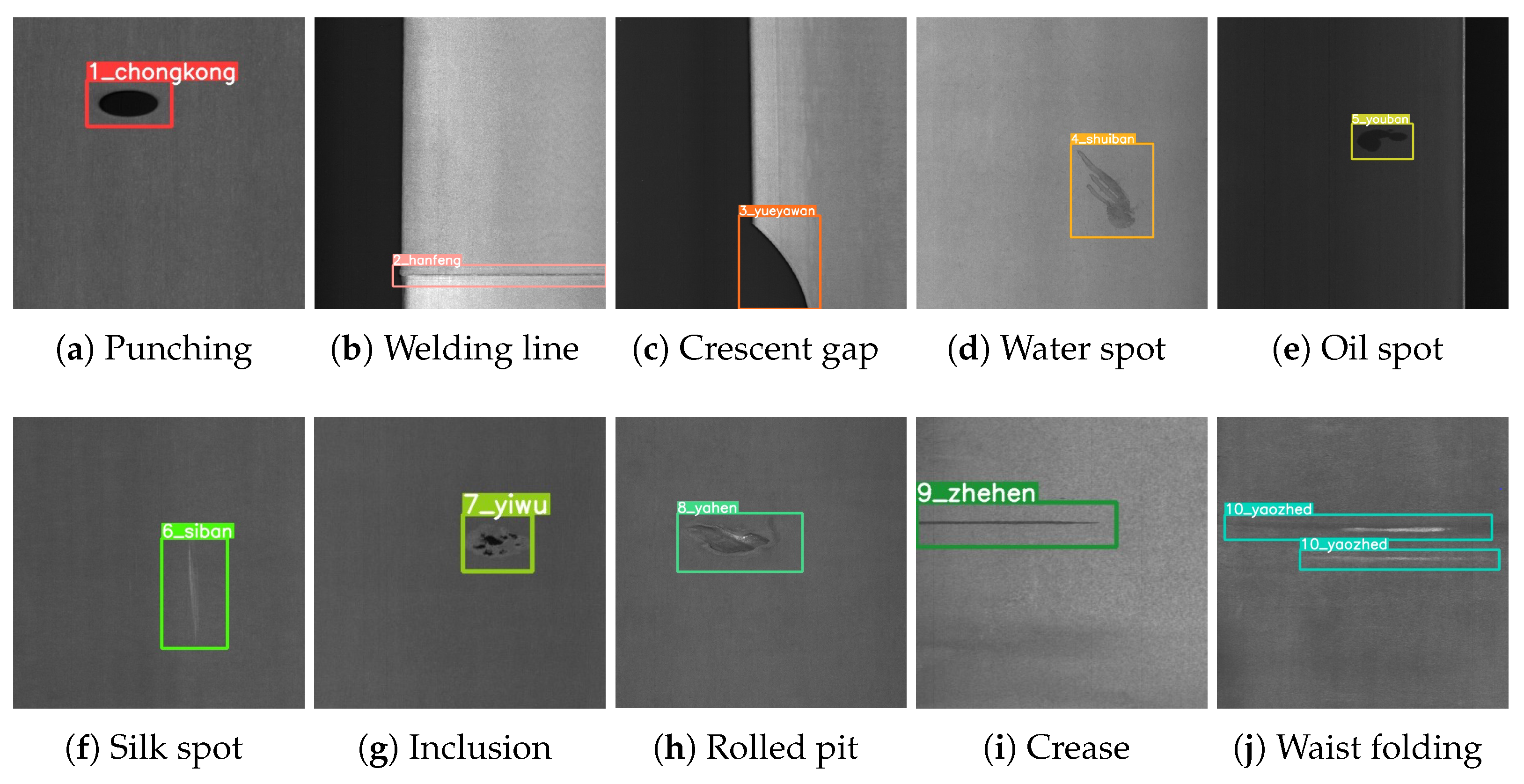

1. Introduction

- We developed a lightweight steel strip surface defect detection model, LSD-YOLOv5.

- We proposed a new, efficient feature extraction network by integrating the R-Stem and CA-MbV2 modules into the backbone network. This significantly reduced the number of model parameters and improved detection speed.

- We implemented a smaller bidirectional feature pyramid network (BiFPN-S) to integrate feature information effectively across multiple scales.

- We improved the recognition of overlapping targets by using the Soft-DIoU-NMS algorithm to screen prediction boxes.
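The prediction box screening step in the last contribution can be illustrated with a short sketch. This section does not spell out the Soft-DIoU-NMS formulation, so the code below rests on two assumptions: a Gaussian Soft-NMS score decay, and DIoU (IoU minus the normalized squared distance between box centers) used as the overlap measure. The function names, `sigma`, and `score_thresh` are hypothetical and are not taken from the paper.

```python
import math


def diou(a, b):
    """DIoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    iou = inter / union if union > 0 else 0.0
    # Squared distance between the two box centers.
    d2 = ((a[0] + a[2]) / 2 - (b[0] + b[2]) / 2) ** 2 \
        + ((a[1] + a[3]) / 2 - (b[1] + b[3]) / 2) ** 2
    # Squared diagonal of the smallest box enclosing both boxes.
    c2 = (max(a[2], b[2]) - min(a[0], b[0])) ** 2 \
        + (max(a[3], b[3]) - min(a[1], b[1])) ** 2
    return iou - (d2 / c2 if c2 > 0 else 0.0)


def soft_diou_nms(boxes, scores, sigma=0.5, score_thresh=0.001):
    """Return (box index, decayed score) pairs in selection order.

    Assumed variant: Gaussian Soft-NMS with DIoU (clamped at 0) as overlap.
    """
    remaining = list(enumerate(scores))
    kept = []
    while remaining:
        # Pick the highest-scoring box that is still a candidate.
        best = max(range(len(remaining)), key=lambda k: remaining[k][1])
        idx, score = remaining.pop(best)
        kept.append((idx, score))
        decayed = []
        for j, s in remaining:
            overlap = max(0.0, diou(boxes[idx], boxes[j]))
            # Decay instead of deleting, so overlapping defects can survive.
            s *= math.exp(-(overlap ** 2) / sigma)
            if s >= score_thresh:
                decayed.append((j, s))
        remaining = decayed
    return kept


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]
result = soft_diou_nms(boxes, scores)
print([i for i, _ in result])
```

Under these assumptions, a box that heavily overlaps a stronger detection is down-weighted rather than discarded, which is the usual motivation for soft suppression when targets overlap.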

2. Materials and Methods

2.1. Overall Framework of Steel Strip Defect Detection

2.2. Dataset Processing

2.3. The Proposed LSD-YOLOv5

2.3.1. The Backbone Network of LSD-YOLOv5

2.3.2. The Feature Pyramid Network of LSD-YOLOv5

2.3.3. The Non-Maximum Suppression of LSD-YOLOv5

3. Experiments and Results

3.1. Experimental Environment and Parameter Setting

3.2. Evaluation Metrics

3.3. Ablation Experiments and Analysis

3.3.1. Ablation Experiment of LSD-YOLOv5

3.3.2. Visualization Results on Ablation Experiment

3.4. Comparative Experiments and Analysis

3.5. Performance Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Model | Precision (%) | mAP (%) | Recall (%) | Params (M) | Inference Time (ms) |
|---|---|---|---|---|---|
| YOLOv5s (baseline) | 67.6 | 65.5 | 62.3 | 7.04 | 14.2 |
| +MobileNetV2 | 64.1 | 63.8 | 61.4 | 1.25 | 9.3 |
| +CA-MbV2 | 66.3 | 64.7 | 62.6 | 1.83 | 9.9 |
| +R-Stem+CA-MbV2 | 67.7 | 65.9 | 63.5 | 1.98 | 10.1 |
| +R-Stem+CA-MbV2+BiFPN-S | 68.9 | 67.2 | 64.9 | 2.71 | 10.7 |
| LSD-YOLOv5 | 69.8 | 67.9 | 66.8 | 2.71 | 11.1 |
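The relative gains implied by the first and last rows of this table can be worked out directly. The snippet below is only an arithmetic check on the tabulated values; the variable and function names are illustrative.

```python
# (params in millions, inference time in ms, mAP in %) copied from the table above.
baseline = (7.04, 14.2, 65.5)   # YOLOv5s (baseline)
lsd = (2.71, 11.1, 67.9)        # LSD-YOLOv5


def reduction_pct(before, after):
    """Percentage reduction from `before` to `after`."""
    return 100.0 * (before - after) / before


param_cut = reduction_pct(baseline[0], lsd[0])
time_cut = reduction_pct(baseline[1], lsd[1])
map_gain = lsd[2] - baseline[2]

print(f"parameters: -{param_cut:.1f}%")
print(f"inference time: -{time_cut:.1f}%")
print(f"mAP: +{map_gain:.1f} points")
```

From the tabulated values, this gives roughly 61.5% fewer parameters and about 21.8% lower inference time than the baseline, together with a 2.4-point higher mAP.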

| Model | Precision (%) | mAP (%) | Recall (%) | Params (M) | FLOPs (G) | FPS |
|---|---|---|---|---|---|---|
| Faster R-CNN | 68.6 | 68.2 | 67.8 | 63.57 | 263.5 | 12.6 |
| SSD | 53.3 | 51.6 | 52.1 | 27.32 | 39.2 | 54.7 |
| YOLOv4 | 60.2 | 57.5 | 56.3 | 52.36 | 121.3 | 41.5 |
| YOLOv5s | 67.6 | 65.5 | 62.3 | 7.04 | 15.9 | 70 |
| YOLOv7 | 62.0 | 61.3 | 60.6 | 37.21 | 103.6 | 56.3 |
| LSD-YOLOv5 | 69.8 | 67.9 | 66.8 | 2.71 | 9.1 | 90.1 |
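To put the FPS column on the same footing as a per-image inference time, the frame rates can be converted to milliseconds per image and the parameter counts expressed relative to LSD-YOLOv5. This is a small sketch on the tabulated values only. It assumes that latency is simply 1000 / FPS (single-image inference, no batching or pipelining), which the table itself does not state.

```python
# (params in millions, FLOPs in G, FPS) copied from the comparison table above.
models = {
    "Faster R-CNN": (63.57, 263.5, 12.6),
    "SSD": (27.32, 39.2, 54.7),
    "YOLOv4": (52.36, 121.3, 41.5),
    "YOLOv5s": (7.04, 15.9, 70.0),
    "YOLOv7": (37.21, 103.6, 56.3),
    "LSD-YOLOv5": (2.71, 9.1, 90.1),
}

# Assumed conversion: one image per forward pass, so latency = 1000 / FPS.
latency_ms = {name: 1000.0 / fps for name, (_, _, fps) in models.items()}

# Parameter count of each model relative to LSD-YOLOv5.
ref_params = models["LSD-YOLOv5"][0]
param_ratio = {name: p / ref_params for name, (p, _, _) in models.items()}

for name in models:
    print(f"{name:<13} {latency_ms[name]:6.1f} ms/image  "
          f"{param_ratio[name]:5.1f}x params")
```

Under that assumption, 90.1 FPS corresponds to about 11.1 ms per image for LSD-YOLOv5, and Faster R-CNN carries roughly 23.5 times as many parameters.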

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, H.; Wan, F.; Lei, G.; Xiong, Y.; Xu, L.; Xu, C.; Zhou, W. LSD-YOLOv5: A Steel Strip Surface Defect Detection Algorithm Based on Lightweight Network and Enhanced Feature Fusion Mode. Sensors 2023, 23, 6558. https://doi.org/10.3390/s23146558
