Machine-Learning Algorithms for Process Condition Data-Based Inclusion Prediction in Continuous-Casting Process: A Case Study

Abstract

1. Introduction

- Introduces time-series-driven analysis and defect prediction into the continuous-casting process.

- Implements an EMD algorithm to decompose continuous-casting sensor signals and enhances the signal by reconstructing a multivariate signal from the most informative IMFs.

- Conducts a comprehensive study on the topics in continuous-casting time-series-based quality prediction, ranging from feature engineering and data balancing to the application of machine-learning models.

2. Materials and Methods

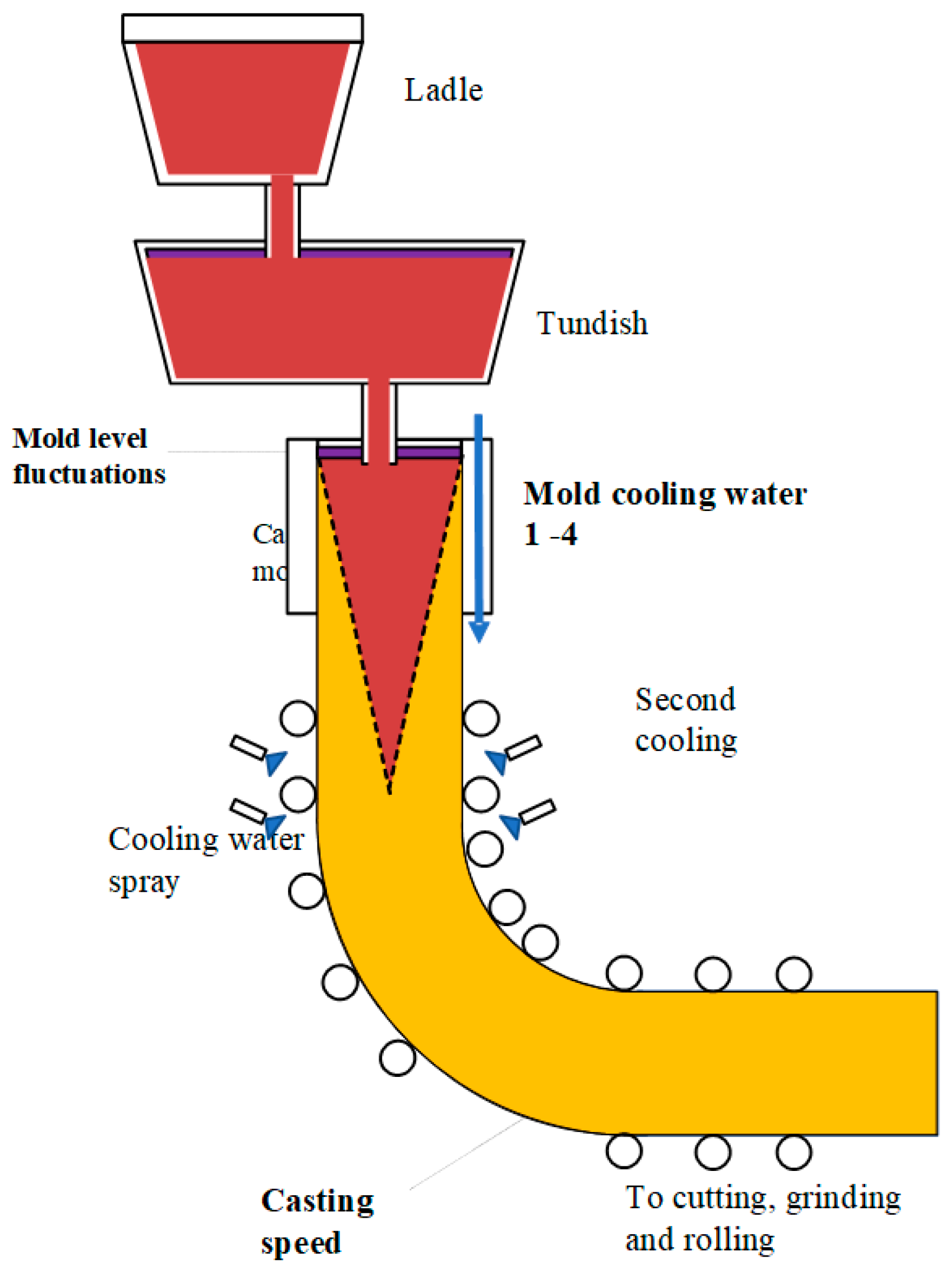

2.1. Casting Slabs and Quality-Control Issues

- Deoxidation.

- Composition control of special slags in the tundish and casting mold.

- The temperature, molten steel flow rate, and level control of the ladle, tundish, and mold.

- The control of oscillation and electromagnetic stirring of the casting mold.

2.2. Data Source

2.3. Preprocessing and Feature Engineering

2.3.1. Signal Processing

- The number of extrema and the number of zero-crossings must either be equal or differ by at most one.
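The condition above can be checked directly on a sampled signal. The following is a minimal sketch using only the Python standard library; the helper names (`count_extrema`, `count_zero_crossings`, `satisfies_extrema_condition`) and the synthetic test signals are illustrative assumptions, not part of the case study, and the check covers only this single condition rather than a full EMD sifting procedure.

```python
import math

def count_extrema(signal):
    """Count strict local maxima and minima among the interior samples."""
    count = 0
    for prev, cur, nxt in zip(signal, signal[1:], signal[2:]):
        if (cur > prev and cur > nxt) or (cur < prev and cur < nxt):
            count += 1
    return count

def count_zero_crossings(signal):
    """Count sign changes between consecutive samples."""
    return sum(1 for a, b in zip(signal, signal[1:]) if a * b < 0)

def satisfies_extrema_condition(signal):
    """True if the extrema and zero-crossing counts differ by at most one."""
    return abs(count_extrema(signal) - count_zero_crossings(signal)) <= 1

# Hypothetical test signals: a sine oscillating around zero, and the same
# sine lifted above zero so that it keeps its extrema but never crosses zero.
sine = [math.sin(2 * math.pi * 3 * t / 200 + 0.3) for t in range(200)]
shifted = [s + 2.0 for s in sine]

print(satisfies_extrema_condition(sine))
print(satisfies_extrema_condition(shifted))
```

The offset signal fails the check because it retains all of its extrema while losing every zero-crossing, which is the kind of component a sifting step would need to process further.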

2.3.2. Statistical Feature Extraction and Selection

2.4. Algorithms for Classification and Data Resampling

2.4.1. Machine-Learning Algorithms

- K-Nearest Neighbors (KNN) [31]: The KNN algorithm is one of the simplest supervised learning methods for classification and regression in industry. As the name “K-nearest neighbors” suggests, the algorithm is based on the idea that if most of the k nearest neighbors of a sample x, selected by a specific distance metric in the feature space, belong to a class label L, then the sample is also assigned to L. However, KNN is sensitive to noise and outliers, class imbalance, and the choice of neighborhood parameters, and it incurs high computing costs on large datasets.

- Support Vector Machines (SVM): SVM is a supervised learning method widely applied in classification and regression tasks. By mapping the data into a high-dimensional space with kernel functions and constructing suitable hyperplanes [32], SVM can effectively handle various classification datasets, even when the data distributions are nonlinear [33]. However, SVM models are hard to fit on massive data and are sensitive to kernel parameters and normalization strategies. As a result, SVM might be a suboptimal choice when outliers and missing values are common in the input datasets.

- Decision Trees (DT): DT models are competitive alternatives for supervised learning tasks such as prediction, forecasting, and statistical feature selection. A DT classification model selects the best attribute using an attribute selection measure to split the records, designates that attribute as a decision node, breaks the dataset into smaller subsets, and recursively repeats the process on the child decision nodes until the stopping criteria are met [33]. DT models are simple and naturally interpretable, but may suffer from high variance and excessive complexity on large high-dimensional datasets.

- DT ensembles: Ensemble algorithms are based on the concept of combining multiple “weak” classifiers into a “strong” classifier [34]. The most popular ensemble models are arguably those based on DT algorithms, such as random forests (RF) and Adaptive Boosting (AdaBoost), both of which are evaluated in this case study. In RF models, each DT is built independently on a randomly generated subset of the dataset. In AdaBoost, by contrast, weak trees are trained sequentially, each one trying to correct its predecessor.

- Artificial Neural Networks (ANN): ANNs are widely applied in data-driven classification and forecasting. A typical ANN is a multi-layer network, which can map highly complex patterns through multiple nonlinear transformations [35]. The back-propagation neural network (BPNN), one of the most prevalent ANN models, was tested and compared in this case study. Given input/output pairs, a BPNN has its weights adjusted by the back-propagation (BP) algorithm to capture the nonlinear relationships in complex datasets [36].
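The neighbor-voting idea behind KNN, the simplest of the classifiers listed above, can be sketched in a few lines. This is a minimal illustration using Euclidean distance and an unweighted majority vote; the function name `knn_predict` and the toy two-feature data are assumptions made for the example and do not come from the case-study dataset.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, query, k=3):
    """Label `query` by majority vote among its k nearest training points."""
    # Pair each training point's Euclidean distance to the query with its label.
    distances = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]

# Toy data (hypothetical values): two well-separated clusters.
train_X = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.3), (1.0, 1.1), (0.9, 1.0), (1.2, 0.8)]
train_y = ["normal", "normal", "normal", "defective", "defective", "defective"]

print(knn_predict(train_X, train_y, (0.15, 0.1)))
print(knn_predict(train_X, train_y, (1.0, 0.9)))
```

Because every prediction scans the whole training set, the cost grows with dataset size, which is the computing-cost limitation noted for KNN above.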

2.4.2. Resampling of Imbalanced Data

- Random under-sampling (RUS) and random over-sampling (ROS): RUS and ROS are among the simplest algorithms for balancing data. The RUS method randomly selects majority-class instances and removes them from the dataset until the desired class distribution is achieved [17], while ROS randomly selects minority-class instances and adds copies of them to the dataset until it reaches a more balanced distribution.

- Near-Miss (NM): The Near-Miss under-sampling technique is based on the widely used nearest-neighbor method. First, the distances between majority and minority instances are calculated. Then, the nearest majority neighbors of the minority instances are selected and retained [37]. In this case study, the third version of the Near-Miss algorithm was selected for evaluation.

- Synthetic Minority Over-sampling Technique (SMOTE): SMOTE is a synthetic over-sampling algorithm for rebalancing the original dataset [38,39]. Instead of merely replicating minority-class instances, SMOTE iteratively finds the nearest neighbors of each minority instance and generates new minority instances by interpolating between the instance and those neighbors.
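The resampling strategies above can be sketched with the standard library alone. The code below is an illustrative approximation, not the implementation used in the case study: the function names, the 1:1 target balance, the toy data, and the parameter `k` are all assumptions, and `smote_like` shows only the core interpolation step of SMOTE.

```python
import math
import random

def random_under_sample(majority, minority, rng):
    """RUS: keep a random majority subset the same size as the minority class."""
    return rng.sample(majority, len(minority)), list(minority)

def random_over_sample(majority, minority, rng):
    """ROS: append random copies of minority instances until the classes match."""
    copies = [rng.choice(minority) for _ in range(len(majority) - len(minority))]
    return list(majority), list(minority) + copies

def smote_like(minority, n_new, k, rng):
    """SMOTE-style synthesis: interpolate toward one of the k nearest minority neighbors."""
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        others = [p for p in minority if p is not base]
        neighbors = sorted(others, key=lambda p: math.dist(p, base))[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # position along the segment from base to neighbor
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbor)))
    return synthetic

# Hypothetical imbalanced toy data: 20 majority points, 4 minority points.
rng = random.Random(0)
majority = [(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(20)]
minority = [(2.0, 2.0), (2.5, 1.8), (1.9, 2.6), (2.4, 2.3)]

rus_major, rus_minor = random_under_sample(majority, minority, rng)
ros_major, ros_minor = random_over_sample(majority, minority, rng)
new_points = smote_like(minority, n_new=16, k=2, rng=rng)

print(len(rus_major), len(rus_minor))
print(len(ros_major), len(ros_minor))
print(len(minority) + len(new_points))
```

Under-sampling shrinks the training set to the minority size, whereas the two over-sampling variants keep every majority instance; the synthetic points always lie on segments between existing minority instances.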

3. Results and Discussions

3.1. Evaluation Metrics

3.2. Comparison between Machine-Learning Models

- KNN: The optimum number of neighbors was found to be 3, and the best distance metric was cosine. Points were weighted by the inverse of their distance to the query instance.

- SVM: Two SVM classifiers were trained, one using a linear kernel function and the other using a sigmoid kernel. The C parameter of the linear SVM was determined as 23.21 and its gamma was set to 0.825, while the C and gamma of the sigmoid SVM were found to be 8.67 and 0.01, respectively. Since ROC AUC was used as the optimization target, probability estimation was enabled.

- DT: The split criterion was set to “gini”, and the maximum depth of the tree model was limited to 50 in order to reduce overfitting.

- RF: The optimum number of DTs in the ensemble was found to be 200, with the maximum number of features set to 80 to reduce overfitting.

- AdaBoost: The base estimators were DTs with a maximum depth of 1. The optimal number of estimators was found to be 45, with the boosting algorithm determined as “SAMME.R” and the learning rate found to be 0.25.

- ANN: The optimal multi-layer perceptron (MLP) network consisted of 3 hidden layers with 50 nodes each. The learning-rate schedule was set to “inverse scaling”, which gradually decreases the learning rate. The “sgd” optimizer was used to train the network.

- Among all the machine-learning algorithms, the DT and RF models generally performed better in terms of sensitivity to defective samples, while still maintaining some capability to correctly classify the normal instances. The RF model showed relatively stable performance across changes in sampling rate, indicating that it is possibly the most capable of the algorithms investigated, given the specific dataset and training strategy.

- Overall, the performance of the ANN (MLP) model dropped more severely as the under-sampling technique removed normal cases from the training set. The authors consider that the weights and biases of an ANN generally need large training sets to converge fully, and thus ANNs are not well suited to datasets processed by under-sampling algorithms.

- The performance of all machine-learning models was lower than expected, which possibly indicates that the distinctions between normal and defective samples are minor in the feature space, and thus that the feature engineering procedures might have to be improved.

- All machine-learning algorithms except the SVMs and the ANN achieved short computing times (under 0.1 s) on the Case 4-NM dataset. The authors suggest that the longer computing time of SVM could be the result of the nonlinearity of the feature space, which makes it harder to construct optimal hyperplanes for classification.

- As expected, the accuracy and weighted-F1 scores obtained from the test set are largely insensitive metrics for imbalanced classification tasks and offer deceptively optimistic results. As shown in Case 0, since the defective rate of the raw dataset was merely 4.2%, a model labeling all samples as negative (“normal”) could obtain a high accuracy of 95.8% while providing nearly no useful information for quality prediction and knowledge discovery. For a similar reason, although the ANN models were generally more “accurate” on all the datasets, their generally low recall rates on defective samples provide little information for defect detection and do not satisfy the target of the case study.

- In such imbalanced classification cases, where the F1 scores of the majority class were high while those of the minority class were poor, the combination of recall, macro-F1, and ROC AUC could be a better-suited set of metrics for performance assessment.

- Through under-sampling of the training samples, the prediction ability of a model for defective samples could be improved, while its prediction ability for normal samples could drop significantly, which is frequently referred to as the “precision-recall tradeoff”. Thus, considering the overall performance, the Case 4-NM datasets were regarded as favorable for model training.
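The accuracy argument above can be reproduced with a short worked computation. The sketch below assumes a hypothetical test set of 1000 samples with a 4.2% defective rate and a classifier that labels every sample as normal; the function name `metrics` and the sample count are illustrative choices, while the metric definitions are the standard ones derived from the confusion matrix.

```python
def metrics(tn, fp, fn, tp):
    """Compute imbalance-aware metrics from binary confusion-matrix counts."""
    total = tn + fp + fn + tp

    def f1(true_pos, false_pos, false_neg):
        denom = 2 * true_pos + false_pos + false_neg
        return 2 * true_pos / denom if denom else 0.0

    f1_defective = f1(tp, fp, fn)   # defective treated as the positive class
    f1_normal = f1(tn, fn, fp)      # normal treated as the positive class
    n_normal, n_defective = tn + fp, tp + fn
    return {
        "accuracy": (tn + tp) / total,
        "recall_normal": tn / n_normal if n_normal else 0.0,
        "recall_defective": tp / n_defective if n_defective else 0.0,
        "macro_f1": (f1_normal + f1_defective) / 2,
        "weighted_f1": (f1_normal * n_normal + f1_defective * n_defective) / total,
    }

# Hypothetical all-negative baseline: 958 normal and 42 defective samples,
# every one of them predicted as "normal".
baseline = metrics(tn=958, fp=0, fn=42, tp=0)
for name, value in baseline.items():
    print(f"{name}: {value:.3f}")
```

For this baseline, accuracy is 0.958 and weighted-F1 stays above 0.93, yet recall on defective samples is 0 and macro-F1 is only about 0.49, which illustrates why recall and macro-F1 are the more informative metrics here.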

3.3. Impacts of Resampling Techniques

4. Conclusions and Prospects

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Louhenkilpi, S. Continuous Casting of Steel. In Treatise on Process Metallurgy; Elsevier: Amsterdam, The Netherlands, 2014; pp. 373–434. ISBN 978-0-08-096988-6.

2. Cemernek, D.; Cemernek, S.; Gursch, H.; Pandeshwar, A.; Leitner, T.; Berger, M.; Klösch, G.; Kern, R. Machine Learning in Continuous Casting of Steel: A State-of-the-Art Survey. J. Intell. Manuf. 2022, 33, 1561–1579.

3. Sinha, A.K.; Sahai, Y. Mathematical Modeling of Inclusion Transport and Removal in Continuous Casting Tundishes. ISIJ Int. 1993, 33, 556–566.

4. Thomas, B.G.; Zhang, L. Flow Dynamics and Inclusion Transport in Continuous Casting of Steel. In Proceedings of the NSF Conference “Design, Service, and Manufacturing Grantees and Research”, Dallas, TX, USA, 5–8 January 2004; p. 24.

5. Zhang, L.; Wang, Y. Modeling the Entrapment of Nonmetallic Inclusions in Steel Continuous-Casting Billets. JOM 2012, 64, 1063–1074.

6. Zhang, K.-T.; Liu, J.-H.; Cui, H. Effect of Flow Field on Surface Slag Entrainment and Inclusion Adsorption in a Continuous Casting Mold. Steel Res. Int. 2020, 91, 1900437.

7. Gupta, V.K.; Jha, P.K.; Jain, P.K. Numerical Investigation of Solidification Behavior and Inclusion Transport with M-EMS in Continuous Casting Mold. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 2023.

8. Wang, J.-J.; Zhang, L.-F.; Chen, W.; Wang, S.-D.; Zhang, Y.-X.; Ren, Y. Kinetic Model of the Composition Transformation of Slag Inclusions in Molten Steel in Continuous Casting Mold. Chin. J. Eng. 2021, 43, 786–796.

9. Ji, S.; Zhang, L.; Wang, Y.; Chen, W.; Wang, X.; Zhang, J. Effect of Electromagnetic Stirring on Inclusions in Continuous Casting Blooms of a Gear Steel. Met. Mater. Trans. B 2021, 52, 2341–2354.

10. Li, B.; Lu, H.; Zhong, Y.; Ren, Z.; Lei, Z. Numerical Simulation for the Influence of EMS Position on Fluid Flow and Inclusion Removal in a Slab Continuous Casting Mold. ISIJ Int. 2020, 60, 1204–1212.

11. Thomas, B.G. Review on Modeling and Simulation of Continuous Casting. Steel Res. Int. 2018, 89, 1700312.

12. Matsko, I.I. Adaptive Fuzzy Decision Tree with Dynamic Structure for Automatic Process Control System of Continuous Cast Billet Production. IOSRJEN 2012, 02, 53–55.

13. Zhao, L.; Dou, R.; Yin, J.; Yao, Y. Intelligent Prediction Method of Quality for Continuous Casting Process. In Proceedings of the 2016 IEEE Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), Xi’an, China, 3–5 October 2016; pp. 1761–1764.

14. Cuartas, M.; Ruiz, E.; Ferreño, D.; Setién, J.; Arroyo, V.; Gutiérrez-Solana, F. Machine Learning Algorithms for the Prediction of Non-Metallic Inclusions in Steel Wires for Tire Reinforcement. J. Intell. Manuf. 2021, 32, 1739–1751.

15. Kong, Y.; Chen, D.; Liu, Q.; Long, M. A Prediction Model for Internal Cracks during Slab Continuous Casting. Metals 2019, 9, 587.

16. Zhou, Y.; Xu, K.; He, F.; Zhang, Z. Application of Time Series Data Anomaly Detection Based on Deep Learning in Continuous Casting Process. ISIJ Int. 2022, 62, 689–698.

17. Wu, X.; Jin, H.; Ye, X.; Wang, J.; Lei, Z.; Liu, Y.; Wang, J.; Guo, Y. Multiscale Convolutional and Recurrent Neural Network for Quality Prediction of Continuous Casting Slabs. Processes 2021, 9, 33.

18. Dhaliwal, S.S.; Nahid, A.-A.; Abbas, R. Effective Intrusion Detection System Using XGBoost. Information 2018, 9, 149.

19. Zhang, S. Cost-Sensitive KNN Classification. Neurocomputing 2020, 391, 234–242.

20. Memiş, S.; Enginoğlu, S.; Erkan, U. Fuzzy Parameterized Fuzzy Soft K-Nearest Neighbor Classifier. Neurocomputing 2022, 500, 351–378.

21. Kaminska, O.; Cornelis, C.; Hoste, V. Fuzzy Rough Nearest Neighbour Methods for Aspect-Based Sentiment Analysis. Electronics 2023, 12, 1088.

22. Kaushik, K.; Bhardwaj, A.; Dahiya, S.; Maashi, M.S.; Al Moteri, M.; Aljebreen, M.; Bharany, S. Multinomial Naive Bayesian Classifier Framework for Systematic Analysis of Smart IoT Devices. Sensors 2022, 22, 7318.

23. Erkan, U. A Precise and Stable Machine Learning Algorithm: Eigenvalue Classification (EigenClass). Neural Comput. Appl. 2021, 33, 5381–5392.

24. Nian, Y.; Zhang, L.; Zhang, C.; Ali, N.; Chu, J.; Li, J.; Liu, X. Application Status and Development Trend of Continuous Casting Reduction Technology: A Review. Processes 2022, 10, 2669.

25. Mills, K.C. Structure and Properties of Slags Used in the Continuous Casting of Steel: Part 1 Conventional Mould Powders. ISIJ Int. 2016, 56, 1–13.

26. Zhang, L.; Thomas, B.G.; Cai, K.; Cui, J.; Zhu, L. Inclusion Investigation during Clean Steel Production at Baosteel. In Isstech-Conference Proceedings; Iron and Steel Society: Warrendale, PA, USA, 2003; pp. 141–156.

27. Teshima, T.; Kubota, J.; Suzuki, M.; Ozawa, K.; Masaoka, T.; Miyahara, S. Influence of Casting Conditions on Molten Steel Flow in Continuous Casting Mold at High Speed Casting of Slabs. Tetsu-to-Hagane 1993, 79, 576–582.

28. Long, J.; Wang, X.; Zhou, W.; Zhang, J.; Dai, D.; Zhu, G. A Comprehensive Review of Signal Processing and Machine Learning Technologies for UHF PD Detection and Diagnosis (I): Preprocessing and Localization Approaches. IEEE Access 2021, 9, 69876–69904.

29. Huang, N.E.; Shen, Z.; Long, S.R.; Wu, M.C.; Shih, H.H.; Zheng, Q.; Yen, N.-C.; Tung, C.C.; Liu, H.H. The Empirical Mode Decomposition and the Hilbert Spectrum for Nonlinear and Non-Stationary Time Series Analysis. Proc. R. Soc. Lond. A 1998, 454, 903–995.

30. Junaid, M.; Ali, S.; Siddiqui, I.F.; Nam, C.; Qureshi, N.M.F.; Kim, J.; Shin, D.R. Performance Evaluation of Data-Driven Intelligent Algorithms for Big Data Ecosystem. Wirel. Pers. Commun. 2022, 126, 2403–2423.

31. Kramer, O. K-Nearest Neighbors. In Dimensionality Reduction with Unsupervised Nearest Neighbors; Intelligent Systems Reference Library; Springer: Berlin/Heidelberg, Germany, 2013; Volume 51, pp. 13–23. ISBN 978-3-642-38651-0.

32. Chorowski, J.; Wang, J.; Zurada, J.M. Review and Performance Comparison of SVM- and ELM-Based Classifiers. Neurocomputing 2014, 128, 507–516.

33. Menezes, A.G.C.; Araujo, M.M.; Almeida, O.M.; Barbosa, F.R.; Braga, A.P.S. Induction of Decision Trees to Diagnose Incipient Faults in Power Transformers. IEEE Trans. Dielectr. Electr. Insul. 2022, 29, 279–286.

34. Schapire, R.E. The Strength of Weak Learnability. Mach. Learn. 1990, 5, 197–227.

35. Saravanan, N.; Siddabattuni, V.N.S.K.; Ramachandran, K.I. Fault Diagnosis of Spur Bevel Gear Box Using Artificial Neural Network (ANN), and Proximal Support Vector Machine (PSVM). Appl. Soft Comput. 2010, 10, 344–360.

36. Rajakarunakaran, S.; Venkumar, P.; Devaraj, D.; Rao, K.S.P. Artificial Neural Network Approach for Fault Detection in Rotary System. Appl. Soft Comput. 2008, 8, 740–748.

37. Mqadi, N.M.; Naicker, N.; Adeliyi, T. Solving Misclassification of the Credit Card Imbalance Problem Using Near Miss. Math. Probl. Eng. 2021, 2021, 7194728.

38. Fernandez, A.; Garcia, S.; Herrera, F.; Chawla, N.V. SMOTE for Learning from Imbalanced Data: Progress and Challenges, Marking the 15-Year Anniversary. J. Artif. Intell. Res. 2018, 61, 863–905.

39. Dablain, D.; Krawczyk, B.; Chawla, N.V. DeepSMOTE: Fusing Deep Learning and SMOTE for Imbalanced Data. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–15.

40. Abdolmaleki, A.; Rezvani, M.H. An Optimal Context-Aware Content-Based Movie Recommender System Using Genetic Algorithm: A Case Study on MovieLens Dataset. J. Exp. Theor. Artif. Intell. 2022, 1–27.

41. Fawcett, T. An Introduction to ROC Analysis. Pattern Recognit. Lett. 2006, 27, 861–874.

42. Muschelli, J. ROC and AUC with a Binary Predictor: A Potentially Misleading Metric. J. Classif. 2020, 37, 696–708.

43. Hills, J.; Lines, J.; Baranauskas, E.; Mapp, J.; Bagnall, A. Classification of Time Series by Shapelet Transformation. Data Min. Knowl. Discov. 2013, 28, 851–881.

44. Liu, L.-L.; Wan, X.; Gao, Z.; Li, X.; Feng, B. Research on Modelling and Optimization of Hot Rolling Scheduling. J. Ambient. Intell. Hum. Comput. 2019, 10, 1201–1216.

| Sample Cast No. | Status | Parameter Settings | Value | Parameter Output | Range |
|---|---|---|---|---|---|
| 210CS5300 | Defective | CS (m/min) | 1.31 | CS | 1.21–1.31 |
| 210CS5300 | Defective | MLF (mm) | 46 | MLF | 44.8–47.5 |
| 210CS5300 | Defective | CW1 (L/min) | 2520 | CW1 | 2504–2530 |
| 210CS5300 | Defective | CW2 (L/min) | 360 | CW2 | 357–362 |
| 210CS5300 | Defective | CW3 (L/min) | 2520 | CW3 | 2503–2531 |
| 210CS5300 | Defective | CW4 (L/min) | 360 | CW4 | 357–363 |
| 205CS5400 | Normal | CS (m/min) | 1.2 | CS | 1.198–1.262 |
| 205CS5400 | Normal | MLF (mm) | 50 | MLF | 45.7–52.2 |
| 205CS5400 | Normal | CW1 (L/min) | 2450 | CW1 | 2430–2466 |
| 205CS5400 | Normal | CW2 (L/min) | 360 | CW2 | 358–362 |
| 205CS5400 | Normal | CW3 (L/min) | 2450 | CW3 | 2431–2458 |
| 205CS5400 | Normal | CW4 (L/min) | 360 | CW4 | 357–362 |
| 210CS0200 | Normal | CS (m/min) | 1.2 | CS | 1.012–1.263 |
| 210CS0200 | Normal | MLF (mm) | 90 | MLF | 87.4–93.4 |
| 210CS0200 | Normal | CW1 (L/min) | 400 | CW1 | 389–407 |
| 210CS0200 | Normal | CW2 (L/min) | 1950 | CW2 | 1945–1956 |
| 210CS0200 | Normal | CW3 (L/min) | 400 | CW3 | 398.7–401.8 |
| 210CS0200 | Normal | CW4 (L/min) | 1950 | CW4 | 1947–1956.2 |
| 210CS5100 | Defective | CS (m/min) | 1.22 | CS | 1.128–1.221 |
| 210CS5100 | Defective | MLF (mm) | 46 | MLF | 44.3–48.2 |
| 210CS5100 | Defective | CW1 (L/min) | 2520 | CW1 | 2512–2540 |
| 210CS5100 | Defective | CW2 (L/min) | 360 | CW2 | 358–362 |
| 210CS5100 | Defective | CW3 (L/min) | 2520 | CW3 | 2509–2530 |
| 210CS5100 | Defective | CW4 (L/min) | 360 | CW4 | 354–363 |

| | Actual label: 0 | Actual label: 1 |
|---|---|---|
| Predicted label: 0 | TN | FN |
| Predicted label: 1 | FP | TP |

| Case | Model | Recall: Normal | Recall: Defective | Macro-F1 | Weighted-F1 | ROC AUC | Accuracy |
|---|---|---|---|---|---|---|---|
| Case 4-NM | RF | 0.76 | 0.41 | 0.53 | 0.83 | 0.64 | 0.77 |
| Case 4-NM | DT | 0.68 | 0.42 | 0.45 | 0.70 | 0.57 | 0.67 |
| Case 4-NM | SVM-linear | 0.78 | 0.25 | 0.47 | 0.82 | 0.60 | 0.75 |
| Case 4-NM | SVM-sigmoid | 0.79 | 0.27 | 0.49 | 0.80 | 0.55 | 0.77 |
| Case 4-NM | ANN | 0.98 | 0.11 | 0.56 | 0.93 | 0.63 | 0.94 |
| Case 4-NM | KNN | 0.86 | 0.19 | 0.50 | 0.83 | 0.54 | 0.83 |
| Case 4-NM | AdaBoost | 0.98 | 0.11 | 0.56 | 0.93 | 0.63 | 0.94 |
| Case 3-NM | RF | 0.71 | 0.42 | 0.47 | 0.79 | 0.62 | 0.70 |
| Case 3-NM | DT | 0.66 | 0.58 | 0.45 | 0.63 | 0.60 | 0.65 |
| Case 3-NM | SVM-linear | 0.72 | 0.27 | 0.45 | 0.76 | 0.51 | 0.70 |
| Case 3-NM | SVM-sigmoid | 0.82 | 0.28 | 0.51 | 0.87 | 0.61 | 0.82 |
| Case 3-NM | ANN | 0.89 | 0.18 | 0.51 | 0.86 | 0.54 | 0.86 |
| Case 3-NM | KNN | 0.69 | 0.46 | 0.46 | 0.76 | 0.57 | 0.68 |
| Case 3-NM | AdaBoost | 0.96 | 0.11 | 0.54 | 0.92 | 0.60 | 0.92 |
| Case 2-NM | RF | 0.55 | 0.59 | 0.40 | 0.67 | 0.62 | 0.55 |
| Case 2-NM | DT | 0.53 | 0.58 | 0.36 | 0.58 | 0.55 | 0.53 |
| Case 2-NM | SVM-linear | 0.59 | 0.43 | 0.45 | 0.63 | 0.51 | 0.58 |
| Case 2-NM | SVM-sigmoid | 0.79 | 0.22 | 0.49 | 0.83 | 0.55 | 0.77 |
| Case 2-NM | ANN | 0.48 | 0.46 | 0.36 | 0.51 | 0.52 | 0.49 |
| Case 2-NM | KNN | 0.57 | 0.42 | 0.40 | 0.67 | 0.53 | 0.55 |
| Case 2-NM | AdaBoost | 0.88 | 0.16 | 0.50 | 0.88 | 0.58 | 0.85 |
| Case 1-NM | RF | 0.32 | 0.83 | 0.28 | 0.44 | 0.58 | 0.32 |
| Case 1-NM | DT | 0.41 | 0.70 | 0.34 | 0.52 | 0.57 | 0.39 |
| Case 1-NM | SVM-linear | 0.48 | 0.61 | 0.33 | 0.56 | 0.56 | 0.49 |
| Case 1-NM | SVM-sigmoid | 0.50 | 0.48 | 0.36 | 0.63 | 0.51 | 0.49 |
| Case 1-NM | ANN | 0.28 | 0.87 | 0.22 | 0.39 | 0.52 | 0.34 |
| Case 1-NM | KNN | 0.62 | 0.42 | 0.34 | 0.57 | 0.55 | 0.43 |
| Case 1-NM | AdaBoost | 0.58 | 0.44 | 0.40 | 0.70 | 0.54 | 0.58 |
| Case 0 | RF | 1.00 | 0.00 | 0.49 | 0.93 | 0.65 | 0.96 |
| Case 0 | DT | 1.00 | 0.00 | 0.49 | 0.93 | 0.63 | 0.96 |
| Case 0 | SVM-linear | 0.96 | 0.08 | 0.52 | 0.92 | 0.51 | 0.92 |
| Case 0 | SVM-sigmoid | 0.96 | 0.06 | 0.50 | 0.92 | 0.50 | 0.92 |
| Case 0 | ANN | 1.00 | 0.00 | 0.49 | 0.93 | 0.55 | 0.96 |
| Case 0 | KNN | 1.00 | 0.00 | 0.49 | 0.93 | 0.52 | 0.96 |
| Case 0 | AdaBoost | 0.95 | 0.12 | 0.53 | 0.91 | 0.61 | 0.91 |

| Case | Model | Computing Time (s) |
|---|---|---|
| Case 4-NM | RF | 0.087 |
| Case 4-NM | DT | 0.039 |
| Case 4-NM | SVM-linear | 8.957 |
| Case 4-NM | SVM-sigmoid | 0.596 |
| Case 4-NM | ANN | 0.263 |
| Case 4-NM | KNN | 0.075 |
| Case 4-NM | AdaBoost | 0.069 |

| Case | Model | Recall: Normal | Recall: Defective | Macro-F1 | Weighted-F1 | ROC AUC | Accuracy |
|---|---|---|---|---|---|---|---|
| Case 4-NM | RF | 0.76 | 0.41 | 0.53 | 0.83 | 0.64 | 0.77 |
| Case 4-RUS | RF | 0.68 | 0.47 | 0.45 | 0.77 | 0.61 | 0.67 |
| Case 4-ROS | RF | 0.99 | 0.00 | 0.49 | 0.93 | 0.63 | 0.93 |
| Case 4-SMOTE | RF | 0.94 | 0.01 | 0.50 | 0.92 | 0.65 | 0.94 |

| Case | Model | Computing Time (s) |
|---|---|---|
| Case 4-NM | RF | 0.087 |
| Case 4-RUS | RF | 0.089 |
| Case 4-ROS | RF | 0.093 |
| Case 4-SMOTE | RF | 0.091 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Gao, Z.; Sun, J.; Liu, L. Machine-Learning Algorithms for Process Condition Data-Based Inclusion Prediction in Continuous-Casting Process: A Case Study. Sensors 2023, 23, 6719. https://doi.org/10.3390/s23156719
