Weak Localization of Radiographic Manifestations in Pulmonary Tuberculosis from Chest X-ray: A Systematic Review

Abstract

1. Introduction

1.1. Tuberculosis (TB)

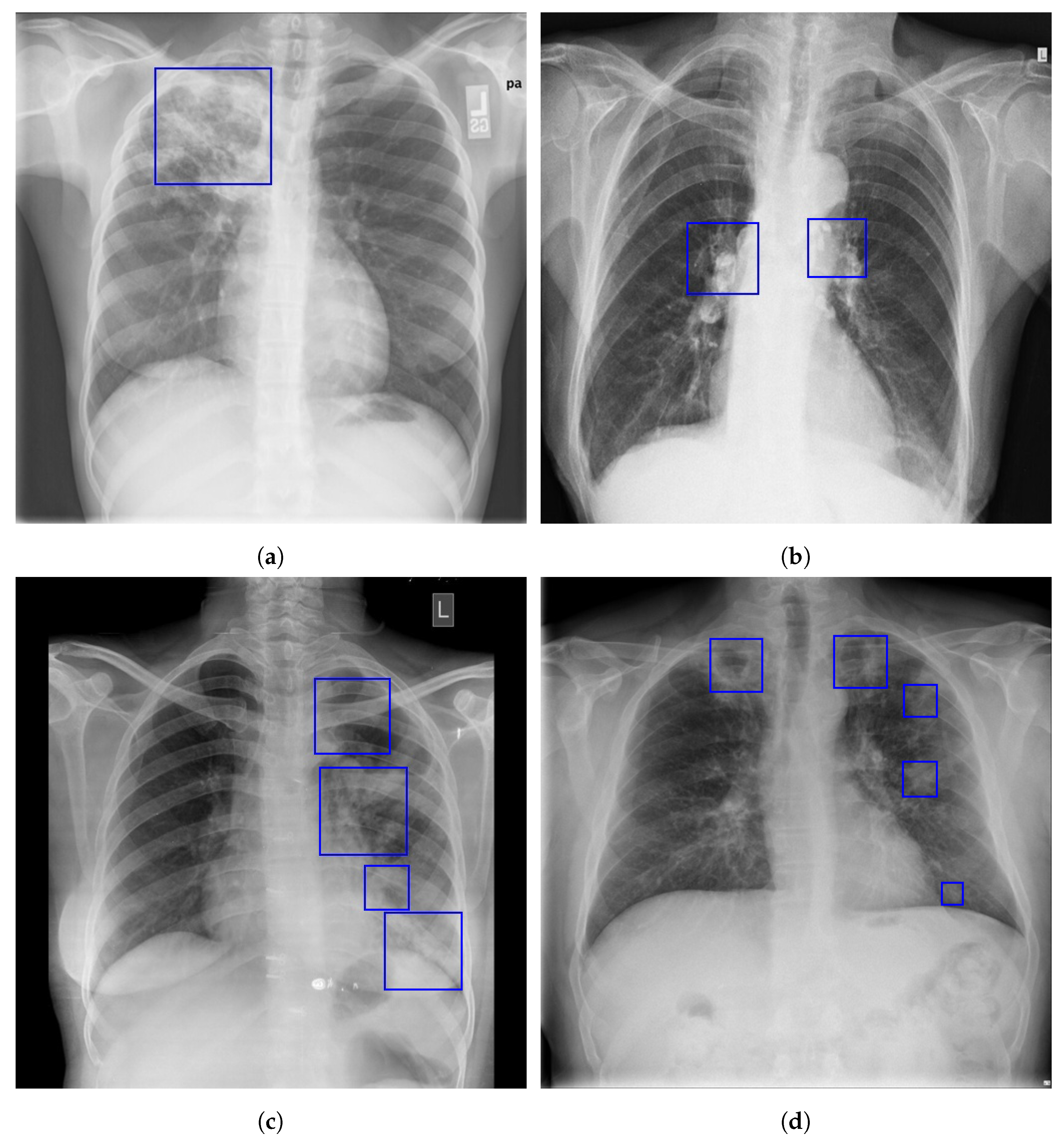

1.2. Radiographic Features of Pulmonary TB

1.3. Localization of Pulmonary Tuberculosis in a Chest X-ray

2. Related Work

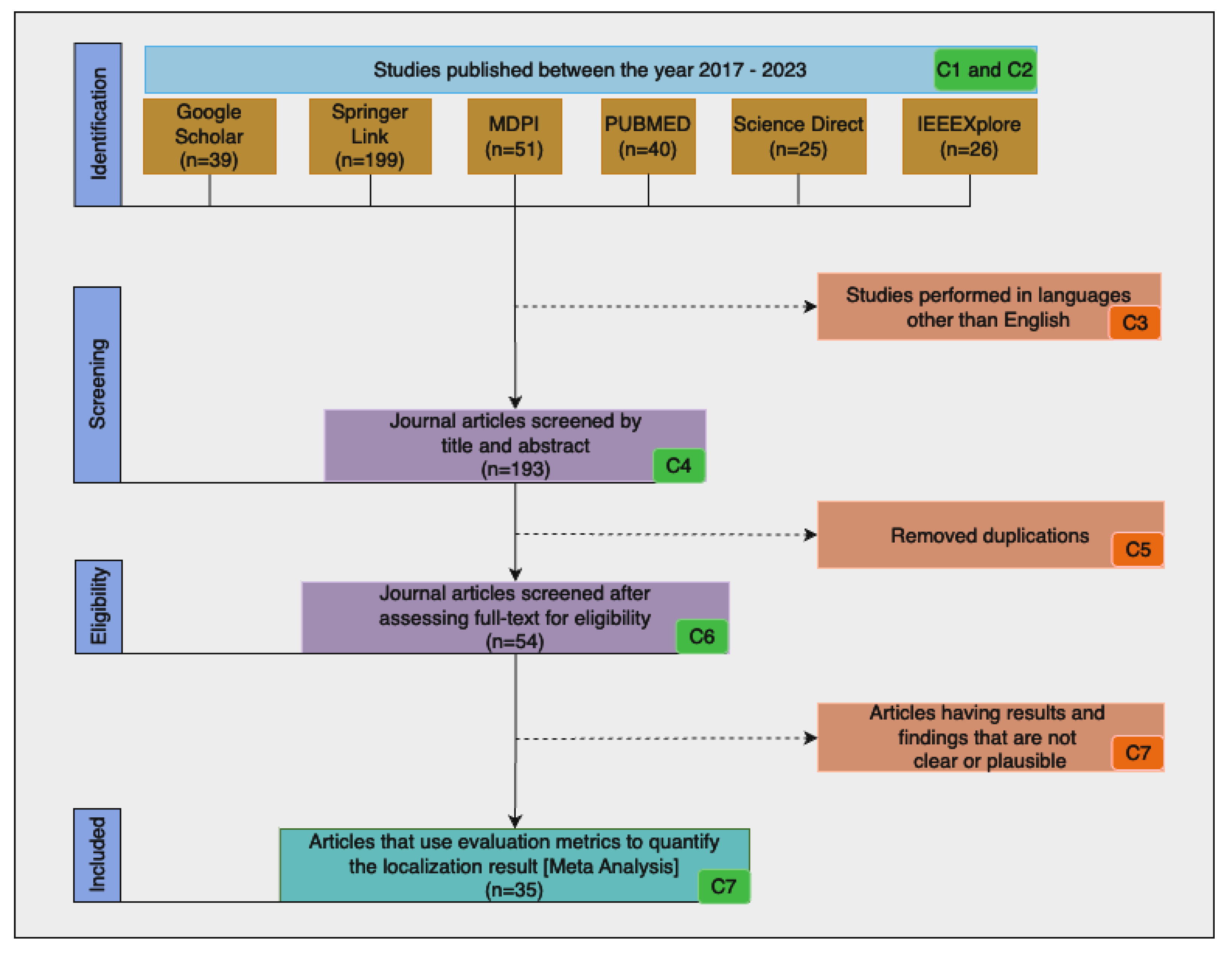

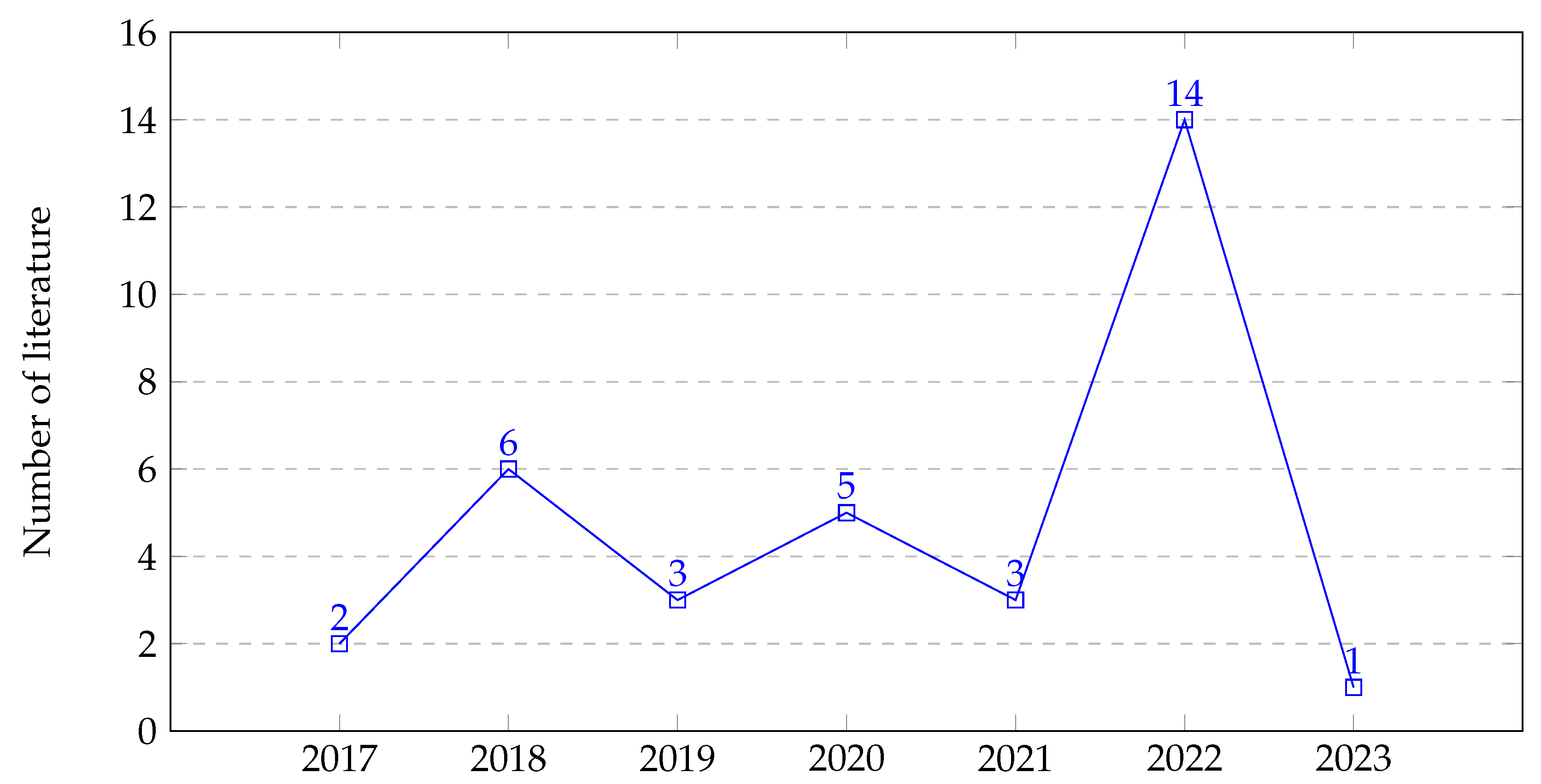

3. Methodology

Algorithm 1: The steps followed to complete the review as per the PRISMA guideline.

3.1. Research Questions

- RQ1: Are there any freely available labeled chest radiography datasets? What are their characteristics?

- RQ2: What are the challenges in accurately localizing pulmonary tuberculosis in a chest X-ray?

- RQ3: What weakly supervised learning techniques are used to localize pulmonary tuberculosis in a chest X-ray?

- RQ4: What are the limitations and challenges in the weakly supervised localization of pulmonary TB in a chest X-ray?

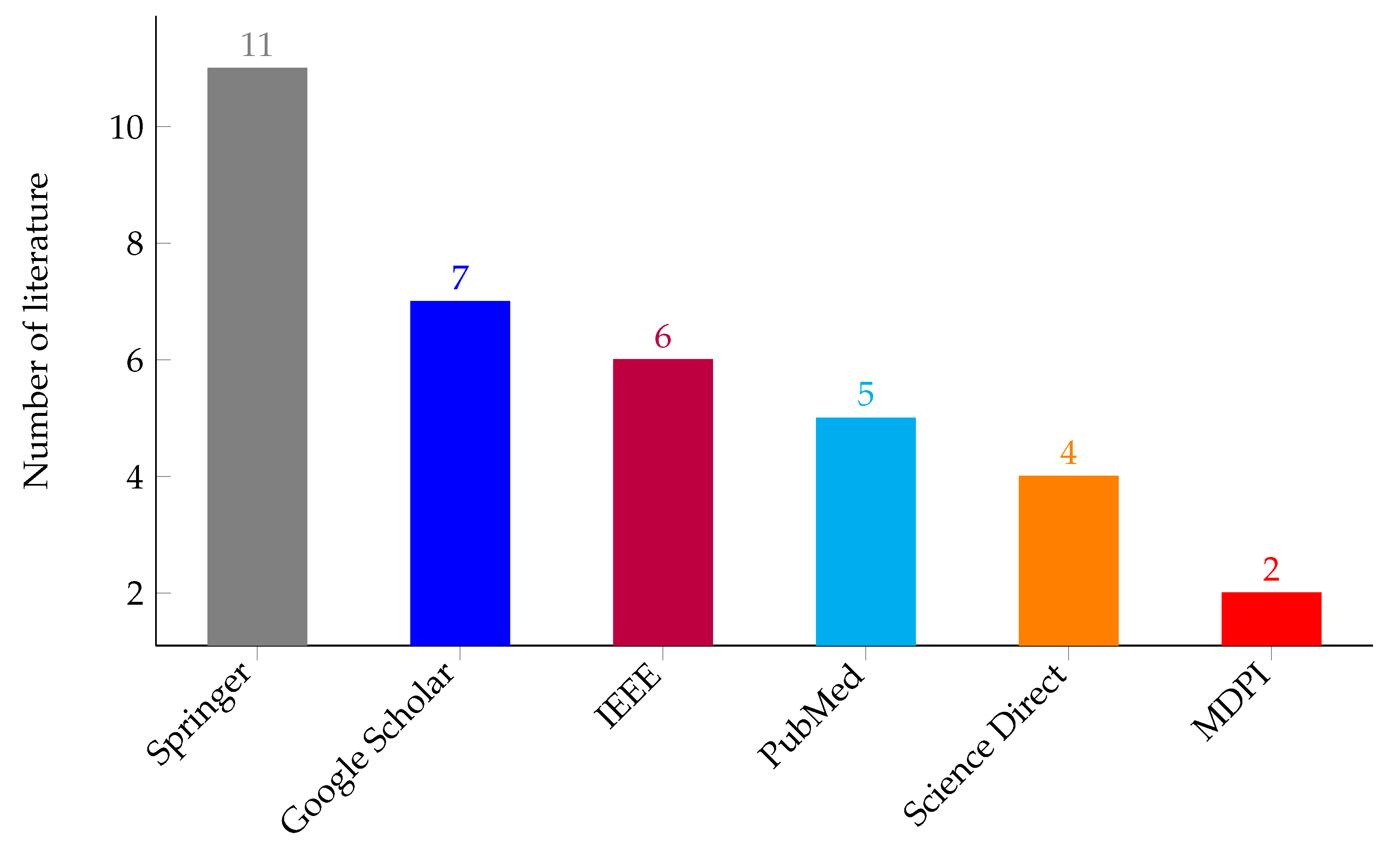

3.2. Search Strategies

Algorithm 2: The pseudo-code used to create search strings.
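As an illustration of how Boolean search strings of this kind are commonly assembled, the following is a minimal sketch and not the review's actual pseudo-code. The keyword groups are hypothetical, chosen only to echo the research questions above, and the function name `build_search_string` is likewise an assumption.

```python
# Hypothetical keyword groups (assumptions for illustration only;
# these are NOT the search terms used in the review).
KEYWORD_GROUPS = {
    "supervision": ["weakly supervised", "weak localization"],
    "disease": ["pulmonary tuberculosis", "tuberculosis"],
    "modality": ["chest X-ray", "chest radiograph"],
}


def build_search_string(groups):
    """Join synonyms within a group with OR, then join the groups with AND."""
    clauses = []
    for terms in groups.values():
        quoted = " OR ".join(f'"{term}"' for term in terms)
        clauses.append(f"({quoted})")
    return " AND ".join(clauses)


if __name__ == "__main__":
    print(build_search_string(KEYWORD_GROUPS))
```

Grouping synonyms with OR and combining groups with AND keeps each query readable and makes it straightforward to adapt the same terms to the syntax of different bibliographic databases.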

4. Publicly Available Datasets for Tuberculosis Localization in Chest X-ray

- NIAID [36] contains 1299 instances in total from five different countries (Azerbaijan, Belarus, Moldova, Georgia, and Romania), 976 (75.1%) of which are multi- or extensively drug-resistant and 38.2% of which contain X-ray images.

- CheXpert [40] is a large public dataset of 224,316 chest radiographs from 65,240 patients. Each radiograph is labeled as positive, negative, or uncertain for 14 observations (atelectasis, cardiomegaly, consolidation, edema, pleural effusion, pneumonia, pneumothorax, enlarged cardiomediastinum, lung lesion, lung opacity, pleural other, fracture, support devices, and no finding).

- VinDr-CXR [41] is drawn from 100,000 raw images collected from two major hospitals in Vietnam. The publicly released dataset has 18,000 images annotated by 17 expert radiologists, with 22 local labels (rectangles enclosing abnormalities) and 6 global labels (suspected diseases). It is divided into a training set of 15,000 images and a test set of 3000 images. Each training scan was labeled independently by three radiologists, whereas each test scan was labeled by the consensus of five radiologists.

- MIMIC-CXR [42] comprises 227,835 images for 65,379 patients who visited the emergency room at Beth Israel Deaconess Medical Center between 2011 and 2016. It is the largest dataset and includes semi-structured free-text radiology reports, which describe the radiological findings in the images and were written contemporaneously during routine clinical care.

- Shenzhen [38] contains 662 CXR images, comprising 326 images for normal cases and 336 images depicting TB. These CXR images, which include pediatric CXR, were all taken within a month.

- Montgomery [38] has 138 frontal CXR images, comprising 58 images showing TB and 80 normal cases. It was gathered in collaboration with the Department of Health and Human Services of Montgomery County, Maryland, USA.

- Indiana [43] is a publicly accessible dataset gathered from various hospitals and provided to the Indiana University School of Medicine. It contains 7470 CXR images (frontal and lateral) and 3955 related reports. The CXR images in this collection depict a number of disorders, including pleural effusion, cardiac hypertrophy, opacity, and pulmonary edema.

- ChestX-ray8 [44] contains 108,948 frontal-view CXR images in total, of which 24,636 have one or more anomalies. The remaining 84,312 images are from normal patients. The dataset was gathered between 1992 and 2015. Each image can carry multiple labels drawn from eight diseases: pneumothorax, cardiomegaly, effusion, atelectasis, mass, pneumonia, infiltration, and nodule. The labels were text-mined from the associated radiological reports using natural language processing (NLP) algorithms.

- ChestX-ray14 [44] is an updated version of ChestX-ray8 that adds six common chest abnormalities (hernia, fibrosis, pleural thickening, consolidation, emphysema, and edema). ChestX-ray14 has 112,120 frontal-view CXR images from 30,805 different patients, of which 51,708 have one or more abnormalities and the remaining 60,412 do not. Like ChestX-ray8, it was labeled using NLP methods.

- Pediatric-CXR [45] is made up of 5856 chest X-ray scans of pediatric patients from the Guangzhou Women and Children’s Medical Center in China, 1583 of which are normal cases and 4273 of which have pneumonia.

- PadChest (Pathology Detection in Chest radiographs) [46] was gathered from 2009 to 2017. It contains 168,861 CXR images from 67,000 patients at the San Juan Hospital in Spain.

- Japanese Society of Radiological Technology (JSRT) [47] was gathered in 1998 by the Japanese Society of Radiological Technology in coordination with the Japanese Radiological Society from 13 Japanese institutions and 1 American institution. There are 247 posteroanterior CXR images in all, comprising 93 non-nodule CXR images, 100 CXRs with malignant nodules, and 54 with benign nodules. Data from JSRT include metadata, such as the nodule location, gender, age, and nodule diagnosis (malignant/benign). The CXR image size is 2048 × 2048 pixels with a spatial resolution of 0.175 mm/pixel and 12-bit gray levels.

- RSNA-Pneumonia-CXR [48] was gathered by the Radiological Society of North America (RSNA) and the Society of Thoracic Radiology (STR). The dataset contains 30,000 CXR images, of which 15,000 show pneumonia or related findings such as infiltration and consolidation.

- Belarus dataset [49] is a CXR dataset compiled for a study on drug resistance started by the National Institute of Allergy and Infectious Diseases, Ministry of Health, Republic of Belarus. It comprises 306 CXR images of 169 patients.
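The counts quoted in the descriptions above can be cross-checked with simple arithmetic. The sketch below uses only figures transcribed from this list; the dictionary layout and the function name `inconsistent_datasets` are illustrative assumptions.

```python
# Counts transcribed from the dataset descriptions above:
# name -> (stated total, parts that should add up to the total).
DATASET_COUNTS = {
    "ChestX-ray8": (108_948, [24_636, 84_312]),    # abnormal, normal
    "ChestX-ray14": (112_120, [51_708, 60_412]),   # abnormal, no abnormality
    "Shenzhen": (662, [326, 336]),                 # normal, TB
    "Montgomery": (138, [58, 80]),                 # TB, normal
    "Pediatric-CXR": (5_856, [1_583, 4_273]),      # normal, pneumonia
    "JSRT": (247, [93, 100, 54]),                  # non-nodule, malignant, benign
    "VinDr-CXR (public)": (18_000, [15_000, 3_000]),  # training, test
}


def inconsistent_datasets(counts):
    """Return the names of datasets whose parts do not sum to the stated total."""
    return [name for name, (total, parts) in counts.items() if sum(parts) != total]


# NIAID: 38.2% of the 1299 instances contain X-ray images,
# and 976 of the 1299 instances are drug-resistant.
NIAID_WITH_XRAY = round(0.382 * 1299)
NIAID_DRUG_RESISTANT_PCT = round(100 * 976 / 1299, 1)

if __name__ == "__main__":
    print(inconsistent_datasets(DATASET_COUNTS))
    print(NIAID_WITH_XRAY, NIAID_DRUG_RESISTANT_PCT)
```

The NIAID figure obtained this way, about 496 instances with X-ray images, corresponds to the quantity reported for NIAID in the dataset table.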

| Dataset | Quantity and Size in Pixels | Cases/Findings | Pixel-Level/Bounding Box Annotation |
|---|---|---|---|
| NIAID [36] | 496 | TB | N/A |
| TBX11K [37] | 11,200 images with 512 × 512 pixels | Healthy, sick but non-TB, active TB, latent TB, and uncertain | N/A |
| CheXpert [40] | 224,316 | Atelectasis, cardiomegaly, consolidation, edema, pleural effusion, pneumonia, pneumothorax, enlarged cardiomediastinum, lung lesion, lung opacity, pleural other, fracture, support devices, and no finding | N/A |
| VinDr-CXR [41] | 18,000 | Aortic enlargement, atelectasis, cardiomegaly, calcification, clavicle fracture, consolidation, edema, emphysema, enlarged PA, interstitial lung disease (ILD), infiltration, lung cavity, lung cyst, lung opacity, mediastinal shift, nodule/mass, pulmonary fibrosis, pneumothorax, pleural thickening, pleural effusion, rib fracture, other lesions, lung tumor, pneumonia, tuberculosis, other diseases, chronic obstructive pulmonary disease (COPD), and no finding | Available |
| MIMIC-CXR [42] | 227,835 images with 2544 × 305 pixels | 14 findings | N/A |
| Montgomery [38] | 138 images with 4020 × 4892 pixels | TB and normal | N/A |
| Shenzhen [38] | 662 images with 3000 × 3000 pixels | TB and normal | N/A |
| Indiana [43] | 7470 images with 512 × 512 pixels | 10 findings including opacity, cardiomegaly, pleural effusion, and pulmonary edema | N/A |
| ChestX-ray8 [44] | 108,948 images with 1024 × 1024 pixels | Pneumothorax, cardiomegaly, effusion, atelectasis, mass, pneumonia, infiltration, and nodule | Available |
| ChestX-ray14 [44] | 112,120 images with 1024 × 1024 pixels | Pneumothorax, cardiomegaly, effusion, atelectasis, mass, pneumonia, infiltration, nodule, hernia, fibrosis, pleural thickening, consolidation, emphysema, and edema | N/A |
| Pediatric-CXR [45] | 5856 | Normal, bacterial pneumonia, viral pneumonia | N/A |
| PadChest [46] | 168,861 | 193 findings | N/A |
| JSRT [47] | 247 images with 2048 × 2048 pixels | Non-nodule, malignant nodules, and benign nodules | Available |
| RSNA-Pneumonia-CXR [48] | 30,000 | Pneumonia, infiltration, and consolidation | N/A |
| Belarus dataset [49] | 306 images with 2248 × 2248 pixels | TB and normal | N/A |
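To relate the table above to RQ1, the rows can be encoded as records and filtered. This is a minimal sketch: the `Dataset` class and its field names are illustrative assumptions, the values are transcribed from the table, and `names_tb` is true only when TB is named explicitly in the Cases/Findings column (rows that give only a count of findings are marked false).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dataset:
    name: str
    n_images: int
    names_tb: bool               # TB named explicitly in the Cases/Findings column
    has_region_annotation: bool  # last column of the table reads "Available"


# Values transcribed from the dataset table above.
DATASETS = [
    Dataset("NIAID", 496, True, False),
    Dataset("TBX11K", 11_200, True, False),
    Dataset("CheXpert", 224_316, False, False),
    Dataset("VinDr-CXR", 18_000, True, True),
    Dataset("MIMIC-CXR", 227_835, False, False),
    Dataset("Montgomery", 138, True, False),
    Dataset("Shenzhen", 662, True, False),
    Dataset("Indiana", 7_470, False, False),
    Dataset("ChestX-ray8", 108_948, False, True),
    Dataset("ChestX-ray14", 112_120, False, False),
    Dataset("Pediatric-CXR", 5_856, False, False),
    Dataset("PadChest", 168_861, False, False),
    Dataset("JSRT", 247, False, True),
    Dataset("RSNA-Pneumonia-CXR", 30_000, False, False),
    Dataset("Belarus", 306, True, False),
]

# Datasets whose annotation column reads "Available".
ANNOTATED = [d.name for d in DATASETS if d.has_region_annotation]

# Datasets that both name TB and provide region-level annotation.
TB_AND_ANNOTATED = [d.name for d in DATASETS if d.names_tb and d.has_region_annotation]

if __name__ == "__main__":
    print(ANNOTATED)
    print(TB_AND_ANNOTATED)
```

Read this way, the table marks region-level annotation as available for three datasets, and only one of them also names tuberculosis among its findings, which illustrates why weak supervision is attractive for TB localization.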

5. Weakly Supervised Segmentation and Localization

5.1. Self-Training Weakly Supervised Segmentation

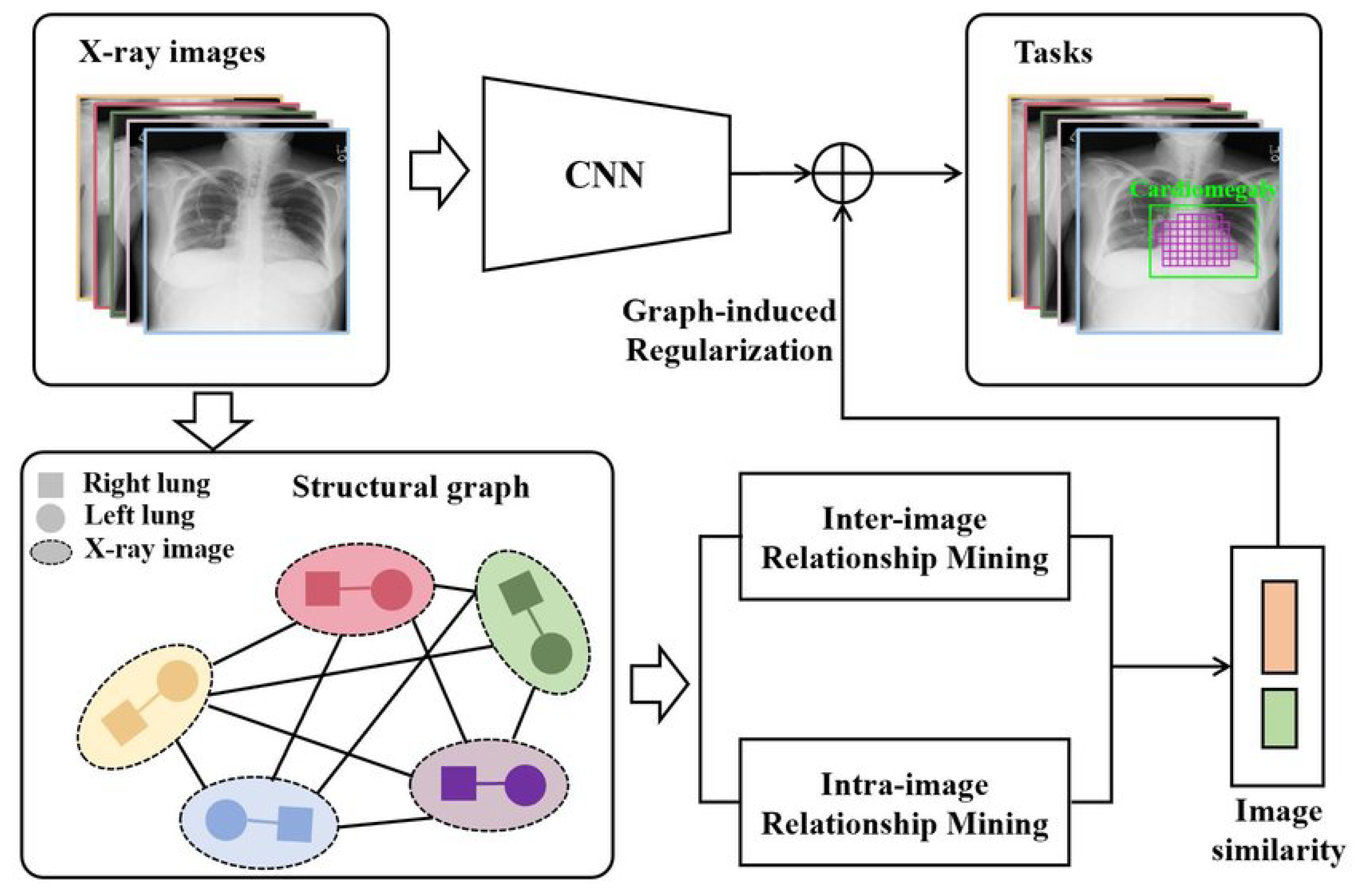

5.2. Graphical-Model-Based Weakly Supervised Segmentation

5.3. Variants of Multiple-Instance Learning (MIL) for Weakly Supervised Segmentation

5.4. Weak Localization by Extracting Visualization from Classification Task

5.4.1. Occlusion Sensitivity

5.4.2. Saliency Map

5.4.3. Class Activation Map (CAM)

5.4.4. Grad-CAM (++)

5.4.5. Score-CAM

5.4.6. Class-Selective Relevance Map (CRM)

5.4.7. GSInquire

5.4.8. Attention Mechanism

5.5. Seeding-Based Weakly Supervised Segmentation

6. Discussion

| Author and Year | Dataset | Method |

|---|---|---|

| Wang et al., 2017 [44] | ChestX-ray8 | CAM with Log-Sum-Exp (LSE) [113] |

| Islam et al., 2017 [84] | Indiana, JSRT, and Shenzhen datasets | Occlusion sensitivity [73] |

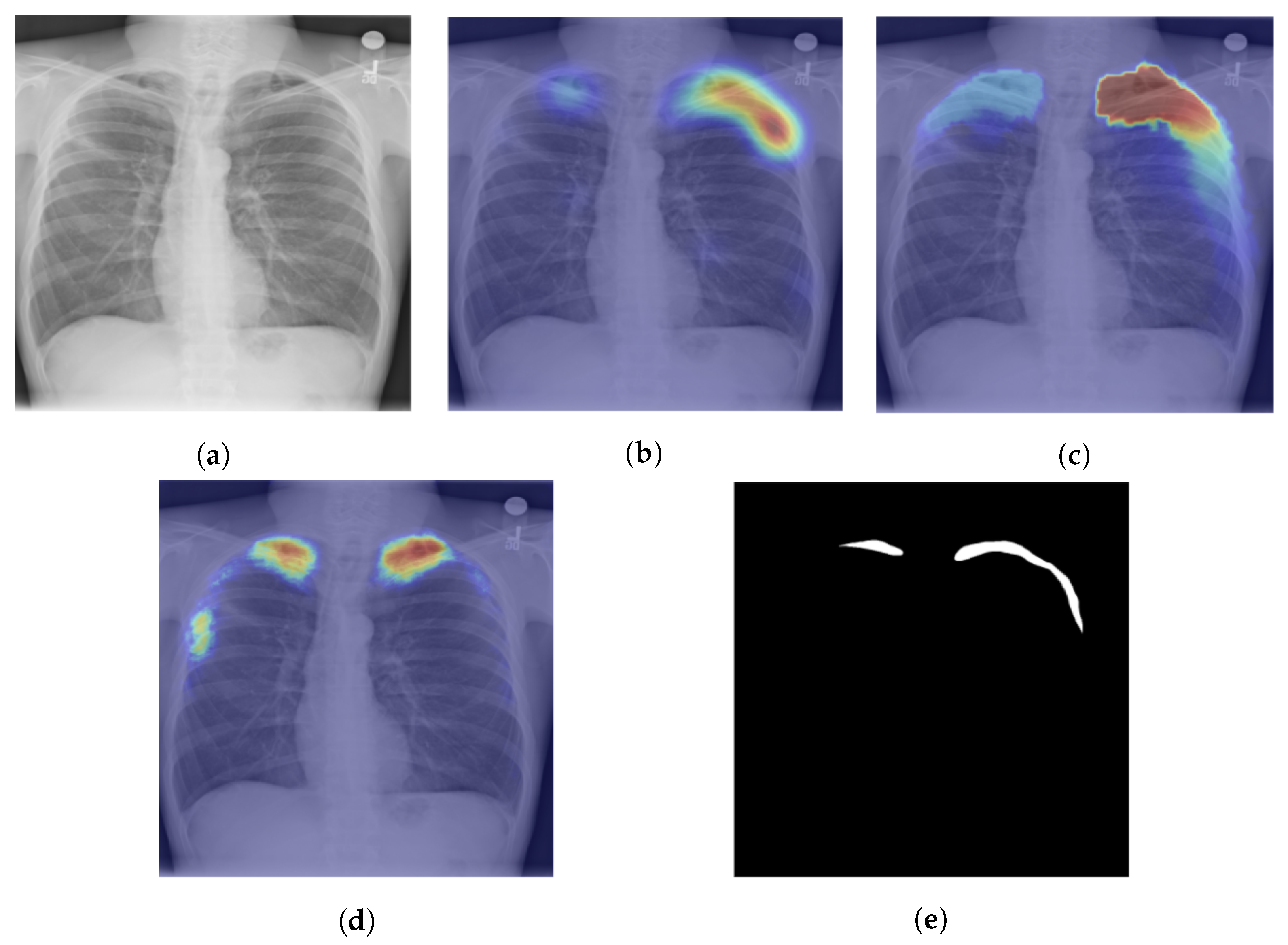

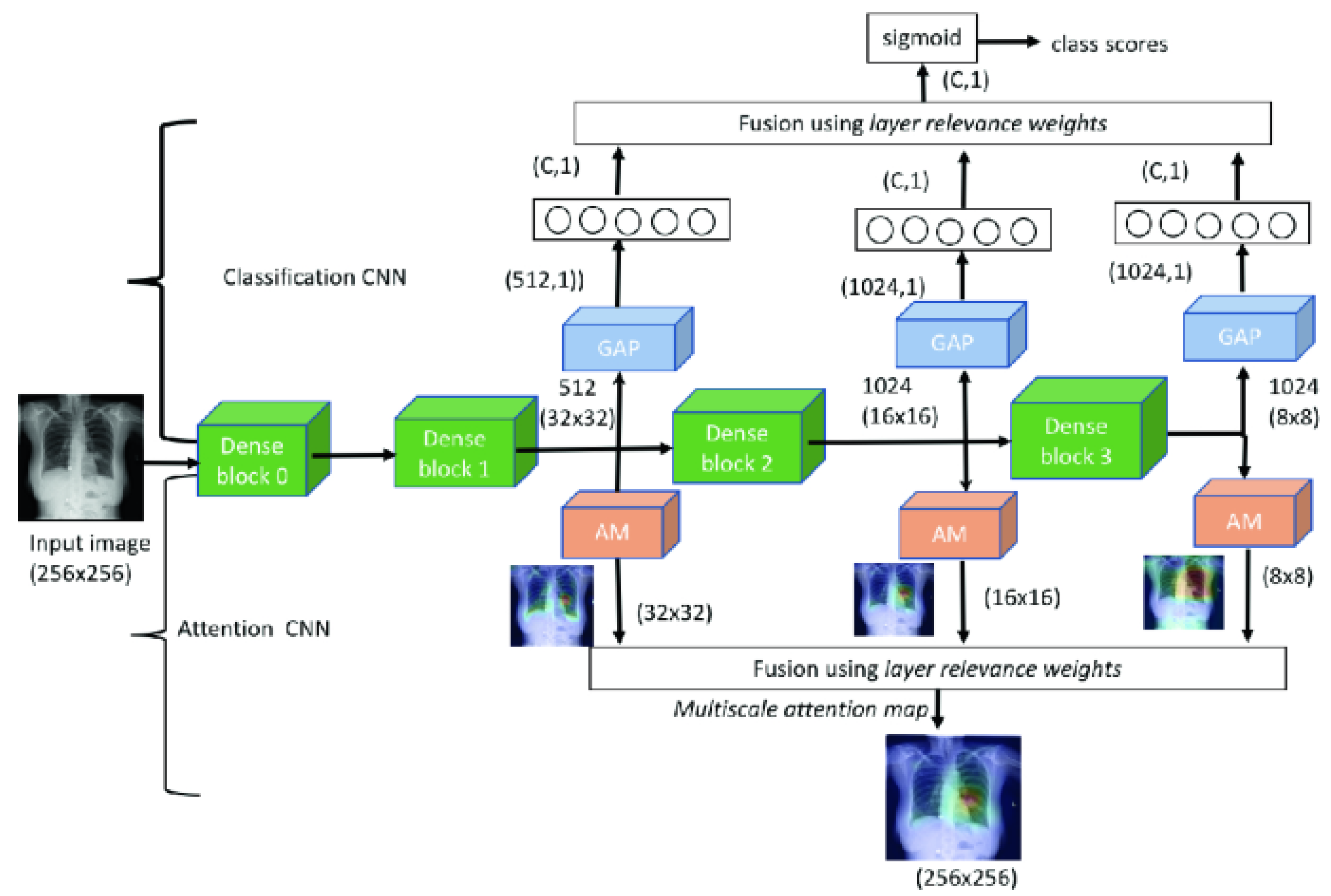

| Seda et al., 2018 [103] | ChestX-ray14 | Multiscale attention map and layer relevance weights |

| Li et al., 2018 [69] | ChestX-ray14 | Multiple-instance learning (MIL) [114] |

| Liu et al., 2018 [115] | Shenzhen, Montgomery, and 2443 frontal chest X-rays from Huiying Medical Technology | CAM [75] |

| Tang et al., 2018 [110] | ChestX-ray14 | CAM [75] with attention-guided iterative refinement |

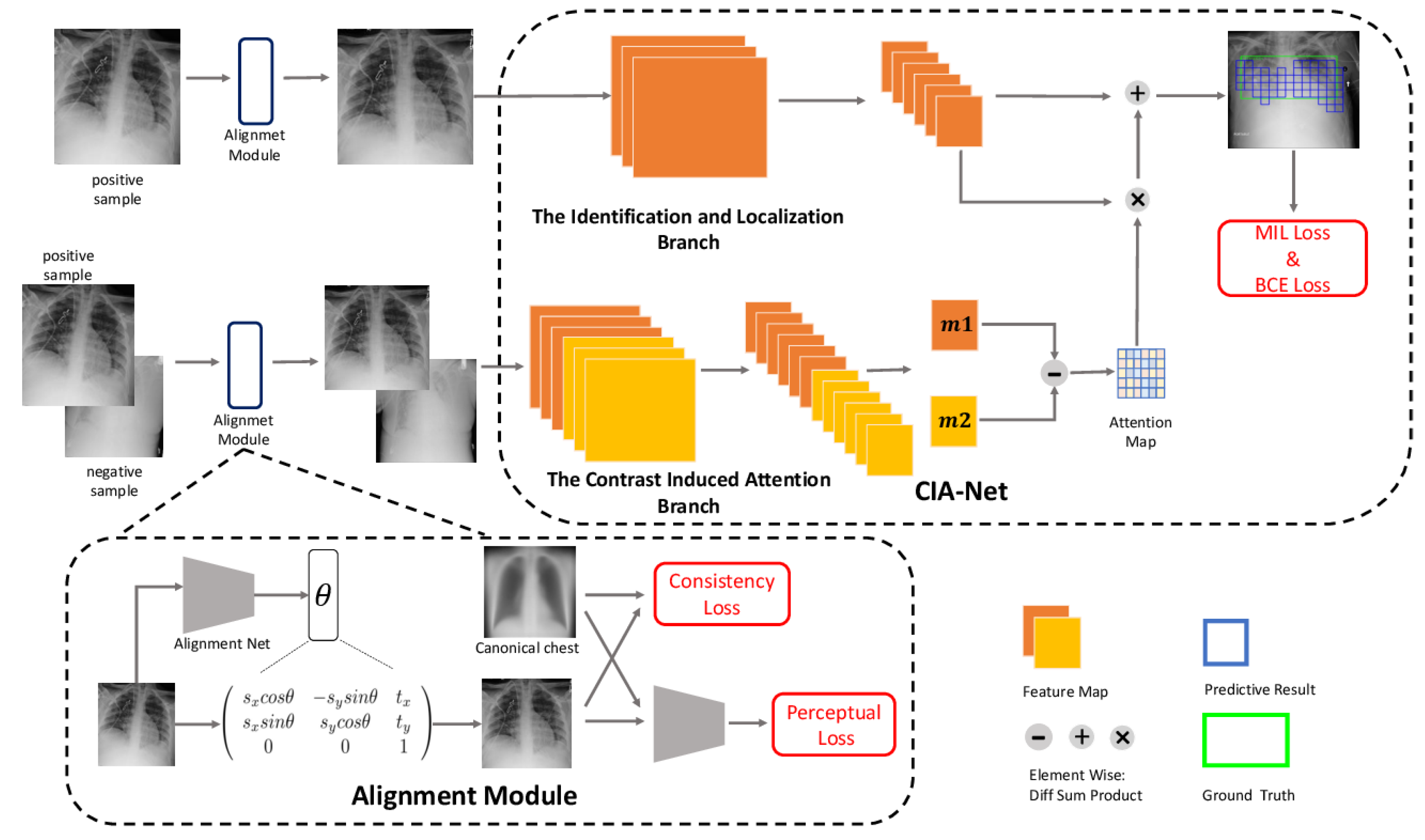

| Wang et al., 2018 [104] | ChestX-ray14, Indiana | Multilevel attentions and saliency-weighted global average pooling |

| Zhou et al., 2018 [116] | ChestX-ray14 | Adaptive DenseNet |

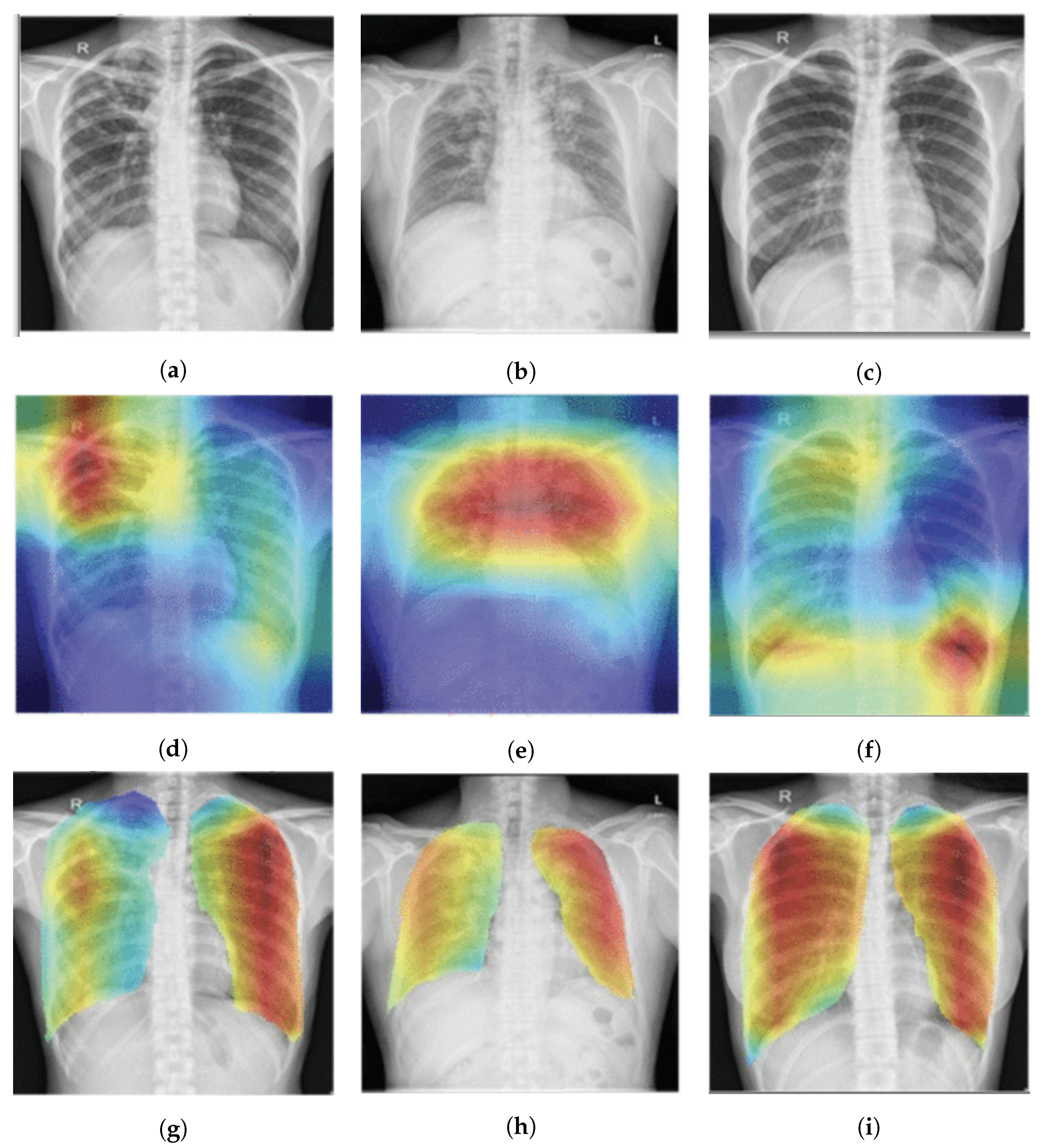

| Pas et al., 2019 [85] | Belarus Tuberculosis Portal, Montgomery, Shenzhen and NIH CXR datasets | Saliency maps [74] and grad-CAMs [76] |

| Liu et al., 2019 [105] | ChestX-ray14 | Attention networks |

| Rahman et al., 2019 [49] | NLM, Belarus, NIAID TB, and RSNA datasets | Score-CAM [78] and t-Distributed Stochastic Neighbor Embedding (t-SNE) [90] |

| Guo and Passi, 2020 [87] | Shenzhen and the NIH CXR dataset | Class activation map (CAM) [75] |

| Singh et al., 2020 [106] | The posteroanterior CXRs from Christian Medical College in Vellore, India | Multiscale attention map |

| Ouyang et al., 2020 [107] | NIH ChestX-ray14 and CheXpert datasets | Hierarchical attention network [80] |

| Chandra et al., 2020 [117] | Montgomery | Fuzzy C-Means (FCM) and K-Means (KM) |

| Viniavskyi, Dobko, and Dobosevych, 2020 [89] | SIIM-ACRPneumothorax | Grad-CAM++ [77], conditional random fields (CRF) [58], and inter-pixel relation network (IRNet) [118] |

| Rajaraman et al., 2021 [91] | TBX11K CXR dataset | Saliency maps and a CRM-based localization algorithm [79] |

| Mamalakis et al., 2021 [119] | Pediatric CXR and Shenzhen | Heatmaps [119] |

| Qi et al., 2021 [64] | Chest-Xray14 | Graph-Regularized Embedding Network (GREN) |

| Wong et al., 2022 [95] | NLM, Belarus, NIAID TB, and RSNA datasets | Visual attention condensers [120] and GSInquire [94] |

| Rajaraman et al., 2022 [92] | The dataset contains 224,316 CXRs collected from 65,240 patients at the Stanford University Hospital in California. | Class-selective relevance maps (CRMs) [79] |

| Nafisah and Muhammad, 2022 [88] | Montgomery, Shenzhen, and Belarus CXH datasets | Grad-CAM [76] and t-SNE visualization technique [90] |

| Bhandari et al., 2022 [121] | Shenzhen, Montgomery, and Belarus datasets | LIME [122], SHAP [123], and Grad-CAM [76] |

| Mehrotra et al., 2022 [124] | Indiana, Shenzhen, and Montgomery | Class activation map (CAM) [75] |

| Zhou et al., 2022 [125] | Shenzhen and Montgomery | Heatmap |

| Rajaraman et al., 2022 [92] | CheXpert CXR and PadChest CXR datasets | Class-selective relevance maps (CRMs) [79], and attention maps [126] |

| Visuña et al., 2022 [127] | COVID-QU-Ex, NIAID, Belarus, RSNA Pneumonia, Shenzhen, and Montgomery | Grad-CAM [76] |

| Prasitpuriprecha et al., 2022 [128] | Shenzhen and Montgomery | Grad-CAMs [76] |

| Souza et al., 2022 [112] | Chest-Xray14 | CAM refined with PCM [129] |

| Malik et al., 2022 [130] | RSNA, Chest-Xray14, and other COVID-19 CXRs | Grad-CAMs [76] |

| Tsai et al., 2022 [131] | Montgomery, Shenzhen, Tbx11k, and Belarus | Grad-CAMs [76] |

| Li et al., 2022 [132] | CheXpert | GL-MLL |

| Pan et al., 2022 [108] | In addition to TBX11K, the author also prepared TBX-Att dataset from TBX11K | Multihead cross attention with attribute reasoning |

| Kazemzadeh et al., 2023 [133] | Indiana, Shenzhen, Montgomery, and other data collected from different countries | Grad-CAMs [76] |
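Many of the studies in the table above localize findings by deriving a heatmap from a classifier, most often with CAM [75] or one of its variants. The following NumPy sketch illustrates the basic CAM computation for a network that ends in global average pooling followed by a linear layer, where the map for class c is the sum over feature channels k of w_k^c f_k(x, y). It is a simplified illustration under stated assumptions, not the implementation of any listed study: the function names are hypothetical, the 0.5 threshold is arbitrary, the heatmap is not upsampled to the input resolution, and the box encloses all above-threshold pixels rather than only the largest connected region.

```python
import numpy as np


def class_activation_map(features, fc_weights, class_idx):
    """Weighted sum of the final convolutional feature maps for one class.

    features:   array of shape (K, H, W), the last conv layer's activations.
    fc_weights: array of shape (C, K), weights of the linear layer that
                follows global average pooling.
    """
    # Contract the channel axis: sum_k w[c, k] * f[k, :, :] -> (H, W).
    return np.tensordot(fc_weights[class_idx], features, axes=([0], [0]))


def normalize_heatmap(cam):
    """Min-max scale a heatmap to [0, 1]; a constant map becomes all zeros."""
    shifted = cam - cam.min()
    peak = shifted.max()
    return shifted / peak if peak > 0 else shifted


def heatmap_to_box(heatmap, threshold=0.5):
    """Return (x_min, y_min, x_max, y_max) around pixels >= threshold, or None."""
    mask = heatmap >= threshold
    if not mask.any():
        return None
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    return int(cols[0]), int(rows[0]), int(cols[-1]), int(rows[-1])


if __name__ == "__main__":
    # Toy example: two 4x4 feature maps and a two-class linear layer.
    feats = np.zeros((2, 4, 4))
    feats[0, 1:3, 1:3] = 1.0
    feats[1, 0, 0] = 1.0
    weights = np.array([[1.0, 0.0], [0.0, 1.0]])
    heat = normalize_heatmap(class_activation_map(feats, weights, class_idx=0))
    print(heatmap_to_box(heat))
```

Because the only supervision is the image-level label used to train the classifier, the resulting box is a weak localization; its quality depends on how well the classifier's evidence coincides with the actual lesion.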

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Chakaya, J.; Khan, M.; Ntoumi, F.; Aklillu, E.; Fatima, R.; Mwaba, P.; Kapata, N.; Mfinanga, S.; Hasnain, S.E.; Katoto, P.D.; et al. Global Tuberculosis Report 2020–Reflections on the Global TB burden, treatment and prevention efforts. Int. J. Infect. Dis. 2021, 113, S7–S12.

- Chakaya, J.; Petersen, E.; Nantanda, R.; Mungai, B.N.; Migliori, G.B.; Amanullah, F.; Lungu, P.; Ntoumi, F.; Kumarasamy, N.; Maeurer, M.; et al. The WHO Global Tuberculosis 2021 Report–not so good news and turning the tide back to End TB. Int. J. Infect. Dis. 2022, 124, S26–S29.

- Vonasek, B.; Ness, T.; Takwoingi, Y.; Kay, A.W.; van Wyk, S.S.; Ouellette, L.; Marais, B.J.; Steingart, K.R.; Mandalakas, A.M. Screening tests for active pulmonary tuberculosis in children. Cochrane Database Syst. Rev. 2021.

- World Health Organization. WHO Operational Handbook on Tuberculosis: Module 2: Screening: Systematic Screening for Tuberculosis Disease; World Health Organization: Geneva, Switzerland, 2021.

- van’t Hoog, A.H.; Meme, H.K.; Laserson, K.F.; Agaya, J.A.; Muchiri, B.G.; Githui, W.A.; Odeny, L.O.; Marston, B.J.; Borgdorff, M.W. Screening strategies for tuberculosis prevalence surveys: The value of chest radiography and symptoms. PLoS ONE 2012, 7, e38691.

- Hoog, A.; Langendam, M.; Mitchell, E.; Cobelens, F.; Sinclair, D.; Leeflang, M.; Lonnroth, K. A Systematic Review of the Sensitivity and Specificity of Symptom and Chest Radiography Screening for Active Pulmonary Tuberculosis in HIV-Negative Persons and Persons with Unknown HIV Status; World Health Organization: Geneva, Switzerland, 2013.

- Steingart, K.R.; Sohn, H.; Schiller, I.; Kloda, L.A.; Boehme, C.C.; Pai, M.; Dendukuri, N. Xpert® MTB/RIF assay for pulmonary tuberculosis and rifampicin resistance in adults. Cochrane Database Syst. Rev. 2013.

- Nachiappan, A.C.; Rahbar, K.; Shi, X.; Guy, E.S.; Mortani Barbosa, E.J., Jr.; Shroff, G.S.; Ocazionez, D.; Schlesinger, A.E.; Katz, S.I.; Hammer, M.M. Pulmonary tuberculosis: Role of radiology in diagnosis and management. Radiographics 2017, 37, 52–72.

- Lyon, S.M.; Rossman, M.D. Pulmonary tuberculosis. Microbiol. Spectr. 2017, 5, 1–5.

- Gaillard, F.; Cugini, C. Tuberculosis (pulmonary manifestations). Available online: https://radiopaedia.org/articles/tuberculosis-pulmonary-manifestations-1?lang=us (accessed on 18 January 2023).

- Caws, M.; Marais, B.; Heemskerk, D.; Farrar, J. Tuberculosis in Adults and Children; Springer Nature: The Hague, The Netherlands, 2015.

- Al Ubaidi, B. The radiological diagnosis of pulmonary tuberculosis (TB) in primary care. RadioPaedia 2018, 4, 73.

- Bindhu, V. Biomedical image analysis using semantic segmentation. J. Innov. Image Process. JIIP 2019, 1, 91–101.

- Hady, M.F.A.; Schwenker, F. Semi-supervised Learning. In Handbook on Neural Information Processing; Bianchini, M., Maggini, M., Jain, L.C., Eds.; Intelligent Systems Reference Library; Springer: Berlin/Heidelberg, Germany, 2013; Volume 49, pp. 215–239.

- Schwenker, F.; Trentin, E. Pattern classification and clustering: A review of partially supervised learning approaches. Pattern Recognit. Lett. 2014, 37, 4–14.

- Schwenker, F.; Trentin, E. Partially supervised learning for pattern recognition. Pattern Recognit. Lett. 2014, 37, 1–3.

- Huang, H.; Lin, L.; Tong, R.; Hu, H.; Zhang, Q.; Iwamoto, Y.; Han, X.; Chen, Y.W.; Wu, J. UNet 3+: A full-scale connected UNet for medical image segmentation. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 1055–1059.

- Biratu, E.S.; Schwenker, F.; Ayano, Y.M.; Debelee, T.G. A survey of brain tumor segmentation and classification algorithms. J. Imaging 2021, 7, 179.

- Li, X.; Chen, H.; Qi, X.; Dou, Q.; Fu, C.W.; Heng, P.A. H-DenseUNet: Hybrid densely connected UNet for liver and tumor segmentation from CT volumes. IEEE Trans. Med. Imaging 2018, 37, 2663–2674.

- Asgari Taghanaki, S.; Abhishek, K.; Cohen, J.P.; Cohen-Adad, J.; Hamarneh, G. Deep semantic segmentation of natural and medical images: A review. Artif. Intell. Rev. 2021, 54, 137–178.

- Soomro, T.A.; Zheng, L.; Afifi, A.J.; Ali, A.; Yin, M.; Gao, J. Artificial intelligence (AI) for medical imaging to combat coronavirus disease (COVID-19): A detailed review with direction for future research. Artif. Intell. Rev. 2022, 55, 1409–1439.

- Debelee, T.G.; Schwenker, F.; Rahimeto, S.; Yohannes, D. Evaluation of modified adaptive k-means segmentation algorithm. Comput. Vis. Media 2019, 5, 2.

- Liu, L.; Wu, F.X.; Wang, Y.P.; Wang, J. Multi-receptive-field CNN for semantic segmentation of medical images. IEEE J. Biomed. Health Inform. 2020, 24, 3215–3225.

- Biratu, E.S.; Schwenker, F.; Debelee, T.G.; Kebede, S.R.; Negera, W.G.; Molla, H.T. Enhanced region growing for brain tumor MR image segmentation. J. Imaging 2021, 7, 22.

- Feyisa, D.W.; Debelee, T.G.; Ayano, Y.M.; Kebede, S.R.; Assore, T.F. Lightweight Multireceptive Field CNN for 12-Lead ECG Signal Classification. Comput. Intell. Neurosci. 2022, 2022, 8413294.

- Wang, R.; Lei, T.; Cui, R.; Zhang, B.; Meng, H.; Nandi, A.K. Medical image segmentation using deep learning: A survey. IET Image Process. 2022, 16, 1243–1267.

- Soomro, T.A.; Zheng, L.; Afifi, A.J.; Ali, A.; Soomro, S.; Yin, M.; Gao, J. Image segmentation for MR brain tumor detection using machine learning: A Review. IEEE Rev. Biomed. Eng. 2022, 16, 70–90.

- Çallı, E.; Sogancioglu, E.; van Ginneken, B.; van Leeuwen, K.G.; Murphy, K. Deep learning for chest X-ray analysis: A survey. Med. Image Anal. 2021, 72, 102125.

- Santosh, K.; Allu, S.; Rajaraman, S.; Antani, S. Advances in Deep Learning for Tuberculosis Screening using Chest X-rays: The Last 5 Years Review. J. Med. Syst. 2022, 46, 82.

- Singh, M.; Pujar, G.V.; Kumar, S.A.; Bhagyalalitha, M.; Akshatha, H.S.; Abuhaija, B.; Alsoud, A.R.; Abualigah, L.; Beeraka, N.M.; Gandomi, A.H. Evolution of machine learning in tuberculosis diagnosis: A review of deep learning-based medical applications. Electronics 2022, 11, 2634.

- Cenggoro, T.W.; Pardamean, B. Systematic Literature Review: An Intelligent Pulmonary TB Detection from Chest X-rays. In Proceedings of the 2021 1st International Conference on Computer Science and Artificial Intelligence (ICCSAI), Jakarta, Indonesia, 28 October 2021; Volume 1, pp. 136–141.

- Feng, Y.; Teh, H.S.; Cai, Y. Deep learning for chest radiology: A review. Curr. Radiol. Rep. 2019, 7, 24.

- Ouyang, X.; Xue, Z.; Zhan, Y.; Zhou, X.S.; Wang, Q.; Zhou, Y.; Wang, Q.; Cheng, J.Z. Weakly supervised segmentation framework with uncertainty: A study on pneumothorax segmentation in chest X-ray. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Shenzhen, China, 13–17 October 2019; pp. 613–621.

- Rethlefsen, M.L.; Kirtley, S.; Waffenschmidt, S.; Ayala, A.P.; Moher, D.; Page, M.J.; Koffel, J.B. PRISMA-S: An extension to the PRISMA statement for reporting literature searches in systematic reviews. Syst. Rev. 2021, 10, 39.

- Brennan, S.E.; Munn, Z. PRISMA 2020: A reporting guideline for the next generation of systematic reviews. JBI Evid. Synth. 2021, 19, 906–908.

- Rosenthal, A.; Gabrielian, A.; Engle, E.; Hurt, D.E.; Alexandru, S.; Crudu, V.; Sergueev, E.; Kirichenko, V.; Lapitskii, V.; Snezhko, E.; et al. The TB portals: An open-access, web-based platform for global drug-resistant-tuberculosis data sharing and analysis. J. Clin. Microbiol. 2017, 55, 3267–3282.

- Liu, Y.; Wu, Y.H.; Ban, Y.; Wang, H.; Cheng, M.M. Rethinking computer-aided tuberculosis diagnosis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2646–2655.

- Jaeger, S.; Candemir, S.; Antani, S.; Wáng, Y.X.J.; Lu, P.X.; Thoma, G. Two public chest X-ray datasets for computer-aided screening of pulmonary diseases. Quant. Imaging Med. Surg. 2014, 4, 475.

- Chauhan, A.; Chauhan, D.; Rout, C. Role of gist and PHOG features in computer-aided diagnosis of tuberculosis without segmentation. PLoS ONE 2014, 9, e112980.

- Irvin, J.; Rajpurkar, P.; Ko, M.; Yu, Y.; Ciurea-Ilcus, S.; Chute, C.; Marklund, H.; Haghgoo, B.; Ball, R.; Shpanskaya, K.; et al. CheXpert: A large chest radiograph dataset with uncertainty labels and expert comparison. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 590–597.

- Nguyen, H.Q.; Lam, K.; Le, L.T.; Pham, H.H.; Tran, D.Q.; Nguyen, D.B.; Le, D.D.; Pham, C.M.; Tong, H.T.; Dinh, D.H.; et al. VinDr-CXR: An open dataset of chest X-rays with radiologist’s annotations. Sci. Data 2022, 9, 429.

- Johnson, A.E.; Pollard, T.J.; Berkowitz, S.J.; Greenbaum, N.R.; Lungren, M.P.; Deng, C.y.; Mark, R.G.; Horng, S. MIMIC-CXR, a de-identified publicly available database of chest radiographs with free-text reports. Sci. Data 2019, 6, 317.

- Demner-Fushman, D.; Kohli, M.D.; Rosenman, M.B.; Shooshan, S.E.; Rodriguez, L.; Antani, S.; Thoma, G.R.; McDonald, C.J. Preparing a collection of radiology examinations for distribution and retrieval. J. Am. Med. Inform. Assoc. 2016, 23, 304–310.

- Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-ray8: Hospital-scale chest X-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2097–2106.

- Kaggle. Chest X-ray Images (Pneumonia), 2018. Available online: https://www.kaggle.com/datasets/paultimothymooney/chest-xray-pneumonia (accessed on 23 January 2023).

- Bustos, A.; Pertusa, A.; Salinas, J.M.; de la Iglesia-Vayá, M. PadChest: A large chest X-ray image dataset with multi-label annotated reports. Med. Image Anal. 2020, 66, 101797.

- Van Ginneken, B.; Stegmann, M.B.; Loog, M. Segmentation of anatomical structures in chest radiographs using supervised methods: A comparative study on a public database. Med. Image Anal. 2006, 10, 19–40.

- Kaggle. RSNA Pneumonia Detection Challenge, 2015. Available online: https://www.kaggle.com/c/rsna-pneumonia-detection-challenge (accessed on 27 April 2022).

- Rahman, T.; Khandakar, A.; Kadir, M.A.; Islam, K.R.; Islam, K.F.; Mazhar, R.; Hamid, T.; Islam, M.T.; Kashem, S.; Mahbub, Z.B.; et al. Reliable tuberculosis detection using chest X-ray with deep learning, segmentation and visualization. IEEE Access 2020, 8, 191586–191601.

- Faußer, S.; Schwenker, F. Semi-supervised clustering of large data sets with kernel methods. Pattern Recognit. Lett. 2014, 37, 78–84.

- Amini, M.R.; Feofanov, V.; Pauletto, L.; Devijver, E.; Maximov, Y. Self-Training: A Survey. arXiv 2022, arXiv:2202.12040.

- Schwenker, F.; Trentin, E. (Eds.) Partially Supervised Learning—First IAPR TC3 Workshop, PSL 2011, Ulm, Germany, September 15–16, 2011, Revised Selected Papers. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7081.

- Zhou, Z.; Schwenker, F. (Eds.) Partially Supervised Learning—Second IAPR International Workshop, PSL 2013, Nanjing, China, May 13–14, 2013, Revised Selected Papers. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2013; Volume 8183.

- Lee, D.H. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In Proceedings of the Workshop on Challenges in Representation Learning, ICML, Atlanta, GA, USA, 16–21 June 2013; Volume 3, p. 896.

- Cong, L.; Ding, S.; Wang, L.; Zhang, A.; Jia, W. Image segmentation algorithm based on superpixel clustering. IET Image Process. 2018, 12, 2030–2035.

- Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

- Li, S.Z. Markov Random Field Modeling in Image Analysis; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2009.

- Wallach, H.M. Conditional Random Fields: An Introduction; Technical Reports; CIS: Philadelphia, PA, USA, 2004; p. 22.

- Zhang, L.; Yang, Y.; Gao, Y.; Yu, Y.; Wang, C.; Li, X. A probabilistic associative model for segmenting weakly supervised images. IEEE Trans. Image Process. 2014, 23, 4150–4159.

- Zhang, L.; Song, M.; Liu, Z.; Liu, X.; Bu, J.; Chen, C. Probabilistic graphlet cut: Exploiting spatial structure cue for weakly supervised image segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1908–1915.

- Sarajlić, A.; Malod-Dognin, N.; Yaveroğlu, Ö.N.; Pržulj, N. Graphlet-based characterization of directed networks. Sci. Rep. 2016, 6, 35098.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

- Stricker, M.A.; Orengo, M. Similarity of color images. In Proceedings of the Storage and Retrieval for Image and Video Databases III, SPIE, San Diego/La Jolla, CA, USA, 5–10 February 1995; Volume 2420, pp. 381–392.

- Qi, B.; Zhao, G.; Wei, X.; Fang, C.; Pan, C.; Li, J.; He, H.; Jiao, L. GREN: Graph-Regularized Embedding Network for Weakly-Supervised Disease Localization in X-ray images. arXiv 2021, arXiv:2107.06442.

- Li, M.; Chen, D.; Liu, S. Weakly supervised segmentation loss based on graph cuts and superpixel algorithm. Neural Process. Lett. 2022, 54, 2339–2362.

- Han, Y.; Cheng, L.; Huang, G.; Zhong, G.; Li, J.; Yuan, X.; Liu, H.; Li, J.; Zhou, J.; Cai, M. Weakly supervised semantic segmentation of histological tissue via attention accumulation and pixel-level contrast learning. Phys. Med. Biol. 2022, 68, 045010.

- Carbonneau, M.A.; Cheplygina, V.; Granger, E.; Gagnon, G. Multiple instance learning: A survey of problem characteristics and applications. Pattern Recognit. 2018, 77, 329–353.

- Quellec, G.; Cazuguel, G.; Cochener, B.; Lamard, M. Multiple-instance learning for medical image and video analysis. IEEE Rev. Biomed. Eng. 2017, 10, 213–234.

- Li, Z.; Wang, C.; Han, M.; Xue, Y.; Wei, W.; Li, L.J.; Fei-Fei, L. Thoracic disease identification and localization with limited supervision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8290–8299.

- Vezhnevets, A.; Buhmann, J.M. Towards weakly supervised semantic segmentation by means of multiple instance and multitask learning. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 3249–3256.

- Shotton, J.; Johnson, M.; Cipolla, R. Semantic texton forests for image categorization and segmentation. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Ilse, M.; Tomczak, J.; Welling, M. Attention-based deep multiple instance learning. In Proceedings of the International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 2127–2136.

- Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 818–833.

- Simonyan, K.; Vedaldi, A.; Zisserman, A. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv 2013, arXiv:1312.6034.

- Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26–30 June 2016; pp. 2921–2929.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

- Chattopadhay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-CAM++: Generalized gradient-based visual explanations for deep convolutional networks. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 839–847.

- Wang, H.; Wang, Z.; Du, M.; Yang, F.; Zhang, Z.; Ding, S.; Mardziel, P.; Hu, X. Score-CAM: Score-weighted visual explanations for convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 24–25.

- Kim, I.; Rajaraman, S.; Antani, S. Visual interpretation of convolutional neural network predictions in classifying medical image modalities. Diagnostics 2019, 9, 38.

- Ding, F.; Yang, G.; Liu, J.; Wu, J.; Ding, D.; Xv, J.; Cheng, G.; Li, X. Hierarchical attention networks for medical image segmentation. arXiv 2019, arXiv:1911.08777.

- Ayano, Y.M.; Schwenker, F.; Dufera, B.D.; Debelee, T.G. Interpretable Machine Learning Techniques in ECG-Based Heart Disease Classification: A Systematic Review. Diagnostics 2022, 13, 111.

- Dasanayaka, S.; Shantha, V.; Silva, S.; Meedeniya, D.; Ambegoda, T. Interpretable machine learning for brain tumour analysis using MRI and whole slide images. Softw. Impacts 2022, 13, 100340.

- Bonyani, M.; Yeganli, F.; Yeganli, S.F. Fast and Interpretable Deep Learning Pipeline for Breast Cancer Recognition. In Proceedings of the 2022 Medical Technologies Congress (TIPTEKNO), Antalya, Turkey, 31 October–2 November 2022.

- Islam, M.T.; Aowal, M.A.; Minhaz, A.T.; Ashraf, K. Abnormality detection and localization in chest X-rays using deep convolutional neural networks. arXiv 2017, arXiv:1705.09850.

- Pasa, F.; Golkov, V.; Pfeiffer, F.; Cremers, D.; Pfeiffer, D. Efficient deep network architectures for fast chest X-ray tuberculosis screening and visualization. Sci. Rep. 2019, 9, 6268.

- Lin, M.; Chen, Q.; Yan, S. Network in network. arXiv 2013, arXiv:1312.4400.

- Guo, R.; Passi, K.; Jain, C.K. Tuberculosis diagnostics and localization in chest X-rays via deep learning models. Front. Artif. Intell. 2020, 3, 583427.

- Nafisah, S.I.; Muhammad, G. Tuberculosis detection in chest radiograph using convolutional neural network architecture and explainable artificial intelligence. Neural Comput. Appl. 2022, 1–21.

- Viniavskyi, O.; Dobko, M.; Dobosevych, O. Weakly-supervised segmentation for disease localization in chest X-ray images. In Proceedings of the International Conference on Artificial Intelligence in Medicine, Minneapolis, MN, USA, 25–28 August 2020; pp. 249–259.

- Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

- Rajaraman, S.; Folio, L.R.; Dimperio, J.; Alderson, P.O.; Antani, S.K. Improved semantic segmentation of tuberculosis—Consistent findings in chest X-rays using augmented training of modality-specific U-Net models with weak localizations. Diagnostics 2021, 11, 616.

- Rajaraman, S.; Zamzmi, G.; Folio, L.R.; Antani, S. Detecting Tuberculosis-Consistent Findings in Lateral Chest X-rays Using an Ensemble of CNNs and Vision Transformers. Front. Genet. 2022, 13, 864724. [Google Scholar] [CrossRef]

- Wong, A.; Shafiee, M.J.; Chwyl, B.; Li, F. Ferminets: Learning generative machines to generate efficient neural networks via generative synthesis. arXiv 2018, arXiv:1809.05989. [Google Scholar]

- Lin, Z.Q.; Shafiee, M.J.; Bochkarev, S.; Jules, M.S.; Wang, X.Y.; Wong, A. Do explanations reflect decisions? A machine-centric strategy to quantify the performance of explainability algorithms. arXiv 2019, arXiv:1910.07387. [Google Scholar]

- Wong, A.; Lee, J.R.H.; Rahmat-Khah, H.; Sabri, A.; Alaref, A.; Liu, H. TB-Net: A tailored, self-attention deep convolutional neural network design for detection of tuberculosis cases from chest X-ray images. Front. Artif. Intell. 2022, 5, 827299. [Google Scholar] [CrossRef]

- Cai, Z.; Fan, Q.; Feris, R.S.; Vasconcelos, N. A unified multi-scale deep convolutional neural network for fast object detection. In Proceedings of the Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part IV 14, 2016. pp. 354–370. [Google Scholar]

- Cui, Z.; Chen, W.; Chen, Y. Multi-scale convolutional neural networks for time series classification. arXiv 2016, arXiv:1603.06995. [Google Scholar]

- Chen, Y.; Zhao, D.; Lv, L.; Li, C. A visual attention based convolutional neural network for image classification. In Proceedings of the 2016 12th World Congress on Intelligent Control and Automation (WCICA), Guilin, China, 12–15 June 2016; pp. 764–769. [Google Scholar]

- Yang, X. An overview of the attention mechanisms in computer vision. J. Phys. Conf. Ser. 2020, 1693, 012173. [Google Scholar] [CrossRef]

- Mei, X.; Pan, E.; Ma, Y.; Dai, X.; Huang, J.; Fan, F.; Du, Q.; Zheng, H.; Ma, J. Spectral-spatial attention networks for hyperspectral image classification. Remote Sens. 2019, 11, 963. [Google Scholar] [CrossRef]

- Zhu, B.; Hofstee, P.; Lee, J.; Al-Ars, Z. An attention module for convolutional neural networks. In Proceedings of the Artificial Neural Networks and Machine Learning—ICANN 2021: 30th International Conference on Artificial Neural Networks, Bratislava, Slovakia, 14–17 September 2021; Proceedings, Part I 30, 2021. pp. 167–178. [Google Scholar]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19. [Google Scholar]

- Sedai, S.; Mahapatra, D.; Ge, Z.; Chakravorty, R.; Garnavi, R. Deep multiscale convolutional feature learning for weakly supervised localization of chest pathologies in X-ray images. In Proceedings of the International Workshop on Machine Learning in Medical Imaging, Granada, Spain, 16 September 2018; pp. 267–275. [Google Scholar]

- Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Summers, R.M. Tienet: Text-image embedding network for common thorax disease classification and reporting in chest X-rays. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9049–9058. [Google Scholar]

- Liu, J.; Zhao, G.; Fei, Y.; Zhang, M.; Wang, Y.; Yu, Y. Align, attend and locate: Chest X-ray diagnosis via contrast induced attention network with limited supervision. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October 27–2 November 2019; pp. 10632–10641. [Google Scholar]

- Singh, A.; Lall, B.; Panigrahi, B.K.; Agrawal, A.; Agrawal, A.; Thangakunam, B.; Christopher, D. Deep Learning for Automated Screening of Tuberculosis from Indian Chest X-rays: Analysis and Update. arXiv 2020, arXiv:2011.09778. [Google Scholar]

- Ouyang, X.; Karanam, S.; Wu, Z.; Chen, T.; Huo, J.; Zhou, X.S.; Wang, Q.; Cheng, J.Z. Learning hierarchical attention for weakly-supervised chest X-ray abnormality localization and diagnosis. IEEE Trans. Med. Imaging 2020, 40, 2698–2710. [Google Scholar] [CrossRef]

- Pan, C.; Zhao, G.; Fang, J.; Qi, B.; Liu, J.; Fang, C.; Zhang, D.; Li, J.; Yu, Y. Computer-Aided Tuberculosis Diagnosis with Attribute Reasoning Assistance. In Proceedings of the Medical Image Computing and Computer Assisted Intervention—MICCAI 2022: 25th International Conference, Singapore, 18–22 September 2022; Proceedings, Part I, 2022. pp. 623–633. [Google Scholar]

- Kolesnikov, A.; Lampert, C.H. Seed, expand and constrain: Three principles for weakly-supervised image segmentation. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 695–711. [Google Scholar]

- Tang, Y.; Wang, X.; Harrison, A.P.; Lu, L.; Xiao, J.; Summers, R.M. Attention-guided curriculum learning for weakly supervised classification and localization of thoracic diseases on chest radiographs. In Proceedings of the Machine Learning in Medical Imaging: 9th International Workshop, MLMI 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, 16 September 2018; Proceedings 9, 2018. pp. 249–258. [Google Scholar]

- Bengio, Y.; Louradour, J.; Collobert, R.; Weston, J. Curriculum learning. In Proceedings of the 26th Annual International Conference on Machine Learning, Montreal, QC, Canada, 14–18 June 2009; pp. 41–48. [Google Scholar]

- D’Souza, G.; Reddy, N.; Manjunath, K. Localization of lung abnormalities on chest X-rays using self-supervised equivariant attention. Biomed. Eng. Lett. 2022, 13, 21–30. [Google Scholar] [CrossRef]

- Pinheiro, P.O.; Collobert, R. From image-level to pixel-level labeling with convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1713–1721. [Google Scholar]

- Babenko, B. Multiple instance learning: Algorithms and applications. View Artic. PubMed/NCBI Google Sch. 2008, 19, 1–19. [Google Scholar]

- Liu, J.; Liu, Y.; Wang, C.; Li, A.; Meng, B.; Chai, X.; Zuo, P. An original neural network for pulmonary tuberculosis diagnosis in radiographs. In Proceedings of the Artificial Neural Networks and Machine Learning—ICANN 2018: 27th International Conference on Artificial Neural Networks, Rhodes, Greece, 4–7 October 2018; Proceedings, Part II 27, 2018. pp. 158–166. [Google Scholar]

- Zhou, B.; Li, Y.; Wang, J. A weakly supervised adaptive densenet for classifying thoracic diseases and identifying abnormalities. arXiv 2018, arXiv:1807.01257. [Google Scholar]

- Chandra, T.B.; Verma, K.; Jain, D.; Netam, S.S. Localization of the suspected abnormal region in chest radiograph images. In Proceedings of the 2020 First International Conference on Power, Control and Computing Technologies (ICPC2T), Raipur, India, 3–5 January 2020; pp. 204–209. [Google Scholar]

- Ahn, J.; Cho, S.; Kwak, S. Weakly supervised learning of instance segmentation with inter-pixel relations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Beach, CA, USA, 15–20 June 2019; pp. 2209–2218. [Google Scholar]

- Mamalakis, M.; Swift, A.J.; Vorselaars, B.; Ray, S.; Weeks, S.; Ding, W.; Clayton, R.H.; Mackenzie, L.S.; Banerjee, A. DenResCov-19: A deep transfer learning network for robust automatic classification of COVID-19, pneumonia, and tuberculosis from X-rays. Comput. Med. Imaging Graph. 2021, 94, 102008. [Google Scholar] [CrossRef] [PubMed]

- Wong, A.; Famouri, M.; Shafiee, M.J. Attendnets: Tiny deep image recognition neural networks for the edge via visual attention condensers. arXiv 2020, arXiv:2009.14385. [Google Scholar]

- Bhandari, M.; Shahi, T.B.; Siku, B.; Neupane, A. Explanatory classification of CXR images into COVID-19, Pneumonia and Tuberculosis using deep learning and XAI. Comput. Biol. Med. 2022, 150, 106156. [Google Scholar] [CrossRef] [PubMed]

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should i trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144. [Google Scholar]

- Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 2017, 30. [Google Scholar] [CrossRef]

- Mehrotra, R.; Agrawal, R.; Ansari, M. Diagnosis of hypercritical chronic pulmonary disorders using dense convolutional network through chest radiography. Multimed. Tools Appl. 2022, 81, 7625–7649. [Google Scholar] [CrossRef]

- Zhou, W.; Cheng, G.; Zhang, Z.; Zhu, L.; Jaeger, S.; Lure, F.Y.; Guo, L. Deep learning-based pulmonary tuberculosis automated detection on chest radiography: Large-scale independent testing. Quant. Imaging Med. Surg. 2022, 12, 2344. [Google Scholar] [CrossRef]

- Zhou, D.; Kang, B.; Jin, X.; Yang, L.; Lian, X.; Jiang, Z.; Hou, Q.; Feng, J. Deepvit: Towards deeper vision transformer. arXiv 2021, arXiv:2103.11886. [Google Scholar]

- Visuña, L.; Yang, D.; Garcia-Blas, J.; Carretero, J. Computer-aided diagnostic for classifying chest X-ray images using deep ensemble learning. BMC Med. Imaging 2022, 22, 178. [Google Scholar] [CrossRef]

- Prasitpuriprecha, C.; Jantama, S.S.; Preeprem, T.; Pitakaso, R.; Srichok, T.; Khonjun, S.; Weerayuth, N.; Gonwirat, S.; Enkvetchakul, P.; Kaewta, C.; et al. Drug-Resistant Tuberculosis Treatment Recommendation, and Multi-Class Tuberculosis Detection and Classification Using Ensemble Deep Learning-Based System. Pharmaceuticals 2022, 16, 13. [Google Scholar] [CrossRef]

- Wang, Y.; Zhang, J.; Kan, M.; Shan, S.; Chen, X. Self-supervised equivariant attention mechanism for weakly supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12275–12284. [Google Scholar]

- Malik, H.; Anees, T.; Din, M.; Naeem, A. CDC_Net: Multi-classification convolutional neural network model for detection of COVID-19, pneumothorax, pneumonia, lung Cancer, and tuberculosis using chest X-rays. Multimed. Tools Appl. 2022, 82, 13855–13880. [Google Scholar] [CrossRef] [PubMed]

- Tsai, A.C.; Zhou, Z.G.; Ou, Y.Y.; Wang, I.J.F. Tuberculosis Detection Based on Multiple Model Ensemble in Chest X-ray Image. In Proceedings of the 2022 10th International Conference on Orange Technology (ICOT), Virtual, 10–11 November 2022; pp. 1–4. [Google Scholar]

- Li, L.; Cao, P.; Yang, J.; Zaiane, O.R. Modeling global and local label correlation with graph convolutional networks for multi-label chest X-ray image classification. Med. Biol. Eng. Comput. 2022, 60, 2567–2588. [Google Scholar] [CrossRef] [PubMed]

- Kazemzadeh, S.; Yu, J.; Jamshy, S.; Pilgrim, R.; Nabulsi, Z.; Chen, C.; Beladia, N.; Lau, C.; McKinney, S.M.; Hughes, T.; et al. Deep learning detection of active pulmonary tuberculosis at chest radiography matched the clinical performance of radiologists. Radiology 2023, 306, 124–137. [Google Scholar] [CrossRef] [PubMed]

| ID | Inclusion | Exclusion | Description |
|---|---|---|---|
| C1 | X | - | Studies published between 2017 and 2023 |
| C2 | X | - | Studies that are original articles or conference papers |
| C3 | - | X | Studies written in languages other than English |
| C4 | X | - | Studies that involve the localization and segmentation of pulmonary TB in a chest X-ray |
| C5 | - | X | Duplicate publications |
| C6 | X | - | Studies that use machine learning or DL methods |
| C7 | - | X | Studies that do not present clear and plausible results |
| C8 | X | - | Articles that use evaluation metrics to quantify the localization result |

| Approach | Description |
|---|---|
| Occlusion sensitivity | A technique for estimating how much each region of an image influences the prediction of a deep neural network. It systematically occludes different parts of the image and measures the resulting change in the prediction score. Regions whose occlusion causes the largest change are the most important for the prediction. |
| Saliency maps | Heatmaps that highlight the regions of an image that are most important for the prediction made by a deep neural network. They are generated by computing the gradient of the prediction score with respect to the input image. Pixels with larger gradient magnitudes have a larger impact on the prediction and are highlighted in the saliency map. |
| Class activation map (CAM) | A technique that generates a heatmap of the discriminative regions of an image that contributed to a specific class prediction. It requires a convolutional neural network (CNN) whose last convolutional layer is followed by global average pooling and a fully connected layer. The heatmap is the sum of the feature maps of the last convolutional layer, each weighted by the fully connected layer weight that connects it to the predicted class. |
| Grad-CAM | A generalization of the CAM that removes the requirement for a global average pooling layer. It takes the gradient of the predicted class score with respect to the feature maps of the last convolutional layer, averages the gradient over each feature map to obtain one weight per map, and applies a ReLU to the weighted sum of the feature maps. This allows the Grad-CAM to be applied to a wider range of CNN architectures than the CAM without retraining. |
| Grad-CAM++ | An improved version of the Grad-CAM that refines the localization accuracy of the heatmap by incorporating higher-order gradients. Instead of averaging the gradients uniformly, it weights the positive gradients at each spatial location using second- and third-order derivatives of the class score with respect to the feature maps, which helps when an image contains multiple occurrences of the same class. |
| Score-CAM | A gradient-free technique. Each feature map of the last convolutional layer is upsampled, normalized, and used as a mask on the input image; the increase in the predicted class score obtained with the masked input, relative to a baseline, becomes the weight of that feature map. The heatmap is the weighted sum of the feature maps, so it does not depend on gradients. |
| Class-selective relevance maps (CRMs) | A technique that scores each spatial element of the feature maps of the last convolutional layer by the increase in mean squared error at the output layer when that element is removed. Because the error is computed over all output nodes, the map accounts for both the positive contribution of an element to the predicted class and its negative contribution to the other classes. |
| Attention networks | A class of neural networks that dynamically focus on different regions of an image based on their importance for the task at hand. They use mechanisms such as self-attention or soft attention to assign weights to different regions of an image, which are then used to compute the final prediction. Attention mechanisms were popularized in sequential tasks such as machine translation and image captioning; in image classification, the learned attention weights can also serve as a localization map. |
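
To make two of the rows above concrete, the following is a minimal sketch of a class activation map and of occlusion sensitivity, written in plain Python on toy data. It is not taken from any of the reviewed studies: the function names, the 2 × 2 feature maps, the class weights, and the `toy_score` stand-in for a trained classifier are all illustrative assumptions.

```python
from typing import Callable, List

Grid = List[List[float]]


def class_activation_map(feature_maps: List[Grid], class_weights: List[float]) -> Grid:
    """CAM: sum of the last-layer feature maps, each scaled by its class weight."""
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, weight in zip(feature_maps, class_weights):
        for i in range(height):
            for j in range(width):
                cam[i][j] += weight * fmap[i][j]
    return cam


def occlusion_sensitivity(image: Grid, score_fn: Callable[[Grid], float],
                          patch: int = 2, baseline: float = 0.0) -> Grid:
    """Score drop observed when each patch x patch block is set to `baseline`."""
    height, width = len(image), len(image[0])
    reference = score_fn(image)
    heatmap = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            occluded = [row[:] for row in image]  # copy so the input is untouched
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            for i in rows:
                for j in cols:
                    occluded[i][j] = baseline
            drop = reference - score_fn(occluded)
            for i in rows:
                for j in cols:
                    heatmap[i][j] = drop
    return heatmap


# Hypothetical toy inputs: two 2x2 feature maps and their weights for one class.
feature_maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
class_weights = [0.5, 1.5]
cam = class_activation_map(feature_maps, class_weights)


def toy_score(img: Grid) -> float:
    # Stand-in for a classifier: it only responds to the upper-left 2x2 block.
    return sum(img[i][j] for i in range(2) for j in range(2)) / 4.0


image = [[1.0] * 4 for _ in range(4)]
heat = occlusion_sensitivity(image, toy_score, patch=2)
```

In this toy setup the CAM is largest where the heavily weighted second feature map is active, and the occlusion heatmap is non-zero only over the block that `toy_score` actually reads. In practice the CAM would be upsampled to the input resolution, and `score_fn` would wrap a forward pass of the trained network.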

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Feyisa, D.W.; Ayano, Y.M.; Debelee, T.G.; Schwenker, F. Weak Localization of Radiographic Manifestations in Pulmonary Tuberculosis from Chest X-ray: A Systematic Review. Sensors 2023, 23, 6781. https://doi.org/10.3390/s23156781