Transformers for Energy Forecast

Abstract

1. Introduction

2. Literature Review

3. Methodology

3.1. Baseline Models

- $i_t$ represents the input gate activation at time step $t$,

- $f_t$ represents the forget gate activation at time step $t$,

- $\tilde{C}_t$ represents the candidate cell state at time step $t$,

- $C_t$ represents the cell state at time step $t$,

- $o_t$ represents the output gate activation at time step $t$,

- $h_t$ represents the hidden state (output) at time step $t$,

- $x_t$ represents the input at time step $t$,

- $h_{t-1}$ represents the hidden state at the previous time step ($t-1$),

- $C_{t-1}$ represents the cell state at the previous time step ($t-1$),

- $\sigma$ represents the sigmoid activation function,

- ⊙ represents element-wise multiplication (Hadamard product).
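The input, forget and output gates, the candidate cell state, the cell state and the hidden state listed above combine in the standard LSTM cell of Hochreiter and Schmidhuber. The following is a minimal NumPy sketch of one time step. It assumes the common formulation in which each gate applies a weight matrix and bias to the concatenation $[h_{t-1}, x_t]$. Because the LSTM list does not define its weights and biases, the `W`/`b` dictionary layout and the toy sizes are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def sigmoid(x):
    """Logistic sigmoid, the sigma in the definitions above."""
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """Advance an LSTM cell by one time step.

    Assumed (illustrative) layout: each W[k] has shape (hidden, hidden + n_inputs)
    and acts on [h_{t-1}, x_t]; each b[k] has shape (hidden,). Keys: "i", "f", "c", "o".
    """
    z = np.concatenate([h_prev, x_t])        # [h_{t-1}, x_t]
    i_t = sigmoid(W["i"] @ z + b["i"])       # input gate
    f_t = sigmoid(W["f"] @ z + b["f"])       # forget gate
    c_tilde = np.tanh(W["c"] @ z + b["c"])   # candidate cell state
    c_t = f_t * c_prev + i_t * c_tilde       # cell state; * is the Hadamard product
    o_t = sigmoid(W["o"] @ z + b["o"])       # output gate
    h_t = o_t * np.tanh(c_t)                 # hidden state (output)
    return h_t, c_t


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    hidden, n_inputs = 4, 1                  # toy sizes, e.g. one energy reading per step
    W = {k: 0.1 * rng.standard_normal((hidden, hidden + n_inputs)) for k in "ifco"}
    b = {k: np.zeros(hidden) for k in "ifco"}
    h, c = np.zeros(hidden), np.zeros(hidden)
    for x in rng.standard_normal((32, n_inputs)):   # a 32-step input window
        h, c = lstm_step(x, h, c, W, b)
    print(h.shape)
```

With all weights and biases set to zero, every gate outputs 0.5 and the candidate is 0, so the cell simply halves $C_{t-1}$. This makes a quick sanity check of the wiring.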

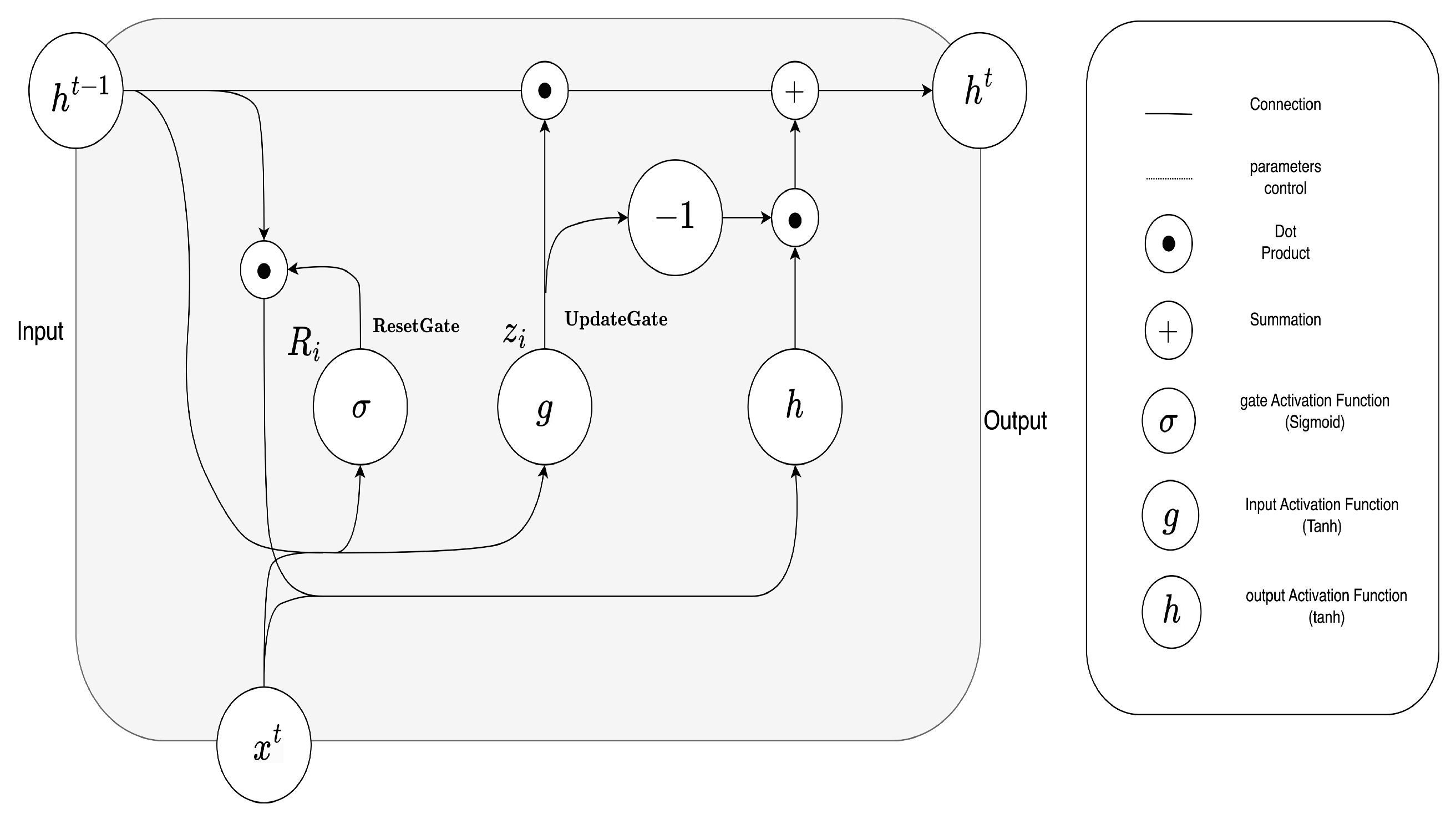

- $z_t$ represents the update gate activation at time step $t$,

- $r_t$ represents the reset gate activation at time step $t$,

- $\tilde{h}_t$ represents the candidate hidden state at time step $t$,

- $h_t$ represents the hidden state (output) at time step $t$,

- $x_t$ represents the input at time step $t$,

- $h_{t-1}$ represents the hidden state at the previous time step ($t-1$),

- $W$ represents weight matrices,

- $b$ represents bias vectors,

- $\sigma$ represents the sigmoid activation function,

- ⊙ represents element-wise multiplication (Hadamard product).
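The update gate, reset gate, candidate hidden state, weights and biases listed above make up the GRU cell of Cho et al. The following is a minimal NumPy sketch of one time step. It assumes the convention of the original GRU paper, $h_t = z_t \odot h_{t-1} + (1 - z_t) \odot \tilde{h}_t$. Some references swap the roles of $z_t$ and $1 - z_t$, and the convention used in this article is not stated here. The `W`/`b` dictionary layout is an illustrative assumption.

```python
import numpy as np


def sigmoid(x):
    """Logistic sigmoid (sigma)."""
    return 1.0 / (1.0 + np.exp(-x))


def gru_step(x_t, h_prev, W, b):
    """Advance a GRU cell by one time step (Cho et al., 2014 convention).

    Assumed (illustrative) layout: W["z"], W["r"], W["h"] each have shape
    (hidden, hidden + n_inputs); b["z"], b["r"], b["h"] have shape (hidden,).
    """
    hx = np.concatenate([h_prev, x_t])                   # [h_{t-1}, x_t]
    z_t = sigmoid(W["z"] @ hx + b["z"])                  # update gate
    r_t = sigmoid(W["r"] @ hx + b["r"])                  # reset gate
    reset_hx = np.concatenate([r_t * h_prev, x_t])       # [r_t ⊙ h_{t-1}, x_t]
    h_tilde = np.tanh(W["h"] @ reset_hx + b["h"])        # candidate hidden state
    h_t = z_t * h_prev + (1.0 - z_t) * h_tilde           # blend previous and candidate states
    return h_t


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    hidden, n_inputs = 4, 1
    W = {k: 0.1 * rng.standard_normal((hidden, hidden + n_inputs)) for k in "zrh"}
    b = {k: np.zeros(hidden) for k in "zrh"}
    h = np.zeros(hidden)
    for x in rng.standard_normal((32, n_inputs)):        # a 32-step input window
        h = gru_step(x, h, W, b)
    print(h.shape)
```

Unlike the LSTM, the GRU keeps no separate cell state and uses three weight blocks (update, reset, candidate) instead of four. This is consistent with the GRU rows in the results tables having fewer parameters than LSTM rows of the same width.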

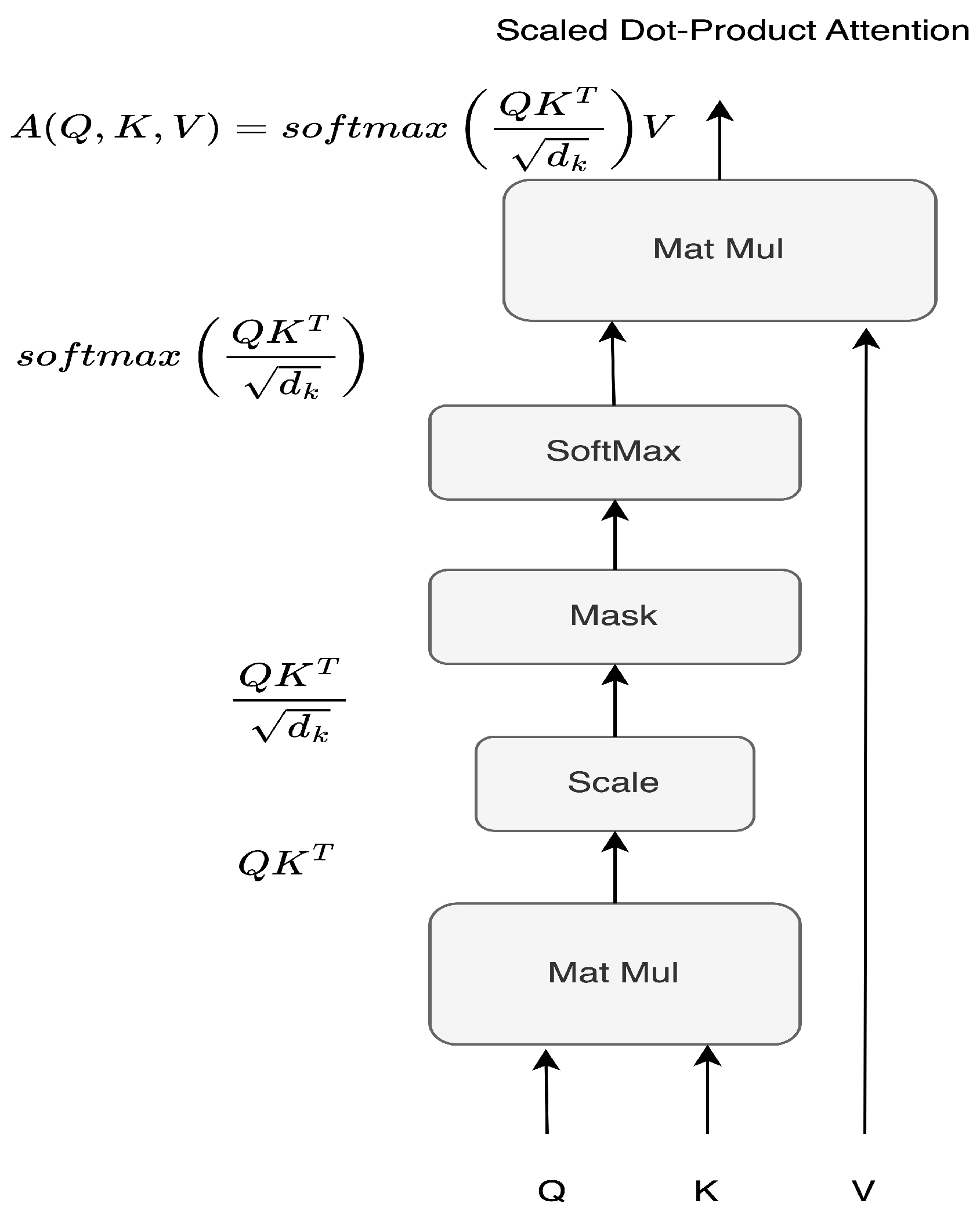

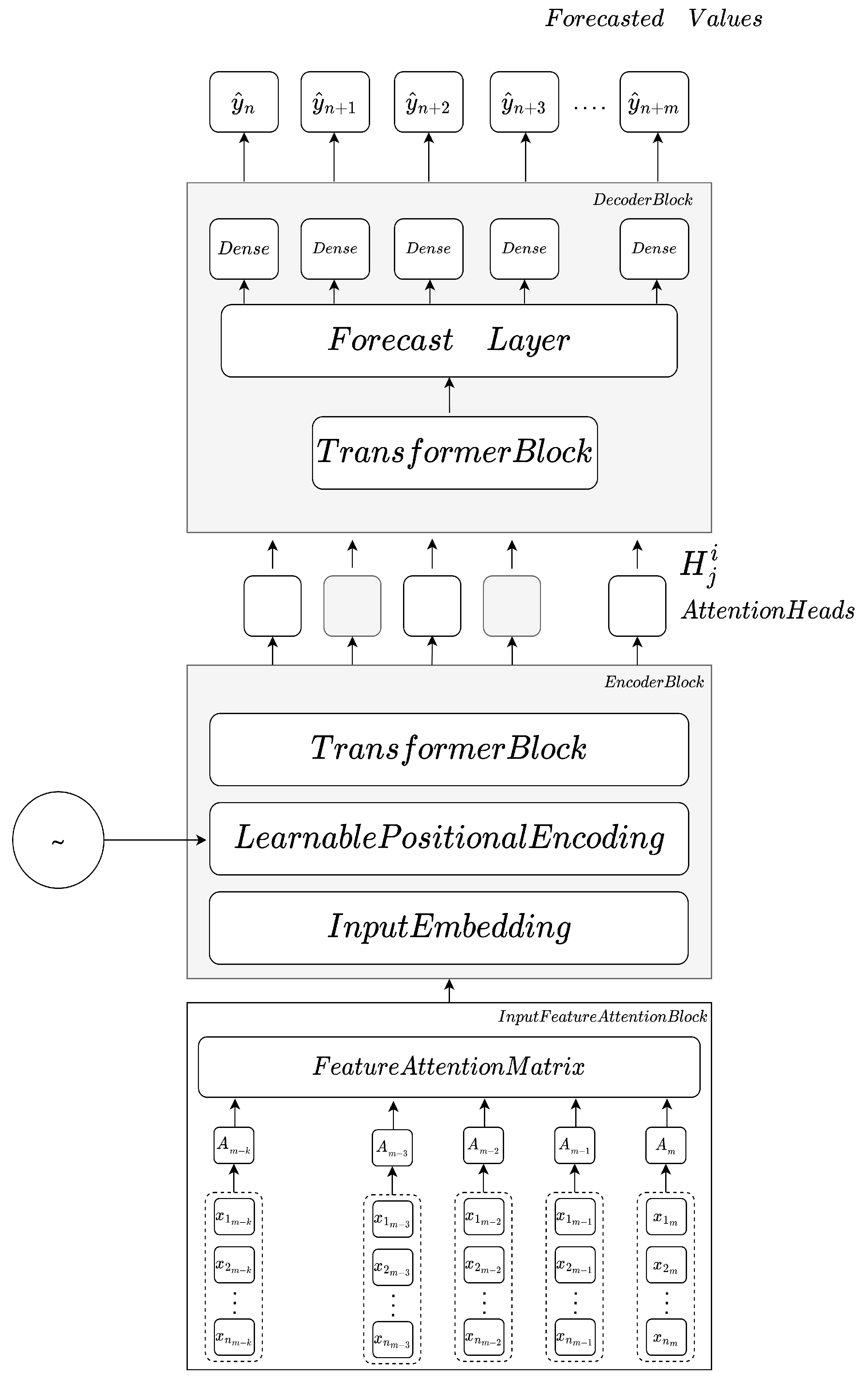

3.2. Proposed Transformer Multistep

4. Setup and Forecasting

4.1. Models

4.2. Evaluation Metrics

4.3. Dataset

- Historical data collected in real time from the INESC TEC building.

- Spanning two years.

- Totaling 8760 × 2 = 17,520 sample points (8760 per year, i.e., hourly resolution).
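A two-year hourly series is usually framed for multistep forecasting by sliding a fixed input window over it. The "Window" column in the results tables (mostly 32) suggests this kind of framing. However, this section does not state the forecast horizon or the exact procedure. The sketch below therefore assumes a 24-step horizon and uses a hypothetical helper, `make_windows`. The `np.arange` series is a placeholder, not the INESC TEC data.

```python
import numpy as np

HOURS_PER_YEAR = 8760
N_SAMPLES = 2 * HOURS_PER_YEAR  # 17,520 points for the two-year span


def make_windows(series, window, horizon):
    """Hypothetical helper: split a 1-D series into (input window, multistep target) pairs."""
    series = np.asarray(series, dtype=float)
    n = len(series) - window - horizon + 1       # number of complete input/target pairs
    if n <= 0:
        raise ValueError("series is too short for this window/horizon")
    X = np.stack([series[i : i + window] for i in range(n)])
    y = np.stack([series[i + window : i + window + horizon] for i in range(n)])
    return X, y


if __name__ == "__main__":
    placeholder = np.arange(N_SAMPLES, dtype=float)   # stand-in for the real load series
    X, y = make_windows(placeholder, window=32, horizon=24)
    print(X.shape, y.shape)
```

With a 32-step window and an assumed 24-step horizon, 17,520 points yield 17,520 − 32 − 24 + 1 = 17,465 training pairs before any train/test split.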

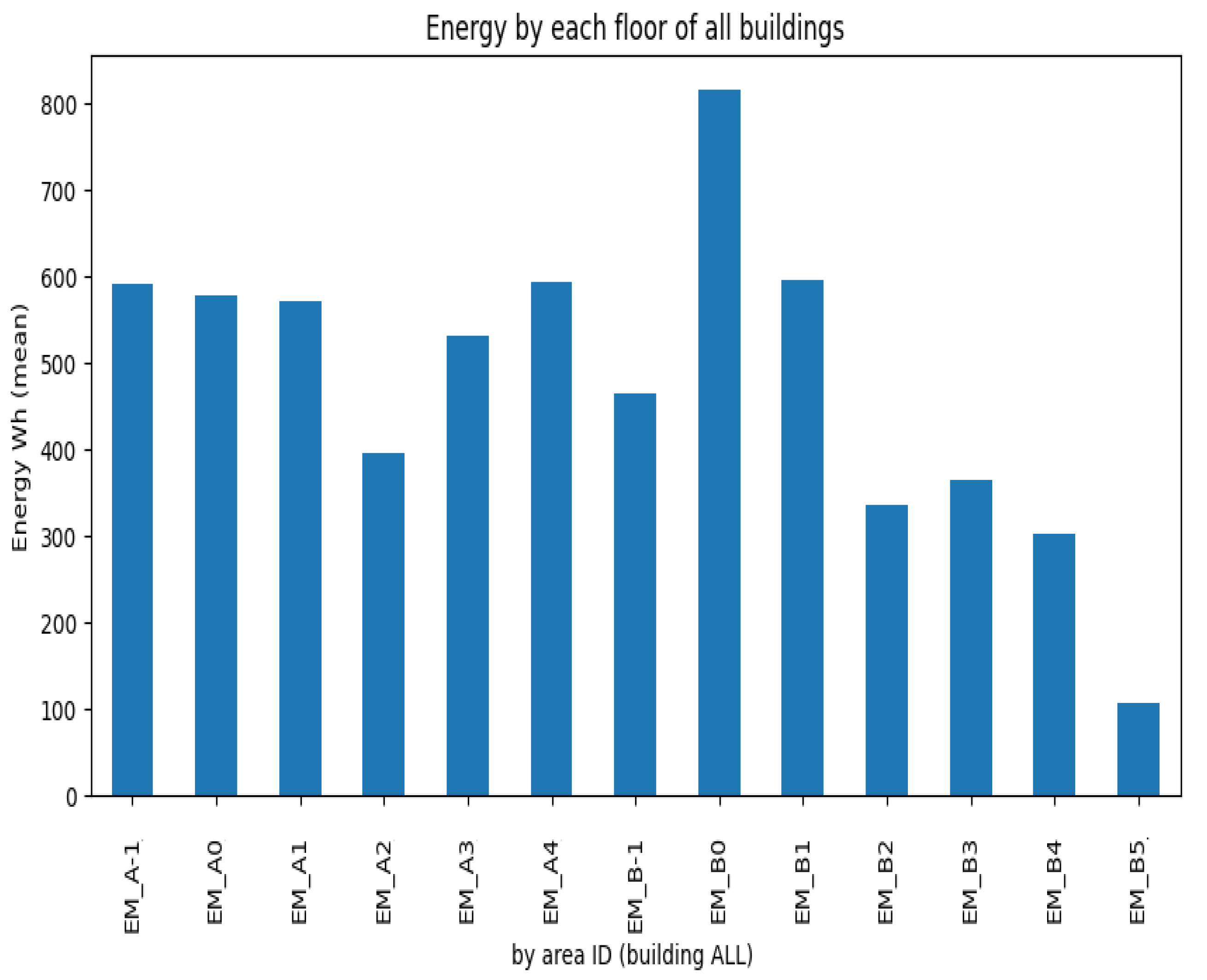

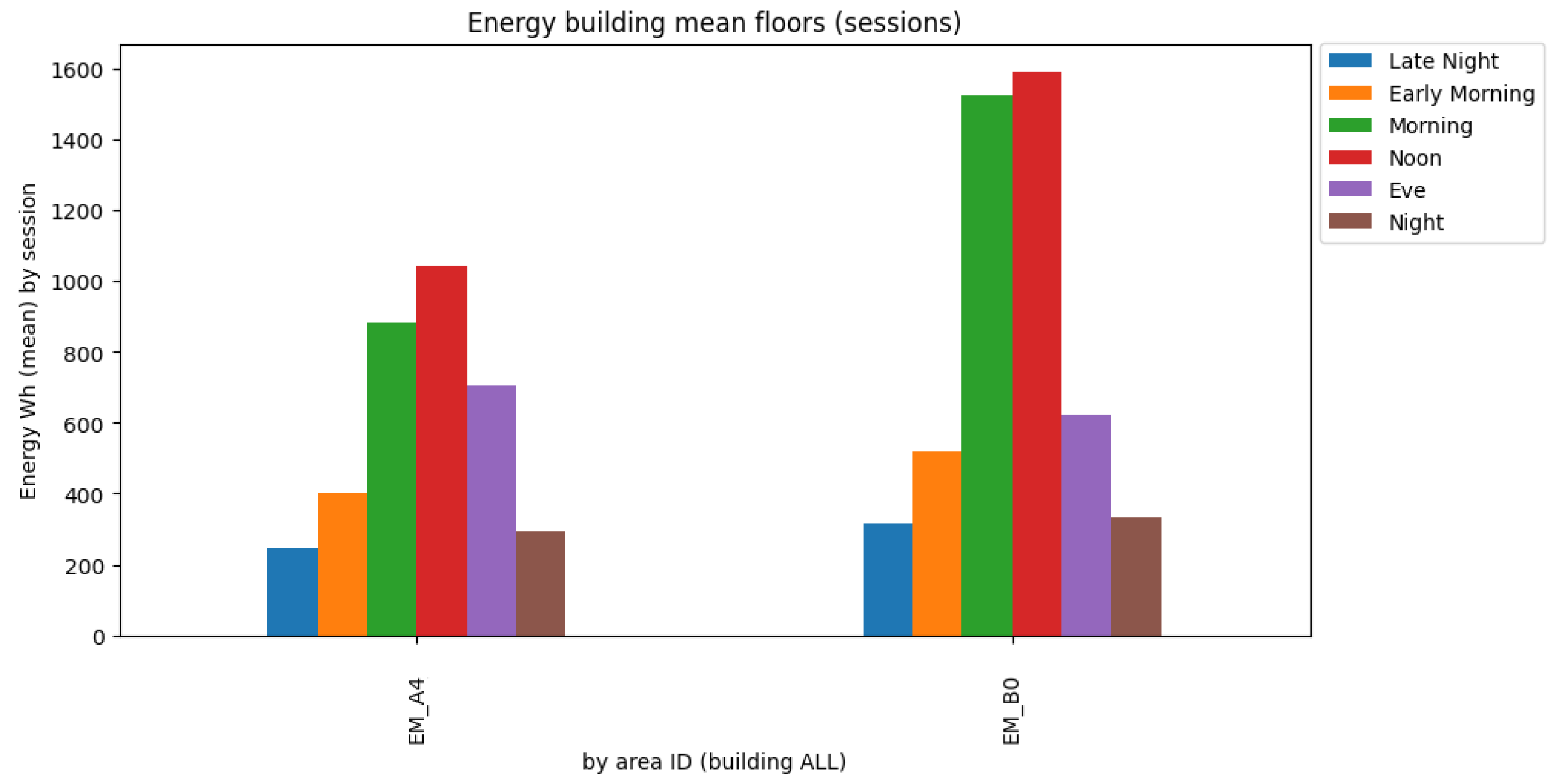

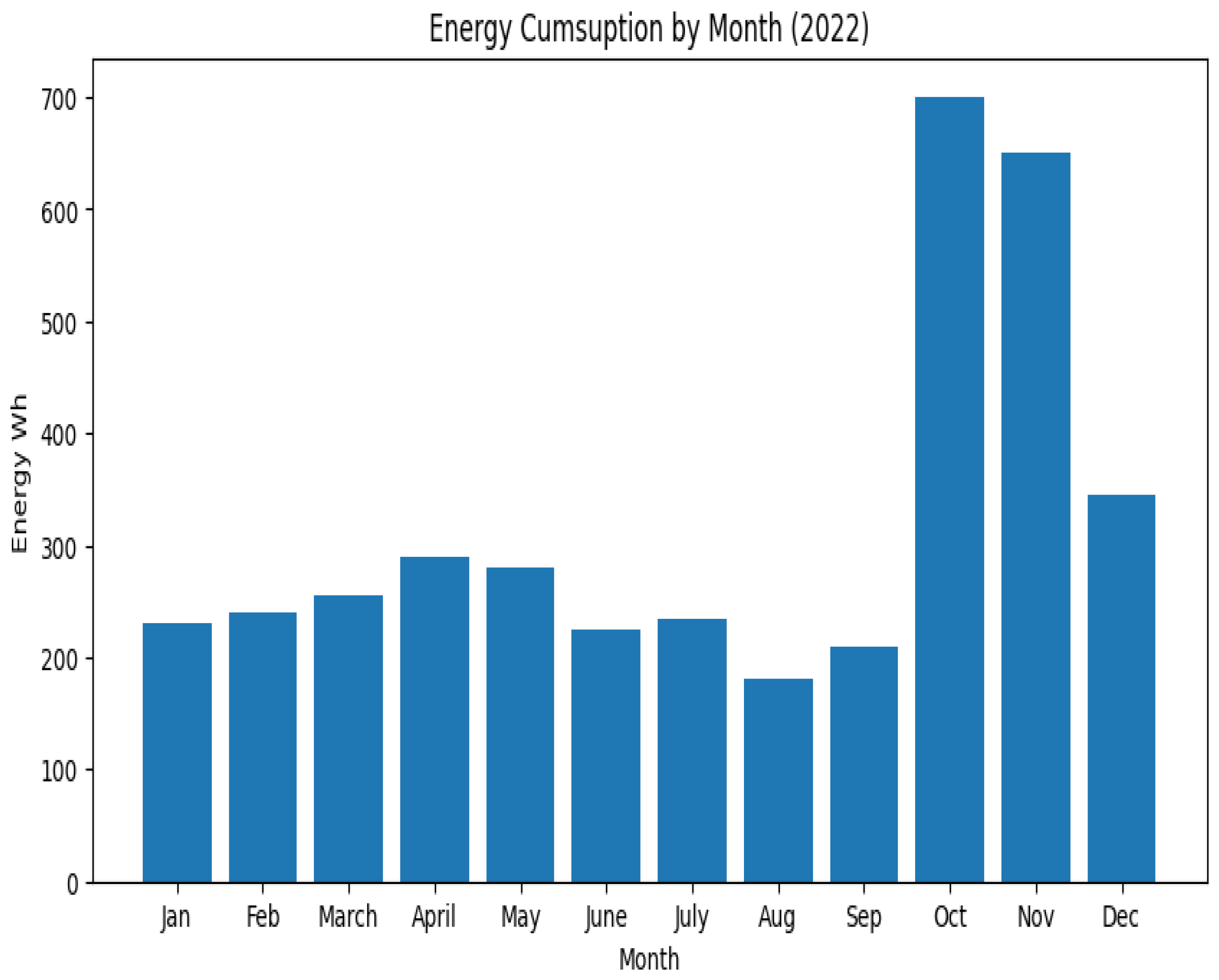

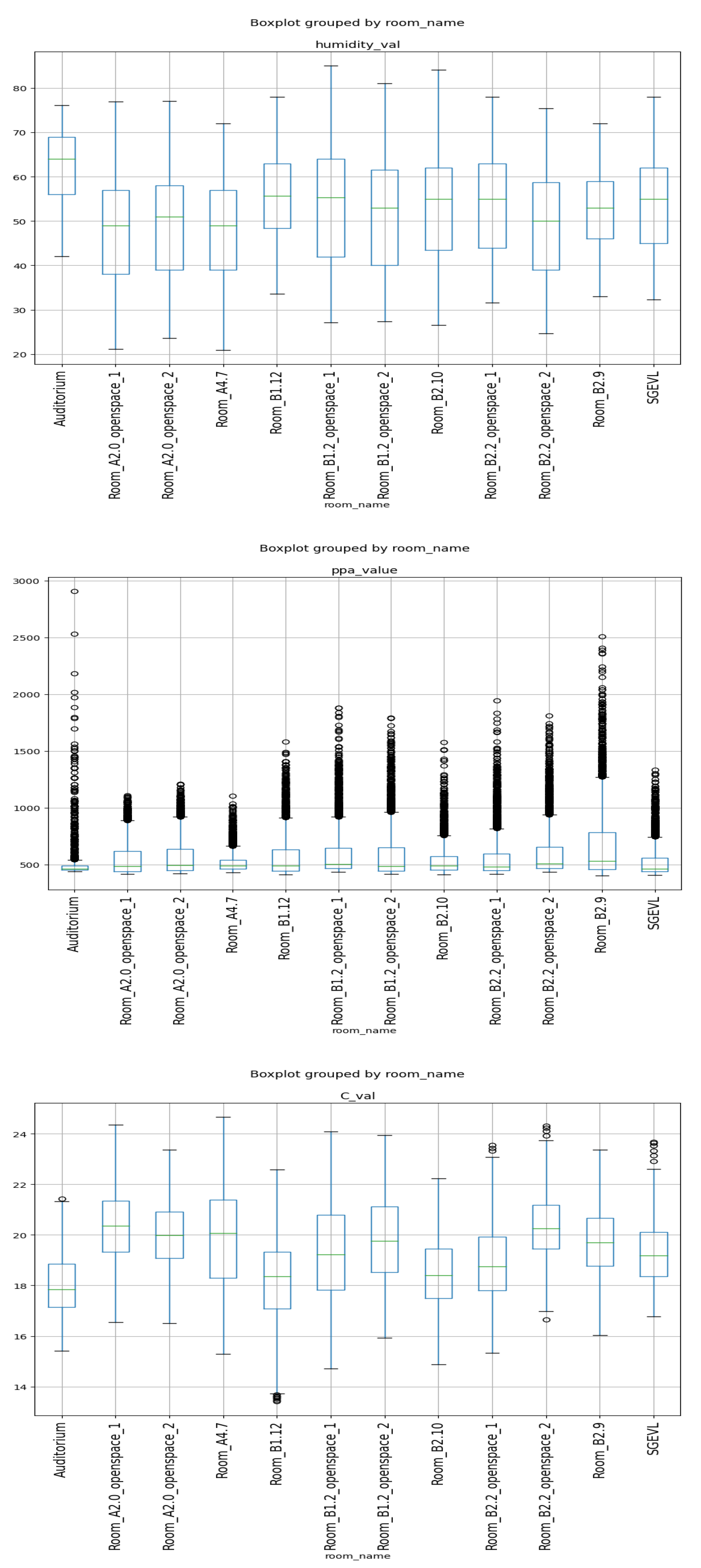

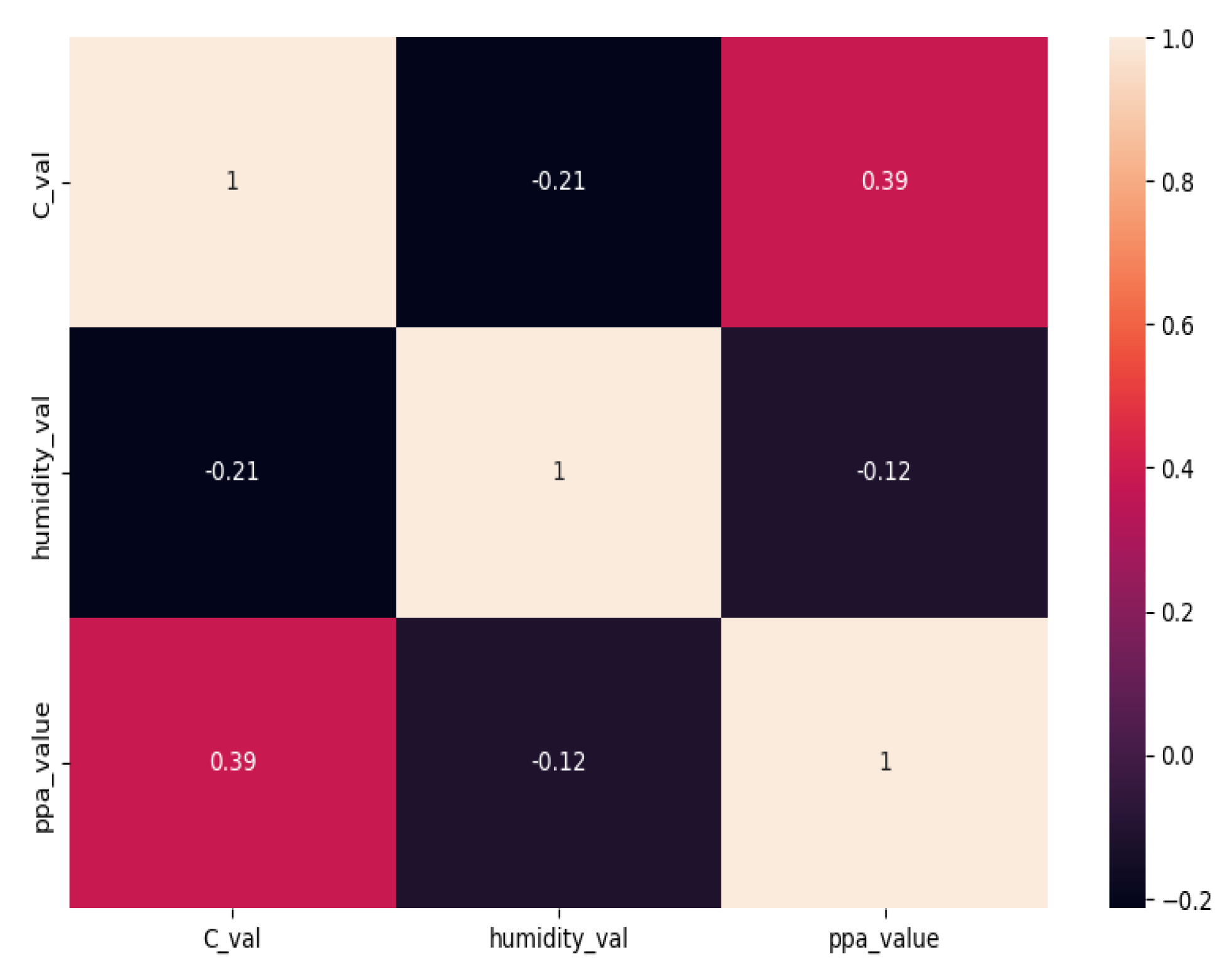

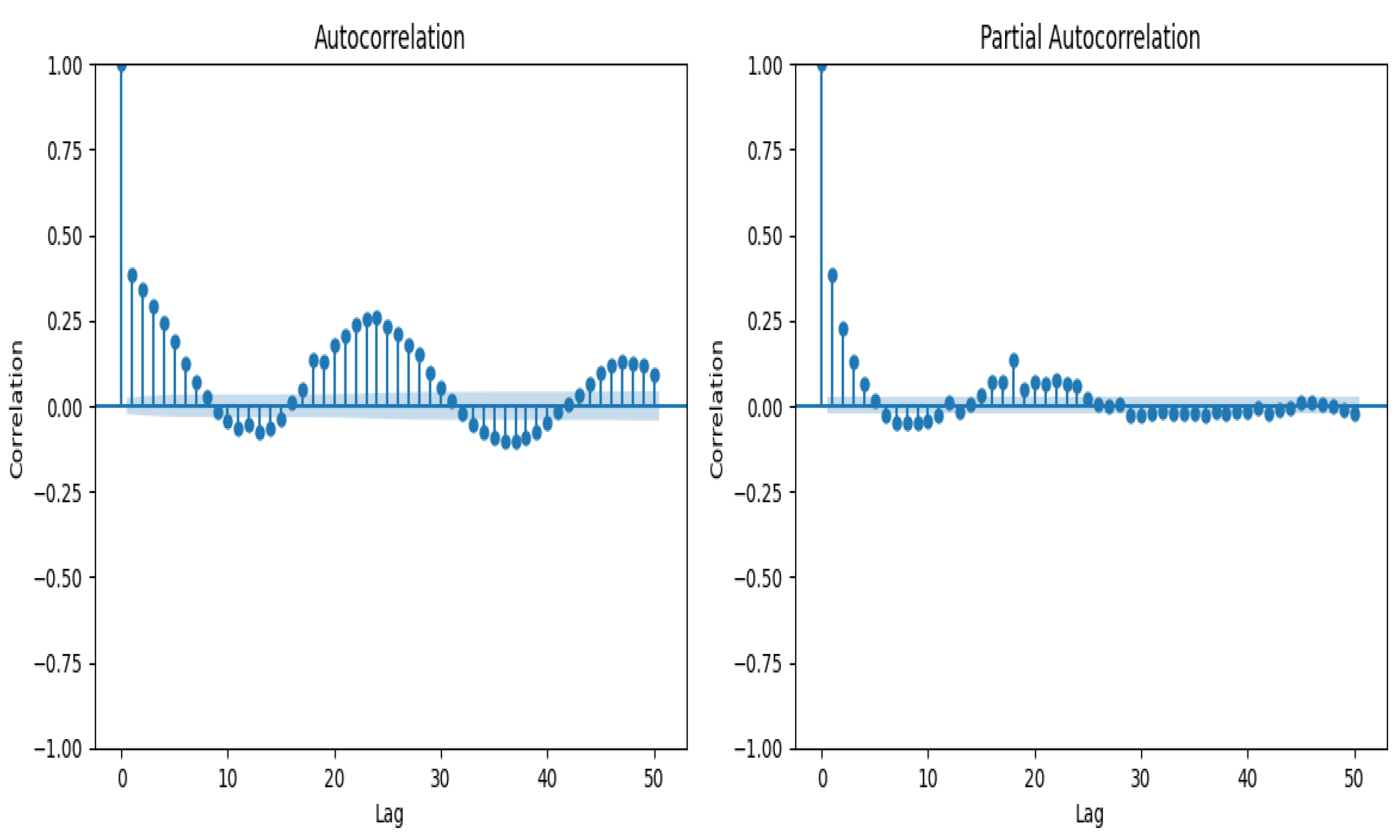

4.4. Data Analysis

4.5. Time-Series Analysis and Pre-Processing

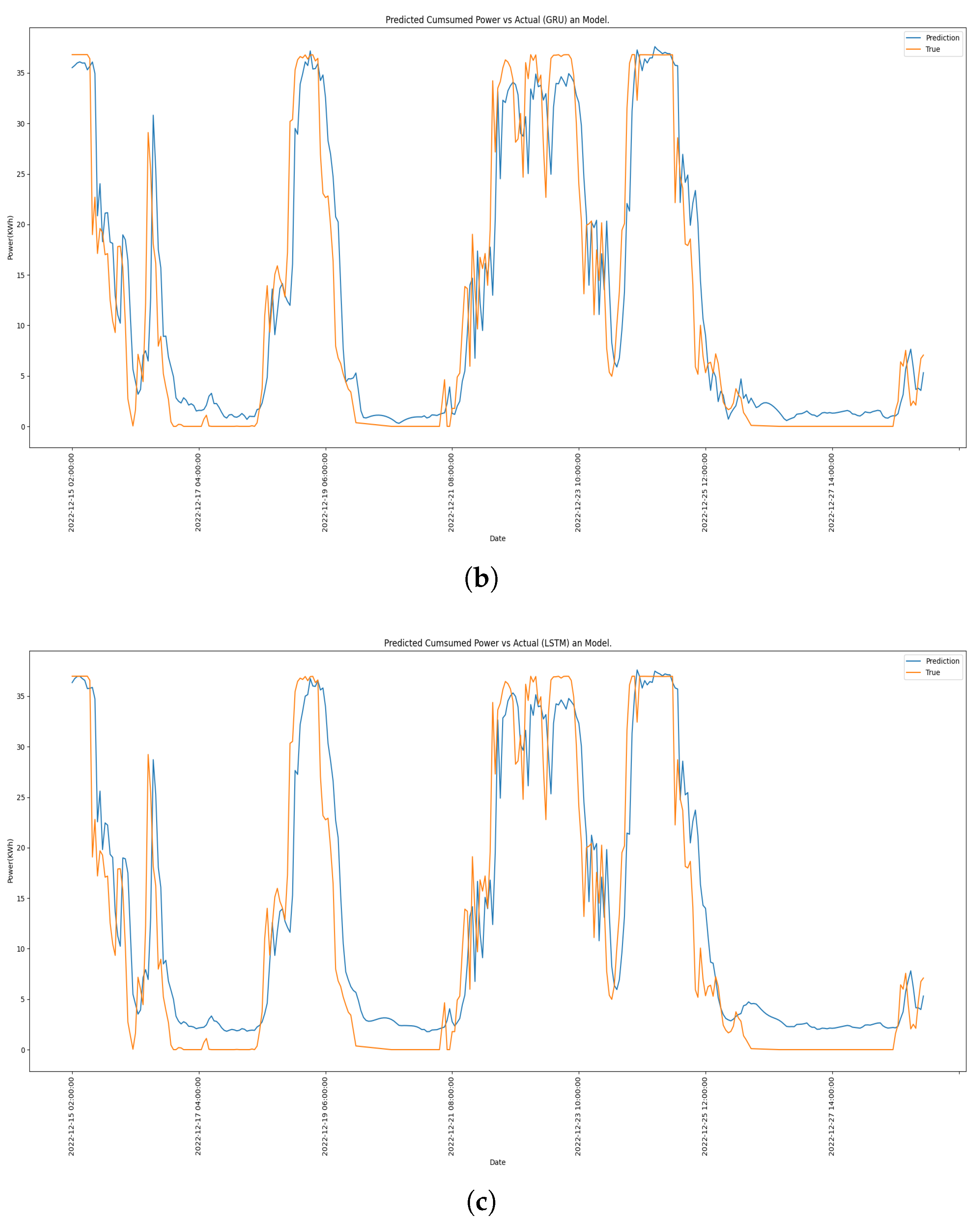
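The abbreviations list ACF and PACF, which suggests that autocorrelation analysis is part of this time-series analysis step. The following is a minimal sketch of the sample ACF using the usual biased estimator. It should agree with `statsmodels.tsa.stattools.acf` under its default (non-adjusted) settings. The synthetic sinusoid is an illustrative assumption, chosen because hourly data with a daily cycle shows a peak at lag 24.

```python
import numpy as np


def acf(x, max_lag):
    """Sample autocorrelation r_k for lags k = 0..max_lag.

    Lag-k autocovariance divided by the lag-0 autocovariance, both computed
    around the full-sample mean (the standard biased estimator).
    """
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    denom = np.dot(d, d)
    return np.array([np.dot(d[: len(d) - k], d[k:]) / denom for k in range(max_lag + 1)])


if __name__ == "__main__":
    t = np.arange(24 * 50)                    # 50 days of synthetic hourly samples
    load = np.sin(2 * np.pi * t / 24)         # pure daily cycle, for illustration only
    r = acf(load, 48)
    print(round(r[24], 2), round(r[12], 2))   # strong positive lag-24, negative lag-12
```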

5. Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| NLP | Natural language processing |
| CNN | Convolutional neural network |
| ViT | Vision transformer |
| AUC | Area under the curve |
| MLP | Multilayer perceptron |
| BERT | Bidirectional encoder representations from transformers |
| DT | Digital twin |
| GRU | Gated recurrent unit |
| LSTM | Long short-term memory |
| RNN | Recurrent neural network |
| SGD | Stochastic gradient descent |
| MSE | Mean squared error |
| MAPE | Mean absolute percentage error |
| ACF | Autocorrelation function |
| PACF | Partial autocorrelation function |


LSTM

| N Cells | N Nodes | Window | Parameters | MAPE | MSE |
|---|---|---|---|---|---|
| 400 | 128 | 32 | 18M | 18.11% | 15.43% |
| 300 | 128 | 32 | 16M | 16.34% | 13.35% |
| 200 | 256 | 32 | 13M | 17.41% | 14.45% |
| 300 | 256 | 32 | 14M | 14.26% | 11.65% |
| 200 | 128 | 24 | 12M | 12.42% | 10.02% |
| 250 | 256 | 32 | 14M | 10.02% | 7.04% |

GRU

| N Cells | N Nodes | Window | Parameters | MAPE | MSE |
|---|---|---|---|---|---|
| 400 | 128 | 32 | 14M | 23.56% | 21.43% |
| 300 | 128 | 32 | 13M | 15.98% | 12.54% |
| 200 | 256 | 32 | 12M | 13.93% | 11.56% |
| 300 | 256 | 12 | 13M | 15.34% | 12.76% |
| 200 | 128 | 26 | 11M | 11.66% | 9.43% |
| 250 | 128 | 32 | 12M | 13.59% | 10.49% |

Transformer

| Heads | Enc/Dec | Window | Parameters | MAPE | MSE |
|---|---|---|---|---|---|
| 10 | 10/10 | 32 | 24M | 12.33% | 10.61% |
| 10 | 6/6 | 32 | 14M | 11.25% | 9.75% |
| 10 | 5/5 | 32 | 13M | 10.43% | 8.27% |
| 6 | 10/10 | 32 | 20M | 10.24% | 8.11% |
| 6 | 6/6 | 32 | 16M | 7.09% | 5.42% |
| 5 | 5/5 | 32 | 13M | 8.36% | 6.62% |
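The tables rank every configuration by MAPE and MSE. The following is a minimal sketch of both metrics using their textbook definitions. The tables quote MSE as a percentage, which suggests it was computed on scaled data. Because this section does not state the normalization, the function below returns plain MSE in the squared units of its inputs. The example values are made up for illustration.

```python
import numpy as np


def mse(y_true, y_pred):
    """Mean squared error, in squared units of the target."""
    y_true, y_pred = np.asarray(y_true, dtype=float), np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent; undefined where y_true is zero."""
    y_true, y_pred = np.asarray(y_true, dtype=float), np.asarray(y_pred, dtype=float)
    if np.any(y_true == 0):
        raise ValueError("MAPE is undefined when y_true contains zeros")
    return float(100.0 * np.mean(np.abs((y_true - y_pred) / y_true)))


if __name__ == "__main__":
    actual = [100.0, 200.0, 400.0]      # made-up consumption values
    forecast = [110.0, 190.0, 400.0]
    print(mape(actual, forecast), mse(actual, forecast))
```

Because MAPE divides by the true value, hours with very low consumption can dominate it. This is one reason to report it alongside MSE, as the tables do.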

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Oliveira, H.S.; Oliveira, H.P. Transformers for Energy Forecast. Sensors 2023, 23, 6840. https://doi.org/10.3390/s23156840
