Multitarget-Tracking Method Based on the Fusion of Millimeter-Wave Radar and LiDAR Sensor Information for Autonomous Vehicles

Abstract

1. Introduction

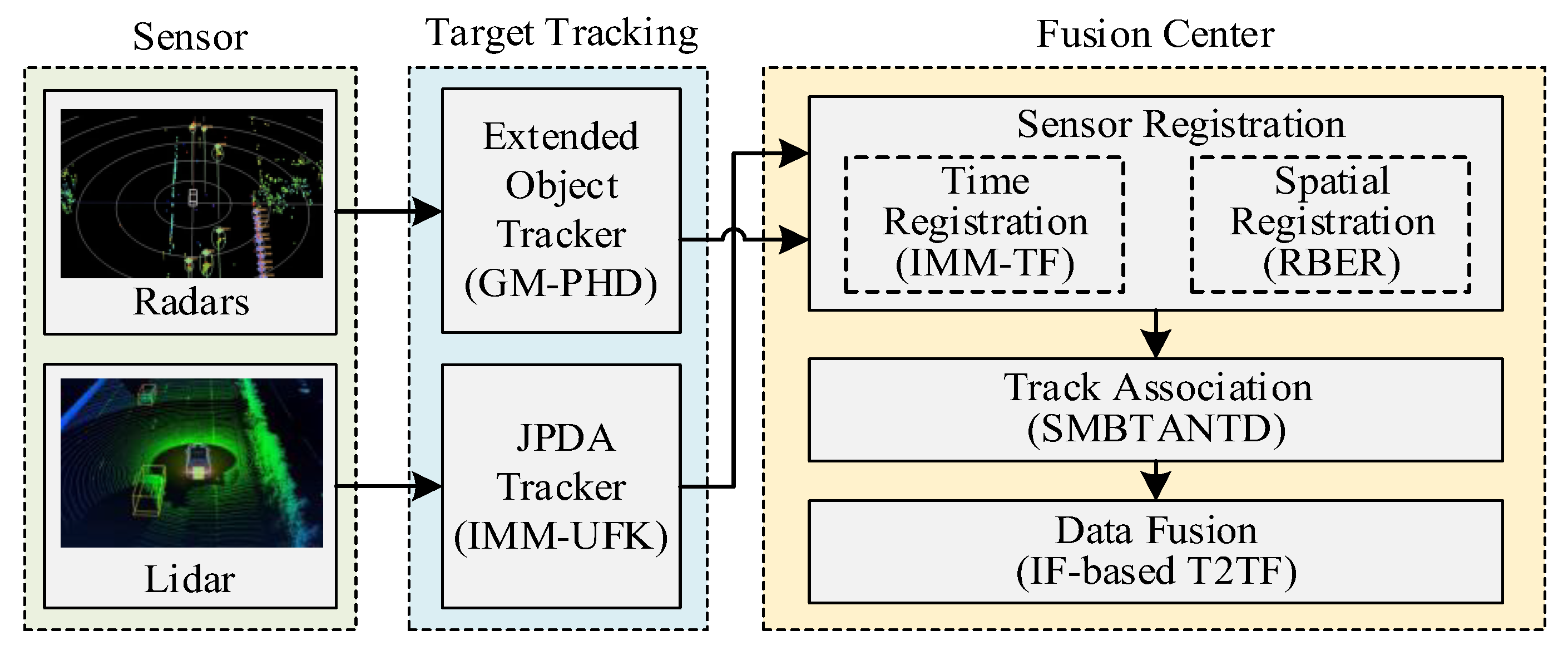

2. Program Framework

- Single-sensor target tracking: An extended target tracker is built from the Gaussian mixture probability hypothesis density (GM-PHD) algorithm and a rectangular target model. The millimeter-wave radar uses this tracker to track multiple targets and generate local tracks of the target objects. A joint probabilistic data association (JPDA) tracker configured with the interacting multiple model–unscented Kalman filter (IMM-UKF) algorithm is then built, and the lidar uses it to track multiple targets and generate their local tracks.

- Sensor spatiotemporal registration: A Kalman filter aligns the asynchronous measurements that each sensor reports for the same target to a common time instant, which achieves time registration. The Residual Bias Estimation Registration (RBER) method estimates and compensates for the bias in the sensors' detections of common targets, which achieves spatial registration.

- Sensor track association: The sequential m-best track association algorithm based on new target density (SMBTANTD) works iteratively: at each iteration, the tracks of the next sensor are introduced and associated with the results of the previous iterations.

- Sensor data fusion: The IF heterogeneous sensor fusion algorithm avoids double-counting the information common to both sensors and optimally combines the track information provided by the millimeter-wave radar and the lidar, yielding more accurate target state estimates.
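For the radar side of the single-sensor tracking step, a GM-PHD filter represents the target intensity as a weighted sum of Gaussian components, and the expected number of targets equals the sum of the weights. The sketch below shows only the usual state-extraction step, assuming the components have already been predicted, updated, pruned, and merged, and assuming a weight threshold of 0.5 (a common choice, not a value stated in this section). The rectangular extent model is not represented, and `Component`, `expected_target_count`, and `extract_states` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Component:
    """One Gaussian term of a GM-PHD intensity (hypothetical container)."""
    weight: float
    mean: tuple  # e.g. (x, y) or (x, y, vx, vy)


def expected_target_count(components):
    # The integral of a Gaussian-mixture PHD equals the sum of its weights.
    return sum(c.weight for c in components)


def extract_states(components, threshold=0.5):
    """Return the means of components whose weight exceeds the threshold.

    A component with weight well above 1 stands for more than one target
    in expectation, so its mean is reported round(weight) times.
    """
    states = []
    for c in components:
        if c.weight > threshold:
            states.extend([c.mean] * max(1, round(c.weight)))
    return states
```

For example, components with weights 0.9, 0.2, and 1.6 give an expected count of 2.7; the 0.2 component is dropped and the 1.6 component is reported twice.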
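On the lidar side, an IMM estimator such as the IMM-UKF runs one filter per motion model and tracks how probable each model is. The sketch below covers only the model-probability bookkeeping of one IMM cycle (mixing weights, probability prediction, and probability update), assuming the measurement likelihoods have already been computed by the per-model UKFs; the transition matrix and likelihoods in the example are illustrative, and the helper names are hypothetical.

```python
def imm_mode_probabilities(mu, trans, likelihoods):
    """One IMM model-probability cycle.

    mu:          prior model probabilities, mu[i]
    trans:       Markov transition matrix, trans[i][j] = P(model j | model i)
    likelihoods: measurement likelihood reported by each model's filter
    Returns (predicted probabilities c, updated probabilities).
    """
    r = len(mu)
    # Prediction: c_j = sum_i p_ij * mu_i
    c = [sum(trans[i][j] * mu[i] for i in range(r)) for j in range(r)]
    # Update: mu_j is proportional to Lambda_j * c_j, then normalized.
    unnorm = [likelihoods[j] * c[j] for j in range(r)]
    total = sum(unnorm)
    return c, [u / total for u in unnorm]


def mixing_probabilities(mu, trans, c):
    """mu_{i|j} = p_ij * mu_i / c_j: the weight of model i's estimate in
    the mixed initial condition handed to model j's filter."""
    r = len(mu)
    return [[trans[i][j] * mu[i] / c[j] for j in range(r)] for i in range(r)]
```

For example, with prior `[0.5, 0.5]`, transition matrix `[[0.9, 0.1], [0.1, 0.9]]`, and likelihoods `[0.2, 0.6]`, the updated model probabilities are `[0.25, 0.75]`.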
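Time registration brings tracks reported at different timestamps to a common fusion time. A minimal sketch of the Kalman prediction used for that alignment, assuming a one-axis constant-velocity model with continuous white-noise acceleration of spectral density `q`; the paper's actual motion model and noise settings are not given in this section, and `predict_to` is a hypothetical helper. A complete registration step would apply this per axis (or with a joint state) and then run the usual measurement update.

```python
def predict_to(x, P, t_from, t_to, q=0.0):
    """Propagate a 1-D constant-velocity state [position, velocity] and its
    2x2 covariance from time t_from to time t_to.

    x: [p, v]
    P: [[p11, p12], [p21, p22]]
    q: white-noise acceleration spectral density (assumed value)
    """
    dt = t_to - t_from
    # State transition F = [[1, dt], [0, 1]]
    x_new = [x[0] + dt * x[1], x[1]]
    # F P F^T, expanded element by element
    (a, b), (c, d) = P
    fpf = [
        [a + dt * (b + c) + dt * dt * d, b + dt * d],
        [c + dt * d, d],
    ]
    # Process noise of the continuous white-noise acceleration model
    Q = [
        [q * dt**3 / 3, q * dt**2 / 2],
        [q * dt**2 / 2, q * dt],
    ]
    P_new = [[fpf[i][j] + Q[i][j] for j in range(2)] for i in range(2)]
    return x_new, P_new
```

For example, a radar track stamped at 1.00 s with position 10 m and velocity 2 m/s, predicted to a lidar timestamp of 1.05 s with `q = 0`, moves to 10.1 m with unchanged velocity, and its position variance grows from 1 to 1.0025.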
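The RBER method itself is not reproduced in this section. As a deliberately simplified stand-in, and not RBER, the sketch below estimates a constant translational offset between one sensor and a reference sensor from time-aligned position estimates of common targets. Under an additive-bias model with equal, independent noise, the least-squares estimate of that offset is the mean residual. Rotational bias, which a complete spatial registration method would also estimate, is ignored, and the function names are hypothetical.

```python
def estimate_translation_bias(ref_positions, sensor_positions):
    """Least-squares estimate of a constant offset b such that
    sensor_position ~= ref_position + b, from paired, time-aligned (x, y)
    estimates of the same common targets."""
    if len(ref_positions) != len(sensor_positions) or not ref_positions:
        raise ValueError("need equal, non-empty lists of paired positions")
    n = len(ref_positions)
    pairs = list(zip(ref_positions, sensor_positions))
    bx = sum(s[0] - r[0] for r, s in pairs) / n
    by = sum(s[1] - r[1] for r, s in pairs) / n
    return bx, by


def compensate(positions, bias):
    # Remove the estimated offset from every detection of the biased sensor.
    return [(x - bias[0], y - bias[1]) for x, y in positions]
```

With residuals of (0.5, 0.2), (0.4, 0.1), and (0.6, 0.3) m over three common targets, the estimated offset is (0.5, 0.2) m.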
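Sequential association processes one sensor at a time: the tracks of the next sensor are associated with the current track list, and only the m lowest-cost hypotheses are kept. A minimal sketch of one such step, assuming a precomputed cost matrix (for example, negative log-likelihoods of track pairs) and a fixed cost `new_cost` for declaring an incoming track a new target, which stands in for the new-target-density term. The enumeration here is exhaustive and therefore only suits small problems; practical m-best solvers use Murty's ranked-assignment algorithm instead. The function name is hypothetical.

```python
import heapq
from itertools import product


def m_best_associations(cost, new_cost, m):
    """Return up to m (total_cost, assignment) hypotheses, cheapest first.

    cost[i][j]: cost of assigning incoming track j to existing track i
                (the matrix must have at least one row)
    new_cost:   cost of declaring an incoming track a new target (assumed)
    assignment[j] is the existing-track index for incoming track j, or None.
    """
    n_existing, n_incoming = len(cost), len(cost[0])
    options = list(range(n_existing)) + [None]
    hypotheses = []
    for assignment in product(options, repeat=n_incoming):
        used = [a for a in assignment if a is not None]
        if len(used) != len(set(used)):
            continue  # an existing track may absorb at most one incoming track
        total = sum(new_cost if a is None else cost[a][j]
                    for j, a in enumerate(assignment))
        hypotheses.append((total, assignment))
    # nsmallest with a key behaves like sorted(...)[:m], so ties stay stable.
    return heapq.nsmallest(m, hypotheses, key=lambda h: h[0])
```

With more than two sensors, each surviving hypothesis would be extended with the next sensor's tracks in the same way and the combined list pruned back to the m best.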
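The fusion rule is named only as "IF" heterogeneous fusion here. As an illustration, assuming an information-form rule, the sketch below implements information matrix fusion for two tracks of the same target: it adds the two sensors' information and subtracts the common prior information once, which is one standard way to avoid counting shared information twice. It assumes both tracks are already time-aligned, registered, associated, and expressed in a common two-element state. It also assumes the common prior `x_prior`, `P_prior` is the previous fused estimate predicted to the current time; the function names are hypothetical.

```python
def inv2(M):
    """Inverse of a 2x2 matrix [[a, b], [c, d]]."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("singular matrix")
    return [[d / det, -b / det], [-c / det, a / det]]


def matvec(M, v):
    return [M[0][0] * v[0] + M[0][1] * v[1], M[1][0] * v[0] + M[1][1] * v[1]]


def information_matrix_fusion(x1, P1, x2, P2, x_prior, P_prior):
    """Fuse two sensor tracks in information form:

    Y = P1^-1 + P2^-1 - Pp^-1
    y = P1^-1 x1 + P2^-1 x2 - Pp^-1 xp
    Returns the fused state Y^-1 y and covariance Y^-1.
    """
    Y1, Y2, Yp = inv2(P1), inv2(P2), inv2(P_prior)
    Y = [[Y1[i][j] + Y2[i][j] - Yp[i][j] for j in range(2)] for i in range(2)]
    y1, y2, yp = matvec(Y1, x1), matvec(Y2, x2), matvec(Yp, x_prior)
    y = [y1[k] + y2[k] - yp[k] for k in range(2)]
    P = inv2(Y)
    return matvec(P, y), P
```

For example, two unit-variance tracks at 2 and 4 m with a common prior of 3 m and variance 4 fuse to 3 m with variance 1/1.75 (about 0.571), slightly larger than the 0.5 that naive addition of the two information matrices would claim.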

3. Track Fusion and Management

3.1. Single-Sensor Target Tracking

3.1.1. Millimeter-Wave Radar Target Tracking

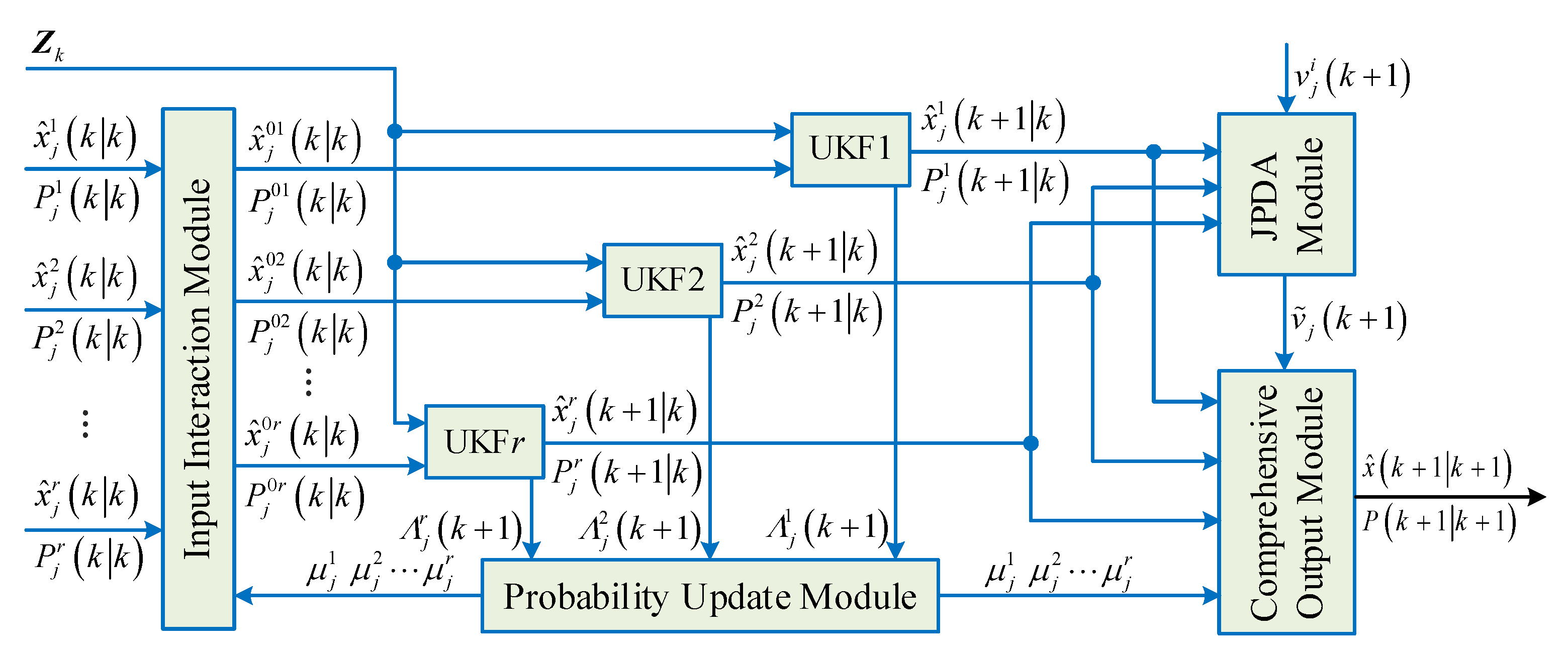

3.1.2. LiDAR Target Tracking

3.2. Sensor Spatiotemporal Registration

3.2.1. Time Registration

3.2.2. Spatial Registration

3.3. Sensor Track Association

3.4. Heterogeneous Track-to-Track Fusion

4. Simulation Verification and Analysis

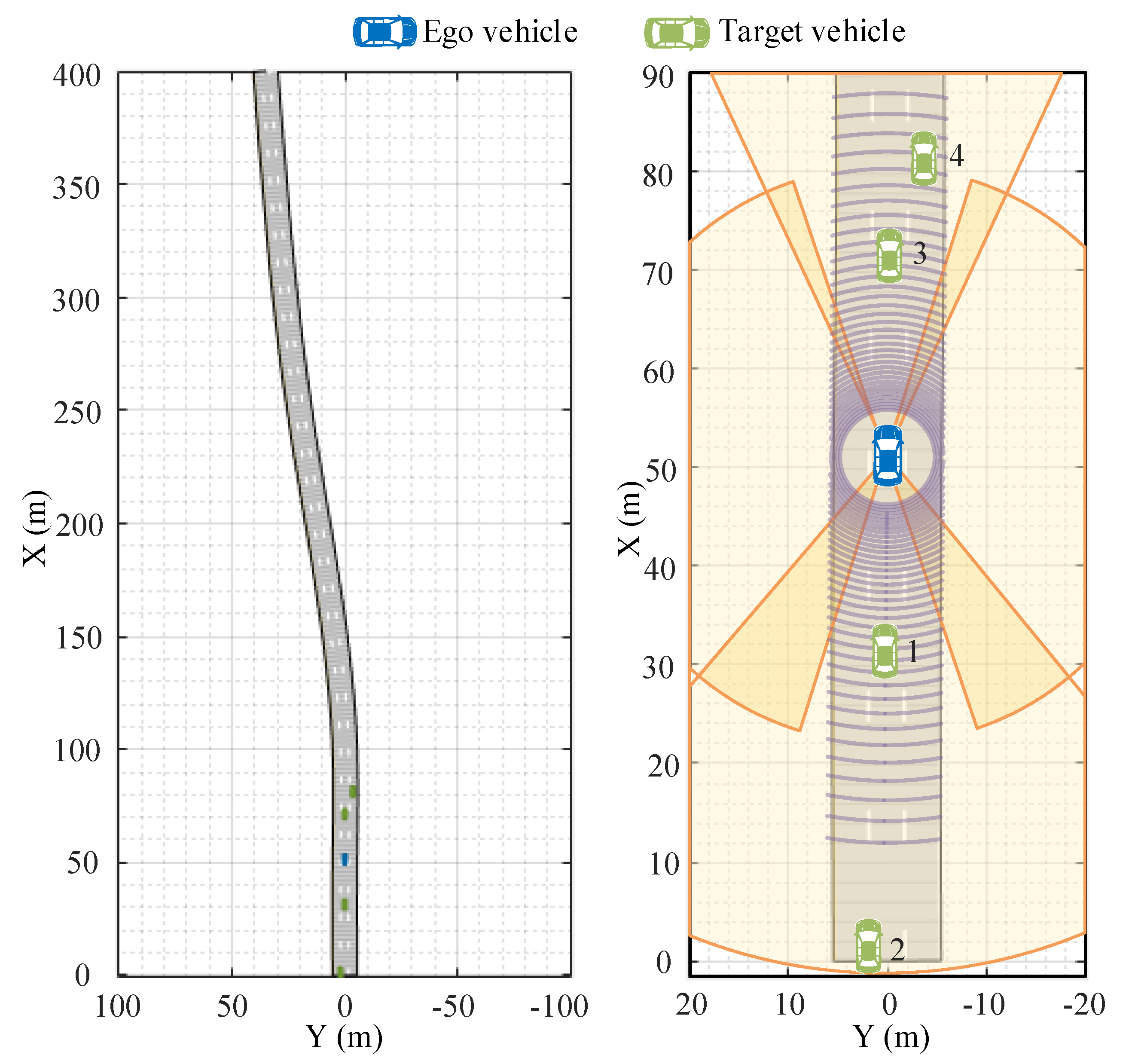

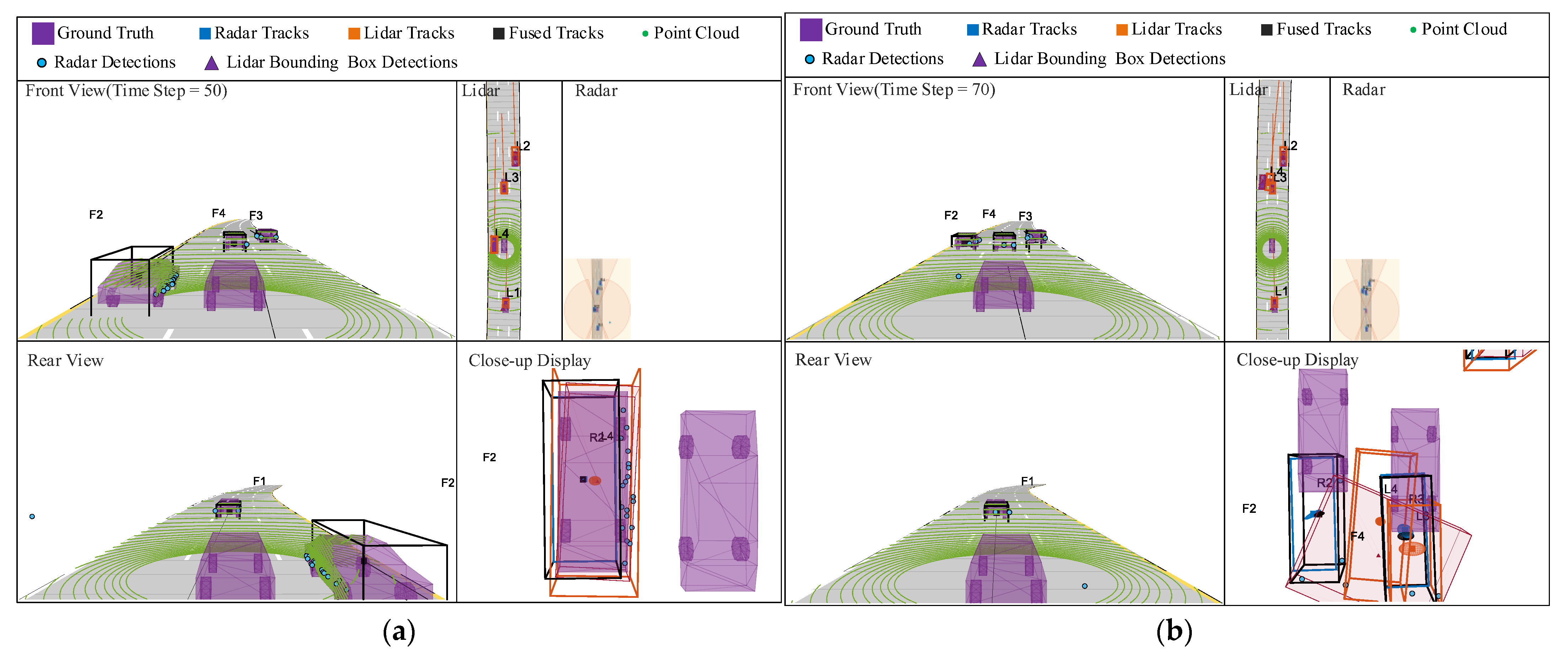

4.1. Simulation Scene Visualization Analysis

4.2. Quantitative Index Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Parameter | Ego Vehicle | Target Vehicle 1 | Target Vehicle 2 | Target Vehicle 3 | Target Vehicle 4 |
|---|---|---|---|---|---|
| Speed (m/s) | 25 | 25 | 35 | 25 | 25 |
| Length (m) | 4.7 | 4.7 | 4.7 | 4.7 | 4.7 |
| Width (m) | 1.8 | 1.8 | 1.8 | 1.8 | 1.8 |
| Height (m) | 1.4 | 1.4 | 1.4 | 1.4 | 1.4 |

| Scenario parameter | Value |
|---|---|
| Road centers | [0, 0; 50, 0; 100, 0; 250, 20; 400, 35] |
| Sample time (s) | 0.1 |
| Lane specifications | 3.0 |

| Parameter | Front Radar | Rear Radar | Left Radar | Right Radar | LiDAR |
|---|---|---|---|---|---|
| Mounting location (m) | (3.7, 0.0, 0.6) | (−1.0, 0.0, 0.6) | (1.3, 0.9, 0.6) | (1.3, −0.9, 0.6) | (3.7, 0.0, 0.6) |
| Mounting angles (deg) | (0.0, 0.0, 0.0) | (180.0, 0.0, 0.0) | (90.0, 0.0, 0.0) | (−90.0, 0.0, 0.0) | (0.0, 0.0, 2.0) |
| Horizontal field of view (deg) | (5.0, 30.0) | (5.0, 90.0) | (5.0, 160.0) | (5.0, 160.0) | (5.0, 360.0) |
| Vertical field of view (deg) | - | - | - | - | (5.0, 40.0) |
| Azimuth resolution (deg) | 6.0 | 6.0 | 6.0 | 6.0 | 0.2 |
| Range limits (m) | (0.0, 250.0) | (0.0, 100.0) | (0.0, 30.0) | (0.0, 30.0) | (0.0, 200.0) |
| Range resolution (m) | 2.5 | 2.5 | 2.5 | 2.5 | 1.25 |
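The mounting rows fix where each sensor sits on the ego vehicle, which is what a detection must be transformed by before the sensors can be compared in a common frame. A minimal sketch of that conversion, assuming the first mounting angle is yaw about the vertical axis in degrees and that the sensor frame has x forward and y to the left; only yaw is handled, so the LiDAR's 2.0° third angle is ignored. The `MOUNTS` dictionary copies the locations and first angles from the table, and `to_ego_frame` and `polar_to_cartesian` are hypothetical helpers.

```python
import math

# Mounting location (m) and first mounting angle (deg) from the table;
# treating that angle as yaw is an assumption.
MOUNTS = {
    "front_radar": ((3.7, 0.0, 0.6), 0.0),
    "rear_radar": ((-1.0, 0.0, 0.6), 180.0),
    "left_radar": ((1.3, 0.9, 0.6), 90.0),
    "right_radar": ((1.3, -0.9, 0.6), -90.0),
    "lidar": ((3.7, 0.0, 0.6), 0.0),  # the 2.0 deg third angle is ignored
}


def to_ego_frame(sensor, point):
    """Rotate a sensor-frame point (x, y, z) by the sensor's yaw and
    translate it by the sensor's mounting location."""
    (tx, ty, tz), yaw_deg = MOUNTS[sensor]
    yaw = math.radians(yaw_deg)
    x, y, z = point
    return (
        tx + x * math.cos(yaw) - y * math.sin(yaw),
        ty + x * math.sin(yaw) + y * math.cos(yaw),
        tz + z,
    )


def polar_to_cartesian(range_m, azimuth_deg):
    # Radar detections are often reported as range/azimuth; z is set to 0.
    az = math.radians(azimuth_deg)
    return (range_m * math.cos(az), range_m * math.sin(az), 0.0)
```

Under these assumptions, a left-radar detection 5 m straight ahead of that sensor lands at about (1.3, 5.9, 0.6) m in the ego frame, that is, beside the vehicle on its left.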

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shi, J.; Tang, Y.; Gao, J.; Piao, C.; Wang, Z. Multitarget-Tracking Method Based on the Fusion of Millimeter-Wave Radar and LiDAR Sensor Information for Autonomous Vehicles. Sensors 2023, 23, 6920. https://doi.org/10.3390/s23156920
