BoxStacker: Deep Reinforcement Learning for 3D Bin Packing Problem in Virtual Environment of Logistics Systems

Abstract

1. Introduction

- How can the courier logistics problem of loading and unloading boxes be solved? It is addressed and evaluated by applying Reinforcement Learning (RL) to develop a bin packing algorithm.

- How can solutions that behave as they would in reality be provided? This is addressed by building the experimental environment in a 3D game engine.

- To what degree are the proposed solutions optimal? This is analyzed by evaluating the feasible solutions produced by deep reinforcement learning (DRL) and identifying the best positions for the packed boxes.

2. Literature Survey

2.1. Bin Packing Problem

2.2. Deep Reinforcement Learning in 3D BPP

2.3. Reinforcement Learning in 3D Game Engine

2.4. Summary and Opportunities

- Three-dimensional box stacking is a high-dimensional problem that legacy heuristic algorithms cannot solve effectively. Such methods can become impractical as the problem grows, owing to the “curse of dimensionality”, which makes them difficult to apply to real-world problems.

- Even though DRL can provide feasible solutions, its results often diverge from real-world behavior.

- Using an open space built in a physics-based game engine to give every arbitrarily shaped box full degrees of freedom remains an open challenge in previous literature.

- A simple model of 3D bin packing adapts more readily to new warehouse configurations.

- A more realistic bin packing environment helps reproduce real-world situations without resorting to statistical simulation.
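The “curse of dimensionality” mentioned in the first point can be made concrete with a back-of-the-envelope count. The sketch below assumes a hypothetical discretization in which each box may be placed at `n_positions` candidate positions in one of 6 axis-aligned orientations, and counts every ordering of the boxes combined with every placement choice. Overlap and support checks are ignored, so this is only an upper bound on what an exhaustive search would have to enumerate; the function name is illustrative.

```python
from math import factorial


def placement_plans(n_boxes: int, n_positions: int, n_orientations: int = 6) -> int:
    """Upper bound on brute-force packing plans (hypothetical discretization).

    Every ordering of the boxes (n!) is combined with an independent choice of
    (position, orientation) for each box; feasibility checks are ignored.
    """
    return factorial(n_boxes) * (n_positions * n_orientations) ** n_boxes


if __name__ == "__main__":
    for n in (2, 4, 8, 16):
        print(f"{n:>2} boxes: {placement_plans(n, n_positions=100):.3e} plans")
```

With 100 candidate positions, the count already exceeds 10^26 for eight boxes, which illustrates why exhaustive search quickly becomes impractical as the number of boxes grows.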

3. Methodology

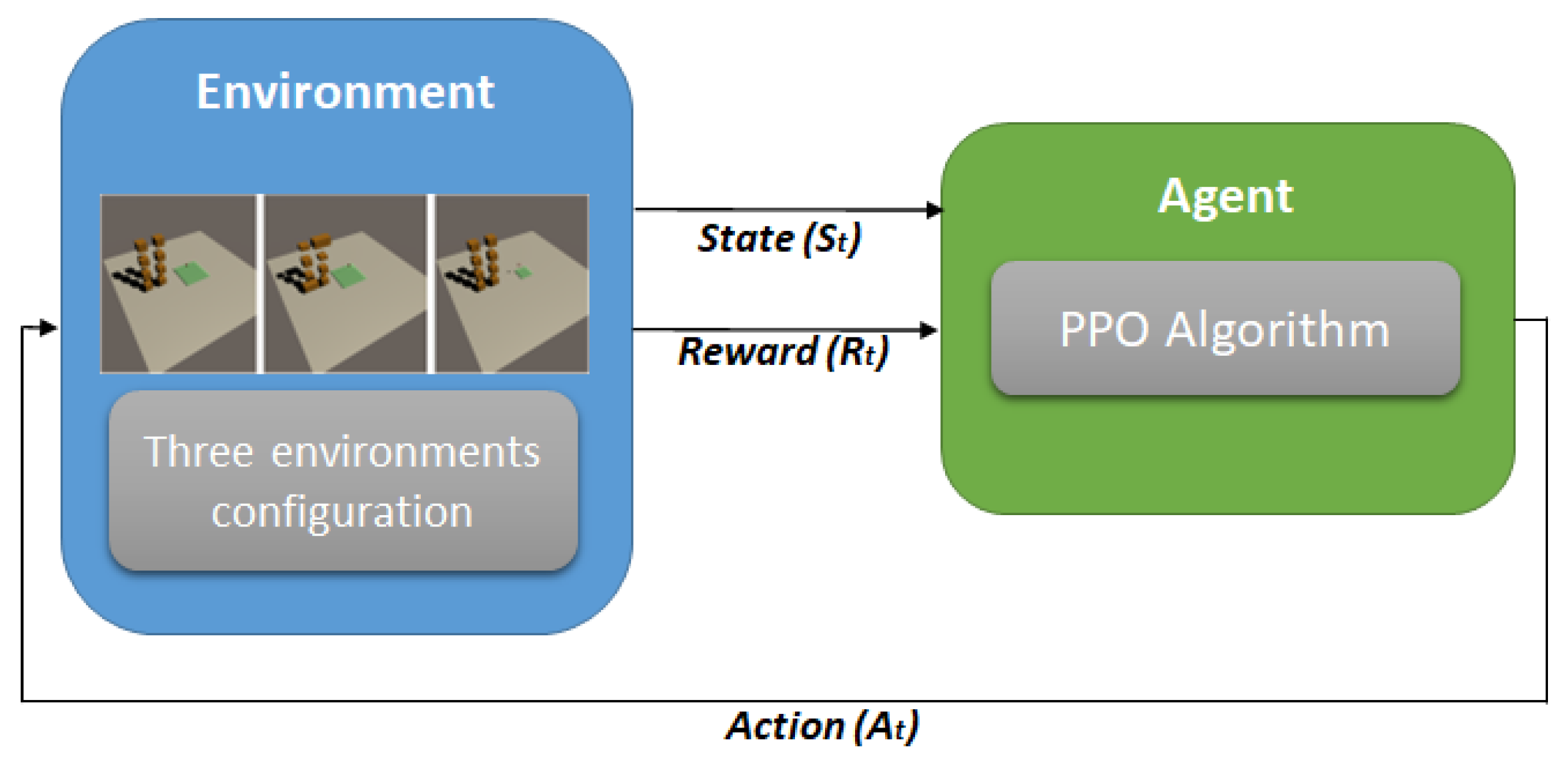

3.1. System Specifications

1. Define goals or specific tasks to be learned.

2. Define agents.

3. Define the agents’ behavior and observations.

4. Define the reward.

5. Define the conditions for the start and end of an episode.

6. Select the reinforcement learning model (algorithm).

Algorithm 1: Box Stacker Agent Learning
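The six specification steps above can be illustrated with a deliberately small environment. The sketch below is hypothetical and is not the BoxStacker implementation, which runs in a 3D game engine: it replaces physics with a discrete heightmap, lets the agent choose only the (x, y) corner of the next box (no rotation), uses an assumed reward of +1 per placed box minus 0.1 per unit of empty space trapped beneath it, and ends the episode when all boxes are placed or a placement is invalid. All class, method and parameter names are illustrative assumptions.

```python
import random
from dataclasses import dataclass


@dataclass
class Box:
    w: int  # footprint along x, in grid cells
    d: int  # footprint along y, in grid cells
    h: int  # height, in grid cells


class ToyStackingEnv:
    """Hypothetical heightmap environment mirroring the six specification steps.

    Step 1 (goal): place every queued box while leaving little empty space.
    Step 2 (agent): whatever policy supplies the (x, y) actions.
    """

    def __init__(self, width=4, depth=4, max_height=6, boxes=()):
        self.width, self.depth, self.max_height = width, depth, max_height
        self.initial_boxes = list(boxes)
        self.reset()

    def reset(self):
        # Step 5 (start): empty plane, full box queue.
        self.heights = [[0] * self.depth for _ in range(self.width)]
        self.queue = list(self.initial_boxes)
        self.done = not self.queue
        return self.observe()

    def observe(self):
        # Step 3 (observation): flattened heightmap plus the next box's size.
        nxt = self.queue[0] if self.queue else Box(0, 0, 0)
        return [h for col in self.heights for h in col] + [nxt.w, nxt.d, nxt.h]

    def step(self, action):
        # Step 3 (behavior): the action is the (x, y) cell of the box's corner.
        if self.done:
            raise RuntimeError("episode finished; call reset()")
        x, y = action
        box = self.queue[0]
        if x < 0 or y < 0 or x + box.w > self.width or y + box.d > self.depth:
            self.done = True  # Step 5 (end): box placed off the plane
            return self.observe(), -1.0, True
        cells = [(i, j) for i in range(x, x + box.w) for j in range(y, y + box.d)]
        base = max(self.heights[i][j] for i, j in cells)  # rests on tallest cell
        if base + box.h > self.max_height:
            self.done = True  # Step 5 (end): stack too tall
            return self.observe(), -1.0, True
        gap = sum(base - self.heights[i][j] for i, j in cells)  # empty space below
        for i, j in cells:
            self.heights[i][j] = base + box.h
        self.queue.pop(0)
        reward = 1.0 - 0.1 * gap  # Step 4 (reward): assumed shaping
        self.done = not self.queue  # Step 5 (end): all boxes placed
        return self.observe(), reward, self.done


def random_rollout(env, seed=0):
    """Stand-in for Step 6: a random policy that only picks in-bounds corners."""
    rng = random.Random(seed)
    env.reset()
    total, done = 0.0, env.done
    while not done:
        box = env.queue[0]
        action = (rng.randrange(env.width - box.w + 1),
                  rng.randrange(env.depth - box.d + 1))
        _, reward, done = env.step(action)
        total += reward
    return total
```

Step 6 would replace `random_rollout` with a learning algorithm that consumes the same `(observation, reward, done)` tuples; the `reset`/`step` shape follows the common Gym-style convention so that such a trainer could, in principle, be plugged in.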

3.2. Algorithm

3.3. Workflow of Deep Reinforcement Learning

4. Experiment and Results

4.1. Experimental Planning

4.2. Experiment Scenarios

4.3. Hyperparameter Tuning

4.4. Cumulative Rewards Comparison

4.5. Evaluation

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Hyperparameter | Value |
|---|---|
| Batch Size | 10 |
| Buffer Size | 100 |
| Learning Rate | 3.0 × 10⁻⁴ |
| Epoch | 3 |
| Beta | 5.0 × 10⁻⁴ |
| Epsilon | 0.2 |
| Lambd | 0.99 |
| Max Steps | 50,000 |

| Hyperparameter | Value |
|---|---|
| Batch Size | 10 |
| Buffer Size | 100 |
| Learning Rate | 2.0 × 10⁻⁴ |
| Epoch | 3 |
| Beta | 5.0 × 10⁻⁴ |
| Epsilon | 0.2 |
| Lambd | 0.99 |
| Max Steps | 40,000 |
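The two hyperparameter tables above use the names Beta, Epsilon and Lambd, which match the PPO trainer settings in Unity ML-Agents (entropy regularization strength, clipping range and the GAE λ, respectively). Assuming the values carry those meanings, the sketch below shows where each one enters a standard clipped-surrogate PPO update. The discount factor `gamma`, the function names and the pure-Python form are illustrative assumptions, not the authors' training code.

```python
import math


def gae_advantages(rewards, values, last_value, gamma=0.99, lambd=0.99):
    """Generalized Advantage Estimation for one trajectory.

    gamma (discount) is an assumed value; it does not appear in the tables.
    The trajectory is assumed not to cross an episode boundary.
    """
    advantages = [0.0] * len(rewards)
    running, next_value = 0.0, last_value
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * next_value - values[t]  # one-step TD error
        running = delta + gamma * lambd * running             # lambd trades bias vs variance
        advantages[t] = running
        next_value = values[t]
    return advantages


def ppo_policy_loss(new_logp, old_logp, advantages, entropy, epsilon=0.2, beta=5.0e-4):
    """Clipped-surrogate PPO loss with an entropy bonus (lower is better)."""
    surrogate = 0.0
    for nl, ol, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(nl - ol)                                # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)  # epsilon = clip range
        surrogate += min(ratio * adv, clipped * adv)             # pessimistic of the two
    surrogate /= len(advantages)
    return -(surrogate + beta * entropy)                         # beta weights exploration


# Gradient steps per policy update, assuming ML-Agents-style buffer/batch semantics.
buffer_size, batch_size, num_epochs = 100, 10, 3
gradient_steps_per_update = (buffer_size // batch_size) * num_epochs
```

Under the same assumption that Buffer Size counts the experiences collected before each update and Batch Size is the minibatch size, each update runs 100 / 10 × 3 = 30 gradient steps, and Max Steps of 50,000 or 40,000 corresponds to roughly 500 or 400 policy updates.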

| Environment Setting | Cumulative Reward (Old Hyperparameters) | Cumulative Reward (New Hyperparameters) |
|---|---|---|
| Boxes of the same size | 7.823 | 12.66 |
| Boxes of different sizes | 5.866 | 6.101 |
| Smaller plane | 56.03 | 238.1 |
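The effect of the new hyperparameters can be expressed as a relative change for each environment setting. The short computation below uses only the values in the table above; the percentages are derived arithmetic rather than figures reported by the study, and the variable names are illustrative.

```python
# (old, new) cumulative rewards, copied from the table above.
cumulative_rewards = {
    "Boxes of the same size": (7.823, 12.66),
    "Boxes of different sizes": (5.866, 6.101),
    "Smaller plane": (56.03, 238.1),
}


def relative_change_pct(old: float, new: float) -> float:
    """Percentage change from the old to the new cumulative reward."""
    return (new - old) / old * 100.0


changes = {name: relative_change_pct(old, new)
           for name, (old, new) in cumulative_rewards.items()}

for name, pct in changes.items():
    print(f"{name}: {pct:+.1f}%")
```

This gives roughly +62%, +4% and +325% for the three settings, so the retuned hyperparameters help most on the smaller plane and least when the boxes differ in size.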

| Box / Solution | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Box 1 | 0.3659 | 0.3675 | 0.3678 | 0.3674 | 0.3672 |
| Box 2 | 0.4071 | 0.4071 | 0.4071 | 0.4071 | 0.4071 |
| Box 3 | 0.4076 | 0.4075 | 0.4075 | 0.4075 | 0.4075 |
| Box 4 | 0.9169 | 0.9168 | 0.9168 | 0.9169 | 0.9169 |
| Box 5 | 0.6786 | 0.6780 | 0.6774 | 0.6775 | 0.6776 |
| Box 6 | 0.9997 | 1.0001 | 1.0004 | 1.0003 | 1.0003 |
| Box 7 | 0.9635 | 0.9610 | 0.9601 | 0.9604 | 0.9607 |
| Box 8 | 1.0913 | 1.0401 | 1.0131 | 1.0147 | 1.0163 |
| Total Gaps | 5.8307 | 5.7780 | 5.7502 | 5.7520 | 5.7537 |
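Assuming the Total Gaps row is the sum of the eight per-box gaps, the totals can be recomputed and the best solution selected as the one with the smallest total. The recomputed sums agree with the reported totals to within about 0.0002, consistent with rounding of the per-box values. Variable names are illustrative.

```python
# Per-box gaps (rows: Box 1-8; columns: solutions 1-5), copied from the table above.
gaps = [
    [0.3659, 0.3675, 0.3678, 0.3674, 0.3672],
    [0.4071, 0.4071, 0.4071, 0.4071, 0.4071],
    [0.4076, 0.4075, 0.4075, 0.4075, 0.4075],
    [0.9169, 0.9168, 0.9168, 0.9169, 0.9169],
    [0.6786, 0.6780, 0.6774, 0.6775, 0.6776],
    [0.9997, 1.0001, 1.0004, 1.0003, 1.0003],
    [0.9635, 0.9610, 0.9601, 0.9604, 0.9607],
    [1.0913, 1.0401, 1.0131, 1.0147, 1.0163],
]
reported_totals = [5.8307, 5.7780, 5.7502, 5.7520, 5.7537]

# Column sums: total gap of each candidate solution.
totals = [sum(row[s] for row in gaps) for s in range(len(reported_totals))]

# The best solution leaves the least total gap (1-based, as in the table).
best = min(range(len(totals)), key=lambda s: totals[s]) + 1

print("recomputed totals:", [round(t, 4) for t in totals])
print("best solution:", best)
```

Solution 3 has the smallest total gap (5.7502), with solutions 4 and 5 close behind.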

| Criterion | Heuristics | RL without Engine | This Study |
|---|---|---|---|
| Roll-out | Limited | Limited | Efficient |
| Stability | Prone to Issues | Prone to Issues | Enhanced |
| Arbitrary Shape Handling | Limited | Limited | Yes |
| Visualization | Limited | Limited | Intuitive |
| Ease of Modification | Difficult | Difficult | Easy |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Murdivien, S.A.; Um, J. BoxStacker: Deep Reinforcement Learning for 3D Bin Packing Problem in Virtual Environment of Logistics Systems. Sensors 2023, 23, 6928. https://doi.org/10.3390/s23156928


Chicago/Turabian Style: Murdivien, Shokhikha Amalana, and Jumyung Um. 2023. "BoxStacker: Deep Reinforcement Learning for 3D Bin Packing Problem in Virtual Environment of Logistics Systems" Sensors 23, no. 15: 6928. https://doi.org/10.3390/s23156928
