Temporal Estimation of Non-Rigid Dynamic Human Point Cloud Sequence Using 3D Skeleton-Based Deformation for Compression

Abstract

1. Introduction

2. Dynamic Point Cloud Sequence

2.1. Dynamic Point Cloud

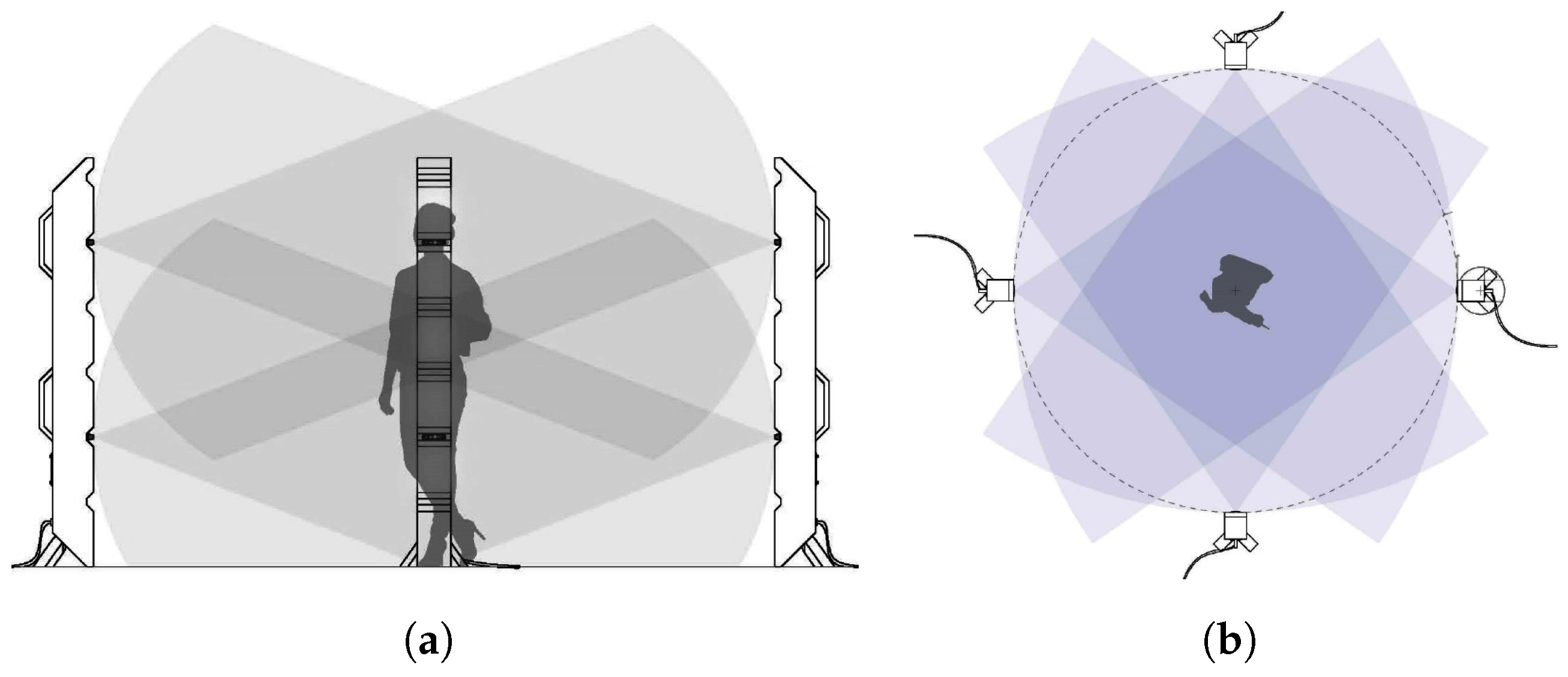

2.2. Dynamic Point Cloud Capture

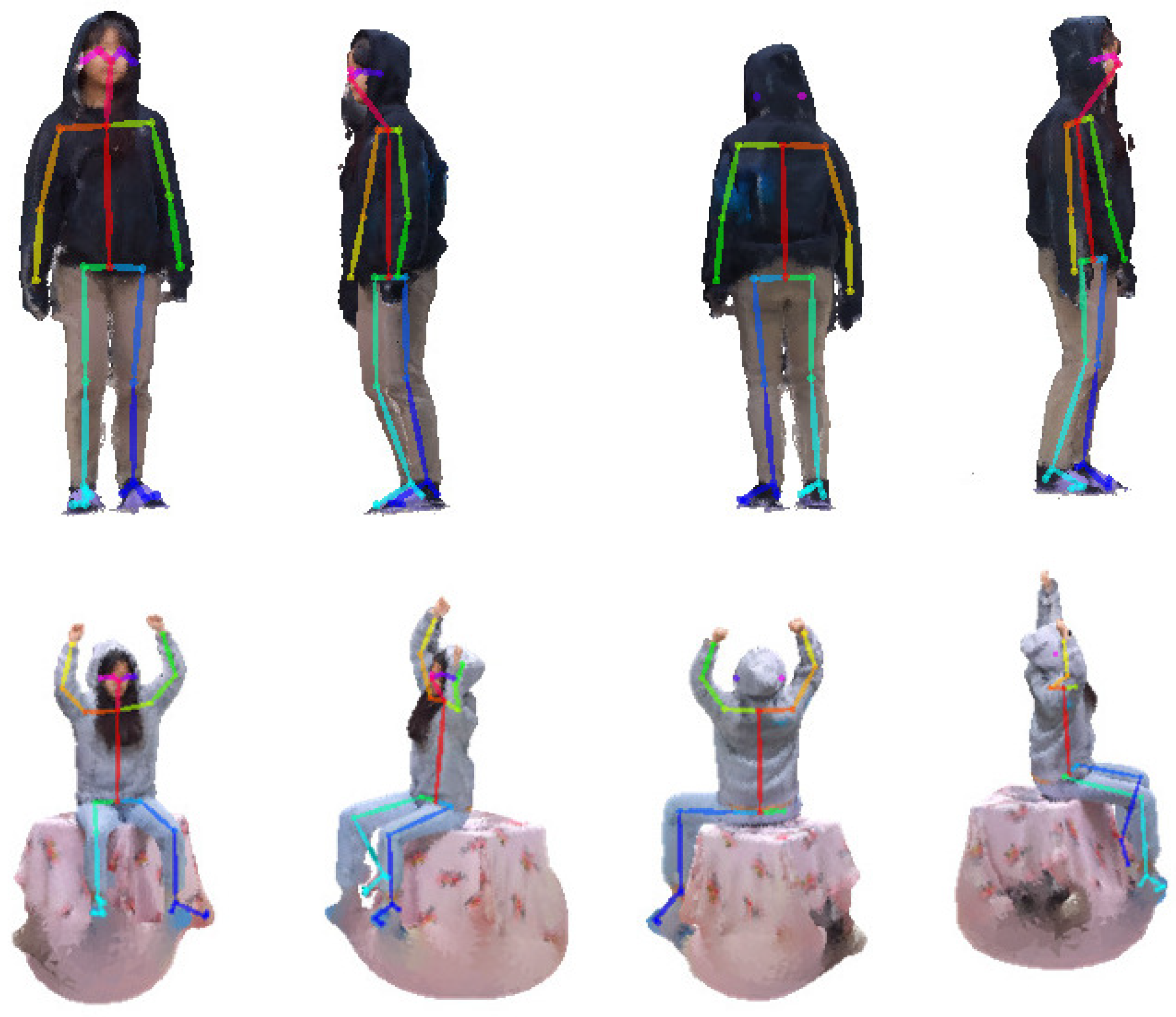

2.3. 3D Pose Estimation

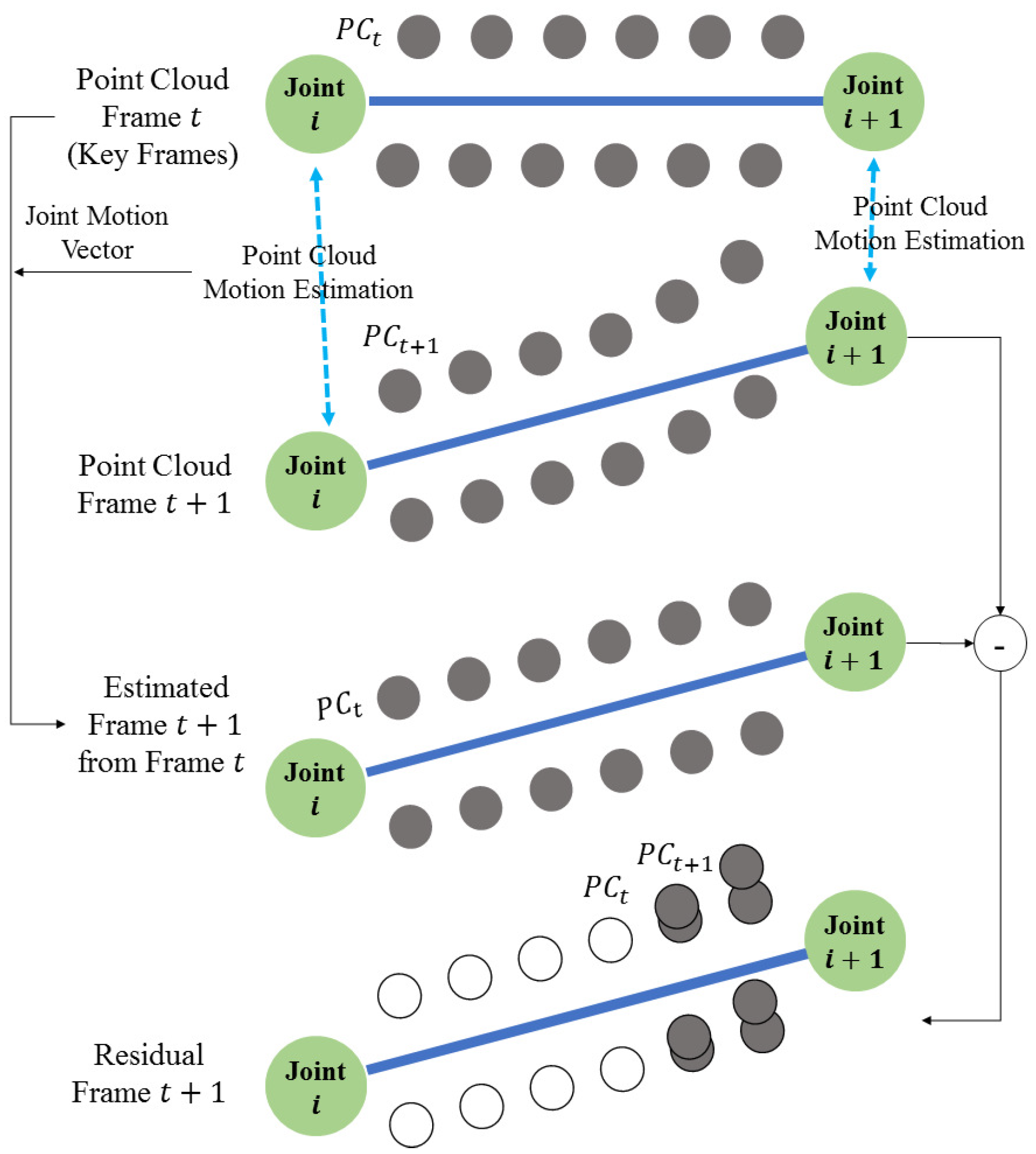

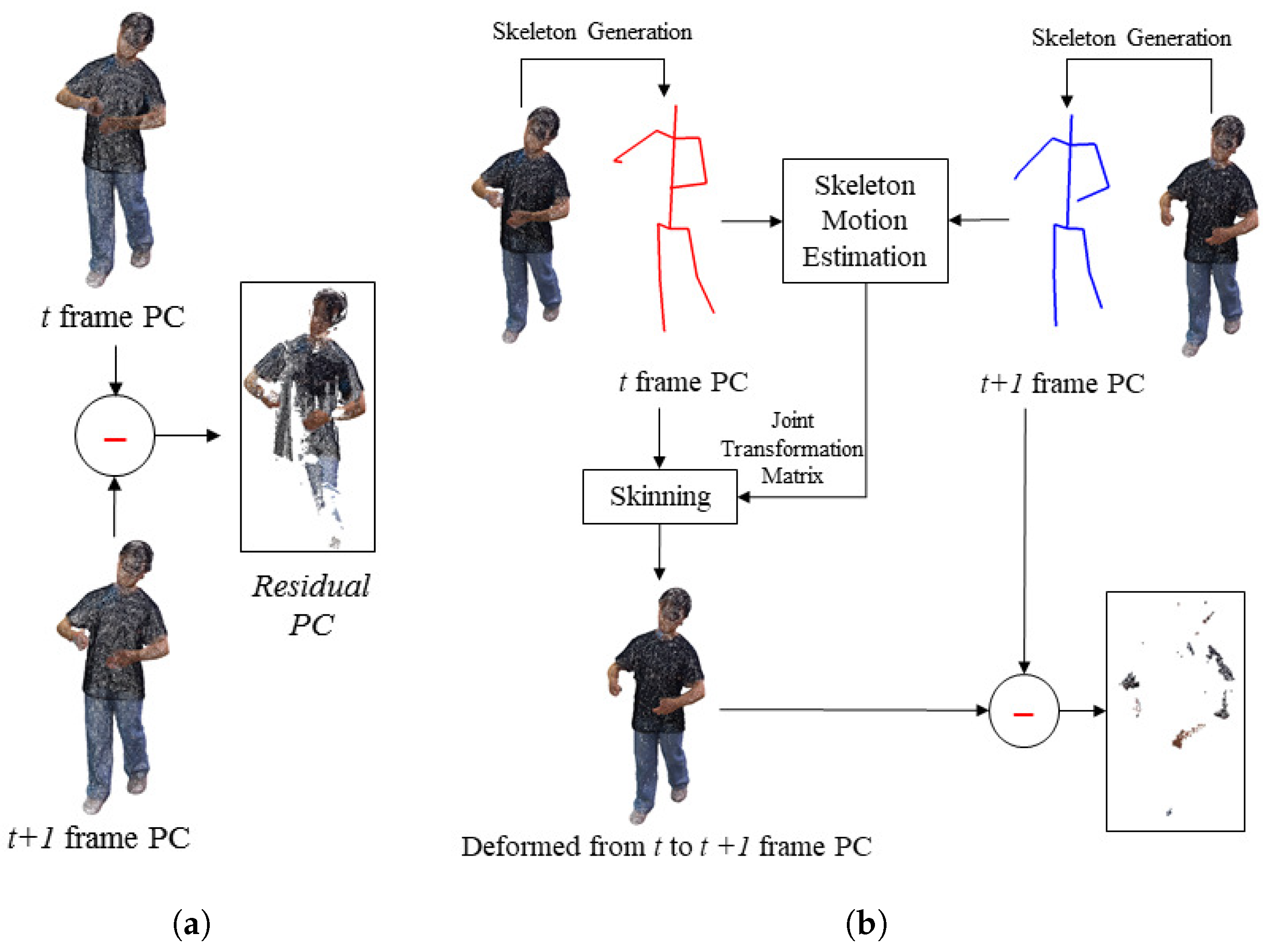

3. Temporal Prediction of Dynamic Point Cloud

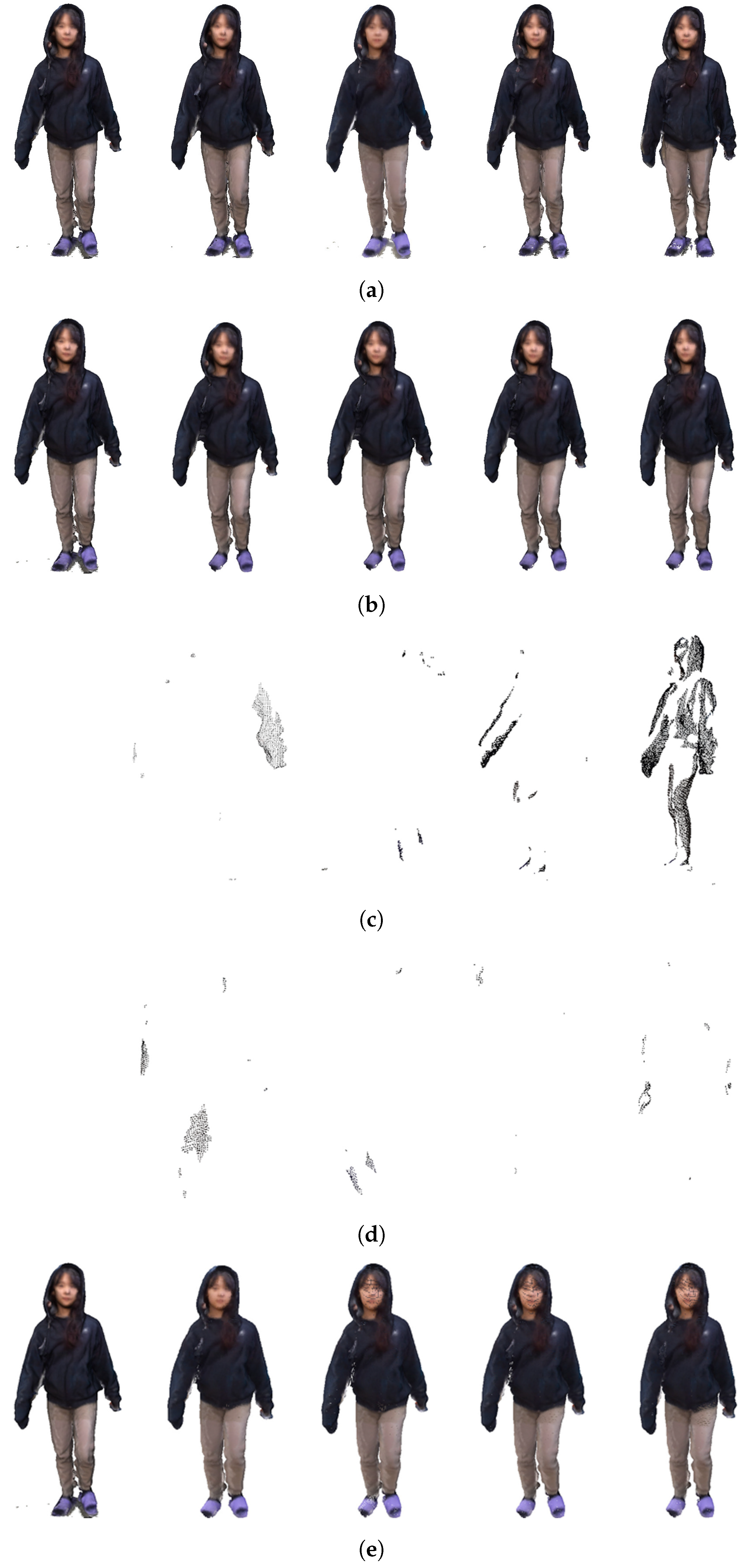

3.1. Prediction and Reconstruction

3.2. Group of PCF

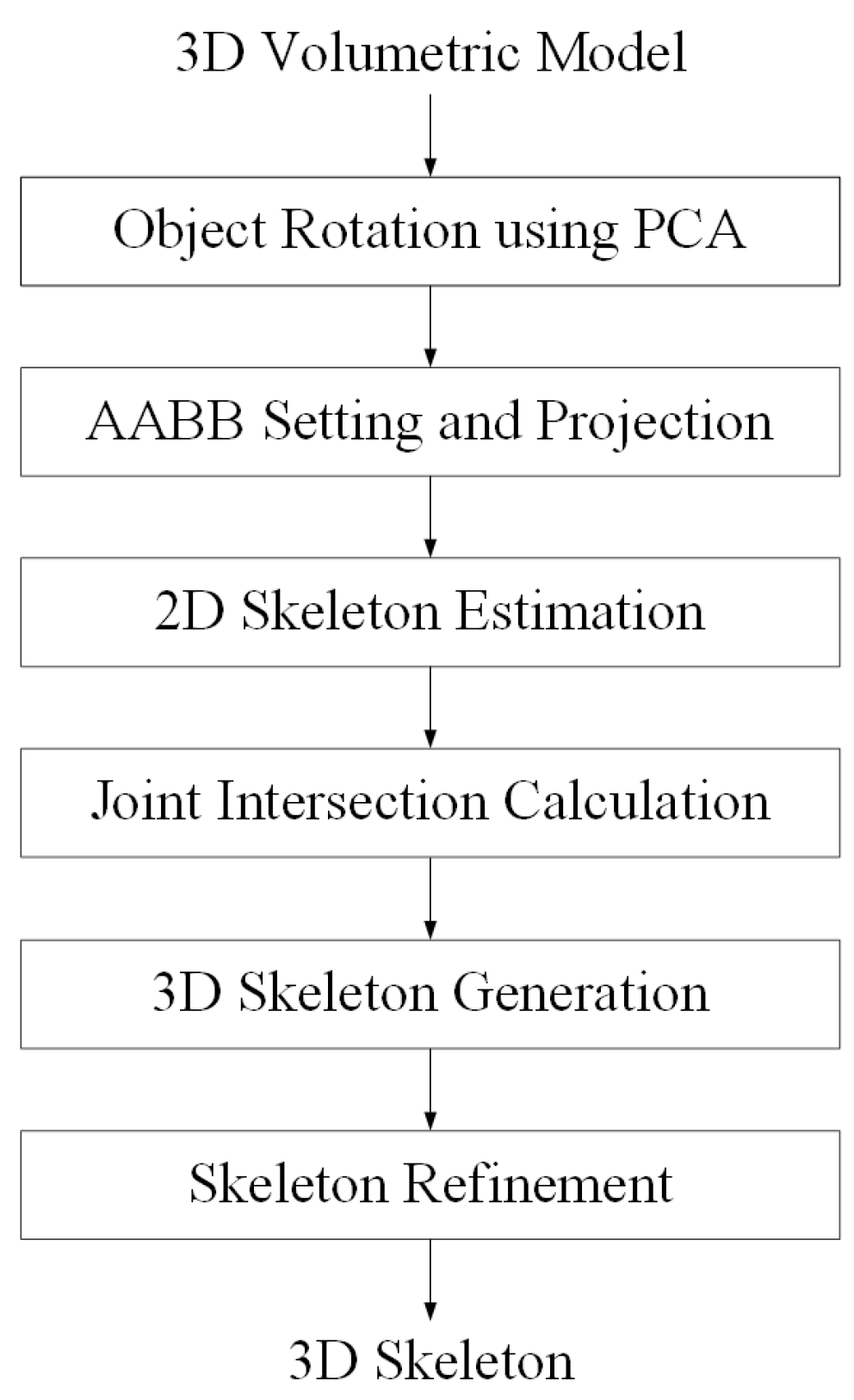

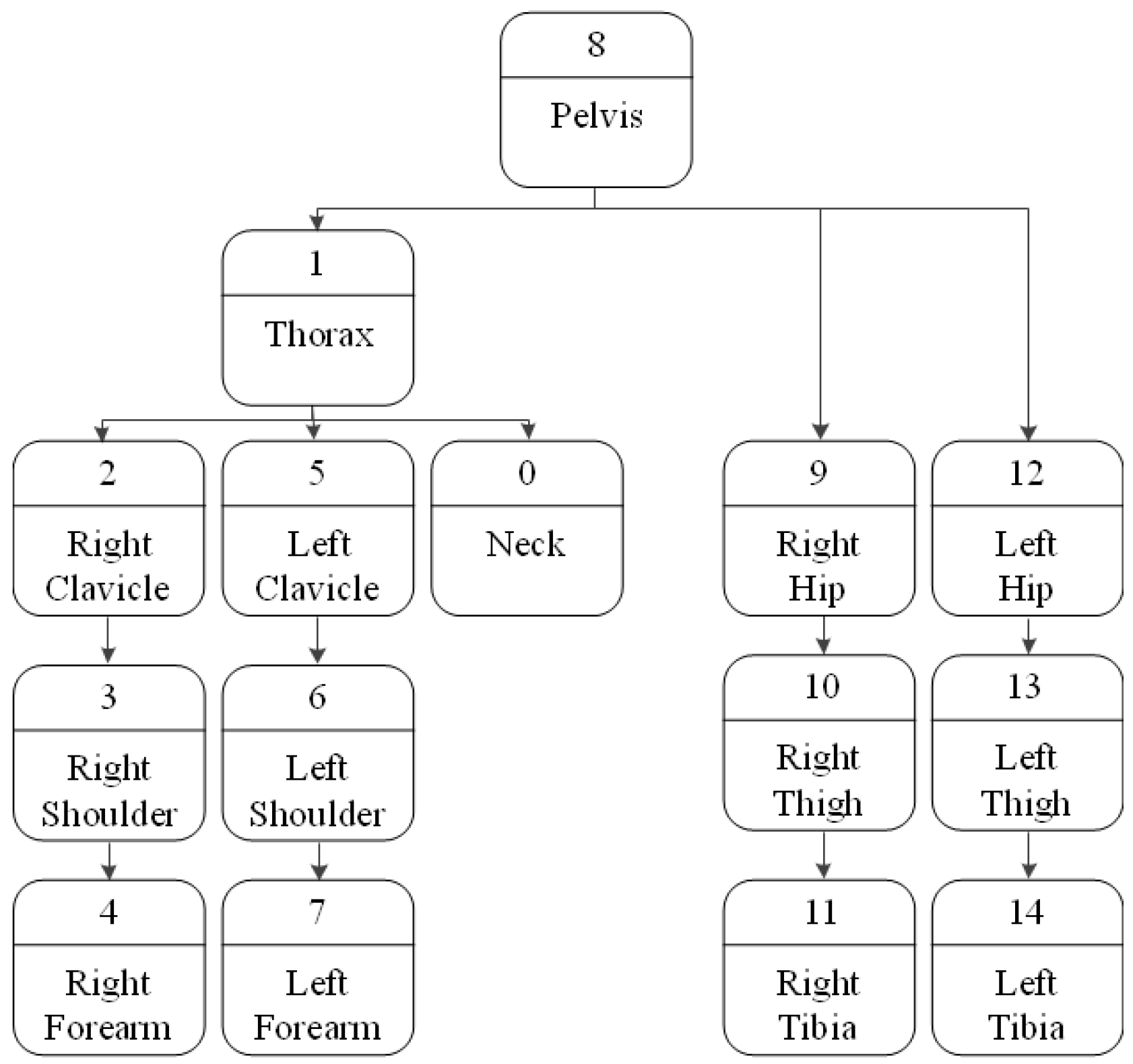

3.3. 3D Skeleton Extraction

3.4. Skeleton Motion Estimation

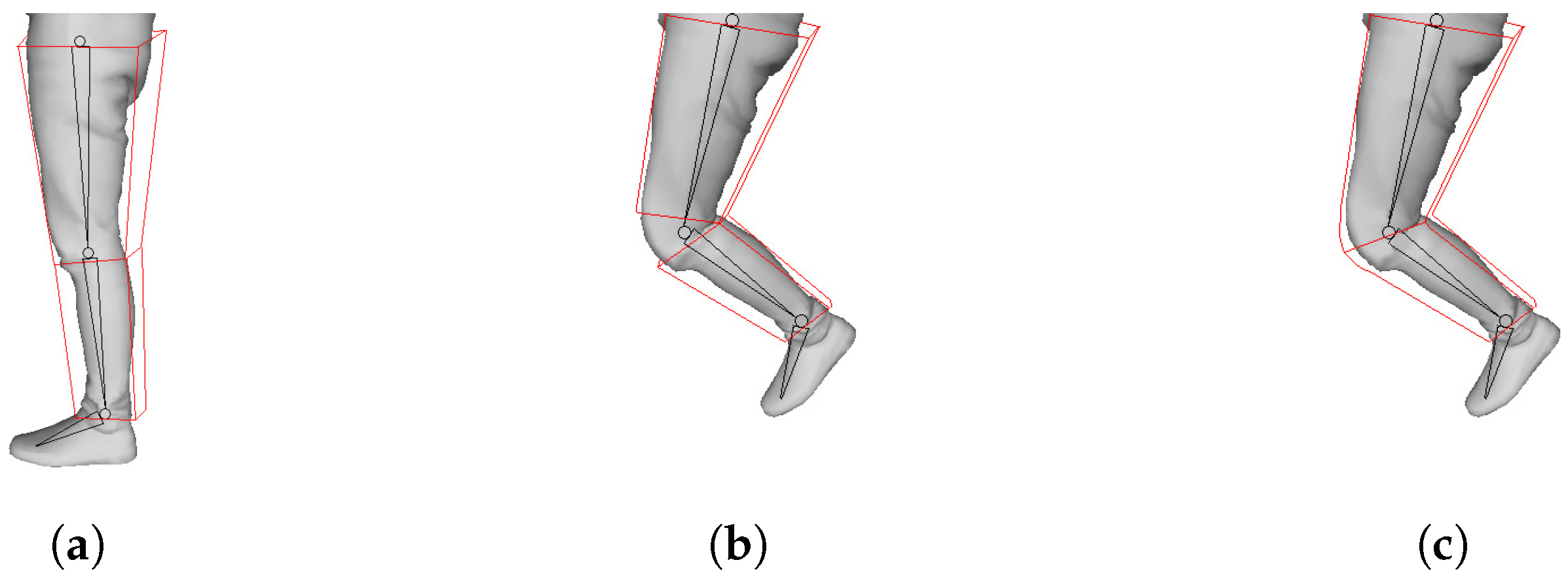

3.5. Deformation of 3D Point Cloud

3.6. Residual Point Cloud

4. Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Item | Metric | Key Frame | Non-Key Frame | Ratio |
|---|---|---|---|---|
| Original | Number of points | 348,597 | 341,334 | 100.00% |
| | Data size (KB) | 17,699 | 16,427 | 100.00% |
| Residual | Number of points | 348,597 | 26,473 | 7.76% |
| | Data size (KB) | 17,699 | 1,061 | 6.46% |
| Residual with Deformation | Number of points | 348,597 | 2,190 | 0.64% |
| | Data size (KB) | 6,923 | 35 | 0.01% |
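The Ratio entries for point counts can be read as each non-key-frame count divided by the original non-key-frame count (341,334 points). The short Python check below is a sketch that reuses only the numbers in the table. It reproduces the 7.76% and 0.64% entries. The names `ORIGINAL_NON_KEY_POINTS`, `residual_counts` and `ratio_percent` are illustrative, not taken from the paper.

```python
# Non-key-frame point counts copied from the table above.
ORIGINAL_NON_KEY_POINTS = 341_334

residual_counts = {
    "Residual": 26_473,
    "Residual with Deformation": 2_190,
}


def ratio_percent(count: int, reference: int) -> float:
    """Return `count` as a percentage of `reference`, rounded to two decimals."""
    return round(100.0 * count / reference, 2)


ratios = {
    name: ratio_percent(n, ORIGINAL_NON_KEY_POINTS)
    for name, n in residual_counts.items()
}

for name, pct in ratios.items():
    print(f"{name}: {pct:.2f}%")
```

Under this reading, applying the deformation before extracting the residual leaves roughly one twelfth as many residual points as the plain residual (2,190 versus 26,473).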

| Frame | t + 1 | t + 2 | t + 3 | t + 4 |
|---|---|---|---|---|
| Mean Distance (m) | 0.004571 | 0.006422 | 0.009824 | 0.014579 |
| Standard Deviation (m) | 0.002758 | 0.00506 | 0.007838 | 0.009799 |
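This table reports a mean distance and standard deviation, in meters, for frames t + 1 to t + 4. The paper's exact metric is not stated here. One common way to obtain such figures, assumed for this sketch, follows two steps:

1. For every point of the estimated cloud, measure the Euclidean distance to its nearest neighbor in the captured cloud.
2. Report the mean and the population standard deviation of those distances.

The helper names `nearest_neighbor_distances` and `distance_stats` are hypothetical. The search is brute force with NumPy broadcasting.

```python
import numpy as np


def nearest_neighbor_distances(predicted: np.ndarray, target: np.ndarray) -> np.ndarray:
    """For each point in `predicted` (N x 3), return the Euclidean distance to
    its nearest point in `target` (M x 3). Brute force: builds an N x M matrix."""
    diff = predicted[:, None, :] - target[None, :, :]  # shape (N, M, 3)
    dist = np.linalg.norm(diff, axis=2)                # shape (N, M)
    return dist.min(axis=1)                            # nearest target per point


def distance_stats(predicted: np.ndarray, target: np.ndarray) -> tuple[float, float]:
    """Mean and population standard deviation of the one-directional
    (predicted -> target) nearest-neighbor distances."""
    d = nearest_neighbor_distances(predicted, target)
    return float(d.mean()), float(d.std())


# Example: a 5 x 5 x 5 grid with 0.1 m spacing, and a "prediction" shifted 3 mm in x.
axis = np.arange(5) * 0.1
target = np.stack(np.meshgrid(axis, axis, axis, indexing="ij"), axis=-1).reshape(-1, 3)
predicted = target + np.array([0.003, 0.0, 0.0])

mean_d, std_d = distance_stats(predicted, target)
print(f"mean = {mean_d:.6f} m, std = {std_d:.6f} m")  # mean = 0.003000 m, std = 0.000000 m
```

The brute-force matrix is impractical for clouds of about 340,000 points. For frames of that size, a k-d tree such as `scipy.spatial.cKDTree` with its `query` method returns the same nearest-neighbor distances far more efficiently. The metric is also one-directional. A symmetric variant would average the distances computed in both directions.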

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, J.-K.; Jang, Y.-W.; Lee, S.; Hwang, E.-S.; Seo, Y.-H. Temporal Estimation of Non-Rigid Dynamic Human Point Cloud Sequence Using 3D Skeleton-Based Deformation for Compression. Sensors 2023, 23, 7163. https://doi.org/10.3390/s23167163
