1. Introduction

The colorimetric conversion of digital cameras plays an important role not only in the field of color display but also in many other fields such as color measurement, machine vision, and so on. Today, with the rapid development of wide-color-gamut display technology, a series of color display standards with wide color gamut have been put forward, such as Adobe-RGB, DCI-P3, and Rec.2020. Meanwhile, many kinds of multi-primary display systems with wide color gamut have been demonstrated [1,2,3,4,5,6,7,8,9]. Figure 1a shows the wide-color-gamut blocks displayed on a six-primary LED array display developed by our laboratory. It can be seen from Figure 1b that the color gamut of the six-primary LED array display is much wider than that of the sRGB standard. To meet the needs of wide-color-gamut display technology, it is necessary for us to develop a colorimetric characterization method for digital cameras in the case of wide color gamut.

Until now, different kinds of colorimetric characterization methods have been developed, including polynomial transforms [10,11,12,13,14,15,16], artificial neural networks (ANN) [17,18,19,20,21,22,23], and look-up tables [24]. In 2016, Gong et al. [12] demonstrated a color calibration method between different digital cameras with ColorChecker SG cards and the polynomial transform method and achieved an average color difference of 1.12 (CIEDE2000). In 2022, Xie et al. [18] proposed a colorimetric characterization method for color imaging systems with ColorChecker SG cards based on a multi-input PSO-BP neural network and achieved average color differences of 1.53 (CIEDE2000) and 2.06 (CIE1976L*a*b*). However, the methods mentioned above were mainly used for cameras with standard color space such as an sRGB system. In 2023, Li et al. [17] employed multi-layer BP artificial neural networks (ML-BP-ANN) to realize colorimetric characterization for wide-color-gamut cameras, but the color conversion differences of some high-chroma samples were undesirable.

In addition to conventional multi-layer BP artificial neural networks, the prevailing deep learning neural networks have also been used for the color conversion of color imagers. For example, in 2022, Yeh et al. [19] proposed using a lightweight deep neural network model for the image color conversion of underwater object detection. However, the aim of the model proposed by Yeh et al. was to transform color images to corresponding grayscale images to solve the problem of underwater color absorption; therefore, it had nothing to do with colorimetric characterization. For another example, in 2023, Wang et al. [21] proposed a five-stage convolutional neural network (CNN) to model the colorimetric characterization of a color image sensor and achieved an average color difference of 0.48 (CIE1976L*a*b*) for 1300 color samples from IT8.7/4 cards. It should be noted that the color difference accuracy achieved by Wang et al.’s CNN is roughly equal to that achieved by the ML-BP-ANN of Li et al. [17] for samples from ColorChecker SG cards. However, in the architectures of the CNN proposed by Wang et al., a BP neural network (BPNN) with a single hidden layer and 2048 neurons in the hidden layer was employed to perform color conversion from the RGB space of the imager to the L*a*b* space of the CIELAB system. This indicates that the CNN proposed by Wang et al. cannot yet replace the BP neural network. Moreover, another interesting neural network model named the radial basis function neural network (RBFNN) was demonstrated for the colorimetric characterization of digital cameras by Ma et al. [19] in 2020. The architecture of the RBFNN used by Ma et al. was made up of an input layer, a hidden layer, and an output layer, and the error back-propagation (BP) algorithm was also used for the training of the RBFNN. In summary, until now, different kinds of artificial neural networks used for color conversion have been put forward; however, neural networks employing the BP algorithm continue to prevail.

In this paper, to improve the colorimetric characterization accuracy of wide-color-gamut cameras, an optimization method for training samples is proposed and studied. In the method, the training and testing samples are grouped according to hue angle and chroma, and the Pearson correlation coefficient is used as the evaluation standard for the linear correlation between the RGB and XYZ values of each sample group. Two colorimetric characterization methods, polynomial formulas with different numbers of terms and the multi-layer BP artificial neural network (ML-BP-ANN), are then used to verify the effectiveness of the proposed method.

2. The Problems of Wide Color Gamut

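Practically, the RGB values of a digital camera can be calculated with Formula (1),

$$
R = k_r \int_{\lambda} r(\lambda)\,\rho(\lambda)\,S(\lambda)\,\mathrm{d}\lambda,\quad
G = k_g \int_{\lambda} g(\lambda)\,\rho(\lambda)\,S(\lambda)\,\mathrm{d}\lambda,\quad
B = k_b \int_{\lambda} b(\lambda)\,\rho(\lambda)\,S(\lambda)\,\mathrm{d}\lambda \tag{1}
$$

where $r(\lambda)$, $g(\lambda)$, and $b(\lambda)$ denote the spectral sensitivities of the RGB channels, respectively, $\rho(\lambda)$ denotes the spectral reflectance of the object, $S(\lambda)$ denotes the spectrum of the light source, and $k_r$, $k_g$, and $k_b$ are the normalized constants.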

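Meanwhile, the tristimulus values of the object can be calculated with Formula (2),

$$
X = k \int_{\lambda} \bar{x}(\lambda)\,\rho(\lambda)\,S(\lambda)\,\mathrm{d}\lambda,\quad
Y = k \int_{\lambda} \bar{y}(\lambda)\,\rho(\lambda)\,S(\lambda)\,\mathrm{d}\lambda,\quad
Z = k \int_{\lambda} \bar{z}(\lambda)\,\rho(\lambda)\,S(\lambda)\,\mathrm{d}\lambda \tag{2}
$$

where $\bar{x}(\lambda)$, $\bar{y}(\lambda)$, and $\bar{z}(\lambda)$ denote the color matching functions of the CIE1931XYZ system, $\rho(\lambda)$ and $S(\lambda)$ are the spectral reflectance of the object and the spectrum of the light source as in Formula (1), and $k$ is a normalized constant.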
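In practice, the integrals in Formulas (1) and (2) are evaluated as sums over sampled spectra. The following minimal sketch illustrates that discretization only. The Gaussian curves standing in for the camera sensitivities and the color matching functions, the equal-energy light source, the choice of normalizing each constant against a perfect white, and all function names are assumptions made for illustration; a real characterization would use measured sensitivities and tabulated CIE 1931 data.

```python
import math

# Assumed wavelength grid: 400-700 nm in 10 nm steps.
WAVELENGTHS = list(range(400, 701, 10))

def gaussian(center, width):
    """Placeholder spectral curve (NOT measured data)."""
    return [math.exp(-0.5 * ((w - center) / width) ** 2) for w in WAVELENGTHS]

def integrate(curve, reflectance, illuminant):
    """Riemann-sum approximation of the integral of curve * reflectance * illuminant."""
    step = WAVELENGTHS[1] - WAVELENGTHS[0]
    return step * sum(c * r * s for c, r, s in zip(curve, reflectance, illuminant))

def camera_rgb(sensitivities, reflectance, illuminant):
    """Discrete Formula (1); each channel constant is chosen so a perfect white gives 1."""
    white = [1.0] * len(WAVELENGTHS)
    return [integrate(c, reflectance, illuminant) / integrate(c, white, illuminant)
            for c in sensitivities]

def tristimulus_xyz(cmfs, reflectance, illuminant):
    """Discrete Formula (2); the single constant k is chosen so a perfect white has Y = 1."""
    white = [1.0] * len(WAVELENGTHS)
    k = 1.0 / integrate(cmfs[1], white, illuminant)
    return [k * integrate(c, reflectance, illuminant) for c in cmfs]

# Illustrative inputs (all hypothetical).
illuminant = [1.0] * len(WAVELENGTHS)                             # equal-energy source
camera = [gaussian(600, 40), gaussian(540, 40), gaussian(460, 40)]
cmfs = [gaussian(595, 45), gaussian(555, 45), gaussian(450, 30)]  # crude stand-ins
reddish = [0.1 if w < 580 else 0.8 for w in WAVELENGTHS]          # reddish reflectance

rgb = camera_rgb(camera, reddish, illuminant)
xyz = tristimulus_xyz(cmfs, reddish, illuminant)
print("RGB:", rgb)
print("XYZ:", xyz)
```

Because the camera sensitivities and the color matching functions are different curves, the two sets of sums are in general not related by a fixed 3 × 3 matrix, which is why a fitted conversion model is needed.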

It can be seen from Formulas (1) and (2) that there exists a nonlinear conversion between the (R, G, B) and the (X, Y, Z). In fact, different methods have been used to model the conversion from the RGB space to the XYZ space [10,11,12,13,14,15,16,17,18,19,20,21,22,23,24]. Among them, polynomial transforms and the BP artificial neural network (BP-ANN) are the most popular.

2.1. Polynomial Transforms

When the color sample sets ($R_i$, $G_i$, $B_i$) and ($X_i$, $Y_i$, $Z_i$) of digital cameras are given, where i = 1 to N, and N is the total number of samples, a polynomial transformation model can be established for converting the [R, G, B] space to the [X, Y, Z] space for the cameras.

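The simplest method is a linear model, as follows:

$$
\begin{bmatrix} X \\ Y \\ Z \end{bmatrix} = A \begin{bmatrix} R \\ G \\ B \end{bmatrix} \tag{3}
$$

where A is a matrix:

$$
A = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}
$$

Usually, by using the color sample sets ($R_i$, $G_i$, $B_i$) and ($X_i$, $Y_i$, $Z_i$) of the cameras, and the least squares method, the elements of matrix A can be estimated, and then the linear transformation model of Formula (3) can be established.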
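The least squares estimation of the linear model can be sketched as follows. This is a minimal illustration with NumPy, assuming a 3 × 3 matrix without a constant term; the function name `fit_linear_matrix`, the made-up matrix `A_true`, and the synthetic samples are hypothetical and stand in for measured camera data.

```python
import numpy as np

def fit_linear_matrix(rgb, xyz):
    """Least-squares estimate of the 3x3 matrix A in xyz ≈ A @ rgb.

    rgb, xyz: arrays of shape (N, 3), one color sample per row.
    """
    # Solve rgb @ A.T ≈ xyz for A.T in the least-squares sense.
    a_transposed, *_ = np.linalg.lstsq(rgb, xyz, rcond=None)
    return a_transposed.T

# Synthetic demonstration: samples generated from a made-up matrix.
rng = np.random.default_rng(0)
A_true = np.array([[0.41, 0.36, 0.18],
                   [0.21, 0.72, 0.07],
                   [0.02, 0.12, 0.95]])
rgb = rng.uniform(0.0, 1.0, size=(50, 3))
xyz = rgb @ A_true.T            # noise-free, exactly linear data

A_est = fit_linear_matrix(rgb, xyz)
print(np.round(A_est, 3))
```

With noise-free, exactly linear data the estimate recovers the generating matrix; with real camera data the residual of this fit indicates how far the conversion departs from linearity.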

However, for digital cameras, the conversion between the (R, G, B) space and the (X, Y, Z) space is nonlinear, so it is necessary to adopt the nonlinear models as follows.

In summary, polynomial transforms can be expressed with Formulas (8) and (9), where A is a constant matrix of three rows by n columns, and n is the number of terms in the polynomial formula.

The elements of matrix A can be determined by means of the least squares method, the Wiener estimation method, principal component analysis, and so on. In this paper, the least squares method was used to estimate the elements of matrix A.
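As an illustration of how a 3 × n matrix A is estimated by least squares, the sketch below expands each RGB sample into a vector of polynomial terms and fits A to synthetic data. The ten-term second-order expansion, the gamma-like synthetic camera response, and all function names are assumptions chosen for the example; they are not necessarily one of the term sets used in this paper.

```python
import numpy as np

def expand_quadratic(rgb):
    """Map (N, 3) RGB rows to (N, 10) rows of assumed second-order terms."""
    r, g, b = rgb[:, 0], rgb[:, 1], rgb[:, 2]
    return np.column_stack([r, g, b, r * g, r * b, g * b,
                            r ** 2, g ** 2, b ** 2, np.ones_like(r)])

def fit_polynomial(rgb, xyz, expand=expand_quadratic):
    """Least-squares estimate of the 3 x n matrix A in xyz ≈ A @ terms(rgb)."""
    terms = expand(rgb)
    a_transposed, *_ = np.linalg.lstsq(terms, xyz, rcond=None)
    return a_transposed.T

def apply_polynomial(A, rgb, expand=expand_quadratic):
    """Convert (N, 3) RGB rows to (N, 3) XYZ rows with a fitted matrix A."""
    return expand(rgb) @ A.T

def rms(residual):
    return float(np.sqrt(np.mean(residual ** 2)))

# Synthetic, deliberately nonlinear camera response (hypothetical).
rng = np.random.default_rng(1)
M = np.array([[0.41, 0.36, 0.18],
              [0.21, 0.72, 0.07],
              [0.02, 0.12, 0.95]])
rgb = rng.uniform(0.0, 1.0, size=(100, 3))
xyz = (rgb ** 2.2) @ M.T

A_poly = fit_polynomial(rgb, xyz)
err_quad = rms(apply_polynomial(A_poly, rgb) - xyz)

# Linear 3x3 fit on the same data, for comparison.
a_lin, *_ = np.linalg.lstsq(rgb, xyz, rcond=None)
err_lin = rms(rgb @ a_lin - xyz)
print("linear RMS error:   ", err_lin)
print("quadratic RMS error:", err_quad)
```

Because the quadratic term set contains the linear terms, its training residual cannot exceed that of the linear fit; whether the improvement carries over to unseen samples depends on how well the training samples cover the gamut.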

2.2. Artificial Neural Network ML-BP-ANN

The multi-layer BP artificial neural network (ML-BP-ANN) can also be used to perform the conversion from the [R, G, B] space to the [X, Y, Z] space in the case of wide color gamut [17]. The architecture of an ML-BP-ANN is shown in Figure 2, where W(0), W(1), and W(q) denote the link weights, θ denotes the thresholds of the neurons, and $a_i$, $b_j$, and $d_p$ denote the output values of the neurons in each layer, respectively. It should be noted that the number of hidden layers and the number of neurons in each hidden layer can be decided based on the training and testing experiments [17], and the values of the link weights and the thresholds can be decided through training. More importantly, the color sample sets ($R_i$, $G_i$, $B_i$) and ($X_i$, $Y_i$, $Z_i$) for the training should be uniformly distributed in the whole color space of the cameras.
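The training of such a network by error back-propagation can be sketched in a few lines of NumPy. This is a minimal illustration, not the configuration used in [17] or in this paper: the two hidden layers of eight neurons, the tanh activation, the linear output layer, the learning rate, and the synthetic RGB-to-XYZ data are all assumptions.

```python
import numpy as np

def init_network(layer_sizes, rng):
    """Create link weights W and thresholds theta for each layer."""
    return [(rng.normal(0.0, 0.5, size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]

def forward(params, x):
    """Return the outputs of every layer; hidden layers use tanh, the output is linear."""
    activations = [x]
    for k, (w, theta) in enumerate(params):
        z = activations[-1] @ w - theta          # weighted sum minus threshold
        is_output = k == len(params) - 1
        activations.append(z if is_output else np.tanh(z))
    return activations

def train(params, x, y, lr=0.1, epochs=500):
    """Batch gradient descent on the mean squared error via back-propagation."""
    losses = []
    for _ in range(epochs):
        acts = forward(params, x)
        error = acts[-1] - y
        losses.append(float(np.mean(error ** 2)))
        delta = 2.0 * error / error.size         # gradient at the linear output
        for k in reversed(range(len(params))):
            w, theta = params[k]
            grad_w = acts[k].T @ delta
            grad_theta = -delta.sum(axis=0)      # because z = a @ w - theta
            if k > 0:
                # Propagate the error through the tanh of the previous layer.
                delta = (delta @ w.T) * (1.0 - acts[k] ** 2)
            params[k] = (w - lr * grad_w, theta - lr * grad_theta)
    return losses

# Synthetic, hypothetical training set: uniformly distributed RGB samples.
rng = np.random.default_rng(2)
M = np.array([[0.41, 0.36, 0.18],
              [0.21, 0.72, 0.07],
              [0.02, 0.12, 0.95]])
rgb = rng.uniform(0.0, 1.0, size=(200, 3))
xyz = (rgb ** 2.2) @ M.T

params = init_network([3, 8, 8, 3], rng)
losses = train(params, rgb, xyz)
print("initial loss:", losses[0])
print("final loss:  ", losses[-1])
```

In practice, the number of hidden layers, the number of neurons, and the stopping criterion would be chosen from training and testing experiments, as noted above.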

In order to obtain the desired conversion accuracy for all color samples, the training samples should be uniformly distributed in the whole color space. For instance, when the chromaticity coordinates of the color samples are distributed within a color gamut area such as the sRGB gamut triangle, the desired conversion accuracy can be easily obtained using polynomial formulas with cubic, quartic, or quintic terms [11,12,13,16].

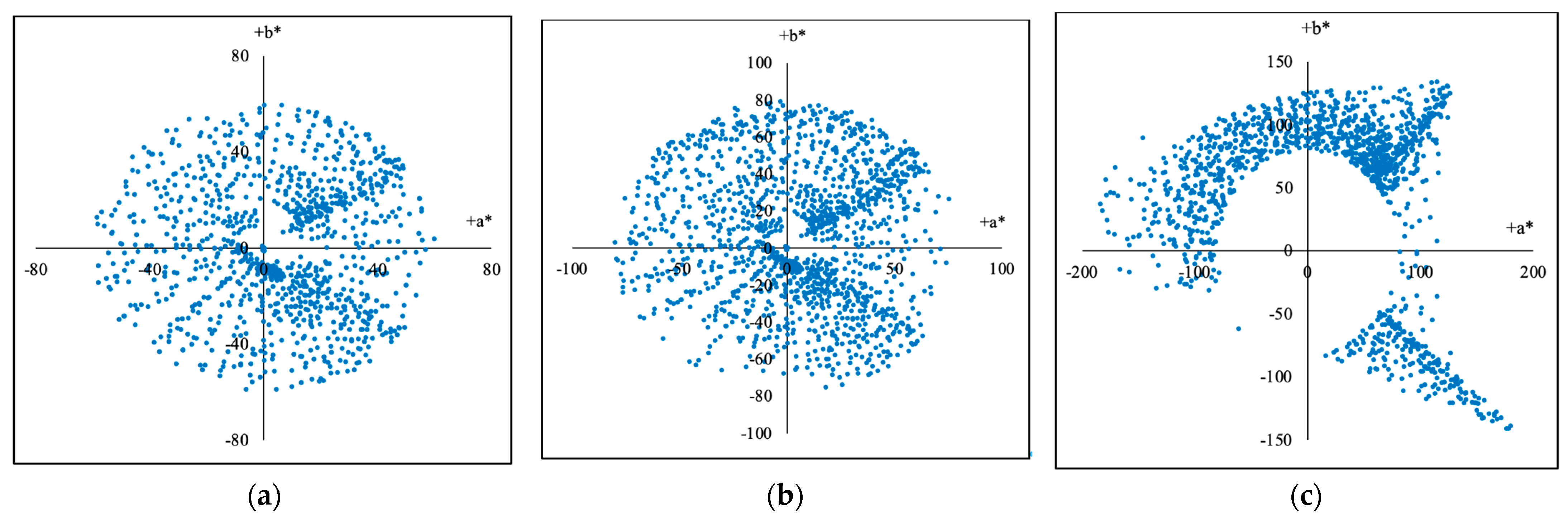

However, in the case of wide color gamut, the chromaticity coordinates of the samples are distributed in the whole color space such as in a CIE1931XYZ system, and the chromas of the samples are usually larger than those of conventional samples such as the IT8 chart, the Munsell or NCS system, the Professional Colour Communicator (PCC), X-rite ColorChecker SG, and so on. Therefore, in the case of wide color gamut, it is difficult to establish color conversions with the desired conversion accuracy.

To solve the problem above, we propose an optimization method to facilitate color conversions for wide-color-gamut cameras. The method makes use of the Pearson correlation coefficient to evaluate the linear correlation between the RGB values and the XYZ values in a training group, so that a training group with optimal linear correlation can be obtained and color conversion models with the desired approximation accuracy can be established.

3. Evaluating the Samples Using Linear Correlation

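Theoretically, the Pearson correlation coefficient can be used to describe the linear correlation between two random variables. Similarly, we can use the Pearson correlation coefficient to evaluate the linear correlation between the RGB space of a digital camera and the XYZ space of a CIE1931XYZ system. Therefore, in a sample group, the ($R_i$, $G_i$, $B_i$) and the ($X_i$, $Y_i$, $Z_i$) are regarded as two random variables, the vectors $\mathbf{p}_i$ and $\mathbf{q}_i$ are used to describe the coordinates ($R_i$, $G_i$, $B_i$) and ($X_i$, $Y_i$, $Z_i$), respectively, and $|\mathbf{p}_i|$ and $|\mathbf{q}_i|$ are used to express the moduli of $\mathbf{p}_i$ and $\mathbf{q}_i$, respectively, as shown in Formula (10).

$$
|\mathbf{p}_i| = \sqrt{R_i^2 + G_i^2 + B_i^2},\qquad
|\mathbf{q}_i| = \sqrt{X_i^2 + Y_i^2 + Z_i^2} \tag{10}
$$

Hence, the Pearson correlation coefficient (PCC) between ($R_i$, $G_i$, $B_i$) and ($X_i$, $Y_i$, $Z_i$) can be calculated using Formula (11),

$$
\mathrm{PCC} = \frac{\sum_{i=1}^{n}\left(|\mathbf{p}_i| - \overline{|\mathbf{p}|}\right)\left(|\mathbf{q}_i| - \overline{|\mathbf{q}|}\right)}{\sqrt{\sum_{i=1}^{n}\left(|\mathbf{p}_i| - \overline{|\mathbf{p}|}\right)^2}\,\sqrt{\sum_{i=1}^{n}\left(|\mathbf{q}_i| - \overline{|\mathbf{q}|}\right)^2}} \tag{11}
$$

where $\overline{|\mathbf{p}|}$ and $\overline{|\mathbf{q}|}$ are the mean values of the moduli over the sample group, and n is the number of samples in the sample group.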
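The evaluation of a sample group can be sketched as follows. The sketch assumes that Formula (11) is the standard Pearson coefficient applied to the moduli of the RGB and XYZ vectors of the group; the function names and the small sample groups are hypothetical.

```python
import math

def modulus(vector):
    """Euclidean length of a 3-component color vector."""
    return math.sqrt(sum(c * c for c in vector))

def pearson(xs, ys):
    """Standard Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def group_pcc(rgb_samples, xyz_samples):
    """PCC between the RGB and XYZ vector moduli of one sample group."""
    return pearson([modulus(p) for p in rgb_samples],
                   [modulus(q) for q in xyz_samples])

# Hypothetical sample group.
rgb_group = [(0.1, 0.1, 0.1), (0.3, 0.2, 0.4), (0.5, 0.5, 0.5),
             (0.7, 0.8, 0.6), (0.9, 0.9, 0.9)]

# A group whose XYZ values are a scaled copy of RGB is perfectly linear.
xyz_linear = [tuple(2.0 * c for c in p) for p in rgb_group]
# A group with a strongly nonlinear response correlates less well.
xyz_nonlinear = [tuple(c ** 3 for c in p) for p in rgb_group]

pcc_linear = group_pcc(rgb_group, xyz_linear)
pcc_nonlinear = group_pcc(rgb_group, xyz_nonlinear)
print("PCC, linear group:   ", pcc_linear)
print("PCC, nonlinear group:", pcc_nonlinear)
```

A group whose coefficient is close to 1 is a candidate for a low-order conversion model, while a lower coefficient signals that the group spans a region where the RGB-to-XYZ relationship is less linear.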

Besides the Pearson correlation coefficient mentioned above, there are many other statistical methods to analyze the relationship between ($R_i$, $G_i$, $B_i$) and ($X_i$, $Y_i$, $Z_i$). For example, the standard deviations $\sigma_p$ and $\sigma_q$ as shown in Formula (12) can be used to estimate the similarity between the RGB vectors $\mathbf{p}_i$ and the XYZ vectors $\mathbf{q}_i$. However, the values of $\sigma_p$ and $\sigma_q$ here vary with the values of ($R_i$, $G_i$, $B_i$) and ($X_i$, $Y_i$, $Z_i$), so this is not a suitable method to solve our problem.
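The dependence of the standard deviations on the magnitude of the data can be illustrated numerically. In the sketch below, which assumes that the standard deviations are taken over the vector moduli of a group, the same hypothetical XYZ samples are expressed once on a 0 to 1 scale and once on a 0 to 100 scale; the sample values and function names are made up for the example.

```python
import math
import statistics

def modulus(vector):
    """Euclidean length of a 3-component color vector."""
    return math.sqrt(sum(c * c for c in vector))

def pearson(xs, ys):
    """Standard Pearson correlation coefficient of two equal-length sequences."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def summarize(rgb_samples, xyz_samples):
    """Return (std of RGB moduli, std of XYZ moduli, PCC of the moduli)."""
    p = [modulus(v) for v in rgb_samples]
    q = [modulus(v) for v in xyz_samples]
    return statistics.pstdev(p), statistics.pstdev(q), pearson(p, q)

# Hypothetical sample group.
rgb_group = [(0.10, 0.20, 0.30), (0.40, 0.35, 0.20),
             (0.70, 0.60, 0.50), (0.90, 0.80, 0.85)]
xyz_group = [(0.12, 0.18, 0.28), (0.33, 0.36, 0.22),
             (0.58, 0.61, 0.52), (0.80, 0.83, 0.90)]

# The same colors with XYZ expressed on a 0-100 scale instead of 0-1.
xyz_scaled = [tuple(100.0 * c for c in v) for v in xyz_group]

sd_p, sd_q, pcc = summarize(rgb_group, xyz_group)
sd_p2, sd_q2, pcc2 = summarize(rgb_group, xyz_scaled)
print("std of XYZ moduli:", sd_q, "->", sd_q2)
print("PCC:              ", pcc, "->", pcc2)
```

The standard deviation of the XYZ moduli changes with the scale of the data, whereas the Pearson coefficient is unchanged, which makes the latter easier to compare across sample groups.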

Besides Formula (12), many other methods can be used to calculate the similarity between ($R_i$, $G_i$, $B_i$) and ($X_i$, $Y_i$, $Z_i$). For example, both wavelet analysis and the gray-level co-occurrence matrix can be used to evaluate the similarity between the chromaticity coordinate patterns of ($R_i$, $G_i$, $B_i$) and ($X_i$, $Y_i$, $Z_i$). However, such methods are much more complicated than the Pearson correlation coefficient method.