Color Conversion of Wide-Color-Gamut Cameras Using Optimal Training Groups

Abstract

1. Introduction

2. The Problems of Wide Color Gamut

2.1. Polynomial Transforms

2.2. Artificial Neural Network ML-BP-ANN

3. Evaluating the Samples Using Linear Correlation

4. Experiment

4.1. The Spectral Sensitivity of the Camera

4.2. Preparing the Sample Groups

4.2.1. The Basic Samples

4.2.2. Extending the Samples

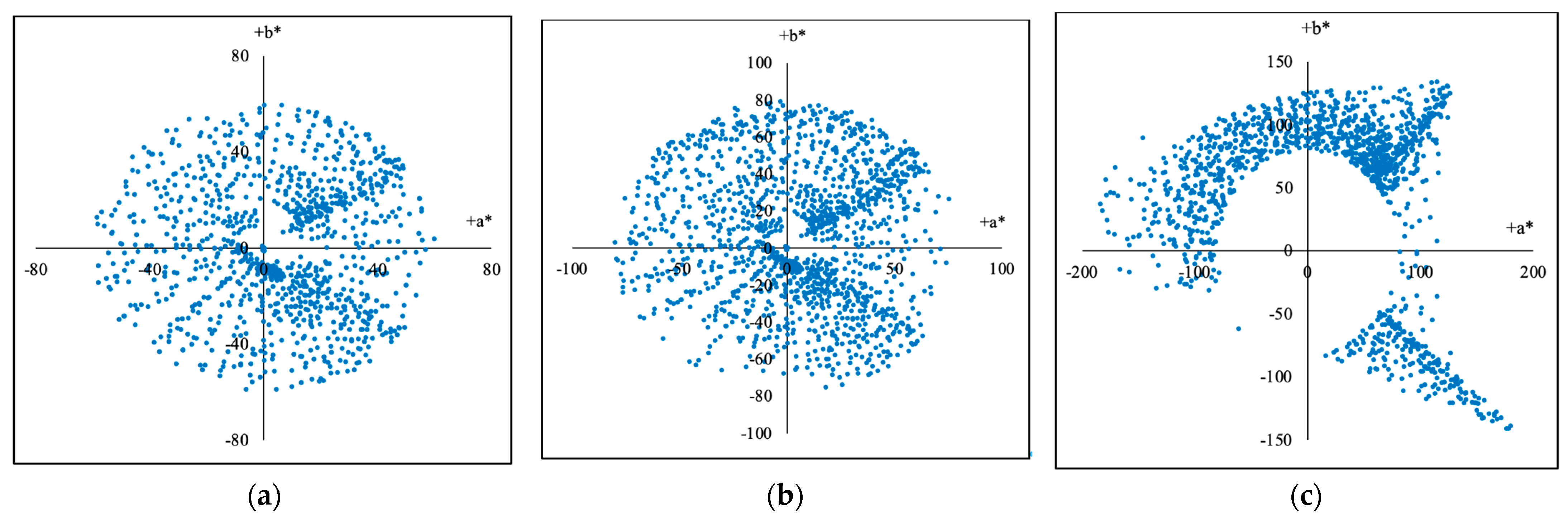

4.2.3. Dividing the Samples

4.3. The Correlation Coefficients

4.4. Training and Testing

4.5. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Division | Q1train | Q2train | Q3train | Q4train | Q2813train | Q96train |
|---|---|---|---|---|---|---|
| Number of samples | 1089 | 801 | 279 | 644 | 2813 | 96 |
| Range of hue angle h_ab (°) | 0 to 90 | 90 to 180 | 180 to 270 | 270 to 360 | 0 to 360 | 0 to 360 |
| PCC | 0.942 | 0.964 | 0.938 | 0.966 | 0.924 | 0.994 |
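
The PCC row reports a linear correlation coefficient for each sample group. As a minimal sketch, assuming PCC denotes the Pearson correlation coefficient, it can be computed as below. This excerpt does not state which pair of quantities is correlated, so `x` and `y` are placeholder sequences and `pearson_correlation` is a hypothetical helper name, not the authors' code.

```python
import math


def pearson_correlation(x, y):
    """Pearson correlation coefficient of two equal-length numeric sequences.

    x and y are placeholders: the quantities actually correlated in the
    paper are not given in this excerpt.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    dx = [v - mean_x for v in x]
    dy = [v - mean_y for v in y]
    # Sum of cross-deviations and sums of squared deviations.
    cov = sum(a * b for a, b in zip(dx, dy))
    var_x = sum(a * a for a in dx)
    var_y = sum(b * b for b in dy)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)
```

A value near 1 indicates that the two sequences are close to a linear relation with positive slope, which is the sense in which a group with a higher PCC is "more linear".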

| Division | Q1test | Q2test | Q3test | Q4test | Q2812test |
|---|---|---|---|---|---|
| Number of samples | 1099 | 802 | 275 | 636 | 2812 |
| Range of hue angle h_ab (°) | 0 to 90 | 90 to 180 | 180 to 270 | 270 to 360 | 0 to 360 |
| PCC | 0.940 | 0.966 | 0.937 | 0.963 | 0.922 |

| Division | C1train | C2train | C3train |
|---|---|---|---|
| Number of samples | 1037 | 1488 | 1325 |
| Range of chroma C*ab | 0 to 60 | 0 to 70 | above 70 |
| PCC | 0.990 | 0.983 | 0.844 |

| Division | C1test | C2test | C3test |
|---|---|---|---|
| Number of samples | 1027 | 1491 | 1321 |
| Range of chroma C*ab | 0 to 60 | 0 to 70 | above 70 |
| PCC | 0.989 | 0.981 | 0.842 |
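
The divisions in these tables are defined by hue angle h_ab and chroma C*ab. The sketch below shows the standard CIELAB definitions of both quantities and a 90° hue binning matching the Q1 to Q4 ranges. The function names are hypothetical, and the treatment of boundary values (each bin includes its lower edge) is an assumption, since the tables do not say which group a sample at exactly 90°, 180°, or 270° belongs to.

```python
import math


def hue_and_chroma(a_star, b_star):
    """Return (h_ab in degrees within [0, 360), C*ab) from CIELAB a*, b*."""
    chroma = math.hypot(a_star, b_star)  # C*ab = sqrt(a*^2 + b*^2)
    hue = math.degrees(math.atan2(b_star, a_star)) % 360.0
    return hue, chroma


def hue_quadrant(hue_deg):
    """Map a hue angle to 1..4 for [0, 90), [90, 180), [180, 270), [270, 360).

    Assumption: lower edges are inclusive; the source tables do not specify.
    """
    return int(hue_deg % 360.0 // 90) + 1


# Example: a sample with a* = -20, b* = 35 lies in the second hue quadrant.
h, c = hue_and_chroma(-20.0, 35.0)
group = hue_quadrant(h)
```

Chroma binning is left out on purpose: the listed C1 and C2 ranges overlap, so the rule that assigns a sample to a chroma group cannot be recovered from the tables alone.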

CIE 1976 L*a*b* color difference ΔE*ab for the polynomial formulas and BP-ANN, hue-angle training groups:

| Polynomial formula or BP-ANN | Q1train EV. | Q1train Max. | Q2train EV. | Q2train Max. | Q3train EV. | Q3train Max. | Q4train EV. | Q4train Max. | Q2813train EV. | Q2813train Max. | Q96train EV. | Q96train Max. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Quadratic | 4.29 | 28.25 | 6.56 | 43.56 | 4.63 | 31.83 | 3.45 | 19.57 | 10.90 | 85.82 | 1.40 | 5.79 |
| Cubic | 3.52 | 27.93 | 3.09 | 29.79 | 3.92 | 28.56 | 2.86 | 17.87 | 6.50 | 48.76 | 1.08 | 4.76 |
| Quartic | 3.05 | 33.30 | 2.98 | 27.31 | 3.82 | 27.19 | 2.59 | 17.47 | 5.53 | 46.59 | 1.01 | 4.19 |
| Quintic | 2.74 | 29.50 | 2.79 | 27.27 | 3.27 | 25.39 | 1.98 | 21.82 | 4.53 | 79.97 | 0.78 | 3.40 |
| ANN 20 × 4 | 3.04 | 28.76 | 2.87 | 27.35 | 3.25 | 26.12 | 2.01 | 21.16 | 4.66 | 48.77 | 0.82 | 3.52 |
| ANN 20 × 8 | 2.98 | 27.05 | 2.70 | 26.16 | 3.11 | 24.88 | 1.86 | 20.58 | 4.32 | 45.32 | 0.65 | 3.12 |

CIE 1976 L*a*b* color difference ΔE*ab for the polynomial formulas and BP-ANN, hue-angle testing groups:

| Polynomial formula or BP-ANN | Q1test EV. | Q1test Max. | Q2test EV. | Q2test Max. | Q3test EV. | Q3test Max. | Q4test EV. | Q4test Max. | Q2812test EV. | Q2812test Max. |
|---|---|---|---|---|---|---|---|---|---|---|
| Quadratic | 4.31 | 27.56 | 6.56 | 63.25 | 4.89 | 30.83 | 3.44 | 21.03 | 10.99 | 88.26 |
| Cubic | 3.53 | 28.01 | 3.22 | 43.81 | 4.13 | 26.26 | 2.90 | 17.32 | 6.52 | 55.85 |
| Quartic | 3.09 | 36.48 | 3.08 | 43.79 | 4.10 | 26.49 | 2.62 | 14.38 | 5.52 | 48.17 |
| Quintic | 2.80 | 34.26 | 2.92 | 43.27 | 3.74 | 23.59 | 2.10 | 29.68 | 4.57 | 147.08 |
| ANN 20 × 4 | 2.88 | 34.69 | 3.05 | 43.83 | 3.80 | 23.92 | 2.11 | 29.97 | 4.62 | 47.71 |
| ANN 20 × 8 | 2.72 | 32.91 | 2.83 | 41.65 | 3.62 | 22.19 | 2.02 | 28.68 | 4.15 | 46.61 |

CIE 1976 L*a*b* color difference ΔE*ab for the polynomial formulas and BP-ANN, chroma training groups:

| Polynomial formula or BP-ANN | C1train EV. | C1train Max. | C2train EV. | C2train Max. | C3train EV. | C3train Max. |
|---|---|---|---|---|---|---|
| Quadratic | 3.23 | 29.30 | 5.75 | 54.51 | 16.43 | 52.78 |
| Cubic | 2.25 | 23.06 | 3.13 | 34.75 | 8.67 | 45.57 |
| Quartic | 2.10 | 21.88 | 2.92 | 29.19 | 7.46 | 44.29 |
| Quintic | 1.92 | 19.37 | 2.68 | 24.80 | 5.92 | 43.27 |
| ANN 20 × 4 | 1.98 | 19.96 | 2.79 | 25.12 | 5.96 | 43.33 |
| ANN 20 × 8 | 1.85 | 17.98 | 2.17 | 23.63 | 5.86 | 42.18 |

CIE 1976 L*a*b* color difference ΔE*ab for the polynomial formulas and BP-ANN, chroma testing groups:

| Polynomial formula or BP-ANN | C1test EV. | C1test Max. | C2test EV. | C2test Max. | C3test EV. | C3test Max. |
|---|---|---|---|---|---|---|
| Quadratic | 3.21 | 33.12 | 5.83 | 53.37 | 16.69 | 57.22 |
| Cubic | 2.20 | 26.08 | 3.11 | 32.24 | 8.81 | 48.29 |
| Quartic | 2.09 | 43.12 | 2.87 | 27.49 | 7.53 | 46.28 |
| Quintic | 1.92 | 19.49 | 2.77 | 46.03 | 5.99 | 47.75 |
| ANN 20 × 4 | 1.94 | 19.06 | 2.79 | 25.77 | 6.04 | 46.73 |
| ANN 20 × 8 | 1.82 | 17.95 | 2.05 | 23.38 | 5.67 | 45.96 |
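
The error figures in these tables are CIE 1976 L*a*b* color differences, which are Euclidean distances between a predicted and a reference (L*, a*, b*) triple. The following is a minimal sketch of how a pair of per-group summary values could be obtained, assuming "EV." denotes the mean over the group (the excerpt does not define the abbreviation). The function names and the sample values in the example are illustrative, not taken from the paper.

```python
import math


def delta_e_ab(lab1, lab2):
    """CIE 1976 color difference: Euclidean distance in (L*, a*, b*)."""
    return math.dist(lab1, lab2)


def summarize_errors(predicted, reference):
    """Return (mean, max) of delta E*ab over paired Lab triples.

    Assumption: the tables' "EV." column is the mean; this is not
    confirmed by the excerpt.
    """
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("need two non-empty lists of equal length")
    diffs = [delta_e_ab(p, r) for p, r in zip(predicted, reference)]
    return sum(diffs) / len(diffs), max(diffs)


# Illustrative values only: two predicted colors against their references.
mean_err, max_err = summarize_errors(
    [(50.0, 0.0, 0.0), (60.0, 0.0, 0.0)],
    [(50.0, 3.0, 4.0), (60.0, 0.0, 0.0)],
)
```

Reporting the maximum alongside the mean matters here because a fit can have a low average error while still producing a few very large outliers, as the Quintic entry for Q2812test shows.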

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, Y.; Liao, N.; Li, Y.; Li, H.; Wu, W. Color Conversion of Wide-Color-Gamut Cameras Using Optimal Training Groups. Sensors 2023, 23, 7186. https://doi.org/10.3390/s23167186
