Automatic Tree Height Measurement Based on Three-Dimensional Reconstruction Using Smartphone

Abstract

1. Introduction

2. Materials and Methods

2.1. Tree Materials

2.2. Measurement of True Tree Height

2.3. Methods

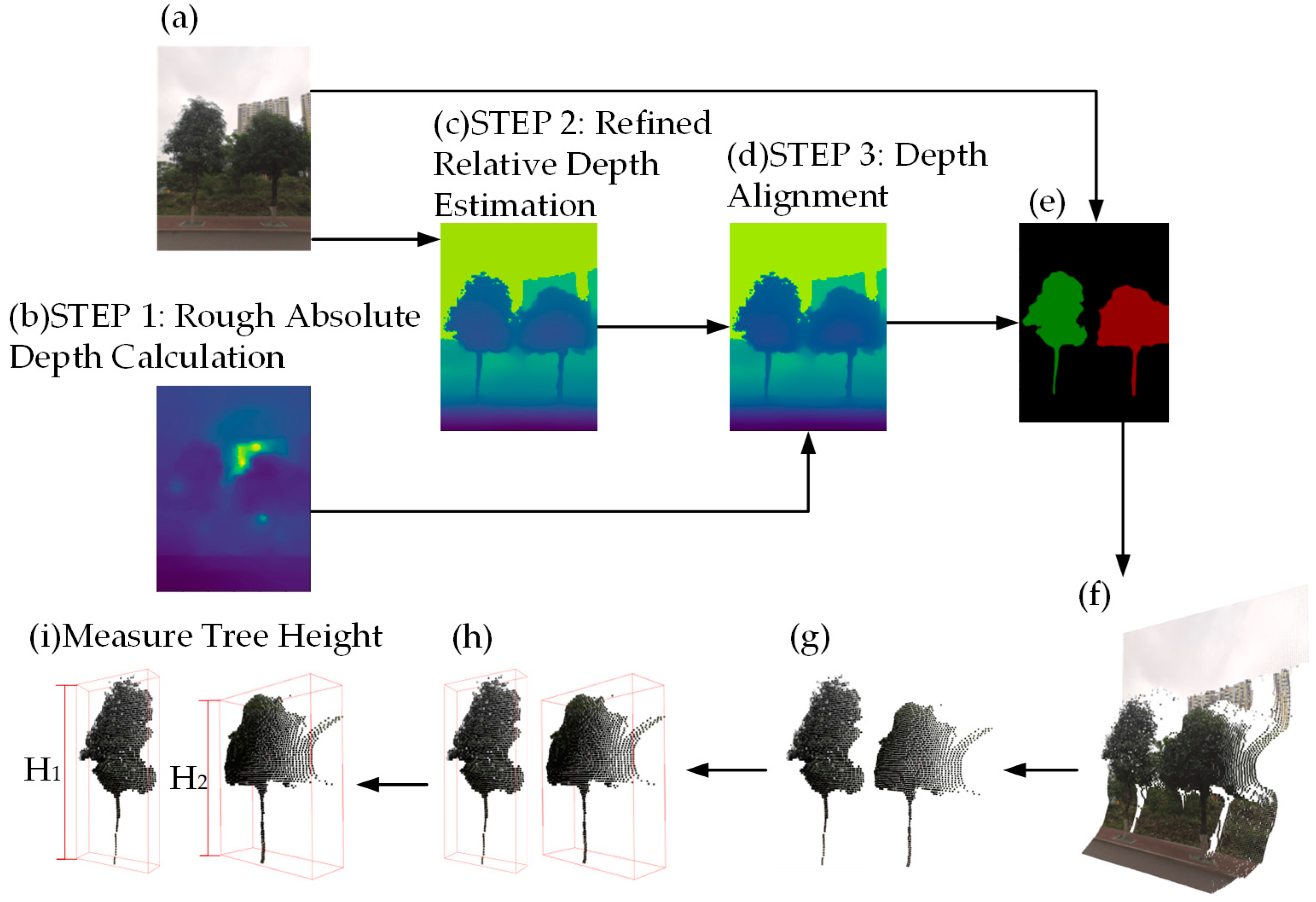

2.3.1. Depth Estimation

- Step 1: Absolute Depth Calculation with ARCore
- Step 2: Relative Depth Estimation with MidasNet
- Step 3: Depth Alignment
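The three steps above do not spell out how the alignment is computed. The following is a minimal sketch, not the authors' code, and it rests on three assumptions: ARCore depth arrives as 16-bit millimetre values with 0 marking pixels that have no estimate (the format returned by `Frame.acquireDepthImage16Bits()`); MidasNet's output is relative inverse depth; and both maps have already been resampled to the same resolution. Under those assumptions, a common approach is to fit a scale `s` and shift `t` by least squares in inverse-depth space and then invert the result. The function names are hypothetical.

```python
import numpy as np


def arcore_depth_to_meters(depth_mm: np.ndarray) -> np.ndarray:
    """Convert a uint16 depth image in millimetres to metres; 0 (no estimate) becomes NaN."""
    depth_m = depth_mm.astype(np.float64) / 1000.0
    depth_m[depth_mm == 0] = np.nan
    return depth_m


def align_relative_depth(rel_inv_depth: np.ndarray, abs_depth_m: np.ndarray):
    """Fit s, t so that s * rel_inv_depth + t ~= 1 / abs_depth_m on valid pixels.

    Returns the aligned metric depth map (NaN where the fitted inverse depth is
    not positive) together with the fitted scale and shift.
    """
    valid = np.isfinite(abs_depth_m)
    x = rel_inv_depth[valid]
    y = 1.0 / abs_depth_m[valid]
    design = np.stack([x, np.ones_like(x)], axis=1)
    (s, t), *_ = np.linalg.lstsq(design, y, rcond=None)

    aligned_inv = s * rel_inv_depth + t
    safe_inv = np.where(aligned_inv > 0, aligned_inv, 1.0)  # avoid dividing by <= 0
    depth_m = np.where(aligned_inv > 0, 1.0 / safe_inv, np.nan)
    return depth_m, float(s), float(t)


# Synthetic check: build relative inverse depth from a known scale and shift.
rng = np.random.default_rng(0)
true_depth = rng.uniform(2.0, 20.0, size=(90, 160))
rel = (1.0 / true_depth - 0.01) / 0.5             # i.e. s = 0.5, t = 0.01
arcore_mm = np.round(true_depth * 1000).astype(np.uint16)
arcore_mm[::7, ::5] = 0                            # simulate missing ARCore pixels
depth, s, t = align_relative_depth(rel, arcore_depth_to_meters(arcore_mm))
```

Fitting in inverse-depth space follows the way MiDaS-style relative predictions are usually compared against metric ground truth. The sparse, noisy ARCore depth only needs to pin down two numbers, while the dense MidasNet map supplies the structure.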

2.3.2. Tree Image Segmentation

1. Tree Image Segmentation Dataset Creation
2. Image Segmentation Model Selection
   - (1) The depth map is introduced as an additional input to Attention-UNet.
   - (2) At each layer, the encoder applies two convolution and activation operations to obtain RGB feature maps and depth feature maps separately, and then fuses them. The fused features are passed through the skip connection at each stage to the corresponding attention gate in the decoder, so that the two modalities complement each other.
3. Network Architecture of the Improved Attention-UNet
4. Training the Improved Attention-UNet
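Item (2) describes fused RGB and depth encoder features being routed to the decoder's attention gates. This section does not specify the fusion operator, so the sketch below assumes element-wise addition, which is a hypothetical choice. It then implements the additive attention gate of Oktay et al. [31] in NumPy, writing the 1×1 convolutions as channel-wise matrix products. For simplicity it also assumes the gating signal has already been resampled to the skip feature's spatial size. The weights are random and untrained, so the example only illustrates data flow and shapes.

```python
import numpy as np


def conv1x1(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """1x1 convolution on a (C_in, H, W) map; w is (C_out, C_in), b is (C_out,)."""
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def fuse(rgb_feat: np.ndarray, depth_feat: np.ndarray) -> np.ndarray:
    """Hypothetical fusion: element-wise sum of same-shaped RGB and depth features."""
    return rgb_feat + depth_feat


def attention_gate(skip: np.ndarray, gate: np.ndarray, p: dict):
    """Additive attention gate: alpha = sigmoid(psi(relu(W_x*skip + W_g*gate)))."""
    q = np.maximum(conv1x1(skip, p["w_x"], p["b_x"]) + conv1x1(gate, p["w_g"], p["b_g"]), 0.0)
    alpha = sigmoid(conv1x1(q, p["w_psi"], p["b_psi"]))  # shape (1, H, W)
    return skip * alpha, alpha  # alpha broadcasts over the skip channels


def random_gate_params(c_skip: int, c_gate: int, c_int: int, rng) -> dict:
    """Random (untrained) weights, for shape checking only."""
    return {
        "w_x": 0.1 * rng.standard_normal((c_int, c_skip)), "b_x": np.zeros(c_int),
        "w_g": 0.1 * rng.standard_normal((c_int, c_gate)), "b_g": np.zeros(c_int),
        "w_psi": 0.1 * rng.standard_normal((1, c_int)), "b_psi": np.zeros(1),
    }


rng = np.random.default_rng(0)
rgb_feat = rng.standard_normal((16, 32, 32))
depth_feat = rng.standard_normal((16, 32, 32))
skip = fuse(rgb_feat, depth_feat)                 # fused encoder features for this stage
gate = rng.standard_normal((32, 32, 32))          # decoder signal, already resized to 32x32
gated, alpha = attention_gate(skip, gate, random_gate_params(16, 32, 8, rng))
```

Because `alpha` is a single-channel map in (0, 1), the gate can only re-weight the fused skip features spatially. Background regions receive small weights once trained, and the depth branch can influence that map through the fused input.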

2.3.3. Tree 3D Reconstruction

1. Scene 3D Reconstruction
2. Point Cloud Segmentation and Denoising
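The two steps above can be sketched as follows. The sketch assumes a pinhole camera with known intrinsics `fx, fy, cx, cy` and a metric depth map of the same size as the tree mask. Keeping only masked pixels performs the point cloud segmentation. Denoising here uses statistical outlier removal, which drops points whose mean distance to their k nearest neighbours is unusually large. This is the same idea behind Open3D's `remove_statistical_outlier`; whether the authors used this filter, or these parameter values, is an assumption. The brute-force neighbour search is O(N²) and suits only small clouds.

```python
import numpy as np


def backproject(depth_m: np.ndarray, mask: np.ndarray,
                fx: float, fy: float, cx: float, cy: float) -> np.ndarray:
    """Lift masked pixels with valid depth to (N, 3) camera-frame points."""
    d = np.where(np.isfinite(depth_m), depth_m, 0.0)
    v, u = np.nonzero(mask & (d > 0))         # row (v) and column (u) indices
    z = d[v, u]
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=1)


def remove_statistical_outliers(points: np.ndarray, k: int = 8, std_ratio: float = 2.0):
    """Keep points whose mean k-NN distance is <= global mean + std_ratio * std."""
    diff = points[:, None, :] - points[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    knn = np.sort(dist, axis=1)[:, 1:k + 1]    # column 0 is each point's distance to itself
    mean_d = knn.mean(axis=1)
    keep = mean_d <= mean_d.mean() + std_ratio * mean_d.std()
    return points[keep], keep


# A flat 10x10 patch at 5 m plus one stray point far behind it.
pts = backproject(np.full((10, 10), 5.0), np.ones((10, 10), dtype=bool),
                  fx=100.0, fy=100.0, cx=5.0, cy=5.0)
noisy = np.vstack([pts, [[10.0, 10.0, 50.0]]])
clean, keep = remove_statistical_outliers(noisy)
```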

2.3.4. Tree Height Measurement
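Given a segmented and denoised tree point cloud, one simple way to read off height is to measure the extent of the points along the vertical direction. The sketch below assumes that the points are expressed in a frame where the `up` direction is known, for example a gravity-aligned world frame with +Y up. The percentile trimming is an optional, hypothetical robustness setting and is not a step described in this section.

```python
import numpy as np


def tree_height(points: np.ndarray, up=(0.0, 1.0, 0.0),
                low_pct: float = 0.0, high_pct: float = 100.0) -> float:
    """Extent of an (N, 3) point cloud along the direction `up` (normalised here)."""
    up = np.asarray(up, dtype=float)
    up = up / np.linalg.norm(up)
    heights = points @ up  # signed coordinate of each point along `up`
    return float(np.percentile(heights, high_pct) - np.percentile(heights, low_pct))


base_to_crown = np.array([[0.0, 0.0, 5.0], [0.2, 1.5, 5.1], [-0.3, 3.7, 4.9]])
print(tree_height(base_to_crown))
```

Setting, for example, `high_pct=99.5` discards the few highest points, which helps if denoising leaves stray points above the crown. The default of 0 to 100 gives the plain maximum minus minimum.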

2.3.5. App Design and User Experience

3. Results

3.1. Results of Depth Estimation

3.2. The Training Results of the Tree Image Segmentation Network

3.3. Results of Tree 3D Reconstruction

3.4. Accuracy of Tree Height Measurement

3.5. Time of Tree Height Measurement

4. Discussion

4.1. Tree Height Measurement

4.2. Depth Estimation

4.3. Tree Image Segmentation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Comparison with Previous Research

| Reference | Device | Manual Involvement | Single Perspective per Tree | Measured Tree Height Range/m | Reported Average Relative Error/% |
|---|---|---|---|---|---|
| This study | Smartphone (Honor 10) | No | Yes | 3.7–24.4 | 3.20 |
| Song et al. [5] | Smartphone equipped with a fisheye lens and rangefinder | Yes (obtain the horizontal distance) | Yes | Unknown | 1.62 |
| Collazos et al. [9] | Smartphone (Motorola C6) and handheld laser distance meter | Yes (obtain total trunk height) | No | 4.7–14.0 | 7.46 |
| Lian [10] | Gimbal Camera (Osmo Pocket) | Yes (place a reference object) | No | 8.5–13.0 | 3.29 |
| Sun [11] | Monocular Camera (Nikon D3400) | No | No | 3.0–21.0 | 4.10 |
| Zhang et al. [12] | USB 3.0 Binocular Camera | No | No | 1.3–7.3 | 2.10 |
| Yin et al. [13] | USB 3.0 Binocular Camera | No | No | 3.4–4.4 | 2.22 |
| Gao et al. [14] | Smartphone (MI 2S) | No | Yes | 2.7–7.1 | 6.50 |
| Coelho et al. [16] | Monocular Camera | Yes (place a reference object) | No | 6.7–12.3 | 4.80 |

Appendix A.2. Comparison of Image Segmentation Performance on the VOC2007 Dataset

| Class | Attention-UNet PA | Attention-UNet IoU | Depth-Attention-UNet PA | Depth-Attention-UNet IoU |
|---|---|---|---|---|
| Aeroplane | 0.9250 | 0.8590 | 0.9450 | 0.8750 |
| Bicycle | 0.8900 | 0.7390 | 0.9150 | 0.8000 |
| Bird | 0.8750 | 0.7350 | 0.9050 | 0.7750 |
| Boat | 0.8200 | 0.5850 | 0.8600 | 0.7150 |
| Bottle | 0.7050 | 0.4360 | 0.7350 | 0.5800 |
| Bus | 0.9300 | 0.8570 | 0.9400 | 0.8900 |
| Car/Automobile | 0.9350 | 0.8600 | 0.9200 | 0.8440 |
| Cat | 0.9000 | 0.7940 | 0.9200 | 0.8300 |
| Chair | 0.6000 | 0.3360 | 0.6850 | 0.4600 |
| Cow | 0.8550 | 0.7080 | 0.8750 | 0.7700 |
| Diningtable | 0.7800 | 0.6300 | 0.7700 | 0.5830 |
| Dog | 0.8900 | 0.7520 | 0.9100 | 0.8100 |
| Horse | 0.8850 | 0.7990 | 0.9150 | 0.8550 |
| Motorbike | 0.9100 | 0.8040 | 0.9300 | 0.8500 |
| Person | 0.8950 | 0.7880 | 0.9100 | 0.8200 |
| Pottedplant | 0.5350 | 0.2990 | 0.6050 | 0.4300 |
| Sheep | 0.8350 | 0.6780 | 0.8700 | 0.7500 |
| Sofa | 0.8700 | 0.7210 | 0.9000 | 0.7900 |
| Train | 0.9250 | 0.8720 | 0.9450 | 0.8950 |
| TV/Monitor | 0.8150 | 0.6230 | 0.8150 | 0.6900 |
| Average | 0.8388 | 0.6938 | 0.8635 | 0.7506 |

References

1. Jian, C. Forest, Forestry and ecological civilization construction. Contemp. Hortic. 2018, 11, 153–154.

2. Jinqian, L. Research on the correlation model between the economic value of living trees and forest factors. Green Financ. Account. 2019, 2, 15–18.

3. Qin, S.; Jianfeng, H.; David, C.; Yunlong, Y. Biomass estimation and carbon storage of Taxodium hybrid Zhongshanshan plantations in the Yangtze River Basin. Forests 2022, 13, 1725.

4. Newnham, G.J.; Armston, J.D.; Calders, K.; Disney, M.I.; Lovell, J.L.; Schaaf, C.B.; Strahler, A.H.; Danson, F.M. Terrestrial laser scanning for plot-scale forest measurement. Curr. For. Rep. 2015, 1, 239–251.

5. Song, J.; Zhao, Y.; Song, W.; Zhou, H.; Zhu, D.; Huang, Q.; Fan, Y.; Lu, C. Fisheye image detection of trees using improved YOLOX for tree height estimation. Sensors 2022, 22, 3636.

6. Mayamanikandan, T.; Reddy, R.S.; Jha, C.S. Non-destructive tree volume estimation using terrestrial lidar data in teak dominated central Indian forests. In Proceedings of the 2019 IEEE Recent Advances in Geoscience and Remote Sensing: Technologies, Standards and Applications (TENGARSS), Dalian, China, 11–13 November 2019.

7. Tian, J.; Dai, T.; Li, H.; Liao, C.; Teng, W.; Hu, Q.; Ma, W.; Xu, Y. A novel tree height extraction approach for individual trees by combining TLS and UAV image-based point cloud integration. Forests 2019, 10, 537.

8. Yang, Q.; Chen, Z.; Sun, G. Automatic Extraction Method of Tree Measurement Factors Based on Point Cloud Data. Trans. Chin. Soc. Agric. Mach. 2017, 48, 179–185.

9. Collazos, D.T.; Cano, V.R.; Villota, J.C.P.; Toro, W.M. A photogrammetric system for dendrometric feature estimation of individual trees. In Proceedings of the 2018 IEEE 2nd Colombian Conference on Robotics and Automation (CCRA), Bogotá, Colombia, 17–19 October 2018.

10. Lian, Y. Forest Close-Up Photogrammetry Observation and Tree Factor Extraction. Master’s Thesis, Beijing Forestry University, Beijing, China, 2019.

11. Sun, Y. Study on Single Tree Structure Parameters Extraction Based on Close-Range Photogrammetry. Master’s Thesis, Northeast Forestry University, Harbin, China, 2020.

12. Zhang, Z.; Zhao, P.; Han, J. Research on measurement method of single tree height using binocular vision. J. For. Eng. 2021, 6, 156–164.

13. Yin, P.; Xu, A.; Ye, J.; Xia, F.; Wang, Z. Measuring method of tree attributes based on consumer-grade binocular camera. J. Zhejiang AF Univ. 2023, 40, 436–445.

14. Gao, L.; Xu, A. Tree Height Measurement Method with Intelligent Terminal. J. Northeast For. Univ. 2018, 46, 28–34.

15. Wu, X.; Zhou, S.; Xu, A.; Chen, B. Passive measurement method of tree diameter at breast height using a smartphone. Comput. Electron. Agric. 2019, 163, 104875.

16. Coelho, J.; Fidalgo, B.; Crisóstomo, M.M.; Salas-González, R.; Coimbra, A.P.; Mendes, M. Non-destructive fast estimation of tree stem height and volume using image processing. Symmetry 2021, 13, 374.

17. Juyal, P.; Sharma, S. Estimation of tree volume using Mask R-CNN based deep learning. In Proceedings of the 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Polonnaruwa, Sri Lanka, 6–9 July 2020.

18. Itakura, K.; Hosoi, F. Simple and effective tool for estimating tree trunk diameters and tree species classification. Appl. Opt. 2020, 59, 558–563.

19. Zhang, Z. Study on Calculation Method of Number and Height of Trees Based on Computer Vision. Master’s Thesis, Northeast Forestry University, Harbin, China, 2022.

20. ARCore. Available online: https://developers.google.com/ar (accessed on 6 December 2022).

21. Valentin, J.; Kowdle, A.; Barron, J.T.; Wadhwa, N.; Dzitsiuk, M.; Schoenberg, M.; Verma, V.; Csaszar, A.; Turner, E.; Dryanovski, I.; et al. Depth from motion for smartphone AR. ACM Trans. Graph. (TOG) 2018, 37, 1–19.

22. Fanello, S.R.; Valentin, J.; Rhemann, C.; Kowdle, A.; Tankovich, V.; Davidson, P.; Izadi, S. UltraStereo: Efficient learning-based matching for active stereo systems. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

23. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

24. Li, Z.; Snavely, N. MegaDepth: Learning Single-View Depth Prediction from Internet Photos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

25. Xian, K.; Shen, C.; Cao, Z.; Lu, H.; Xiao, Y.; Li, R.; Luo, Z. Monocular relative depth perception with web stereo data supervision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

26. Wang, C.; Lucey, S.; Perazzi, F.; Wang, O. Web stereo video supervision for depth prediction from dynamic scenes. In Proceedings of the 2019 International Conference on 3D Vision (3DV), Québec City, QC, Canada, 16–19 September 2019.

27. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

28. Chen, S.; Guo, W.; Kang, Y.; Yang, S.; Jiang, N. High-Resolution Representations for Labeling Pixels and Regions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

29. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

30. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 18th International Conference, Munich, Germany, 5–9 October 2015.

31. Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning where to look for the pancreas. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Granada, Spain, 16–20 September 2018.

32. OkHttp. Available online: https://square.github.io/okhttp/4.x/okhttp/okhttp3/ (accessed on 15 May 2023).

33. Gson. Available online: https://github.com/google/gson/releases (accessed on 15 May 2023).

34. Obj. Available online: https://github.com/javagl (accessed on 15 May 2023).

35. Flask. Available online: https://flask.palletsprojects.com/en/2.3.x/ (accessed on 15 May 2023).

36. PyTorch. Available online: https://download.pytorch.org/whl/torch/ (accessed on 28 March 2023).

37. Open3D. Available online: http://www.open3d.org/docs/release/getting_started.html (accessed on 28 March 2023).

38. OpenCV. Available online: https://opencv.org/blog/2023/07/02/opencv-4-8-0/ (accessed on 28 March 2023).

39. ARCore. Available online: https://developers.google.com/ar/reference/java/com/google/ar/core/Frame#acquireDepthImage16Bits- (accessed on 16 June 2023).

40. Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes Challenge 2007 (VOC2007) Results. Available online: http://www.pascal-network.org/challenges/VOC/voc2007/workshop/index.html (accessed on 22 May 2023).

| Species | Number of Trees | Min/Max Tree Height (m) |
|---|---|---|
| Sterculia nobilis | 26 | 3.7/5.1 |
| Taxus chinensis | 19 | 25.1/26.0 |
| Alstonia scholaris | 17 | 17.2/21.3 |
| Mangifera indica | 14 | 9.6/13.7 |
| Sindora tonkinensis | 12 | 19.8/24.4 |
| Bombax malabaricum | 11 | 21.1/23.8 |
| Trachycarpus fortunei | 6 | 5.1/18.5 |
| Areca catechu L. | 5 | 13.7/24.1 |

| Parameter | Value |
|---|---|
| Input size | 384 × 384 |
| Batch size | 8 |
| Initial learning rate | 0.0001 |
| Total iteration steps | 300 |

| Parameter | Value |
|---|---|
| Input size | 512 × 512 |
| Batch size | 8 |
| Initial learning rate | 0.001 |
| Total iteration steps | 100 |

| Parameter | Value |
|---|---|
| Input size | 512 × 512 |
| Batch size | 8 |
| Initial learning rate | 0.001 |
| Total iteration steps | 200 |

| Model | IoU (%) | PA (%) |
|---|---|---|
| Attention-UNet | 91.20 | 96.27 |
| Ours | 95.31 | 98.14 |
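IoU and PA are standard segmentation metrics. The following assumes the usual definitions for a binary tree/background mask: PA is the fraction of correctly labelled pixels, and IoU is the intersection over union of predicted and ground-truth tree pixels. How the paper averages them, for example over test images or over classes, is not stated here. Under these assumptions, the metrics can be computed as follows (function names are illustrative):

```python
import numpy as np


def pixel_accuracy(pred: np.ndarray, target: np.ndarray) -> float:
    """Fraction of pixels whose predicted label equals the ground-truth label."""
    return float((pred == target).mean())


def iou(pred: np.ndarray, target: np.ndarray, cls: int = 1) -> float:
    """Intersection over union for one class; 1.0 if the class is absent from both."""
    p = pred == cls
    t = target == cls
    union = np.logical_or(p, t).sum()
    return float(np.logical_and(p, t).sum() / union) if union else 1.0


pred = np.array([[1, 1, 0], [0, 1, 0]])    # 1 = tree, 0 = background
target = np.array([[1, 0, 0], [0, 1, 1]])
pa, tree_iou = pixel_accuracy(pred, target), iou(pred, target)
```

PA is typically higher than IoU, as in both rows of the table, because correctly labelled background pixels count toward PA but not toward the tree IoU.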

| Sample No. | True Value/m | Measured Value/m | Shooting Distance/m | Relative Error/% |
|---|---|---|---|---|
| 1 | 3.7 | 3.78 | 2.47 | 2.16 |
| 2 | 4.8 | 4.63 | 3.20 | 3.54 |
| 3 | 5.6 | 5.42 | 3.73 | 3.21 |
| 4 | 6.5 | 6.73 | 4.33 | 3.54 |
| 5 | 7.4 | 7.63 | 4.93 | 3.11 |
| 6 | 8.7 | 9.02 | 5.80 | 3.68 |
| 7 | 9.3 | 9.58 | 6.20 | 3.01 |
| 8 | 10.8 | 11.04 | 7.20 | 2.22 |
| 9 | 11.3 | 11.63 | 7.53 | 2.92 |
| 10 | 12.0 | 12.23 | 8.00 | 1.92 |
| 11 | 13.7 | 14.12 | 9.13 | 3.07 |
| 12 | 15.9 | 15.20 | 10.60 | 4.40 |
| 13 | 17.2 | 16.39 | 11.47 | 4.71 |
| 14 | 18.5 | 19.32 | 12.33 | 4.43 |
| 15 | 19.8 | 20.66 | 13.20 | 4.34 |
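Each relative error in this table equals |measured − true| / true × 100. The short check below recomputes that column from the listed values. For these 15 rows, the unweighted mean is about 3.35%. This is not the same as the 3.20% average reported in Appendix A.1, and the table alone does not show how that figure was obtained.

```python
# (true height, measured height) in metres, copied from the table above
ROWS = [
    (3.7, 3.78), (4.8, 4.63), (5.6, 5.42), (6.5, 6.73), (7.4, 7.63),
    (8.7, 9.02), (9.3, 9.58), (10.8, 11.04), (11.3, 11.63), (12.0, 12.23),
    (13.7, 14.12), (15.9, 15.20), (17.2, 16.39), (18.5, 19.32), (19.8, 20.66),
]
REPORTED_PCT = [2.16, 3.54, 3.21, 3.54, 3.11, 3.68, 3.01, 2.22, 2.92, 1.92,
                3.07, 4.40, 4.71, 4.43, 4.34]


def relative_error_pct(true_m: float, measured_m: float) -> float:
    """Absolute relative error of one measurement, in percent."""
    return abs(measured_m - true_m) / true_m * 100.0


errors = [relative_error_pct(t, m) for t, m in ROWS]
mean_error = sum(errors) / len(errors)
print(f"mean relative error over {len(errors)} trees: {mean_error:.2f}%")
```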

| Sample No. | Depth Estimation/ms | Tree Image Segmentation/ms | 3D Reconstruction/ms | 3D Point Cloud Segmentation/ms | Denoising/ms | Tree Height Extraction/ms | Overall Process/ms |
|---|---|---|---|---|---|---|---|
| 1 | 1483.10 | 1809.27 | 6.09 | 3.00 | 11.27 | 0.09 | 3312.82 |
| 2 | 1531.66 | 1981.08 | 6.42 | 3.00 | 10.63 | 0.10 | 3532.89 |
| 3 | 1422.50 | 1845.22 | 6.88 | 3.00 | 13.28 | 0.10 | 3290.98 |
| 4 | 1455.29 | 1709.31 | 6.29 | 3.00 | 14.83 | 0.10 | 3188.81 |
| 5 | 1512.97 | 1581.32 | 6.38 | 3.00 | 11.20 | 0.10 | 3114.97 |
| 6 | 1467.25 | 1627.70 | 6.46 | 3.00 | 13.01 | 0.10 | 3117.52 |
| 7 | 1426.75 | 1936.47 | 6.69 | 2.99 | 10.81 | 0.10 | 3383.81 |
| 8 | 1436.96 | 1897.49 | 6.24 | 2.99 | 10.77 | 0.10 | 3354.54 |
| 9 | 1520.31 | 1503.55 | 6.18 | 2.99 | 11.14 | 0.10 | 3044.27 |
| 10 | 1466.61 | 1859.39 | 6.83 | 3.00 | 11.55 | 0.09 | 3347.47 |
| Average | 1472.34 | 1775.08 | 6.45 | 3.00 | 11.85 | 0.10 | 3268.81 |
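In this timing table, the overall time equals the sum of the six stage times, to within rounding. The check below uses the Average row and confirms that depth estimation and tree image segmentation together account for roughly 99% of the processing time. 3D reconstruction, point cloud segmentation, denoising, and height extraction take about 21 ms combined.

```python
# Average per-stage times (ms) from the last row of the table above
AVG_MS = {
    "Depth estimation": 1472.34,
    "Tree image segmentation": 1775.08,
    "3D reconstruction": 6.45,
    "3D point cloud segmentation": 3.00,
    "Denoising": 11.85,
    "Tree height extraction": 0.10,
}
REPORTED_OVERALL_MS = 3268.81

total_ms = sum(AVG_MS.values())
for stage, ms in AVG_MS.items():
    print(f"{stage:<28} {ms:8.2f} ms  {100 * ms / total_ms:5.1f}%")
print(f"{'Sum of stages':<28} {total_ms:8.2f} ms (reported overall: {REPORTED_OVERALL_MS} ms)")

# Share of time spent in the two neural-network stages
network_share = (AVG_MS["Depth estimation"] + AVG_MS["Tree image segmentation"]) / total_ms
```

This breakdown suggests that speeding up the two network inferences would reduce end-to-end latency far more than optimizing the geometric steps.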

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shen, Y.; Huang, R.; Hua, B.; Pan, Y.; Mei, Y.; Dong, M. Automatic Tree Height Measurement Based on Three-Dimensional Reconstruction Using Smartphone. Sensors 2023, 23, 7248. https://doi.org/10.3390/s23167248
