Research Method of Discontinuous-Gait Image Recognition Based on Human Skeleton Keypoint Extraction

Abstract

1. Introduction

- (1)

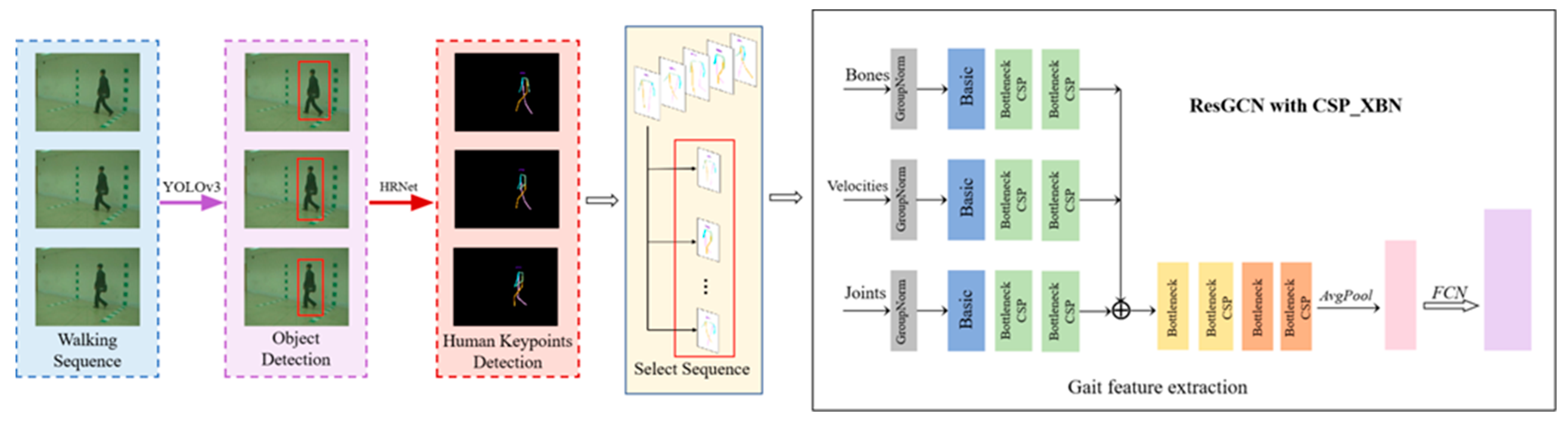

- We extract gait features from discontinuous video frames of walking, a setting that has rarely been studied in gait recognition. We designed a discontinuous frame-level image extraction module that filters and integrates image-sequence information and passes the result to the gait feature extraction network, which extracts the relevant features and identifies pedestrians. This improves the network's ability to learn gait characteristics from discontinuous time series.

- (2)

- Compared with ResGCN, the improved gait recognition algorithm (based on ResGCN) uses a bottleneck for feature dimensionality reduction. It also reduces the number of feature-map layers and the amount of computation, adds the cross stage partial connection (CSP) structure and XBNBlock, and upgrades the spatial graph convolutional (SCN) and temporal graph convolutional (TCN) network modules with a bottleneck structure. This keeps the input and output sizes the same while reducing network parameters, which enhances the learning ability of the corresponding modules and reduces memory consumption.

- (3)

- We demonstrate the effectiveness of the improved network on the commonly used gait dataset CASIA-B, where its recognition accuracy is better than that of other current model-based recognition methods, especially for pedestrian gait images affected by changes in appearance. The effectiveness and generalization of the proposed algorithm were also tested on the CASIA-A dataset and on a self-built, small-scale, real-environment dataset.
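As a rough illustration of why the bottleneck in contribution (2) reduces network parameters, the sketch below counts convolution weights with and without a 1 × 1 reduce/expand pair. The channel count (64), kernel length (9), and reduction rate (4) are hypothetical values chosen only for the arithmetic; they are not settings reported in this paper, and `conv_params` and `bottleneck_params` are illustrative helper names.

```python
def conv_params(c_in, c_out, kernel_size):
    """Weights in a convolution with a kernel_size x 1 kernel (bias ignored)."""
    return c_in * c_out * kernel_size


def bottleneck_params(channels, kernel_size, r):
    """1x1 reduce -> kernel_size x 1 conv on channels // r -> 1x1 expand."""
    inner = channels // r  # channel count after applying reduction rate r
    return (conv_params(channels, inner, 1)
            + conv_params(inner, inner, kernel_size)
            + conv_params(inner, channels, 1))


# Hypothetical layer: 64 channels in and out, kernel length 9, r = 4.
plain = conv_params(64, 64, 9)
reduced = bottleneck_params(64, 9, 4)
print(plain, reduced, round(reduced / plain, 3))
```

Under these assumed values the bottleneck variant keeps roughly 12% of the weights of the plain convolution, while its input and output channel counts stay the same.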

2. Related Works

2.1. Human Keypoint Detection Research

- (1)

- Regression-based keypoint detection, i.e., learning a mapping from input images to the keypoints of the human skeleton through an end-to-end network framework. DeepPose [15] applied a deep neural network (DNN) to human keypoint detection for the first time, proposing a cascaded regressor that directly predicts keypoint coordinates from the complete image using a seven-layer general convolutional DNN. The detection works well on a series of datasets with obvious variations and occlusions. The authors of [16] proposed a structure-aware regression method based on ResNet-50, using body structure information to design bone-based representations. Dual-source deep convolutional neural networks (DS-CNN) [17] combined a holistic view with the appearance of local parts of the human body to detect and localise joints. The authors of [18] proposed a multi-task framework for joint 2D and 3D human skeleton keypoint detection and recognition from still images and video sequences. In [19], a 2D human pose estimation keypoint regression method based on the subspace attention module (SAMKR) was proposed. Each keypoint is assigned an independent regression branch, and its feature map is divided equally into a specified number of feature-map subspaces, with a separate attention map derived for each subspace.

- (2)

- Heatmap-based keypoint detection, i.e., a heatmap is first predicted for each of the K keypoints of the human body, and the probability that keypoint i is located at (x, y) is represented by the heatmap pixel value Li(x, y). The ground-truth heatmap of each skeleton keypoint is generated by a two-dimensional Gaussian model [20] centred on the location of the real keypoint, and the keypoint detection network is then trained by minimising the difference between the predicted heatmap and the real heatmap. Heatmap-based detection methods preserve more spatial location information and provide richer supervision. A hybrid architecture [21], consisting of deep convolutional networks and Markov random fields, can be used to detect human skeleton keypoints in monocular images. In [22], the image was discretised into log-polar regions centred on each skeleton keypoint, and a VGG network was employed to predict the category confidence of each pair of skeleton keypoints. According to the relative confidence scores, the final heatmap for each keypoint was generated by a deconvolutional network. HRNet [23] proposed a high-resolution network structure. This model was the first multi-layer network structure to maintain the initial resolution of the image while increasing the feature receptive field. The structure continuously expands the receptive field through multi-stage cascaded residual modules, uses multiple network branches to extract feature information at different resolutions, and fuses the multi-scale feature information, which makes the network more accurate in detecting human skeleton keypoints. Since the heatmap representation is more robust than the coordinate representation, more of the current research is based on the heatmap representation.
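The heatmap supervision described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation of any cited method: the grid size, the value of `sigma`, and the helper names `gaussian_heatmap`, `mse`, and `decode_keypoint` are assumptions made for the example.

```python
import math


def gaussian_heatmap(width, height, cx, cy, sigma=2.0):
    """Return a height x width grid whose peak (value 1.0) sits at (cx, cy)."""
    return [
        [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * sigma ** 2))
         for x in range(width)]
        for y in range(height)
    ]


def mse(pred, target):
    """Mean squared error between two heatmaps of identical shape."""
    total = sum((p - t) ** 2
                for row_p, row_t in zip(pred, target)
                for p, t in zip(row_p, row_t))
    return total / (len(target) * len(target[0]))


def decode_keypoint(heatmap):
    """Return the (x, y) position of the largest heatmap value."""
    best_value, best_xy = -1.0, (0, 0)
    for y, row in enumerate(heatmap):
        for x, value in enumerate(row):
            if value > best_value:
                best_value, best_xy = value, (x, y)
    return best_xy


# Ground-truth heatmap for a keypoint at (5, 7) and a prediction that is
# off by one pixel; training would push the second toward the first.
target = gaussian_heatmap(width=16, height=12, cx=5, cy=7)
shifted = gaussian_heatmap(width=16, height=12, cx=6, cy=7)
print(decode_keypoint(target))
print(mse(target, target), mse(shifted, target))
```

The training signal is the heatmap difference (`mse`), while the keypoint coordinate is only recovered afterwards by taking the peak, which is why the spatial layout around the keypoint is retained as supervision.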

2.2. Gait Recognition Research Methods

3. Cross-Gait Recognition Network for Keypoint Extraction

3.1. Human Keypoint Extraction Network

3.2. Gait Feature Extraction and Recognition

- (1)

- The spatial GCN operation of the graph convolutional network (GCN) for each frame t in the skeleton sequence is expressed as:

- (2)

- A residual connection with a bottleneck structure: The bottleneck structure inserts two 1 × 1 convolutional layers, one before and one after the shared convolutional layer. In this paper, the bottleneck structure replaces a set of temporal and spatial convolutions in the ResGCN architecture. The structures of SCN and TCN with the bottleneck structure are shown in Figure 3 and Figure 4, respectively. In the figures, the lower half of each structure is added to the upper half to form the residual connection, and a channel reduction rate r reduces the number of feature channels during the convolution computations.

- (3)

- The fully connected (FC) layer that generates feature vectors takes the residual matrix R as input. Each output of the FC layer corresponds to the attention weights of each GCN layer.

- (4)

- Supervised contrastive (SupCon) loss function: The experimental dataset is processed in batches. The pre-divided dataset is fed sequentially into the training network, and two augmented copies of each batch are obtained through data augmentation. Both augmented copies are passed forward through the encoder network to obtain normalized embeddings of dimension 2048. During training, these feature representations are passed forward through the projection network, and the supervised contrastive loss is computed on the output of the projection network. The formula for calculating the SupCon loss is as follows:
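As a hedged sketch of the loss described above, the following plain-Python function follows the commonly cited form of the supervised contrastive loss from Khosla et al. (see References): for each anchor, the log-probability of its same-label samples is averaged under a softmax over all other samples in the batch. The temperature value, the toy 2-D embeddings, and the function names are assumptions for illustration and are not taken from this paper.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def supcon_loss(embeddings, labels, temperature=0.1):
    """Supervised contrastive loss averaged over anchors that have a positive."""
    z = [l2_normalize(v) for v in embeddings]
    n = len(z)
    losses = []
    for i in range(n):
        # Scaled similarity of anchor i to every other sample in the batch.
        logits = {a: sum(p * q for p, q in zip(z[i], z[a])) / temperature
                  for a in range(n) if a != i}
        positives = [a for a in logits if labels[a] == labels[i]]
        if not positives:
            continue  # an anchor without a same-label sample contributes nothing
        # Log of the softmax denominator, shifted by the max for stability.
        m = max(logits.values())
        log_denom = m + math.log(sum(math.exp(s - m) for s in logits.values()))
        losses.append(-sum(logits[p] - log_denom for p in positives)
                      / len(positives))
    return sum(losses) / len(losses) if losses else 0.0


# Toy batch: two samples per identity (hypothetical 2-D embeddings).
labels = [0, 0, 1, 1]
clustered = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
mixed = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]]
print(supcon_loss(clustered, labels), supcon_loss(mixed, labels))
```

The loss is small when embeddings of the same identity lie close together (`clustered`) and large when same-identity samples are far apart (`mixed`), which is the behaviour the training objective rewards.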

3.2.1. CSP Structure

3.2.2. XBNBlock

4. Experiments

4.1. Computer Configuration

4.2. Dataset and Training Details

5. Experimental Results and Discussion

5.1. Basic Experiment

5.2. Multipart Figures

5.3. Tests in CASIA-A

5.4. Real-Scene Gait Image Test

6. Conclusions

- (1)

- Because the gait dataset used in the experiments differs from real-world environments, the next step is to test the algorithm on videos collected in real environments, covering more walking views, walking conditions, and lighting conditions. This is necessary for the algorithm to adapt to diverse data when recognizing pedestrians.

- (2)

- The algorithm modules selected in the framework of this paper are continuously being upgraded and iterated as deep learning theory develops. In future research, we can consider replacing the pedestrian object-detection network with one that provides better detection results. For human skeleton keypoint detection, a multi-person keypoint-detection network is advisable to address pedestrian gait recognition in crowded places and similar scenarios.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Shuping, N.; Feng, W. The research on fingerprint recognition algorithm fused with deep learning. In Proceedings of the 2020 IEEE International Conference on Information Technology, Big Data and Artificial Intelligence (ICIBA), Chongqing, China, 6–8 November 2020; pp. 1044–1047.

- Danlami, M.; Jamel, S.; Ramli, S.N.; Azahari, S.R.M. Comparing the Legendre Wavelet filter and the Gabor Wavelet filter For Feature Extraction based on Iris Recognition System. In Proceedings of the 2020 IEEE 6th International Conference on Optimisation and Applications (ICOA), Beni Mellal, Morocco, 20–21 April 2020; pp. 1–6.

- Chen, H.T.; Peng, G.Y.; Chang, K.C.; Lin, J.Y.; Chen, Y.H.; Lin, Y.K.; Huang, C.Y.; Chen, J.C. Enhance Face Recognition Using Time-series Face Images. In Proceedings of the 2021 IEEE International Conference on Consumer Electronics-Taiwan (ICCE-TW), Penghu, Taiwan, 15–17 September 2021; pp. 1–2.

- Katsanis, S.H.; Claes, K.; Doerr, M.; Cook-Deegan, R.; Tenenbaum, J.D.; Evans, B.J.; Lee, M.K.; Anderton, J.; Weinberg, S.M.; Wagner, J.K. U.S. Adult Perspectives on Facial Images, DNA, and Other Biometrics. IEEE Trans. Technol. Soc. 2022, 3, 9–15.

- Ghali, N.S.; Haldankar, D.D.; Sonkar, R.K. Human Personality Identification Based on Handwriting Analysis. In Proceedings of the 2022 5th International Conference on Advances in Science and Technology (ICAST), Mumbai, India, 2–3 December 2022; pp. 393–398.

- Wang, L.; Hong, B.; Deng, Y.; Jia, H. Identity Recognition System based on Walking Posture. In Proceedings of the 2020 Chinese Automation Congress (CAC), Shanghai, China, 6–8 November 2020; pp. 3406–3411.

- Nixon, M.S.; Carter, J.N. Automatic Recognition by Gait. Proc. IEEE 2006, 94, 2013–2024.

- Sun, J.; Wu, P.Q.; Shen, Y.; Yang, Z.X. Relationship between personality and gait: Predicting personality with gait features. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 1227–1231.

- Killane, I.; Donoghue, O.A.; Savva, G.M.; Cronin, H.; Kenny, R.A.; Reilly, R.B. Variance between walking speed and neuropsychological test scores during three gait tasks across the Irish longitudinal study on aging (TILDA) dataset. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 6921–6924.

- Stevenage, S.V.; Nixon, M.S.; Vince, K. Visual analysis of gait as a cue to identity. Appl. Cogn. Psychol. 1999, 13, 513–526.

- Nixon, M.S.; Carter, J.N. On gait as a biometric: Progress and prospects. In Proceedings of the 2004 12th European Signal Processing Conference, Vienna, Austria, 6–10 September 2004; pp. 1401–1404.

- Xia, Y.; Ma, J.; Yu, C.; Ren, X.; Antonovich, B.A.; Tsviatkou, V.Y. Recognition System of Human Activities Based on Time-Frequency Features of Accelerometer Data. In Proceedings of the 2022 International Conference on Intelligent Systems and Computer Vision (ISCV), Fez, Morocco, 18–20 May 2022; pp. 1–5.

- Verma, A.; Merchant, R.A.; Seetharaman, S.; Yu, H. An intelligent technique for posture and fall detection using multiscale entropy analysis and fuzzy logic. In Proceedings of the 2016 IEEE Region 10 Conference (TENCON), Singapore, 22–25 November 2016; pp. 2479–2482.

- Munea, T.L.; Jembre, Y.Z.; Weldegebriel, H.T.; Chen, L.; Huang, C.; Yang, C. The Progress of Human Pose Estimation: A Survey and Taxonomy of Models Applied in 2D Human Pose Estimation. IEEE Access 2020, 8, 133330–133348.

- Toshev, A.; Szegedy, C. DeepPose: Human Pose Estimation via Deep Neural Networks. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1653–1660.

- Sun, X.; Shang, J.; Liang, S.; Wei, Y. Compositional Human Pose Regression. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2621–2630.

- Fan, X.; Zheng, K.; Lin, Y.; Wang, S. Combining local appearance and holistic view: Dual-Source Deep Neural Networks for human pose estimation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1347–1355.

- Luvizon, D.C.; Picard, D.; Tabia, H. 2D/3D Pose Estimation and Action Recognition Using Multitask Deep Learning. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 2018; pp. 5137–5146.

- Chen, L.; Zhou, D.; Liu, R.; Zhang, Q. SAMKR: Bottom-up Keypoint Regression Pose Estimation Method Based on Subspace Attention Module. In Proceedings of the 2022 International Joint Conference on Neural Networks (IJCNN), Padua, Italy, 18–23 July 2022; pp. 1–9.

- Tompson, J.; Goroshin, R.; Jain, A.; LeCun, Y.; Bregler, C. Efficient object localisation using Convolutional Networks. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 9 June 2015; pp. 648–656.

- Tompson, J.; Jain, A.; LeCun, Y.; Bregler, C. Joint training of a convolutional network and a graphical model for human pose estimation. In Advances in Neural Information Processing Systems; IEEE: New York, NY, USA, 17 September 2014; pp. 152–167.

- Lifshitz, I.; Fetaya, E.; Ullman, S. Human Pose Estimation Using Deep Consensus Voting. In Proceedings of the Computer Vision–ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Lecture Notes in Computer Science. Springer: Cham, Switzerland, 2016; Volume 9906.

- Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep High-Resolution Representation Learning for Human Pose Estimation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5686–5696.

- Lam, T.H.W.; Cheung, K.H.; Liu, J. Gait flow image: A silhouette-based gait representation for human identification. Pattern Recognit. 2011, 44, 973–987.

- Ariyanto, G.; Nixon, M.S. Model-based 3D gait biometrics. In Proceedings of the 2011 International Joint Conference on Biometrics (IJCB), Washington, DC, USA, 11–13 October 2011; pp. 1–7.

- El-Alfy, H.; Mitsugami, I.; Yagi, Y. Gait Recognition Based on Normal Distance Maps. IEEE Trans. Cybern. 2018, 48, 1526–1539.

- Martin-Félez, R.; Mollineda, R.A.; Sánchez, J.S. Towards a More Realistic Appearance-Based Gait Representation for Gender Recognition. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 3810–3813.

- Hirose, Y.; Nakamura, K.; Nitta, N.; Babaguchi, N. Anonymization of Human Gait in Video Based on Silhouette Deformation and Texture Transfer. IEEE Trans. Inf. Forensics Secur. 2022, 17, 3375–3390.

- An, W.; Yu, S.; Makihara, Y.; Wu, X.; Xu, C.; Yu, Y.; Liao, R.; Yagi, Y. Performance Evaluation of Model-Based Gait on Multi-View Very Large Population Database with Pose Sequences. IEEE Trans. Biom. Behav. Identity Sci. 2020, 2, 421–430.

- Song, C.; Huang, Y.; Huang, Y.; Ning, J.; Liang, W. GaitNet: An end-to-end network for gait based human identification. Pattern Recognit. 2019, 96, 106988.

- Chao, H.; He, Y.; Zhang, J.; He, Y.; Feng, J. GaitSet: Regarding Gait as a Set for Cross-View Gait Recognition. In Proceedings of the Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 12 December 2018.

- Fan, C.; Peng, Y.; Cao, C.; Liu, X.; Hou, S.; Chi, J.; Huang, Y.; Li, Q.; He, Z. GaitPart: Temporal Part-Based Model for Gait Recognition. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 14213–14221.

- Wolf, T.; Babaee, M.; Rigoll, G. Multi-view gait recognition using 3d convolutional neural networks. In Proceedings of the ICIP 2016, Phoenix, AZ, USA, 25–28 September 2016; pp. 4165–4169.

- Huang, Z.; Xue, D.; Shen, X.; Tian, X.; Li, H.; Huang, J.; Hua, X.S. 3D Local Convolutional Neural Networks for Gait Recognition. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 14900–14909.

- Chen, K.; Wu, S.; Li, Z. Gait Recognition Based on GFHI and Combined Hidden Markov Model. In Proceedings of the 2020 13th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Chengdu, China, 17–19 October 2020; pp. 287–292.

- Etoh, H.; Omura, Y.; Kaminishi, K.; Chiba, R.; Takakusaki, K.; Ota, J. Proposal of a Neuromusculoskeletal Model Considering Muscle Tone in Human Gait. In Proceedings of the 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021; pp. 289–294.

- Liu, H.; Zhang, X.; Zhu, K.; Niu, H. Thigh Skin Strain Model for Human Gait Movement. In Proceedings of the 2021 IEEE Asia Conference on Information Engineering (ACIE), Sanya, China, 29–31 January 2021; pp. 27–31.

- Tanawongsuwan, R.; Bobick, A. Gait recognition from time-normalised joint-angle trajectories in the walking plane. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001, Kauai, HI, USA, 8–14 December 2001; pp. II–II.

- Zhen, H.; Deng, M.; Lin, P.; Wang, C. Human gait recognition based on deterministic learning and Kinect sensor. In Proceedings of the 2018 Chinese Control and Decision Conference (CCDC), Shenyang, China, 9–11 June 2018; pp. 1842–1847.

- Liao, R.; Cao, C.; Garcia, E.B.; Yu, S.; Huang, Y. Pose-Based Temporal-Spatial Network (PTSN) for Gait Recognition with Carrying and Clothing Variations. In Biometric Recognition, Proceedings of the 12th Chinese Conference, CCBR 2017, Shenzhen, China, 28–29 October 2017; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2017; Volume 10568, p. 10568.

- Liao, R.; Yu, S.; An, W.; Huang, Y. A Model-based Gait Recognition Method with Body Pose and Human Prior Knowledge. Pattern Recognit. 2019, 98, 107069.

- Zhe, C.; Simon, T.; Wei, S.E.; Sheikh, Y. Realtime Multi-person 2D Pose Estimation Using Part Affinity Fields. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 14 April 2017.

- Teepe, T.; Khan, A.; Gilg, J.; Herzog, F.; Hörmann, S.; Rigoll, G. Gaitgraph: Graph Convolutional Network for Skeleton-Based Gait Recognition. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 2314–2318.

- Liu, X.; You, Z.; He, Y.; Bi, S.; Wang, J. Symmetry-Driven hyper feature GCN for skeleton-based gait recognition. Pattern Recognit. J. Pattern Recognit. Soc. 2022, 125, 125.

- Khosla, P.; Teterwak, P.; Wang, C.; Sarna, A.; Tian, Y.; Isola, P.; Maschinot, A.; Liu, C.; Krishnan, D. Supervised Contrastive Learning. arXiv 2020, arXiv:2004.11362.

- Wang, C.; Liao, H.M.; Wu, Y.; Chen, P.; Hsieh, J.; Yeh, I. Cspnet: A new backbone that can enhance learning capability of cnn. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 20 November 2020; pp. 390–391.

- Huang, L.; Zhou, Y.; Wang, T.; Luo, J.; Liu, X. Delving into the Estimation Shift of Batch Normalisation in a Network. In Proceedings of the 2022 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 21 March 2022.

- Wu, Y.; He, K. Group Normalisation; Springer: Cham, Switzerland, 2018.

- Yu, S.; Tan, D.; Tan, T. A framework for evaluating the effect of view angle, clothing and carrying condition on gait recognition. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR'06), 20–24 August 2006.

| Block | Module |
|---|---|
| Block 0 | GroupNorm |
| Block 1 | Basic |
| | BottleneckCSP_XBN |
| | BottleneckCSP_XBN |
| Block 2 | Bottleneck |
| | BottleneckCSP_XBN |
| | Bottleneck |
| | BottleneckCSP_XBN |
| Block 3 | AvgPool2D |
| Block 0 | FCN |

| Configuration | Specification |
|---|---|
| System | Ubuntu 18.04.5 LTS |
| CPU | Intel Xeon(R) Gold 5218: 2.30 GHz, 16 cores, 32 threads |
| Memory | 62.6 GB |
| GPU | NVIDIA GeForce RTX 2080 Ti: 11264 MB, 14000 MHz |
| Disk | Jesis X760S 512 GB + TOSHIBA MG04ACA4 4.4 TB |
| OS type | 64-bit |
| Python | version 3.6.9 |
| NVIDIA driver | version 450.36.06 |
| CUDA | version 11.0 |
| cuDNN | version 8.0.2 |
| PyTorch | version 1.5.1 |
| OpenCV | version 4.2.0 |

Gallery: NM #1–4, views 0–180°.

| Probe | Model | 0° | 18° | 36° | 54° | 72° | 90° | 108° | 126° | 144° | 162° | 180° | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| NM #5–6 | GaitGraph [43] | 85.3 | 88.5 | 91.0 | 92.5 | 87.2 | 86.5 | 88.4 | 89.2 | 87.9 | 85.9 | 81.9 | 87.7 |
| | GaitGraph [43] with CSP, XBN (Ours) | 87.2 (↑1.9) | 91.0 (↑2.5) | 90.8 | 92.8 (↑0.3) | 91.5 (↑4.3) | 91.3 (↑4.8) | 90.2 (↑1.8) | 90.5 (↑1.3) | 89.7 (↑1.8) | 88.2 (↑2.3) | 86.4 (↑4.5) | 90.0 (↑2.3) |
| | GaitGraph [43] with CSP, XBN, image discontinuity (Ours) | 83.1 | 89.5 (↑1.0) | 89.6 | 89.5 | 89.4 (↑2.2) | 88.6 (↑2.1) | 88.2 | 88.6 | 90.5 (↑2.6) | 86.8 (↑0.9) | 86.0 (↑4.1) | 88.2 (↑0.5) |
| BG #1–2 | GaitGraph [43] | 75.8 | 76.7 | 75.9 | 76.1 | 71.4 | 73.9 | 78.0 | 74.7 | 75.4 | 75.4 | 69.2 | 74.8 |
| | GaitGraph [43] with CSP, XBN (Ours) | 77.5 (↑1.7) | 81.0 (↑4.3) | 79.6 (↑3.7) | 80.3 (↑4.2) | 78.4 (↑7.0) | 77.1 (↑3.2) | 77.7 | 78.3 (↑3.6) | 77.6 (↑2.2) | 78.4 (↑3.0) | 71.6 (↑2.4) | 77.9 (↑3.1) |
| | GaitGraph [43] with CSP, XBN, image discontinuity (Ours) | 74.3 | 77.9 (↑1.2) | 79.1 (↑3.2) | 81.4 (↑5.3) | 75.5 (↑4.1) | 77.5 (↑3.6) | 77.2 | 77.5 (↑2.8) | 77.4 (↑2.0) | 74.8 | 70.6 (↑1.4) | 76.6 (↑1.8) |
| CL #1–2 | GaitGraph [43] | 69.6 | 66.1 | 68.8 | 67.2 | 64.5 | 62.0 | 69.5 | 65.6 | 65.7 | 66.1 | 64.3 | 66.3 |
| | GaitGraph [43] with CSP, XBN (Ours) | 67.0 | 64.5 | 69.5 (↑0.7) | 69.6 (↑2.4) | 71.4 (↑6.9) | 70.0 (↑8.0) | 73.2 (↑3.7) | 69.6 (↑4.0) | 71.8 (↑6.1) | 74.4 (↑8.3) | 74.8 (↑10.5) | 70.5 (↑4.2) |
| | GaitGraph [43] with CSP, XBN, image discontinuity (Ours) | 63.6 | 69.0 (↑2.9) | 72.4 (↑3.6) | 69.6 (↑2.4) | 72.5 (↑8.0) | 73.1 (↑11.1) | 71.2 (↑1.7) | 70.6 (↑5.0) | 71.0 (↑5.3) | 68.2 (↑2.1) | 64.6 (↑0.3) | 69.6 (↑3.3) |

| Model | Probe NM | Probe BG | Probe CL | Mean |
|---|---|---|---|---|
| GaitGraph [43] | 87.7 | 74.8 | 66.3 | 76.3 |
| GaitGraph [43] with CSP | 88.3 | 76.7 | 68.9 | 78.0 |
| GaitGraph [43] with CSP and XBNBlock | 90.0 | 77.9 | 70.5 | 79.5 |
| GaitGraph [43] with image discontinuity | 73.9 | 62.0 | 54.1 | 63.3 |
| GaitGraph [43] with CSP and XBN, image discontinuity | 88.2 | 76.6 | 69.6 | 78.1 |

| Type | Model | Probe NM | Probe BG | Probe CL | Mean |
|---|---|---|---|---|---|
| appearance-based | GaitNet [30] | 91.6 | 85.7 | 58.9 | 78.7 |
| | GaitSet [31] | 95.0 | 87.2 | 70.4 | 84.2 |
| | GaitPart [32] | 96.2 | 91.5 | 78.7 | 88.8 |
| | 3DLocal [34] | 97.5 | 94.3 | 83.7 | 91.8 |
| model-based | PoseGait [40] | 60.5 | 39.6 | 29.8 | 43.3 |
| | GaitGraph [43] | 87.7 | 74.8 | 66.3 | 76.3 |
| | Ours | 90.0 | 77.9 | 70.5 | 79.5 |

| Model | Average Detection Time (ms) | Weight Size (MB) |
|---|---|---|
| GaitGraph [43] | 0.0996 | 4.1 |
| GaitGraph [43] with CSP | 0.0650 | 3.8 |
| GaitGraph [43] with CSP and XBNBlock | 0.0650 | 3.8 |
| GaitGraph [43] with CSP and XBN, image discontinuity | 0.0660 | 3.9 |

| Model | Probe 0° | Probe 45° | Probe 90° | Mean |
|---|---|---|---|---|
| GaitGraph [43] | 88.8 | 87.1 | 84.2 | 86.7 |
| GaitGraph [43] with CSP and XBNBlock (Ours) | 95.4 | 92.9 | 89.6 | 92.6 |
| GaitGraph [43] with CSP and XBN, image discontinuity (Ours) | 91.2 | 89.2 | 86.5 | 89.0 |

Gallery: NM #1–2.

| Probe | Model | View 36° | View 108° | View 162° | Mean |
|---|---|---|---|---|---|
| BG #1–2 | Ours | 89.2 | 87.9 | 85.8 | 87.6 |
| | Ours with image discontinuity | 85.4 | 83.8 | 82.9 | 84.0 |
| CL #1–2 | Ours | 78.7 | 76.2 | 75.8 | 76.9 |
| | Ours with image discontinuity | 75.4 | 73.7 | 72.9 | 74.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Han, K.; Li, X. Research Method of Discontinuous-Gait Image Recognition Based on Human Skeleton Keypoint Extraction. Sensors 2023, 23, 7274. https://doi.org/10.3390/s23167274
