Online Video Anomaly Detection

Abstract

1. Introduction

2. Common Datasets and Assessment Criteria

2.1. Common Single-Scene Anomaly Detection Datasets

- (1) UMN dataset. The UMN dataset [8] has 11 videos covering three scenes: a campus lawn, an indoor area and a square. It is a global abnormal behavior dataset.

- (2) UCSD Pedestrian dataset. The UCSD Pedestrian dataset [9] was captured on a university campus pavement. The current frame-level anomaly detection accuracies achieved on the UCSD Ped1 and UCSD Ped2 datasets are 97.4% and 97.8%, respectively. Although these accuracies are already high, the dataset remains one of the more popular benchmarks because it offers a reasonably large number and variety of anomalies.

- (3)

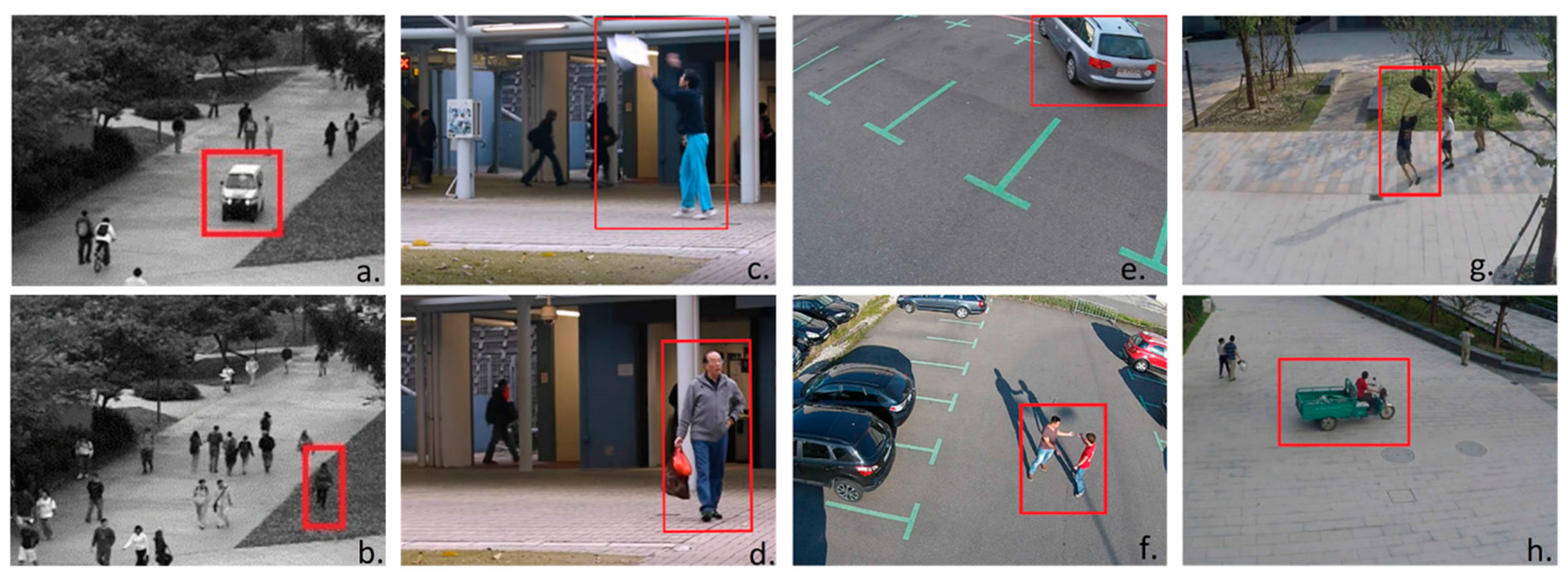

- CUHK Avenue dataset. The CUHK Avenue dataset [10] contains 16 training videos and 21 test videos with a total of 47 abnormal events, including throwing objects, loitering and running. The size of the people changes depending on the position and angle of the camera.

- (4) ShanghaiTech dataset. The ShanghaiTech dataset [11] contains 13 scenes with complex lighting conditions and camera angles, with 130 abnormal events and over 270,000 training frames. Pixel-level annotations of the abnormal events are also provided.

- (5) UCF-Crime dataset. The UCF-Crime dataset [12] is a large dataset of 128 h of video. It consists of 1900 continuous, unedited surveillance videos covering 13 real-life anomalous behaviors, namely abuse, arrest, arson, assault, road accidents, burglary, explosions, fighting, robbery, shooting, theft, shoplifting and vandalism.

- (6) DoTA dataset. The DoTA dataset [13] is the first traffic anomaly dataset to provide detailed spatio-temporal annotation of abnormal objects. It contains 4677 videos, and each video contains exactly one abnormal event. The anomalies involve seven common traffic participant categories, namely pedestrians, cars, trucks, buses, motorbikes, bicycles and riders.

2.2. Evaluation Criteria

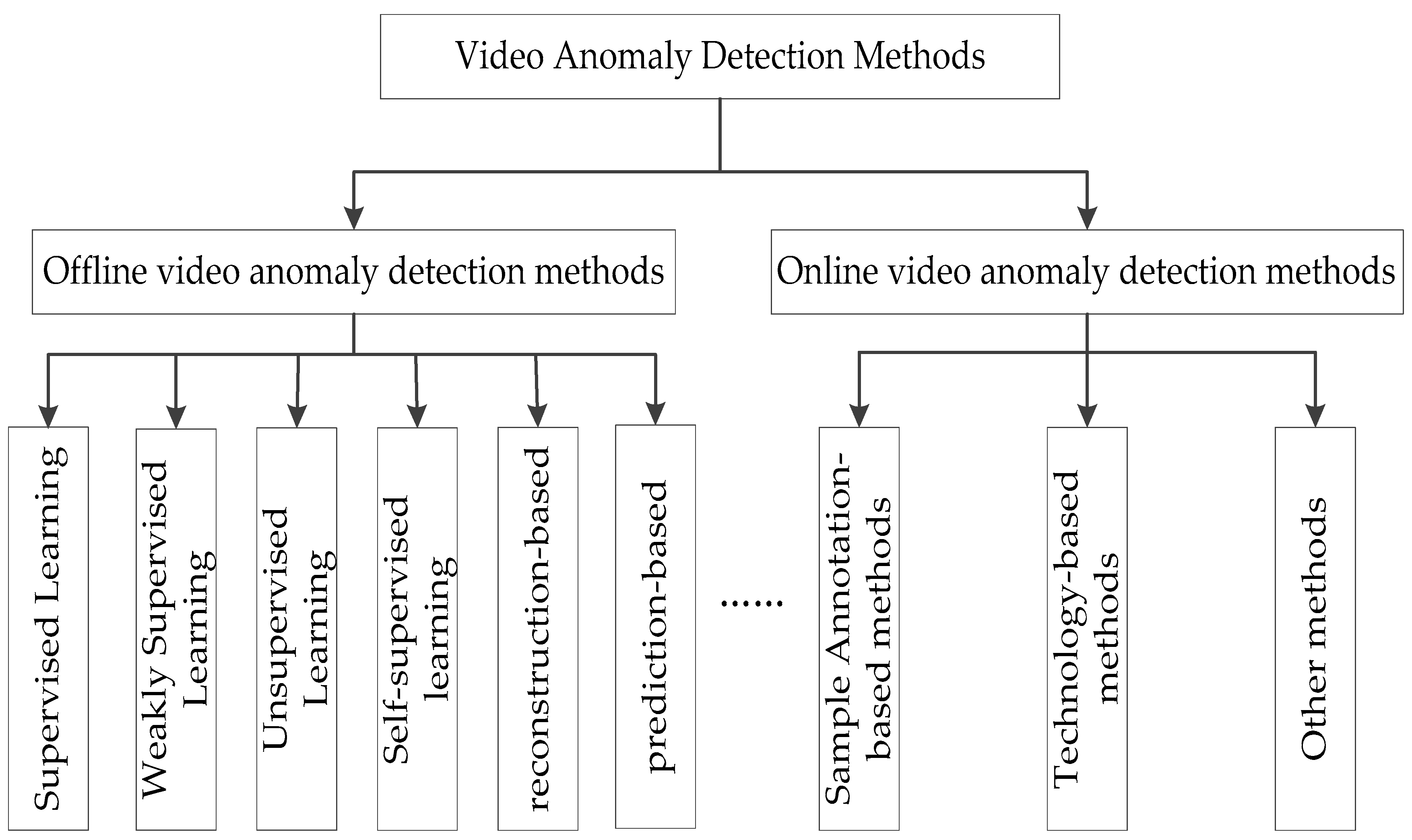

3. Offline Video Anomaly Detection Methods

4. Online Video Anomaly Detection Methods

4.1. Online Anomaly Detection Based on Sample Annotation

4.1.1. Online Anomaly Detection Based on Supervised Learning

4.1.2. Online Anomaly Detection Based on Unsupervised Learning

4.1.3. Online Anomaly Detection Based on Weakly Supervised Learning

| Reference | Method | Architecture | Remarks |
|---|---|---|---|
| Doshi et al. [35] | Supervised learning | GAN and YOLOv3 | A multi-objective neural anomaly detection framework was proposed. |
| Aboah et al. [36] | Supervised learning | YOLO and decision tree | The YOLO object detection framework was adopted, and the final anomalies were detected and analyzed using a decision tree. |
| Rossi et al. [37] | Supervised learning | Transformer and LSTM | The Video Swin Transformer model was used, and an LSTM module was introduced to model long- and short-term memory. |
| Luo et al. [38] | Supervised learning | Sparse-coding-inspired DNN | Spatially optimized, temporally coherent sparse coding and temporally stacked recurrent neural network auto-encoders were used. |
| Chaker et al. [39] | Unsupervised learning | Social network model | The detection, localization and global update of online abnormal behavior were realized. |
| Dang et al. [40] | Unsupervised learning | AlexNet and one-class SVM + Bayesian encoding | Deep learning features and Bayesian techniques were used to detect and locate environmental anomalies, and a path-planning method was proposed. |
| Zhao et al. [41] | Unsupervised learning | Dynamic sparse coding | Online sparse reconstruction methods and online reconfigurability were proposed to detect abnormal events in videos. |
| Yao et al. [13] | Unsupervised learning | Two-stream RNNs | A new dataset and a new evaluation metric, STAUC, were introduced for online anomaly detection. |
| Sultani et al. [12] | Unsupervised learning | Deep multiple-instance learning | A MIL algorithm with sparsity and smoothness constraints was proposed. |
| Sun et al. [43] | Unsupervised learning | Online growing neural gas | Anomaly detection in a changing data space was realized. |
| Nawaratne et al. [44] | Unsupervised learning | Incremental spatio-temporal learner | New abnormal and normal behaviors were updated and distinguished in real time for anomaly detection and localization in surveillance. |
| Monakhov et al. [45] | Unsupervised learning | GridHTM | A visual analysis method based on the extended HTM algorithm was studied, and the data were simplified using a segmentation technique. |
| Chriki et al. [46] | Unsupervised learning | One-class support vector machine | Four different features were extracted with GoogleNet, HOG, PCA-HOG and HOG3D to form four OCSVM models for automated abnormal event detection. |
| Bozcan et al. [47] | Unsupervised learning | GridNet | Anomaly detection that is insensitive to vision-related issues was achieved, and a novel loss function based on a grid representation was proposed. |
| Khaire et al. [48] | Weakly supervised learning | CNN-BiLSTM | The proposed CNN-BiLSTM auto-encoder framework implements semi-supervised anomaly detection. |
| Mozaffari et al. [49] | Weakly supervised learning | KNN | A framework was presented for multivariate, data-driven, sequential detection of anomalies in high-dimensional systems based on the availability of labeled data. |
| Majhi et al. [50] | Weakly supervised learning | Dissimilarity attention module | A dissimilarity attention module with local context information was introduced into anomaly detection for real-time applications. |
| Liu et al. [51] | Weakly supervised learning | TPP and OAL | Accurate segment-level pseudo-label generation and future semantic prediction were achieved through a two-stage “decouple and resolve” framework. |
| Huang et al. [52] | Weakly supervised learning | TTFA and SDFE | A transformer-style TTFA and a self-guided SDFE were proposed. |

4.2. Online Anomaly Detection Based on Technical Methods

4.2.1. Online Anomaly Detection Based on Deep Learning

4.2.2. Online Anomaly Detection Based on Transfer Learning

| Reference | Method | Architecture | Remarks |
|---|---|---|---|
| Ouyang et al. [53] | Deep learning | Randomly initialized multi-layer perceptron | An online optimization method and an incremental learner were proposed. |
| Ullah et al. [55] | Deep learning | Vision transformer | A vision transformer with a sequential learning model and an attention mechanism was used. |
| Doshi et al. [56] | Deep learning | Transfer learning | A semantic-embedding-based video anomaly detection method was proposed, and transfer learning was used across different surveillance scenarios. |
| Rendón-Segador et al. [57] | Deep learning | 3D DenseNet, multi-head self-attention mechanism and bidirectional convolutional LSTM | Violence in videos was detected by encoding relevant spatio-temporal features. |
| Ullah et al. [58] | Deep learning | CNN and LSTM | A surveillance scene dataset for indoor and outdoor environments was presented. |
| Kim et al. [59] | Deep learning | KCF and 3DResNet | An architecture that extracts pedestrian information in real time and detects abnormal behavior was used. |
| Mehmood et al. [60] | Deep learning | CNN | A lightweight framework that uses convolutional neural networks for training was proposed. |
| Lin et al. [61] | Deep learning | 3DCNN and C3DGAN | A 3D CNN was combined with a non-local mechanism, and synthetic data were converted into realistic video sequences through an adaptive recurrent 3D GAN. |
| Fang et al. [62] | Deep learning | CNN | Based on an improved YOLOv3 algorithm, detection was optimized using a frame-alternating two-thread method. |
| Direkoglu et al. [63] | Deep learning | MII and CNN | A new concept, the MII, was introduced and combined with a CNN for global abnormal crowd behavior detection. |
| Doshi et al. [64] | Transfer learning | KNN and K-means clustering | A framework was proposed to determine the time at which anomalies occur. |
| Doshi et al. [65] | Transfer learning | Any-shot learning | A new framework with continual learning capability was proposed for statistical any-shot sequential anomaly detection. |
| Doshi et al. [66] | Transfer learning | Continual and few-shot learning | A continual learning algorithm was proposed. |
| Doshi et al. [67] | Transfer learning | Multi-task learning | A multi-task learning framework was proposed, and a deep metric learning method based on semantic embedding was introduced. |
| Doshi et al. [68] | Transfer learning | MOVAD | An interpretable transfer learning feature extractor was combined with a novel kNN-RNN sequential anomaly detector. |
| Kale et al. [69] | Transfer learning | ResNet and DML | Transfer-learning-based ResNet object tracking and DML methods were proposed. |

4.3. Other Methods

4.4. Comparison

4.4.1. Comparison of Advantages and Disadvantages of Different Methods

4.4.2. Performance Comparison of Methods

5. Summary and Outlook

- (1) The models depend strongly on the task scenario and are difficult to transfer directly. They perform well only on the datasets described in their papers, and strict requirements on data formats (input size, color channels, text format, etc.) make the feature structure of the data difficult to change, so the trained feature extractors cannot easily be transferred to other tasks. Although these problems are mitigated by the “pre-training + fine-tuning” method, the transferability of the models needs to be further enhanced. Therefore, improving the transfer capability of models in online video anomaly detection is a problem worth investigating.

- (2) A real-time anomaly detection framework should be built. Although most current methods achieve high accuracy in anomaly detection, they cannot be deployed in real time. One of the key reasons is that the time cost of extracting effective features from video is too high. In practical applications, detecting anomalies promptly and accurately can effectively reduce the losses caused by abnormal events. Therefore, new methods for efficient video data preprocessing and feature extraction are needed to overcome the processing speed limitation, so that more systems can be used in real-time detection scenarios.

- (3) A sustainable learning architecture for anomaly detection should be built. Under prolonged monitoring, abnormal events are diverse and variable, and events previously learned as abnormal may become normal, making it difficult to identify abnormal behavior without expert interaction. Existing feature learning models trained offline cannot adapt to such changes. Therefore, more online learning models with automatic update capability are needed to continuously update the anomaly detection knowledge base and improve detection accuracy through continual learning.

- (4) The ability to locate the occurrence time of abnormal events should be enhanced. In video anomaly detection, it is necessary not only to detect and locate the abnormal event itself, but also to know when the anomaly occurs. Temporal modeling and event analysis methods can be explored further to better understand the relationships between events in videos and how they develop. Candidate methods include spatio-temporal attention mechanisms, time-series models and event graphs.

- (5) Online and offline learning should be bridged with transformers. In practice, some decision tasks are not achievable outside of an online framework, and some environments are open-ended, meaning that strategies must be constantly adapted to handle tasks not seen during online interactions. Therefore, we believe that bridging online and offline learning is necessary. However, most current research on decision transformers has focused on offline learning frameworks. As with offline reinforcement learning algorithms, distribution shift still arises during online fine-tuning; hence, a specially designed decision transformer is needed to address the related issues. How to train an online decision transformer from scratch is a problem worth investigating.

- (6) A more appropriate online anomaly evaluation system should be found to make the evaluation of abnormal behavior more objective and comprehensive. The current evaluation metrics for video anomaly detection are mainly the area under the ROC curve (AUC) and the equal error rate (EER). However, because the data in video anomaly detection datasets are unbalanced and heterogeneous, evaluating detection results with the EER leads to large errors in practical application scenarios. Frame-level and pixel-level AUC are also not very representative of the overall performance of a model. Therefore, it is important to establish a comprehensive and robust evaluation system for online video anomaly detection.
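To make the two metrics named in item (6) concrete, the following is a minimal sketch of how a frame-level AUC and EER could be computed. It assumes each frame has an anomaly score (higher means more abnormal) and a binary ground-truth label (1 for abnormal, 0 for normal). The function names `roc_points`, `auc` and `eer` and the toy scores are hypothetical illustrations, not taken from any of the cited methods or datasets.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (false positive rate, true positive rate)


def roc_points(scores: Sequence[float], labels: Sequence[int]) -> List[Point]:
    """Sweep a threshold over the scores and return the ROC curve points.

    Assumption: labels use 1 for abnormal frames and 0 for normal frames.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one normal and one abnormal frame")

    # Visit frames from the most to the least anomalous score.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    points: List[Point] = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(order):
        current = scores[order[i]]
        # Frames with tied scores cross the threshold together.
        while i < len(order) and scores[order[i]] == current:
            if labels[order[i]] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc(points: Sequence[Point]) -> float:
    """Area under the ROC curve using the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


def eer(points: Sequence[Point]) -> float:
    """Equal error rate: where the false positive rate equals the miss rate.

    The crossing is located by linear interpolation between ROC points.
    """
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        d0 = x0 - (1.0 - y0)  # FPR minus miss rate at the segment start
        d1 = x1 - (1.0 - y1)  # FPR minus miss rate at the segment end
        if d0 <= 0.0 <= d1:
            t = 0.0 if d1 == d0 else -d0 / (d1 - d0)
            return x0 + t * (x1 - x0)
    raise RuntimeError("ROC curve does not cross the equal-error line")


if __name__ == "__main__":
    # Toy example: 3 abnormal frames among 8 (made-up scores).
    toy_scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
    toy_labels = [1, 1, 0, 1, 0, 0, 0, 0]
    curve = roc_points(toy_scores, toy_labels)
    print("AUC:", auc(curve))
    print("EER:", eer(curve))
```

Both values are derived from the same ROC curve. The EER reports a single operating point on that curve, while the AUC averages over all thresholds.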

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Nayak, R.; Pati, U.C.; Das, S.K. A comprehensive review on deep learning-based methods for video anomaly detection. Image Vis. Comput. 2021, 106, 104078.

- Wang, S.-Q.; Hu, J.-T.; Yu, G.; Zhu, E.; Cai, Z.-P. A survey of video abnormal event detection. Comput. Eng. Sci. 2020, 42, 1393–1405.

- Saligrama, V.; Konrad, J.; Jodoin, P. Video Anomaly Identification. IEEE Signal Process. Mag. 2010, 27, 18–33.

- Zhou, P.; Ding, Q.; Luo, H.; Xinglin, H. Anomaly Detection and Location in Crowded Surveillance Videos. Acta Opt. Sin. 2018, 38, 0815007.

- Patrikar, D.R.; Parate, M.R. Anomaly detection using edge computing in video surveillance system. Int. J. Multimed. Inf. Retr. 2022, 11, 85–110.

- Lin, H.; Deng, J.D.; Woodford, B.J.; Shahi, A. Online weighted clustering for real-time abnormal event detection in video surveillance. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 1 October 2016; pp. 536–540.

- Mahmood, S.A.; Abid, A.M.; Lafta, S.H. Anomaly event detection and localization of video clips using global and local outliers. Indones. J. Electr. Eng. Comput. Sci. 2021, 24, 1063.

- Bird, N.; Atev, S.; Caramelli, N.; Martin, R.; Masoud, O.; Papanikolopoulos, N. Real time, Online Detection of Abandoned Objects In Public Areas. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation, Orlando, FL, USA, 15–19 May 2006; pp. 3775–3780.

- Ramachandra, B.; Jones, M. Street Scene: A new dataset and evaluation protocol for video anomaly detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 14–19 June 2020; pp. 2569–2578.

- Mahadevan, V.; Li, W.; Bhalodia, V. Anomaly Detection in Crowded Scenes. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 1975–1981.

- Zhang, Y.; Zhou, D.; Chen, S.; Gao, S.; Ma, Y. Single-Image Crowd Counting Via Multi-Column Convolutional Neural Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June 2016; pp. 589–597.

- Sultani, W.; Chen, C.; Shah, M. Real-World Anomaly Detection in Surveillance Videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6479–6488.

- Yao, Y.; Wang, X.; Xu, M.; Pu, Z.; Wang, Y.; Atkins, E.; Crandall, D.J. DoTA: Unsupervised Detection of Traffic Anomaly in Driving Videos. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 444–459.

- Del Giorno, A.; Bagnell, J.A.; Hebert, M. A Discriminative Framework for Anomaly Detection in Large Videos. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 334–349.

- Tudor Ionescu, R.; Smeureanu, S.; Alexe, B.; Popescu, M. Unmasking the Abnormal Events in Video. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 14–17 October 2017; pp. 2895–2903.

- Zaheer, M.Z.; Mahmood, A.; Khan, M.H.; Segu, M.; Yu, F.; Lee, S.I. Generative Cooperative Learning for Unsupervised Video Anomaly Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14744–14754.

- Cho, M.; Kim, T.; Kim, W.J.; Cho, S.; Lee, S. Unsupervised video anomaly detection via normalizing flows with implicit latent features. Pattern Recognit. 2022, 129, 108703.

- Sun, C.; Jia, Y.; Song, H.; Wu, Y. Adversarial 3d convolutional auto-encoder for abnormal event detection in videos. IEEE Trans. Multimed. 2020, 23, 3292–3305.

- Li, G.; Cai, G.; Zeng, X.; Zhao, R. Scale-Aware Spatio-Temporal Relation Learning for Video Anomaly Detection. In Proceedings of the European Conference on Computer Vision; Springer: Cham, Switzerland, 2022; pp. 333–350.

- Purwanto, D.; Chen, Y.T.; Fang, W.H. Dance with Self-Attention: A New Look of Conditional Random Fields on Anomaly Detection In Videos. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 173–183.

- Wu, J.C.; Hsieh, H.Y.; Chen, D.J.; Fuh, F.S.; Liu, T.L. Self-supervised Sparse Representation for Video Anomaly Detection. In European Conference on Computer Vision 23 October 2022; Springer Nature: Cham, Switzerland, 2022.

- Wang, G.; Wang, Y.; Qin, J.; Zhang, D.; Bao, X.; Huang, D. Video Anomaly Detection by Solving Decoupled Spatio-Temporal Jigsaw Puzzles. In European Conference on Computer Vision, 23 October 2022; Springer: Cham, Switzerland, 2022; pp. 494–511.

- Li, C.L.; Sohn, K.; Yoon, J. Cutpaste: Self-Supervised Learning for Anomaly Detection And Localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 13–19 June 2021; pp. 9664–9674.

- Zhong, Y.; Chen, X.; Jiang, J.; Ren, F. A cascade reconstruction model with generalization ability evaluation for anomaly detection in videos. Pattern Recognit. 2022, 122, 108336.

- Li, C.; Li, H.; Zhang, G. Future frame prediction based on generative assistant discriminative network for anomaly detection. Appl. Intell. 2023, 53, 542–559.

- Chang, Y.; Tu, Z.; Xie, W.; Luo, B.; Zhang, S.; Sui, H.; Yuan, J. Video anomaly detection with spatio-temporal dissociation. Pattern Recognit. 2022, 122, 108213.

- Chen, D.; Yue, L.; Chang, X. NM-GAN: Noise-modulated generative adversarial network for video anomaly detection. Pattern Recognit. 2021, 116, 107969.

- Li, H.; Sun, X.; Li, C.; Shen, X.; Chen, J.; Chen, J.; Xie, Z. MPAT: Multi-path attention temporal method for video anomaly detection. Multimed. Tools Appl. 2023, 82, 12557–12575.

- Georgescu, M.I.; Ionescu, R.T.; Khan, F.S. A background-agnostic framework with adversarial training for abnormal event detection in video. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 4505–4523.

- Chen, C.; Xie, Y.; Lin, S.; Yao, A.; Jiang, G.; Zhang, W.; Qu, Y.; Qiao, R.; Ren, B.; Ma, L. Comprehensive Regularization in a Bi-directional Predictive Network for Video Anomaly Detection. In Proceedings of the American Association for Artificial Intelligence, Palo Alto, CA, USA, 22 February–1 March 2022; pp. 1–9.

- Cai, R.; Zhang, H.; Liu, W.; Gao, S.; Hao, Z. Appearance-Motion Memory Consistency Network for Video Anomaly Detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Palo Alto, CA, USA, 2–9 February 2021; Volume 35, pp. 938–946.

- Hao, Y.; Li, J.; Wang, N.; Wang, X.; Gao, G. Spatiotemporal consistency-enhanced network for video anomaly detection. Pattern Recognit. 2022, 121, 108232.

- Slavic, G.; Baydoun, M.; Campo, D.; Marcenaro, L.; Regazzoni, C. Multilevel anomaly detection through variational auto-encoders and bayesian models for self-aware embodied agents. IEEE Trans. Multimed. 2021, 24, 1399–1414.

- Behera, S.; Dogra, D.P.; Bandyopadhyay, M.K.; Roy, P.P. Understanding crowd flow patterns using active-Langevin model. Pattern Recognit. 2021, 119, 108037.

- Doshi, K.; Yilmaz, Y. Online anomaly detection in surveillance videos with asymptotic bound on false alarm rate. Pattern Recognit. 2021, 114, 107865.

- Aboah, A. A vision-based system for traffic anomaly detection using deep learning and decision trees. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 4207–4212.

- Rossi, L.; Bernuzzi, V.; Fontanini, T.; Bertozzi, M.; Prati, A. Memory-augmented Online Video Anomaly Detection. arXiv 2023, arXiv:2302.10719.

- Luo, W.; Liu, W.; Lian, D.; Tang, J.; Duan, L.; Peng, X.; Gao, S. Video anomaly detection with sparse coding inspired deep neural networks. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1070–1084.

- Chaker, R.; Aghbari, Z.A.; Junejo, I.N. Social network model for crowd anomaly detection and localization. Pattern Recognit. 2017, 61, 266–281.

- Dang, T.; Khattak, S.; Papachristos, C.; Alexis, K. Anomaly Detection and Cognizant Path Planning for Surveillance Operations using Aerial Robots. In Proceedings of the International Conference on Unmanned Aircraft Systems, Atlanta, GA, USA, 11–14 June 2019.

- Zhao, B.; Li, F.F.; Xing, E.P. Online Detection of Unusual Events In Videos Via Dynamic Sparse Coding. In Proceedings of the Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011.

- Bao, W.; Yu, Q.; Kong, Y. Uncertainty-Based Traffic Accident Anticipation with Spatio-Temporal Relational Learning. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12 October 2020; pp. 2682–2690.

- Sun, Q.; Liu, H.; Harada, T. Online growing neural gas for anomaly detection in changing surveillance scenes. Pattern Recognit. J. Pattern Recognit. Soc. 2017, 64, 187–201.

- Nawaratne, R.; Alahakoon, D.; De Silva, D.; Yu, X. Spatiotemporal anomaly detection using deep learning for real-time video surveillance. IEEE Trans. Ind. Inform. 2019, 16, 393–402.

- Monakhov, V.; Thambawita, V.; Halvorsen, P.; Riegler, M.A. GridHTM: Grid-Based Hierarchical Temporal Memory for Anomaly Detection in Videos. Sensors 2023, 23, 2087.

- Chriki, A.; Touati, H.; Snoussi, H.; Kamoun, F. Deep learning and handcrafted features for one-class anomaly detection in UAV video. Multimed. Tools Appl. 2021, 80, 2599–2620.

- Bozcan, I.; Le Fevre, J.; Pham, H.X.; Kayacan, E. Gridnet: Image-agnostic conditional anomaly detection for indoor surveillance. IEEE Robot. Autom. Lett. 2021, 6, 1638–1645.

- Khaire, P.; Kumar, P. A semi-supervised deep learning based video anomaly detection framework using RGB-D for surveillance of real-world critical environments. Forensic Sci. Int. Digit. Investig. 2022, 40, 301346.

- Mozaffari, M.; Doshi, K.; Yilmaz, Y. Online multivariate anomaly detection and localization for high-dimensional settings. Sensors 2022, 22, 8264.

- Majhi, S.; Das, S.; Brémond, F. DAM: Dissimilarity Attention Module for Weakly-supervised Video Anomaly Detection. In Proceedings of the 17th IEEE International Conference on Advanced Video and Signal Based Surveillance, Washington, DC, USA, 16–19 November 2021; pp. 1–8.

- Liu, T.; Zhang, C.; Lam, K.M.; Kong, J. Decouple and Resolve: Transformer-Based Models for Online Anomaly Detection from Weakly Labeled Videos. IEEE Trans. Inf. Forensics Secur. 2022, 18, 15–28.

- Huang, C.; Liu, C.; Wen, J.; Wu, L.; Xu, Y.; Jiang, Q.; Wang, Y. Weakly Supervised Video Anomaly Detection via Self-Guided Temporal Discriminative Transformer. IEEE Trans. Cybern. 2022, 29, 1–14.

- Ouyang, Y.; Shen, G.; Sanchez, V. Look at adjacent frames: Video anomaly detection without offline training. In Proceedings of the Computer Vision-ECCV 2022 Workshops, Tel Aviv, Israel, 23–27 October 2022; Part V. Springer Nature: Cham, Switzerland, 2023; pp. 642–658.

- Tariq, S.; Farooq, H.; Jaleel, A.; Wasif, S.M. Anomaly detection with particle filtering for online video surveillance. IEEE Access 2021, 9, 19457–19468.

- Ullah, W.; Hussain, T.; Baik, S.W. Vision transformer attention with multi-reservoir echo state network for anomaly recognition. Inf. Process. Manag. 2023, 60, 103289.

- Doshi, K.; Yilmaz, Y. Towards Interpretable Video Anomaly Detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 2655–2664.

- Rendón-Segador, F.J.; Álvarez-García, J.A.; Enríquez, F.; Deniz, O. Violencenet: Dense multi-head self-attention with bidirectional convolutional lstm for detecting violence. Electronics 2021, 10, 1601.

- Ullah, F.U.M.; Obaidat, M.S.; Muhammad, K.; Ullah, A.; Baik, S.W.; Cuzzolin, F.; Rodrigues, J.J.P.C. An intelligent system for complex violence pattern analysis and detection. Int. J. Intell. Syst. 2022, 37, 10400–10422.

- Kim, D.; Kim, H.; Mok, Y.; Paik, J. Real-time surveillance system for analyzing abnormal behavior of pedestrians. Appl. Sci. 2021, 11, 6153.

- Mehmood, A. LightAnomalyNet: A Lightweight Framework for Efficient Abnormal Behavior Detection. Sensors 2021, 21, 8501.

- Lin, W.; Gao, J.; Wang, Q.; Liu, X. Learning to detect anomaly events in crowd scenes from synthetic data. Neurocomputing 2021, 436, 248–259.

- Fang, M.; Chen, Z.; Przystupa, K.; Li, T.; Majka, M.; Kochan, O. Examination of abnormal behavior detection based on improved YOLOv3. Electronics 2021, 10, 197.

- Direkoglu, C. Abnormal crowd behavior detection using motion information images and convolutional neural networks. IEEE Access 2020, 8, 80408–80416.

- Doshi, K.; Yilmaz, Y. Fast Unsupervised Anomaly Detection in Traffic Videos. In Proceedings of the Conference on Computer Vision and Pattern Recognition Workshops IEEE, Virtual, 14–19 June 2020.

- Doshi, K.; Yilmaz, Y. Any-shot Sequential Anomaly Detection in Surveillance Videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Virtual, 14–19 June 2020; pp. 934–935.

- Doshi, K.; Yilmaz, Y. Continual Learning for Anomaly Detection In Surveillance Videos. In Proceedings of the IEEE/CVF Conference on Computer Vision And Pattern Recognition Workshops, Virtual, 14–19 June 2020; pp. 254–255.

- Doshi, K.; Yilmaz, Y. Multi-Task Learning for Video Surveillance with Limited Data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 3889–3899.

- Doshi, K.; Yilmaz, Y. A Modular and Unified Framework for Detecting and Localizing Video Anomalies. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 3982–3991.

- Kale, S.; Shriram, R. Suspicious activity detection using transfer learning based resnet tracking from surveillance videos. In Proceedings of the 12th International Conference on Soft Computing and Pattern Recognition, online, India, 15–18 December 2020; Springer International Publishing: Berlin/Heidelberg, Germany, 2021; pp. 208–220.

- Li, N.; Wu, X.; Guo, H.; Xu, D.; Ou, Y.; Chen, Y. Anomaly detection in video surveillance via gaussian process. Int. J. Pattern Recognit. Artif. Intell. 2015, 29, 1555011.

- Li, N.; Chang, F.; Liu, C. Spatial-temporal cascade autoencoder for video anomaly detection in crowded scenes. IEEE Trans. Multimed. 2020, 23, 203–215.

- Cheoi, K.J. Temporal saliency-based suspicious behavior pattern detection. Appl. Sci. 2020, 10, 1020.

- Leyva, R.; Sanchez, V.; Li, C.T. Video anomaly detection with compact feature sets for online performance. IEEE Trans. Image Process. 2017, 26, 3463–3478.

- Leyva, R.; Sanchez, V.; Li, C.T. Abnormal Event Detection in Videos Using Binary Features. In Proceedings of the 40th International Conference on Telecommunications and Signal Processing, Barcelona, Spain, 5–7 July 2017; pp. 621–625.

- Leyva, R.; Sanchez, V.; Li, C.T. Fast Detection of Abnormal Events In Videos With Binary Features. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Calgary, AB, Canada, 15–20 April 2018; pp. 1318–1322.

- Geng, Y.; Du, J.; Liang, M. Abnormal event detection in tourism video based on salient spatio-temporal features and sparse combination learning. World Wide Web 2019, 22, 689–715.

- Feng, J.; Zhang, C.; Hao, P. Online Anomaly Detection in Videos by Clustering Dynamic Exemplars. In Proceedings of the 19th IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 3097–3100.

- Pennisi, A.; Bloisi, D.D.; Iocchi, L. Online real-time crowd behavior detection in video sequences. Comput. Vis. Image Underst. 2016, 144, 166–176.

- Li, S.; Cheng, Y.; Zhao, L.; Wang, Y. Anomaly detection with multi-scale pyramid grid templates. Multimed. Tools Appl. 2023, 30, 1–19.

| Dataset | Labeling | Resolution | Anomaly Types | Duration |
|---|---|---|---|---|
| UMN | Frame-Level | 320 × 240 | Escape, panic | 27 min |
| UCSD Ped1 | Pixel-Level and Frame-Level | 238 × 158 | Cycling, small vehicles | 10 min |
| UCSD Ped2 | Pixel-Level and Frame-Level | 240 × 360 | Cycling, small vehicles | 10 min |
| CUHK Avenue | Frame-Level | 640 × 360 | Running, throwing objects, or walking in the wrong direction | 30 min |
| ShanghaiTech | Frame-Level | 856 × 480 | Cycling, pushing prams, climbing over railings, running | 3.01 h |
| UCF-Crime | Frame-Level | 320 × 240 | Abuse, arrest, arson, assault, road accidents, burglary, explosions, fighting, robbery, shooting, theft, shoplifting and vandalism | 128 h |
| DoTA | Frame-Level | 1280 × 720 | Pedestrians, cars, trucks, buses, motorbikes, bicycles and riders | 35 min |

| Reference | Method | Architecture | Remarks |
|---|---|---|---|
| Li et al. [70] | Other methods | Online clustering algorithm | An online clustering algorithm was used to construct the basic behavior model. |
| Li et al. [71] | Other methods | ST-CAAE | A cuboid-patch-based spatio-temporal cascading auto-encoder (ST-CAAE) was proposed to improve the speed of anomaly detection in the next stage. |
| Cheoi et al. [72] | Other methods | Optical flow and gradient features | A method for automatic detection of suspicious behavior using CCTV video streams was investigated. |
| Leyva et al. [73] | Other methods | Foreground occupancy and optical flow functions | The joint response of the local spatio-temporal neighborhood model was considered to improve the detection accuracy. |
| Leyva et al. [74] | Other methods | Binary features | Binary features were introduced to detect abnormal events in videos. |
| Leyva et al. [75] | Other methods | Binary features | Binary features were used to encode motion information and for low-complexity probabilistic model detection. |
| Geng et al. [76] | Other methods | Sparse ensemble learning algorithms | Real-time abnormal event detection was realized by a sparse combination learning algorithm. |
| Feng et al. [77] | Other methods | Incremental learning machine | A hierarchical event model for online anomaly detection in surveillance video was proposed. |
| Pennisi et al. [78] | Other methods | Visual feature extraction and image segmentation | A statistical analysis method combining feature detection and image segmentation was proposed. |
| Li et al. [79] | Other methods | Particle filtering | A new particle prediction model and a weighted likelihood model were proposed. |

| Method Categories | Method | Basis of Judgement | Advantages | Disadvantages |
|---|---|---|---|---|
| Methods based on sample annotation | Supervised learning | The optimal model is trained by training samples and applied to new data and output results so that the model has predictive ability. | Effectively use the information of data annotation to improve the prediction performance of the model. | Exception types are more complex, with poor performance and longer latency in complex scenarios. |
| Methods based on sample annotation | Unsupervised learning | The unlabeled training dataset needs to be analyzed to understand the statistical regularities between samples. | It reduces false alarms and missed detections caused by model threshold aging and avoids concept drift in unstable environments. | Lack of ability to automatically update and distinguish anomalies online based on scene changes. |
| Methods based on sample annotation | Weakly supervised learning | Incomplete, inexact or imprecise labeling information is called weak supervision and is widely used in label learning. | Real-time online detection of anomalies without additional buffer time. | The single-stage framework is not suitable for solving multi-stage problems and is computationally expensive. |
| Methods based on technical approach | Deep learning | A deep neural network is constructed, and a large number of sample data are used as input to obtain the model and learn its internal laws and representation levels. | Good portability is directly proportional to the amount of data, and deep learning is highly data-dependent. | There are challenges and high computational complexity in dealing with online learning, noise and concept drift. |
| Methods based on technical approach | Transfer learning | Using the similarity between data, tasks or models, a model that has learned in an old domain is applied to a new domain to solve a new problem. | The computational complexity of the training and detection phases can be significantly reduced. | The combination with the model has certain limitations. |
| Other methods | Optical flow features | When the object is moving, the brightness pattern of its corresponding point on the image is also moving. | Optical flow expresses the information of image changes and object motion, which is suitable for detecting abnormal behavior activities in videos. | When changes in optical flow are not obvious, abnormal behavior cannot be accurately detected. |
| Other methods | Binary features | A number of point pairs are randomly selected around a feature point, and their gray values are combined into a binary string, which is used as the feature descriptor of the feature point. | Frame processing time can be reduced, greatly increasing the speed of computing. | Some important feature information can be lost, resulting in a loss of accuracy. |
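The binary-feature entry in the table above describes comparing the gray values of randomly chosen point pairs around a feature point and packing the outcomes into a binary string. A minimal sketch of that idea follows, in the spirit of a BRIEF-style descriptor. It is not the implementation used by any of the surveyed methods: the function names, the patch radius, the number of pairs and the comparison rule (`first < second`) are all illustrative assumptions.

```python
import numpy as np


def sample_point_pairs(num_pairs, patch_radius, seed=0):
    """Draw random (d_row1, d_col1, d_row2, d_col2) offsets inside a square patch.

    Hypothetical helper: the sampling distribution is an assumption.
    """
    rng = np.random.default_rng(seed)
    return rng.integers(-patch_radius, patch_radius + 1, size=(num_pairs, 4))


def binary_descriptor(gray, keypoint, pairs):
    """Return one bit per point pair: 1 if the first gray value is smaller.

    The keypoint must lie at least `patch_radius` pixels from the image border.
    """
    row, col = keypoint
    first = gray[row + pairs[:, 0], col + pairs[:, 1]]
    second = gray[row + pairs[:, 2], col + pairs[:, 3]]
    return (first < second).astype(np.uint8)


def hamming_distance(a, b):
    """Number of differing bits between two binary descriptors."""
    return int(np.count_nonzero(a != b))


# Usage on a synthetic image whose pixel values are all distinct.
gray = np.arange(64 * 64, dtype=np.int32).reshape(64, 64)
pairs = sample_point_pairs(num_pairs=128, patch_radius=8)
descriptor = binary_descriptor(gray, (32, 32), pairs)
```

Because descriptors are compared with a Hamming distance rather than a floating-point norm, matching is cheap, which is consistent with the speed advantage listed in the table; the coarse one-bit comparison is also where information can be lost.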

Frame-level AUC/EER (%) by dataset:

| Author/Year | Model Structures | UCSD PED1 | UCSD PED2 | ShanghaiTech | CUHK Avenue | UCF-Crime | UMN | DOTA |
|---|---|---|---|---|---|---|---|---|
| Pennisi [78]/2016 | Statistic Analysis | -/- | -/- | -/- | -/- | -/- | 95.0/- | -/- |
| Chaker [39]/2017 | SNN | -/- | 87.9/- | -/- | -/- | -/- | -/- | -/- |
| Sun [43]/2017 | Online GNG | 93.75/- | 94.09/- | -/- | -/- | -/- | -/- | -/- |
| Leyva [75]/2017 | Binary Features | -/- | -/- | -/- | -/- | -/- | 88.3/- | -/- |
| Sultani [12]/2018 | MIL | -/- | -/- | -/- | 75.41/- | -/- | -/- | -/- |
| Leyva [76]/2018 | Binary Features | -/48.1 | -/38.4 | -/- | -/- | -/- | -/- | -/- |
| Doshi [65]/2020 | Continual Learning | -/- | 97.8/- | 71.62/- | 86.4/- | -/- | -/- | -/- |
| Li [71]/2020 | ST-CAAE | -/- | 87.1/- | -/- | -/- | -/- | -/- | -/- |
| Doshi [35]/2021 | GAN | -/- | 97.2/- | 70.9/- | -/- | -/- | -/- | -/- |
| Majhi [50]/2021 | Cov-DAM | -/- | -/- | 88.22/- | -/- | 82.67/- | -/- | -/- |
| Majhi [50]/2021 | Dist-DAM | -/- | -/- | 88.86/- | -/- | 82.57/- | -/- | -/- |
| Huang [52]/2022 | TTFA and SDFE | -/- | -/- | 98.06/- | -/- | 84.04/- | -/- | -/- |
| Nawaratne [44]/2022 | ISTL | 75.2/29.8 | 91.1/8.9 | -/- | 79.8/29.2 | -/- | -/- | -/- |
| Liu [51]/2022 | Hybrid Conv-Transformer | -/- | -/- | -/- | -/- | 85.18/- | -/- | -/- |
| Doshi [67]/2022 | DNN and Continual Learning | -/- | 95.6/- | 70.12/- | 86.4/- | -/- | -/- | -/- |
| Doshi [68]/2022 | Continual Learning | -/- | 97.2/- | 73.62/- | 88.7/- | -/- | -/- | -/- |
| Rossi [37]/2023 | VST | -/- | -/- | -/- | 82.11/- | -/- | -/- | 82.1/- |
| Yao [13]/2023 | FOL-Ensemble | -/- | -/- | -/- | -/- | -/- | -/- | 73.0/- |
| Ouyang [53]/2023 | MLP | -/- | 96.5/- | 83.1/- | 90.2/- | -/- | -/- | -/- |
| Ullah [55]/2023 | 3D-CNN | -/- | -/- | -/- | -/- | 51.0/- | -/- | -/- |
| Doshi [56]/2023 | Continual Learning | -/- | -/- | 68.9/- | 79.0/- | -/- | -/- | -/- |
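The results table above reports frame-level AUC and EER. As a rough illustration of how such figures are typically obtained from per-frame anomaly scores and binary ground-truth labels, the sketch below sweeps a threshold over the scores to build an ROC curve, integrates it with the trapezoidal rule for the AUC, and takes the ROC point where the false positive rate and false negative rate are closest as an approximate EER. The function names and the nearest-point EER approximation are assumptions made here for illustration; individual papers may interpolate or aggregate across videos differently.

```python
import numpy as np


def roc_curve_points(labels, scores):
    """Return (fpr, tpr) arrays obtained by thresholding at each distinct score."""
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    order = np.argsort(-scores, kind="stable")  # highest anomaly score first
    labels, scores = labels[order], scores[order]
    # Last index of each run of equal scores, so tied frames share one threshold.
    cut = np.r_[np.nonzero(np.diff(scores))[0], labels.size - 1]
    true_pos = np.cumsum(labels)[cut]
    false_pos = np.cumsum(~labels)[cut]
    tpr = np.r_[0.0, true_pos / labels.sum()]
    fpr = np.r_[0.0, false_pos / (~labels).sum()]
    return fpr, tpr


def frame_level_auc(labels, scores):
    """Area under the ROC curve via the trapezoidal rule."""
    fpr, tpr = roc_curve_points(labels, scores)
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))


def frame_level_eer(labels, scores):
    """Approximate equal error rate: ROC point where FPR and FNR are closest."""
    fpr, tpr = roc_curve_points(labels, scores)
    fnr = 1.0 - tpr
    idx = int(np.argmin(np.abs(fpr - fnr)))
    return float((fpr[idx] + fnr[idx]) / 2.0)


# Toy example: label 1 marks an anomalous frame, scores are made up.
toy_labels = [0, 0, 1, 1]
toy_scores = [0.1, 0.4, 0.35, 0.8]
toy_auc = frame_level_auc(toy_labels, toy_scores)
toy_eer = frame_level_eer(toy_labels, toy_scores)
```

A higher AUC and a lower EER both indicate better separation between normal and anomalous frames, which is why the table lists them side by side as AUC/EER.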

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Song, J.; Jiang, Y.; Li, H. Online Video Anomaly Detection. Sensors 2023, 23, 7442. https://doi.org/10.3390/s23177442