The experiments in this paper comprised two parts: simulation experiments under controlled scenarios and real-vehicle experiments. The experimental results show that the proposed VIDAR-based road-surface-pothole-detection method can effectively detect potholes in the road environment. In comparison experiments with classical methods, the proposed method achieved a higher detection accuracy.

4.1. Simulation Experiments

IMUs, cameras and other experimental equipment were installed on the experimental platform. To ensure the effectiveness of the experiment, a foam board with stickers was used as the road, and holes of different sizes were drilled in the board to serve as road potholes. The video captured by the camera was converted into image sequences at a frame rate of 20 fps, on which the road-surface-pothole-detection algorithm was tested. To simulate the road environment more realistically, two types of environments were set up: a road environment with only one pothole, and a road environment with multiple potholes of different sizes, as shown in Figure 10.

The vehicles in the simulation experiment were car models, and the potholes were man-made. The car model was 15 cm long and 5.8 cm wide, with a wheel diameter of 2.9 cm, a wheel width of 0.8 cm and a chassis height of 0.8 cm.

The feature region and feature point extraction process for road-surface potholes using the MSER-based fast image region-matching method is shown in Figure 11.

Figure 11 is divided into four stages. The blue asterisks in the second stage represent the MSER feature points; the block colors in the third stage represent the obstacle contours identified through the feature points; and the yellow rectangular boxes in the fourth stage represent the obstacle frames extracted through the feature points.

After the potholes were successfully detected, the images were matched and feature points were removed from two consecutive frames using the MSER-based fast image region-matching method, as shown in Figure 12.

Figure 12a,b represent the processing for two consecutive image frames. The block shading represents the position of the obstacle in the second frame image. The green crosses represent the feature points of the first frame image, the red circles represent the corresponding feature points of the second frame image, and the yellow line segments indicate the correspondence.

The feature point matching results in Figure 12 revealed that, in addition to the road-surface pothole feature points under study, Figure 12a matched feature points on the vehicle’s edge and Figure 12b matched the wall stain at the rear of the experimental platform. According to the road-surface-pothole determination process in Figure 9, these redundant feature points do not affect the detection of road-surface potholes or the calculation of their length, width and depth.

The detection accuracy described in this paper consists of two components: the ability to detect potholes, and the difference between the dimensions of the detected potholes and the true measurements (i.e., the measurement error). The ability to detect potholes is judged by the parameters of the confusion matrix.

The accuracy (A), recall (R) and precision (P) of pothole detection were computed from four quantities: TP, FP, TN and FN. Let a be a pothole correctly identified as a positive example, b be a pothole incorrectly identified as a positive example, c be a pothole correctly identified as a negative example, and d be a pothole incorrectly identified as a negative example. Then TP, FP, TN and FN are the numbers of cases of type a, b, c and d, respectively.

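Therefore, the accuracy (A), recall (R) and precision (P) are calculated as shown in Equations (10)–(12):

$$A = \frac{TP + TN}{TP + FP + TN + FN} \tag{10}$$

$$R = \frac{TP}{TP + FN} \tag{11}$$

$$P = \frac{TP}{TP + FP} \tag{12}$$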

At the same time, the dimensional error of the proposed method in pothole detection is calculated as shown in Equation (13), which relates the measurement error to the actual and measured width, the actual and measured length, and the actual and measured depth of the pothole.
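As a rough illustration of such a dimensional error, the sketch below averages the relative errors in width, length and depth. This equal-weight averaging is an assumption made for illustration and is not necessarily the exact form of Equation (13); the function name and the sample values are hypothetical.

```python
def dimensional_error(actual, measured):
    """Mean relative error (in percent) over width, length and depth.

    `actual` and `measured` are (width, length, depth) tuples in the same unit.
    Assumption: the three relative errors are averaged with equal weight.
    """
    if len(actual) != 3 or len(measured) != 3:
        raise ValueError("expected (width, length, depth) triples")
    relative_errors = [abs(m - a) / a for a, m in zip(actual, measured)]
    return 100.0 * sum(relative_errors) / len(relative_errors)


# Hypothetical pothole: true size 200 x 300 x 60 mm, measured 210 x 285 x 63 mm.
error_percent = dimensional_error((200.0, 300.0, 60.0), (210.0, 285.0, 63.0))
print(f"{error_percent:.2f}%")
```

Each dimension in this hypothetical example is off by 5% of its true value, so the averaged error is also 5%.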

The simulation experiment collected information from 15 potholes. The test results are shown in Table 1.

The data in Table 1 show that, of the 15 potholes, 13 were correctly identified and 2 were not. Further analysis revealed that these 2 could not be identified because their width and length were too small, so they were rejected by the algorithm during image matching.

Through calculation, the proposed method in this paper was found to have an accuracy (A) of 86.67%, a recall (R) of 86.67% and a precision (P) of 100% in simulated experiments.
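These percentages can be reproduced from the reported counts using the standard confusion-matrix definitions of accuracy, recall and precision. The sketch below is illustrative only: the function name is hypothetical, and FP = 0 and TN = 0 are assumptions inferred from the reported precision of 100% and from accuracy being equal to recall.

```python
def detection_metrics(tp, fp, tn, fn):
    """Return accuracy, recall and precision as percentages."""
    total = tp + fp + tn + fn
    accuracy = 100.0 * (tp + tn) / total
    recall = 100.0 * tp / (tp + fn)
    precision = 100.0 * tp / (tp + fp)
    return accuracy, recall, precision


# Simulation experiment: 13 of 15 potholes detected, 2 missed.
# FP = 0 and TN = 0 are assumed here, not taken from the paper's tables.
accuracy, recall, precision = detection_metrics(tp=13, fp=0, tn=0, fn=2)
print(f"A = {accuracy:.2f}%, R = {recall:.2f}%, P = {precision:.2f}%")
# prints "A = 86.67%, R = 86.67%, P = 100.00%"
```

Under the same assumptions, the real-vehicle counts (32 detected, 3 missed) give 91.43% for both accuracy and recall.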

The difference between the dimensions of the detected potholes and the true measurements is shown in Table 2.

Analysis of the data in Table 2 showed that the proposed method had an average error of 4.76% in detecting pothole length, width and depth in the simulated experiments.

Analysis of the results in Table 1 and Table 2 led to the conclusion that the proposed VIDAR-based road-surface-pothole-detection method is effective in detecting potholes in simulated experiments.

4.2. Experiments on Real Vehicles

In the real-vehicle experiments, an electric vehicle was used as the experimental vehicle (as shown in Figure 13). The equipment was as follows: an MV-VDF300SC industrial digital camera was mounted on the vehicle as a monocular vision sensor; an HEC295 IMU was mounted on the bottom of the experimental vehicle for real-time positioning and reading of the vehicle’s movement; a GPS was used to pinpoint the vehicle’s position; and a computing unit was used for real-time data processing. The digital camera used a USB 2.0 standard interface and offered high resolution, high accuracy and high definition; its parameters are shown in Table 3. In the actual calculation process, because of the complexity of the multi-sensor data sources, fuzzy logic for dealing with complex systems [21] was used to combine the acquired information in a coordinated way, improving the efficiency of the system and allowing the information to be processed efficiently in real scenarios.

In this paper, Zhengyou Zhang’s calibration method was used to calibrate the camera. The calibration process is shown in Figure 14.

The camera distortion comprised three types: radial distortion, thin-prism distortion and decentering (centrifugal) distortion. The superposition of these three types caused a nonlinear distortion, the model of which can be represented in the image coordinate system, as shown in Equation (14).

In this model, the camera has two decentering distortion coefficients, two radial distortion coefficients and two thin-prism distortion coefficients.

To facilitate the calculation, the decentering distortion of the camera is not considered in this paper, so the intrinsic parameter matrix of the camera used in this paper can be expressed as Equation (15):

The external parameters of the camera can be calibrated by obtaining object points on the edges of the lane lines, and the calibration results are shown in Table 4.

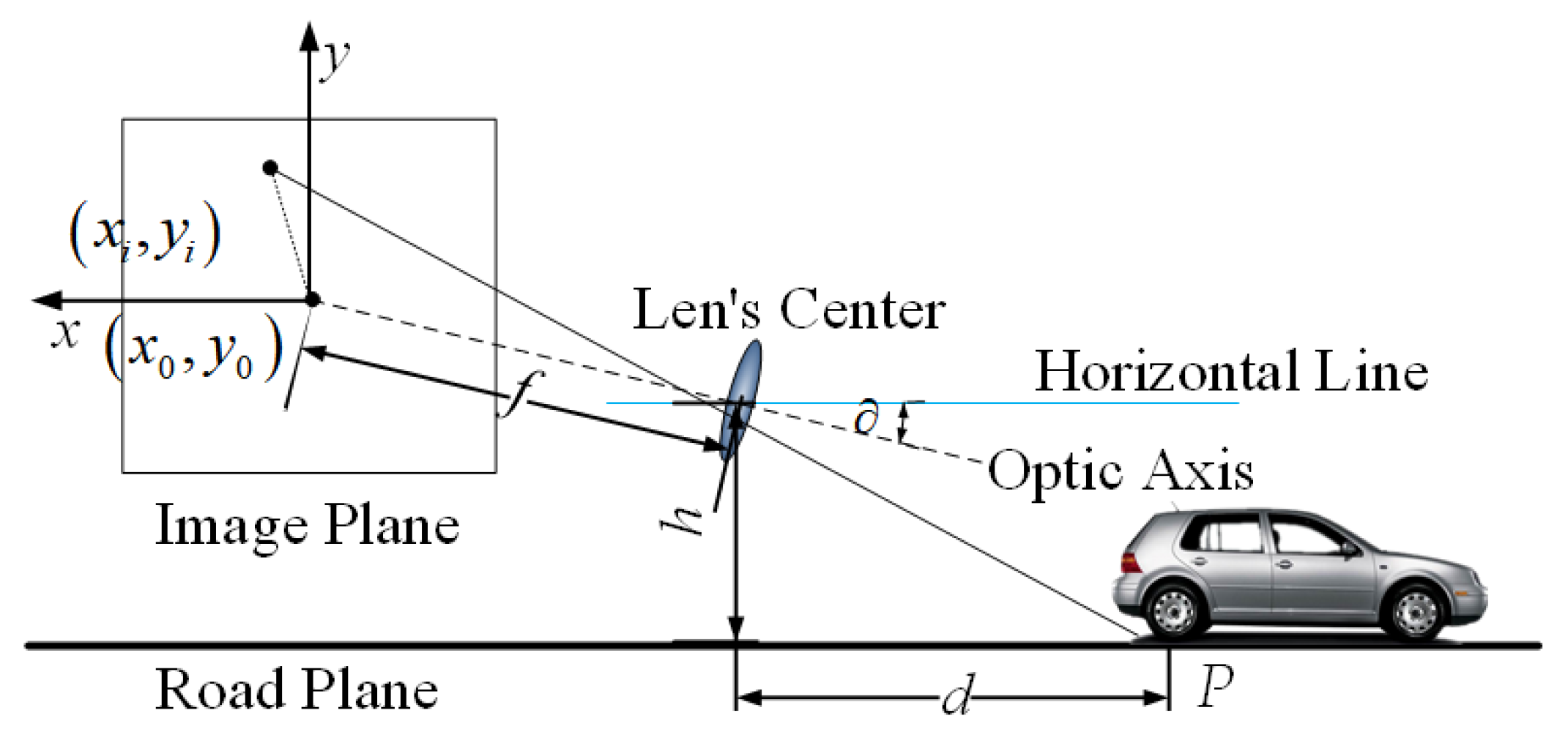

Throughout the real-vehicle experiments, the IMU measured the angular acceleration of the ego vehicle, and the camera’s pitch angle was obtained and updated by solving the camera pose using the quaternion method. The image was processed by the MSER-based fast image region-matching method, and the ego-vehicle acceleration was used to calculate the horizontal distance between the ego vehicle and the road-surface pothole, as well as the maximum width and maximum length of the pothole. The depth of the pothole was solved using the pinhole imaging model and the vertical distance from the obstacle, which remained constant during real-vehicle movement.
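The pitch-angle update can be illustrated with the standard conversion from a unit quaternion to a pitch angle. This is a minimal sketch rather than the implementation used in the experiments: it assumes a quaternion in (w, x, y, z) order and the common Z-Y-X (yaw-pitch-roll) Euler convention, neither of which is specified in the text, and the function name is hypothetical.

```python
import math


def pitch_from_quaternion(w, x, y, z):
    """Pitch angle in radians from a quaternion (Z-Y-X Euler convention assumed)."""
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("zero-norm quaternion")
    # Normalize so that slightly non-unit IMU output is still handled.
    w, x, y, z = w / norm, x / norm, y / norm, z / norm
    # Clamp to [-1, 1] so rounding errors cannot push asin out of its domain.
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    return math.asin(sin_pitch)


# Example: a pure rotation of 10 degrees about the y (pitch) axis.
half_angle = math.radians(10.0) / 2.0
pitch = pitch_from_quaternion(math.cos(half_angle), 0.0, math.sin(half_angle), 0.0)
print(round(math.degrees(pitch), 3))
```

A different axis convention or quaternion ordering in the IMU driver would change which components enter the formula, so the convention should be checked against the sensor documentation before reuse.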

Based on the above principles, the real-vehicle experiment was evaluated using the evaluation indicators in Section 4.1. Some of the test results are shown in Figure 15. The experimental results are shown in Table 5 and Table 6.

Upon calculation, the proposed method in this paper had an accuracy (A) of 91.43%, a recall (R) of 91.43% and a precision (P) of 100% in the real-vehicle experiments.

The data in Table 5 show that, of the 35 sets of pothole data, 32 were correctly identified and 3 were not. Further analysis revealed that these 3 could not be detected because the pits were too shallow to be correctly identified as potholes.

To better cope with the different sizes and types of potholes in the road surface, we stipulated that either the length or the width of the potholes used in the experiments should be greater than 150 mm, and that the depth should be greater than 50 mm.
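The size requirement can be written as a simple predicate. The sketch below reads the rule as "length or width above 150 mm, and depth above 50 mm"; the function name, the constant names and the sample dimensions are hypothetical.

```python
MIN_PLAN_SIZE_MM = 150.0  # threshold on length or width, from the text
MIN_DEPTH_MM = 50.0       # threshold on depth, from the text


def meets_size_requirement(length_mm, width_mm, depth_mm):
    """True if either plan dimension exceeds 150 mm and the depth exceeds 50 mm."""
    large_enough = length_mm > MIN_PLAN_SIZE_MM or width_mm > MIN_PLAN_SIZE_MM
    deep_enough = depth_mm > MIN_DEPTH_MM
    return large_enough and deep_enough


print(meets_size_requirement(200.0, 120.0, 60.0))  # long enough and deep enough
print(meets_size_requirement(200.0, 180.0, 40.0))  # too shallow
```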

Analysis of the 35 sets of data shown in Table 6 revealed that the proposed VIDAR-based road-surface-pothole-detection method could accurately detect the width, length and depth of road-surface potholes in the actual inspection process, with an average error of 6.23% over the 35 sets of data.

The experimental pits were categorized into six types based on length, width and depth, as shown in Table 7.

In order to further verify the accuracy and correctness of the method proposed in this paper, the detection results of the Faster-RCNN and YOLO-v5 detection methods were compared with the VIDAR-based pothole-detection method proposed in this paper.

Shaoqing Ren et al. proposed the Faster-RCNN model in 2015 [22]. The model uses a small Region Proposal Network (RPN) instead of the Selective Search algorithm, which substantially reduces the number of proposal boxes, overcomes the slowness of Selective Search in generating proposal windows, and increases the image processing speed.

YOLO (You Only Look Once) is one of the most typical algorithms in the field of target detection and performs the detection task very well. YOLO-v5, proposed by Glenn Jocher in 2020, introduces adaptive anchor-box calculation and adaptive image scaling, and has the advantages of a simple structure, fast speed and high accuracy. For the YOLO-v5 [23] detection method, images of various types of potholes on the road were divided into a training set and a validation set, and the image sequences shown in Section 4.1 of this paper were used as the test set.

In the comparison experiment, the experimental vehicle was stationary at the same position for all three methods, and the VIDAR-based pothole-detection method, the Faster-RCNN method and the YOLO-v5 method were each used to detect 80 potholes of different sizes.

The results of the three methods are shown in Table 8, and the average error of the three methods in detecting the pothole information is shown in Table 9.

In conjunction with Table 8, the accuracy (A), recall (R), precision (P) and detection time of the three methods were further analyzed, as shown in Table 10.

Analysis of the experimental results in Table 9 and Table 10 reveals that the VIDAR-based pothole-detection method excludes the interference of road obstacles and only marks and calculates the non-road obstacle feature points, so it can detect potholes more correctly than Faster-RCNN and YOLO-v5s. From the overall results, because VIDAR solves the camera pose problem through the IMU, the proposed VIDAR-based pothole-detection method improved the accuracy by 16.89%, the recall by 13.01%, the precision by 6.04% and the detection error by 12.45% compared to the Faster-RCNN method; compared to the YOLO-v5 method, the accuracy improved by 10.8%, the recall by 8.78%, the precision by 2.9% and the detection error by 8.4%. However, since the length, width and depth of the pothole need to be detected, and the depth is obtained from target tracking and data parsing over multiple images, the proposed method does not have a significant advantage in detection time.