Automatic Liver Tumor Segmentation from CT Images Using Graph Convolutional Network

Abstract

1. Introduction

- Using the SLIC algorithm for optimal clustering of CT images.

- Using deep graph convolutional networks to segment the liver and liver tumors for the first time.

- Evaluating the proposed network in noisy environments, where the algorithm remains stable across a range of SNRs.

- Providing a customized architecture based on the combination of SLIC and a Chebyshev graph convolutional network.

- Achieving the highest accuracy, compared to previous research, in segmenting and diagnosing the liver and liver tumors on the LiTS17 dataset.
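The noise-robustness evaluation named in the list above can be pictured with a small sketch. The noise model is not stated in this excerpt, so the example assumes additive white Gaussian noise and defines SNR as mean signal power over noise power, in decibels. The function names `add_gaussian_noise` and `measured_snr_db` are illustrative and are not taken from the paper.

```python
import numpy as np


def add_gaussian_noise(image, snr_db, rng=None):
    """Return a noisy copy of `image` at the requested SNR (dB).

    Assumption: additive white Gaussian noise, SNR = P_signal / P_noise.
    """
    rng = np.random.default_rng() if rng is None else rng
    image = np.asarray(image, dtype=np.float64)
    signal_power = np.mean(image ** 2)
    # Convert the SNR from decibels to a linear power ratio.
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=image.shape)
    return image + noise


def measured_snr_db(clean, noisy):
    """Empirical SNR (dB) of `noisy` relative to `clean`."""
    clean = np.asarray(clean, dtype=np.float64)
    residual = np.asarray(noisy, dtype=np.float64) - clean
    return 10.0 * np.log10(np.mean(clean ** 2) / np.mean(residual ** 2))


# Usage: corrupt a synthetic slice at several SNR levels.
rng = np.random.default_rng(0)
ct_slice = rng.random((128, 128))  # stand-in for a normalized CT slice
noisy_slices = {snr: add_gaussian_noise(ct_slice, snr, rng) for snr in (0, 10, 20)}
```

A segmentation model could then be scored on each entry of `noisy_slices` to see how its metrics change with SNR.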

2. Materials and Methods

2.1. Database Settings

2.2. Graph Convolution

2.3. Chebyshev Graph Convolution

2.4. SLIC-Based Graph Embedding

2.5. Segmentation Metrics

3. Proposed SLIC-Based Deep Graph Network (SLIC-DGN)

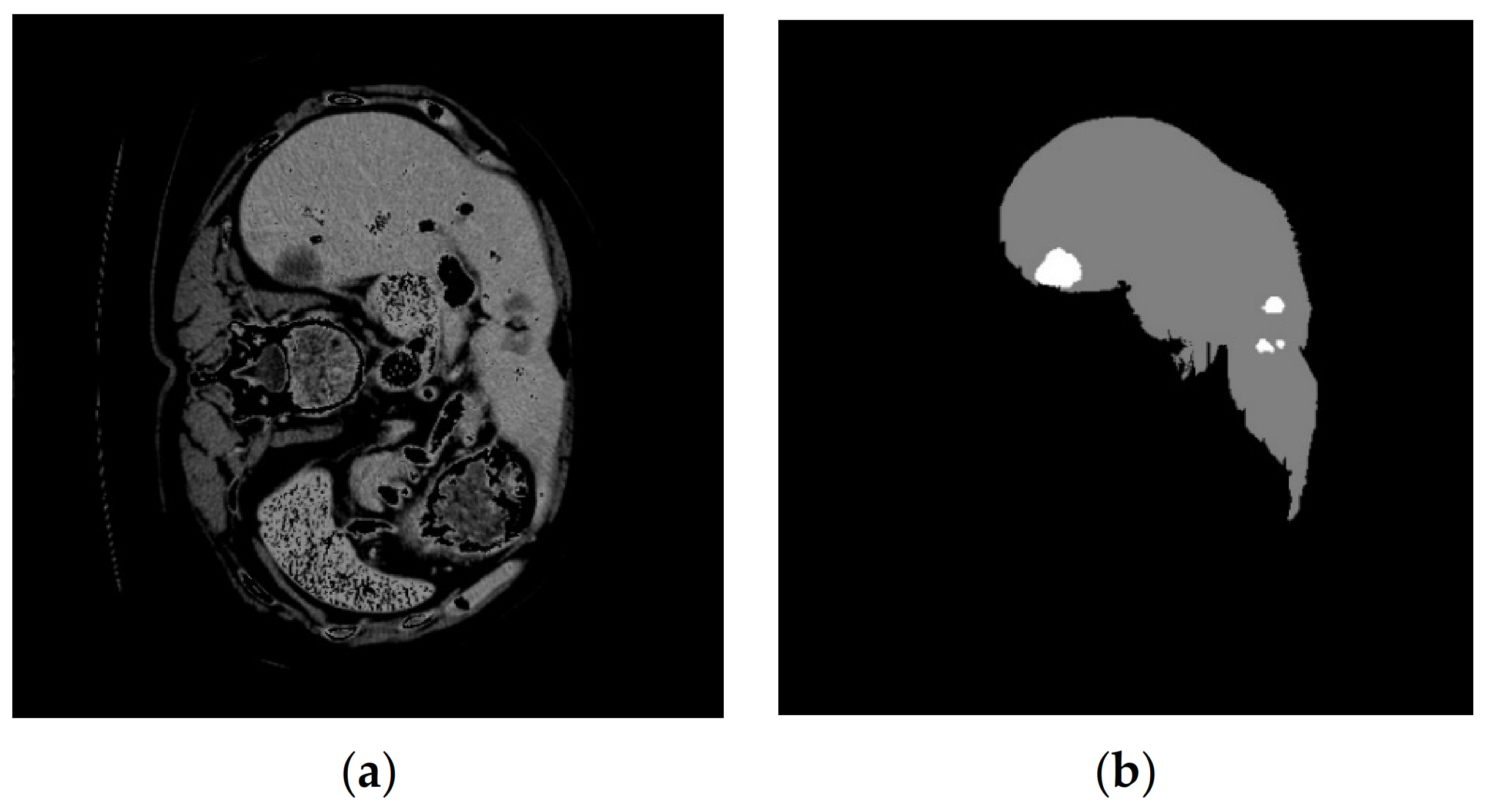

3.1. Image Preprocessing

3.2. Graph Embedding Stage

3.3. Architecture of the Proposed Method

- (a) A Chebyshev graph convolutional layer with batch normalization, ReLU activation, and batch filtering.

- (b) The architecture of the previous step is repeated three times.

- (c) A dropout layer is added to reduce overfitting.

- (d) The flattened feature vector is fed into a dense (FC) layer.

- (e) Scores in the FC layer are computed using a softmax.
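The layer stack listed above can be sketched as a plain NumPy forward pass. This is a simplified illustration under stated assumptions, not the authors' implementation: it uses the standard Chebyshev recurrence on the scaled normalized Laplacian, omits batch normalization, dropout, and the dense layer, and ends with a per-node softmax. The function names, the polynomial order of 3, and the toy graph are all hypothetical.

```python
import numpy as np


def scaled_laplacian(adj):
    """Scaled normalized Laplacian 2L/lambda_max - I for a symmetric adjacency."""
    adj = np.asarray(adj, dtype=np.float64)
    deg = adj.sum(axis=1)
    d_inv_sqrt = np.zeros_like(deg)
    nonzero = deg > 0
    d_inv_sqrt[nonzero] = deg[nonzero] ** -0.5
    identity = np.eye(len(adj))
    lap = identity - d_inv_sqrt[:, None] * adj * d_inv_sqrt[None, :]
    lam_max = np.linalg.eigvalsh(lap).max()
    return 2.0 * lap / lam_max - identity


def cheb_conv(x, lap_scaled, weights, bias):
    """Chebyshev graph convolution.

    x: [N, F_in], weights: [K, F_in, F_out], bias: [F_out].
    """
    order = weights.shape[0]
    t_prev = x                          # T_0(L) x = x
    out = t_prev @ weights[0]
    if order > 1:
        t_curr = lap_scaled @ x         # T_1(L) x = L x
        out = out + t_curr @ weights[1]
        for k in range(2, order):
            # Recurrence: T_k = 2 L T_{k-1} - T_{k-2}
            t_next = 2.0 * (lap_scaled @ t_curr) - t_prev
            out = out + t_next @ weights[k]
            t_prev, t_curr = t_curr, t_next
    return out + bias


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def forward(x, adj, params):
    """ReLU after every layer except the last, which feeds a softmax."""
    lap = scaled_laplacian(adj)
    h = x
    for w, b in params[:-1]:
        h = np.maximum(cheb_conv(h, lap, w, b), 0.0)
    w, b = params[-1]
    return softmax(cheb_conv(h, lap, w, b))


# Usage on a toy 4-node path graph with 16 features per node (assumed sizes).
rng = np.random.default_rng(0)
adj = np.array([[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]], dtype=float)
x = rng.normal(size=(4, 16))
params = [(rng.normal(scale=0.1, size=(3, 16, 16)), np.zeros(16)) for _ in range(3)]
params.append((rng.normal(scale=0.1, size=(3, 16, 2)), np.zeros(2)))
probs = forward(x, adj, params)  # one row of class probabilities per node
```

In a superpixel setting, each node would correspond to one SLIC region and each row of `probs` to that region's class scores.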

3.4. Training and Evaluation

4. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Chen, L.; Wei, X.; Gu, D.; Xu, Y.; Zhou, H. Human liver cancer organoids: Biological applications, current challenges, and prospects in hepatoma therapy. Cancer Lett. 2023, 555, 216048.

- Shahini, N.; Bahrami, Z.; Sheykhivand, S.; Marandi, S.; Danishvar, M.; Danishvar, S.; Roosta, Y. Automatically identified EEG signals of movement intention based on CNN network (End-To-End). Electronics 2022, 11, 3297.

- Guo, Z.; Zhao, Q.; Jia, Z.; Huang, C.; Wang, D.; Ju, W.; Zhang, J.; Yang, L.; Huang, S.; Chen, M.; et al. A randomized-controlled trial of ischemia-free liver transplantation for end-stage liver disease. J. Hepatol. 2023, 79, 94–402.

- Aliseda, D.; Martí-Cruchaga, P.; Zozaya, G.; Rodríguez-Fraile, M.; Bilbao, J.I.; Benito-Boillos, A.; Martínez De La Cuesta, A.; Lopez-Olaondo, L.; Hidalgo, F.; Ponz-Sarvisé, M. Liver Resection and Transplantation Following Yttrium-90 Radioembolization for Primary Malignant Liver Tumors: A 15-Year Single-Center Experience. Cancers 2023, 15, 733.

- Conticchio, M.; Maggialetti, N.; Rescigno, M.; Brunese, M.C.; Vaschetti, R.; Inchingolo, R.; Calbi, R.; Ferraro, V.; Tedeschi, M.; Fantozzi, M.R. Hepatocellular carcinoma with bile duct tumor thrombus: A case report and literature review of 890 patients affected by uncommon primary liver tumor presentation. J. Clin. Med. 2023, 12, 423.

- Loomba, R.; Friedman, S.L.; Shulman, G.I. Mechanisms and disease consequences of nonalcoholic fatty liver disease. Cell 2021, 184, 2537–2564.

- Asrani, S.K.; Devarbhavi, H.; Eaton, J.; Kamath, P.S. Burden of liver diseases in the world. J. Hepatol. 2019, 70, 15.

- Zhi, Y.; Zhang, H.; Gao, Z. Vessel Contour Detection in Intracoronary Images via Bilateral Cross-Domain Adaptation. IEEE J. Biomed. Health Inform. 2023, 27, 3314–3325.

- Guo, S.; Liu, X.; Zhang, H.; Lin, Q.; Xu, L.; Shi, C.; Gao, Z.; Guzzo, A.; Fortino, G. Causal knowledge fusion for 3D cross-modality cardiac image segmentation. Inf. Fusion 2023, 99, 101864.

- Nardo, A.D.; Schneeweiss-Gleixner, M.; Bakail, M.; Dixon, E.D.; Lax, S.F.; Trauner, M. Pathophysiological mechanisms of liver injury in COVID-19. Liver Int. 2021, 41, 20–32.

- Starekova, J.; Hernando, D.; Pickhardt, P.J.; Reeder, S.B. Quantification of liver fat content with CT and MRI: State of the art. Radiology 2021, 301, 250–262.

- Eslam, M.; Sanyal, A.J.; George, J.; Sanyal, A.; Neuschwander-Tetri, B.; Tiribelli, C.; Kleiner, D.E.; Brunt, E.; Bugianesi, E.; Yki-Järvinen, H. MAFLD: A consensus-driven proposed nomenclature for metabolic associated fatty liver disease. Gastroenterology 2020, 158, 1999–2014.e1.

- Zheng, R.; Wang, Q.; Lv, S.; Li, C.; Wang, C.; Chen, W.; Wang, H. Automatic liver tumor segmentation on dynamic contrast enhanced mri using 4D information: Deep learning model based on 3D convolution and convolutional lstm. IEEE Trans. Med. Imaging 2022, 41, 2965–2976.

- Hänsch, A.; Chlebus, G.; Meine, H.; Thielke, F.; Kock, F.; Paulus, T.; Abolmaali, N.; Schenk, A. Improving automatic liver tumor segmentation in late-phase MRI using multi-model training and 3D convolutional neural networks. Sci. Rep. 2022, 12, 12262.

- Ahmad, M.; Qadri, S.F.; Qadri, S.; Saeed, I.A.; Zareen, S.S.; Iqbal, Z.; Alabrah, A.; Alaghbari, H.M.; Rahman, M.; Md, S. A lightweight convolutional neural network model for liver segmentation in medical diagnosis. Comput. Intell. Neurosci. 2022, 2022, 7954333.

- Rahman, H.; Bukht, T.F.N.; Imran, A.; Tariq, J.; Tu, S.; Alzahrani, A. A Deep Learning Approach for Liver and Tumor Segmentation in CT Images Using ResUNet. Bioengineering 2022, 9, 368.

- Manjunath, R.; Kwadiki, K. Modified U-NET on CT images for automatic segmentation of liver and its tumor. Biomed. Eng. Adv. 2022, 4, 100043.

- Di, S.; Zhao, Y.; Liao, M.; Yang, Z.; Zeng, Y. Automatic liver tumor segmentation from CT images using hierarchical iterative superpixels and local statistical features. Expert Syst. Appl. 2022, 203, 117347.

- Dickson, J.; Lincely, A.; Nineta, A. A Dual Channel Multiscale Convolution U-Net Method for Liver Tumor Segmentation from Abdomen CT Images. In Proceedings of the 2022 International Conference on Sustainable Computing and Data Communication Systems (ICSCDS), Erode, India, 7–9 April 2022; pp. 1624–1628.

- Tummala, B.M.; Barpanda, S.S. Liver tumor segmentation from computed tomography images using multiscale residual dilated encoder-decoder network. Int. J. Imaging Syst. Technol. 2022, 32, 600–613.

- Sabir, M.W.; Khan, Z.; Saad, N.M.; Khan, D.M.; Al-Khasawneh, M.A.; Perveen, K.; Qayyum, A.; Azhar Ali, S.S. Segmentation of Liver Tumor in CT Scan Using ResU-Net. Appl. Sci. 2022, 12, 8650.

- Dong, X.; Zhou, Y.; Wang, L.; Peng, J.; Lou, Y.; Fan, Y. Liver cancer detection using hybridized fully convolutional neural network based on deep learning framework. IEEE Access 2020, 8, 129889–129898.

- Tang, Y.; Tang, Y.; Zhu, Y.; Xiao, J.; Summers, R.M. E2 Net: An edge enhanced network for accurate liver and tumor segmentation on CT scans. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, 4–8 October 2020; Proceedings, Part IV. Springer: Berlin/Heidelberg, Germany, 2020; pp. 512–522.

- Li, L.; Ma, H. Rdctrans u-net: A hybrid variable architecture for liver ct image segmentation. Sensors 2022, 22, 2452.

- Ansari, M.Y.; Yang, Y.; Balakrishnan, S.; Abinahed, J.; Al-Ansari, A.; Warfa, M.; Almokdad, O.; Barah, A.; Omer, A.; Singh, A.V. A lightweight neural network with multiscale feature enhancement for liver CT segmentation. Sci. Rep. 2022, 12, 14153.

- Khan, R.A.; Luo, Y.; Wu, F.-X. RMS-UNet: Residual multi-scale UNet for liver and lesion segmentation. Artif. Intell. Med. 2022, 124, 102231.

- Bogoi, S.; Udrea, A. A Lightweight Deep Learning Approach for Liver Segmentation. Mathematics 2023, 11, 95.

- Bilic, P.; Christ, P.; Li, H.B.; Vorontsov, E.; Ben-Cohen, A.; Kaissis, G.; Szeskin, A.; Jacobs, C.; Mamani, G.E.H.; Chartrand, G. The liver tumor segmentation benchmark (lits). Med. Image Anal. 2023, 84, 102680.

- Lazcano, A.; Herrera, P.J.; Monge, M. A Combined Model Based on Recurrent Neural Networks and Graph Convolutional Networks for Financial Time Series Forecasting. Mathematics 2023, 11, 224.

- Zhong, P.; Wang, D.; Miao, C. EEG-based emotion recognition using regularized graph neural networks. IEEE Trans. Affect. Comput. 2020, 13, 1290–1301.

- Fabijanska, A. Graph Convolutional Networks for Semi-Supervised Image Segmentation. IEEE Access 2022, 10, 104144–104155.

- Hajipour Khire Masjidi, B.; Bahmani, S.; Sharifi, F.; Peivandi, M.; Khosravani, M.; Hussein Mohammed, A. CT-ML: Diagnosis of breast cancer based on ultrasound images and time-dependent feature extraction methods using contourlet transformation and machine learning. Comput. Intell. Neurosci. 2022, 2022, 1493847.

- Qingyun, F.; Zhaokui, W. Fusion Detection via Distance-Decay Intersection over Union and Weighted Dempster–Shafer Evidence Theory. J. Aerosp. Inf. Syst. 2023, 20, 114–125.

- Sheykhivand, S.; Yousefi Rezaii, T.; Mousavi, Z.; Meshini, S. Automatic stage scoring of single-channel sleep EEG using CEEMD of genetic algorithm and neural network. Comput. Intell. Electr. Eng. 2018, 9, 15–28.

- Sabahi, K.; Sheykhivand, S.; Mousavi, Z.; Rajabioun, M. Recognition COVID-19 cases using deep type-2 fuzzy neural networks based on chest X-ray image. Comput. Intell. Electr. Eng. 2023, 14, 75–92.

- Zhang, X.; Liu, C.-A. Model averaging prediction by K-fold cross-validation. J. Econom. 2023, 235, 280–301.

- Zhang, J.; Zhang, Y.; Jin, Y.; Xu, J.; Xu, X. Mdu-net: Multi-scale densely connected u-net for biomedical image segmentation. Health Inf. Sci. Syst. 2023, 11, 13.

- Weng, W.; Zhu, X.; Jing, L.; Dong, M. Attention Mechanism Trained with Small Datasets for Biomedical Image Segmentation. Electronics 2023, 12, 682.

- Zhang, R.; Lu, W.; Gao, J.; Tian, Y.; Wei, X.; Wang, C.; Li, X.; Yu, M. RFI-GAN: A reference-guided fuzzy integral network for ultrasound image augmentation. Inf. Sci. 2023, 623, 709–728.

| Layer | Shape of Weight Tensor | Shape of Bias | Number of Parameters |
|---|---|---|---|
| First graph convolution layer | [K1, 16, 16] | [16] | 256 × K1 + 16 |
| Batch normalization layer | [16] | [16] | 32 |
| Second graph convolution layer | [K2, 16, 16] | [16] | 256 × K2 + 16 |
| Batch normalization layer | [16] | [16] | 32 |
| Third graph convolution layer | [K3, 16, 16] | [16] | 256 × K3 + 16 |
| Batch normalization layer | [16] | [16] | 32 |
| Fourth graph convolution layer | [K4, 16, 2] | [2] | 32 × K4 + 2 |
| Batch normalization layer | [16] | [16] | 32 |
| Softmax layer | - | [2] | 2 × C × K4 |
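The parameter counts in the table follow from the tensor shapes: a graph convolution with weight shape [K, F_in, F_out] and bias [F_out] has K × F_in × F_out + F_out parameters, and a batch normalization layer with two vectors of length 16 has 32. The short sketch below reproduces that arithmetic. The Chebyshev orders K1 to K4 are not given in this excerpt, so the value 3 used in the example is purely an assumption, and the softmax row is left out because its expression is not defined here.

```python
def cheb_layer_params(order, f_in, f_out):
    """Weights [order, f_in, f_out] plus bias [f_out]."""
    return order * f_in * f_out + f_out


def batch_norm_params(features):
    """Two learnable vectors (scale and shift) of length `features`."""
    return 2 * features


def total_params(orders, hidden=16, classes=2):
    """Sum over the four graph convolutions and four batch-norm rows of the table."""
    k1, k2, k3, k4 = orders
    total = sum(cheb_layer_params(k, hidden, hidden) for k in (k1, k2, k3))
    total += cheb_layer_params(k4, hidden, classes)
    total += 4 * batch_norm_params(hidden)
    return total


# Hypothetical orders K1 = K2 = K3 = K4 = 3 (not stated in the source).
example_total = total_params((3, 3, 3, 3))
```

With these assumed orders, each hidden graph convolution contributes 256 × 3 + 16 = 784 parameters and the fourth contributes 32 × 3 + 2 = 98.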

| Parameters | Search Space | Optimal Value |
|---|---|---|
| Optimizer | Adamax, SGD, Adam, RMSprop, Adadelta | Adam |
| Number of graph convolution layers | 1, 2, 3, 4, 5, 6, 7 | 4 |
| Size of output in first layer | 8, 16, 32, 64 | 16 |
| Size of output in second layer | 8, 16, 32, 64 | 16 |
| Size of output in third layer | 8, 16, 32, 64 | 16 |
| Learning rate | 0.1, 0.01, 0.001, 0.0001 | 0.001 |
| Dropout rate | 0.1, 0.2, 0.3 | 0.2 |
| Weight decay of Adam optimizer | 5 × 10⁻⁵, 5 × 10⁻⁴ | 5 × 10⁻⁴ |

| Metric | Liver Segmentation | Tumor Segmentation |
|---|---|---|
| Accuracy (%) | 99.1 | 98.7 |
| Dice Coefficient (%) | 91.1 | 90 |
| Mean IoU (%) | 90.8 | 89.2 |
| Sensitivity (%) | 99.4 | 97.9 |
| Recall (%) | 99.4 | 98.9 |
| Precision (%) | 91.2 | 88.7 |
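For readers who want to see how metrics of this kind are usually derived, the sketch below computes them from a predicted and a reference binary mask using the standard confusion-matrix definitions. It is an illustration only: the exact formulas used in the paper are not shown in this excerpt, the function name `segmentation_metrics` is hypothetical, and the IoU here is the foreground-class IoU rather than a mean over classes.

```python
import numpy as np


def segmentation_metrics(pred, target):
    """Standard binary segmentation metrics from two masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = int(np.sum(pred & target))    # foreground predicted as foreground
    fp = int(np.sum(pred & ~target))   # background predicted as foreground
    fn = int(np.sum(~pred & target))   # foreground predicted as background
    tn = int(np.sum(~pred & ~target))  # background predicted as background

    def ratio(num, den):
        # Return 0.0 when the denominator is empty instead of dividing by zero.
        return num / den if den else 0.0

    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "dice": ratio(2 * tp, 2 * tp + fp + fn),
        "iou": ratio(tp, tp + fp + fn),
        "sensitivity": ratio(tp, tp + fn),  # also called recall
        "precision": ratio(tp, tp + fp),
    }


# Usage on a tiny example: one true positive, one false positive,
# one false negative, one true negative.
scores = segmentation_metrics([1, 1, 0, 0], [1, 0, 1, 0])
```

Multiplying each value by 100 gives percentages on the same scale as the table.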

| References | Method | Dataset | Liver Segmentation (%) | Tumor Segmentation (%) |
|---|---|---|---|---|
| Hänsch et al. [14] | 3D-CNN | DCE-MRI | 70 | 59 |
| Dickson et al. [19] | DCMC-U-Net | LiTS17 | 97 | 80 |
| Dong et al. [22] | Hybridized FCNN | LiTS17 | 97.22 | 87 |
| Tang et al. [23] | E2Net | LiTS17, 3Dircadb01 | 97 | 74 |
| Li et al. [24] | RDCTrans U-Net | LiTS17 | 93 | 89 |
| Ansari et al. [25] | Res32-PAC-UNet | LiTS17 | 95 | - |
| Khan et al. [26] | RMS-U-Net | 3Dircadb01, LiTS17 | 97.50 | 86.70 |
| Bogoi et al. [27] | U-NeXt | LiTS17 | 99 | - |
| Proposed model | SLIC-DGN | LiTS17 | 99.1 | 90 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Khoshkhabar, M.; Meshgini, S.; Afrouzian, R.; Danishvar, S. Automatic Liver Tumor Segmentation from CT Images Using Graph Convolutional Network. Sensors 2023, 23, 7561. https://doi.org/10.3390/s23177561