Abstract

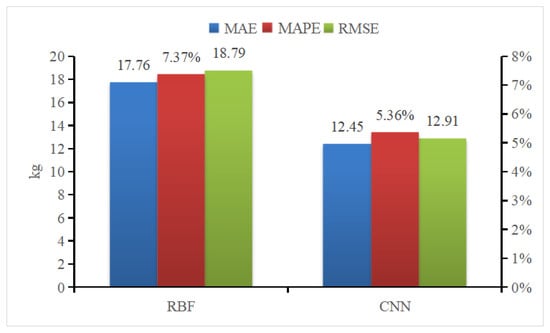

The measurement of pig weight holds significant importance for producers as it plays a crucial role in managing pig growth, health, and marketing, thereby facilitating informed decisions regarding scientific feeding practices. On one hand, the conventional manual weighing approach is characterized by inefficiency and time consumption. On the other hand, it has the potential to induce heightened stress levels in pigs. This research introduces a hybrid 3D point cloud denoising approach for precise pig weight estimation. By integrating statistical filtering and DBSCAN clustering techniques, we mitigate weight estimation bias and overcome limitations in feature extraction. The convex hull technique refines the dataset to the pig’s back, while voxel down-sampling enhances real-time efficiency. Our model integrates pig back parameters with a convolutional neural network (CNN) for accurate weight estimation. Experimental analysis indicates that the mean absolute error (MAE), mean absolute percent error (MAPE), and root mean square error (RMSE) of the weight estimation model proposed in this research are 12.45 kg, 5.36%, and 12.91 kg, respectively. In contrast to the currently available weight estimation methods based on 2D and 3D techniques, the suggested approach offers the advantages of simplified equipment configuration and reduced data processing complexity. These benefits are achieved without compromising the accuracy of weight estimation. Consequently, the proposed method presents an effective monitoring solution for precise pig feeding management, leading to reduced human resource losses and improved welfare in pig breeding.

1. Introduction

Pork is an essential source of animal protein and fat for European, Chinese, and some other populations, which plays a crucial role in ensuring food security and social stability [1,2,3]. In China, the pig industry is a traditionally advantageous industry that contributes greatly to sustaining the agricultural market, fostering the growth of the national economy, and increasing farmers’ incomes. As such, the quantity of pig breeding has witnessed a notable increase. However, conventional pig breeding methods have proven inadequate for keeping pace with the rapid development of pig output. The integration of science and technology has facilitated the widespread adoption of contemporary technologies in various aspects of aquaculture production.

Consequently, the improvement of pig production and quality has consistently remained a central objective in the development of agricultural strategies [4,5,6]. Among these objectives, pig weight stands out as one of the most influential factors in pig production and pork quality. It provides essential information on feed conversion rate, growth rate, disease, development uniformity, and the health status of pig herds [7,8,9,10]. Presently, the traditional manual pig driving weighing method involves direct contact weighing on a scale. This method is known for its time-consuming, labor-intensive nature, and is prone to causing the stress reaction in pigs. These limitations make it challenging to meet the requirements of large-scale breeding for real-time monitoring of pig weight [11].

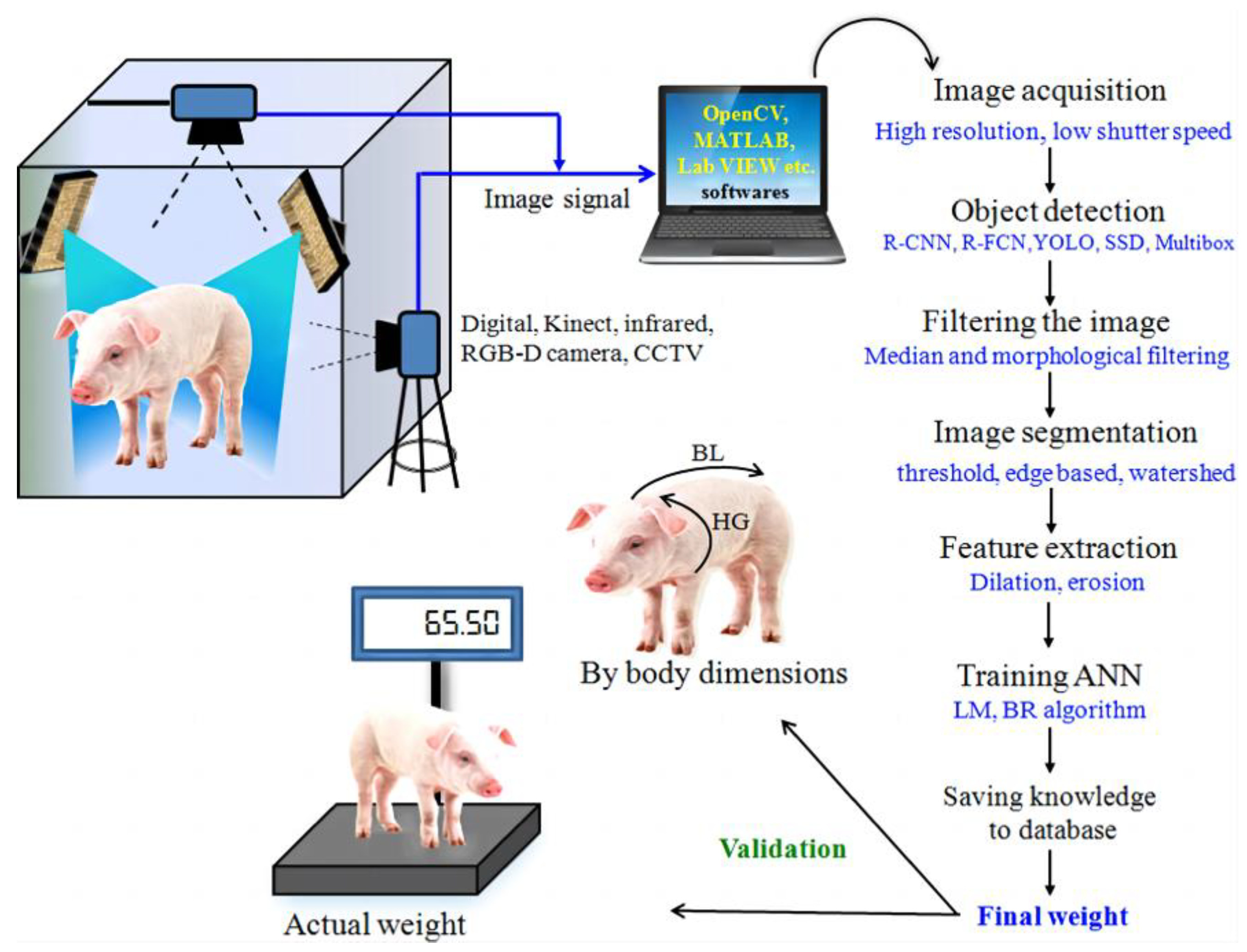

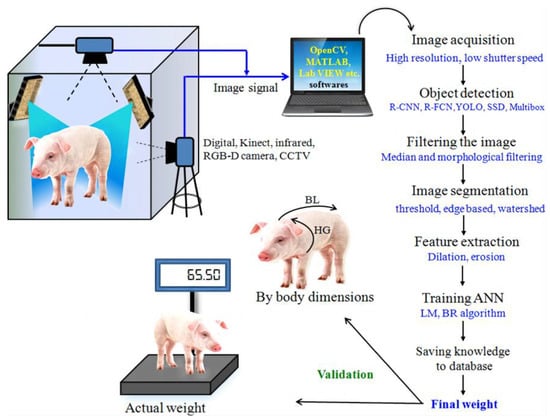

Non-contact monitoring of pig weight has progressively become a hotspot for research, with weight estimation primarily relying on 2D and 3D images [12,13,14,15]. In 2D imaging, segmentation methods are employed to delineate the pig’s outline, while weight estimation is based on a relationship model using geometric parameters and actual body weight, as illustrated in Figure 1. In 2017, Okinda [16] used HD cameras to capture overhead images of pigs, applied the background difference method to eliminate background interference, and devised a weight estimation model using linear regression and adaptive fuzzy reasoning. This model necessitates manual selection of optimal inference images due to the influence of porcine posture and movement. In 2018, Suwannakhun [17] et al. used an adaptive threshold segmentation method to identify the pig’s body region and developed a body weight estimation model leveraging eight features, including color, texture, center of mass, main axis length, minimum axis length, eccentricity, and area, to mitigate the impact of pig movement. Nevertheless, 2D methods for estimation often fall short in accurately estimating the animal’s volume or parameters associated with its body surface. Furthermore, variations in camera parameters, object distance, and lighting conditions have a significant impact on the measurement findings, resulting in limited adaptability and posing challenges in enhancing accuracy in real-world multi-scene applications. In the context of weight regression estimation, the conventional regression approach exhibits limited capability in feature learning and fails to comprehensively capture the interplay among the defining factors of pig body size.

Figure 1.

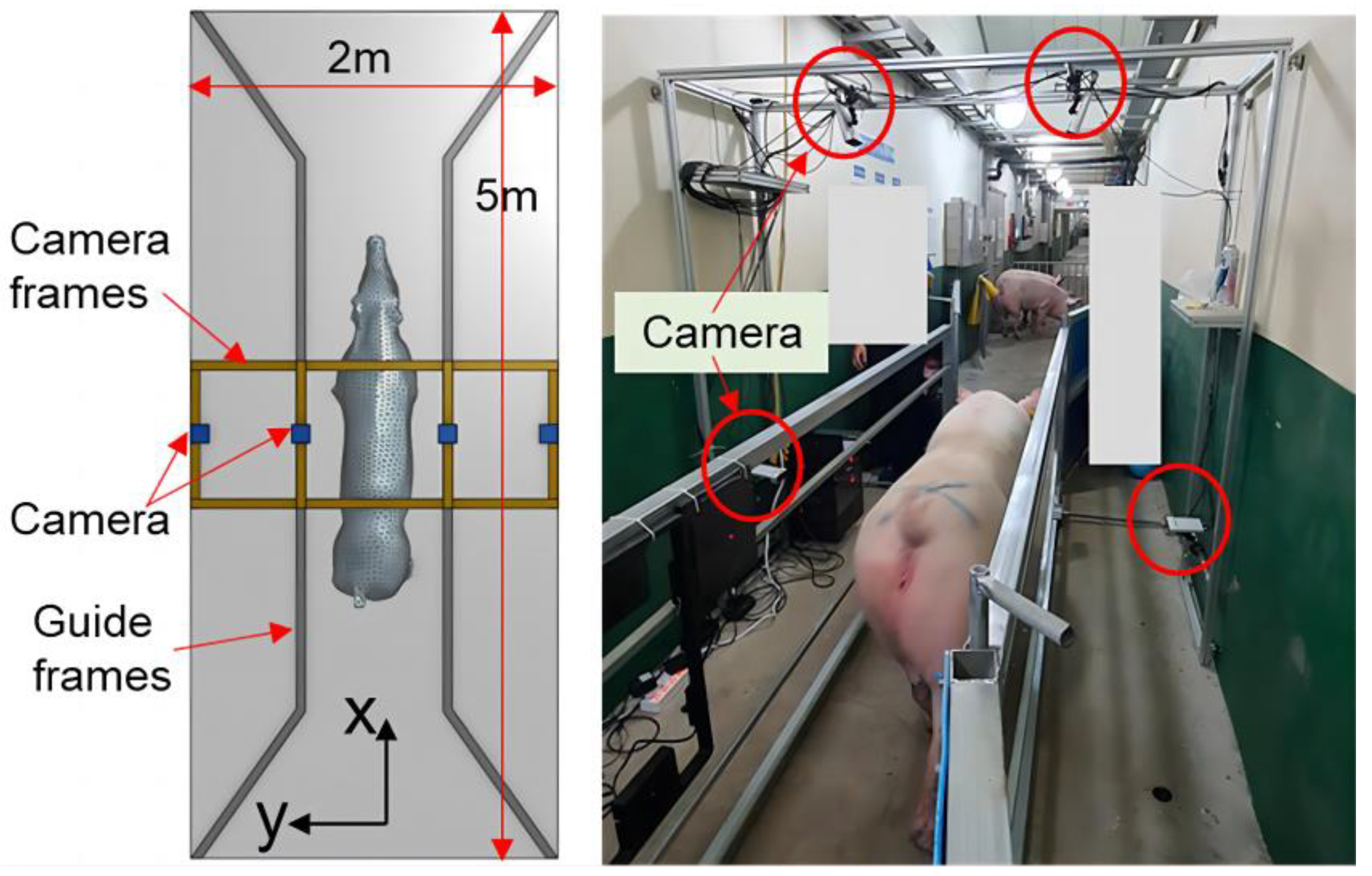

The fundamental stages involved in 2D image processing for the purpose of estimating pig weight [12].

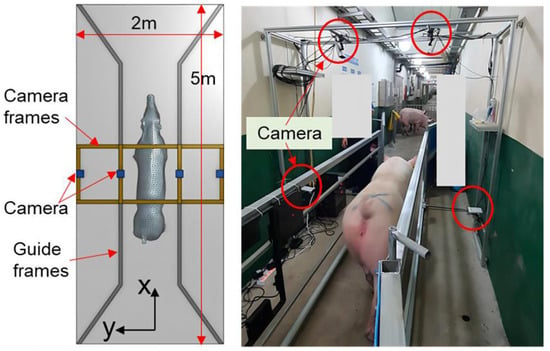

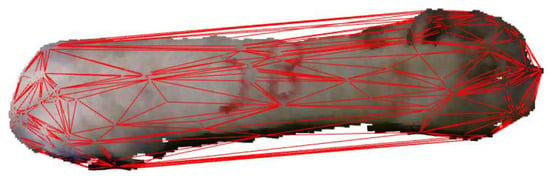

Research aimed at estimating the weight of pig based on three-dimensional data has been undertaken to address these problems. Liu [18] et al. used binocular vision technology to collect three-dimensional image data of pigs. They extracted growth-related data, including chest circumference, buttock height, body length, body height, and body width, through image processing methods. After that, they developed the estimation model based on linear functions, nonlinear functions, machine learning algorithms, deep learning algorithms, among others, to estimate pig weight. The correlation coefficient with the measured value was 97%, and the average relative error was 2.5%, outperforming models solely relying on back area measurements. As the price of depth cameras continues to fall, and their performance steadily improves, they have been increasingly employed in studies of animal body measurement, body condition and weight estimation, automatic behavioral identification, etc. In 2021, He [19] et al. used a D430 camera to capture depth images of pigs. They employed instance segmentation, distance independence, noise reduction, and rotational correction to eliminate background interference and constructed a BotNet-based model for estimating the body weight of pigs. In 2023, Kwon [20] et al. introduced a method for accurately estimating pig weight using point clouds, as depicted in Figure 2. They used a rapid and robust reconstructed grid model to address the problem of unrecognized noise or missing points and optimized deep neural networks for precise weight estimation. While the aforementioned techniques have demonstrated enhanced precision in estimating the weight of pigs, it is crucial to acknowledge that the hardware systems employed in these experiments are intricate. In real-world production settings, the available space is often limited, hindering the ability to conduct multi-directional or azimuthal livestock detection. Meanwhile, data collection conditions may not be optimal, posing challenges in meeting current manufacturing demands.

Figure 2.

Configuration of the acquisition system for point clouds [20].

In conclusion, there is a pressing requirement for a precise and cost-effective intelligent system to estimate pig weight accurately. Such a system should be applicable to large-scale pig farms, facilitating the monitoring and analysis of pig growth processes and serving as an effective tool for precise pig feeding and management.

The primary contributions of this article can be summarized as follows:

- (1)

- Point cloud filtering, the denoising of large-scale and small-scale discrete points is achieved using statistical filtering and DBSCAN point cloud segmentation, respectively, resulting in the accurate segmentation of the pig back image. What is more, the voxel downsampling approach is employed to reduce the point cloud data’s resolution on the pig’s back, so as to reduce the volume of point cloud data while preserving the inherent characteristics of the original dataset.

- (2)

- In terms of the weight estimation, the plane projection technique is employed to determine the body size of the pig, while the convex hull detection method is utilized to eliminate the head and tail regions of the pig. The point set information in the pig point cloud data is input into the convolutional neural network to construct the pig weight estimation model, which realizes the full learning of features and accurate estimation of pig weight.

- (3)

- As for actual breeding, the estimation method constructed in this research overcomes the problems of high cost, data processing difficulties, space occupation, and layout restrictions while ensuring the estimation accuracy, which can provide technical support for precise feeding, selection, and breeding of pigs.

This paper is structured as follows: Section 2 introduces the point cloud data collection, noise reduction segmentation, feature extraction, and weight estimation methods; in Section 3, the performance of denoising segmentation and the weight estimation model is analyzed. Section 4 discusses the comparison with existing methods, the limitations, improvements of the research, and outlines potential future work. Finally, Section 5 presents the conclusions.

2. Materials and Methods

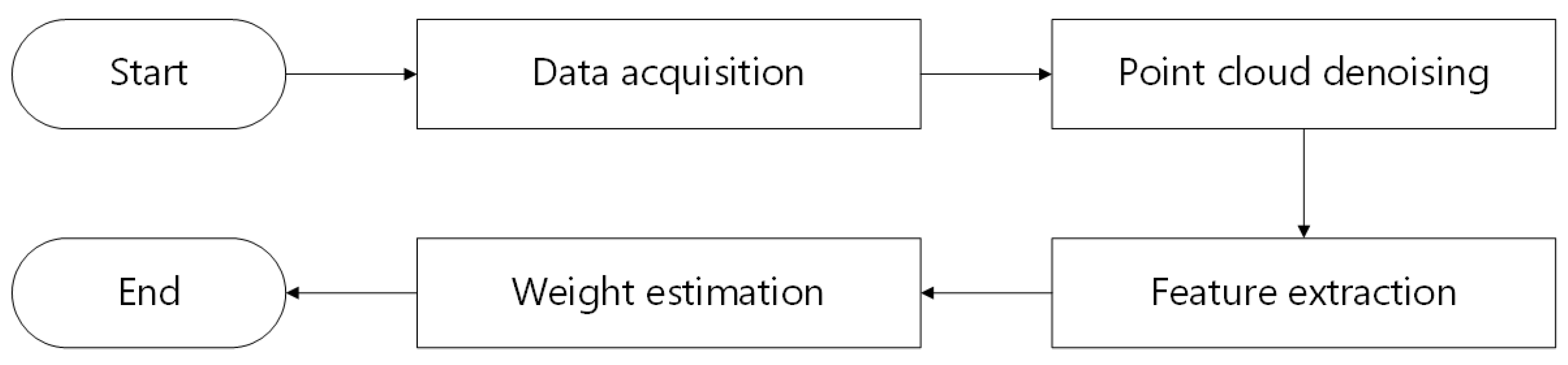

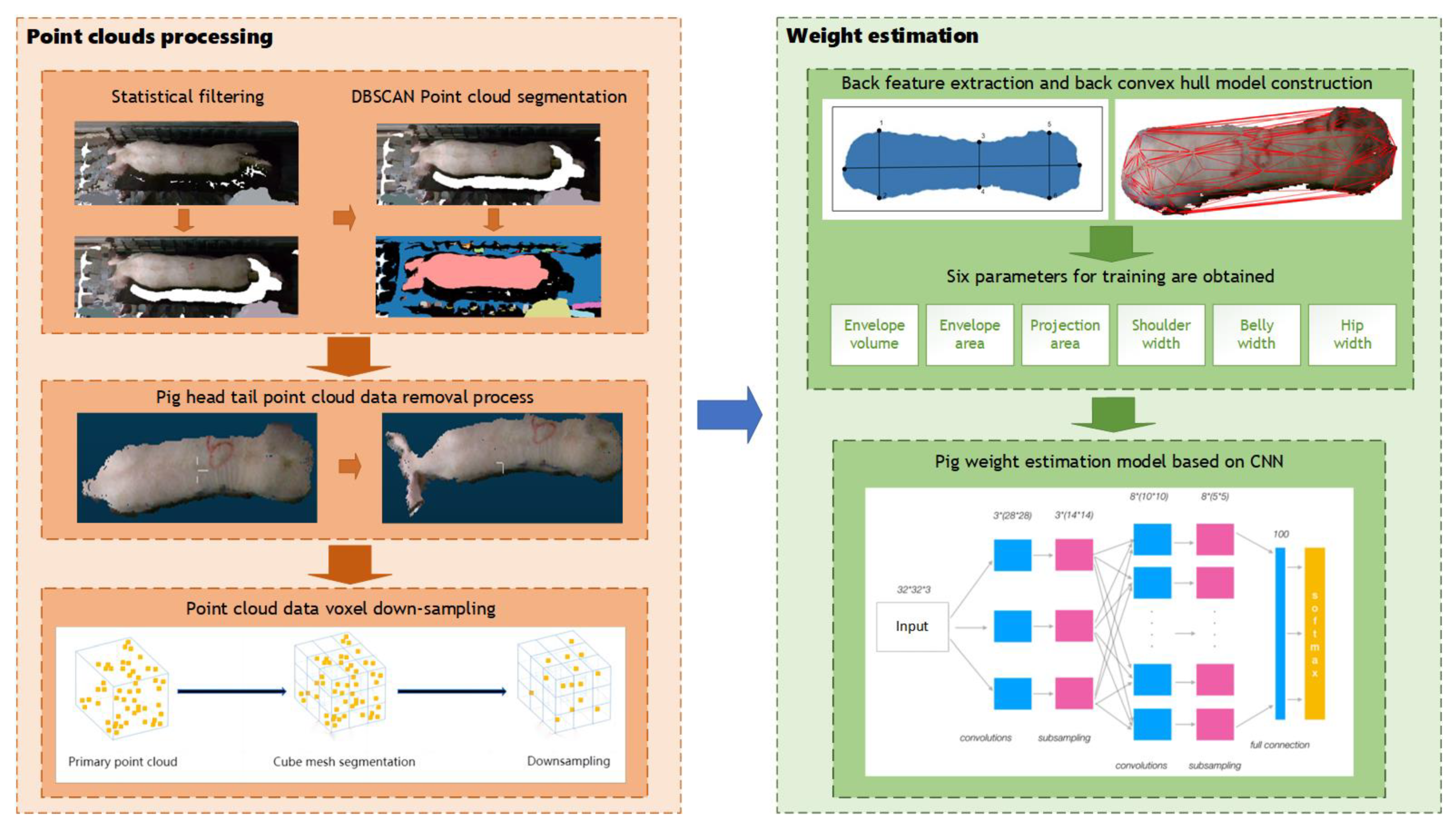

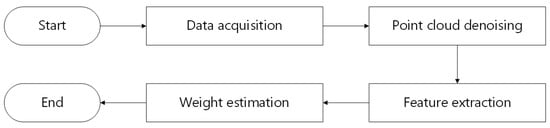

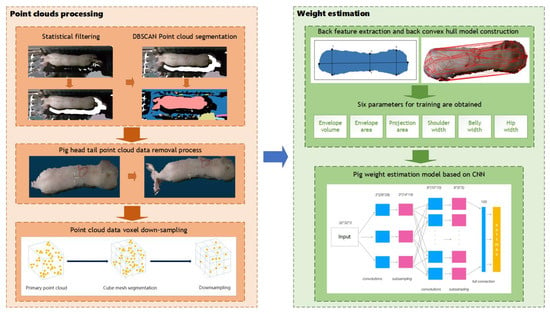

This section primarily describes the experimental objects and techniques employed. Figure 3 depicts the technical path of this research.

Figure 3.

The technical path.

2.1. Animals and Housing

The experimental period spanned from 7 June to 27 June 2022 and the experimental site was located in Wens Pig Farm, Xinxing County, Yunfu City, Guangdong Province, China. The experimental subjects were 198 pigs (Large White × Landrace breed), with body weights ranging from 190 kg to 300 kg. The equipment was installed in the passageway connecting the gestation house to the farrowing house of the farm, which had a width of 1.7 m and a height of 2.8 m. All experimental design and procedures conducted in this study received approval from the Animal Care and Use Committee of Nanjing Agricultural University, in compliance with the Regulations for the Administration of Affairs Concerning Experimental Animals of China (Certification NJAU.No20220530088).

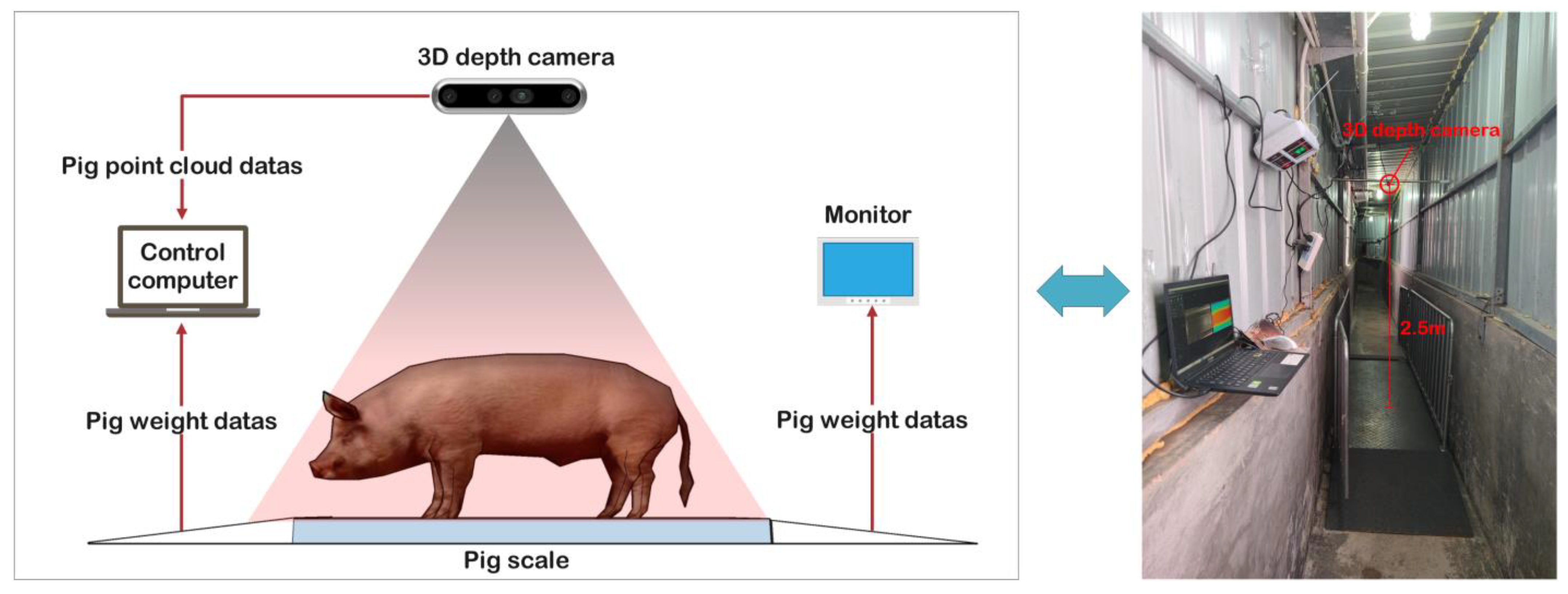

2.2. Data Acquisition

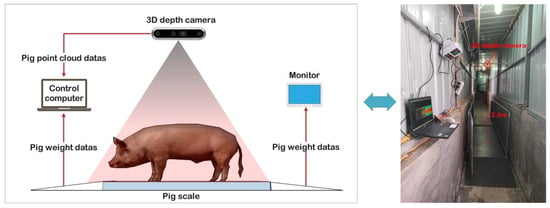

A 3D depth Intel RealSense D435i camera (manufactured by Intel Corp., Santa Clara, CA, USA) was used to acquire color and depth images. This camera features a complete depth processing module, a vision processing module, and a signal processing module, capable of delivering depth data and color information data at a rate of 90 frames per second. What is more, it was installed at 2.5 m directly above the XK3190-A12 weighing scale (manufactured by Henan Nanshang Husbandry Technology Co., Ltd., Nanyang, China). An illustration of the pig weight data acquisition system is presented in Figure 4.

Figure 4.

The pig weight data acquisition system.

2.3. Data Set Construction

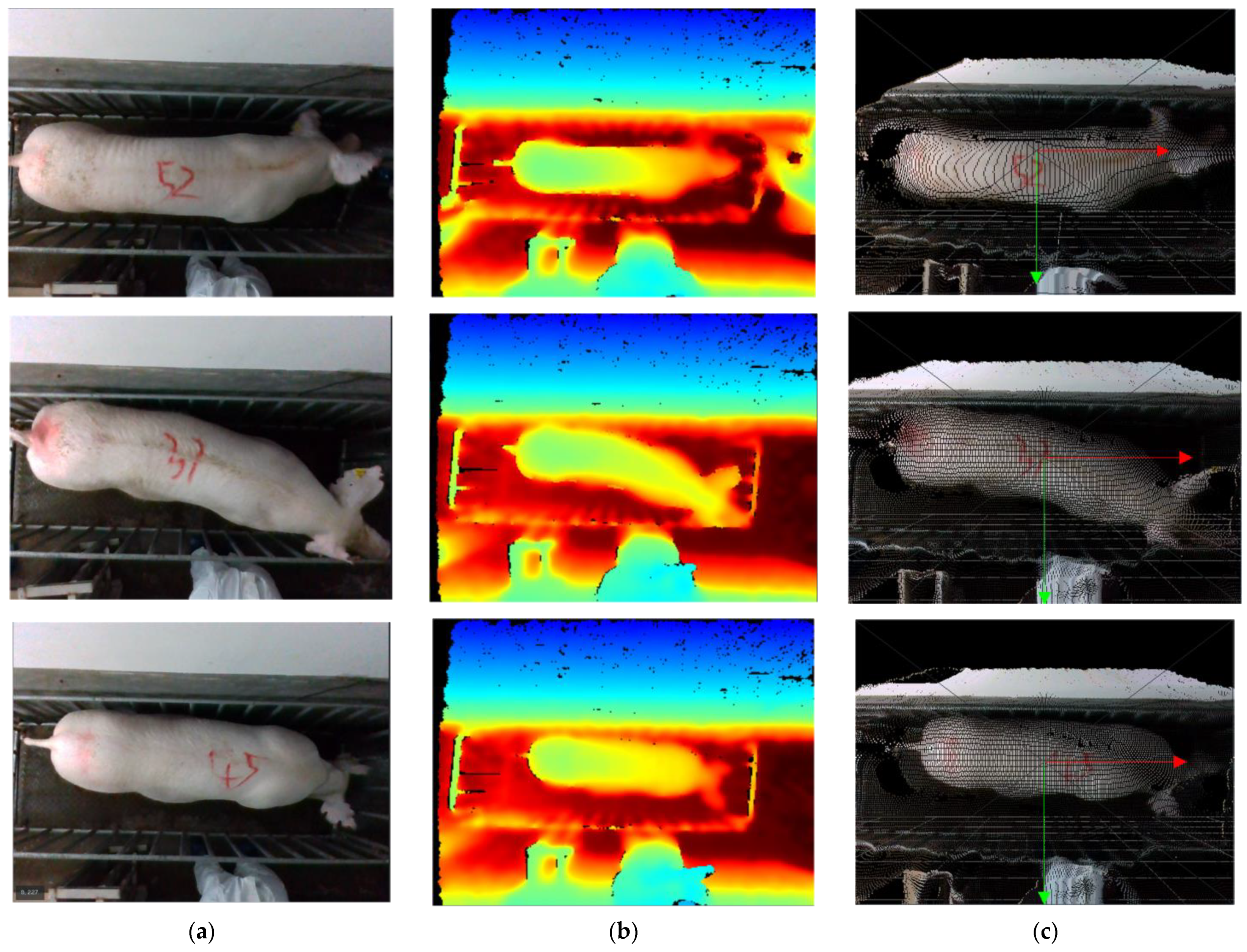

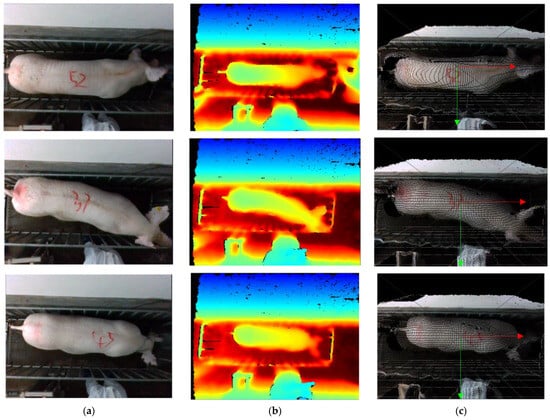

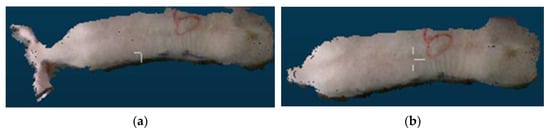

To improve the efficiency of data acquisition, this research used ROS parsing for video parsing to acquire 3D point cloud data in PLY format. Each data set comprises a color image and a depth image, captured at a resolution of 640 × 480 pixels and a frame rate of 30 frames per second. A portion of the dataset fragment is depicted in Figure 5.

Figure 5.

The dataset fragment. (a) Color images; (b) Depth images; (c) color images after mapping.

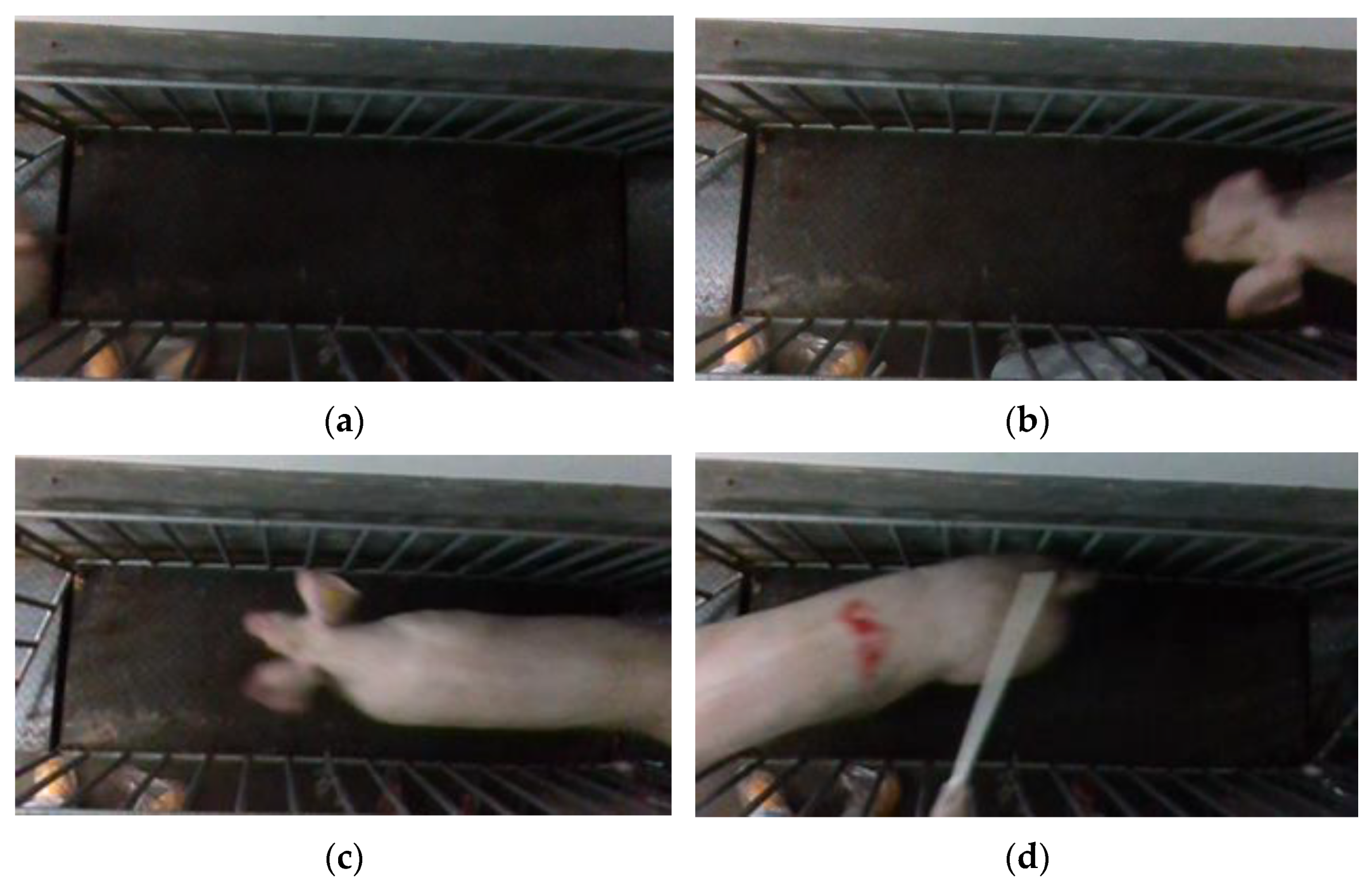

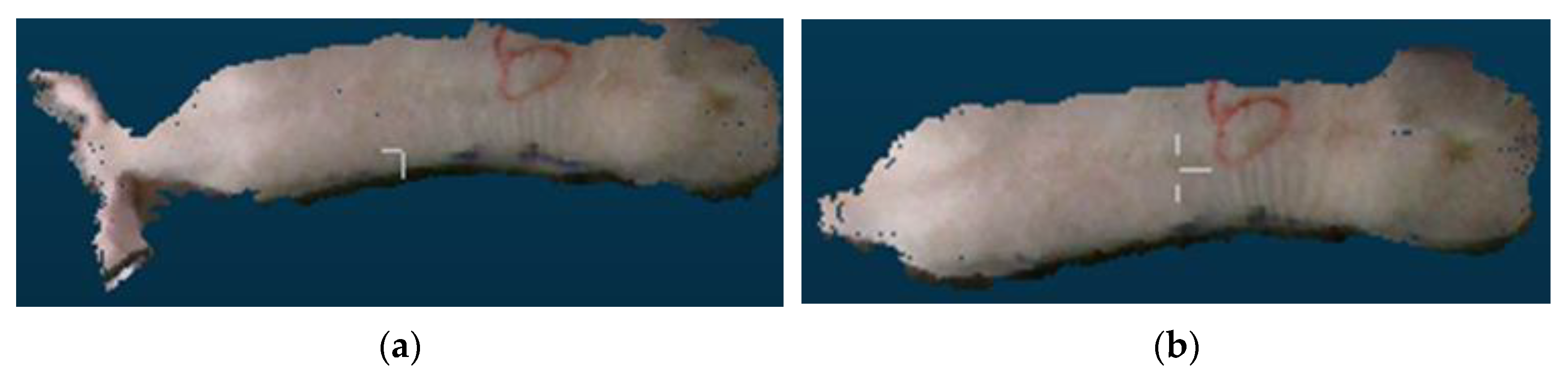

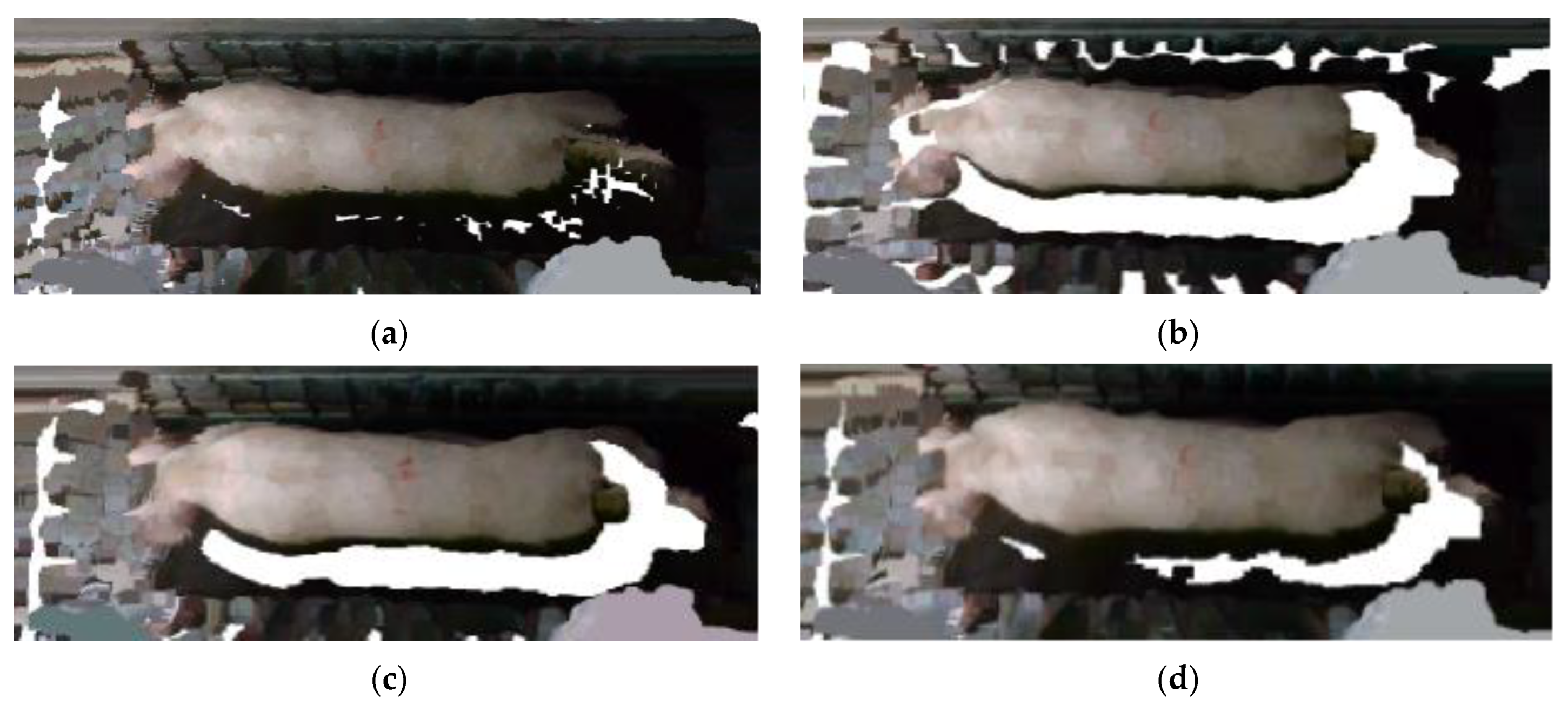

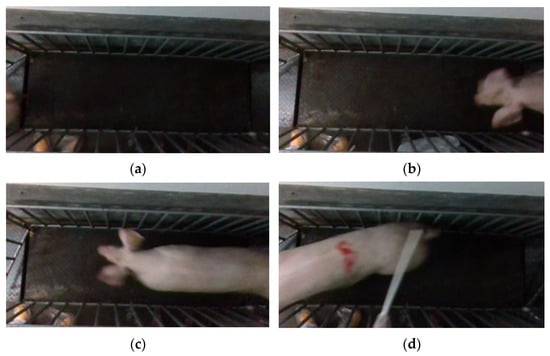

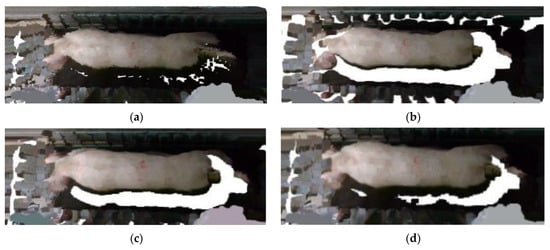

Point cloud files with missing point clouds and incomplete pig images were excluded, as shown in Figure 6. Only point cloud files featuring complete pig images were retained. To ensure data sufficiency and meet the requirements of the pig weight estimation model, 50 point cloud files were selected for each pig. Finally, a total of 10,000 sets of pig 3D point cloud data were acquired.

Figure 6.

Incomplete pig picture. (a) No pig; (b) pig with missing body; (c) pig with missing rump; (d) pig with missing head.

2.4. Model Construction

2.4.1. Overall Process

The primary components of the method proposed in this research are point cloud segmentation and weight estimation, as illustrated in Figure 7. In order to address the adhesion problem between pigs and the background in the segmentation of pig point cloud, statistical filtering and DBSCAN was used to separate pig point cloud from background. To obtain complete point cloud data of the pigs’ backs, the plane projection is employed to capture images of the pig’s back. After that, convex hull detection was applied to remove extraneous head and tail point cloud data. To reduce the computational complexity of subsequent processing, voxel down-sampling was used to reduce the quantity of point cloud data on pigs’ backs. Finally, for precise pig weight estimation, we devised a weight estimation model based on back parameter characteristics and a convolutional neural network (CNN) during the weight estimation phase.

Figure 7.

Overall process.

2.4.2. Data Denoising

The collected data contain some three-dimensional point cloud noise, attributed to occluders, equipment limitations, and external conditions [21]. This noise can compromise the accuracy of expressing useful information, which results in a large error in pig weight estimation. To address this issue, the noise points within the point cloud were separated into large-scale outlier points and small-scale discrete points based on their distribution characteristics. Prior research has demonstrated that employing a diverse range of hybrid filtering algorithms tailored to specific attributes of noise might yield superior outcomes [22]. In this research, a statistical filter was used to remove large-scale discrete points, and DBSCAN was used to remove small-scale discrete points.

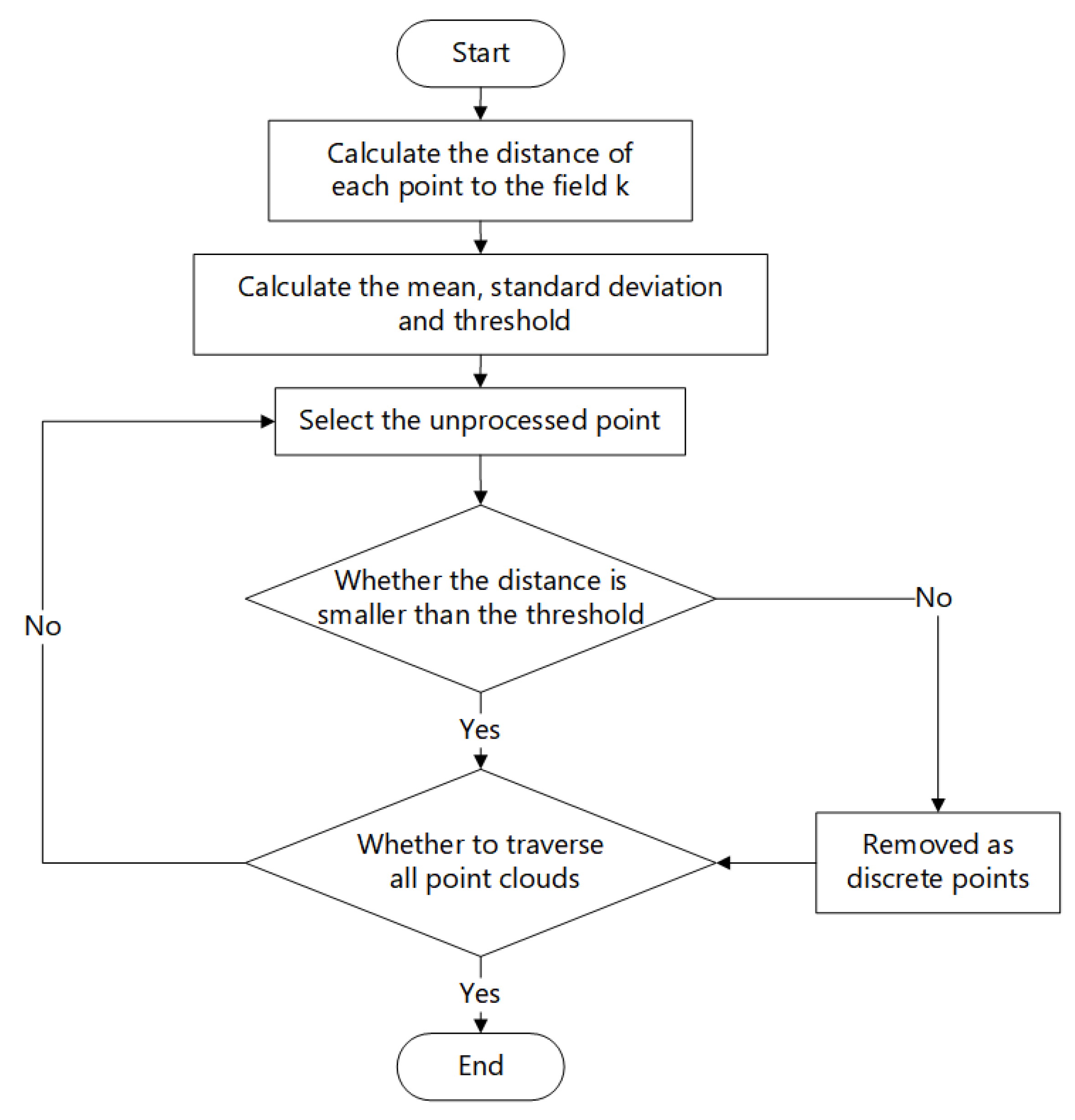

Preserving the local features of point cloud data is of utmost importance when capturing the physical characteristics of pigs. Within the collected 3D point cloud data, there exists large-scale noise, primarily consisting of sparse points that deviate from the pig’s body point cloud and hover above it. What is more, there are smaller, denser point clouds that are more distant from the center of the pig’s body point cloud. In the present, for the removal of such discrete points, techniques like passthrough filtering, statistical filtering, conditional filtering, guided filtering, and others are usually employed [23]. Passthrough filtering serves as an effective manner in removing noise in large-scale backgrounds. What should be noticed is that it almost does not work when it comes to randomly generated discrete noise and may even lead to inadvertent removal of pig point cloud data.

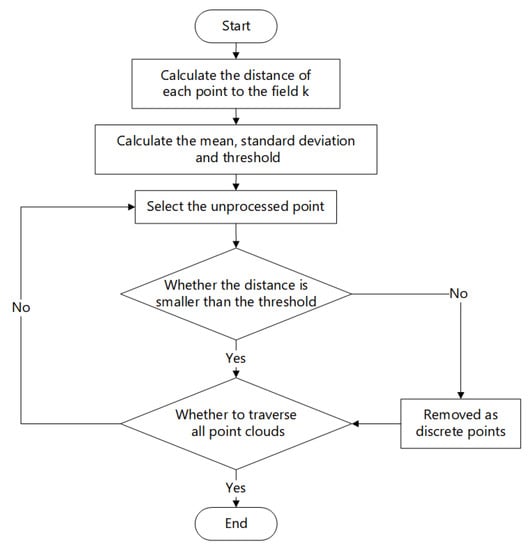

This study mainly employs the open-source library Open3D (version 0.13.0) to process three-dimensional point cloud images. Open3D is an open-source library that is available in the rapid development of software for handling 3D data. The statistical filtering algorithm employs the distance between the queried point in the point cloud data and all neighboring point sets in its vicinity to perform statistical analysis and eliminate large-scale discrete points [24]. When the point cloud density of a particular location falls below the predetermined threshold, these points are removed as discrete points. The exact procedure is outlined as follows:

Read into the 3D point cloud data set , and establish the k-d tree data structure of the point cloud data.

For each point in the point cloud, define the required nearest neighbor parameter , establish the neighborhood based on the value of , and calculate the average distance from the nearest nearest neighbor, as shown in Equations (1) and (2), where is the spatial distance between point and point , and is the average distance between point and its nearest neighbors.

On the basis of the last step, calculate the average distance of the average distance of all neighbors and the standard deviation , as shown in Equation (3):

Calculate whether the average distance between the current and the k nearest neighbor is greater than the set threshold L. When > L, delete point ; when < L, retain point . As shown in Equation (4), σ is the calculated coefficient, which is generally valued according to the distribution of the cloud data of the measured point.

Traverse all points in the point cloud for calculation until all point cloud data is processed and filtered point cloud data is output. The statistical filtering process is presented in Figure 8.

Figure 8.

Statistical filtering process diagram.

Using statistical filtering for denoising 3D point cloud data can remove some of the large-scale noise, though there still exists some. These noises mixed with the pig’s data point can interfere with the surface smoothness of 3D model reconstruction. To address this issue, a secondary denoising step is required, incorporating additional filtering algorithms. Employing a hybrid filtering approach serves a dual purpose—it removes small-scale noise and mitigates the remaining large-scale noise. Clustering algorithms help tackle small-scale noise, to certain degree. By identifying points with high similarity within clusters, noise points can be distinguished from valid data points. Clustering algorithms usually include K-Means clustering, DBSCAN clustering, region growing clustering, etc. K-Means clustering relies on geometric distance and proves advantageous when dealing with point clouds where the number and location of seed points are known [23]. However, when applied to interconnected point clouds, it may cause issues such as over-segmentation or under-segmentation due to the reliance on single growth criteria or stopping conditions. In contrast, DBSCAN clustering leverages point cloud density for clustering. During the clustering process, it randomly selects a core object as a seed and proceeds to identify all sample sets that are density-reachable from this core object. This process repeats until all core objects are clustered. DBSCAN clustering excels in situations where different regions of the pig’s body exhibit similar point cloud densities, coming up with robust clustering results.

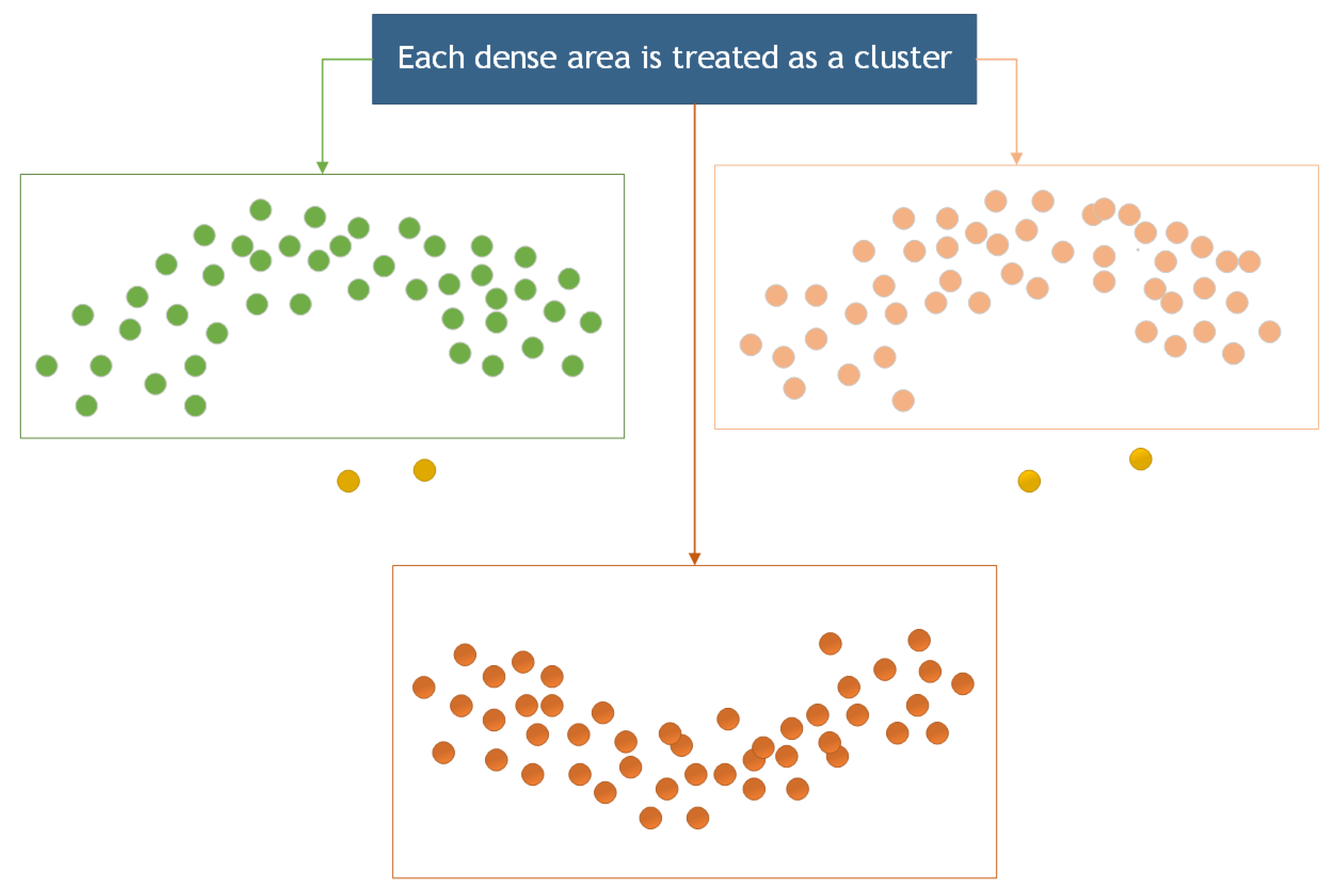

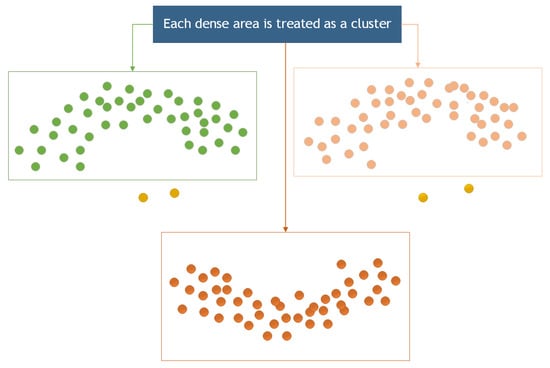

The density clustering algorithm has been employed to remove noise from a point cloud because of its sensitivity and effectiveness in recognizing small-scale noise points [25]. In this section, the Density-Based Spatial Clustering of Applications with Noise (DBSCAN) algorithm was for noise removal from the point cloud. DBSCAN is an algorithm for density-based spatial clustering that clusters regions with sufficient density [26]. It also defines a cluster as the largest set of density-connected points, allowing for the identification of clusters with arbitrary shapes in a noisy spatial database [27,28], as illustrated in Figure 9.

Figure 9.

Cluster region division.

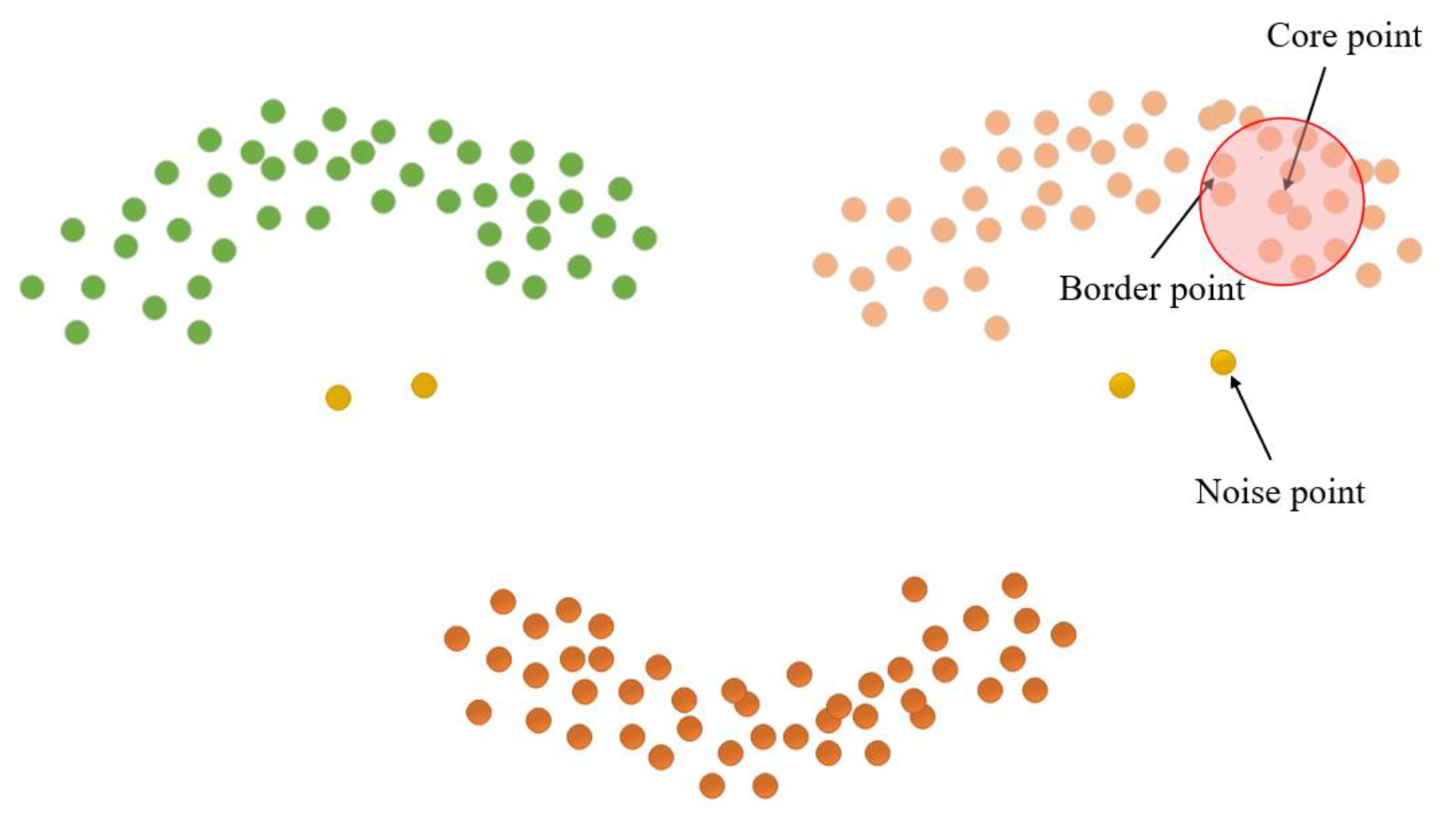

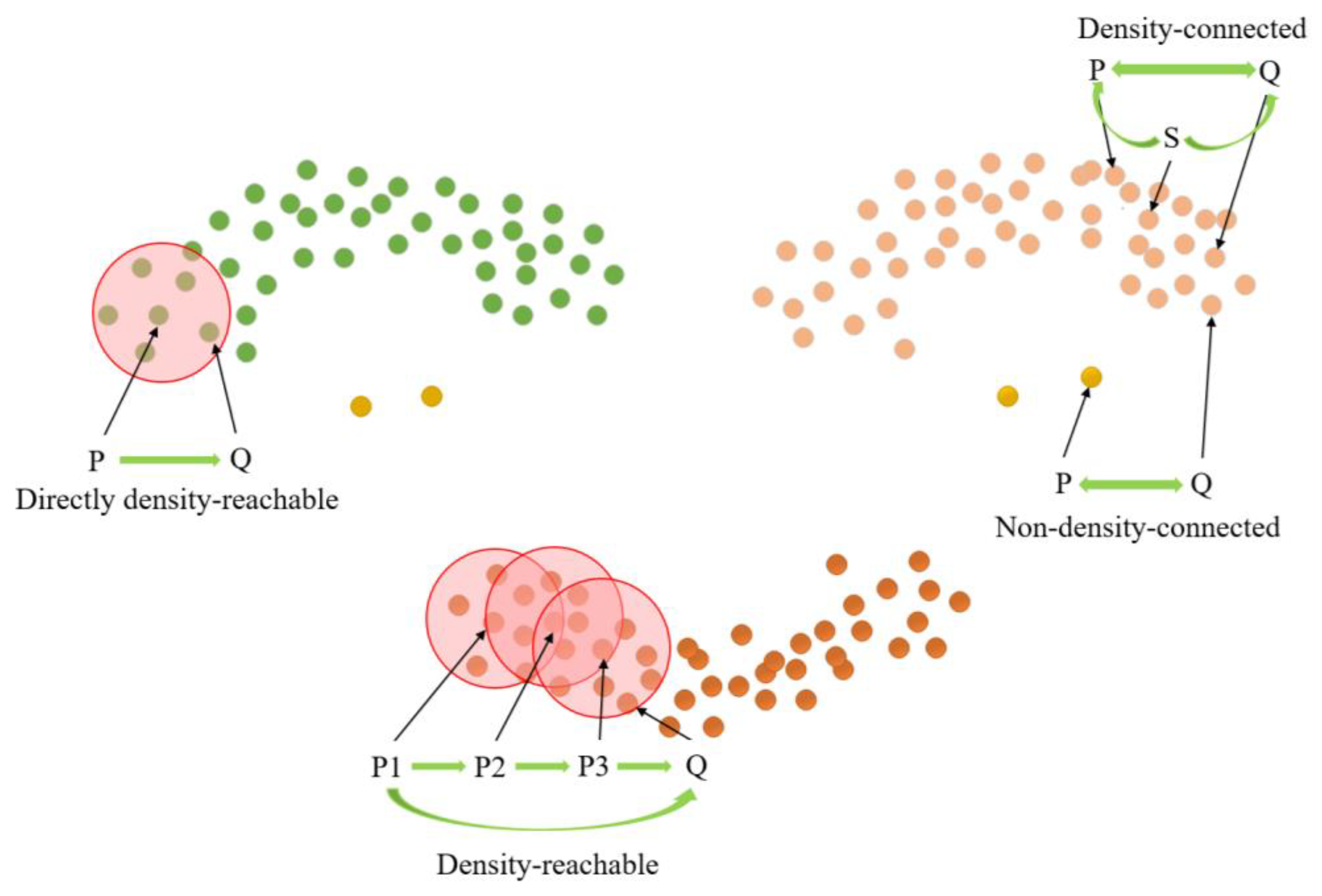

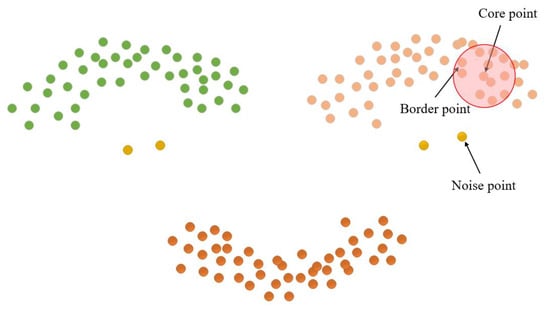

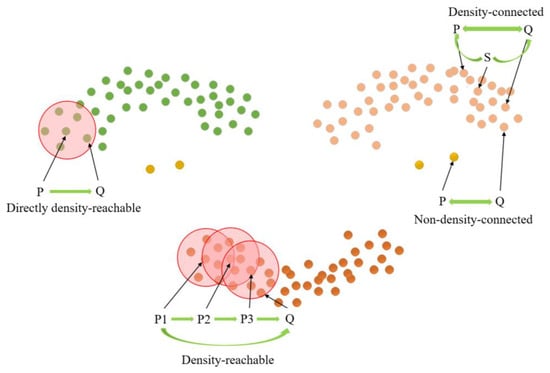

The length parameter epsilon (Eps) and minimum points (MinPts) are crucial factors in determining the clustering effect of the DBSCAN clustering algorithm [29]. MinPts sets the threshold for the minimum number of data points needed to form a density region, while Eps quantifies the distance between a data point and its neighbors. Core points, border points, and noise points can be derived from these two parameters, as illustrated in Figure 10. Notably, there exist four relationships between these three data points: Directly density-reachable, density-reachable, density-connected, and non-density-connected, as illustrated in Figure 11.

Figure 10.

Cluster point classification.

Figure 11.

Cluster density relation.

The pseudocode for the clustering algorithm based on DBSCAN used in this research is shown in Algorithm 1.

| Algorithm 1. The pseudocode for the clustering algorithm based on DBSCAN |

| Input: Datasets Ds, parameter c and MinPts Output: Clustering result Cl DBSCAN (Ds, c, MinPts) Cl ← 0 |

| every point in Ds is unlabeled

for each unlabeled point p ∈ Ds do mark p as labeled set c_neighborhood as p’s c_neighborhood if sizeof (c_neighborhood) < MinPts then mark p as noise point else Cl ← Cl + 1 add p to Cl neighbor ← c_neighborhood for each point q in neighbor if q is unlabeled mark q as labeled set c_neighborhood’ as q’s c_neighborhood if sizeof (c_neighborhood’) >= MinPts then neighbor ← neighbor ∪ c_neighborhood’ end if if q is not assigned to any cluster add q to cluster Cl end if |

| end for end if |

| end for |

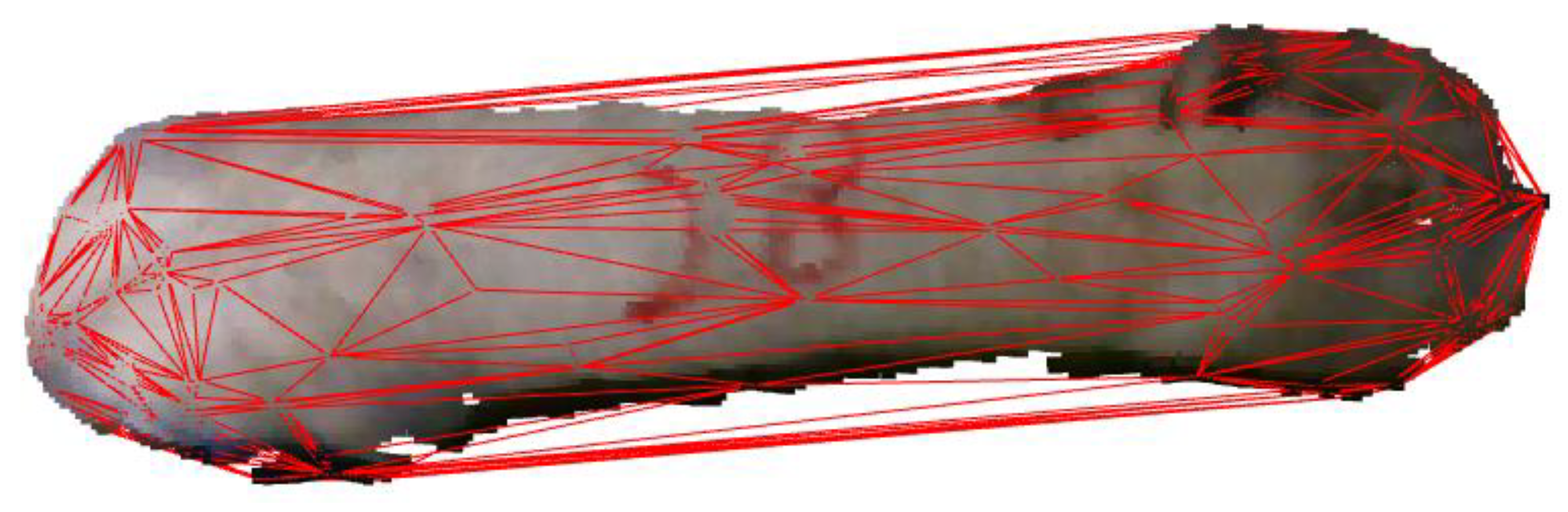

2.4.3. Splitting the Head and Tail

It is necessary to remove the head and tail point cloud data because the poses of pigs’ heads and tails frequently change, which has a remarkable impact on such essential characteristic parameters as the envelope volume, envelope surface area, back area, and body length. Initially, the data from the point cloud were projected onto a plane to create a 2D outline of the pig’s back. The minimum envelope polygon of the pig’s body was derived from the envelope vertices and line segments corresponding to the pig’s head and tail. The sag degree of the pig contour was calculated based on the point where the contour envelope overlapped with the pig’s contour and the pixel distance between neighboring points and the pig’s contour. A deeper depression indicated a higher probability of head and tail split points. Given the fixed direction of the pig’s head, the short axis of some particles in the pig’s body was chosen as the dividing line, and the point furthest from this axis was considered the dividing point of the tail, while the point closest to the axis was considered the dividing point of the head. Figure 12 depicts the comparison before and after head and tail removal.

Figure 12.

Comparison before and after head and tail removal: (a) original image; (b) processed image.

2.4.4. Point Cloud Sampling

This research performs voxel down-sampling of point cloud data because the amount of 3D point cloud data is exceedingly large and irregular, which has a profound effect on the model’s input. The particular procedure is as follows [30]:

Based on the point cloud data within the dot cloud files, the maximum and minimum values of X, Y, Z in the 3D space coordinates are obtained. The maximum values of the 3D coordinates are represented as xmax, ymax, zmax, while the minimum values are denoted as xmin, ymin, zmin. We used NumPy libraries to construct a variation of the synchronized function, generating an n × 3 matrix concerning x, y, z. Each entry represents the 3D coordinates xyz of the point, with the relationships between z, x, and y as follows:

The side length of the regular cube grid is represented as r. The smallest bounding body of the point cloud data is determined by the maximum and minimum values of 3D coordinate points in the point cloud data.

Calculate the size of the voxel grid.

Calculate the index of each regular small cube grid, and the index is represented by h as follows:

Sort the elements in h by size, and then calculate the center of gravity for each regular cube to represent each point in the small grid.

2.4.5. Back Feature Extraction

Several studies have demonstrated a correlation between pig body measurement, body weight, and other growth parameters. A relationship model can be used to estimate the body weight of pigs in an effective manner [31,32]. Body size parameters were extracted based on critical points along the back contour of the pigs, and a relationship model between body size and body weight was established.

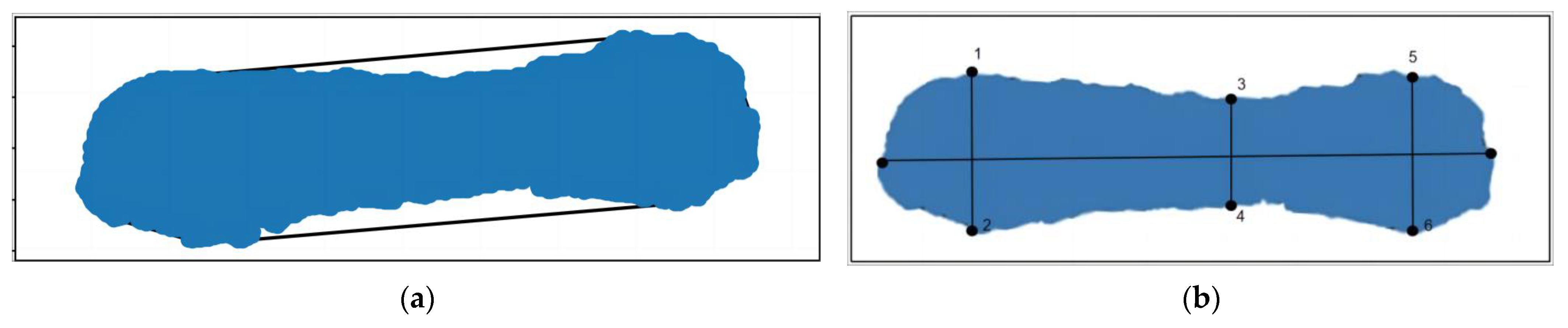

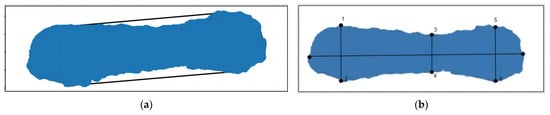

During the process of acquiring pig 3D point cloud data, the pig’s body is often inclined, making it difficult to extract body size parameters. In this research, a minimum horizontal bounding box was created for the point cloud on the pig’s back. The bounding box maintained the parallelism of the border with the X and Y axes, ensuring that the rear image remained horizontal. As shown in Figure 13, the 3D point cloud data were projected onto the horizontal plane to obtain the horizontal projection of the point cloud from the pig’s back.

Figure 13.

Pig point cloud projection. (a) Before horizontal processing; (b) after horizontal processing.

The six key points along the pig’s outline were extracted. represented the shortest distance between the two ends of the outline, while represented the greatest distance between the ends of the left side and signified the longest distance between the ends of the right side. The calculation formula is as follows, where x and y represent the coordinates of the respective points on the plane:

Then, the convex hull model of the pig back was constructed, and the point cloud of the pig back was encapsulated in the form of minimum convex hull so that the area and volume of the convex hull model could be calculated. The extraction results are depicted in Figure 14.

Figure 14.

Pig back envelope.

2.4.6. Weight Estimation

Nowadays, methods for estimating pigs’ weight mainly include three groups: classical regression methods (including Linear Regression, Polynomial Regression, Ridge Regression, and Logistic Regression), machine learning methods (including Decision Tree Regression, Random Forest Regression, and Support Vector Regression, SVR), and deep learning methods (like Multi-Layer Perceptron, MLP, and Convolutional Neural Network, CNN). Classical regression models, though commonly used, may be instable due to the nonlinear relationships and complex features among body feature parameters. Machine learning regression methods, though flexible in handling nonlinear relationships, can be sensitive to outliers and noise within the training data. In contrast, deep learning-based regression methods excel in handling nonlinear patterns and demonstrate great scalability compared to the first two groups of regression estimation methods. CNN possesses strong data-solving capabilities and can delve deeply into data relationship information [33]. As a network with multiple layers, CNN consists primarily of input layer, convolutional layer, down-sampling layer, fully connected layer, and output layer [34]. Its fundamental concept lies in the use of neuron weight sharing, which reduces the diversity of network parameters, simplifies the network, and enhances execution efficiency [35].

Input layer: The initial sample data are input into the network to facilitate the feature learning of the convolutional layer, and the input data are preprocessed to ensure that the data align more closely with the requirements of network training.

Convolution layer: In this layer, the input features from the previous layer undergo convolution with filters, and the biased top is added. Nonlinear functions are applied to obtain the final output of the convolutional layer.

The output of the convolution layer is expressed as follows:

where represents the activation value of feature in layer l − 1, represents the convolution kernel of layer l feature and the previous layer feature , while B denotes the bias value. describes the weighted sum of feature in the l layer, and represents the activation function, with indicating the convolution operation.

All outputs are connected with the adjacent neurons in the upper layer, which helps train the sample features, reduce the parameter connections, and improve learning efficiency.

Down-sampling layer: In this layer, the output features are processed via pooling using the down-sampling function. After that, weighted and biased calculations are performed on the processed features to obtain the output of the down-sampling layer:

where represents the weight coefficient, and down(·) is the down-sampling function.

Fully connected layer: In the fully connected layer, all two-dimensional features are transformed into one-dimensional features, which serve as the output of the layer. And the output of the layer is obtained through weighted summation:

Output layer: The main job is to classify and identify the feature vector obtained by the CNN model and output the result.

The training process of CNN mainly involves two phases: the forward propagation phase and the backward propagation phase. During the former one, input data are processed through intermediate layers to generate output values and a loss function. In the latter phase, when there is an error between the output values and the target values, the parameters of each layer in the network are optimized through gradient descent based on the loss function.

2.5. Model Evaluation

To test the performance of the pig weight estimation model, metrics such as the mean absolute error (MAE), mean absolute percentage error (MAPE), and root mean square error (RMSE) were calculated by comparing the actual weight of the pig with the estimated weight.

where P represents the estimated value of the model, R represents the actual value, N signifies the number of samples, and k denotes the current sample number.

3. Results

3.1. Performance Comparison of Different Point Cloud Filtering Methods

To investigate the filtering effects of different thresholds, a comparative experiment was conducted with thresholds of 1, 2, and 4, and neighborhood points of 30. Figure 15 depicts the filtering effects of distinct thresholds. When the threshold value is set to 0.1, there is excessive filtering of the pig point cloud, which leads to partial loss of pig head point cloud data. On the other hand, when the threshold is set to 2, most of the discrete points can be effectively eliminated. When the threshold is set to 4, only a small number of discrete points are removed, and the impact is not apparent. Consequently, a threshold value of 2 was selected for the statistical filtering parameter in this study.

Figure 15.

Comparison of statistical filtering effects: (a) Original image; (b) filtering effect with a threshold of 1; (c) filtering effect with a threshold of 2; (d) filtering effect with a threshold of 4.

To compare the filtering effects of different filters, the guided filter was used as a comparison in this research. The guided filter is a kind of edge-preserving smoothing method commonly employed in image enhancement, image dehazing, and other applications [36]. Figure 16 depicts the effect of guided filtering. The comparison reveals that while the guided filter was capable of preserving the entire point cloud data of the pigs, it did not filter the discrete point cloud data from the surrounding environment of the pigs. That is why the filtering effect was worse than that of statistical filtering.

Figure 16.

Comparison of guided filtering effect: (a) Original image; (b) guided filtering effect image.

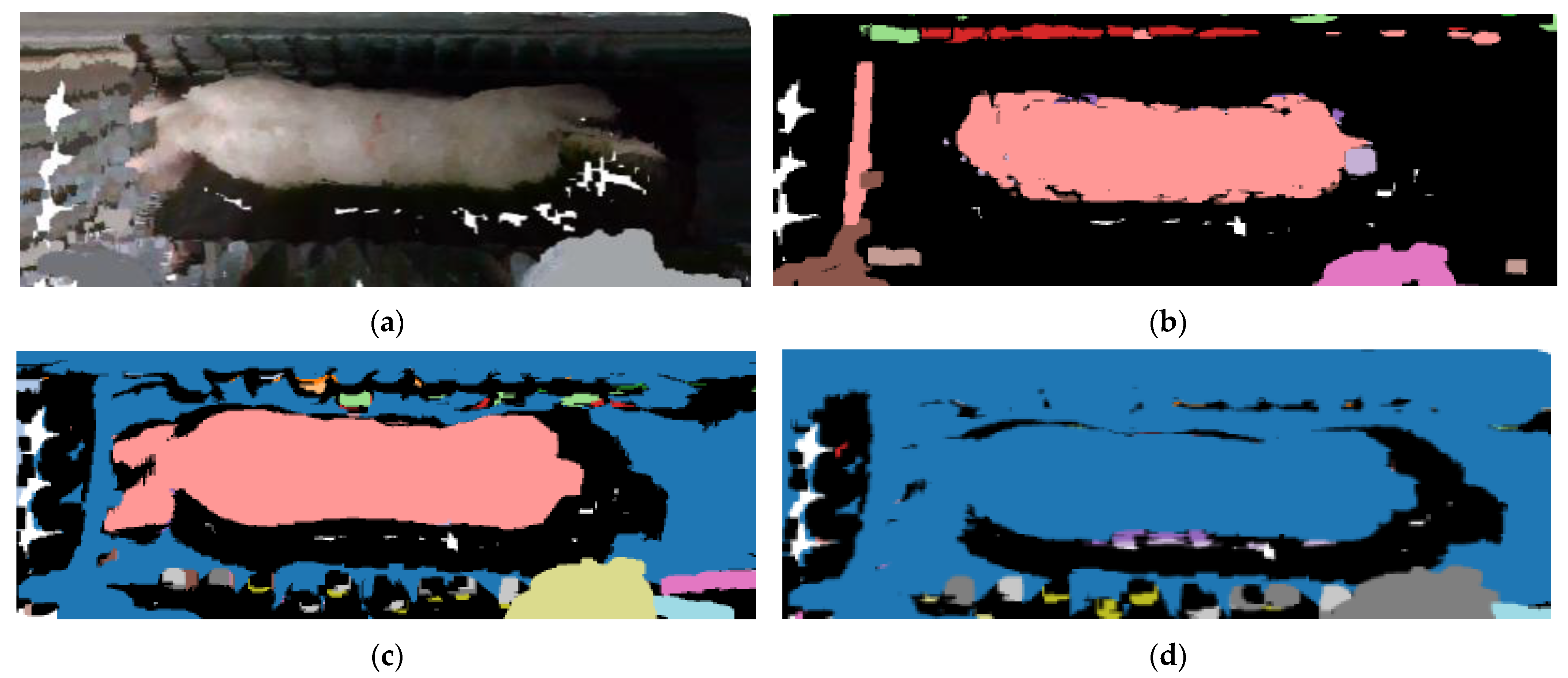

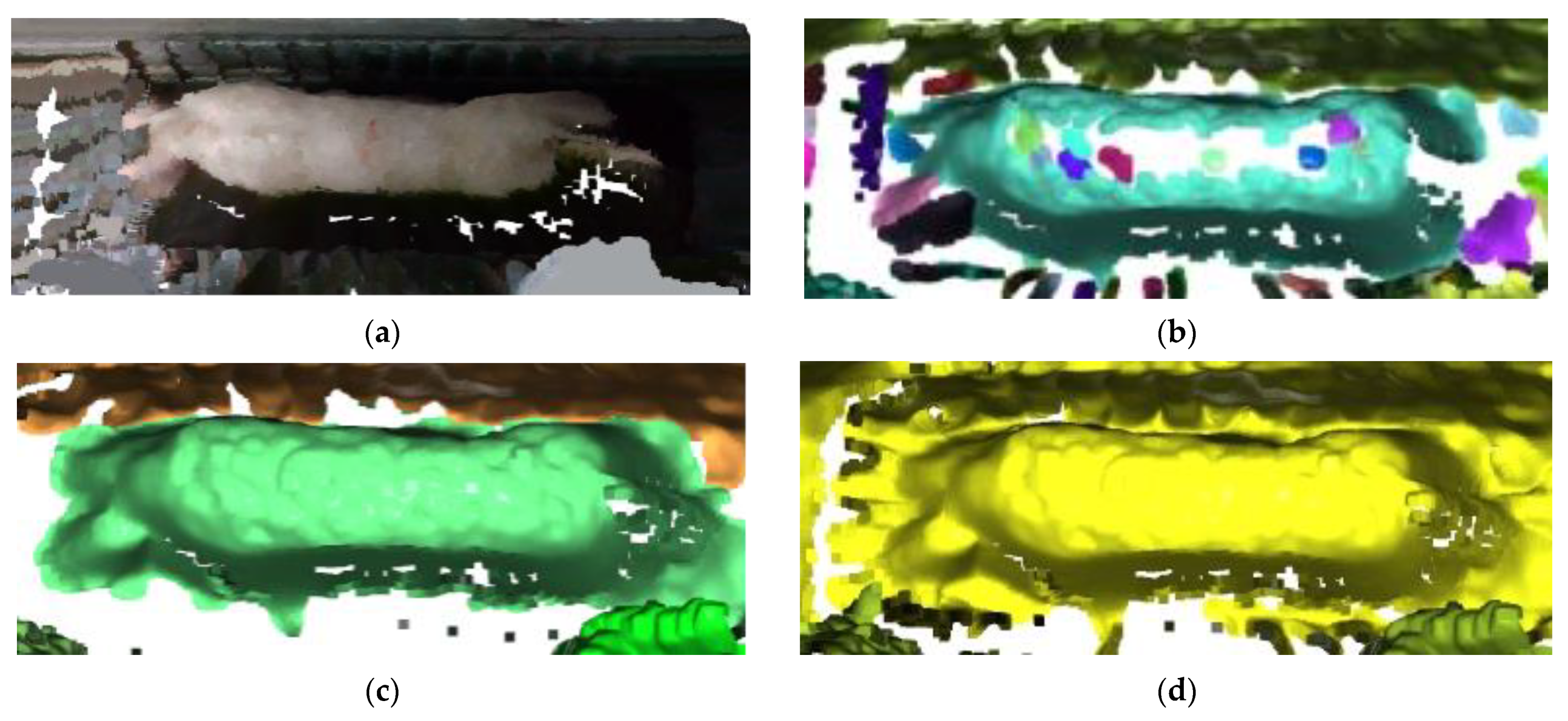

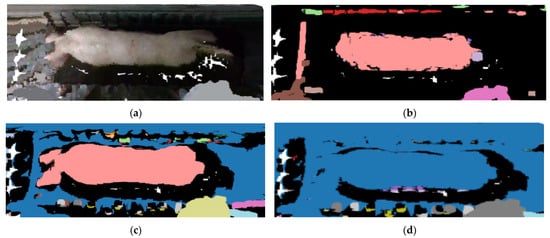

3.2. Performance Comparison of Different Segmentation Methods for Point Cloud

For the purpose of exploring the influence of different radius of neighborhood on the clustering effect, a comparative experiment was conducted in this study using the radius of neighborhood (Eps) of 0.01, 0.02, and 0.025. The clustering effect of different Eps is shown in Figure 17. When Eps is set to 0.01, the pig’s body trunk is divided, but the head and tail are categorized as background. Also, the outline edge of the pig body is blurred, and the point cloud part of the pig is missing. Setting Eps to 0.02 bring about a complete separation of the pig, with a clear and smooth outline. When the Eps is set to 0.025, parts of the pig outline become visible, but the pig cannot be separated from the background. Therefore, the threshold value for Eps was set to 0.02 as the Eps of DBSCAN point cloud density clustering.

Figure 17.

Comparison of DBSCAN Point cloud segmentation with different Eps value: (a) Original image; (b) segmentation effect with an Eps of 0.01; (c) segmentation effect with an Eps of 0.02; (d) segmentation effect with an Eps of 0.025.

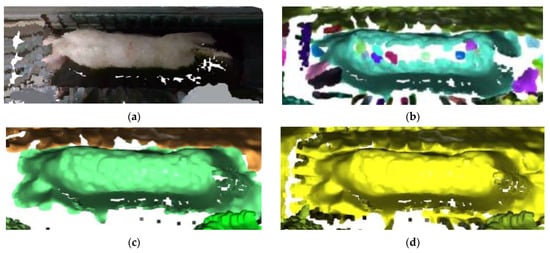

This research selected the region growing segmentation for point cloud as a reference for comparing the effects of various cloud segmentation methods. The region growing segmentation method is recognized for its flexibility, preservation of similarity, extensibility, computational efficiency, and interpretability, making it one of the most favored methods in point cloud segmentation [37]. The algorithm selects the seed point as the starting point and expands the seed region by adding neighboring points that meet specific conditions [38]. The curvature threshold (ct) is an important parameter influencing the region growing segmentation algorithm. Figure 18 illustrates the segmentation outcomes under different curvature thresholds. When ct = 0.01, a substantial portion of the pig’s back point cloud is missing, and some is erroneously classified as outliers. With ct = 0.02, the number of clusters in the point cloud data decreases, which brings about substantial filling of the pig’s back point cloud. Moreover, the segmentation correctly identifies and categorizes pig back point clouds as the primary class, but the issue of pig point cloud adhesion to the background remains severe. Finally, when ct = 0.05, the pig point cloud blends with the background, which means a failure. In comparison to the region growing segmentation algorithm, the DBSCAN-based segmentation model demonstrates greater robustness in handling parameters and noise. DBSCAN, as a clustering algorithm, offers advantages in point cloud segmentation tasks, especially in settings where there is no need to predefine the number of clusters. It exhibits robustness against noise and outliers while being capable of handling clusters with irregular shapes.

Figure 18.

Comparison of segmentation effect of region growing segmentation for point cloud: (a) Original image; (b) segmentation effect with a ct of 0.01; (c) segmentation effect with a ct of 0.02; (d) segmentation effect with a ct of 0.05.

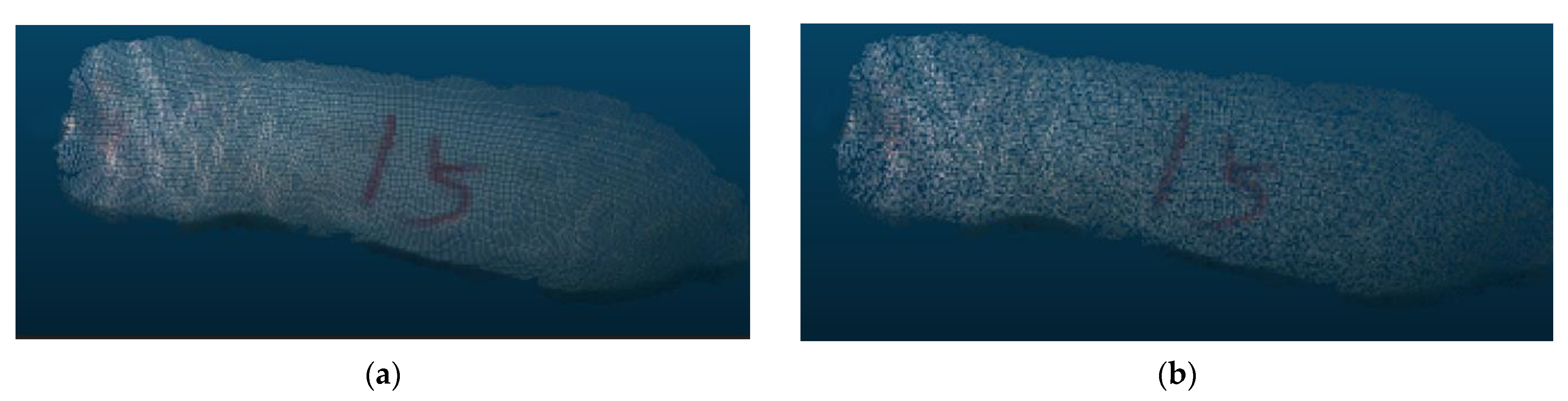

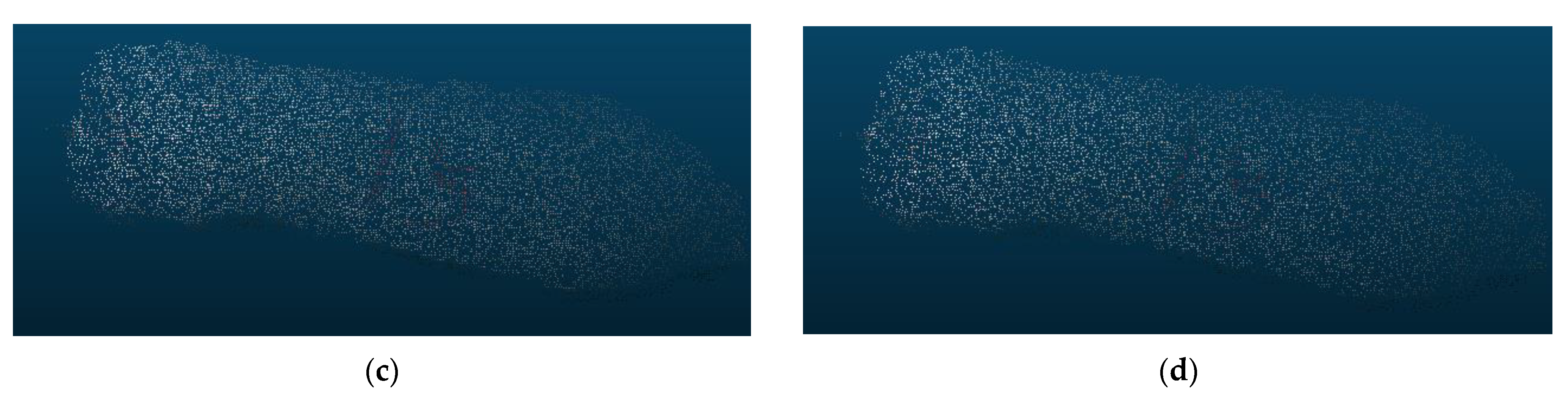

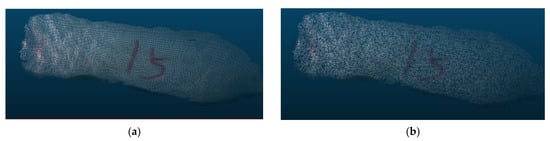

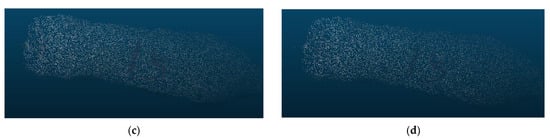

3.3. Analysis of Down-Sampling Results

Selecting an appropriate down-sampling voxel parameter is critical to improving the efficiency of body dimension feature extraction. Our experiments have shown that as the parameter value for the voxel’s regular cube increases, the number of points retained after down-sampling decreases. This parameter value determines whether the down-sampled point cloud remains stable and representative. Figure 19 illustrates the down-sampling effects of different parameter values. When the voxel parameter is set to 0.005, the down-sampling rate is 85%. It ensures both the preservation of point cloud features and a significant reduction in point cloud segmentation computational load. More importantly, the computational efficiency gets enhanced.

Figure 19.

Down-sampling results of point clouds with varying voxel parameters. (a) Voxel parameter of 0.05; (b) voxel parameter of 0.06; (c) voxel parameter of 0.07; (d) voxel parameter of 0.08.

3.4. Extraction Results of Point Cloud on the Back of Pig

In this research, the eigenvalues of envelope volume, envelope area, projection area, shoulder width, belly width, and hip width of the pig’s back were obtained. The partial extraction results of the pig’s back features are shown in Table 1. A Pearson coefficient test was employed to assess the back feature correlation of pigs. The correlation coefficients between different feature parameters are shown in Table 2. Among them, the correlation coefficients between shoulder width and envelope area and projection area are 0.753 and 0.759, respectively. This indicates that there is a substantial correlation between the characteristics.

Table 1.

Part of the pig characteristic parameter.

Table 2.

Pearson correlation coefficient between different parameters.

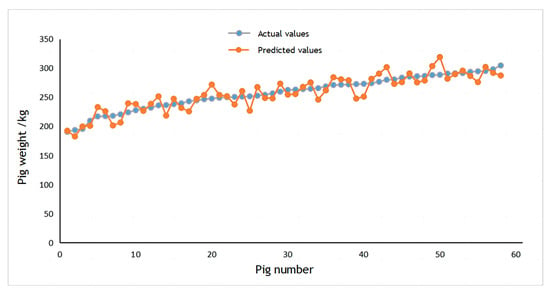

3.5. Validation of Weight Estimation Model

To validate the accuracy of the pig weight estimation model, a comparison was delivered between the actual and estimated values of pig weights. Part of the results for the test dataset are presented in Table 3. It is evident from the table that the relative estimation error for the majority of pigs falls within 6%, which meets the production demands. Nevertheless, due to the relatively smooth surface of the weighing scale at the bottom of the data collection system, certain pigs may lean forward or backward, causing some features to be obscured or distorted. This can hinder the model’s ability to accurately extract the correct feature information, which would make it difficult to achieve precise weight estimation. It would be helpful to integrate a posture detection algorithm within the model for enhancing weight estimation accuracy.

Table 3.

Part of the results for the test dataset.

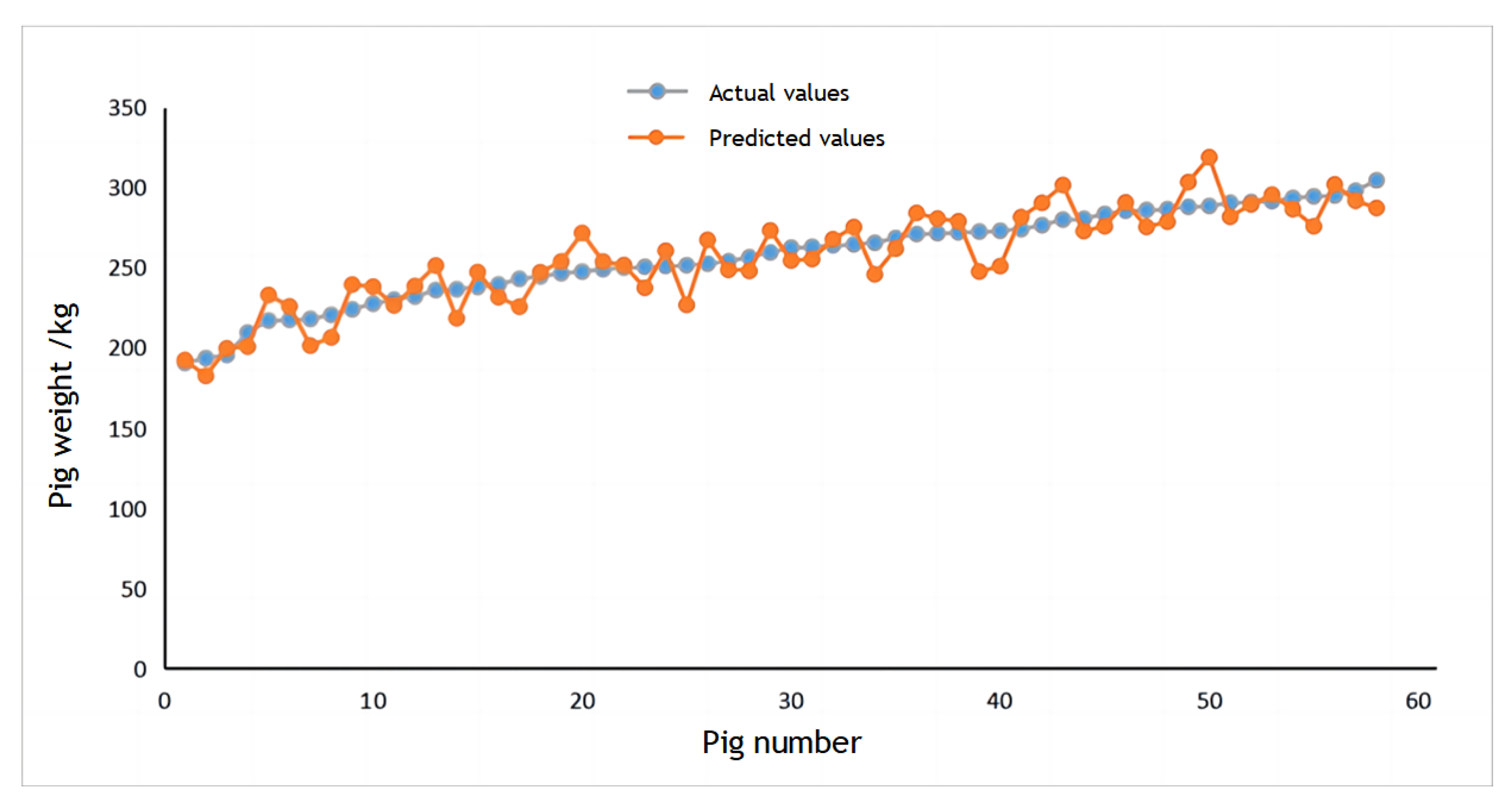

3.6. Comparison of Different Weight Estimation Methods

The dataset consisted of 140 pigs used as training samples for the CNN, with the remaining 58 pigs designated as test samples. The scatter plot between the true and estimated weight of pigs is shown in Figure 20. It demonstrates that the CNN-based pig weight estimation model exhibits a higher degree of accuracy in estimating pig weights.

Figure 20.

Scatter chart for weight estimation.

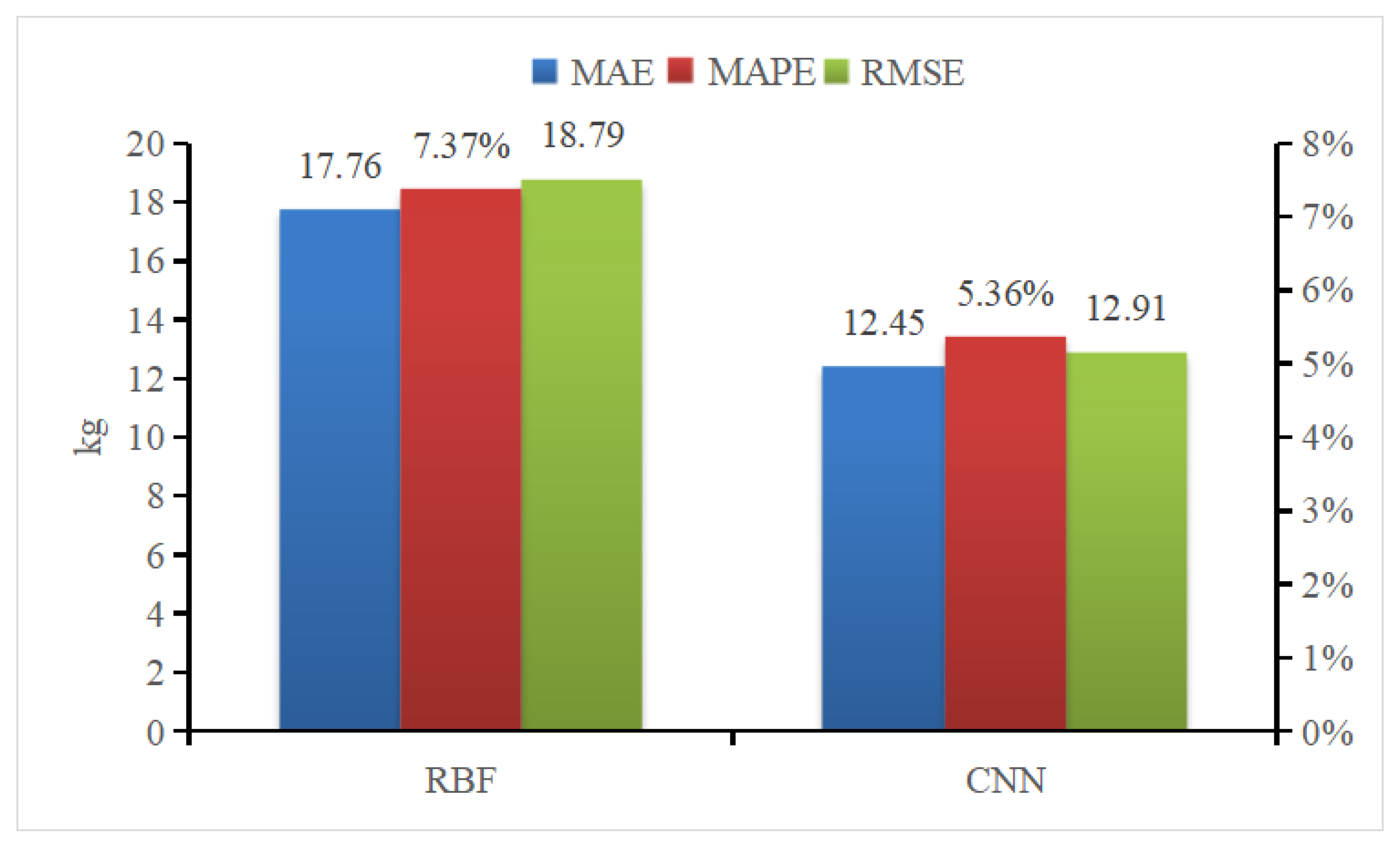

Radial Basis Function (RBF) Neural Networks was selected to compare the efficacy of different weight estimation model. RBF is a one-way propagation neural network with three or more layers that provides the most accurate approximation of nonlinear functions and the greatest global performance [39]. Figure 21 presents the results of the two weight estimation models using mean absolute error (MAE), mean absolute percent error (MAPE), and root mean square error (RMSE) as evaluation parameters. This comparison illustrates that all CNN parameters outperform those of RBF neural networks.

Figure 21.

Comparison of pig weight estimation and evaluation indicators.

4. Discussion

4.1. Comparison with Previous Research

To evaluate the effectiveness and practicality of the algorithm proposed in this research, a comparative analysis is conducted in comparison to previous research. In reference [14], a 2D camera is employed to capture dorsal images of pigs, followed by the extraction of the dorsal image area of pigs. The correlation between dorsal area and weight is investigated, leading to the development of a model for estimating pig weight. In contrast, our proposed approach, building upon the extraction of dorsal projection area, extensively extracts various dorsal body dimension features, including envelope volume, envelope area, projection area, shoulder width, belly width, and hip width. All of these contribute to a more precise regression of pig weight. Leveraging a 3D-based weight estimation system allows for accurate capture of intricate details from various angles, effectively addressing distortions arising from changes in perspective and scale. This system provides enhanced accuracy and reliability in weight estimation. In the realm of 3D image-based weight estimation methodology, reference [20] employs four Kinect cameras to capture point cloud data of pigs, subsequently employing mesh reconstruction and deep neural network (DNN) to construct a weight estimation model. However, the utilization of multiple cameras, while comprehensive in capturing morphological features, presents challenges including high costs, intricate data fusion and processing, spatial constraints, and layout limitations, rendering it unsuitable for deployment in large-scale pig farms.

4.2. Deficiencies and Improvements

The postures of the pigs were found to influence the reliability and accuracy of back feature extraction. The change in pig postures impact the model’s feature extraction, resulting in deviations between the actual feature parameters and the extracted feature parameters, which will then affect the efficacy of the pig weight estimation model. In future research, we will consider the feature differences under various postures and construct different models to estimate the body weight of pigs in different postures.

Furthermore, due to uncontrolled movements of pigs, the acquired point cloud data are susceptible to noise, non-uniform or under-sampling, voids, and omissions, all of which can affect the effectiveness of body dimension parameter extraction. In future work, we plan to integrate multiple consecutive frames of point cloud data to densify and fill in the sparse and missing portions of the pig’s point cloud. To facilitate the practical implementation of non-contact weight estimation for pigs based on 3D imagery, further research will broaden the scope of target subjects and enhance the weight estimation model’s generalization capabilities. Drawing upon the output of pig weight estimation, we intend to assess the growth status of the pigs and offer tailored feeding recommendations. A weight management system was constructed in this research, as illustrated in Figure 22. The weight estimation model can be deployed on application terminals to provide information on pig weight gain status, weight-based feeding recommendations, and alert notifications.

Figure 22.

The weight management system.

5. Conclusions

This study introduces a 3D point cloud-based method for estimating pig weight, so as to address the challenges related pig weight monitoring and their susceptibility to stress. In this method, we separated the pig point cloud from the background using statistical filtering and DBSCAN, thereby resolving the problem of pig and background adhesion. Using convex hull detection, the point cloud data of the pig’s head and tail was removed in order to obtain complete point cloud data of their backs. Voxel down-sampling was used to reduce the number of point cloud data on the backs of pigs and enhance the weight estimation model’s efficiency and real-time performance. The weight estimation model based on back parameter characteristics and CNN was constructed. Also, the MAPE of the weight estimation model is only 5.357%, which could meet the demand of automatic weight monitoring in actual production.

It is worth mentioning that all the data used for training and validation in this method were collected from a real production environment, and only a depth camera above the drive channel was needed to achieve this method. Therefore, the method is easy to popularize and apply. What shall be noticed is that it can provide technical support for pig weight monitoring within both breeding and slaughterhouse settings.

Author Contributions

Z.L.: writing—original draft, review and editing, conceptualization, methodology, equipment adjustment, data curation, software. J.H.: writing—original draft, data curation, software, formal analysis, investigation, conceptualization. H.X.: review and editing, conceptualization, methodology, formal analysis, funding acquisition. H.T.: writing—review and editing, conceptualization. Y.C.: review and editing, conceptualization, formal analysis. H.L.: review and editing, conceptualization, formal analysis. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key Projects of Intergovernmental Cooperation in International Scientific and Technological Innovation (Project No. 2017YFE0114400), China.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to acknowledge the support from the Wens Foodstuffs Group Co., Ltd. (Guangzhou, China) for the use of their animals and facilities.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Noya, I.; Aldea, X.; González-García, S.; Gasol, C.M.; Moreira, M.T.; Amores, M.J.; Marin, D.; Boschmonart-Rives, J. Environmental assessment of the entire pork value chain in catalonia—A strategy to work towards circular economy. Sci. Total Environ. 2017, 589, 122–129. [Google Scholar] [CrossRef]

- Secco, C.; da Luz, L.M.; Pinheiro, E.; Puglieri, F.N.; Freire, F.M.C.S. Circular economy in the pig farming chain: Proposing a model for measurement. J. Clean. Prod. 2020, 260, 121003. [Google Scholar] [CrossRef]

- He, W.; Mi, Y.; Ding, X.; Liu, G.; Li, T. Two-stream cross-attention vision Transformer based on RGB-D images for pig weight estimation. Comput. Electron. Agric. 2023, 212, 107986. [Google Scholar] [CrossRef]

- Bonneau, M.; Lebret, B. Production systems and influence on eating quality of pork. Meat Sci. 2010, 84, 293–300. [Google Scholar] [CrossRef]

- Lebret, B.; Čandek-Potokar, M. Pork quality attributes from farm to fork. Part I. Carcass and fresh meat. Animal 2022, 16, 100402. [Google Scholar] [CrossRef]

- Li, J.; Yang, Y.; Zhan, T.; Zhao, Q.; Zhang, J.; Ao, X.; He, J.; Zhou, J.; Tang, C. Effect of slaughter weight on carcass characteristics, meat quality, and lipidomics profiling in longissimus thoracis of finishing pigs. LWT 2021, 140, 110705. [Google Scholar] [CrossRef]

- Jensen, D.B.; Toft, N.; Cornou, C. The effect of wind shielding and pen position on the average daily weight gain and feed conversion rate of grower/finisher pigs. Livest. Sci. 2014, 167, 353–361. [Google Scholar] [CrossRef]

- Valros, A.; Sali, V.; Hälli, O.; Saari, S.; Heinonen, M. Does weight matter? Exploring links between birth weight, growth and pig-directed manipulative behaviour in growing-finishing pigs. Appl. Anim. Behav. Sci. 2021, 245, 105506. [Google Scholar] [CrossRef]

- Kashiha, M.; Bahr, C.; Ott, S.; Moons, C.P.; Niewold, T.A.; Ödberg, F.O.; Berckmans, D. Automatic weight estimation of individual pigs using image analysis. Comput. Electron. Agric. 2014, 107, 38–44. [Google Scholar] [CrossRef]

- Apichottanakul, A.; Pathumnakul, S.; Piewthongngam, K. The role of pig size prediction in supply chain planning. Biosyst. Eng. 2012, 113, 298–307. [Google Scholar] [CrossRef]

- Dokmanović, M.; Velarde, A.; Tomović, V.; Glamočlija, N.; Marković, R.; Janjić, J.; Baltić, M.Ž. The effects of lairage time and handling procedure prior to slaughter on stress and meat quality parameters in pigs. Meat Sci. 2014, 98, 220–226. [Google Scholar] [CrossRef] [PubMed]

- Bhoj, S.; Tarafdar, A.; Chauhan, A.; Singh, M.; Gaur, G.K. Image processing strategies for pig liveweight measurement: Updates and challenges. Comput. Electron. Agric. 2022, 193, 106693. [Google Scholar] [CrossRef]

- Tu, G.J.; Jørgensen, E. Vision analysis and prediction for estimation of pig weight in slaughter pens. Expert Syst. Appl. 2023, 220, 119684. [Google Scholar] [CrossRef]

- Minagawa, H.; Hosono, D. A light projection method to estimate pig height. In Swine Housing, Proceedings of the First International Conference, Des Moines, IA, USA, 9–11 October 2000; ASABE: St. Joseph, MI, USA, 2000; pp. 120–125. [Google Scholar]

- Li, G.; Liu, X.; Ma, Y.; Wang, B.; Zheng, L.; Wang, M. Body size measurement and live body weight estimation for pigs based on back surface point clouds. Biosyst. Eng. 2022, 218, 10–22. [Google Scholar] [CrossRef]

- Sean, O.C. Research and Implementation of a Pig Weight Estimation System Based on Fuzzy Neural Network. Master’s Thesis, Nanjing Agricultural University, Nanjing, China, 2017. [Google Scholar]

- Suwannakhun, S.; Daungmala, P. Estimating pig weight with digital image processing using deep learning. In Proceedings of the 2018 14th International Conference on Signal-Image Technology & Internet-Based Systems (SITIS), Las Palmas de Gran Canaria, Spain, 26–29 November 2018; pp. 320–326. [Google Scholar]

- Liu, T.H. Optimization and 3D Reconstruction of Pig Body Size Parameter Extraction Algorithm Based on Binocular Vision. Ph.D. Thesis, China Agricultural University, Beijing, China, 2014. [Google Scholar]

- He, H.; Qiao, Y.; Li, X.; Chen, C.; Zhang, X. Automatic weight measurement of pigs based on 3D images and regression network. Comput. Electron. Agric. 2021, 187, 106299. [Google Scholar] [CrossRef]

- Kwon, K.; Park, A.; Lee, H.; Mun, D. Deep learning-based weight estimation using a fast-reconstructed mesh model from the point cloud of a pig. Comput. Electron. Agric. 2023, 210, 107903. [Google Scholar] [CrossRef]

- Guo, R.; Xie, J.; Zhu, J.; Cheng, R.; Zhang, Y.; Zhang, X.; Gong, X.; Zhang, R.; Wang, H.; Meng, F. Improved 3D point cloud segmentation for accurate phenotypic analysis of cabbage plants using deep learning and clustering algorithms. Comput. Electron. Agric. 2023, 211, 108014. [Google Scholar] [CrossRef]

- Ali, Z.A.; Zhangang, H. Multi-unmanned aerial vehicle swarm formation control using hybrid strategy. Trans. Inst. Meas. Control 2021, 43, 2689–2701. [Google Scholar] [CrossRef]

- Li, J.; Ma, W.; Li, Q.; Zhao, C.; Tulpan, D.; Yang, S.; Ding, L.; Gao, R.; Yu, L.; Wang, Z. Multi-view real-time acquisition and 3D reconstruction of point clouds for beef cattle. Comput. Electron. Agric. 2022, 197, 106987. [Google Scholar] [CrossRef]

- Zhao, Q.; Gao, X.; Li, J.; Luo, L. Optimization algorithm for point cloud quality enhancement based on statistical filtering. J. Sens. 2021, 2021, 7325600. [Google Scholar] [CrossRef]

- Yang, Q.F.; Gao, W.Y.; Han, G.; Li, Z.Y.; Tian, M.; Zhu, S.H.; Deng, Y.H. HCDC: A novel hierarchical clustering algorithm based on density-distance cores for data sets with varying density. Inf. Syst. 2023, 114, 102159. [Google Scholar] [CrossRef]

- Ester, M.; Kriegel, H.P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the KDD, München, Germany, 2 August 1996; pp. 226–231. [Google Scholar]

- Chen, H.; Liang, M.; Liu, W.; Wang, W.; Liu, P.X. An approach to boundary detection for 3D point clouds based on DBSCAN clustering. Pattern Recognit. 2022, 124, 108431. [Google Scholar] [CrossRef]

- Yu, T.; Hu, C.; Xie, Y.; Liu, J.; Li, P. Mature pomegranate fruit detection and location combining improved F-PointNet with 3D point cloud clustering in orchard. Comput. Electron. Agric. 2022, 200, 107233. [Google Scholar] [CrossRef]

- Ma, B.; Du, J.; Wang, L.; Jiang, H.; Zhou, M. Automatic branch detection of jujube trees based on 3D reconstruction for dormant pruning using the deep learning-based method. Comput. Electron. Agric. 2021, 190, 106484. [Google Scholar] [CrossRef]

- Lu, M.; Xiong, Y.; Li, K.; Liu, L.; Yan, L.; Ding, Y.; Lin, X.; Yang, X.; Shen, M. An automatic splitting method for the adhesive piglets’ gray scale image based on the ellipse shape feature. Comput. Electron. Agric. 2016, 120, 53–62. [Google Scholar] [CrossRef]

- Fernandes, A.F.; Dórea, J.R.; Fitzgerald, R.; Herring, W.; Rosa, G.J. A novel automated system to acquire biometric and morphological measurements and predict body weight of pigs via 3D computer vision. J. Anim. Sci. 2019, 97, 496–508. [Google Scholar] [CrossRef]

- Panda, S.; Gaur, G.K.; Chauhan, A.; Kar, J.; Mehrotra, A. Accurate assessment of body weights using morphometric measurements in Landlly pigs. Trop. Anim. Health Prod. 2021, 53, 362. [Google Scholar] [CrossRef]

- Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A survey of convolutional neural networks: Analysis, applications, and prospects. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 6999–7019. [Google Scholar] [CrossRef]

- Jiang, Q.; Jia, M.; Bi, L.; Zhuang, Z.; Gao, K. Development of a core feature identification application based on the Faster R-CNN algorithm. Eng. Appl. Artif. Intell. 2022, 115, 105200. [Google Scholar] [CrossRef]

- Diao, Z.; Yan, J.; He, Z.; Zhao, S.; Guo, P. Corn seedling recognition algorithm based on hyperspectral image and lightweight-3D-CNN. Comput. Electron. Agric. 2022, 201, 107343. [Google Scholar] [CrossRef]

- Kong, W.; Chen, Y.; Lei, Y. Medical image fusion using guided filter random walks and spatial frequency in framelet domain. Signal Process. 2021, 181, 107921. [Google Scholar] [CrossRef]

- Ma, X.; Luo, W.; Chen, M.; Li, J.; Yan, X.; Zhang, X.; Wei, W. A fast point cloud segmentation algorithm based on region growth. In Proceedings of the 2019 18th International Conference on Optical Communications and Networks (ICOCN), Huangshan, China, 5–8 August 2019; pp. 1–2. [Google Scholar]

- Han, X.; Wang, X.; Leng, Y.; Zhou, W. A plane extraction approach in inverse depth images based on region-growing. Sensors 2021, 21, 1141. [Google Scholar] [CrossRef] [PubMed]

- Xu, Y.; Jung, C.; Chang, Y. Head pose estimation using deep neural networks and 3D point clouds. Pattern Recognit. 2022, 121, 108210. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).