2.1. Modeling the Process of Monitoring and Assessing Musculoskeletal Rehabilitation Exercises

The process of monitoring and evaluating exercises during musculoskeletal rehabilitation can be formalized independently of the movement tracking system used. This allows the model to serve in other studies as a basis for building exercise monitoring and evaluation systems. To model this process, we use set theory. When the model is later implemented in software, this formulation allows it to be used without additional transformations, because sets and operations map directly onto classes and methods in the chosen programming language.

The process is based on forming the set of target points needed to assess the quality of the exercises performed. In the first phase, the main components (characteristics) of the exercises were analyzed. Let be the set of exercises, and let be an exercise from this set. Let us denote the trajectory of the target point movement that determines the characteristics of the exercise as . This trajectory has its own unique parameters, including the initial and final positions and the movement speed.

The target point is defined as the set of coordinate values on three axes:

where , , and are the coordinates of the target point on the X, Y, and Z axes, respectively. Then, the set reflects how the target point coordinates change during the exercise . The sequence of points in the set is ordered as the points are received from the tracking system, from the first measurement to the last, which completes the exercise.

Each exercise can be matched by a tuple of its parameters:

where is the point of the human body on three axes, calculated using polynomials or splines, linear regression algorithms, or other approaches that provide minimal deviation from the initial target points;

: the time interval of the exercise, in seconds;

: the number of measurements of the target point;

: the interval between measurements, in seconds;

: the boundary values of the spatial and space–time characteristics of the target point movement during the exercise:

where is the minimum value of the target point position on the X axis, similar to and for the Y and Z axes, respectively;

is the maximum value of the target point position on the X axis, similar to and for the Y and Z axes, respectively;

are the average values of the target point speed along the X, Y, and Z axes.
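As an illustration, the parameters listed above can be derived directly from a recorded trajectory. The sketch below is hypothetical: the names `ExerciseParams` and `summarize` are not part of the original model, a uniform sampling interval `dt` is assumed, the duration is taken as `(n - 1) * dt`, and the average speed per axis is taken as the mean absolute finite difference, which is only one possible reading of the definition.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) of one target point sample


@dataclass
class ExerciseParams:
    duration: float     # time interval of the exercise, in seconds
    n: int              # number of measurements of the target point
    dt: float           # interval between measurements, in seconds
    mins: Point         # minimum position on the X, Y, and Z axes
    maxs: Point         # maximum position on the X, Y, and Z axes
    mean_speed: Point   # average speed along the X, Y, and Z axes


def summarize(trajectory: List[Point], dt: float) -> ExerciseParams:
    """Compute boundary values and mean per-axis speed of a trajectory."""
    if len(trajectory) < 2:
        raise ValueError("need at least two measurements")
    axes = list(zip(*trajectory))  # three sequences: xs, ys, zs
    mins = tuple(min(a) for a in axes)
    maxs = tuple(max(a) for a in axes)
    # Assumption: speed is the mean absolute finite difference per axis.
    mean_speed = tuple(
        sum(abs(b - a) for a, b in zip(ax, ax[1:])) / (dt * (len(ax) - 1))
        for ax in axes
    )
    n = len(trajectory)
    return ExerciseParams((n - 1) * dt, n, dt, mins, maxs, mean_speed)
```

For example, `summarize([(0, 0, 0), (1, 0, 0), (2, 1, 0)], dt=0.5)` describes a one-second recording of three samples.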

Each exercise can be matched by its category , reflecting the specific actions and movements necessary for the correct performance of this exercise:

After determining the main objects of the subject area and their properties, the task of assessing the exercise quality is considered.

Let a subset of exercises be given with reference trajectories and movement characteristics (recorded, for example, under the supervision of a doctor). If a new exercise of category has entered the database, it is compared with the reference exercise of the same category using the following formulas:

where is the average standard deviation between the reference trajectory and the evaluated one;

: the arithmetic mean of the time intervals between data measurements, in seconds;

: an estimate of the exercise performance time relative to the reference;

: the correction coefficient of the exercise performance time, which allows an excess over the reference time to be treated as a satisfactory result; it is selected experimentally depending on the exercise ();

: an estimate of the Euclidean distance between the minimum and maximum values of the target point in the current and reference exercises;

: an assessment of the difference between the space–time characteristics (mean speed of movement) of the evaluated exercise and the reference exercise [43].

The maximum quality of the evaluated exercise is achieved when the following conditions are met:

Thus, the evaluation of the exercise is calculated on the basis of the deviation from the values of the recorded reference exercise, or, in its absence, the threshold values are used for all metrics: the ideal trajectory for which the maximum and minimum positions of the target point are set, the recommended average speed, and the time of execution.
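Two of the comparison metrics described above can be illustrated with a short sketch. This is not the authors' formulation: the function names are hypothetical, the trajectories are assumed to be already resampled to the same number of points, the trajectory deviation is computed as a root-mean-square Euclidean distance, and the boundary comparison is taken as the sum of the distances between the minimum corners and between the maximum corners.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def rms_deviation(reference: Sequence[Point], evaluated: Sequence[Point]) -> float:
    """Root-mean-square Euclidean distance between paired trajectory points."""
    if len(reference) != len(evaluated) or not reference:
        raise ValueError("trajectories must be non-empty and equally long")
    total = sum(math.dist(r, p) ** 2 for r, p in zip(reference, evaluated))
    return math.sqrt(total / len(reference))


def bounds(trajectory: Sequence[Point]) -> Tuple[Point, Point]:
    """Per-axis minimum and maximum positions of a trajectory."""
    axes = list(zip(*trajectory))
    return tuple(min(a) for a in axes), tuple(max(a) for a in axes)


def range_distance(reference: Sequence[Point], evaluated: Sequence[Point]) -> float:
    """Assumed form: distance between min corners plus distance between max corners."""
    (rmin, rmax), (pmin, pmax) = bounds(reference), bounds(evaluated)
    return math.dist(rmin, pmin) + math.dist(rmax, pmax)
```

Under these assumptions, both values are zero when the evaluated exercise reproduces the reference exactly and grow as the movement departs from it.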

An important component of the process of monitoring and evaluating the exercises performed is the automatic determination of the exercise type (category). Two options are possible in the practical implementation of such systems: manual selection of exercises or automatic recognition. The approach described below can be used to automatically determine the category of exercise.

It is necessary to approximate the relationship between the trajectories of the target point and the exercise category using a machine learning method:

Thus, maps the set of target point trajectories to the set of exercise categories. In addition to neural networks, other methods discussed in Section 1.2 may be used. Their effectiveness is evaluated further below.

The presented model can be modified for the case in which several target points, rather than one, are tracked during the exercise. The set of exercises in the tracking of target points takes the following form:

That is, subsets are formed that store the trajectory of each point. In Formula (6) and in the formulas below, the calculations must be made separately for each target point, and the results can then be averaged. Likewise, in Formula (16), a set of trajectories from all target points is analyzed rather than a single trajectory.

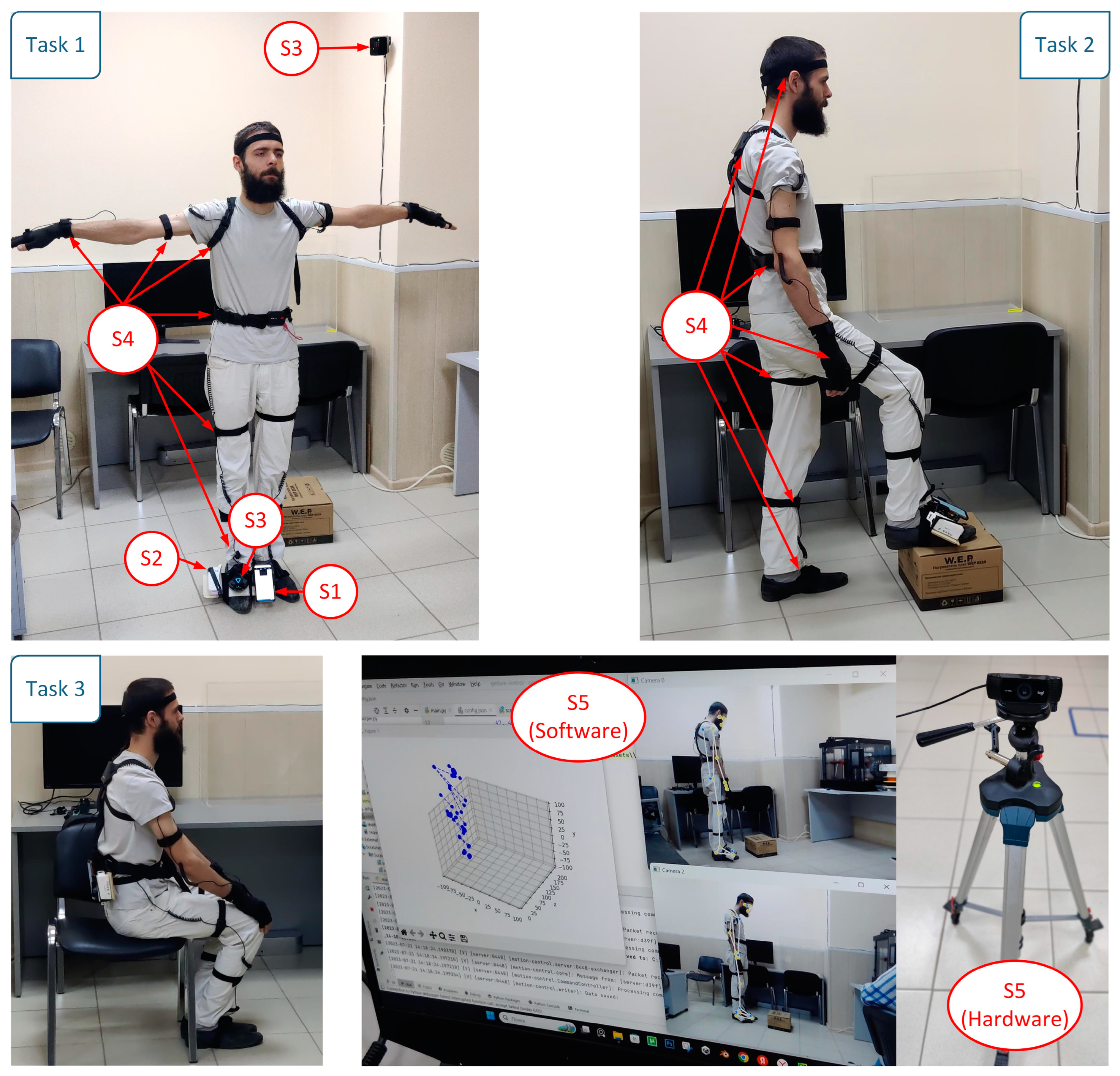

2.2. Data Processing Algorithms from Various Movement Tracking Systems for Exercise Monitoring

The model presented above in a generalized form formalizes the processes of monitoring and evaluating musculoskeletal rehabilitation exercises. For the successful application of this model, it is necessary to prepare the source data obtained from the movement tracking system and make them uniform so that the model can process them. We consider the appropriate algorithms for each tracking system.

2.2.1. Processing Data from Inertial Navigation Systems

A distinctive feature of inertial navigation systems (INS), which rely on accelerometer and gyroscope readings, is the need to integrate accelerations along three axes while accounting for rotation angles and the influence of the geomagnetic field. This leads to a large error in determining the speed and trajectory of the target point. The necessary data conversions are described below.

An INS outputs the acceleration tuple of the target point on three axes:

where , , and are the accelerations along the X, Y, and Z axes, respectively, and , , and are the sets of acceleration values along the corresponding axes. Note that when forming the tuple , data from the device’s gyroscope must also be recorded to account for the inclination of the device in space.

Each -th data measurement is carried out after a period of time . In the next step, the speed of movement of the device is determined:

where , , and are the velocities along the X, Y, and Z axes, respectively, and , , and are the sets of velocity values along the corresponding axes.

The next step is to obtain the increments of the target point trajectory along the three axes. Initially, the variables , , and have zero values (when ). , , and are the sets of values of the points of the target point trajectory along the corresponding axes. At each step with a time interval , the obtained metrics are

Thus, the tuple uniquely determines the position of the target point at time from the start of the recording. The set of target points can then be used in the calculations of the model in Section 2.1, since its form and content correspond to the format given in (6). Therefore, all calculation formulas in Section 2.1 are applicable.
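The conversion from accelerations to a trajectory described above can be sketched as a double numerical integration. The code below is a minimal illustration, not the authors' implementation: the function name is hypothetical, a simple rectangle rule with a constant interval `dt` is assumed, and the accelerations are assumed to be already rotated into a fixed frame and compensated for gravity using the gyroscope data.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def integrate_accelerations(acc: List[Vec3], dt: float) -> List[Vec3]:
    """Integrate accelerations twice into target point positions.

    Assumes zero initial velocity and position and a constant
    sampling interval dt (in seconds).
    """
    vx = vy = vz = 0.0  # velocity starts at zero
    x = y = z = 0.0     # position starts at zero
    positions = []
    for ax, ay, az in acc:
        # First integration: acceleration to velocity.
        vx += ax * dt
        vy += ay * dt
        vz += az * dt
        # Second integration: velocity to position increment.
        x += vx * dt
        y += vy * dt
        z += vz * dt
        positions.append((x, y, z))
    return positions
```

Because every sample's error is carried into all later velocities and positions, even a small constant bias in the acceleration grows quadratically in the position, which is why filtering is needed afterwards.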

Because the measurement error is high and accumulates when the initial data are integrated, filtering or data processing algorithms may be needed. That is, a certain set of transformations of the of the initial data of the inertial navigation system must be carried out so that the average Euclidean distance of the deviation of the processed trajectory of the target point from the real trajectory is minimal:

where , , and are the positions of the point along the X, Y, and Z axes, respectively, calculated from the inertial navigation system data using filtering and signal processing algorithms; , , , and are the values of the corresponding real coordinates of the target point.

The linear Kalman filter [44] implemented in the FilterPy library [45] is used as the main filter in this study. This filter makes it possible to calculate the speed and trajectory relatively accurately [46,47] in accordance with Formulas (19) and (20), as well as to remove noise.
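To show the principle of the filter, the sketch below implements a scalar linear Kalman filter in plain Python. It is deliberately not the FilterPy API and not the configuration used in the study: the function name, the random-walk state model, and the noise parameters `q` (process noise) and `r` (measurement noise) are assumptions chosen for illustration, and each axis would be filtered separately.

```python
from typing import Iterable, List


def kalman_1d(measurements: Iterable[float], q: float = 1e-3, r: float = 0.1) -> List[float]:
    """Scalar linear Kalman filter with a random-walk state model.

    q is the assumed process noise variance, r the assumed
    measurement noise variance.
    """
    estimate, variance = 0.0, 1.0  # initial state and its uncertainty
    smoothed = []
    for z in measurements:
        variance += q                      # predict: uncertainty grows
        gain = variance / (variance + r)   # Kalman gain
        estimate += gain * (z - estimate)  # update with the residual
        variance *= 1.0 - gain             # posterior uncertainty
        smoothed.append(estimate)
    return smoothed
```

A larger `r` relative to `q` makes the filter trust the measurements less and smooth more strongly, at the cost of a slower response to real changes in the signal.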

2.2.2. Processing Data from Virtual Reality Systems

Virtual reality trackers and controllers based on SteamVR Lighthouse technology provide the coordinates of all sensors and their inclination angles with high frequency and precision. Thus, no data processing algorithm is required for this class of systems, because the target point is already obtained in the form of Formula (6). Velocity and acceleration can be obtained by differentiation.

When working with trackers or sensors of virtual reality systems, it is necessary to carry out initial calibration to obtain coordinates normalized relative to the initial position. This process does not cause difficulties, since it consists of saving the coordinate values of target points obtained during calibration and subtracting these values from the current ones, which can be integrated into the data acquisition system.
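The calibration step and the differentiation mentioned above can be sketched as follows. The function names are hypothetical, and the velocity is approximated by forward finite differences with a constant sampling interval `dt`, which is an assumption rather than a detail given in the text.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def calibrate(samples: List[Vec3], origin: Vec3) -> List[Vec3]:
    """Subtract the position saved during calibration from every sample."""
    ox, oy, oz = origin
    return [(x - ox, y - oy, z - oz) for x, y, z in samples]


def velocities(positions: List[Vec3], dt: float) -> List[Vec3]:
    """Approximate velocity by forward differences between samples."""
    return [
        ((x1 - x0) / dt, (y1 - y0) / dt, (z1 - z0) / dt)
        for (x0, y0, z0), (x1, y1, z1) in zip(positions, positions[1:])
    ]
```

Applying `velocities` to its own output gives an acceleration estimate in the same way.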

2.2.3. Processing Data from Motion Capture Suit

Using a motion capture suit produces a set consisting of one base point and a set of segments (bones) positioned relative to it, whose orientation is given by inclination angles on three axes. If necessary, the system can also record, in addition to the change in the sensor angle, the sensor's movement relative to the previous measurement [23].

We denote the base point on the user's back as ; this tuple contains the coordinates on three axes and the rotation angles. At a given point in time , a number of segments (bones) is given, with a total number of . For each segment , three values are given:

where are the rotation characteristics of the -th sensor on three axes, relative to the previous measurement.

A set of segments is specified for each measurement, thus forming a sequence of , containing information about all the movements of the human body model. This sequence is passed to a development environment capable of processing recorded animations from motion capture suits.

The next stage of data processing is the selection of target points on the digital model of the human body [42]. To do this, the dimensions of the human body model (height and limb lengths) are set, after which a number of target points are defined. Each target point is attached to the nearest segment of the digital model (the segment is higher in the skeletal model hierarchy than the target point):

where is the position of the starting point of the segment in the metric coordinates of the virtual scene, whose scale is close to that of the real world; is the distance between the start point of the segment and the selected target point.

Then, when the segment moves, given the fixed distances , the change in the position of yields the current position of the target point. Thus, Formula (24) corresponds in form to (6) and can be used to assess the quality of the exercise.

2.2.4. Processing Data in Computer Vision Systems

The data processing algorithm for tracking the human body by computer vision systems has certain similarities with the algorithm presented in Section 2.2.3 but has the following features:

There is no reference point;

All points of the human body model have their own coordinates;

The coordinates of body model points are given in relative values (from 0 to 1), in accordance with the position on the frame received from the camera.

These features require the following conversions of the source data. The first phase of the algorithm extracts from the frame , obtained at time , a set of points :

where are the coordinates of point in frame along three axes. Most neural network models position points in two coordinates (X and Y) because estimating depth with a single camera is difficult. Some algorithms (for example, MediaPipe) approximate the Z coordinate relative to some reference point, but this value is inaccurate.

Therefore, to accurately determine the position of a person in space along all three axes using several (at least two) cameras, the following is calculated:

where and are the positions of point on the first and second cameras, respectively, along the X axis;

and are the position of point on the first and second cameras, respectively, along the Y axis;

and are the maximum pixel values along the X and Y axes, respectively;

and are coefficients for converting pixels into meters, determined by taking into account the length of the limbs and correlating them with the length of the corresponding recognized segment on the frame.

As a result of the transformation, the target point is formed as follows (27):

The resulting target point format corresponds to Formula (6).

Another approach is triangulation, the process of determining a point in three-dimensional space from its projections onto two or more images. To calculate the coordinates of a point in three-dimensional space, the coordinates of its projections on the images and the projection matrices of the cameras must be known [48]. The projection matrix $P$ of a camera can be represented as a combination of the matrices $K$ (containing the internal parameters of the camera) and $R$ (rotation), together with the displacement vector $t$, which describe the change of coordinates from the world coordinate system to the coordinate system of the camera:

$$P = K\,[R \mid t], \qquad K = \begin{pmatrix} f & 0 & c_x \\ 0 & f & c_y \\ 0 & 0 & 1 \end{pmatrix},$$

where $(c_x, c_y)$ are the coordinates of the camera central point, and $f$ is the focal length in pixels.

The three-dimensional reconstruction of object points from the positions of their projections on the images from all cameras is based on epipolar geometry. It provides a condition for searching for pairs of corresponding points on two images: if the point $x$ on the plane of the first image corresponds to the point $x'$ on the plane of the other image, then $x'$ must lie on the corresponding epipolar line. According to this condition, for all corresponding pairs of points $x \leftrightarrow x'$, the following relation holds:

$$x'^{\top} F\, x = 0,$$

where $F$ is the fundamental matrix of size $3 \times 3$ and rank two.

For a point $X$ given in three-dimensional space, the following projection formula is valid, expressed in homogeneous coordinates:

$$w_i\, x_i = P_i X, \tag{31}$$

where $x_i = (u_i, v_i, 1)^{\top}$ are the homogeneous coordinates of the point on the plane of the $i$-th image (obtained from the $i$-th camera during the second stage), including its position on the image $u_i$ (along the X axis) and $v_i$ (along the Y axis); $w_i$ is the scaling factor; and $P_i$ is the projection matrix of the $i$-th camera obtained earlier.

To simplify calculations, the projection matrix of a camera is often represented in the following form:

$$P_i = \begin{pmatrix} p_i^{1\top} \\ p_i^{2\top} \\ p_i^{3\top} \end{pmatrix}, \tag{32}$$

where $p_i^{j\top}$ is the $j$-th row of the matrix $P_i$.

Therefore, Equation (31) can be represented as follows:

$$w_i \begin{pmatrix} u_i \\ v_i \\ 1 \end{pmatrix} = \begin{pmatrix} p_i^{1\top} X \\ p_i^{2\top} X \\ p_i^{3\top} X \end{pmatrix}. \tag{33}$$

The following system of equations is obtained by considering $w_i = p_i^{3\top} X$ as a scaling factor:

$$\begin{cases} \left(u_i\, p_i^{3\top} - p_i^{1\top}\right) X = 0, \\ \left(v_i\, p_i^{3\top} - p_i^{2\top}\right) X = 0. \end{cases} \tag{34}$$

Since $X$ is a homogeneous representation of coordinates in three-dimensional space, calculating them requires $u_i$ and $v_i$ from at least two cameras. Many implemented triangulation algorithms are available for solving the system of Equations (34), for example, L2, direct linear transform, or other approaches implemented in the OpenCV computer vision library [49]. Their result is likewise a vector of three-dimensional coordinates similar to (28).
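The linear triangulation idea can be sketched without external libraries. The code below is an illustrative variant, not the OpenCV routine: it fixes the last homogeneous coordinate of the 3D point to 1, stacks two linear equations per camera, and solves the resulting overdetermined system through the normal equations. All function names and the representation of a projection matrix as nested lists are assumptions.

```python
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]  # 3x4 projection matrix as nested rows


def project(P: Matrix, X: Sequence[float]) -> Tuple[float, float]:
    """Project a 3D point with a 3x4 matrix and return its pixel (u, v)."""
    h = list(X) + [1.0]
    px, py, w = (sum(p * c for p, c in zip(row, h)) for row in P)
    return px / w, py / w


def solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gauss-Jordan elimination with pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][3] / m[i][i] for i in range(3)]


def triangulate(cameras: Sequence[Matrix],
                pixels: Sequence[Tuple[float, float]]) -> List[float]:
    """Least-squares 3D point from pixel observations in two or more cameras."""
    rows, rhs = [], []
    for P, (u, v) in zip(cameras, pixels):
        for coeff, k in ((u, 0), (v, 1)):
            # (coeff * row3 - row_k) . (x, y, z, 1) = 0
            eq = [coeff * P[2][j] - P[k][j] for j in range(4)]
            rows.append(eq[:3])
            rhs.append(-eq[3])
    # Normal equations (M^T M) x = M^T b of the overdetermined system.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(3)]
    return solve3(ata, atb)
```

With two cameras this gives four equations for three unknowns, so noisy detections are averaged in the least-squares sense rather than fitted exactly.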

If, during the exercise, it is necessary to track the movement of the target point only along two coordinates, then it is possible to use Formula (27), without taking into account the second camera and the need to calculate values along the third coordinate axis. An important point of this simplification is that it is necessary to correlate the normalized length of a body segment, obtained from the recognition algorithm (for example, MediaPipe) and expressed in pixels, with the real size of this segment, defined in meters. Using this ratio allows the calculation of all body segments in metric values.
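The pixel-to-metre correlation described above can be sketched for the two-coordinate case. The function names are hypothetical, and the example assumes that the detector returns relative coordinates between 0 and 1 and that the real length of one body segment (here a forearm) has been measured in advance.

```python
import math
from typing import Tuple

Point2D = Tuple[float, float]


def to_pixels(normalized: Point2D, width: int, height: int) -> Point2D:
    """Convert relative frame coordinates (0 to 1) into pixels."""
    return normalized[0] * width, normalized[1] * height


def metres_per_pixel(joint_a: Point2D, joint_b: Point2D, real_length_m: float) -> float:
    """Scale factor from a segment of known real length seen in the frame."""
    pixel_length = math.dist(joint_a, joint_b)
    if pixel_length == 0:
        raise ValueError("segment has zero length in the frame")
    return real_length_m / pixel_length


def to_metres(point: Point2D, origin: Point2D, scale: float) -> Point2D:
    """Position of a point in metres relative to a chosen origin pixel."""
    return (point[0] - origin[0]) * scale, (point[1] - origin[1]) * scale
```

The scale is only valid while the segment stays roughly parallel to the image plane and at a similar distance from the camera, so it may need to be re-estimated during the recording.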

2.3. Application of Machine Learning Algorithms for Analysis and Classification of Musculoskeletal Rehabilitation Exercises

The theoretical studies described in Section 2.1 and Section 2.2, covering the modeling of the monitoring and evaluation of musculoskeletal rehabilitation exercises and the algorithms for processing information from various sources, make it possible to collect, analyze, and evaluate user actions during rehabilitation. However, problem (16) remains open; that is, a machine learning algorithm must be determined that can classify user actions or movements as a particular exercise. This task is important because, without automated determination of the current exercise, the quality of its performance cannot be assessed correctly, since each exercise has its own movement trajectory and spatio-temporal characteristics.

Section 1.2 reviews the main approaches and existing research on applying machine learning to rehabilitation and human movement tracking. Based on the presented model and algorithms, the procedure for applying machine learning to the analysis and classification of musculoskeletal rehabilitation exercises is described below.

For each exercise , a trajectory of the target point movement is specified, consisting of tuples of three-dimensional coordinates of one or more points, the number of which is given by the variable . Thus, the total dimension of the initial data of an exercise has the following form:

where is the number of recorded sets of target points, corresponding to the size of the set , under the assumption that, within , the trajectories of all target points have equal length.

To approximate (16), it is necessary to analyze the dynamics of changes in the position of the target points, and the use of measurement at one point in time does not allow one to determine the exercise since a person can occupy similar positions while performing various exercises. On the other hand, using the entire dataset leads to the problem of a lack of a single dimension for all exercises due to their different durations ().

To solve this problem, the classical approach is to choose the size of a window , which selects a fixed-length fragment from the input data sequence. Fragments of the same size are processed by machine learning algorithms with some shift (step) until the window extracts the last fragment. This makes it possible to process time sequences of any length and to produce a prediction for each fragment (in this study, an exercise category). For the original time sequence, the following output is obtained:

where is the number of fragments determined on the basis of the following calculation; is the number of elements that are used to form complete fragments. Division by shows how many such complete fragments can exist. The unit in expression (36) accounts for the last incomplete fragment, which can be shorter than if is not exactly divisible by (in this case, the last fragment consists of the last values).
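The windowing procedure can be sketched as follows. This is one reading of the description above rather than a transcription of expression (36): windows of size `w` are taken with step `s`, and if the step does not land exactly on the end of the sequence, one extra window covering the last `w` values is added. The function names and parameter names are hypothetical.

```python
from typing import List, Sequence


def window_starts(n: int, w: int, s: int) -> List[int]:
    """Start indices of fixed-size windows over a sequence of length n."""
    if w <= 0 or s <= 0:
        raise ValueError("window size and step must be positive")
    if n < w:
        raise ValueError("sequence is shorter than the window")
    starts = list(range(0, n - w + 1, s))
    if starts[-1] != n - w:
        starts.append(n - w)  # extra window made of the last w values
    return starts


def split_windows(sequence: Sequence, w: int, s: int) -> List[Sequence]:
    """Cut a time sequence into equally sized, possibly overlapping fragments."""
    return [sequence[i:i + w] for i in window_starts(len(sequence), w, s)]
```

For a sequence of 11 measurements with `w = 4` and `s = 3`, this yields the start indices 0, 3, 6, and 7, so every fragment has the same length and no trailing measurements are lost.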

Using expression (36), the entire initial time sequence is processed. The machine learning algorithm required to approximate expression (16) then takes as input a multidimensional vector of the format and returns a vector of size that assigns the fragment to a certain exercise category. The mapping is defined on the entire set of fragments of target point trajectories; even if a fragment initially has no exercise category , it can be assigned a new category , to which all unrecognized fragments are assigned [50].

Next, it is necessary to determine the optimal machine learning algorithm for solving the classification problem. Since multidimensional time sequences are processed, the following algorithms and architectures are chosen as possible solutions:

DecisionTreeClassifier: decision trees for multiclass classification; the input of the algorithm must be an array of the format (the number of examples, the number of features), which requires transformation:

KNeighborsClassifier: k-nearest neighbor classifier; the input data format is identical to decision trees and requires transformation (37);

RandomForestClassifier: a meta estimator that trains a set of decision trees; input data format needs to be converted (37);

NN: multilayer neural networks including dense layers;

LSTM: multilayer recurrent neural networks, including long short-term memory (LSTM) layers;

CNN: convolutional neural networks using 1D convolutional layers (Conv1D) to identify and generalize features in a time sequence;

CNN + Transformer: a combined neural network that first identifies the main features of the data using convolutional layers and then uses MultiHeadAttention layers to extract the features most important for the current class. MobileViT [51] is proposed as the basis for the architecture of this network, which requires transforming the input data to the following form:

The seven presented algorithms and architectures make it possible both to identify the best solution while selecting the hyperparameters of each one (for example, tree depth for the tree-based algorithms and the number of layers and neurons for the neural networks) and to determine their applicability for analyzing human movement data.
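The conversion to the (number of examples, number of features) format needed by the decision tree, k-nearest neighbor, and random forest classifiers can be sketched in plain Python. The layout assumed here, in which each fragment is a list of time steps and each time step is a list of (x, y, z) target points, and the function names are illustrative assumptions; the exact form of transformation (37) is not reproduced.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Fragment = Sequence[Sequence[Point]]  # time steps x target points x 3 axes


def flatten_fragment(fragment: Fragment) -> List[float]:
    """Flatten one fragment into a single feature vector."""
    return [c for step in fragment for point in step for c in point]


def to_feature_matrix(fragments: Sequence[Fragment]) -> List[List[float]]:
    """Build a matrix with one row per fragment and one column per feature."""
    matrix = [flatten_fragment(f) for f in fragments]
    if len({len(row) for row in matrix}) > 1:
        raise ValueError("all fragments must have the same shape")
    return matrix
```

The resulting nested list has the two-dimensional shape that scikit-learn estimators accept as training input, whereas the recurrent and convolutional networks can consume the unflattened fragments directly.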