Vehicle Detection and Tracking with Roadside LiDAR Using Improved ResNet18 and the Hungarian Algorithm

Abstract

1. Introduction

2. Related Work

2.1. Vehicle Detection and Tracking Using In-Vehicle LiDAR

2.2. Vehicle Detection and Tracking with Roadside LiDAR

3. Methodology

3.1. Vehicle Detection Based on ResNet18

3.1.1. BEV Transformation

3.1.2. ResNet18-Based Feature Extraction

3.1.3. 3D Proposals Network

3.1.4. Objective Optimization and Network Training

3.2. Vehicle Tracking at Three Stages

3.2.1. Object Matching for Consecutive Frames Based on the Improved Hungarian Algorithm

| Algorithm 1: Object matching pseudo-code |
|---|
| Input: All_object, current_frame_object, previous_frame_object |
| Output: All_object |
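The matching step can be illustrated with a minimal sketch. It assumes each object is reduced to a 2D centroid `(x, y)` and that the cost of a pairing is the Euclidean distance between centroids. The function name `match_objects` and the gating threshold `max_dist` are hypothetical, and an exhaustive search stands in for the Hungarian method here: it reaches the same minimum-cost assignment on small inputs but does not reproduce the improvements described in this section.

```python
from itertools import permutations
from math import hypot, inf


def match_objects(previous, current, max_dist=3.0):
    """Pair objects of two consecutive frames by minimum total centroid distance.

    previous, current: lists of (x, y) centroids (assumed representation).
    max_dist: hypothetical gate; pairs farther apart than this are rejected.
    Returns a list of (previous_index, current_index) pairs.
    """
    # Assign every object of the shorter list to a distinct object of the longer one.
    swap = len(previous) > len(current)
    short, long_ = (current, previous) if swap else (previous, current)

    def dist(i, j):
        return hypot(short[i][0] - long_[j][0], short[i][1] - long_[j][1])

    best_cost, best_perm = inf, ()
    # Exhaustive search over assignments: only practical for a handful of objects.
    for perm in permutations(range(len(long_)), len(short)):
        cost = sum(dist(i, j) for i, j in enumerate(perm))
        if cost < best_cost:
            best_cost, best_perm = cost, perm

    pairs = []
    for i, j in enumerate(best_perm):
        if dist(i, j) <= max_dist:
            # Always report indices in (previous, current) order.
            pairs.append((j, i) if swap else (i, j))
    return pairs


previous_frame_object = [(0.0, 0.0), (10.0, 0.0)]
current_frame_object = [(10.5, 0.0), (0.4, 0.1), (50.0, 50.0)]
print(match_objects(previous_frame_object, current_frame_object))
```

In this example the third object of the current frame stays unmatched, which a tracker would typically treat as a newly appeared vehicle.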

3.2.2. Object Merger in Fixed Time Intervals

| Algorithm 2: Merging of objects with interrupted trajectories |
|---|
| Input: All_Object |
| Output: Merger_Object (Object matrix that can be merged) |

| Algorithm 3: Whether and satisfy time-space logicality and trajectory similarity |
|---|
| Input: Attributes of vehicle . (.start_x, .start_time, .end_x, .end_time); Attributes of vehicle . (.start_x, .start_time, .end_x, .end_time) |
| Output: bool (Whether and satisfy space-time logicality and trajectory similarity) |

3.2.3. Completion of Missing Trajectories

| Algorithm 4: Complementary algorithm for missing trajectories |
|---|
| Input: The object containing the missing trajectory, in the form of a list, with the value of None for the missing trajectory (T_miss) |
| Output: Object after trajectory completion (T) |
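The input and output described for Algorithm 4 can be illustrated with a short sketch. It assumes that each trajectory point is an `(x, y)` tuple and that gaps are filled by linear interpolation between the nearest known points. Linear interpolation is an assumption made for illustration and may differ from the completion rule actually used. The function name `complete_trajectory` is hypothetical.

```python
def complete_trajectory(t_miss):
    """Fill None entries that lie between two known points by linear interpolation.

    t_miss: list of (x, y) tuples, with None marking missing frames.
    Leading or trailing None values have no neighbour on one side and are left as-is.
    """
    t = list(t_miss)
    known = [i for i, point in enumerate(t) if point is not None]
    # Walk over consecutive pairs of known frames and fill the frames between them.
    for left, right in zip(known, known[1:]):
        gap = right - left
        for k in range(1, gap):
            w = k / gap  # fraction of the way from the left point to the right point
            t[left + k] = tuple(a + w * (b - a) for a, b in zip(t[left], t[right]))
    return t


T_miss = [(0.0, 0.0), None, None, None, (4.0, 8.0)]
print(complete_trajectory(T_miss))
```

A constant-velocity assumption like this one is only reasonable for short gaps; longer interruptions would call for a motion model.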

4. Case Study

4.1. Experimental Preparation

4.1.1. Experimental Dataset Collection

- (1)

- KITTI dataset

- (2)

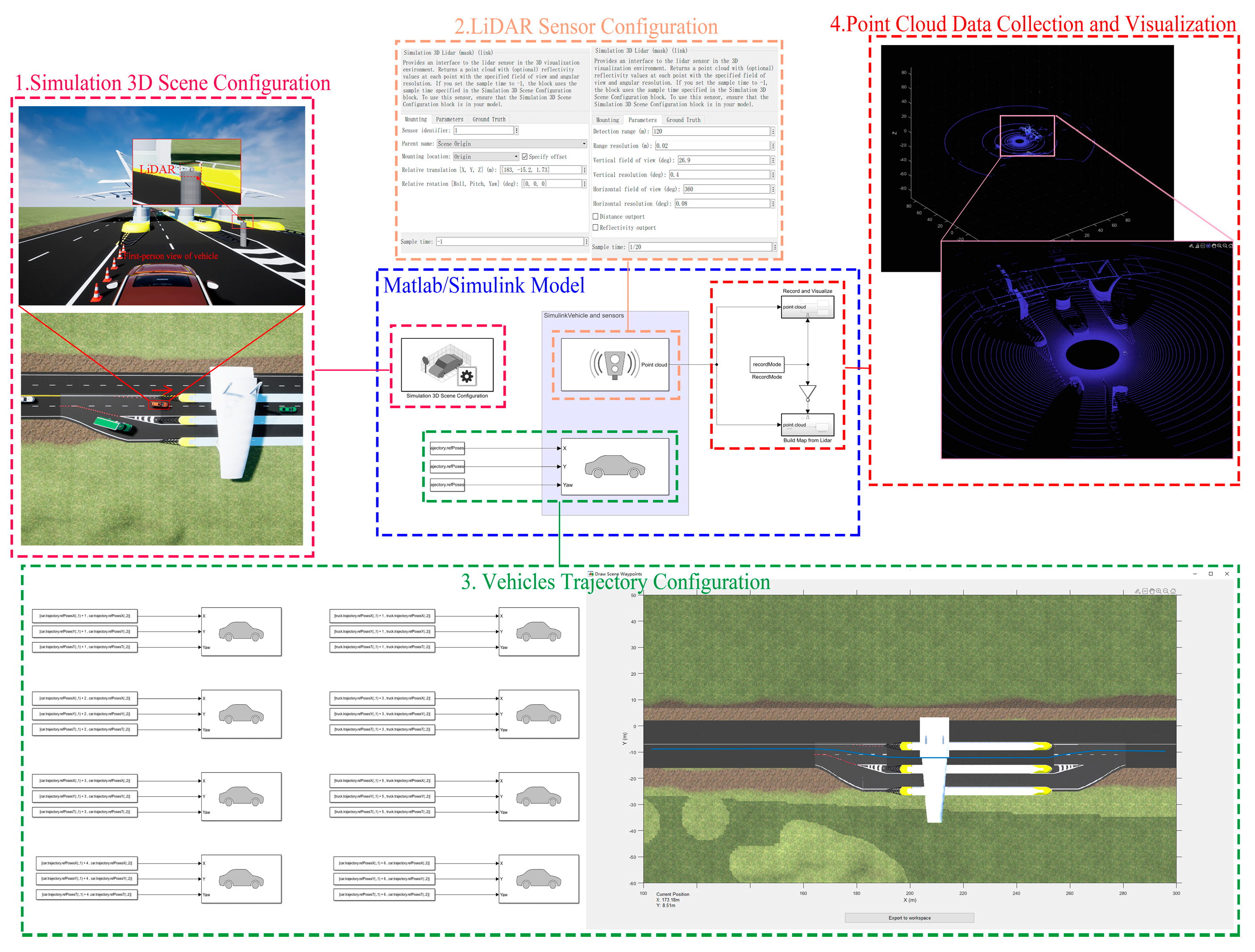

- MATLAB/Simulink dataset

4.1.2. Experimental Environment Configuration

4.2. Experimental Results and Evaluation

4.2.1. Vehicle Detection

- (1) Precision:
- (2) Recall:
- (3) F1-score:
- (4) FPS:
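The first three metrics follow from the true-positive (TP), false-positive (FP), and false-negative (FN) counts. The sketch below uses the standard definitions, Precision = TP / (TP + FP), Recall = TP / (TP + FN), and F1 = 2 · Precision · Recall / (Precision + Recall). The function name `detection_metrics` is hypothetical, and the example counts are those reported for the proposed method on the KITTI dataset.

```python
def detection_metrics(tp, fp, fn):
    """Return (precision, recall, f1) from detection counts.

    A ratio with an empty denominator is reported as 0.0 (a convention chosen here).
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Counts reported for the proposed method on KITTI: TP = 608, FP = 27, FN = 11.
p, r, f1 = detection_metrics(608, 27, 11)
print(f"precision={p:.2%} recall={r:.2%} f1={f1:.2%}")
```

FPS is a throughput measure (frames processed per second) and is obtained by timing inference rather than from these counts.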

4.2.2. Vehicle Tracking

- (1) MOTA:
- (2) MOTP:
- (3) IDSW:
- (4) ID-F1:
- (5) ATSF:
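Three of these metrics have widely used definitions, sketched below. The sketch assumes the CLEAR MOT form of MOTA, 1 − (FN + FP + IDSW) / GT, a distance-based MOTP (mean localization error over matched pairs, so lower is better), and ID-F1 = 2 · IDTP / (2 · IDTP + IDFP + IDFN). The function names and the example counts are hypothetical and are not results from this study. ATSF is not covered because its formula is not given here.

```python
def mota(fn, fp, idsw, gt):
    """Multiple object tracking accuracy; gt is the total number of ground-truth objects."""
    if gt <= 0:
        raise ValueError("gt must be positive")
    return 1.0 - (fn + fp + idsw) / gt


def motp(distances):
    """Mean distance between matched predictions and ground truth (lower is better)."""
    return sum(distances) / len(distances) if distances else 0.0


def id_f1(idtp, idfp, idfn):
    """F1 score computed over identity-level true/false positives and false negatives."""
    denom = 2 * idtp + idfp + idfn
    return 2 * idtp / denom if denom else 0.0


# Hypothetical counts, for illustration only.
print(mota(fn=5, fp=3, idsw=2, gt=100))
print(motp([0.1, 0.2, 0.3]))
print(id_f1(idtp=90, idfp=5, idfn=5))
```

IDSW itself is a plain count of how often a tracked vehicle changes its assigned identity, so it needs no formula beyond the tally that feeds into MOTA.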

5. Conclusions and Discussion

5.1. Conclusions

5.2. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Parameter | Parameter Value | Parameter Description |
|---|---|---|
| Seed | 2023 | Random seed used to shuffle the dataset and validation set |
| Epochs | 300 | Number of passes over all training samples |
| Batch_size | 16 | Batch size of the current node GPU |
| Lr | | Initial learning rate |
| Lr_type | cosine | Type of learning rate scheduler |
| Lr_min | | Minimum learning rate |
| Optimizer | SGD | Optimizer type |
| Checkpoint_freq | 5 | Checkpoint saving frequency |
| max_objects | 50 | Maximum number of detected objects per frame |
| down_ratio | 4 | Down-sampling rate to reduce computation while maintaining point cloud features |
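The `Lr`, `Lr_type`, and `Lr_min` parameters together describe a cosine learning rate schedule. A minimal sketch follows, assuming the common cosine annealing form without restarts, lr(t) = Lr_min + 0.5 · (Lr − Lr_min) · (1 + cos(π · t / Epochs)). The function name `cosine_lr` and the learning rate values in the example are hypothetical, since the table does not list them. Only the 300-epoch horizon is taken from the table.

```python
import math


def cosine_lr(epoch, total_epochs, lr_init, lr_min):
    """Learning rate at a given epoch under cosine annealing without restarts."""
    # Clamp the epoch so the rate stays within [lr_min, lr_init].
    progress = min(max(epoch, 0), total_epochs) / total_epochs
    return lr_min + 0.5 * (lr_init - lr_min) * (1.0 + math.cos(math.pi * progress))


# Hypothetical rates; Epochs = 300 as in the table above.
for epoch in (0, 75, 150, 225, 300):
    print(epoch, cosine_lr(epoch, 300, lr_init=0.01, lr_min=0.0001))
```

The rate starts at the initial value, decays slowly at first, fastest around the midpoint, and flattens out as it approaches the minimum.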

| Algorithm | KITTI TP | KITTI FP | KITTI FN | MATLAB/Simulink TP | MATLAB/Simulink FP | MATLAB/Simulink FN |
|---|---|---|---|---|---|---|
| PointNet++ | 536 | 78 | 83 | 483 | 37 | 42 |
| VoxelNet | 557 | 49 | 62 | 487 | 33 | 38 |
| Our method | 608 | 27 | 11 | 520 | 10 | 5 |

| Algorithm | KITTI Precision | KITTI Recall | KITTI F1-Score | KITTI FPS | MATLAB/Simulink Precision | MATLAB/Simulink Recall | MATLAB/Simulink F1-Score | MATLAB/Simulink FPS |
|---|---|---|---|---|---|---|---|---|
| PointNet++ | 87.29% | 86.59% | 86.94% | 2.1 | 92.88% | 92.00% | 92.44% | 2.8 |
| VoxelNet | 91.91% | 89.98% | 90.94% | 5.0 | 95.67% | 92.76% | 94.20% | 6.7 |
| Our method | 95.75% | 98.22% | 96.97% | 35.9 | 98.11% | 99.05% | 98.58% | 38.9 |

| Dataset | Method | MOTA↑ | MOTP↓ | IDSW↓ | ID-F1↑ | ATSF↓ |
|---|---|---|---|---|---|---|
| KITTI | Traditional Hungarian algorithm | 63.15% | 0.18 | 7 | 68.30% | 0.009 |
| KITTI | Our method | 88.12% | 0.20 | 0 | 95.16% | 0.018 |
| MATLAB/Simulink | Traditional Hungarian algorithm | 69.38% | 0.16 | 23 | 65.81% | 0.007 |
| MATLAB/Simulink | Our method | 90.56% | 0.17 | 4 | 96.43% | 0.021 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lin, C.; Sun, G.; Wu, D.; Xie, C. Vehicle Detection and Tracking with Roadside LiDAR Using Improved ResNet18 and the Hungarian Algorithm. Sensors 2023, 23, 8143. https://doi.org/10.3390/s23198143
