1. Introduction

The manufacturing industry is a crucial element of a country’s comprehensive national strength and a foundational industry of its economy. The quality of manufactured goods is a determining factor in the country’s competitiveness and reputation. During production, the quality of the goods can be affected to varying degrees by technical and environmental factors. Surface defects are often the most recognisable sign of deteriorating product quality.

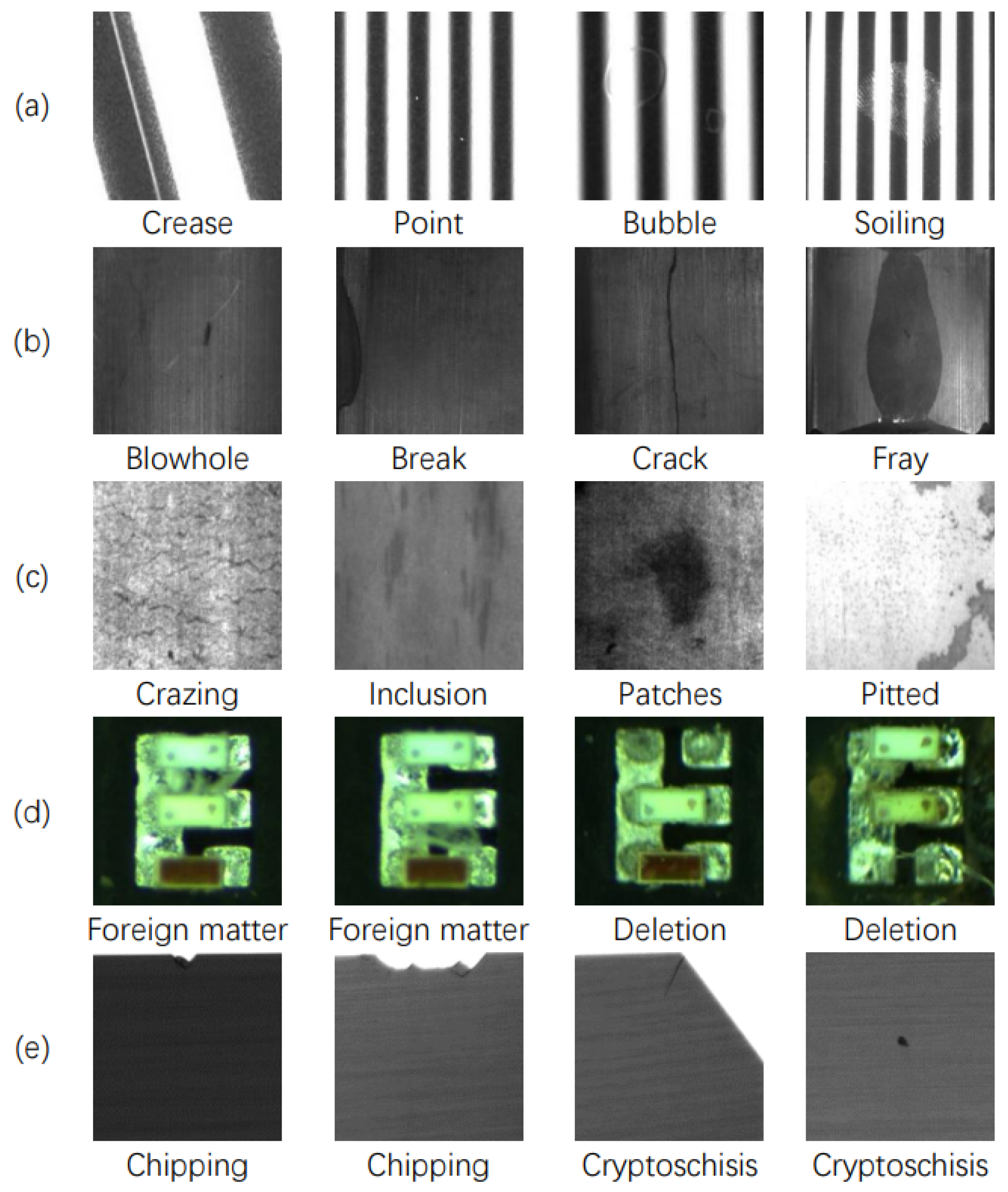

Surface defects can refer to missing, damaged, or deformed product characteristics when compared to normal specimens [1]. Industrial surface defects are a general term for a range of surface defects that can appear on industrial product materials and affect product quality. Based on surface stiffness, industrial surface defects can be divided into two categories: surface defects of flexible products and surface defects of rigid products. Characteristically, surface defects of flexible products show bubbles, wrinkles, and point defects among others, whereas surface defects of rigid products usually present cracks, chippings, abrasion, and scratches. Common defects are depicted in Figure 1.

Inspection of industrial surface defects has the potential to enhance product quality while also reducing manufacturing costs and time. Despite this, a large number of industrial products continue to rely on manual inspection, which makes it difficult to effectively control time, cost, and quality. This situation is not conducive to the further development of the manufacturing industry. Therefore, the development of intelligent defect detection technology is essential.

Intelligent defect detection refers to the automated or semi-automated inspection of industrial product surfaces using machine vision technology. This technology can be categorised into traditional methods and deep learning-based methods. Traditional defect detection techniques typically require the manual design of feature extractors and classifiers. As a result, they are often difficult to adapt to complex and changing industrial scenarios, and they lack generalisation ability and robustness. Deep learning-based defect detection techniques use neural networks to learn defect features automatically from a large amount of data, which leads to efficient and accurate defect detection.

However, deep learning-based defect detection techniques also encounter several challenges. Firstly, deep learning models require a large number of labelled, high-quality defect samples for training, but such samples are difficult to obtain because industrial defect data are costly and hard to collect.

Moreover, industrial surface defects often follow a long-tailed distribution: a few defect types account for a large proportion of all defects, while the remaining types account for only a small proportion. Samples of these rarer types are therefore scarce, which poses a major challenge to the detection task. To overcome these issues in practice, data augmentation techniques are often employed when the available defect samples are too few to cover the defect distribution they represent. Traditional data augmentation methods, such as geometric transformations (for example, rotations and flips), are commonly used to enhance a deep learning model’s generalisation ability. However, these methods can only transform the existing data, which makes it difficult to supply information about other defect patterns. To resolve this, a new approach has been proposed that supplements the defect sample data by constructing synthetic defect images. The technique simulates and synthesises defect images of various types, shapes, sizes, and locations using artificial intelligence techniques, thereby augmenting the existing dataset. It can introduce more unseen defect information into the deep learning model and thereby increase the accuracy of defect detection. Several studies [3,4,5,6,7] have demonstrated that generated defect data can significantly improve the accuracy of defect detection. Consequently, the technique enhances the training effect and generalisation ability of deep learning models, and it has broad application prospects in the manufacturing industry.

In the field of image generation, traditional methods create images via image processing techniques. Within deep learning, generative models fall into several categories, including autoregressive models [8], variational autoencoders [9], flow-based models [10], generative adversarial networks (GANs) [11], and diffusion models [12]. Because an autoregressive model generates an image pixel by pixel, it offers either poor performance or high cost for large-scale image generation. The variational autoencoder lacks the adversarial game and tends to generate blurred images, so it is not as adept as a GAN at generating detailed images. The flow-based model, in turn, is computationally expensive and complex. By contrast, the GAN generates realistic images through adversarial training, and it has gained significant attention in the field of image generation since its proposal in 2014. GANs have been widely applied in texture synthesis [13,14], image super-resolution [15,16,17,18], image restoration [19,20,21,22], image translation [23,24,25], image editing [26,27], and multimodal image synthesis [28,29,30]. A diffusion model is a type of generative model that produces high-quality images from noise. The denoising diffusion probabilistic model, introduced in 2020 [31], is one such model. Diffusion models are a type of latent variable model; unlike other latent variable models such as the variational autoencoder (VAE), their inference process is typically fixed. Additionally, the latent space of diffusion models is interpretable, allowing us to understand how changes in the latent variables affect the generated outputs. This can be beneficial in applications where we wish to control distinct features of the generated outputs. Intensive research has made diffusion models one of the most significant areas of computer vision in recent times. Diffusion models can generate higher-quality images than GANs without requiring a discriminator model. However, they are slower and more computationally intensive to train. Although there have been many studies on diffusion models, our investigation indicates that no one has yet used them to generate defect images of industrial surfaces.

Currently, there is limited research available on the topic of industrial surface defect generation. Figure 2 shows the classification of these works. This paper categorises the methods of industrial defect image generation into traditional and deep learning methods. Specifically, traditional methods include generation methods based on computer-aided techniques and digital image processing. Deep learning methods are classified into two categories: generating defect images from noise and generating defect images from images, where the latter can be further divided into methods that require paired data and methods that do not. This paper, to our knowledge, is the first review that categorises and summarises both traditional and deep learning methods for defect image generation. As shown in Figure 3, works on traditional defect image generation methods appeared earlier than those on deep learning-based methods, which only began to emerge in 2019. Since then, the number of papers published on both kinds of methods has shown a consistent upward trend, suggesting an increased interest in this field. Consequently, it would be beneficial to provide a research summary and analysis of the related works, which can serve as a reference for future researchers in the field of intelligent defect inspection.

This paper provides a comprehensive review and analysis of the methods for generating industrial surface defect images, which are essential for improving the performance and robustness of intelligent defect detection systems. The main contributions of this paper are as follows: Firstly, we propose a novel classification scheme for the methods of industrial defect image generation, based on whether they use traditional or deep learning techniques, and whether they require paired data or not. We also provide a detailed introduction and comparison of the existing methods in each category, highlighting their advantages, disadvantages, and applications. Secondly, we conduct extensive experiments on three representative adversarial networks (DCGAN, Pix2pix, and CycleGAN) and a diffusion model (DDPM) to generate defect images on various public datasets. We evaluate the quality and diversity of the generated images using various metrics, such as FID, IS, PSNR, SSIM, and LPIPS. We also present qualitative results to demonstrate the visual effects of different methods. To the best of our knowledge, this is the first work that establishes a benchmark for industrial defect image generation using deep learning methods. Thirdly, we identify the current challenges and limitations of the existing methods for industrial defect image generation, such as data scarcity, data imbalance, defect diversity, defect realism, and defect controllability. We also discuss the possible future research directions and opportunities in this field, such as incorporating domain knowledge, exploiting multimodal data, enhancing model interpretability, and developing end-to-end networks.

The rest of the paper is organised as follows. Section 2 describes industrial defect image generation based on traditional methods, while Section 3 describes the same based on deep learning. Section 4 discusses commonly used public defect datasets and evaluation metrics for image generation, while Section 5 presents comparative experiments on industrial defect image generation using deep learning networks. Section 6 highlights the shortcomings of existing methods and suggests future research directions. Finally, Section 7 concludes the paper. Figure 4 shows the overall structure of the paper.

3. Deep Learning Based Defective Image Generation

In recent years, the rapid advancement of deep learning has led to several deep learning techniques being employed in industrial defective image generation. For instance, Siu C F et al. [45] transformed simulated defective images into realistic defective images using a style transfer network, whereas Yun J P et al. [46] proposed a method based on a conditional convolutional variational autoencoder (CCVAE) to generate defective images. Moreover, defect image generation based on the generative adversarial network (GAN) has gained considerable traction in research. Therefore, this section primarily summarises and introduces the GAN and its applications to defective image generation.

GAN is an unsupervised deep learning model built around the optimisation of a two-player game. The generator learns the feature distribution of the training data and generates fake images to deceive the discriminator, while the discriminator determines whether an input image is real or a fake produced by the generator. Consequently, the optimisation process of GAN can be represented as a minimax problem whose objective function is given by Equation (2):

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{data}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))] \tag{2}$$

In Equation (2), $G$ is the generator, $D$ is the discriminator, $z$ is the noise input, $G(z)$ is the generated data, $D(x)$ is the probability that $x$ is real data, $p_{data}$ denotes the distribution of the real data, $p_z$ denotes the distribution of the noise from which the generated data are produced, and $\mathbb{E}$ represents the expected value. Equation (2) can be divided into two parts that reflect the discriminator’s and the generator’s respective objective functions, as described in Equations (3) and (4):

$$\max_D V(D) = \mathbb{E}_{x \sim p_{data}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))] \tag{3}$$

$$\min_G V(G) = \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))] \tag{4}$$
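As a minimal numerical sketch of the two objectives (an illustration, not part of the reviewed methods), the snippet below evaluates the discriminator and generator losses implied by Equations (3) and (4) on a handful of discriminator outputs. The function names and the example probabilities are hypothetical; in practice these quantities are computed on mini-batches inside a deep learning framework.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Negated discriminator objective: -(mean log D(x) + mean log(1 - D(G(z))))."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)

def generator_loss(d_fake):
    """Generator objective to minimise: mean log(1 - D(G(z)))."""
    return sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs (probability that a sample is real).
d_real = [0.9, 0.8, 0.95]  # D(x) on real defect images
d_fake = [0.1, 0.2, 0.05]  # D(G(z)) on generated images

print("discriminator loss:", discriminator_loss(d_real, d_fake))
print("generator loss:", generator_loss(d_fake))
```

The discriminator loss falls as $D(x)$ approaches 1 and $D(G(z))$ approaches 0, whereas the generator loss falls as $D(G(z))$ approaches 1, which is the adversarial tension described in the text.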

Equation (3) seeks to maximise the output of $D(x)$ while minimising the output of $D(G(z))$; the discriminator’s ability to distinguish between real and generated data is critical here. Similarly, Equation (4) aims to maximise the output of $D(G(z))$, so that the generator produces data that deceives the discriminator. GAN training can be unstable due to its adversarial nature. To stabilise training and cater to a variety of tasks, several GAN variants have been proposed. Some of the more commonly used ones are introduced below.

DCGAN [70]: DCGAN provides a convolution-based GAN structure and verifies that discriminators can be used for feature extraction and generators for semantic vector arithmetic.

Pix2pix [24]: Pix2pix is a conditional GAN that takes labelled images as the condition and performs style transfer over paired data.

CycleGAN [71]: CycleGAN contains two GAN structures. The first converts the source domain to the target domain and the second converts the target domain back to the source domain, which allows specific features of an image to be transformed.

SAGAN [72]: SAGAN considers global information at each level without introducing an excessive number of parameters, striking a good balance between enlarging the receptive field and limiting the number of parameters.

StyleGAN [29]: StyleGAN generates images progressively from low to high resolution and can control the visual features expressed at each level by modifying the inputs to that level.

In addition to the adversarial loss in Equation (2), GAN training often uses other loss functions; some common ones are described below.

Reconstruction loss: The reconstruction loss is generally used to train an autoencoder to transform images at the pixel level, with the loss function shown in Equation (5):

$$\mathcal{L}_{rec} = \lVert Dec(Enc(x)) - y \rVert_n \tag{5}$$

In Equation (5), $\lVert \cdot \rVert_n$ denotes the $n$-norm, where $n$ generally takes the value 1 or 2, $x$ is the input data, $y$ is the target data, $Enc$ is the encoder, and $Dec$ is the decoder.
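The reconstruction loss can be illustrated with a toy example. The sketch below assumes a deliberately trivial encoder (averaging neighbouring pixels) and decoder (repeating each code value); both are hypothetical stand-ins for trained networks and serve only to show how the 1-norm and 2-norm are computed between the decoded output and the target.

```python
def encode(row):
    """Toy encoder (assumption): average each pair of neighbouring pixels."""
    return [(row[i] + row[i + 1]) / 2.0 for i in range(0, len(row), 2)]

def decode(code):
    """Toy decoder (assumption): repeat each code value twice."""
    return [v for v in code for _ in range(2)]

def reconstruction_loss(x, y, n=1):
    """n-norm between the decoded output for input x and the target y (n = 1 or 2)."""
    output = decode(encode(x))
    diffs = [abs(o - t) for o, t in zip(output, y)]
    if n == 1:
        return sum(diffs)
    if n == 2:
        return sum(d * d for d in diffs) ** 0.5
    raise ValueError("n must be 1 or 2")

# One row of pixel intensities (hypothetical); the target equals the input,
# as in a plain autoencoder.
x = [0.0, 0.2, 1.0, 1.0]
print("L1:", reconstruction_loss(x, x, n=1))
print("L2:", reconstruction_loss(x, x, n=2))
```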

Cyclic consistency loss: The purpose of the cyclic consistency loss is to ensure that translated samples retain the content of their input samples. It usually requires a pair of GAN structures, and its loss function is shown in Equation (6):

$$\mathcal{L}_{cyc} = \lVert F(G(x)) - x \rVert_n + \lVert G(F(y)) - y \rVert_n \tag{6}$$

As before, $\lVert \cdot \rVert_n$ in Equation (6) denotes the $n$-norm, where $n$ generally takes the value 1 or 2; $G$ and $F$ denote the two generators, and $x$ and $y$ denote the source domain data and the target domain data, respectively.
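A small sketch may help to make the cyclic consistency idea concrete. It assumes two toy generators that shift pixel intensities (hypothetical stand-ins for trained networks) and uses a mean absolute error, that is, a normalised 1-norm: when the second generator exactly undoes the first, the loss vanishes, and any mismatch is penalised.

```python
def l1(a, b):
    """Mean absolute difference between two equally sized pixel lists."""
    return sum(abs(u - v) for u, v in zip(a, b)) / len(a)

def cycle_consistency_loss(G, F, x, y):
    """L1 form of the loss: |F(G(x)) - x| + |G(F(y)) - y|."""
    return l1(F(G(x)), x) + l1(G(F(y)), y)

# Toy generators (assumptions): G maps source to target by brightening,
# F maps target back to source by darkening.
def G(img):
    return [v + 0.5 for v in img]

def F_exact(img):
    return [v - 0.5 for v in img]   # exact inverse of G

def F_biased(img):
    return [v - 0.4 for v in img]   # imperfect inverse of G

x = [0.1, 0.2, 0.3]  # source-domain sample (hypothetical)
y = [0.6, 0.7, 0.8]  # target-domain sample (hypothetical)

print("exact inverse:", cycle_consistency_loss(G, F_exact, x, y))
print("biased inverse:", cycle_consistency_loss(G, F_biased, x, y))
```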

WGAN loss [73]: GAN training is prone to instability and mode collapse. The WGAN loss makes training more stable by minimising the Wasserstein distance while satisfying Lipschitz continuity [74]. There are many variants of the WGAN loss, of which Equation (7) is the most common:

$$\mathcal{L}_{WGAN} = \mathbb{E}_{z \sim p_z}[D(G(z))] - \mathbb{E}_{x \sim p_{data}}[D(x)] + \lambda\, \mathbb{E}_{\hat{x}}\big[(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1)^2\big] \tag{7}$$

where $\hat{x}$ refers to uniform sampling along a straight line between a pair of real and generated samples, $\lambda$ is a hyperparameter, $x$ represents the source domain data, and $z$ denotes noise.
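The interpolation between real and generated samples described above corresponds to the gradient-penalty form of the WGAN loss. The following sketch is a simplified illustration under a strong assumption: the critic is linear, $D(x) = w \cdot x$, so its gradient with respect to the input is simply $w$ and no automatic differentiation is needed. The penalty weight of 10 is a commonly used default, not a value taken from the reviewed works.

```python
import random

def critic(w, x):
    """Linear critic D(x) = w . x (an assumption that keeps the gradient analytic)."""
    return sum(wi * xi for wi, xi in zip(w, x))

def gradient_penalty(w, real, fake, rng):
    """Return x_hat and (||grad D(x_hat)||_2 - 1)^2 for one real/generated pair."""
    eps = rng.random()  # uniform position on the line between the two samples
    x_hat = [eps * r + (1.0 - eps) * f for r, f in zip(real, fake)]
    # For a linear critic the gradient at x_hat is w, wherever x_hat lies.
    grad_norm = sum(wi * wi for wi in w) ** 0.5
    return x_hat, (grad_norm - 1.0) ** 2

def wgan_gp_critic_loss(w, reals, fakes, lam=10.0, seed=0):
    """Critic loss: E[D(G(z))] - E[D(x)] + lam * E[gradient penalty]."""
    rng = random.Random(seed)
    fake_score = sum(critic(w, f) for f in fakes) / len(fakes)
    real_score = sum(critic(w, r) for r in reals) / len(reals)
    penalties = [gradient_penalty(w, r, f, rng)[1] for r, f in zip(reals, fakes)]
    return fake_score - real_score + lam * sum(penalties) / len(penalties)

reals = [[1.0, 1.0]]  # hypothetical real sample
fakes = [[0.0, 0.0]]  # hypothetical generated sample
print(wgan_gp_critic_loss([0.6, 0.8], reals, fakes))  # unit-norm gradient, no penalty
print(wgan_gp_critic_loss([3.0, 4.0], reals, fakes))  # gradient norm 5, heavily penalised
```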

GAN-based defect generation methods fall into two types, depending on the input data provided to the model: one takes latent noise vectors as input, while the other takes images. The image-based methods are further divided into those that require paired data and those that do not.

3.1. Generating Defect Images from Noise

To enhance the diversity of the original data and to compensate for the small number of defect samples, which do not cover the defect distribution they represent, noise can be fed into the network as input to generate random images. Figure 9 shows the general flowchart for image generation, where $z$ represents noise, $G$ denotes the generator, $D$ denotes the discriminator, $x$ is the real defect image, and $x'$ refers to the generated defect image.

Xie Yuan [47] employed the DCGAN model structure and extended the discriminator’s output dimension to devise a semi-supervised model for generating defective images. Converting the noise into a different form can sometimes enhance the model’s learning capability. For instance, Jin Zhang [48] converted the input noise latent space of the network into a Gaussian mixture model to enhance the image generation network’s capacity to learn from limited training samples with inter- and intra-class diversity. However, neither of these approaches yields high-resolution images; when high-quality images are required, they can be obtained through progressive generation. For example, Dongzeng Tan [49] combined progressive growth, auxiliary classifiers, and mutual information maximisation on the basis of DCGAN to generate class-controllable and morphology-adjustable tire defect images under a specified background condition. Here, progressive growth refers to a training procedure that starts with low-resolution images and gradually increases the resolution until high-resolution images are generated. The framework is illustrated in Figure 10.
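To illustrate what replacing a single Gaussian noise prior with a Gaussian mixture means, the sketch below draws latent vectors from a mixture with fixed, hypothetical component means and standard deviations. It is only an assumption-laden illustration: in the approach of [48] the mixture is part of the network and learned, whereas here the parameters are hard-coded.

```python
import random

def sample_gmm_latent(means, sigmas, rng):
    """Pick a mixture component uniformly, then sample z around its mean."""
    k = rng.randrange(len(means))
    z = [rng.gauss(m, sigmas[k]) for m in means[k]]
    return k, z

# Two hypothetical components in a 2-D latent space.
means = [[-2.0, -2.0], [2.0, 2.0]]
sigmas = [0.1, 0.1]

rng = random.Random(0)
for _ in range(3):
    component, z = sample_gmm_latent(means, sigmas, rng)
    print(component, [round(v, 3) for v in z])
```

Each component can then be associated with one mode of the data, so that samples drawn from different components encourage inter-class diversity while the spread within a component provides intra-class diversity.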

To create controllable defect images or improve image quality, one can add conditional information or increase the number of discriminators or classifiers in the GAN. For instance, He Y et al. [50] used cDCGAN to generate defect images, whereas Kun Liu et al. [51] introduced the NSGGAN network structure, whose discriminator receives three types of input: the generated image, the real image with defects, and the real image without defects. Meng Q et al. [52] put forward a two-channel generative adversarial network architecture known as Dual-GAN, whose discriminator verifies the authenticity of the entire image, recognises the local defect areas, and labels the defect types. Guo J et al. [53] added two new connections to SAGAN to balance the output of the two generators and reflect the differences between the images they produce. Meanwhile, Liu J et al. [54] employed a conditional GAN that uses feature information extracted from normal samples by a VGG network as the conditional signal to generate defects.
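One common way of adding conditional information to a GAN is to concatenate a one-hot class label with the noise vector before it enters the generator. The sketch below shows only this input construction; the defect class names are hypothetical, and the reviewed methods may inject the condition differently (for example, through feature maps).

```python
import random

def one_hot(label, num_classes):
    """Encode an integer class label as a one-hot list."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]

def conditional_generator_input(noise, label, num_classes):
    """Concatenate the noise vector z with the one-hot condition vector."""
    return noise + one_hot(label, num_classes)

classes = ["crack", "scratch", "bubble"]  # hypothetical defect types
rng = random.Random(0)
z = [rng.gauss(0.0, 1.0) for _ in range(4)]

# Request a "scratch" sample by conditioning on its class index.
g_input = conditional_generator_input(z, classes.index("scratch"), len(classes))
print(len(g_input), g_input[-3:])
```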

To capture subtle defects or defects that are related to the background image, an attention mechanism can be introduced to obtain a global receptive field. For instance, Wang C et al. [4] used TransGAN to generate defect images. Hu et al. [55] improved generation quality by introducing a structural similarity loss function and an attention module on the basis of ConSinGAN [75]. To address data imbalance, Li W et al. [56] proposed a new generative adversarial network, EID-GANs, for augmenting extremely imbalanced data.

Combining label condition information in GAN with reconstructed label information can enhance the quality of generated data, and ACGAN [76] incorporates both approaches. Liu J et al. [5] introduced focal loss to ACGAN, which effectively addresses the issue of data imbalance. Chang Jiang et al. [57] modified the structure of ACGAN by branching the last convolutional feature map of the discriminator, flattening it, and fully connecting it to the output layers that judge authenticity and category; this improved the model’s fitting ability and reduced its number of parameters. They also removed the discriminator’s fully connected layer and added a hidden layer. Yu J et al. [6] placed an auxiliary feature extractor before the generator of the ACGAN model; the extracted multi-granular features and the noise together serve as inputs to the generator.
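The reason focal loss helps with imbalanced data can be seen from its definition, $FL(p_t) = -\alpha (1 - p_t)^{\gamma} \log(p_t)$, where $p_t$ is the predicted probability of the correct class. The sketch below is a per-sample illustration with commonly used default values for $\gamma$ and $\alpha$ (assumptions, not the settings of [5]): well-classified samples are strongly down-weighted, so rare and hard samples dominate the gradient.

```python
import math

def focal_loss(p_true, gamma=2.0, alpha=1.0):
    """Focal loss for one sample; p_true is the predicted probability of the correct class."""
    return -alpha * (1.0 - p_true) ** gamma * math.log(p_true)

def cross_entropy(p_true):
    """Standard cross-entropy for one sample, for comparison."""
    return -math.log(p_true)

for p in (0.9, 0.5, 0.1):  # easy, ambiguous, and hard samples (hypothetical)
    print(p, round(cross_entropy(p), 4), round(focal_loss(p), 4))
```

With $\gamma = 0$ the focal loss reduces to the ordinary cross-entropy, which makes $\gamma$ a convenient knob for how strongly easy samples are suppressed.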

Images can also be generated according to the characteristics of the defect data. For instance, Liu Ronghua [58] captured specific images of industrial defects and encoded their location information. The encoded images were then fed into StyleGAN to generate new samples, which were finally fused with the location information using an image fusion method.

Table 3 summarises the methods for generating defect images from noise.

3.2. Generating Defective Images from Images

Although the latent vector input method introduces some randomness, the quality of the generated images is often inadequate, and intricate parts are especially difficult to produce. Employing an image as input data, by contrast, retains essential information from the original image and produces a defect image that is more lifelike and contains more detailed texture information.

3.2.1. Methods Requiring Paired Data

For paired data, a defect image and a corresponding label map must be available. This ensures that the background texture of the input image is preserved to the greatest possible extent, leading to a high-quality generated defect image. The overall flowchart of the method is depicted in Figure 11, where $y$ denotes the labelled image, $x$ denotes the real defect image, and $x'$ denotes the generated defect image. Each pair comprises the images $y$ and $x$, and the generator and discriminator are denoted by $G$ and $D$, respectively. Pairs of $x$ and $y$ are used as inputs for the approach.

Zaman [59] generated anomalous bone surface images using a Pix2pix model whose structure is similar to that shown in Figure 11. Qiu [60] fed labelled defect images into the CycleGAN network to guide the production of defect-free images. Liu [61] proposed a novel GAN-based framework for defect-free samples, which employs dedicated loss functions and an encoder-decoder structure to generate localised defects while keeping the defect-free region unchanged; they also introduced wavelet fusion into the process. Niu [77] designed a GAN-based generative network that uses defect masks to control the location of the generated defects according to the characteristics of industrial defects. They further constructed a defect direction vector module that controls the intensity of the generated defects and a defect attention module that strengthens attention to the defect region.

Table 4 summarises the methods that require paired data.

3.2.2. Methods Not Requiring Paired Data

Although methods that utilise paired data have the potential to generate high-quality defect images, in practice, acquiring the necessary paired training data can be challenging, and manual labelling is often laborious and time-consuming. In this scenario, an approach similar to CycleGAN that does not require paired data can be employed to produce defect images. Figure 12 and Figure 13 demonstrate the general flow of the method that employs unpaired data.

Generally, methods that do not employ paired data use two loops that can be trained to generate defect images without paired inputs. Here, x denotes the real defect image, n represents the real normal image, y indicates the labelled image, x’ stands for the generated defect image, n’ represents the generated normal image, and y’ is the generated labelled image. G1 and G2 refer to the two generators, while D1 and D2 correspond to the two discriminators.

As shown in Figure 14, the cyclic consistency loss has two parts. In the first part, an input $x$ is transformed into $\hat{y}$ by the generator $G$, and both $\hat{y}$ and the real image $y$ are sent to the discriminator $D_Y$. After this, $\hat{y}$ is transformed into $\hat{x}$ by the generator $F$, and both $x$ and $\hat{x}$ are sent to the discriminator $D_X$, which completes the first part of the loop. The second part repeats the process with input $y$, mirroring the first part. In this context, $y$ represents the target image and $x$ represents the original image. The cyclic consistency preserves the background information of $x$ and only transforms the corresponding transformation domain, which is the area where $x$ and $y$ share certain features. For example, zebra and wild horse images share a transformation domain, namely the horse. During network training, weights are preferentially updated in the areas where this is easiest, that is, where the images share common features, which ensures that the background information of the images is preserved.

Tsai D M et al. [62] generated the desired defect images directly with CycleGAN. Rippel et al. [63] adapted CycleGAN into a segmentation-mapping-based structure to generate defect images, and introduced least squares error and spectral normalisation to improve training stability. Niu S et al. [64] added a D2 adversarial loss, that is, the loss of one additional discriminator, to a GAN with a cyclic consistency structure, which allows the model to generate more defect samples. The defect features generated by these methods are rather random; information such as defect masks can be introduced into the network to generate specified defect shapes. For example, Yang B et al. [7] proposed a conditional GAN that controls the shape, size, angle, and other features of defects through defect masks and also adopts a cyclic consistency structure, while Wu X et al. [3] proposed the ResMask GAN network for generating defect images, which uses random masks as part of the network inputs to control the locations and sizes of defect regions. They also designed two discriminators, one for judging the authenticity of the defective part of the image and the other for judging the authenticity of the whole image.
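The least squares error mentioned above for [63] replaces the logarithmic adversarial loss with a squared error between the discriminator output and its target label. The sketch below uses the usual 0/1 target coding for generated and real samples; the function names and example scores are hypothetical and not taken from the cited work.

```python
def lsgan_discriminator_loss(d_real, d_fake):
    """0.5 * (mean (D(x) - 1)^2 + mean D(G(z))^2): real -> 1, generated -> 0."""
    real_term = sum((p - 1.0) ** 2 for p in d_real) / len(d_real)
    fake_term = sum(p ** 2 for p in d_fake) / len(d_fake)
    return 0.5 * (real_term + fake_term)

def lsgan_generator_loss(d_fake):
    """0.5 * mean (D(G(z)) - 1)^2: the generator wants its samples scored as real."""
    return 0.5 * sum((p - 1.0) ** 2 for p in d_fake) / len(d_fake)

d_real = [0.9, 1.1]  # hypothetical discriminator scores on real images
d_fake = [0.2, 0.0]  # hypothetical discriminator scores on generated images
print(lsgan_discriminator_loss(d_real, d_fake))
print(lsgan_generator_loss(d_fake))
```

Because the squared error keeps growing with the distance from the target, samples that lie far from the decision boundary still produce useful gradients, which is one reason this loss tends to train more stably.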

An autoencoder is a semi-supervised or unsupervised neural network with two parts, an encoder and a decoder. After the encoder learns a representation of the input data, the decoder can restore the input from that representation. Its structure is shown in Figure 15.

This structure can extract the feature information of an image effectively, which makes it well suited to image generation, so introducing an autoencoder structure into the GAN framework can help generate defect images. For example, Hoshi T et al. [65] added encoder and decoder structures to the generator and discriminator within the CycleGAN framework and added an attention mechanism between them, while Zhang G et al. [66] added a weight-sharing autoencoder structure to the CycleGAN model, added adaptive noise during training to increase the diversity of the generated defect samples, and added a spatial and categorical control map to control the category and location of defects. Figure 16 shows the model structure of the method.

It is also possible to generate only the defective regions. Yan et al. [67] did so on the basis of the StarGAN v2 [75] model, incorporating a U-Net network to keep the background morphology unchanged when generating the defective image, together with a cyclic consistency loss and a target mask loss to constrain the generation of defective samples.

Table 5 summarises the methods that do not require paired data.

5. Benchmark Experiments and Analysis

Traditional methods are usually based on modelling or require expert prior knowledge, making it difficult to apply them directly to public datasets. In addition, most of the deep learning methods investigated in this paper neither release their code nor describe their implementation in detail. To provide reference examples of defect generation methods, this paper therefore conducts experiments with three GAN-based methods and a Stable Diffusion (SD) pre-trained model, which is based on the latent diffusion model (LDM): a method whose inputs are latent vectors (StyleGAN), a method whose inputs are paired data (Pix2pix), a method whose inputs are unpaired data (CycleGAN), and an SD model fine-tuned with LoRA. The dataset for these experiments should contain normal and abnormal samples as well as the labelled images corresponding to the abnormal samples. Considering the size and authenticity of the dataset, this paper adopts the Magnetic-tile-defect-datasets (MT Defect Dataset).

Diffusion models are a recent research hotspot in image generation and have raised the bar in the field, especially with models such as Imagen [94] and the Latent Diffusion Model (LDM) [95]. Models of this kind pair the text comprehension of a powerful pre-trained text encoder, such as contrastive language-image pre-training (CLIP) [96], which is trained on text-image pairs, with the high-fidelity image generation of diffusion models to map text to images. We therefore believe that the great potential of diffusion models in image generation can be applied to industrial surface defect images, producing high-quality defect images while controlling their features. For this reason, the experiments in this paper include two diffusion models for generating defect images.

The Stable Diffusion (SD) model introduces a latent space into the generation process: images are transformed into latent vectors, which reduces computational complexity and increases efficiency. In this way, it significantly reduces the time and economic cost of training while maintaining generation quality. Although the Stable Diffusion model has a strong image generation capability, its training data consist mainly of natural scenes, so it cannot be applied directly to industrial surface defect image generation, and the resource cost of re-training is usually prohibitive. Parameter-efficient fine-tuning (PEFT) addresses this problem: it adapts the model to different downstream tasks without fine-tuning all parameters of the pre-trained model. Existing PEFT methods fall into three main categories: adapter tuning [97,98], prefix tuning [99], and LoRA (low-rank adaptation) [100]. Adapter tuning introduces additional computation by adding adapter layers to the original model, which leads to inference latency. Prefix tuning is difficult to optimise, and its performance varies non-monotonically with the number of trainable parameters. LoRA freezes the original model parameters and represents their updates by a low-rank decomposition, which greatly reduces memory and storage consumption during fine-tuning without introducing additional inference latency.

To impose controllable conditional constraints on the diffusion model, ControlNet [101] enhances SD by adding conditional inputs such as scribbles, edge maps, segmentation maps, and pose keypoints to the text-to-image generation process. This brings the generated image closer to the input image, a considerable improvement over traditional image-to-image generation methods. In the industrial surface defect generation task, the mask label can be used as a control condition for image sampling to guide the generation of the diffusion model: by controlling the region and morphology of the mask, a diverse generated dataset with masks is obtained without manual labelling.
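The low-rank update used by LoRA can be illustrated with a minimal NumPy sketch. It is not the implementation used in the experiments: the layer sizes, the rank `r`, the scaling factor `alpha`, and the helper names `lora_forward` and `merge_lora` are all assumptions chosen for illustration. The frozen weight `W` is left untouched, and only the two small matrices `A` and `B` would be trained.

```python
import numpy as np

def lora_forward(x, W, A, B, alpha):
    """Frozen linear layer plus a low-rank update: y = x W^T + (alpha / r) * x A^T B^T."""
    r = A.shape[0]
    return x @ W.T + (alpha / r) * (x @ A.T) @ B.T

def merge_lora(W, A, B, alpha):
    """Fold the low-rank update into W, so inference needs no extra layer."""
    return W + (alpha / A.shape[0]) * (B @ A)

rng = np.random.default_rng(0)
d_out, d_in, r = 64, 128, 4                   # illustrative sizes (assumed)
W = rng.normal(size=(d_out, d_in))            # frozen pre-trained weight
A = rng.normal(scale=0.01, size=(r, d_in))    # trainable, small random init
B = np.zeros((d_out, r))                      # trainable, zero init: update starts at 0

x = rng.normal(size=(2, d_in))
y = lora_forward(x, W, A, B, alpha=8.0)

full_params = W.size             # 8192 values if W were fine-tuned directly
lora_params = A.size + B.size    # 768 values trained with rank 4
```

Because the update can be merged into `W` after training, the adapted layer has the same shape and cost as the original one, which is why no additional inference latency is introduced.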

Images of the five defect types were generated by the five methods on the dataset after it had been doubled by geometric transformations, and the results are shown in Figure 18.

In Figure 18, the defect images generated by SD + LoRA have the best background texture and defect details and the highest fidelity to the real images. After ControlNet is added, mask-label image pairs impose conditional constraints on the sampling and diffusion process, so the generative model can control the region and shape of the generated defect, yielding surface defect images with pixel-level labels. The defect images generated by Pix2pix also have good background texture and defect details, but for defect types with few samples the results are only average: the generated images are not diverse enough, and the defect morphology and location are relatively uniform. CycleGAN is weaker than Pix2pix in background texture, and StyleGAN has the worst background texture, which differs noticeably from the background of the real images.

Common evaluation metrics are calculated separately for the defect images generated by each method, as shown in Figure 19.

As Figure 19 shows, the diffusion-based SD + LoRA method performs best overall and outperforms the GAN networks on the vast majority of evaluation metrics. This is most obvious for the Fray defects, which have the smallest number of samples, and is mainly attributed to the strong generalisation ability of the large pre-trained model: only a small number of samples are needed to fine-tune it and achieve good generation quality. On the image feature similarity metrics (FID, LPIPS, KID, and IS), the SD + LoRA and SD + LoRA + ControlNet methods score essentially the same, which indicates that the images they generate lie in essentially the same sample space. On PSNR, SSIM, and Sharpness Difference, which measure structural and pixel-level differences between images, SD + LoRA + ControlNet is significantly better than SD + LoRA. This is mainly because these metrics compare individual generated images, and ControlNet controls the region and shape of the generated defect, whereas SD + LoRA generates them randomly.

Among the GAN networks, Pix2pix, which is trained on paired data, performs best overall. With paired training data, the model obtains information such as the texture, features, and location of the defects, and so generates higher quality defect images. Between CycleGAN and StyleGAN, CycleGAN scores better on the feature similarity metrics when the defects are relatively small or have a relatively regular shape, such as bubbles and grey. This is because, when the training data are unpaired, the label images are still synthesised artificially and differ somewhat from the actual defect shapes. StyleGAN, on the other hand, learns the original data distribution directly, and therefore generates large or irregular defects that are closer to the defects in the original images than those generated by CycleGAN.

For the distribution-related 1-NN and MMD metrics, the defect samples generated by SD + LoRA remain within the original sample space without overfitting, and the 1-NN classification accuracy stays around 40–50%. GAN training, by contrast, tends to overfit: the distribution of the generated samples becomes closer to that of the original data, so the 1-NN classification accuracy departs from 50% and the MMD value is also smaller.
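The two distribution-related metrics discussed above can be sketched as follows. This is a minimal NumPy illustration, not the evaluation code behind Figure 19: it assumes that each image has already been reduced to a feature vector, uses a Gaussian kernel with an arbitrary bandwidth `sigma` for MMD, and the function names are hypothetical. In the leave-one-out 1-NN test, an accuracy near 50% suggests that real and generated samples are hard to tell apart.

```python
import numpy as np

def pairwise_sq_dists(X, Y):
    """Squared Euclidean distances between the rows of X and the rows of Y."""
    d = (X ** 2).sum(1)[:, None] + (Y ** 2).sum(1)[None, :] - 2.0 * X @ Y.T
    return np.maximum(d, 0.0)   # clip tiny negatives caused by rounding

def one_nn_accuracy(real, fake):
    """Leave-one-out 1-NN accuracy on pooled real (label 1) and generated (label 0) features."""
    Z = np.vstack([real, fake])
    labels = np.concatenate([np.ones(len(real)), np.zeros(len(fake))])
    D = pairwise_sq_dists(Z, Z)
    np.fill_diagonal(D, np.inf)            # a sample may not be its own neighbour
    nearest = D.argmin(axis=1)
    return float((labels[nearest] == labels).mean())

def mmd2_rbf(real, fake, sigma=1.0):
    """Biased estimate of the squared MMD with a Gaussian (RBF) kernel."""
    def k(X, Y):
        return np.exp(-pairwise_sq_dists(X, Y) / (2.0 * sigma ** 2))
    return float(k(real, real).mean() + k(fake, fake).mean() - 2.0 * k(real, fake).mean())

# Toy features (assumed): one generated set matches the real distribution, one is shifted.
rng = np.random.default_rng(0)
real_feats = rng.normal(size=(200, 8))
good_feats = rng.normal(size=(200, 8))
bad_feats = real_feats + 10.0

acc_good = one_nn_accuracy(real_feats, good_feats)   # close to 0.5
acc_bad = one_nn_accuracy(real_feats, bad_feats)     # close to 1.0
```

A smaller MMD indicates that the generated features lie closer to the real ones, so the two metrics are best read together, as in the analysis above.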

To assess these methods more intuitively, we also carried out an actual classification task and used classification accuracy to judge the quality of the generated defect images. The normal images and the five types of defect images in the MT Defect Dataset were divided into training, validation, and test sets in a 6:2:2 ratio for a six-class classification task. The same number of generated images of the five defect types from each method was then added to the training set, and a ResNet101 model was trained for 100 epochs. The model with the highest validation accuracy was selected and evaluated on the test set; the results are shown in Table 7.
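The data handling in this protocol can be sketched in plain Python. The sketch makes several assumptions that are not stated in the text: the split is random with a fixed seed, images are represented by identifiers, and the helper names are hypothetical. Training the ResNet101 classifier itself is omitted.

```python
import random

def split_622(items, seed=0):
    """Shuffle and split items into training, validation, and test sets (6:2:2)."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 6 // 10
    n_val = len(items) * 2 // 10
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test

def augment_training_set(train, generated):
    """Add generated images to the training set only; validation and test stay real."""
    return list(train) + list(generated)

def select_best_epoch(val_accuracy_per_epoch):
    """Return (epoch index, accuracy) of the checkpoint with the highest validation accuracy."""
    best = max(range(len(val_accuracy_per_epoch)), key=lambda i: val_accuracy_per_epoch[i])
    return best, val_accuracy_per_epoch[best]

# Illustrative usage with placeholder identifiers (assumed names).
real_ids = [f"real_{i}" for i in range(100)]
generated_ids = [f"gen_{i}" for i in range(30)]
train_ids, val_ids, test_ids = split_622(real_ids)
train_aug = augment_training_set(train_ids, generated_ids)
```

Keeping the generated images out of the validation and test sets means that the reported accuracy is measured on real data only, so any gain reflects the usefulness of the generated samples for training.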

Classification accuracy improved over the original data after augmentation with each of the five methods. SD + LoRA + ControlNet achieved the highest classification accuracy, and Pix2pix was ahead of other GAN networks such as StyleGAN. StyleGAN had better accuracy than CycleGAN on the validation set but worse accuracy on the test set, and the classification results are generally consistent with the metric analysis. This suggests that the quality of the generated defect images, including detail, background texture, and sample distribution, influences accuracy in subsequent classification tasks. Generating high-quality defect image samples can improve performance in subsequent classification and detection tasks, and the improvement is more obvious when the sample size is small.

6. Discussion

6.1. Problems with GAN in Generating Defects

Although GANs have been widely used in image generation, both GANs themselves and their application to generating industrial surface defects have some problems:

The GAN may enter an unstable state in which the generator outputs only one type of image and the discriminator cannot distinguish real from fake. In this case, the GAN produces duplicate or near-identical images that lack diversity and do not cover the true data distribution.

GANs require a large amount of data and computational resources to train, and the training process may oscillate or fail to converge. Reaching convergence often requires repeated adjustment of the model hyperparameters, resulting in long training times.

The objective function of a GAN is a min-max problem that must balance the adversarial relationship between the generator and the discriminator, and there is no clear evaluation index for measuring generation quality, which makes GANs hard to train. GAN training also tends to require large data samples, whereas industrial surface defects tend to follow a long-tailed distribution: normal samples and certain defects are plentiful, while some defect categories have extremely few samples, in extreme cases only a handful. This makes GAN training even more difficult.

In industrial environments, high-resolution images are often required because they contain more information and detail, but GANs may lose important features or produce artefacts and noise during generation [31].
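For reference, the min-max objective mentioned in the list above is commonly written in the following standard form, where the generator G maps noise z to an image and the discriminator D outputs the probability that its input is real. The notation is the usual one from the GAN literature and is not taken from this paper:

$$
\min_{G}\max_{D} V(D, G) = \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_{z}(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$

The value V measures how well D separates real from generated samples, not how good the generated images are, which is one way to see why it does not serve as an evaluation index for generation quality.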

6.2. Advantages and Shortcomings of Diffusion Model in Generating Defects

The diffusion model is a probabilistic deep generative model that converts a data distribution into a simple prior distribution by gradually adding noise to the data, and then recovers the data distribution by sampling from the prior and applying a learned reverse denoising process. Diffusion models have demonstrated superior performance in image generation tasks, outperforming methods such as generative adversarial networks (GANs) and variational autoencoders (VAEs) [12]. Diffusion models also outperform GANs on evaluation metrics for image synthesis [102].
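The forward noising process described above can be sketched with NumPy. The sketch assumes a DDPM-style formulation with a linear noise schedule; the schedule values, the number of steps `T`, and the function names are illustrative assumptions, and the learned denoising network is not included.

```python
import numpy as np

def linear_beta_schedule(T, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances (a commonly used linear schedule, assumed here)."""
    return np.linspace(beta_start, beta_end, T)

def q_sample(x0, t, alpha_bar, rng):
    """Draw x_t ~ N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I) in one step."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps

def predict_x0(x_t, t, eps, alpha_bar):
    """Invert q_sample given the noise; a trained network would estimate eps instead."""
    return (x_t - np.sqrt(1.0 - alpha_bar[t]) * eps) / np.sqrt(alpha_bar[t])

T = 1000
betas = linear_beta_schedule(T)
alpha_bar = np.cumprod(1.0 - betas)             # fraction of signal kept after t steps

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(3, 32, 32))   # stand-in for a normalised image
x_T, eps_T = q_sample(x0, T - 1, alpha_bar, rng)  # almost pure Gaussian noise
```

At the last step almost none of the original signal remains, so `x_T` is close to a sample from the simple Gaussian prior. Generation runs the process in reverse, with a network predicting the noise at each step.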

The Stable Diffusion model is pre-trained on 512 × 512 images from the LAION-5B dataset, which is advantageous for generating high-resolution images. This is because the latent diffusion model maps the image to a latent space using VQ-VAE2 and uses sampling methods such as DDIM [103] to speed up sampling and reduce the number of sampling steps, thereby reducing computational cost without sacrificing image quality. The quality of the images generated in the experiments shows that the diffusion model can be applied to industrial surface defect image generation, where it offers better stability and interpretability than the traditional GAN.
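A short calculation illustrates why working in latent space reduces cost. The spatial downsampling factor of 8 and the 4 latent channels are assumptions taken from the commonly used Stable Diffusion v1 configuration and are not stated in this paper.

```python
def num_values(height, width, channels):
    """Number of scalar values in an image or latent tensor."""
    return height * width * channels

# Pixel-space input: a 512 x 512 RGB image.
pixel_values = num_values(512, 512, 3)

# Latent-space input, assuming downsampling factor 8 and 4 latent channels.
factor, latent_channels = 8, 4
latent_values = num_values(512 // factor, 512 // factor, latent_channels)

reduction = pixel_values / latent_values   # 48.0
```

Under these assumptions, every denoising step operates on 48 times fewer values than it would in pixel space.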

However, for high-resolution image generation tasks, time and economic cost are the main factors limiting the application of the diffusion model. At present, the diffusion model still generates images more slowly than the GAN. In terms of model structure, sampling from a GAN requires only one feedforward pass of a neural network, whereas the diffusion model requires T feedforward steps. In the experiments, the average time to generate a 256 × 256 image was 0.02925 s, 0.07725 s, and 0.04969 s for Pix2pix, CycleGAN, and StyleGAN, respectively, while Stable Diffusion + LoRA took 2.28 s per image and Stable Diffusion + LoRA + ControlNet took 3.67 s. Moreover, the StyleGAN-T model proposed by Sauer et al. [104] is about 30 times faster than the Stable Diffusion model at inference.
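The measured times can be converted into throughput and relative slowdown with a few lines of Python. The timings are the ones reported above; the dictionary layout and the function name are illustrative.

```python
# Average seconds per 256 x 256 image, as reported in the experiments.
seconds_per_image = {
    "Pix2pix": 0.02925,
    "CycleGAN": 0.07725,
    "StyleGAN": 0.04969,
    "SD + LoRA": 2.28,
    "SD + LoRA + ControlNet": 3.67,
}

def slowdown(slow_method, fast_method, times=seconds_per_image):
    """How many times longer slow_method takes per image than fast_method."""
    return times[slow_method] / times[fast_method]

images_per_second = {name: 1.0 / t for name, t in seconds_per_image.items()}

for gan in ("Pix2pix", "CycleGAN", "StyleGAN"):
    ratio = slowdown("SD + LoRA", gan)
    print(f"SD + LoRA is {ratio:.1f}x slower than {gan}")
```

On these figures, SD + LoRA is roughly 30 to 80 times slower per image than the three GANs, and adding ControlNet increases the gap further.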

6.3. Shortcomings of Existing Methods for Imaging Defects on Industrial Surfaces

Despite many advances, current methods for generating defect images of industrial surfaces still have some shortcomings:

Existing datasets of industrial surface defects are relatively small, and there is no common, large, standard industrial defect dataset to serve as a benchmark for defect generation models. In addition, all existing deep learning methods require real defect images for training, but in practice certain types of defects are very rare, with only a single sample or even none, and existing methods pay little attention to this.

Most current defect image generation methods are tailored to the characteristics of a particular type of defect image; when the defect type changes, a new network or structure has to be designed. More general methods therefore need to be studied.

Commonly used evaluation metrics generally measure the gap in one particular aspect to judge the quality of the generated image, but this is not fully applicable to defect images. Defect image generation differs from general image generation tasks, with problems such as complex defect morphology, a wide range of defect sizes, and interference from background information, so a metric that can evaluate the quality of generated defect images more objectively and comprehensively is still lacking.

6.4. Future Research Directions

Based on the current research progress in defect image generation, the following future research directions can be anticipated.

Traditional methods have the advantages of speed and interpretability over deep learning methods, so it is also worth investigating how to use traditional methods to generate images of multiple defect types that resemble real defects.

Diffusion models have better stability and interpretability than generative adversarial networks (GANs), which are usually based on two neural networks competing with each other, one generating samples and the other discriminating between real and generated samples. The GAN approach typically requires a large amount of training data and computational resources, and suffers from training instability and mode collapse. The diffusion model, on the other hand, is based on traditional mathematical models. It does not require a large amount of training data and can learn and predict from a small amount of data. In addition, the diffusion model can control its sensitivity and robustness by adjusting the model parameters to adapt to different data distributions and noise conditions.

In addition, in practice the number of images of a certain defect may be in the single digits or even just one, so how to generate defect images from one or a few images needs to be studied. There is related work on generation from a single defect image [55], and future work can combine it with structures and methods from few-shot or zero-shot learning to perform defect image generation.