1. Introduction

Binocular or stereo vision, used in diverse areas such as autonomous driving [1,2], robot navigation [3,4], 3D reconstruction [5,6,7], and industrial inspection [8,9], employs two identical cameras to capture two images of the same target from different vantage points. The disparity between these images enables 3D reconstruction and measurement, the accuracy of which depends heavily on the precision of the camera calibration and stereo matching methods; these processes are therefore key research subjects in stereo vision. During camera calibration, it is crucial to estimate both the intrinsic parameters, such as the focal length and optical center, and the extrinsic parameters, such as the camera’s position and orientation in space. These parameters directly influence the calculation of 3D spatial information [10]. Camera calibration therefore significantly impacts the accuracy of reconstruction and measurement and plays a crucial role in binocular vision.

Camera calibration techniques mainly fall into two categories: self-calibration and photogrammetric calibration [11]. Self-calibration methods do not need a calibration object, but they rely on sequences of images of an uncalibrated scene, often entail complex computations [12,13,14], and tend to have low accuracy [5,15]. Conversely, photogrammetric methods, including popular methods such as Zhang’s [16], Tsai’s [17], Bouguet’s [18], and Heikkila and Silven’s [19], utilize geometric information from a calibration object, such as a checkered board with known geometry, to determine the camera parameters. These methods minimize the reprojection error through feature point detection and optimization, providing more accurate information about camera characteristics.

Zhang’s camera calibration method [16] has gained significant attention due to its simplicity, robustness, and high accuracy. One of its key advantages is that it requires only a simple calibration object, such as a checkered board, which is easy to fabricate and measure. This contrasts with other photogrammetric methods that may require specialized calibration objects or more complicated patterns. The method is based on the planar homography between the calibration board and its image, from which the intrinsic (i.e., focal length, lens distortion coefficients, and position of the image center) and extrinsic (i.e., camera position and orientation) parameters of a camera are determined. The method requires at least three pairs of images of a checkered board with known dimensions, taken from different positions and orientations within the camera’s field of view (FOV). A corner detection algorithm is then employed to detect the corners in each image, i.e., the intersections between the black and white squares, and the image coordinates of the detected corners are matched with their known 3D coordinates on the checkered board. Subsequently, nonlinear least-squares minimization with the Levenberg–Marquardt algorithm is used to determine the intrinsic and extrinsic parameters that yield the minimum geometric error between the projected 3D calibration points and their corresponding 2D image points. Lens distortion coefficients, such as radial and tangential distortion, are also estimated and corrected.

Owing to the simplicity, flexibility, and robustness of Zhang’s method, many researchers have developed new camera calibration algorithms that incorporate it to improve calibration accuracy. Rathnayaka et al., 2017, proposed two calibration methods that use a specially designed, colored checkered board pattern and mathematical pose estimation approaches to calibrate a stereo camera setup with heterogeneous lenses, i.e., a wide-angle fish-eye lens and a narrow-angle lens [11]. One method calculates left and right transformation matrices for stereo calibration, while the other utilizes a planar homography relationship and Zhang’s calibration method to identify common object points in stereo images. Evaluations based on the reprojection error and image rectification results showed that both methods successfully overcome the challenges posed by heterogeneous lenses, with the planar homography approach yielding slightly more accurate results. Wang et al., 2018, proposed a non-iterative self-calibration algorithm to compute the extrinsic parameters of a rotating (yaw and pitch only), non-zooming camera [13]. The algorithm uses a single feature point to improve calibration speed, and Zhang’s method is employed beforehand to calculate the intrinsic parameters of the camera. Although their results are slightly less accurate than those obtained with Zhang’s method alone, their work demonstrates its potential for real-time applications where extremely high calibration accuracy is not required. Hu et al., 2020, introduced a calibration approach for binocular cameras that begins with Zhang’s technique to obtain an initial guess of the rotation and translation matrices [5]. This guess is refined using singular value decomposition and solved via the Frobenius norm, and is further refined through maximum likelihood estimation, yielding a new calculation of the relative position matrix of the binocular cameras. The Levenberg–Marquardt algorithm is employed for additional refinement. Their results show significant improvement over the traditional Bouguet’s and Hartley’s algorithms. Liu et al., 2022, proposed a multi-camera stereo calibration method focusing on the high-precision extraction of circular calibration pattern features [20]. The Franklin matrix was used for sub-pixel edge detection and the image moment method was used to obtain the characteristic circle centers, while the calibration itself was realized using Zhang’s method. Their work shows that calibration can be improved by focusing on the high-precision extraction of calibration features.

Calibration targets also play a significant role in camera calibration. Although a checkered board calibration target has advantages such as simplicity, stability, and generally higher accuracy than self-calibration, its corner feature extraction and matching are susceptible to illumination and noise. Circular patterns have been proposed to address this problem [21]. Recently, Wei et al., 2023, presented a camera calibration method using a circular calibration target [22]. Their method operates in two stages. The first stage extracts the centers of the ellipses and formulates the projection equation of the real concentric circle center by leveraging the cross-ratio invariance of collinear points. The second stage solves a system of linear equations to find the other center projection coordinates, taking advantage of the fact that the infinite lines passing through the centers are parallel. Unlike other circular calibration algorithms that directly fit ellipses to estimate the centers of circular feature points, their method not only corrects the errors that arise from using the ellipse’s center but also significantly improves the accuracy and efficiency of calibration. The method reduces the average reprojection error to 0.08 pixels and achieves a real-world measurement accuracy of 0.03 mm, making it valuable for high-precision 3D measurement tasks. Also in 2023, Zhang et al. proposed a flexible calibration method for large-range binocular vision systems in complex construction environments [23]. The method consists of two stages. In stage 1, the lenses are focused closer to the cameras, and non-overlapping fields of view (FOVs) are calibrated using virtual projection point pairs through Zhang’s method and nonlinear optimization; fourteen sets of clear checkered board images are acquired for this purpose. In stage 2, the lenses are focused on the measurement position, and three sets of blurred three-phase shifted circular grating (PCG) images are taken. The final extrinsic parameters of the binocular camera are computed using state transformation matrices. The method demonstrated robustness under varying target angles and calibration distances, with a mean reprojection error of only 0.0675 pixels and a relative measurement error of less than 1.75% in their experiments.

While Zhang’s method ensures accurate calibration outcomes when viewing a planar target from various angles, the selection of these orientations relies largely on empirical knowledge, which can lead to variations in the calibration results [24]. Furthermore, the calibration object’s position often has to be adjusted manually, a process that introduces uncertainties due to instability and shaking. This results in inconsistent positioning of the calibration plate across images captured by different cameras, introducing inherent errors that directly affect calibration accuracy [15]. Moreover, the calibration procedure requires a significant amount of time and effort [13].

The present study addresses several key issues related to camera calibration in the process of capturing checkered board images. In particular, it aims to optimize this process by identifying the optimal distance and angle for calibration, thereby enhancing the calibration results. Unlike existing studies, this study emphasizes a comprehensive understanding of the factors that affect the calibration process, specifically the roles of the various displacement and orientation factors and how they might be manipulated independently for better calibration efficiency. This leads to four principal research questions, which revolve around understanding the impacts of distance factors (D, H, V) and orientation factors (R, P, Y) on the reprojection error, determining the effects of combined distance and orientation factors on the reprojection error, and exploring the feasibility and efficiency of conducting the calibration process separately for different factor groups. To address these, the research objectives include identifying the most influential distance and orientation factors, investigating the combinations of factors that minimize the reprojection error, and evaluating the efficiency of separately conducting the calibration process for different factor groups. The overarching goal is to develop more efficient and effective calibration procedures for various applications.

2. Methodology

2.1. Experiment Setup

Three different cameras are used in this study. The details of the cameras are presented in

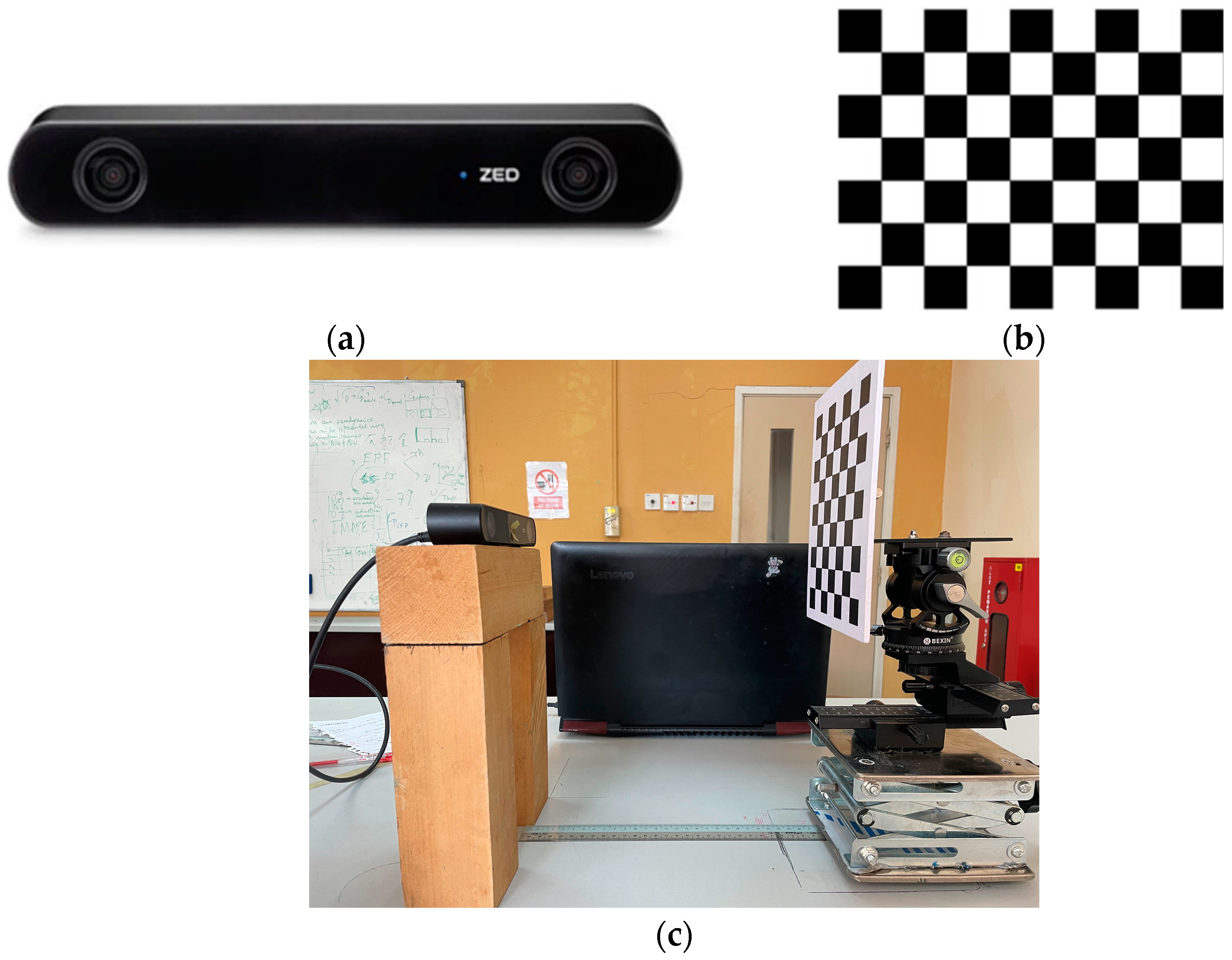

Table 1. However, only the calibration process of the ZED2i binocular camera [

25] is presented in this study.

For camera calibration, a checkered board consisting of 2 cm alternating black and white squares arranged in a grid of nine columns and seven rows is employed, as shown in Figure 1b. The checkered board is attached to the camera calibration rig, as shown in Figure 1c. The Stereo Camera Calibration Toolbox for MATLAB [18] is used for calibration, where the inner corner points are detected using the Harris corner algorithm [26]. The checkered board size is fed to the algorithm, and the number of detected inner corner points is 48 (8 × 6). The high contrast between the black and white squares aids in accurately detecting and localizing the inner corners, which are crucial for camera calibration algorithms.
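Because a 9 × 7 grid of squares has 8 × 6 inner corners, the known world coordinates that the detected corners are matched against can be generated directly from the board geometry. The sketch below does this under assumed conventions (origin at the first inner corner, board on the Z = 0 plane, corners listed row by row); the function and constant names are hypothetical and do not reproduce the MATLAB toolbox’s internal ordering.

```python
import numpy as np

# Board geometry stated in the text: 9 columns x 7 rows of 2 cm (20 mm) squares.
SQUARES_X, SQUARES_Y = 9, 7
SQUARE_MM = 20.0


def board_object_points(squares_x=SQUARES_X, squares_y=SQUARES_Y, square=SQUARE_MM):
    """Return an (N, 3) array of inner-corner world coordinates on the Z = 0 plane.

    Assumed (hypothetical) convention: origin at the first inner corner,
    X along the columns, Y along the rows, corners listed row by row.
    """
    nx, ny = squares_x - 1, squares_y - 1          # inner corners: 8 x 6
    xs, ys = np.meshgrid(np.arange(nx), np.arange(ny))
    pts = np.zeros((nx * ny, 3))                   # Z column stays 0 (planar board)
    pts[:, 0] = xs.ravel() * square
    pts[:, 1] = ys.ravel() * square
    return pts


obj = board_object_points()
print(obj.shape)  # (48, 3)
```

Keeping the board on Z = 0 is what allows the simplification from the full camera model to a homography in Zhang’s method.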

Since binocular camera calibration necessitates capturing images from various directions and angles, a camera calibration rig was designed to securely hold the checkered board, ensuring precise distances and orientations for calibration. The components of the calibration rig and its final assembly are depicted in Figure 2. The rig comprises four main components: an LD2R dual panoramic gimbal for yaw (Y) and pitch (P) adjustments; a two-way macro frame for left–right (H) and depth, i.e., front–back (D), displacements; a lift table for vertical (V) movements; and an RSP60R axis rotation stage for rotation (R). These adjustments ensure that the checkered board is distributed across different quadrants of the image and captured from various positions and angles.

2.2. Zhang’s Camera Calibration

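Zhang’s calibration method [16] was used in this study to perform the calibration task due to its simplicity and robustness. Mathematically, let $M = [X, Y, Z]^T$ represent the coordinates of each corner point on the checkered board in the world coordinate system; its homogeneous coordinate is $\tilde{M} = [X, Y, Z, 1]^T$. In the pixel coordinate system, this point is denoted by $m = [u, v]^T$, and its homogeneous coordinate is $\tilde{m} = [u, v, 1]^T$. The relationship between the world coordinate and the image coordinate is described by the camera model, as follows:

$$s\tilde{m} = A\,[R \;\; t]\,\tilde{M} \qquad (1)$$

where $s$ is the proportionality coefficient, and $[R \;\; t]$ and $A$ are the extrinsic and intrinsic parameter matrices of the camera, respectively. By assuming that $Z = 0$ for the planar board, the equation can be further simplified:

$$s\tilde{m} = A\,[r_1 \;\; r_2 \;\; t]\,[X, Y, 1]^T \qquad (2)$$

where $r_1$ and $r_2$ are the first two columns of $R$. The mapping in Equation (2) is described by a $3 \times 3$ homography matrix $H$, defined as follows:

$$H = [h_1 \;\; h_2 \;\; h_3] = \lambda A\,[r_1 \;\; r_2 \;\; t] \qquad (3)$$

where $\lambda$ is a proportional factor, because $H$ can only be determined up to scale. The homography describes the projective transformation between the planar checkered board and its image. Before calculating the homography matrix, the world coordinates of the checkered board must be configured, including the board’s overall size, the size of each square, and the number of detected corner points.

The fundamental constraints on the camera’s intrinsic parameters are as follows:

$$h_1^T A^{-T} A^{-1} h_2 = 0, \qquad h_1^T A^{-T} A^{-1} h_1 = h_2^T A^{-T} A^{-1} h_2 \qquad (4)$$

These constraints follow from the properties of the rotation matrix $R$: its columns $r_1 = \lambda^{-1} A^{-1} h_1$ and $r_2 = \lambda^{-1} A^{-1} h_2$ are orthogonal unit vectors. The camera intrinsic parameters can be determined by solving for the symmetric matrix $B = A^{-T} A^{-1}$ using Equation (4). Since $B$ has six distinct entries and is defined only up to scale, while each board image contributes two constraints, at least three images are generally required.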
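To show how the two constraints in Equation (4) pin down the intrinsics, the sketch below implements the closed-form linear step from Zhang’s paper [16]: each homography contributes two rows to a homogeneous system in $b = [B_{11}, B_{12}, B_{22}, B_{13}, B_{23}, B_{33}]^T$ with $B = A^{-T}A^{-1}$, and $A$ (focal terms $\alpha, \beta$, skew $\gamma$, principal point $(u_0, v_0)$) is then read back from $B$. This is an illustrative sketch only: it is not the MATLAB toolbox [18] used in the study, it ignores lens distortion and the Levenberg–Marquardt refinement, and the `scale` pre-conditioning, the `rotation` helper, and the synthetic camera values are assumptions introduced here.

```python
import numpy as np


def _v(H, i, j):
    """Zhang's vector v_ij such that h_i^T B h_j = v_ij^T b (0-based columns)."""
    hi, hj = H[:, i], H[:, j]
    return np.array([
        hi[0] * hj[0],
        hi[0] * hj[1] + hi[1] * hj[0],
        hi[1] * hj[1],
        hi[2] * hj[0] + hi[0] * hj[2],
        hi[2] * hj[1] + hi[1] * hj[2],
        hi[2] * hj[2],
    ])


def intrinsics_from_homographies(Hs, scale=1.0):
    """Closed-form estimate of A from >= 3 board homographies (no distortion)."""
    T = np.diag([1.0 / scale, 1.0 / scale, 1.0])  # rescale pixels for conditioning
    rows = []
    for H in Hs:
        Hn = T @ np.asarray(H, dtype=float)        # equals (T A)[r1 r2 t] up to scale
        Hn = Hn / np.linalg.norm(Hn[:, :2])        # only h1, h2 enter the constraints
        rows.append(_v(Hn, 0, 1))                  # h1^T B h2 = 0
        rows.append(_v(Hn, 0, 0) - _v(Hn, 1, 1))   # h1^T B h1 = h2^T B h2
    # b is the right singular vector of the smallest singular value.
    _, _, Vt = np.linalg.svd(np.array(rows))
    B11, B12, B22, B13, B23, B33 = Vt[-1]
    v0 = (B12 * B13 - B11 * B23) / (B11 * B22 - B12 ** 2)
    lam = B33 - (B13 ** 2 + v0 * (B12 * B13 - B11 * B23)) / B11
    alpha = np.sqrt(lam / B11)
    beta = np.sqrt(lam * B11 / (B11 * B22 - B12 ** 2))
    gamma = -B12 * alpha ** 2 * beta / lam
    u0 = gamma * v0 / beta - B13 * alpha ** 2 / lam
    A_n = np.array([[alpha, gamma, u0], [0.0, beta, v0], [0.0, 0.0, 1.0]])
    return np.linalg.inv(T) @ A_n                  # undo the pixel rescaling


def rotation(rx, ry, rz):
    """Rotation matrix Rz @ Ry @ Rx from angles in radians (hypothetical helper)."""
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    Rx = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    Ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    Rz = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    return Rz @ Ry @ Rx


# Synthetic check with a hypothetical camera: H = A [r1 r2 t] for four board poses.
A_true = np.array([[800.0, 0.0, 320.0], [0.0, 780.0, 240.0], [0.0, 0.0, 1.0]])
t = np.array([-50.0, -40.0, 500.0])
poses = [(0.3, 0.1, 0.0), (-0.2, 0.35, 0.1), (0.15, -0.3, -0.2), (0.4, -0.1, 0.3)]
Hs = [A_true @ np.column_stack([rotation(*p)[:, 0], rotation(*p)[:, 1], t]) for p in poses]
A_est = intrinsics_from_homographies(Hs, scale=1000.0)
```

With noise-free homographies the estimate matches `A_true` to numerical precision; with real corner detections this linear estimate would only serve as the starting point for nonlinear refinement.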

The reprojection error (RE) is the distance between a reprojected point, obtained by projecting a 3D calibration point onto the image plane with the estimated camera parameters, and the actual (detected) position of that point in the image. This metric is used to assess the accuracy of the camera calibration results in this study. As shown in Figure 3, after the camera is calibrated, each corner point on the board is reprojected onto the image plane using the estimated intrinsic and extrinsic parameters, resulting in a new point with pixel coordinates $(\hat{u}, \hat{v})$. The calibration RE is calculated as the Euclidean distance between the reprojected pixel coordinates $(\hat{u}, \hat{v})$ and the actual calibrated pixel coordinates $(u, v)$, as given by Equation (5):

$$\mathrm{RE} = \sqrt{(u - \hat{u})^2 + (v - \hat{v})^2} \qquad (5)$$
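The reprojection error of Equation (5) can be computed by projecting each board point with the pinhole model $s\tilde{m} = A[R\;\;t]\tilde{M}$ and measuring its pixel distance to the detected corner. The sketch below is a minimal illustration under stated assumptions: lens distortion is ignored, the function names are hypothetical, and averaging the per-point errors into a single value is an assumed aggregation that the text does not spell out.

```python
import numpy as np


def project(A, R, t, M):
    """Pinhole projection s*m~ = A [R t] M~ of world points M (N, 3); no distortion."""
    Xc = np.asarray(M, dtype=float) @ R.T + t   # world -> camera coordinates
    uvw = Xc @ A.T                               # camera -> homogeneous pixels
    return uvw[:, :2] / uvw[:, 2:3]              # divide by the scale factor s


def reprojection_errors(observed, reprojected):
    """Per-point Euclidean distance of Equation (5), in pixels."""
    diff = np.asarray(observed, dtype=float) - np.asarray(reprojected, dtype=float)
    return np.linalg.norm(diff, axis=1)


def mean_reprojection_error(observed, reprojected):
    """Average over all corners (assumed aggregation, not stated in the text)."""
    return float(np.mean(reprojection_errors(observed, reprojected)))


# Hypothetical usage: two detected corners versus their reprojections.
print(mean_reprojection_error([[0.0, 0.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]]))  # 2.5
```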

2.3. Design of Experiment (DoE)

A $2^k$ full-factorial design of experiment (DoE) with replication [27] is employed to analyze the influence of each factor and their interactions on the reprojection error in camera calibration. A $2^k$ full-factorial DoE studies the effect of $k$ independent factors, each at two levels (i.e., the low and high bounds), on a response variable. Because all possible combinations of the factor levels are investigated, it provides a comprehensive view of the entire experimental space.

Figure 4 depicts the $2^3$ factor design space, where each corner point of the cube represents one combination of the low and high levels of the three factors. With replication, i.e., by repeating the full set of factorial runs more than once, the errors or uncertainties introduced by other variables can be estimated, and the significance and interactions of the independent factors can be revealed.

The factors involved in this study can be divided into two categories: orientation factors (R, P, and Y) and displacement factors (D, V, H). Given that Zhang’s method requires a minimum of three sets of images for calibration, specific variations must be incorporated into each experimental set. Consequently, the calibration experiment must be designed to exclude some factors to accommodate these variations. In this study, the experiments are configured as follows: Method A employs displacement factors for calibration while introducing variations in orientation. Method B utilizes orientation factors for calibration and introduces variations in displacement. Method C incorporates the two most significant factors from both orientation and displacement categories while introducing variations to the remaining factors.

The bounds for the displacement and orientation factors are set at ±3 cm and ±10°, respectively. The displacement bounds ensure that the checkered board stays within the camera’s field of view (FOV), considering the minimum distance between the board and the camera. Upward, leftward, and forward displacements are categorized as positive, while downward, rightward, and backward displacements are considered negative. The orientation limits are chosen based on experimental data to guarantee that all corners in the images captured by the camera can be detected for use in Zhang’s method. Positive orientations are defined as up pitch, right yaw, and left roll, whereas negative orientations are down pitch, left yaw, and right roll. The sign convention is presented in Figure 5.

The experimental process starts by generating the $2^k$ full-factorial design points using the factors of interest. Methods A and B each involve a factor group of three factors at two levels, resulting in a total of $2^3 = 8$ combinations for each experiment. For method C, the two most important factors from each factor group are selected to construct the design points, resulting in $2^4 = 16$ combinations.
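The factorial points themselves are simple to enumerate: every factor takes its low (−1) or high (+1) coded level, which maps to −/+ the stated bound (±3 cm or ±10°). The sketch below is an illustration only (the study used MINITAB for this step); the dictionary layout is a hypothetical choice, and the method C factor set (R, P, D, V) is taken as an example from the conclusions.

```python
from itertools import product

# Bounds from the text: ±3 cm for displacement factors, ±10° for orientation factors.
BOUNDS = {"D": 3.0, "H": 3.0, "V": 3.0, "R": 10.0, "P": 10.0, "Y": 10.0}


def full_factorial(factors):
    """All 2^k low/high combinations of the named factors, in physical units."""
    design = []
    for levels in product((-1, 1), repeat=len(factors)):   # coded levels
        design.append({f: lvl * BOUNDS[f] for f, lvl in zip(factors, levels)})
    return design


method_a = full_factorial(["D", "H", "V"])        # 2^3 = 8 design points
method_b = full_factorial(["R", "P", "Y"])        # 2^3 = 8 design points
method_c = full_factorial(["R", "P", "D", "V"])   # 2^4 = 16 design points
print(len(method_a), len(method_b), len(method_c))  # 8 8 16
```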

Then, Latin Hypercube Sampling (LHS) is used to draw 20 sample points in the space of the remaining factors, introducing the pose variations that Zhang’s method requires. LHS ensures that the variations introduced by the remaining factors are well distributed within the multidimensional sampling space, allowing them to be treated as random variables in the analysis. The calibration process is presented in Figure 6.
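The LHS step can be sketched in a few lines: each factor’s range is split into 20 equal strata, every stratum is used exactly once per factor, and the strata are paired across factors by independent random permutations. The code below is a minimal hand-rolled version for illustration (SciPy’s `scipy.stats.qmc.LatinHypercube` is an off-the-shelf alternative); the function name, seed, and the method A example (orientation factors R, P, Y within ±10°) are assumptions, not the MINITAB procedure used in the study.

```python
import numpy as np


def latin_hypercube(n, bounds, seed=None):
    """Draw n Latin hypercube samples inside per-dimension (low, high) bounds."""
    rng = np.random.default_rng(seed)
    d = len(bounds)
    # Column j is a random permutation of strata 0..n-1 for dimension j,
    # so every stratum of every dimension is used exactly once.
    strata = np.column_stack([rng.permutation(n) for _ in range(d)])
    # Jitter each sample uniformly inside its stratum, giving values in [0, 1).
    unit = (strata + rng.random((n, d))) / n
    low = np.array([b[0] for b in bounds], dtype=float)
    high = np.array([b[1] for b in bounds], dtype=float)
    return low + unit * (high - low)


# Hypothetical example for method A: 20 variations of the orientation factors
# R, P, Y, each bounded by ±10 degrees.
variations = latin_hypercube(20, [(-10.0, 10.0)] * 3, seed=42)
```

Compared with plain random sampling, this stratification guarantees that no part of any single factor’s range is left unsampled, which supports treating the remaining factors as well-spread random variation.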

The interaction effects response model, $f(\mathbf{x})$, is built to describe the relationship between the factors and the response variable, i.e., the reprojection error (RE):

$$\mathrm{RE} = f(\mathbf{x}) = \beta_0 + \sum_{i=1}^{k} \beta_i x_i + \sum_{i=1}^{k-1} \sum_{j=i+1}^{k} \beta_{ij} x_i x_j + \varepsilon \qquad (6)$$

In Equation (6), $\beta_0$ is the intercept term, which is the baseline level of the response when all factors are zero. The terms $\beta_1, \ldots, \beta_k$ are the model coefficients for the main effects of the factors $x_1, \ldots, x_k$, respectively. Each $\beta_i$ represents the change in RE for a unit change in $x_i$ when all other factors are held constant. A high absolute value of $\beta_i$ indicates that the corresponding factor has a strong influence on RE, and vice versa. The term $\beta_{ij}$ denotes the interaction effect between the factors $x_i$ and $x_j$. Finally, $\varepsilon$ is the random error term, describing the uncertainty or unexplained variability in the model.
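A model of the form of Equation (6) is linear in its coefficients, so it can be fitted by ordinary least squares once the main-effect and two-way interaction columns are built. The sketch below is an illustrative stand-in for the MINITAB analysis: it assumes coded factor levels (−1/+1), the function names are hypothetical, and the responses are synthetic values generated from made-up coefficients purely to show that the fit recovers them.

```python
import numpy as np
from itertools import combinations, product


def interaction_matrix(X):
    """Design matrix for Equation (6): intercept, main effects, two-way interactions."""
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    cols = [np.ones(n)] + [X[:, i] for i in range(k)]
    cols += [X[:, i] * X[:, j] for i, j in combinations(range(k), 2)]
    return np.column_stack(cols)


def fit_interaction_model(X, y):
    """Ordinary least-squares estimates [b0, b1..bk, b12, b13, ...]."""
    beta, *_ = np.linalg.lstsq(interaction_matrix(X), np.asarray(y, dtype=float), rcond=None)
    return beta


# Coded 2^3 design with two replicates (16 runs) and hypothetical coefficients.
X = np.array(list(product((-1.0, 1.0), repeat=3)) * 2)
true_beta = np.array([0.30, 0.05, 0.02, 0.03, 0.01, 0.0, -0.01])
y = interaction_matrix(X) @ true_beta
beta_hat = fit_interaction_model(X, y)
```

In coded units the columns of a full-factorial design are orthogonal, so each coefficient is estimated independently and their absolute sizes can be compared directly to rank factor influence.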

The Bayesian Information Criterion (BIC) is used to quantify the trade-off between the goodness of fit of the response model and its complexity [28]:

$$\mathrm{BIC} = p\ln(n) - 2\ln(\hat{L}) \qquad (7)$$

where $\hat{L}$ is the maximized value of the likelihood function of the response model, $n$ is the sample size, and $p$ is the number of parameters estimated by the model. The term $p\ln(n)$ is a penalty for model complexity that discourages overfitting by keeping $p$ low. The term $-2\ln(\hat{L})$ measures the goodness of fit of the model to the data, with smaller values indicating a better fit. In model selection, the model with the lowest BIC is considered the best.
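For a linear response model with independent Gaussian errors, the maximized log-likelihood has a closed form in the residual sum of squares (RSS), using the maximum-likelihood variance estimate RSS/n, so the BIC above can be computed directly from fitted values. The sketch assumes Gaussian errors; whether the error variance is counted in the parameter number $p$ is a convention that statistical packages handle differently, so here the caller supplies $p$. The function name and example values are hypothetical.

```python
import numpy as np


def gaussian_bic(y, y_hat, p):
    """BIC = p ln(n) - 2 ln(L_hat) for a model with i.i.d. Gaussian errors.

    ln(L_hat) = -(n/2) * (ln(2*pi) + ln(RSS/n) + 1), using the MLE variance RSS/n.
    The caller decides whether p includes the variance parameter.
    """
    y = np.asarray(y, dtype=float)
    resid = y - np.asarray(y_hat, dtype=float)
    n = y.size
    rss = float(resid @ resid)
    log_lik = -0.5 * n * (np.log(2.0 * np.pi) + np.log(rss / n) + 1.0)
    return p * np.log(n) - 2.0 * log_lik


# Hypothetical comparison: a closer fit with the same p has the lower BIC.
y = [1.0, 2.0, 3.0, 4.0]
print(gaussian_bic(y, [1.1, 1.9, 3.2, 3.8], p=2) < gaussian_bic(y, [1.5, 1.5, 3.5, 3.5], p=2))
```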

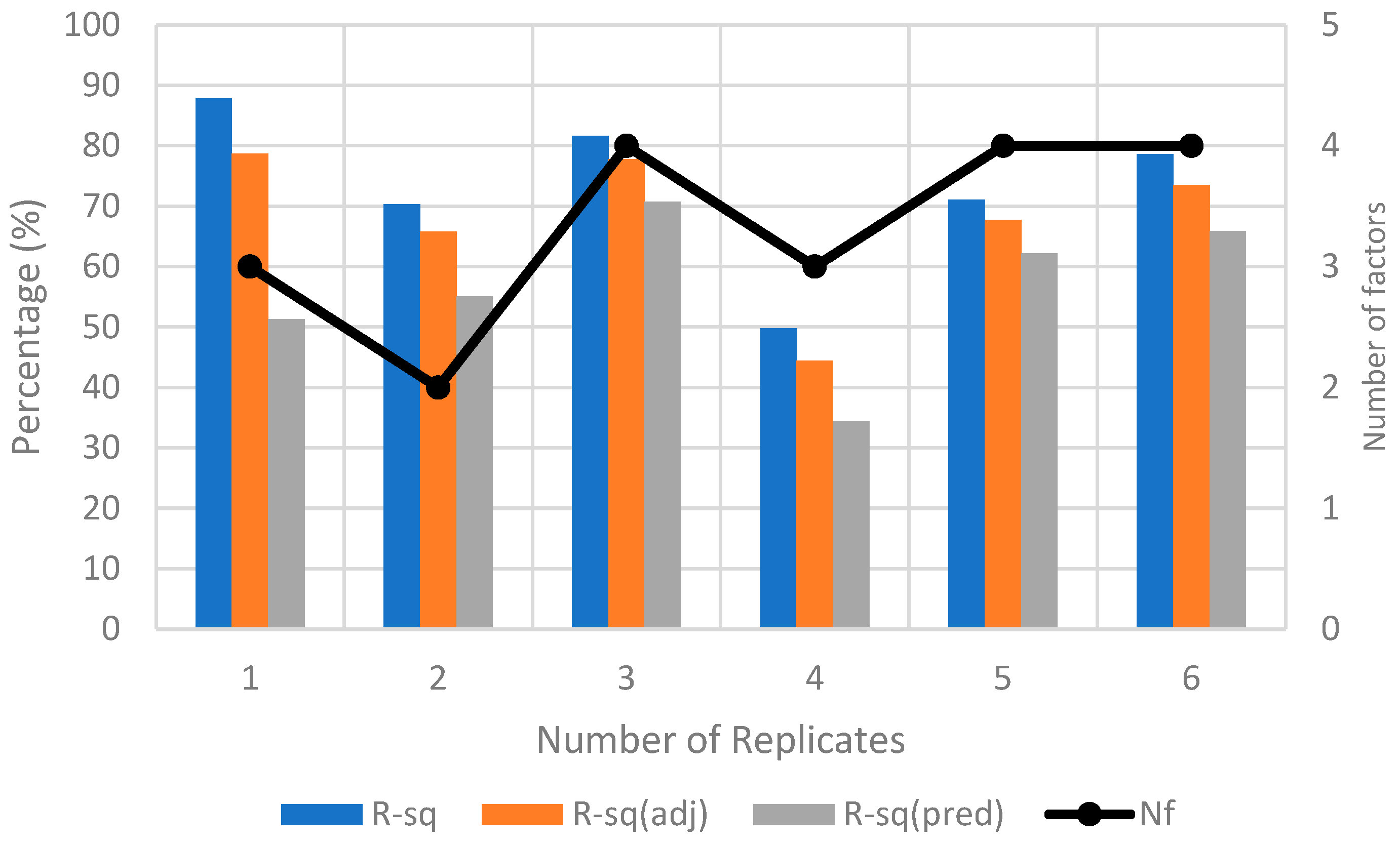

Analysis of variance (ANOVA) is used to determine which factors have a statistically significant effect on the response. The R², adjusted R², and predicted R² values, together with the number of significant factors, are used to judge whether additional replication is needed to yield a stable response. In this study, MINITAB [29] is used to generate the factorial points and perform the DoE analysis. The experimental procedure is summarized in Figure 7.
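The three R² statistics differ in what they penalize: R² measures in-sample fit, adjusted R² corrects for the number of model terms, and predicted R² uses the PRESS statistic (leave-one-out residuals, obtained from the hat-matrix diagonal) to estimate how well the model predicts new runs. The sketch below uses these textbook definitions, which we assume match MINITAB’s; the function name and the straight-line example data are hypothetical.

```python
import numpy as np


def r2_statistics(Z, y):
    """Return (R^2, adjusted R^2, predicted R^2) for an OLS fit of y on design Z."""
    Z = np.asarray(Z, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = Z.shape                                  # p includes the intercept column
    beta, *_ = np.linalg.lstsq(Z, y, rcond=None)
    resid = y - Z @ beta
    sse = float(resid @ resid)
    sst = float(np.sum((y - y.mean()) ** 2))
    r2 = 1.0 - sse / sst
    r2_adj = 1.0 - (sse / (n - p)) / (sst / (n - 1))
    leverage = np.diag(Z @ np.linalg.pinv(Z))       # hat-matrix diagonal h_ii
    press = float(np.sum((resid / (1.0 - leverage)) ** 2))  # leave-one-out residuals
    r2_pred = 1.0 - press / sst
    return r2, r2_adj, r2_pred


# Hypothetical usage: straight-line model with small noise.
rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 12)
Z = np.column_stack([np.ones_like(x), x])
y = 0.3 + 0.05 * x + rng.normal(0.0, 0.002, x.size)
r2, r2_adj, r2_pred = r2_statistics(Z, y)
```

A large gap between R² and predicted R² is the usual warning sign that the model is fitting noise, which is one reason to add replication before trusting the factor rankings.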

4. Conclusions

This paper adopts Zhang’s camera calibration method, combined with the $2^k$ full-factorial DoE and LHS methods, to minimize the reprojection error in camera calibration. In general, the results of the experiments conducted for methods A, B, and C provide valuable insights into the factors affecting the reprojection error in the calibration process.

For method A, which used only displacement factors for camera calibration, factors D, H, and V were found to be linearly correlated with the reprojection error for all three cameras involved in this study. Factor D was the most influential factor for all cameras, followed by V and H for the ZED2i camera and by H and V for the D435i and HBVCAM cameras. However, a closer examination of Figure 9a reveals that the H and V factors are equally important. The factorial point with D = H = V = −3 cm exhibited the lowest reprojection error, confirming the predictions made by the linear regression model. The experiment demonstrated that by minimizing the values of the displacement factors, the reprojection error can be reduced by 25% on average compared to Zhang’s method.

On the other hand, for method B, which calibrates using the orientation factors, factors R and P were observed to be more influential than Y on the reprojection error for all cameras. The factorial point with R = −10° and P = Y = 10° resulted in the minimum reprojection error. These findings were consistent with the predictions made by the response models, highlighting the importance of controlling the orientation factors to minimize the reprojection error. On average, method B reduces the reprojection error by 24% compared to Zhang’s method.

For method C, where the two most influential distance factors and the two most influential orientation factors were selected for calibration, the minimum reprojection error was obtained at the factorial point with R = −10°, P = 10°, and D = V = −3 cm, which is consistent with the results from methods A and B. Although method C requires 10% more images than methods A and B combined, it produces a consistently minimal reprojection error, with a reduction of more than 30% compared to Zhang’s method.

Comparing the results of methods A, B, and C, it is evident that combining the two most significant factors from methods A and B also yields the minimum reprojection error for method C. Specifically, the combination of orientation factors R and P with displacement factors D and V (or H) achieves the minimum value for method C. Furthermore, the calibration process could be conducted separately and more efficiently by using the two factor groups independently, achieving more than 20% improvement in the reprojection error compared to Zhang’s method alone. This approach required fewer calibration images, about 70% and 40% fewer for methods A and B, respectively, than method C. For applications that require a lower reprojection error, method C is recommended.

Systematic camera calibration plays an important role in 3D reconstruction as it greatly influences the accuracy and reliability of the resulting 3D models. Accurate calibration ensures the precise determination of both the intrinsic and extrinsic parameters of a camera. This precision enables the correct mapping of 2D image data to real-world 3D coordinates. Optimizing camera calibration enhances the geometric fidelity of 3D reconstruction, paving the way for a wide range of applications in fields like computer vision, robotics, and augmented reality. Thus, it serves as the cornerstone underpinning the entire process of translating 2D images into accurate 3D reconstructions.

Overall, these findings contribute to a better understanding of the factors affecting the reprojection error in calibration processes, enabling more efficient and effective calibration procedures in various applications. The proposed methods can be used to improve the calibration accuracy of stereo cameras for applications in object detection and ranging. However, the proposed systematic camera calibration experiment involves a substantial amount of repetitive testing and the capture of numerous checkered board calibration images, which consumes significant manpower and time. Additionally, camera distortions have a pronounced impact on camera calibration because they alter the geometric properties of camera imaging and thereby affect the mapping between pixel coordinates and real-world coordinates. These two limitations will be explored in depth in future research.