Improving Estimation of Layer Thickness and Identification of Slicer for 3D Printing Forensics

Abstract

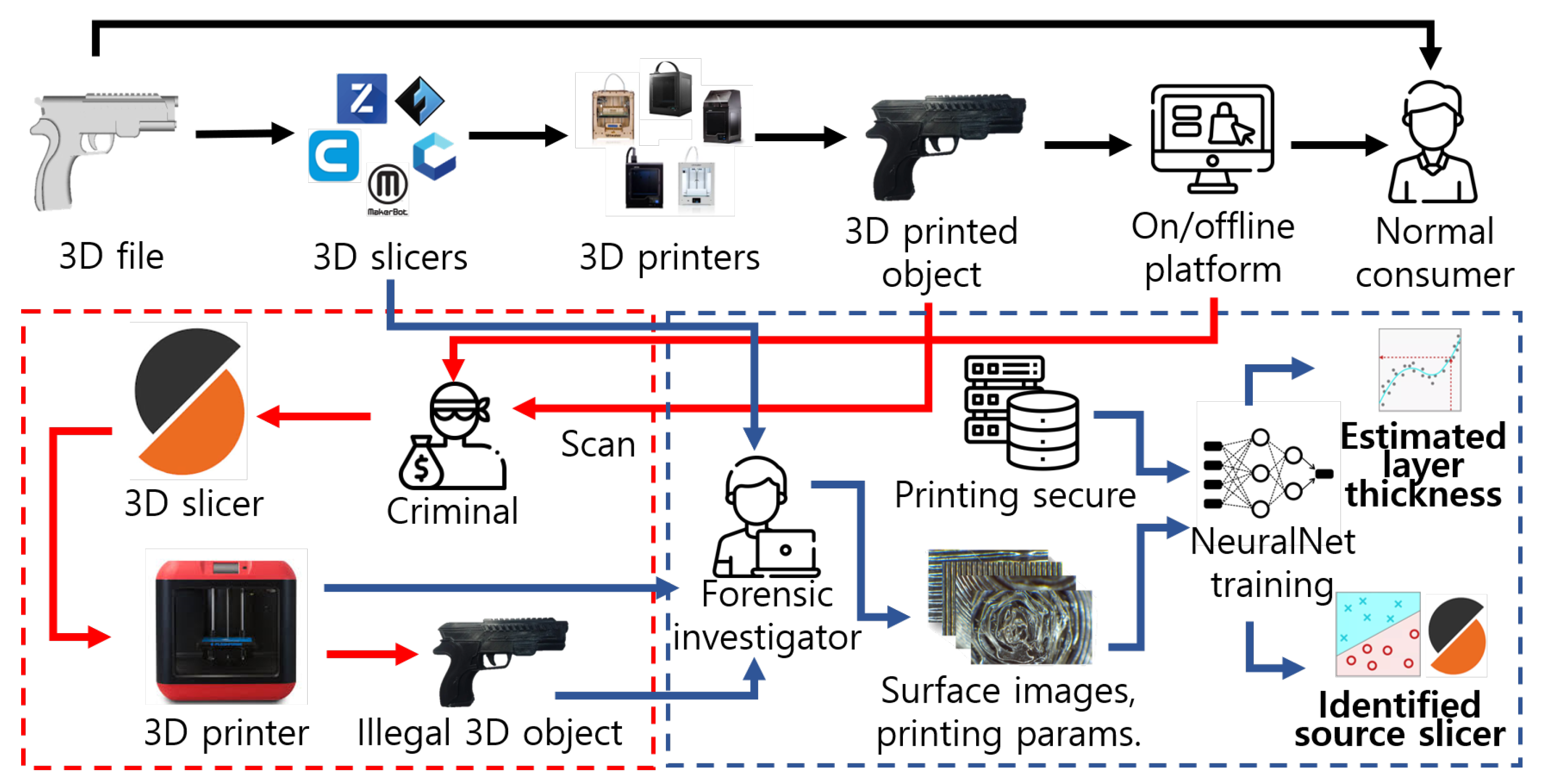

1. Introduction

- The paper proposes a new regression-based approach using a vision transformer model for layer thickness estimation in 3D printing forensics. It achieves an RMSE of 0.0529, compared with 0.0773 for the best previous (classification-based) method, and can handle a broader range of data.

- The paper introduces slicing program identification as a new research direction in 3D printing forensics, which can provide valuable clues in identifying the source of 3D printed objects.

- The paper emphasizes the importance of collecting more detailed datasets for the advancement of 3D printing forensics, which can lead to more effective tools and techniques for forensic investigators.
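One way to see why a regression formulation can cover a broader range of data than a classification one is to look at thickness values that fall between class labels. The sketch below is only an illustration: the class labels, the sample thicknesses, and the function names are hypothetical and are not the paper's configuration. It computes the error of an ideal classifier that always picks the closest label, which is a floor that no classifier over those labels can go below, whereas a regressor has no such floor.

```python
import math

# Hypothetical thickness classes (mm) that a classifier might be trained on.
CLASSES_MM = [0.1, 0.2, 0.3, 0.4]


def nearest_class(thickness_mm, classes=CLASSES_MM):
    """Best case for a classifier: snap the true value to the closest label."""
    return min(classes, key=lambda c: abs(c - thickness_mm))


def rmse(predictions, targets):
    """Root mean square error between two equally long sequences."""
    squared = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical true thicknesses, some of them between the class labels.
true_mm = [0.10, 0.15, 0.24, 0.30, 0.36]

# Even a perfect classifier is limited by the spacing of its labels.
ideal_classifier = [nearest_class(t) for t in true_mm]
quantization_floor = rmse(ideal_classifier, true_mm)

print(f"RMSE floor of an ideal classifier: {quantization_floor:.4f} mm")
```

With slicers that accept continuous thickness values, the in-between cases are the norm rather than the exception, which is the situation a regression output is suited to.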

2. Background

2.1. 3D Printer Categorization

2.2. 3D Printing Characterization Factors

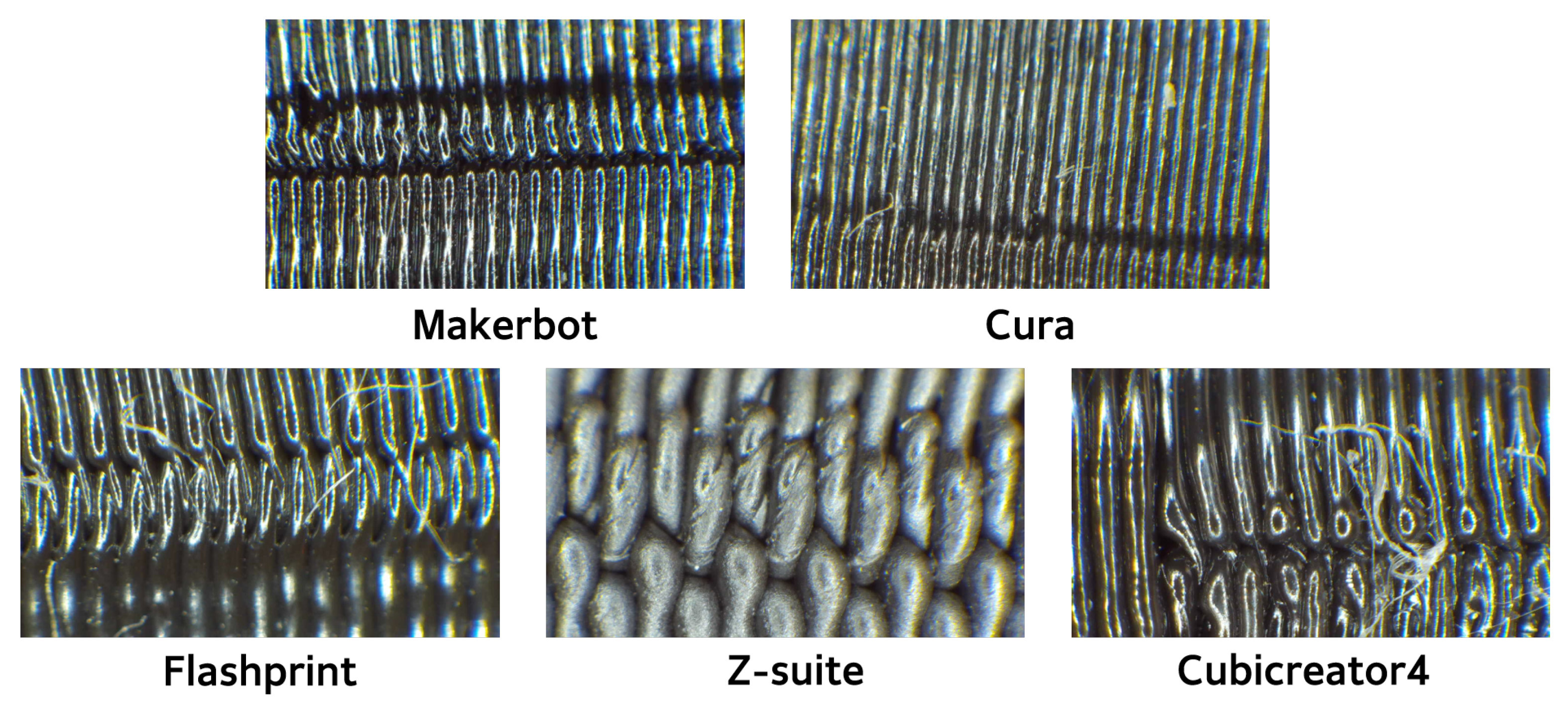

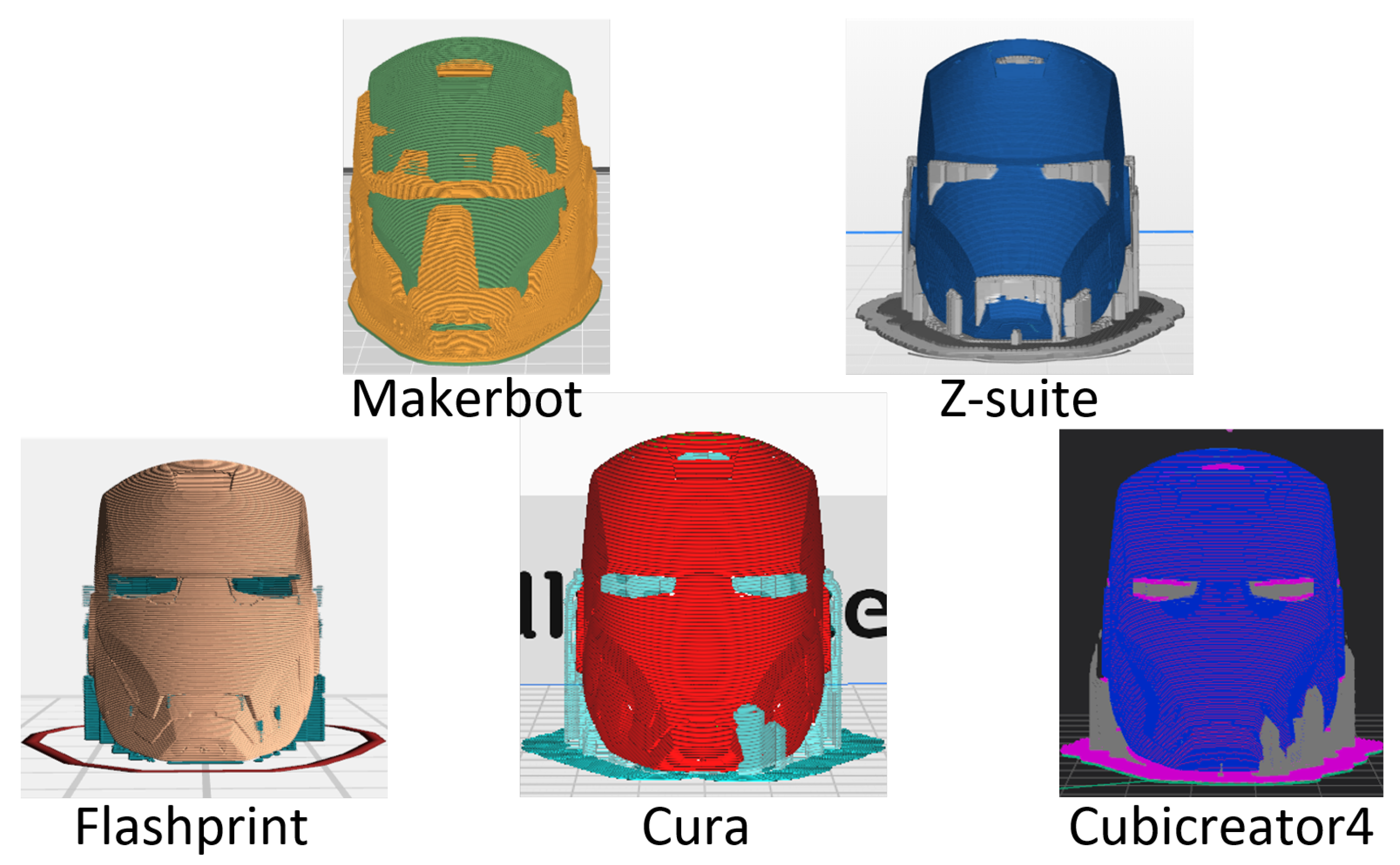

2.2.1. Software Domain

2.2.2. Hardware Domain

2.2.3. Other Factors

3. Methods

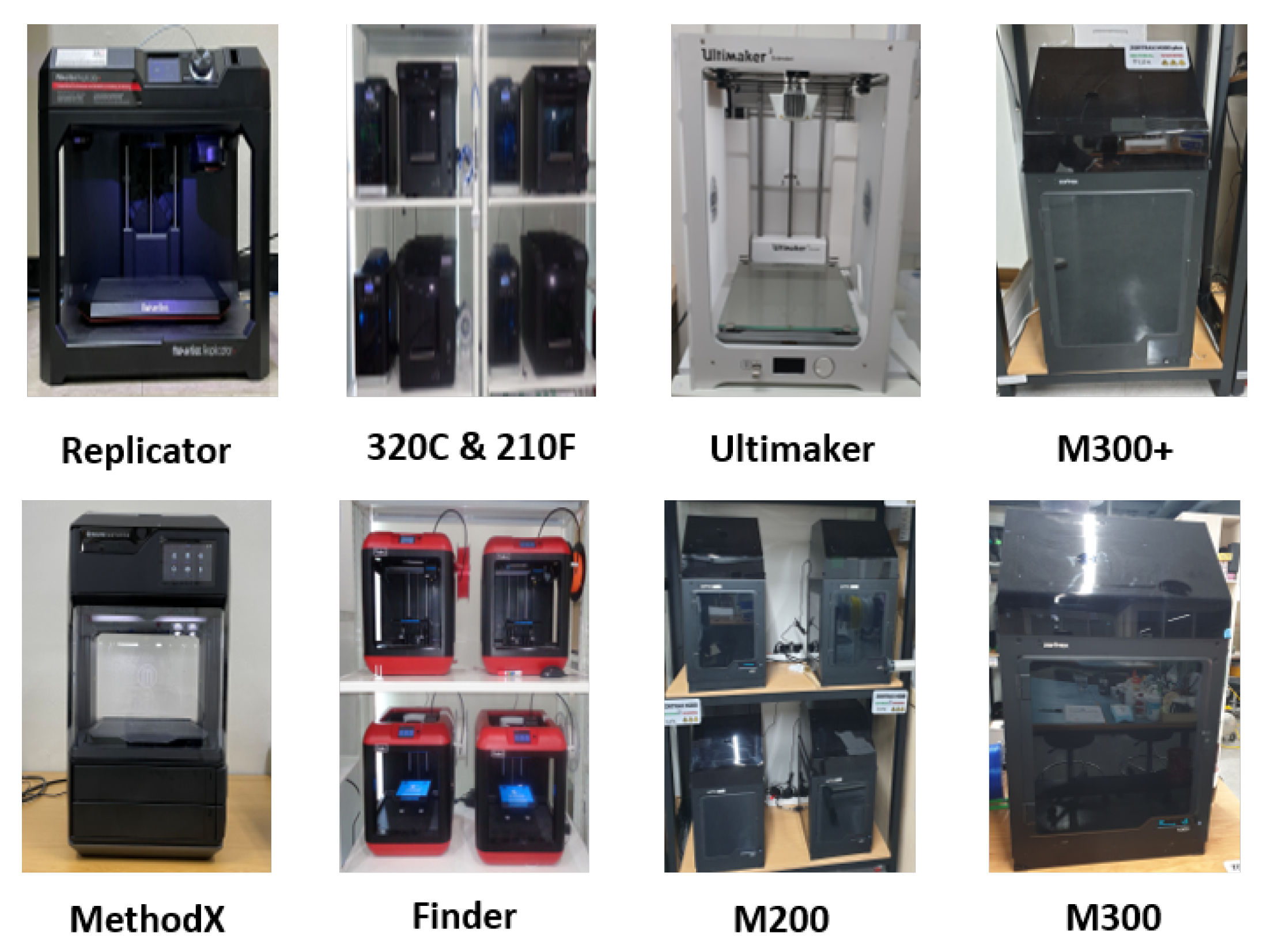

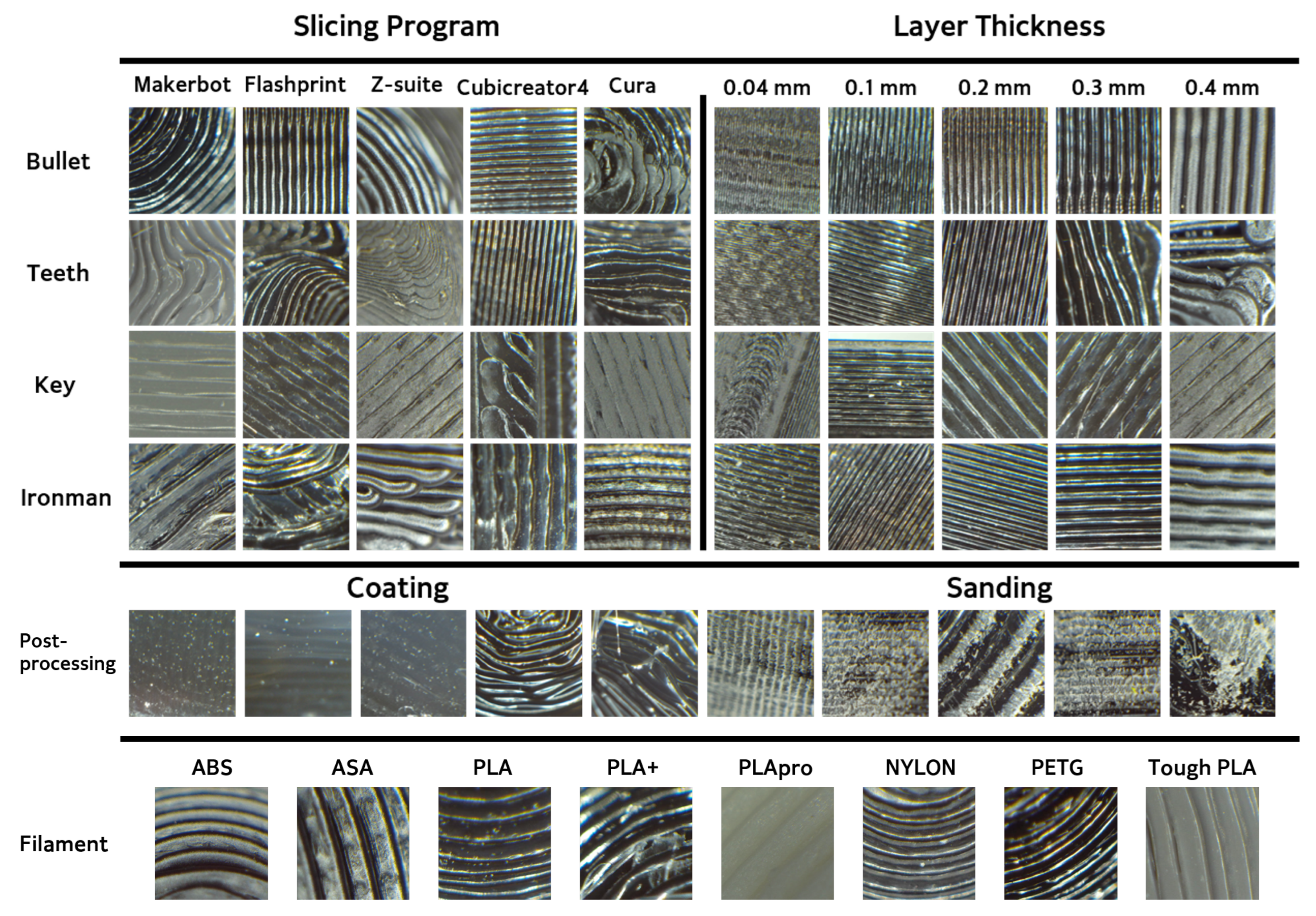

3.1. Collecting Dataset

3.2. Source Attribution Analysis

3.2.1. Slicer Program Identification

3.2.2. Layer Thickness Estimation

3.3. Proposed Methods

3.4. Evaluation Metrics

4. Results

4.1. Layer Thickness Estimation

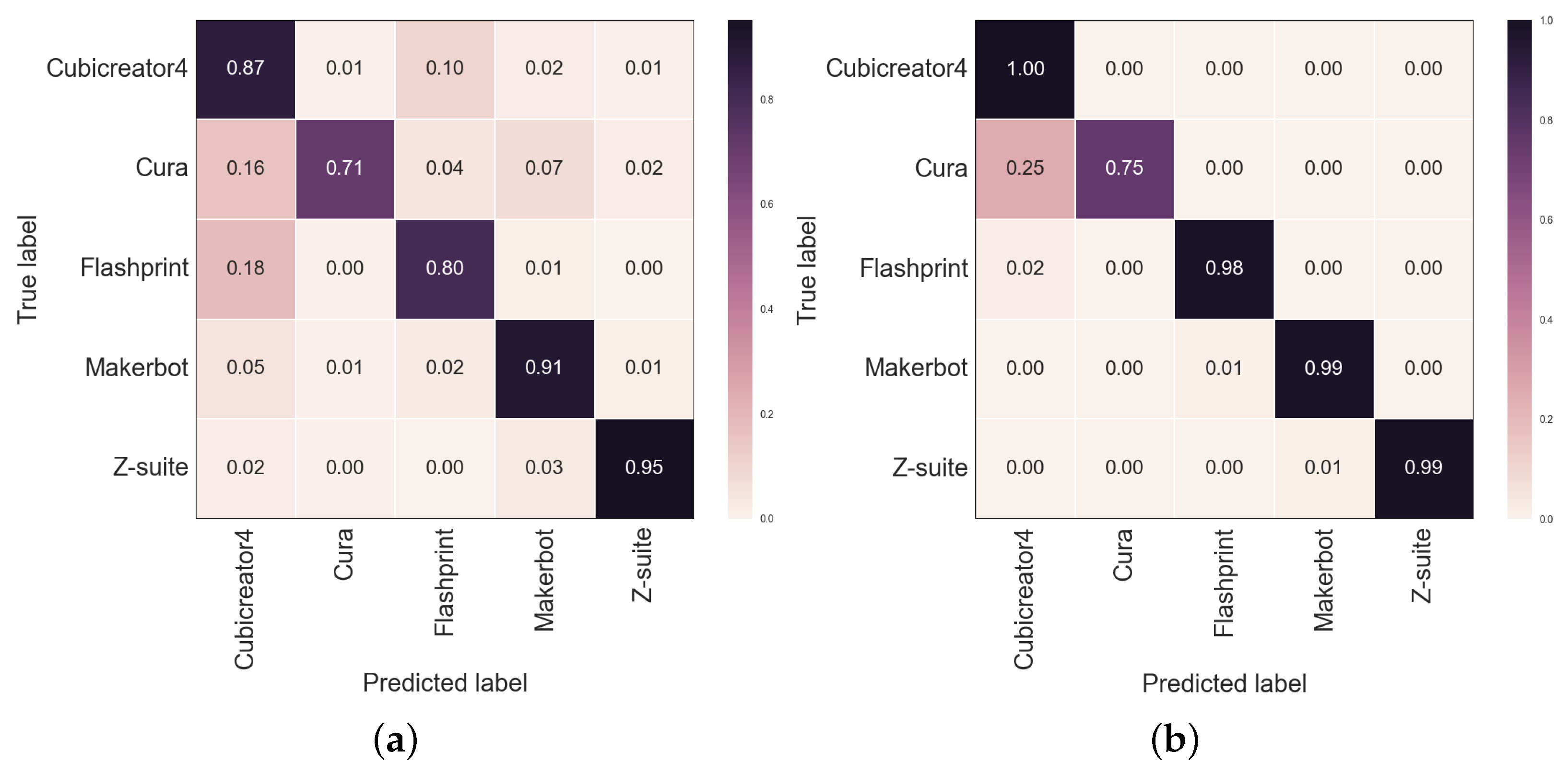

4.2. Slicer Program Identification

4.3. Comparison Result

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Shahrubudin, N.; Lee, T.C.; Ramlan, R. An overview on 3D printing technology: Technological, materials, and applications. Procedia Manuf. 2019, 35, 1286–1296.

2. Malik, A.; Haq, M.I.U.; Raina, A.; Gupta, K. 3D printing towards implementing Industry 4.0: Sustainability aspects, barriers and challenges. Ind. Robot. Int. J. Robot. Res. Appl. 2022, 49, 491–511.

3. The White House. FACT SHEET: Biden Administration Celebrates Launch of AM Forward and Calls on Congress to Pass Bipartisan Innovation Act. 2022. Available online: https://www.whitehouse.gov/briefing-room/statements-releases/2022/05/06/fact-sheet-biden-administration-celebrates-launch-of-am-forward-and-calls-on-congress-to-pass-bipartisan-innovation-act/ (accessed on 27 June 2023).

4. Hanaphy, P. AI Build Launches New ‘Talk To Aisync’ Software That Prepares 3D Models Using Text Prompts. 2023. Available online: https://amchronicle.com/news/ai-build-introduces-talk-to-aisync-nlp-for-am/ (accessed on 27 June 2023).

5. Baier, W.; Warnett, J.M.; Payne, M.; Williams, M.A. Introducing 3D printed models as demonstrative evidence at criminal trials. J. Forensic Sci. 2018, 63, 1298–1302.

6. Peels, J. Let’s Kill Disney: A 3D Printing Patent Dispute and a Manifesto. 2022. Available online: https://3dprint.com/289234/lets-kill-disney-a-3d-printing-patent-dispute-and-a-manifesto/ (accessed on 29 June 2023).

7. Peels, J. Ethical 3D Printing: 9 Ways 3D Printing Could Aid Criminals. 2016. Available online: https://3dprint.com/298073/ethical-3d-printing-9-ways-3d-printing-can-be-used-for-crime/ (accessed on 29 June 2023).

8. Toy, A. Calgary Police Seize Firearms, 3D Printers as Part of National Operation. 2023. Available online: https://globalnews.ca/news/9793960/calgary-police-seize-firearms-3d-printers-national-operation/ (accessed on 29 June 2023).

9. Zachariah, B. Criminals Using 3D Printers to Make Fake Registration Plates. 2023. Available online: https://www.drive.com.au/news/criminals-using-3d-printers-to-make-fake-registration-plates/ (accessed on 29 June 2023).

10. Rayna, T.; Striukova, L. From rapid prototyping to home fabrication: How 3D printing is changing business model innovation. Technol. Forecast. Soc. Chang. 2016, 102, 214–224.

11. Bernacki, J. A survey on digital camera identification methods. Forensic Sci. Int. Digit. Investig. 2020, 34, 300983.

12. Ferreira, A.; Navarro, L.C.; Pinheiro, G.; dos Santos, J.A.; Rocha, A. Laser printer attribution: Exploring new features and beyond. Forensic Sci. Int. 2015, 247, 105–125.

13. Nie, W.; Han, Z.C.; Zhou, M.; Xie, L.B.; Jiang, Q. UAV detection and identification based on WiFi signal and RF fingerprint. IEEE Sens. J. 2021, 21, 13540–13550.

14. Day, P.J.; Speers, S.J. The assessment of 3D printer technology for forensic comparative analysis. Aust. J. Forensic Sci. 2020, 52, 579–589.

15. Li, Z.; Rathore, A.S.; Song, C.; Wei, S.; Wang, Y.; Xu, W. PrinTracker: Fingerprinting 3D printers using commodity scanners. In Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security, Toronto, ON, Canada, 15–19 October 2018; pp. 1306–1323.

16. Shim, B.S.; Choe, J.H.; Hou, J.U. Source Identification of 3D Printer Based on Layered Texture Encoders. IEEE Trans. Multimed. 2023. early access.

17. Kubo, Y.; Eguchi, K.; Aoki, R. 3D-Printed object identification method using inner structure patterns configured by slicer software. In Proceedings of the Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–7.

18. Druelle, C.; Ferri, J.; Mahy, P.; Nicot, R.; Olszewski, R. A simple, no-cost method for 3D printed model identification. J. Stomatol. Oral Maxillofac. Surg. 2020, 121, 219–225.

19. Hou, J.U.; Kim, D.G.; Lee, H.K. Blind 3D mesh watermarking for 3D printed model by analyzing layering artifact. IEEE Trans. Inf. Forensics Secur. 2017, 12, 2712–2725.

20. Delmotte, A.; Tanaka, K.; Kubo, H.; Funatomi, T.; Mukaigawa, Y. Blind watermarking for 3-D printed objects by locally modifying layer thickness. IEEE Trans. Multimed. 2019, 22, 2780–2791.

21. Shim, B.S.; Shin, Y.S.; Park, S.W.; Hou, J.U. SI3DP: Source Identification Challenges and Benchmark for Consumer-Level 3D Printer Forensics. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 1721–1729.

22. Gao, Y.; Wang, W.; Jin, Y.; Zhou, C.; Xu, W.; Jin, Z. ThermoTag: A hidden ID of 3D printers for fingerprinting and watermarking. IEEE Trans. Inf. Forensics Secur. 2021, 16, 2805–2820.

23. Šljivic, M.; Pavlovic, A.; Kraišnik, M.; Ilić, J. Comparing the accuracy of 3D slicer software in printed enduse parts. In IOP Conference Series: Materials Science and Engineering, Proceedings of the 9th International Scientific Conference—Research and Development of Mechanical Elements and Systems (IRMES 2019), Kragujevac, Serbia, 5–7 September 2019; IOP Publishing: Bristol, UK, 2019; Volume 659, p. 012082.

24. Trincat, T.; Saner, M.; Schaufelbühl, S.; Gorka, M.; Rhumorbarbe, D.; Gallusser, A.; Delémont, O.; Werner, D. Influence of the printing process on the traces produced by the discharge of 3D-printed Liberators. Forensic Sci. Int. 2022, 331, 111144.

25. Vaezi, M.; Chua, C.K. Effects of layer thickness and binder saturation level parameters on 3D printing process. Int. J. Adv. Manuf. Technol. 2011, 53, 275–284.

26. Zhang, Z.C.; Li, P.L.; Chu, F.T.; Shen, G. Influence of the three-dimensional printing technique and printing layer thickness on model accuracy. J. Orofac. Orthop. Kieferorthopadie 2019, 80, 194–204.

27. Alshamrani, A.A.; Raju, R.; Ellakwa, A. Effect of printing layer thickness and postprinting conditions on the flexural strength and hardness of a 3D-printed resin. BioMed Res. Int. 2022, 2022, 8353137.

28. Xu, W.; Xu, Y.; Chang, T.; Tu, Z. Co-scale conv-attentional image transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 9981–9990.

29. Peng, F.; Yang, J.; Lin, Z.X.; Long, M. Source identification of 3D printed objects based on inherent equipment distortion. Comput. Secur. 2019, 82, 173–183.

30. Daminabo, S.C.; Goel, S.; Grammatikos, S.A.; Nezhad, H.Y.; Thakur, V.K. Fused deposition modeling-based additive manufacturing (3D printing): Techniques for polymer material systems. Mater. Today Chem. 2020, 16, 100248.

31. Weller, C.; Kleer, R.; Piller, F.T. Economic implications of 3D printing: Market structure models in light of additive manufacturing revisited. Int. J. Prod. Econ. 2015, 164, 43–56.

32. Sammaiah, P.; Krishna, D.C.; Mounika, S.S.; Reddy, I.R.; Karthik, T. Effect of the support structure on flexural properties of fabricated part at different parameters in the FDM process. In IOP Conference Series: Materials Science and Engineering, Proceedings of the International Conference on Recent Advancements in Engineering and Management (ICRAEM-2020), Warangal, India, 9–10 October 2020; IOP Publishing: Bristol, UK, 2020; Volume 981, p. 042030.

33. Cheesmond, N. Infill Pattern Art—3D Printing Without Walls. 2022. Available online: https://3dwithus.com/infill-pattern-art-3d-printing-without-walls (accessed on 29 June 2023).

34. Wojtyła, S.; Klama, P.; Baran, T. Is 3D printing safe? Analysis of the thermal treatment of thermoplastics: ABS, PLA, PET, and nylon. J. Occup. Environ. Hyg. 2017, 14, D80–D85.

35. Peng, H.; Lu, L.; Liu, L.; Sharf, A.; Chen, B. Fabricating QR codes on 3D objects using self-shadows. Comput.-Aided Des. 2019, 114, 91–100.

36. Aronson, A.; Elyashiv, A.; Cohen, Y.; Wiesner, S. A novel method for linking between a 3D printer and printed objects using toolmark comparison techniques. J. Forensic Sci. 2021, 66, 2405–2412.

37. Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

38. Hou, J.U.; Lee, H.K. Layer thickness estimation of 3D printed model for digital multimedia forensics. Electron. Lett. 2019, 55, 86–88.

39. Kafle, A.; Luis, E.; Silwal, R.; Pan, H.M.; Shrestha, P.L.; Bastola, A.K. 3D/4D Printing of polymers: Fused deposition modelling (FDM), selective laser sintering (SLS), and stereolithography (SLA). Polymers 2021, 13, 3101.

40. Choudhari, C.; Patil, V. Product development and its comparative analysis by SLA, SLS and FDM rapid prototyping processes. In IOP Conference Series: Materials Science and Engineering, Proceedings of the International Conference on Advances in Materials and Manufacturing Applications (IConAMMA-2016), Bangalore, India, 14–16 July 2016; IOP Publishing: Bristol, UK, 2016; Volume 149, p. 012009.

41. Kluska, E.; Gruda, P.; Majca-Nowak, N. The accuracy and the printing resolution comparison of different 3D printing technologies. Trans. Aerosp. Res. 2018, 3, 69–86.

42. Badanova, N.; Perveen, A.; Talamona, D. Study of SLA Printing Parameters Affecting the Dimensional Accuracy of the Pattern and Casting in Rapid Investment Casting. J. Manuf. Mater. Process. 2022, 6, 109.

| Slicer Program | 3D Printer | Layer Thickness Range (mm) | Printing Speed (mm/s) |
|---|---|---|---|
| Makerbot | Method X, Replicator | 0.2–1.2 | 10–500 |
| Cubicreator4 | 210F, 320C | Continuous variable | 5–500 |
| Flashprint | Finder | 0.05–0.4 | 10–200 |
| Z-suite | M200, M300, M300+ | 0.09, 0.14, 0.19, 0.29, 0.39 | 50–200 |
| Cura | Ultimaker | Continuous variable | Continuous variable |
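The slicer table above also suggests how an estimated layer thickness can narrow down the slicing program. The following is a minimal sketch of that idea, not the paper's method. It assumes that the discrete values 0.09 to 0.39 mm belong to Z-suite, that "Continuous variable" means any thickness is allowed, and that a tolerance of 0.005 mm is acceptable; the class, the function names, and the tolerance are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class SlicerProfile:
    name: str
    # Bounded continuous range (min, max) in mm, if the slicer has one.
    range_mm: Optional[Tuple[float, float]] = None
    # Fixed set of allowed thicknesses in mm, if the slicer has one.
    discrete_mm: Optional[Tuple[float, ...]] = None

    def allows(self, thickness_mm: float, tol_mm: float = 0.005) -> bool:
        if self.discrete_mm is not None:
            return any(abs(thickness_mm - d) <= tol_mm for d in self.discrete_mm)
        if self.range_mm is not None:
            low, high = self.range_mm
            return low - tol_mm <= thickness_mm <= high + tol_mm
        # Assumption: "Continuous variable" places no restriction on thickness.
        return True


# Values transcribed from the slicer table; the Z-suite reading is an assumption.
PROFILES = [
    SlicerProfile("Makerbot", range_mm=(0.2, 1.2)),
    SlicerProfile("Cubicreator4"),
    SlicerProfile("Flashprint", range_mm=(0.05, 0.4)),
    SlicerProfile("Z-suite", discrete_mm=(0.09, 0.14, 0.19, 0.29, 0.39)),
    SlicerProfile("Cura"),
]


def candidate_slicers(thickness_mm: float) -> list:
    """Names of slicers whose settings could have produced this thickness."""
    return [p.name for p in PROFILES if p.allows(thickness_mm)]


print(candidate_slicers(0.14))  # Makerbot is excluded (below 0.2 mm)
print(candidate_slicers(0.25))  # Z-suite is excluded (not one of its fixed values)
```

A filter like this can only exclude candidates; slicers with continuous settings remain compatible with every estimate, so it complements rather than replaces a learned slicer classifier.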

| Name | Temp. | Summary |
|---|---|---|
| PLA | 210 °C | Polylactic Acid. Most widely used filament. Biodegradable, non-toxic, not flexible, weakens under humidity |
| PLA+ | 210 °C | Similar composition to PLA but with three times higher stiffness |
| ABS | 245 °C | Acrylonitrile Butadiene Styrene. Very sturdy, economical, emits harmful gas during printing |
| Tough PLA | 210 °C | Adds the elasticity and stiffness of ABS to PLA. High toughness, greater durability |
| Nylon | 250 °C | Flexible, has greater elasticity and gloss than PLA |
| PETG | 230–250 °C | Polyethylene Terephthalate Glycol. Smooth surface finish, high transparency, water/chemical resistance |
| ASA | 245 °C | Acrylonitrile Styrene Acrylate. Combines the advantages of ABS with UV and moisture resistance |
| PLA pro | 230 °C | Industrial PLA with high-speed performance, good mechanical properties, and adaptability to high-heat environments |

| Approach | Method | Bullet | Iron Man | Key | Teeth | Mean | Standard Deviation |
|---|---|---|---|---|---|---|---|
| Classification | CNN | 0.0765 | 0.0787 | 0.0804 | 0.0759 | 0.0779 | 0.0021 |
| Classification | Transformer | 0.0759 | 0.0793 | 0.0798 | 0.0814 | 0.0791 | 0.0023 |
| Regression | CNN | 0.0451 | 0.0538 | 0.0702 | 0.0535 | 0.0564 | 0.0105 |
| Regression | Transformer | 0.0374 | 0.0523 | 0.0701 | 0.0461 | 0.0529 | 0.0138 |
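For readers who want to reproduce the summary columns, the sketch below defines RMSE in the usual way and recomputes the mean and standard deviation for the CNN classification row of the table above. The function name is hypothetical, and the use of the sample standard deviation (dividing by n − 1) is an assumption inferred from the fact that it reproduces the reported value.

```python
import math
import statistics


def rmse(predicted_mm, actual_mm):
    """Root mean square error between predicted and true layer thicknesses."""
    squared = [(p - a) ** 2 for p, a in zip(predicted_mm, actual_mm)]
    return math.sqrt(sum(squared) / len(squared))


# Per-model RMSE values from the CNN classification row of the table.
cnn_classification = {
    "Bullet": 0.0765,
    "Iron Man": 0.0787,
    "Key": 0.0804,
    "Teeth": 0.0759,
}

scores = list(cnn_classification.values())
mean_rmse = statistics.mean(scores)
std_rmse = statistics.stdev(scores)  # sample standard deviation (n - 1)

print(f"{mean_rmse:.4f} {std_rmse:.4f}")  # prints "0.0779 0.0021"
```

The same calculation on the two regression rows gives roughly 0.0556 and 0.0515 rather than the reported 0.0564 and 0.0529, so those means may have been aggregated differently, for example over individual test samples; that reading is an assumption.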

| Method | Subtask Type | Fusion | Precision |
|---|---|---|---|
| CoaT-Lite Tiny [28] | ✗ | ✗ | 0.880 |
| CoaT-Lite Tiny [28] | Filament | ✗ | 0.888 |
| CoaT-Lite Tiny [28] | Device | ✗ | 0.880 |
| CoaT-Lite Tiny [28] | Printer | ✗ | 0.889 |
| CoaT-Lite Tiny [28] | Printer | ✓ | 0.987 |
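The precision column can be read as a multi-class precision over slicer labels. The source does not state how per-class values are averaged, so the sketch below assumes macro averaging (an unweighted mean over classes); the function name and the label lists are hypothetical and serve only to show the calculation.

```python
from collections import Counter


def macro_precision(y_true, y_pred):
    """Return (macro-averaged precision, per-class precision)."""
    true_positive = Counter()
    predicted = Counter()
    for truth, guess in zip(y_true, y_pred):
        predicted[guess] += 1
        if truth == guess:
            true_positive[guess] += 1
    classes = sorted(set(y_true) | set(y_pred))
    # A class that is never predicted contributes a precision of 0.0.
    per_class = {
        c: (true_positive[c] / predicted[c] if predicted[c] else 0.0)
        for c in classes
    }
    return sum(per_class.values()) / len(classes), per_class


# Hypothetical slicer labels for six test images.
y_true = ["Cura", "Cura", "Z-suite", "Flashprint", "Makerbot", "Z-suite"]
y_pred = ["Cura", "Z-suite", "Z-suite", "Flashprint", "Makerbot", "Z-suite"]

macro, per_class = macro_precision(y_true, y_pred)
print(f"macro precision: {macro:.3f}")
print(per_class)
```

Here one Cura sample is mistaken for Z-suite, which lowers only the Z-suite precision (2 of 3 predictions correct) and leaves the other classes at 1.0.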

| Model | Approach | Description | RMSE Score |
|---|---|---|---|
| Li et al. [15] | Classification | GLCM-SVM | 0.0994 |
| Shim et al. [21] | Classification | CNN-based | 0.0791 |
| Shim et al. [16] | Classification | CFTNet | 0.0773 |
| Ours | Regression | Transformer-based | 0.0529 |
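To put the comparison in relative terms, the short calculation below expresses the proposed method's RMSE as a percentage reduction against each baseline in the table above. The RMSE values are taken from the table; the function name is hypothetical.

```python
def relative_reduction(baseline_rmse, new_rmse):
    """Fraction by which new_rmse is lower than baseline_rmse."""
    return (baseline_rmse - new_rmse) / baseline_rmse


# RMSE scores from the comparison table.
baselines = {
    "Li et al. [15] (GLCM-SVM)": 0.0994,
    "Shim et al. [21] (CNN-based)": 0.0791,
    "Shim et al. [16] (CFTNet)": 0.0773,
}
ours = 0.0529

for name, score in baselines.items():
    print(f"{name}: {relative_reduction(score, ours):.1%} lower RMSE")
# Li et al. [15] (GLCM-SVM): 46.8% lower RMSE
# Shim et al. [21] (CNN-based): 33.1% lower RMSE
# Shim et al. [16] (CFTNet): 31.6% lower RMSE
```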

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shim, B.S.; Hou, J.-U. Improving Estimation of Layer Thickness and Identification of Slicer for 3D Printing Forensics. Sensors 2023, 23, 8250. https://doi.org/10.3390/s23198250