1. Introduction

Although there are many machine learning techniques to analyze medical images in various areas, deep learning has become the better method to analyze and interpret medical issues due to its accuracy [

1]. Deep learning is a part of machine learning and is based on artificial neural networks, called deep neural networks because the structure of the neural network consists of multiple inputs, outputs, and hidden layers [

2]. Deep learning is widely known for its application in many areas, and is most important in the analysis and interpretation of medical images [

3], such as classifying melanomas [

4,

5], brain tumors [

6,

7], and eye diseases [

8,

9], to overcome image processing barriers and machine learning methods, although these applications also produce low-level classification accuracy with deep learning due to deep learning models needing a sufficient number of labeled images to perform better [

10]. This will lead to a problem in the performance of deep learning in some fields, especially in the medical field, where the field of medical image analysis suffers from a lack of labeled images, due to the time-consuming and expensive process of labeling images, which requires experts specialized in radiology [

10]. These reasons lead researchers to build computer systems that help experts make decisions and speed up the diagnostic process. Transfer learning is provided to reduce the need for many images and to speed up the training process by transferring knowledge from a previous process and then training it to relatively small datasets for the current task. Transfer learning is often applied to pre-trained models (such as LeNet, Alex-Net, VGG-16, ResNet, etc.) on the ImageNet dataset, which consists of natural images, with large numbers of more than 14 million images distributed over 1000 classes [

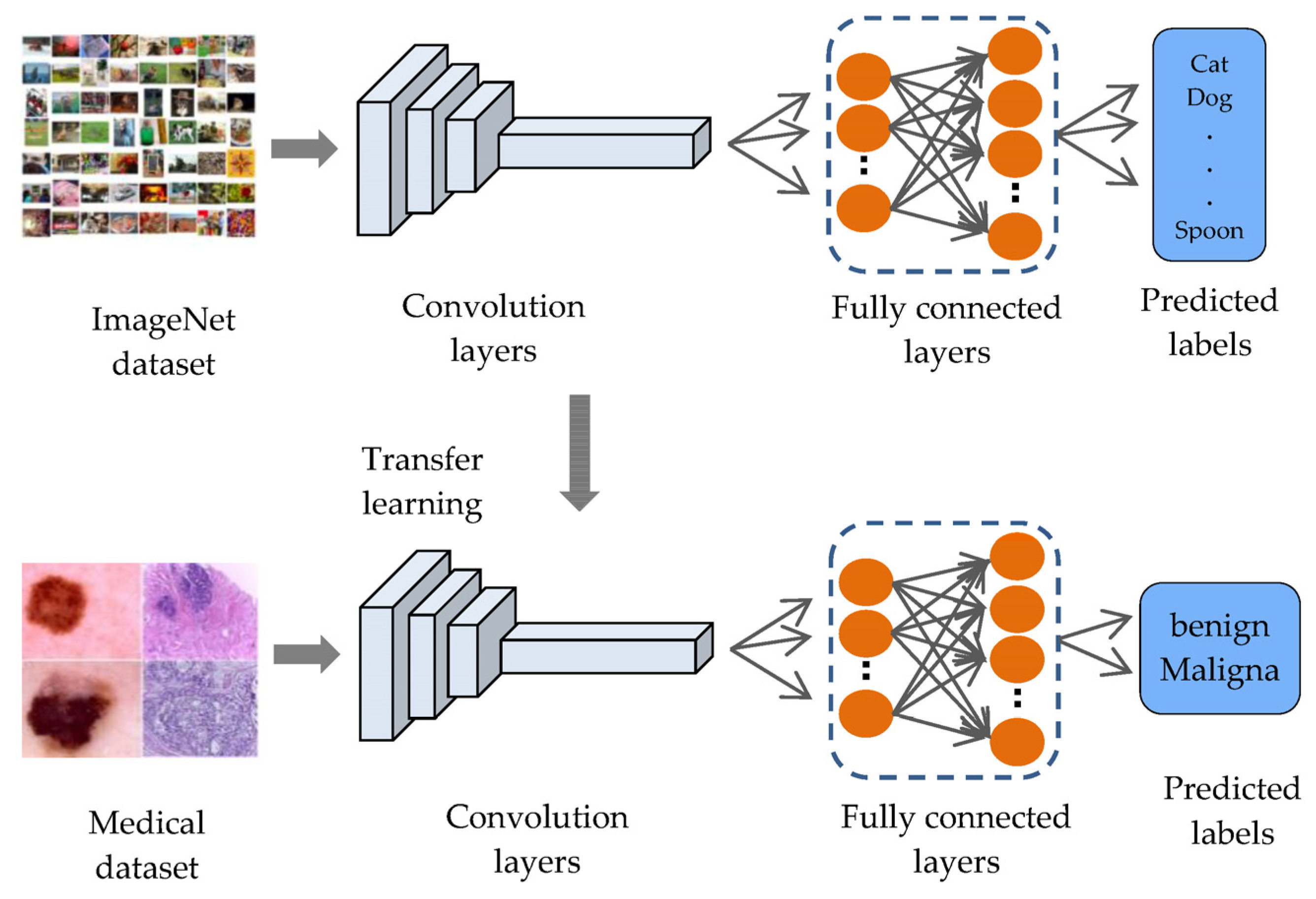

11], such as objects, animals, and humans, to solve. Many tasks are pattern recognition and computer vision. For example, applying transfer learning on ImageNet (face detection, distinguishing types of animals, or distinguishing types of flowers, etc.) can improve the performance of these tasks, because their features are like those in the ImageNet dataset. However, the ImageNet dataset does not contain medical images, resulting in a domain mismatch between the source domain and the target domain as shown in

Figure 1.

In addition, fine-tuning the models to bring the domains closer requires more images, because it increases the number of trainable layers [12], and this causes overfitting when the models are trained on few images [13]. Moreover, medical datasets contain far fewer images in the malignant class than in the benign class, which causes an imbalance between the classes of the dataset [14] and thus biases the model toward the class with the largest number of images.
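As a minimal sketch of the class imbalance described above, the following Python snippet computes how many augmented images each class would need in order to match the largest class, together with inverse-frequency class weights as an alternative remedy. The class counts are hypothetical and are not taken from any dataset used in this paper, and class weighting is shown only for comparison; it is not a technique described in this study.

```python
from collections import Counter


def augmentation_targets(labels):
    """Number of extra (augmented) images each class needs to match the largest class."""
    counts = Counter(labels)
    largest = max(counts.values())
    return {cls: largest - count for cls, count in counts.items()}


def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical imbalanced label list (illustrative counts only).
labels = ["benign"] * 900 + ["malignant"] * 100

extra_images = augmentation_targets(labels)
class_weights = balanced_class_weights(labels)

print(extra_images)
print(class_weights)
```

With these illustrative counts, the minority class would need several augmented copies per original image to reach parity, which is why the choice of augmentation techniques matters for small malignant classes.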

Deep learning has become the leading method for the examination and classification of cancerous diseases because of its accuracy, and many previous works have used deep learning approaches, especially transfer learning from pre-trained models such as LeNet, AlexNet, VGG-16, ResNet, etc. All related works are summarized in Table 1. V. Shah et al., 2020 [15], used four models (DenseNet-121, SE-ResNeXt50, ResNet50, and VGG19) to classify the ISIC2020 melanoma dataset images into malignant and benign. ResNet50 obtained the best results among the four, with a sensitivity, specificity, and accuracy of 99.7%, 55.67%, and 93.96%, respectively. However, a test with such low specificity is of limited diagnostic value, because many people without the disease will test positive and may undergo unnecessary diagnostic procedures. C. Li et al., 2021 [16], applied transfer learning to three models (EfficientNet-B4, VGG16, and ResNet50) to classify melanoma images in the ISIC2020 dataset. They used data augmentation to improve the performance and accuracy of the models; after training, EfficientNet-B4 reached an AUC-ROC score of 0.909, which is 3.5% higher than VGG16 and 2.3% higher than ResNet50. They did not investigate the effect of balancing the classes, even though the ISIC2020 dataset suffers from an imbalance between the benign and malignant classes. In addition, the proposed model suffers from overfitting. R. Zhang, 2021 [17], applied transfer learning with the EfficientNet-B6 model on the ISIC2020 dataset and obtained an AUC-ROC score of 0.917, but the model suffered from overfitting. Z. M. Arkah et al., 2021 [18], proposed a new approach to transfer learning by training the models (VGG, GoogleNet, ResNet50) from scratch on a large number of unlabeled melanoma images and then training them on a small number of labeled skin images. They applied their approach to the ISIC 2020 dataset, where ResNet50 achieved an accuracy of 93.7%. However, training models from scratch takes time and requires a very large number of images, so fine-tuning only the last layers of pre-trained models, which extract task-specific features, may lead to better results and less training time.
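The evaluation measures discussed in this paragraph can be sketched in a few lines of Python. The snippet below derives sensitivity, specificity, precision, accuracy, and F1 score from the four cells of a binary confusion matrix, and estimates how many healthy people would be flagged at a given specificity. The confusion-matrix counts and the cohort of 1000 healthy people are hypothetical; only the 55.67% specificity comes from the text above.

```python
def binary_metrics(tp, fp, tn, fn):
    """Standard binary classification measures from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)          # recall on the positive (malignant) class
    specificity = tn / (tn + fp)          # recall on the negative (benign) class
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "accuracy": accuracy,
        "f1": f1,
    }


def expected_false_positives(n_healthy, specificity):
    """Expected number of healthy people who would test positive."""
    return n_healthy * (1.0 - specificity)


# Hypothetical confusion-matrix counts, for illustration only.
metrics = binary_metrics(tp=90, fp=40, tn=60, fn=10)
print(metrics)

# Specificity of 55.67% as reported above; the cohort size is an assumption.
false_alarms = expected_false_positives(n_healthy=1000, specificity=0.5567)
print(false_alarms)
```

Under these assumptions, roughly 443 of every 1000 healthy people would receive a positive result, which illustrates why accuracy and sensitivity alone do not characterize a diagnostic model.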

L. Alzubaidi et al., 2021 [10], proposed a new model that combines recent advances, trained it from scratch on large datasets of unlabeled medical images, and then retrained the model's classifier on a small number of labeled images. They applied the model to the ISIC2020 dataset and used data augmentation techniques to increase the number of samples. They demonstrated experimentally that the proposed method can significantly improve classification performance, achieving an F1 score of 98.53%. Training from scratch performs better, but it takes a long time, requires many images to train well, and can run into the overfitting that often occurs when designing new models. R. Kaur et al., 2022 [19], proposed a DCNN that is lightweight and less complex than other recent approaches to classify melanomas with high efficiency. In their study, the model was tested on various cancer samples from the International Skin Imaging Collaboration datastores (ISIC 2016, ISIC 2017, and ISIC 2020). The proposed DCNN achieved an average accuracy of 81.41% on ISIC 2016, 88.23% on ISIC 2017, and 90.48% on ISIC 2020. The designed DCNN model can be further extended to multi-class classification to predict other types of skin cancer.

S. H. Kassani et al., 2019 [20], proposed a transfer-learning method based on the Xception model to classify the hematoxylin and eosin (H&E) stained histological breast cancer images in the ICIAR 2018 dataset. To improve performance, they used different stain normalization methods (Reinhard and Macenko). Various data augmentation methods were applied to increase the number of samples. Their proposed model had an average accuracy of 92.50%. Accuracy alone is not sufficient to evaluate a model, so other measures such as precision, recall, and F1 score should also be reported. T. Kausar et al., 2019 [21], used the VGG16 model to extract features and classify the histological images of breast cancer in the ICIAR2018 dataset. They normalized the H&E images with the Macenko method and applied various data augmentation techniques. Their model is image-based, as opposed to patch-based models, so they extracted features from the full-size 2048 × 1536 images. A softmax classifier was then trained on the extracted feature set. In their experiments, they achieved an accuracy of 94.3% for multi-category classification. The effect of dataset size, with or without data augmentation, on classification was not reported. C. P. Nguyen et al., 2019 [22], addressed the problem of the limited number of images in the ICIAR2018 target dataset. To improve classification accuracy, they performed data augmentation in the test phase. They obtained 78% accuracy in predicting the four classes of the test set. Data augmentation using GANs to generate additional data was not considered. L. Alzubaidi et al., 2021 [10], sliced all breast cancer histological images in the ICIAR-2018 dataset into 12 non-overlapping patches of 512 × 512 pixels to increase the number of images. Their method achieved an accuracy of 97.51%. Despite the good results, slicing the image into patches can lose information needed to predict the category correctly.
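The patch count quoted above follows from simple arithmetic, which the sketch below makes explicit: a 2048 × 1536 image (the full image size mentioned earlier in this paragraph) divides into a 4 × 3 grid of non-overlapping 512 × 512 patches. The function name `patch_boxes` and the box convention (left, top, right, bottom) are illustrative assumptions and do not describe the cited authors' implementation.

```python
def patch_boxes(width, height, patch):
    """Return (left, top, right, bottom) boxes of non-overlapping square patches."""
    boxes = []
    # Step through the image in strides equal to the patch size,
    # keeping only patches that fit entirely inside the image.
    for top in range(0, height - patch + 1, patch):
        for left in range(0, width - patch + 1, patch):
            boxes.append((left, top, left + patch, top + patch))
    return boxes


boxes = patch_boxes(width=2048, height=1536, patch=512)
print(len(boxes))
print(boxes[0], boxes[-1])
```

Each patch covers only one twelfth of the tissue area, so structures that span patch borders are split across inputs; this is the information loss that whole-image approaches try to avoid.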

To avoid slicing the images into small patches, which may lose information important to the histological images, data augmentation techniques are applied in this study to the entire image only, both to increase the number of samples and to extract sufficient patterns from the image.

Previous studies show that traditional transfer learning methods all depend on models pre-trained on ImageNet, which are used to extract features and to transfer the acquired knowledge to the classification of images in the new task. This is not well founded, because the ImageNet dataset contains natural images rather than medical images, so it cannot supply the features needed to support the classification of the targeted medical images. The exception is L. Alzubaidi et al., 2021, who trained a model from scratch on unlabeled medical images of the same disease and then applied transfer learning to labeled images; however, training from scratch also requires many images and takes a long time. To the best of our knowledge, this is the first work that aims to bring the source domain and the target domain closer by unfreezing the last layers, which specialize in extracting task-specific features, training them on unlabeled medical images of the same disease, and then training the classification layers on labeled images of the target task, as shown in Figure 2. This process neither requires many images nor requires training the model from scratch. In addition, most previous studies suffered from overfitting, so dropout layers with a rate of 50% are added to reduce this problem, and data augmentation techniques are used to increase the number of samples.
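The two-stage procedure outlined in this paragraph can be sketched, independently of any deep learning framework, as a plan of which layers are trainable at each stage. The layer names, the number of unfrozen layers, and the decision to freeze the whole backbone in the second stage are hypothetical choices made for illustration. This section does not specify the training objective used on the unlabeled images, so the sketch tracks trainability only.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    trainable: bool = False


def set_trainable_tail(layers, n_last):
    """Freeze every layer, then unfreeze only the last n_last layers."""
    cut = len(layers) - n_last
    for index, layer in enumerate(layers):
        layer.trainable = index >= cut
    return layers


def trainable_names(layers):
    return [layer.name for layer in layers if layer.trainable]


# Hypothetical pre-trained backbone and newly added classification head.
backbone = [Layer(f"conv_block_{i}") for i in range(1, 6)]
# The dropout layer (rate 0.5) has no weights; it is listed only for completeness.
head = [Layer("dense_features"), Layer("dropout_50"), Layer("classifier")]

# Stage 1: unfreeze the last backbone layers for training on same-disease,
# unlabeled images (n_last=2 is an assumed value).
set_trainable_tail(backbone, n_last=2)
stage1 = trainable_names(backbone)

# Stage 2: freeze the backbone again and train only the classification
# layers on the small labeled target dataset (an assumption of this sketch).
set_trainable_tail(backbone, n_last=0)
set_trainable_tail(head, n_last=len(head))
stage2 = trainable_names(backbone + head)

print(stage1)
print(stage2)
```

In a real framework the same plan would be expressed by toggling each layer's trainable flag before compiling and fitting the model for each stage.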

This study aims to bring the source domain and the target domain closer by exploiting the large quantities of unlabeled images available for the same type of disease as the target task. It proposes a novel transfer learning methodology that fine-tunes the last layers on a large number of unlabeled images of the same disease and then on a small number of labeled images for the target task, and it also addresses the problem of unbalanced classes. The most important contributions of this study are summarized below:

Four novel models were designed based on pre-trained models (VGG16, Xception, ResNet50, and MobileNetV2), with new layers added to improve prediction and classification and to reduce overfitting.

A novel approach to transfer learning, called DTL, is proposed to address both the scarcity of labeled medical images and the gap between the source domain and the target domain. The last layers of the models are fine-tuned on unlabeled medical images of the same disease, and transfer learning is then performed again on a few labeled images, which reduces the need for a large number of labeled images. This also narrows the domain gap, because the features extracted from ImageNet differ from the features extracted from the target images.

A new pre-processing method is used for labeled breast cancer images: the entire image is fed into the model without cropping it into small patches (patch-wise), in order to preserve important patterns that may be lost during cropping.

Various data augmentation techniques are applied to overcome the problem of unbalanced data and to increase the number of samples.

It is demonstrated that transfer learning from the same domain as the target dataset can significantly improve performance.

The proposed models were validated on different medical imaging applications (skin cancer images and breast cancer images) as examples, for the purpose of generalization.

The rest of the paper is organized as follows: Section 2 explains the materials and methods. Section 3 reports the results and discussions. Lastly, Section 4 concludes the paper.

4. Conclusions and Future Work

This study presented an approach to address the lack of labeled medical images by applying transfer learning to models pre-trained on ImageNet (VGG16, Xception, ResNet50, MobileNetV2). To obtain features that are closer to the target task, the last layers of the models are unfrozen and trained on a set of unlabeled images of the same type of disease, and part of the last layers is then trained on labeled images to further improve performance. Data augmentation techniques are also used to increase the number of images and to balance the classes of the dataset. The proposed approach is applied to classify the images of the ISIC2020 skin cancer dataset into two classes, benign and malignant, and to classify the images of the ICIAR 2018 breast cancer dataset into four classes: invasive carcinoma, in situ carcinoma, benign tumor, and normal tissue.

The results showed that fine-tuning the models on a large set of unlabeled images and then on a small set of labeled images improved their performance on skin cancer image classification. Without data augmentation, the performance of the VGG16 model improved by 0.28%, the Xception model by 10.96%, the ResNet50 model by 15.73%, and the MobileNetV2 model by 10.4%; with data augmentation, the VGG16 model improved by 19.66%, the Xception model by 34.76%, the ResNet50 model by 31.76%, and the MobileNetV2 model by 33.03%. The Xception model obtained the highest performance among the models when classifying skin cancer images in the ISIC2020 dataset, with an accuracy of 96.83%, precision of 96.919%, recall of 96.826%, F1-score of 96.825%, sensitivity of 99.07%, and specificity of 94.58%. To show that the proposed approach is applicable to more than one type of medical image, it was also applied to classify the images of the ICIAR 2018 breast cancer dataset. The Xception model obtained an accuracy of 99%, precision of 99.003%, recall of 98.995%, F1-score of 99%, sensitivity of 98.55%, and specificity of 99.14%. We compared this with traditional transfer learning with data augmentation, which obtained an accuracy of 82.48%, precision of 82.798%, recall of 82.840%, F1-score of 82.764%, sensitivity of 74.04%, and specificity of 85.77%. This comparison demonstrates the success of the proposed approach in all our experiments.

Suggestions for future work are as follows. For better performance, a larger number of the last layers can be unfrozen and trained on a larger number of unlabeled images. The models can also be fine-tuned by training the last layers on images similar to the target images; for example, microscopic images of colon and bone cancer could improve breast cancer image classification, because their similar histological structure yields features close to those of breast cancer. Skin cancer images could also be improved by pre-processing, such as removing hair from the image, cropping the background, and keeping only the region of interest.