Internet-of-Things-Enabled Markerless Running Gait Assessment from a Single Smartphone Camera

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Video Capture

2.3. Reference System and Data Labelling

- Initial contact (IC): the point at which the foot first contacts the ground.

- Final contact (FC): the point at which the foot leaves the ground.

- Contact time (CT): the time elapsed between IC and the following FC (i.e., the time the foot spends in contact with the ground).

- Swing time (ST): the time elapsed between an FC event and the subsequent IC (i.e., the time the foot spends off the ground).

- Step time (StT): the time elapsed between two successive IC events.

- Cadence: the number of steps taken per minute of running.

- Knee flexion angle: the angle formed by the lateral mid-shank, lateral knee joint line, and mid-lateral thigh, tracked throughout the gait cycle.

- Foot strike location: the angle of the foot relative to the ground at IC.
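Once IC and FC have been labelled, the temporal outcomes and cadence defined above reduce to differences between event timestamps. The Python sketch below is illustrative only and rests on three assumptions:

- Event times are sorted, in seconds, for one foot, and alternate IC, FC, IC, ..., starting with an IC.
- Step time is the interval between successive IC events in the sequence supplied.
- For cadence, every IC from both feet counts as one step.

The function names `temporal_outcomes` and `cadence_spm` are hypothetical.

```python
import numpy as np


def temporal_outcomes(ic_times, fc_times):
    """Contact, swing and step times (s) for one foot.

    Assumes sorted timestamps alternating IC, FC, IC, FC, ... starting with an IC.
    """
    ic = np.asarray(ic_times, dtype=float)
    fc = np.asarray(fc_times, dtype=float)
    n_ct = min(len(ic), len(fc))
    n_st = max(min(len(fc), len(ic) - 1), 0)
    contact = fc[:n_ct] - ic[:n_ct]       # IC to the FC that follows it
    swing = ic[1:n_st + 1] - fc[:n_st]    # FC to the next IC
    step = np.diff(ic)                    # between successive IC events
    return contact, swing, step


def cadence_spm(ic_times):
    """Steps per minute, counting every IC (both feet merged) as one step."""
    ic = np.sort(np.asarray(ic_times, dtype=float))
    if len(ic) < 2:
        raise ValueError("need at least two IC events")
    # len(ic) - 1 step intervals elapse between the first and last IC
    return 60.0 * (len(ic) - 1) / (ic[-1] - ic[0])


if __name__ == "__main__":
    left_ic, left_fc = [0.0, 0.7, 1.4], [0.25, 0.95]
    right_ic = [0.35, 1.05]
    ct, st, stt = temporal_outcomes(left_ic, left_fc)
    print("CT:", ct, "ST:", st, "StT:", stt)
    print("Cadence (steps/min):", cadence_spm(left_ic + right_ic))
```

Cadence is computed from the span between the first and last IC rather than from a fixed one-minute window, so it can be estimated from short video clips.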

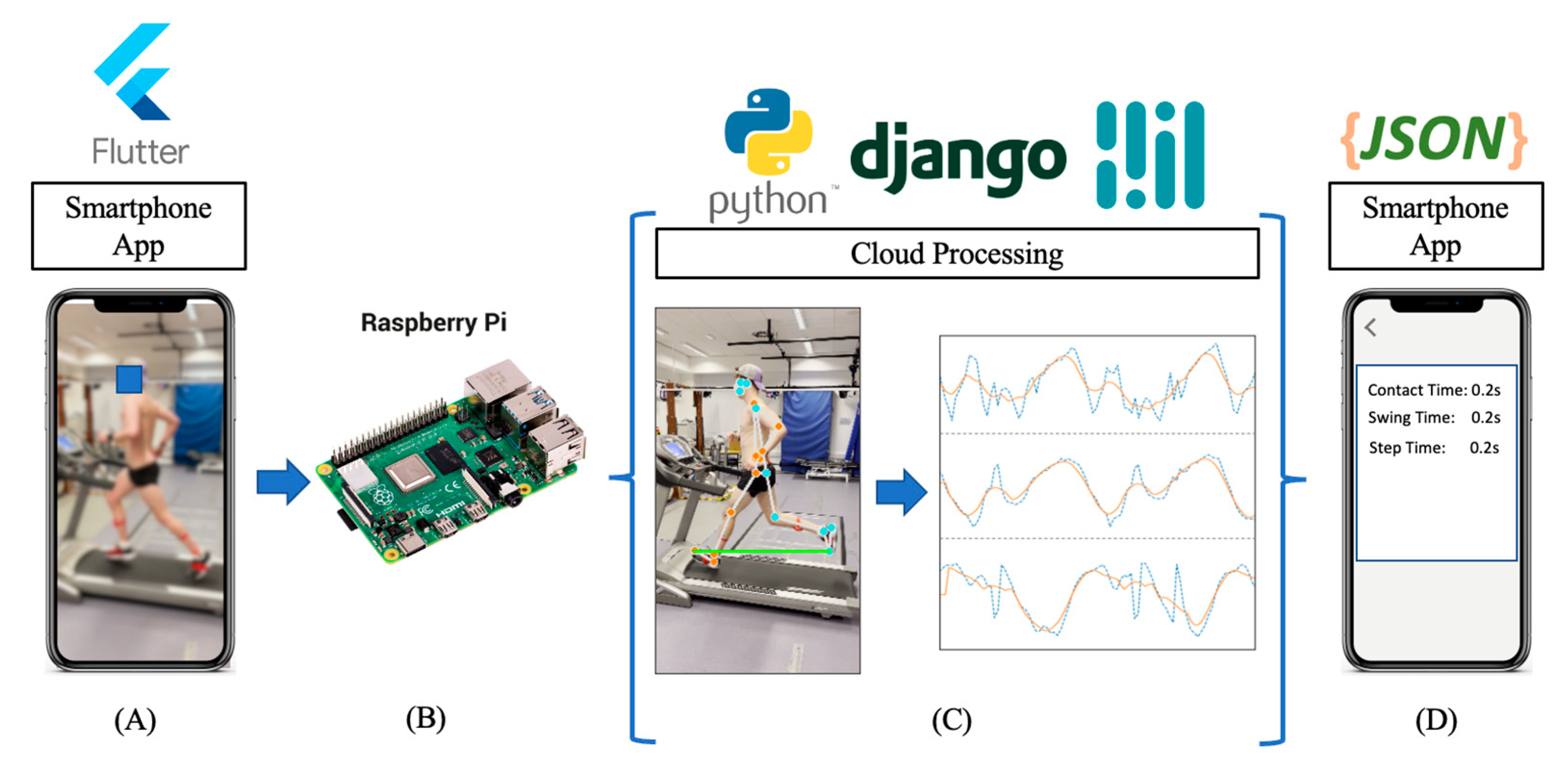

2.4. Proposed Low-Cost Approach

2.4.1. Proposed Infrastructure

2.4.2. Smartphone Application and Cloud Infrastructure

2.5. Feature Extraction

2.5.1. Data Preprocessing

2.5.2. Gait Mechanics: Identifying Key Features

- A dynamic threshold is set at the 90th percentile of the maximum leg extension angle within the signal.

- A zero-crossing gradient-maxima peak detection algorithm, similar to those used in other gait assessment applications (e.g., [41]), detects two peaks above the dynamic threshold in each gait cycle, corresponding to IC and FC.

- IC and FC are then distinguished by examining the signal minimum preceding each identified peak. Before an IC, the signal dips significantly lower than before an FC. This is because the leg reaches minimum extension during the swing phase (i.e., the smallest angle between hip, knee, and ankle; maximum flexion), whereas it remains comparatively extended during contact [40] (Figure 2).
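The three steps above can be sketched in Python as follows. The sketch is illustrative, not the authors' implementation, and makes several assumptions not stated in the text:

- The leg-extension signal is the included hip–knee–ankle angle from 2D pose landmarks (180° for a straight leg).
- The "90th percentile" threshold is read as 90% of the signal maximum (`threshold_fraction`).
- IC and FC peaks are separated by splitting the pre-peak minima at the midpoint of their range.

All function names are hypothetical.

```python
import numpy as np


def included_angle_deg(a, b, c):
    """Angle ABC in degrees at vertex b, e.g. hip-knee-ankle (180 = straight leg)."""
    ba = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    bc = np.asarray(c, dtype=float) - np.asarray(b, dtype=float)
    cos = np.dot(ba, bc) / (np.linalg.norm(ba) * np.linalg.norm(bc))
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))


def gradient_peaks(x):
    """Indices where the first difference crosses from positive to non-positive."""
    d = np.diff(x)
    return np.where((d[:-1] > 0) & (d[1:] <= 0))[0] + 1


def detect_ic_fc(extension, threshold_fraction=0.9):
    """Return (ic_idx, fc_idx) sample indices from a leg-extension angle signal."""
    x = np.asarray(extension, dtype=float)
    threshold = threshold_fraction * x.max()  # dynamic threshold (assumed reading)
    peaks = [p for p in gradient_peaks(x) if x[p] >= threshold]
    if not peaks:
        return np.array([], dtype=int), np.array([], dtype=int)
    # Minimum of the signal between the previous peak (or the start) and each peak
    starts = [0] + peaks[:-1]
    pre_min = np.array([x[s:p + 1].min() for s, p in zip(starts, peaks)])
    # Deep dips (swing-phase flexion) precede IC; shallow dips precede FC
    split = (pre_min.min() + pre_min.max()) / 2.0
    peaks = np.asarray(peaks)
    return peaks[pre_min < split], peaks[pre_min >= split]


if __name__ == "__main__":
    # Synthetic 3-cycle signal: swing dip (80), IC peak (170), stance dip (140), FC peak (168)
    keys = [0, 30, 45, 60, 100, 130, 145, 160, 200, 230, 245, 260, 300]
    vals = [80, 170, 140, 168, 80] + [170, 140, 168, 80] * 2
    signal = np.interp(np.arange(301), keys, vals)
    ic, fc = detect_ic_fc(signal)
    print("IC samples:", ic, "FC samples:", fc)
```

Under the same assumptions, a flexion angle that is 0° for a straight leg can be obtained as 180° minus the included angle. Whether the study used exactly this convention is not stated here.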

2.5.3. Temporal Outcomes and Cadence

2.5.4. Knee Flexion Angle

2.5.5. Foot Strike Angle and Location

2.6. Statistical Analysis

3. Results

3.1. Temporal Outcomes

3.2. Cadence

3.3. Knee Flexion Angle

3.4. Foot Angle and Foot Strike Location

3.4.1. Foot Angle

3.4.2. Foot Strike Location

4. Discussion

4.1. Pose Estimation Performance

4.1.1. Temporal Outcomes

4.1.2. Knee Flexion and Foot Strike Angle

5. Limitations and Future Work

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Shipway, R.; Holloway, I. Running free: Embracing a healthy lifestyle through distance running. Perspect. Public Health 2010, 130, 270–276.

2. Dugan, S.A.; Bhat, K.P. Biomechanics and analysis of running gait. Phys. Med. Rehabil. Clin. 2005, 16, 603–621.

3. Agresta, C. Running Gait Assessment. In Clinical Care of the Runner; Elsevier: Amsterdam, The Netherlands, 2020; pp. 61–73.

4. Daoud, A.I.; Geissler, G.J.; Wang, F.; Saretsky, J.; Daoud, Y.A.; Lieberman, D.E. Foot strike and injury rates in endurance runners: A retrospective study. Med. Sci. Sport. Exerc. 2012, 44, 1325–1334.

5. Hayes, P.; Caplan, N. Foot strike patterns and ground contact times during high-calibre middle-distance races. J. Sport. Sci. 2012, 30, 1275–1283.

6. Ardigò, L.; Lafortuna, C.; Minetti, A.; Mognoni, P.; Saibene, F. Metabolic and mechanical aspects of foot landing type, forefoot and rearfoot strike, in human running. Acta Physiol. Scand. 1995, 155, 17–22.

7. Reinking, M.F.; Dugan, L.; Ripple, N.; Schleper, K.; Scholz, H.; Spadino, J.; Stahl, C.; McPoil, T.G. Reliability of two-dimensional video-based running gait analysis. Int. J. Sport. Phys. Ther. 2018, 13, 453.

8. Higginson, B.K. Methods of running gait analysis. Curr. Sport. Med. Rep. 2009, 8, 136–141.

9. Benson, L.C.; Räisänen, A.M.; Clermont, C.A.; Ferber, R. Is This the Real Life, or Is This Just Laboratory? A Scoping Review of IMU-Based Running Gait Analysis. Sensors 2022, 22, 1722.

10. Bailey, G.; Harle, R. Assessment of foot kinematics during steady state running using a foot-mounted IMU. Procedia Eng. 2014, 72, 32–37.

11. Zrenner, M.; Gradl, S.; Jensen, U.; Ullrich, M.; Eskofier, B.M. Comparison of different algorithms for calculating velocity and stride length in running using inertial measurement units. Sensors 2018, 18, 4194.

12. Albert, J.A.; Owolabi, V.; Gebel, A.; Brahms, C.M.; Granacher, U.; Arnrich, B. Evaluation of the pose tracking performance of the azure kinect and kinect v2 for gait analysis in comparison with a gold standard: A pilot study. Sensors 2020, 20, 5104.

13. Ye, M.; Yang, C.; Stankovic, V.; Stankovic, L.; Cheng, S. Gait phase classification for in-home gait assessment. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; pp. 1524–1529.

14. Anwary, A.R.; Yu, H.; Vassallo, M. Optimal foot location for placing wearable IMU sensors and automatic feature extraction for gait analysis. IEEE Sens. J. 2018, 18, 2555–2567.

15. Tan, T.; Strout, Z.A.; Shull, P.B. Accurate impact loading rate estimation during running via a subject-independent convolutional neural network model and optimal IMU placement. IEEE J. Biomed. Health Inform. 2020, 25, 1215–1222.

16. Young, F.; Mason, R.; Wall, C.; Morris, R.; Stuart, S.; Godfrey, A. Examination of a Foot Mounted IMU-based Methodology for Running Gait Assessment. Front. Sport. Act. Living 2022, 4, 956889.

17. Cao, Z.; Simon, T.; Wei, S.-E.; Sheikh, Y. Realtime multi-person 2d pose estimation using part affinity fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299.

18. Preis, J.; Kessel, M.; Werner, M.; Linnhoff-Popien, C. Gait recognition with kinect. In Proceedings of the 1st International Workshop on Kinect in Pervasive Computing, Newcastle, UK, 18–22 June 2012; pp. 1–4.

19. Springer, S.; Yogev Seligmann, G. Validity of the kinect for gait assessment: A focused review. Sensors 2016, 16, 194.

20. D’Antonio, E.; Taborri, J.; Palermo, E.; Rossi, S.; Patane, F. A markerless system for gait analysis based on OpenPose library. In Proceedings of the 2020 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Dubrovnik, Croatia, 25–28 May 2020; pp. 1–6.

21. Stenum, J.; Rossi, C.; Roemmich, R.T. Two-dimensional video-based analysis of human gait using pose estimation. PLoS Comput. Biol. 2021, 17, e1008935.

22. Viswakumar, A.; Rajagopalan, V.; Ray, T.; Parimi, C. Human gait analysis using OpenPose. In Proceedings of the 2019 Fifth International Conference on Image Information Processing (ICIIP), Shimla, India, 15–17 November 2019; pp. 310–314.

23. Tay, C.Z.; Lim, K.H.; Phang, J.T.S. Markerless gait estimation and tracking for postural assessment. Multimed. Tools Appl. 2022, 81, 12777–12794.

24. McCann, D.J.; Higginson, B.K. Training to maximize economy of motion in running gait. Curr. Sport. Med. Rep. 2008, 7, 158–162.

25. Moore, I.S.; Jones, A.M.; Dixon, S.J. Mechanisms for improved running economy in beginner runners. Med. Sci. Sport. Exerc. 2012, 44, 1756–1763.

26. Mason, R.; Pearson, L.T.; Barry, G.; Young, F.; Lennon, O.; Godfrey, A.; Stuart, S. Wearables for Running Gait Analysis: A Systematic Review. Sport. Med. 2022, 53, 241–268.

27. Bazarevsky, V.; Grishchenko, I.; Raveendran, K.; Zhu, T.; Zhang, F.; Grundmann, M. Blazepose: On-device real-time body pose tracking. arXiv 2020, arXiv:2006.10204.

28. Mroz, S.; Baddour, N.; McGuirk, C.; Juneau, P.; Tu, A.; Cheung, K.; Lemaire, E. Comparing the Quality of Human Pose Estimation with BlazePose or OpenPose. In Proceedings of the 2021 4th International Conference on Bio-Engineering for Smart Technologies (BioSMART), Paris, France, 8–10 December 2021; pp. 1–4.

29. Deloitte. Digital Consumer Trends: The UK Cut. Available online: https://www2.deloitte.com/uk/en/pages/technology-media-and-telecommunications/articles/digital-consumer-trends.html (accessed on 8 December 2022).

30. Gupta, A.; Chakraborty, C.; Gupta, B. Medical information processing using smartphone under IoT framework. In Energy Conservation for IoT Devices; Springer: Berlin/Heidelberg, Germany, 2019; pp. 283–308.

31. Roca-Dols, A.; Losa-Iglesias, M.E.; Sánchez-Gómez, R.; Becerro-de-Bengoa-Vallejo, R.; López-López, D.; Rodríguez-Sanz, D.; Martínez-Jiménez, E.M.; Calvo-Lobo, C. Effect of the cushioning running shoes in ground contact time of phases of gait. J. Mech. Behav. Biomed. Mater. 2018, 88, 196–200.

32. Pfister, A.; West, A.M.; Bronner, S.; Noah, J.A. Comparative abilities of Microsoft Kinect and Vicon 3D motion capture for gait analysis. J. Med. Eng. Technol. 2014, 38, 274–280.

33. Simoes, M.A. Feasibility of Wearable Sensors to Determine Gait Parameters; University of South Florida: Tampa, FL, USA, 2011.

34. Wei, S.-E.; Ramakrishna, V.; Kanade, T.; Sheikh, Y. Convolutional pose machines. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4724–4732.

35. Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 172–186.

36. Nicola, T.L.; Jewison, D.J. The anatomy and biomechanics of running. Clin. Sport. Med. 2012, 31, 187–201.

37. Bradski, G.; Kaehler, A. OpenCV. Dr. Dobb’s J. Softw. Tools 2000, 3, 120.

38. Bressert, E. SciPy and NumPy: An Overview for Developers; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2012.

39. McKinney, W. pandas: A foundational Python library for data analysis and statistics. Python High Perform. Sci. Comput. 2011, 14, 1–9.

40. Dicharry, J. Kinematics and kinetics of gait: From lab to clinic. Clin. Sport. Med. 2010, 29, 347–364.

41. Trojaniello, D.; Ravaschio, A.; Hausdorff, J.M.; Cereatti, A. Comparative assessment of different methods for the estimation of gait temporal parameters using a single inertial sensor: Application to elderly, post-stroke, Parkinson’s disease and Huntington’s disease subjects. Gait Posture 2015, 42, 310–316.

42. Larson, P.; Higgins, E.; Kaminski, J.; Decker, T.; Preble, J.; Lyons, D.; McIntyre, K.; Normile, A. Foot strike patterns of recreational and sub-elite runners in a long-distance road race. J. Sport. Sci. 2011, 29, 1665–1673.

43. Koo, T.K.; Li, M.Y. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J. Chiropr. Med. 2016, 15, 155–163.

44. García-Pinillos, F.; Jaén-Carrillo, D.; Hermoso, V.S.; Román, P.L.; Delgado, P.; Martinez, C.; Carton, A.; Seruendo, L.R. Agreement Between Spatiotemporal Gait Parameters Measured by a Markerless Motion Capture System and Two Reference Systems—A Treadmill-Based Photoelectric Cell and High-Speed Video Analyses: Comparative Study. JMIR Mhealth Uhealth 2020, 8, e19498.

45. Di Michele, R.; Merni, F. The concurrent effects of strike pattern and ground-contact time on running economy. J. Sci. Med. Sport 2014, 17, 414–418.

46. Padulo, J.; Chamari, K.; Ardigò, L.P. Walking and running on treadmill: The standard criteria for kinematics studies. Muscles Ligaments Tendons J. 2014, 4, 159.

47. Young, F.; Stuart, S.; Morris, R.; Downs, C.; Coleman, M.; Godfrey, A. Validation of an inertial-based contact and swing time algorithm for running analysis from a foot mounted IoT enabled wearable. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Mexico City, Mexico, 1–5 November 2021; pp. 6818–6821.

48. Shirmohammadi, S.; Ferrero, A. Camera as the instrument: The rising trend of vision based measurement. IEEE Instrum. Meas. Mag. 2014, 17, 41–47.

49. Powell, D.; Stuart, S.; Godfrey, A. Investigating the use of an open source wearable as a tool to assess sports related concussion (SRC). Physiotherapy 2021, 113, e141–e142.

50. Sárándi, I.; Linder, T.; Arras, K.O.; Leibe, B. How robust is 3D human pose estimation to occlusion? arXiv 2018, arXiv:1808.09316.

51. Tsai, Y.-S.; Hsu, L.-H.; Hsieh, Y.-Z.; Lin, S.-S. The real-time depth estimation for an occluded person based on a single image and OpenPose method. Mathematics 2020, 8, 1333.

52. Angelini, F.; Fu, Z.; Long, Y.; Shao, L.; Naqvi, S.M. 2D pose-based real-time human action recognition with occlusion-handling. IEEE Trans. Multimed. 2019, 22, 1433–1446.

53. Cheng, Y.; Yang, B.; Wang, B.; Tan, R.T. 3d human pose estimation using spatio-temporal networks with explicit occlusion training. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 10631–10638.

54. Young, F.; Coulby, G.; Watson, I.; Downs, C.; Stuart, S.; Godfrey, A. Just find it: The Mymo approach to recommend running shoes. IEEE Access 2020, 8, 109791–109800.

55. Cavanagh, P.R.; Williams, K.R. The effect of stride length variation on oxygen uptake during distance running. Med. Sci. Sport. Exerc. 1982, 14, 30–35.

56. Mercer, J.A.; Vance, J.; Hreljac, A.; Hamill, J. Relationship between shock attenuation and stride length during running at different velocities. Eur. J. Appl. Physiol. 2002, 87, 403–408.

Left Foot

| Outcome | Mean Predicted | Mean Reference | Mean Error | ICC(2,1) | r |
|---|---|---|---|---|---|
| Contact Time (s) | 0.232 | 0.243 | 0.011 | 0.862 | 0.858 |
| Swing Time (s) | 0.434 | 0.420 | 0.014 | 0.837 | 0.883 |
| Step Time (s) | 0.682 | 0.671 | 0.010 | 0.811 | 0.845 |

| Outcome | Min Predicted | Max Predicted | Min Reference | Max Reference | ICC(2,1) | r |
|---|---|---|---|---|---|---|
| Knee Flexion (°) | 19.9 | 98.9 | 1.4 | 103.1 | 0.961 | 0.975 |
| Foot Strike Loc. (°) | −86.2 | 5.6 | −96.7 | 20.3 | 0.981 | 0.980 |

Right Foot

| Outcome | Mean Predicted | Mean Reference | Mean Error | ICC(2,1) | r |
|---|---|---|---|---|---|
| Contact Time (s) | 0.220 | 0.239 | 0.014 | 0.861 | 0.854 |
| Swing Time (s) | 0.457 | 0.423 | 0.033 | 0.821 | 0.781 |
| Step Time (s) | 0.689 | 0.665 | 0.024 | 0.751 | 0.769 |

| Outcome | Min Predicted | Max Predicted | Min Reference | Max Reference | ICC(2,1) | r |
|---|---|---|---|---|---|---|
| Knee Flexion (°) | 30.4 | 91.1 | 12.5 | 97.1 | 0.979 | 0.982 |
| Foot Strike Loc. (°) | −124.0 | −51.0 | −114.4 | 3.3 | 0.844 | 0.911 |
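The tables report agreement between the smartphone pipeline and the reference labels as ICC(2,1) and r. The sketch below shows how both statistics can be computed from paired values, under these assumptions:

- ICC(2,1) takes the Shrout and Fleiss form: two-way random effects, absolute agreement, single measure. The ICC forms are summarised in [43].
- r is Pearson's correlation.
- The predicted and reference values are treated as two raters.

`icc_2_1` and `pearson_r` are hypothetical helper names, not the study's code.

```python
import numpy as np


def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single measure.

    ratings: array of shape (n_subjects, k_raters), e.g. columns = [predicted, reference].
    """
    y = np.asarray(ratings, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_rows = k * np.sum((y.mean(axis=1) - grand) ** 2)  # between subjects
    ss_cols = n * np.sum((y.mean(axis=0) - grand) ** 2)  # between raters
    ss_total = np.sum((y - grand) ** 2)
    ss_err = ss_total - ss_rows - ss_cols                 # residual
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )


def pearson_r(x, y):
    """Pearson correlation between paired measurements."""
    return float(np.corrcoef(x, y)[0, 1])


if __name__ == "__main__":
    reference = np.array([0.20, 0.22, 0.24, 0.26, 0.28])
    predicted = reference + 0.02  # perfectly correlated but systematically biased
    print("r:", pearson_r(predicted, reference))
    print("ICC(2,1):", icc_2_1(np.column_stack([predicted, reference])))
```

Unlike r, ICC(2,1) is sensitive to a systematic offset between the two measurement systems. In the usage example, the constant bias leaves r at 1 but lowers ICC(2,1).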

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Young, F.; Mason, R.; Morris, R.; Stuart, S.; Godfrey, A. Internet-of-Things-Enabled Markerless Running Gait Assessment from a Single Smartphone Camera. Sensors 2023, 23, 696. https://doi.org/10.3390/s23020696
