A Simple Way to Reduce 3D Model Deformation in Smartphone Photogrammetry

Abstract

1. Introduction

1.1. Motivation

1.2. Camera Calibration and on-the-Job Calibration in Photogrammetry

1.3. Smartphone Cameras vs. DSLR

1.4. Overview of Smartphone Photogrammetry Applications

2. Materials and Methods

2.1. Research Aim

2.2. IO Stability of Smartphone Cameras

2.3. 3D Model Deformation

3. Results

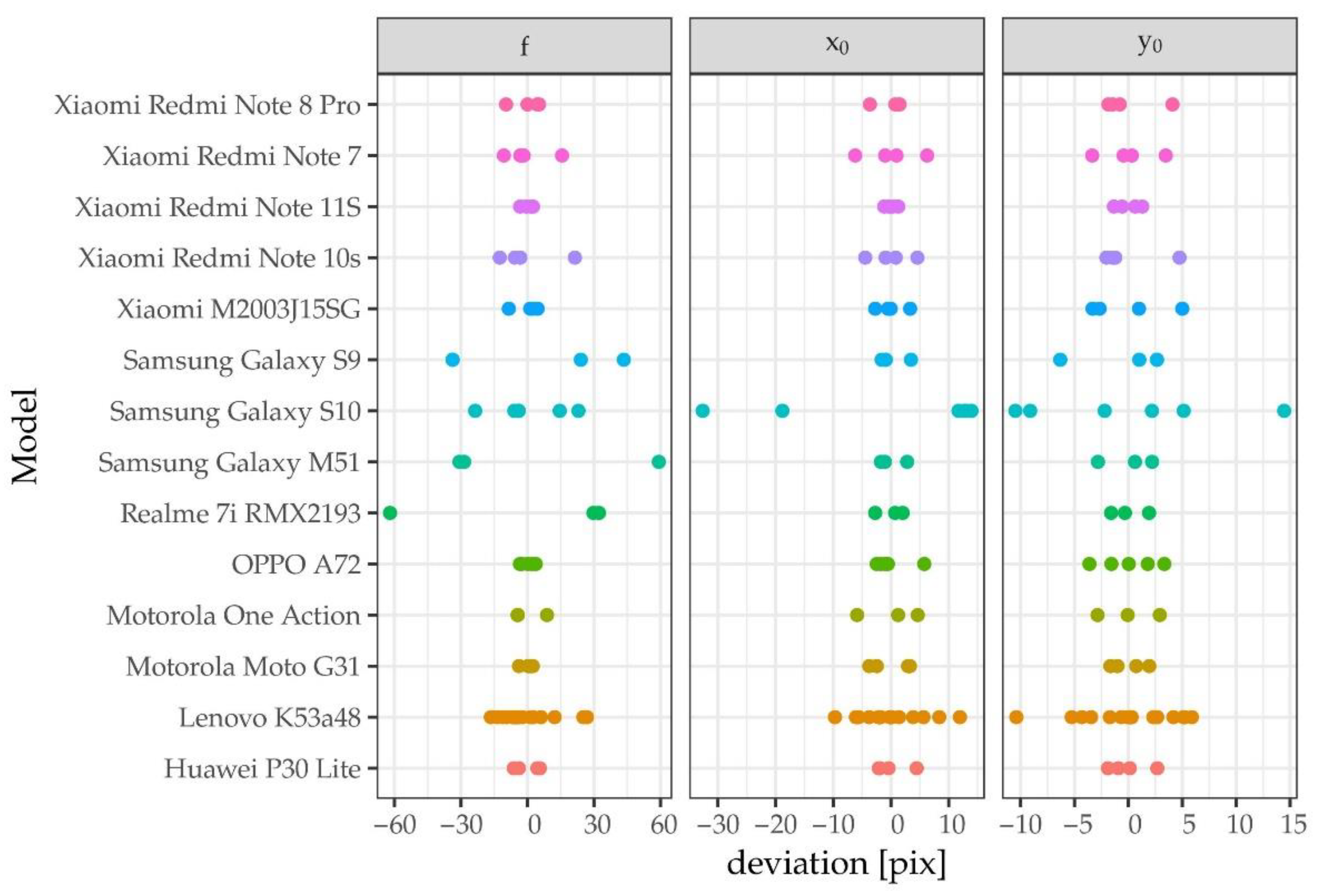

3.1. IO Stability of Smartphone Cameras

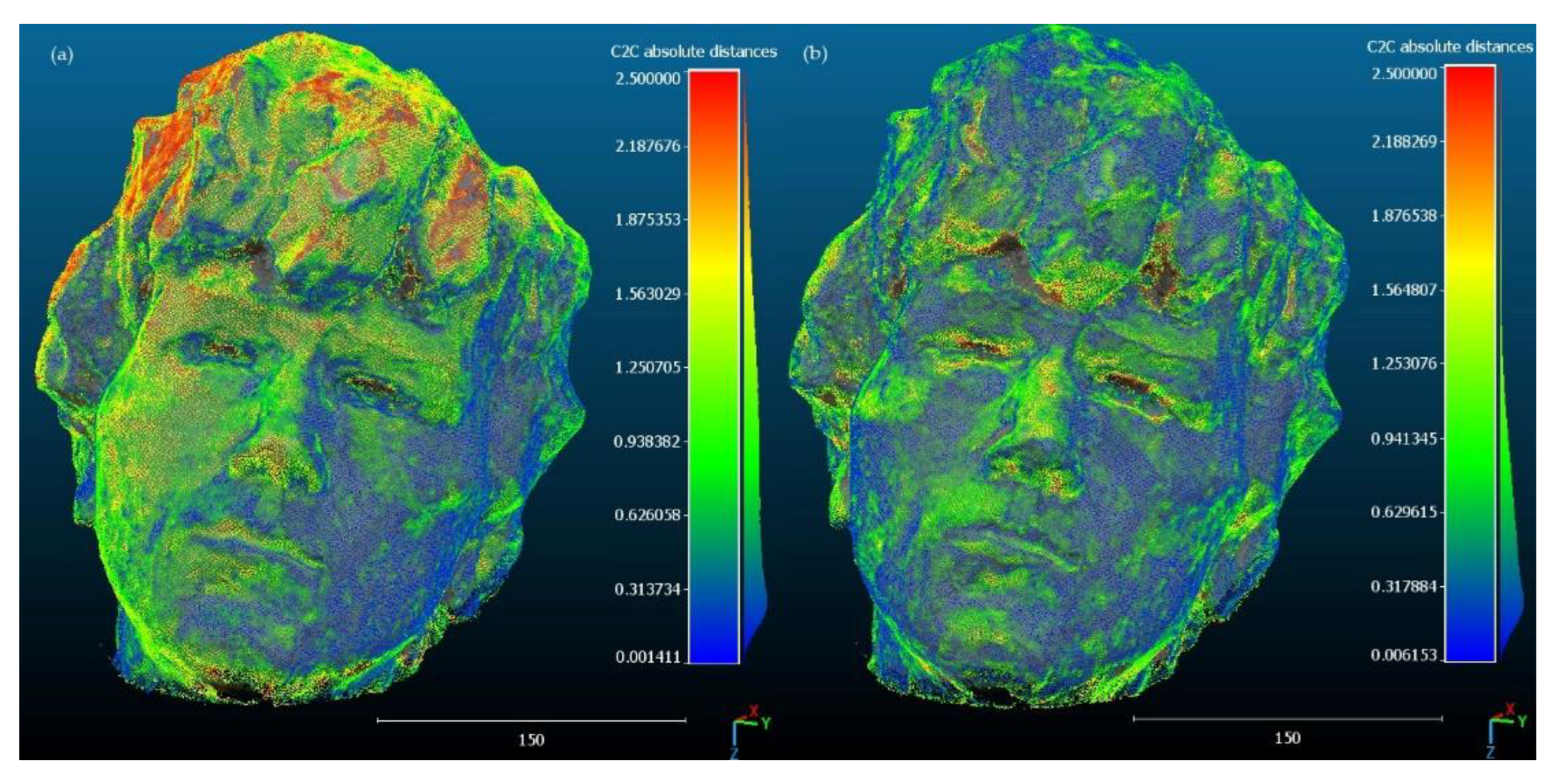

3.2. 3D Model Deformations

4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Luhmann, T.; Robson, S.; Kyle, S.; Boehm, J. Close-Range Photogrammetry and 3D Imaging, 3rd ed.; Walter de Gruyter GmbH: Berlin, Germany, 2020.

2. Brown, D.C. Close-Range Camera Calibration. Photogramm. Eng. 1971, 37, 855–866.

3. Kraus, K. Photogrammetry, Volume 2: Advanced Methods and Applications, 4th ed.; Dümmler: Bonn, Germany, 1997.

4. Kolecki, J.; Kuras, P.; Pastucha, E.; Pyka, K.; Sierka, M. Calibration of Industrial Cameras for Aerial Photogrammetric Mapping. Remote Sens. 2020, 12, 3130.

5. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

6. Remondino, F.; Fraser, C. ISPRS Commission V Symposium: Image Engineering and Vision Metrology. Photogramm. Rec. 2006, 36, 266–272.

7. Luhmann, T.; Fraser, C.; Maas, H.G. Sensor modelling and camera calibration for close-range photogrammetry. ISPRS J. Photogramm. Remote Sens. 2016, 115, 37–46.

8. Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

9. Pollefeys, M.; Van Gool, L.; Vergauwen, M.; Verbiest, F.; Cornelis, K.; Tops, J.; Koch, R. Visual Modeling with a Hand-Held Camera. Int. J. Comput. Vis. 2004, 59, 207–232.

10. Mohr, R.; Quan, L.; Veillon, F. Relative 3D Reconstruction Using Multiple Uncalibrated Images. Int. J. Robot. Res. 1995, 14, 619–632.

11. Zhou, Y.; Rupnik, E.; Meynard, C.; Thom, C.; Pierrot-Deseilligny, M. Simulation and Analysis of Photogrammetric UAV Image Blocks—Influence of Camera Calibration Error. Remote Sens. 2019, 12, 22.

12. Huang, W.; Jiang, S.; Jiang, W. Camera Self-Calibration with GNSS Constrained Bundle Adjustment for Weakly Structured Long Corridor UAV Images. Remote Sens. 2021, 13, 4222.

13. Hirschmüller, H. Accurate and Efficient Stereo Processing by Semi-Global Matching and Mutual Information. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; Volume 2, pp. 807–814.

14. Furukawa, Y.; Ponce, J. Accurate, Dense, and Robust Multiview Stereopsis. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1362–1376.

15. OpenCV. Open Source Computer Vision Library. 2015. Available online: https://opencv.org (accessed on 14 December 2022).

16. OpenDroneMap [Computer Software]. 2017. Available online: https://opendronemap.org (accessed on 14 December 2022).

17. AliceVision. Meshroom: A 3D Reconstruction Software. 2018. Available online: https://github.com/alicevision/Meshroom (accessed on 14 December 2022).

18. Rupnik, E.; Daakir, M.; Deseilligny, M.P. MicMac—A free, open-source solution for photogrammetry. Open Geospat. Data Softw. Stand. 2017, 2, 14.

19. Pix4D SA. Available online: https://www.pix4d.com (accessed on 14 December 2022).

20. Agisoft Metashape Professional (Version 1.6.3) (Software). Available online: https://www.agisoft.com (accessed on 14 December 2022).

21. Blahnik, V.; Schindelbeck, O. Smartphone imaging technology and its applications. Adv. Opt. Technol. 2021, 10, 145–232.

22. Kawahito, S.; Seo, M.-W. Noise Reduction Effect of Multiple-Sampling-Based Signal-Readout Circuits for Ultra-Low Noise CMOS Image Sensors. Sensors 2016, 16, 1867.

23. Brandolini, F.; Cremaschi, M.; Zerboni, A.; Degli Esposti, M.; Mariani, G.S.; Lischi, S. SfM-photogrammetry for fast recording of archaeological features in remote areas. AeC 2020, 31, 33–45.

24. Liba, N. Making 3D Models Using Close-Range Photogrammetry: Comparison of Cameras and Software. In Proceedings of the 19th International Multidisciplinary Scientific GeoConference SGEM 2019, Sofia, Bulgaria, 30 June–6 July 2019; pp. 561–568.

25. Apollonio, F.; Fantini, F.; Garagnani, S.; Gaiani, M. A Photogrammetry-Based Workflow for the Accurate 3D Construction and Visualization of Museums Assets. Remote Sens. 2021, 13, 486.

26. Gaiani, M.; Apollonio, F.I.; Fantini, F. Evaluating Smartphones Color Fidelity and Metric Accuracy for the 3D Documentation of Small Artifacts. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W11, 539–547.

27. Eker, R.; Elvanoglu, N.; Ucar, Z.; Bilici, E.; Aydın, A. 3D modelling of a historic windmill: PPK-aided terrestrial photogrammetry vs smartphone app. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, XLIII-B2-2022, 787–792.

28. da Purificação, N.R.S.; Henrique, V.B.; Amorim, A.; Carneiro, A.; de Souza, G.H.B. Reconstruction and storage of a low-cost three-dimensional model for a cadastre of historical and artistic heritage. Int. J. Build. Pathol. Adapt. 2022; ahead-of-print.

29. Khalloufi, H.; Azough, A.; Ennahnahi, N.; Kaghat, F.Z. Low-cost terrestrial photogrammetry for 3D modeling of historic sites: A case study of the Marinids’ royal necropolis city of Fez, Morocco. Mediterr. Archaeol. Archaeom. 2020, 20, 257–272.

30. Yilmazturk, F.; Gurbak, A.E. Geometric Evaluation of Mobile-Phone Camera Images for 3D Information. Int. J. Opt. 2019, 2019, 8561380.

31. Inzerillo, L. Super-Resolution Images on Mobile Smartphone Aimed at 3D Modeling. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, XLVI-2/W1-2022, 259–266.

32. Shih, N.-J.; Wu, Y.-C. AR-Based 3D Virtual Reconstruction of Brick Details. Remote Sens. 2022, 14, 748.

33. Shih, N.-J.; Wu, Y.-C. An AR-assisted Comparison for the Case Study of the Reconstructed Components in two Old Brick Warehouses. In Proceedings of the ISCA 34th International Conference on Computer Applications in Industry and Engineering, Online, 11–13 October 2021; Volume 79, pp. 150–158.

34. Pepe, M.; Costantino, D. Techniques, Tools, Platforms and Algorithms in Close Range Photogrammetry in Building 3D Model and 2D Representation of Objects and Complex Architectures. Comput. Aided. Des. Appl. 2020, 18, 42–65.

35. Pan, X.; Hu, Y.G.; Hou, M.L.; Zheng, X. Research on Information Acquisition and Accuracy Analysis of Ancient Architecture Plaque with Common Smart Phone. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-4/W20, 65–70.

36. Lewis, M.; Oswald, C. Can an Inexpensive Phone App Compare to Other Methods When It Comes to 3D Digitization of Ship Models. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W10, 107–111.

37. Cardaci, A.; Versaci, A.; Azzola, P. 3D Low-Cost Acquisition for the Knowledge of Cultural Heritage: The Case Study of the Bust of San Nicola Da Tolentino. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W17, 93–100.

38. Boboc, R.G.; Gîrbacia, F.; Postelnicu, C.C.; Gîrbacia, T. Evaluation of Using Mobile Devices for 3D Reconstruction of Cultural Heritage Artifacts. In VR Technologies in Cultural Heritage; Communications in Computer and Information Science; Duguleană, M., Carrozzino, M., Gams, M., Tanea, I., Eds.; Springer International Publishing: Cham, Switzerland, 2019; Volume 904, pp. 46–59.

39. Scianna, A.; La Guardia, M. 3D Virtual CH Interactive Information Systems for a Smart Web Browsing Experience for Desktop PCs and Mobile Devices. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII-2, 1053–1059.

40. Shults, R. New Opportunities of Low-Cost Photogrammetry for Culture Heritage Preservation. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-5/W1, 481–486.

41. Shults, R.; Krelshtein, P.; Kravchenko, I.; Rogoza, O.; Kyselov, O. Low-cost Photogrammetry for Culture Heritage. In Proceedings of the 10th International Conference “Environmental Engineering”, Vilnius, Lithuania, 27–28 April 2017; VGTU Technika (Vilnius Gediminas Technical University): Vilnius, Lithuania, 2017.

42. Sirmacek, B.; Lindenbergh, R.; Wang, J. Quality Assessment and Comparison of Smartphone and Leica C10 Laser Scanner Based Point Clouds. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B5, 581–586.

43. Somogyi, A.; Barsi, A.; Molnar, B.; Lovas, T. Crowdsourcing Based 3D Modeling. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B5, 587–590.

44. Sirmacek, B.; Lindenbergh, R. Accuracy assessment of building point clouds automatically generated from iPhone images. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, XL-5, 547–552.

45. Dussel, N.; Fuchs, R.; Reske, A.W.; Neumuth, T. Automated 3D thorax model generation using handheld video-footage. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1707–1716.

46. Stark, E.; Haffner, O.; Kučera, E. Low-Cost Method for 3D Body Measurement Based on Photogrammetry Using Smartphone. Electronics 2022, 11, 1048.

47. Matuzevičius, D.; Serackis, A. Three-Dimensional Human Head Reconstruction Using Smartphone-Based Close-Range Video Photogrammetry. Appl. Sci. 2021, 12, 229.

48. Shilov, L.; Shanshin, S.; Romanov, A.; Fedotova, A.; Kurtukova, A.; Kostyuchenko, E.; Sidorov, I. Reconstruction of a 3D Human Foot Shape Model Based on a Video Stream Using Photogrammetry and Deep Neural Networks. Future Internet 2021, 13, 315.

49. Cullen, S.; Mackay, R.; Mohagheghi, A.; Du, X. The Use of Smartphone Photogrammetry to Digitise Transtibial Sockets: Optimisation of Method and Quantitative Evaluation of Suitability. Sensors 2021, 21, 8405.

50. Gurses, M.E.; Gungor, A.; Hanalioglu, S.; Yaltirik, C.K.; Postuk, H.C.; Berker, M.; Türe, U. Qlone®: A Simple Method to Create 360-Degree Photogrammetry-Based 3-Dimensional Model of Cadaveric Specimens. Oper. Neurosurg. 2021, 21, E488–E493.

51. Farook, T.H.; Bin Jamayet, N.; Asif, J.A.; Din, A.S.; Mahyuddin, M.N.; Alam, M.K. Development and virtual validation of a novel digital workflow to rehabilitate palatal defects by using smartphone-integrated stereophotogrammetry (SPINS). Sci. Rep. 2021, 11, 8469.

52. Foltynski, P.; Ciechanowska, A.; Ladyzynski, P. Wound surface area measurement methods. Biocybern. Biomed. Eng. 2021, 41, 1454–1465.

53. Bridger, C.A.; Douglass, M.J.J.; Reich, P.D.; Santos, A.M.C. Evaluation of camera settings for photogrammetric reconstruction of humanoid phantoms for EBRT bolus and HDR surface brachytherapy applications. Phys. Eng. Sci. Med. 2021, 44, 457–471.

54. Gallardo, Y.N.; Salazar-Gamarra, R.; Bohner, L.; De Oliveira, J.I.; Dib, L.L.; Sesma, N. Evaluation of the 3D error of 2 face-scanning systems: An in vitro analysis. J. Prosthet. Dent. 2021, S0022391321003681.

55. Pavone, C.; Abrate, A.; Altomare, S.; Vella, M.; Serretta, V.; Simonato, A.; Callieri, M. Is Kelami's Method Still Useful in the Smartphone Era? The Virtual 3-Dimensional Reconstruction of Penile Curvature in Patients with Peyronie's Disease: A Pilot Study. J. Sex. Med. 2021, 18, 209–214.

56. Matsuo, M.; Mine, Y.; Kawahara, K.; Murayama, T. Accuracy Evaluation of a Three-Dimensional Model Generated from Patient-Specific Monocular Video Data for Maxillofacial Prosthetic Rehabilitation: A Pilot Study. J. Prosthodont. 2020, 29, 712–717.

57. Trujillo-Jiménez, M.A.; Navarro, P.; Pazos, B.; Morales, L.; Ramallo, V.; Paschetta, C.; De Azevedo, S.; Ruderman, A.; Pérez, O.; Delrieux, C.; et al. body2vec: 3D Point Cloud Reconstruction for Precise Anthropometry with Handheld Devices. J. Imaging 2020, 6, 94.

58. Barbero-García, I.; Lerma, J.L.; Mora-Navarro, G. Fully automatic smartphone-based photogrammetric 3D modelling of infant’s heads for cranial deformation analysis. ISPRS J. Photogramm. Remote Sens. 2020, 166, 268–277.

59. Barbero-García, I.; Lerma, J.L.; Miranda, P.; Marqués-Mateu, Á. Smartphone-based photogrammetric 3D modelling assessment by comparison with radiological medical imaging for cranial deformation analysis. Measurement 2019, 131, 372–379.

60. Barbero-García, I.; Cabrelles, M.; Lerma, J.L.; Marqués-Mateu, Á. Smartphone-based close-range photogrammetric assessment of spherical objects. Photogramm. Rec. 2018, 33, 283–299.

61. Hernandez, A.; Lemaire, E. A smartphone photogrammetry method for digitizing prosthetic socket interiors. Prosthetics Orthot. Int. 2017, 41, 210–214.

62. Barbero-García, I.; Lerma, J.L.; Marqués-Mateu, Á.; Miranda, P. Low-Cost Smartphone-Based Photogrammetry for the Analysis of Cranial Deformation in Infants. World Neurosurg. 2017, 102, 545–554.

63. Koban, K.C.; Leitsch, S.; Holzbach, T.; Volkmer, E.; Metz, P.M.; Giunta, R.E. 3D Bilderfassung und Analyse in der Plastischen Chirurgie mit Smartphone und Tablet: Eine Alternative zu professionellen Systemen? Handchir. Mikrochir. Plast. Chir. 2014, 46, 97–104.

64. Lerma, J.L.; Barbero-García, I.; Marqués-Mateu, Á.; Miranda, P. Smartphone-based video for 3D modelling: Application to infant’s cranial deformation analysis. Measurement 2018, 116, 299–306.

65. Ge, Y.; Chen, K.; Liu, G.; Zhang, Y.; Tang, H. A low-cost approach for the estimation of rock joint roughness using photogrammetry. Eng. Geol. 2022, 305, 106726.

66. Torkan, M.; Janiszewski, M.; Uotinen, L.; Baghbanan, A.; Rinne, M. Photogrammetric Method to Determine Physical Aperture and Roughness of a Rock Fracture. Sensors 2022, 22, 4165.

67. An, P.; Tang, H.; Li, C.; Fang, K.; Lu, S.; Zhang, J. A fast and practical method for determining particle size and shape by using smartphone photogrammetry. Measurement 2022, 193, 110943.

68. Fang, K.; An, P.; Tang, H.; Tu, J.; Jia, S.; Miao, M.; Dong, A. Application of a multi-smartphone measurement system in slope model tests. Eng. Geol. 2021, 295, 106424.

69. An, P.; Fang, K.; Jiang, Q.; Zhang, H.; Zhang, Y. Measurement of Rock Joint Surfaces by Using Smartphone Structure from Motion (SfM) Photogrammetry. Sensors 2021, 21, 922.

70. Tavani, S.; Granado, P.; Riccardi, U.; Seers, T.; Corradetti, A. Terrestrial SfM-MVS photogrammetry from smartphone sensors. Geomorphology 2020, 367, 107318.

71. Alessandri, L.; Baiocchi, V.; Del Pizzo, S.; Di Ciaccio, F.; Onori, M.; Rolfo, M.F.; Troisi, S. The Fusion of External and Internal 3D Photogrammetric Models as a Tool to Investigate the Ancient Human/Cave Interaction: The La Sassa Case Study. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, XLIII-B2-2020, 1443–1450.

72. Dabove, P.; Grasso, N.; Piras, M. Smartphone-Based Photogrammetry for the 3D Modeling of a Geomorphological Structure. Appl. Sci. 2019, 9, 3884.

73. Francioni, M.; Simone, M.; Stead, D.; Sciarra, N.; Mataloni, G.; Calamita, F. A New Fast and Low-Cost Photogrammetry Method for the Engineering Characterization of Rock Slopes. Remote Sens. 2019, 11, 1267.

74. Saif, W.; Alshibani, A. Smartphone-Based Photogrammetry Assessment in Comparison with a Compact Camera for Construction Management Applications. Appl. Sci. 2022, 12, 1053.

75. Hansen, L.H.; Pedersen, T.M.; Kjems, E.; Wyke, S. Smartphone-Based Reality Capture for Subsurface Utilities: Experiences from Water Utility Companies in Denmark. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, XLVI-4/W4-2021, 25–31.

76. Fauzan, K.N.; Suwardhi, D.; Murtiyoso, A.; Gumilar, I.; Sidiq, T.P. Close-Range Photogrammetry Method for SF6 Gas Insulated Line (GIL) Deformation Monitoring. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, XLIII-B2-2021, 503–510.

77. Moritani, R.; Kanai, S.; Akutsu, K.; Suda, K.; Elshafey, A.; Urushidate, N.; Nishikawa, M. Streamlining Photogrammetry-based 3D Modeling of Construction Sites using a Smartphone, Cloud Service and Best-view Guidance. In Proceedings of the International Symposium on Automation and Robotics in Construction, Kitakyushu, Japan, 26–30 October 2020; pp. 1037–1044.

78. Yu, L.; Lubineau, G. A smartphone camera and built-in gyroscope based application for non-contact yet accurate off-axis structural displacement measurements. Measurement 2021, 167, 108449.

79. Najathulla, B.C.; Deshpande, A.S.; Khandelwal, M. Smartphone camera-based micron-scale displacement measurement: Development and application in soft actuators. Instrum. Sci. Technol. 2022, 50, 616–625.

80. Tungol, Z.P.L.; Toriya, H.; Owada, N.; Kitahara, I.; Inagaki, F.; Saadat, M.; Jang, H.D.; Kawamura, Y. Model Scaling in Smartphone GNSS-Aided Photogrammetry for Fragmentation Size Distribution Estimation. Minerals 2021, 11, 1301.

81. Zhu, R.; Guo, Z.; Zhang, X. Forest 3D Reconstruction and Individual Tree Parameter Extraction Combining Close-Range Photo Enhancement and Feature Matching. Remote Sens. 2021, 13, 1633.

82. Kujawa, P. Comparison of 3D Models of an Object Placed in Two Different Media (Air and Water) Created on the Basis of Photos Obtained with a Mobile Phone Camera. In IOP Conference Series: Earth and Environmental Science; IOP Publishing: Bristol, UK, 2021; Volume 684, p. 012032.

83. Van, T.N.; Le Thanh, T.; Natasa, N. Measuring propeller pitch based on photogrammetry and CAD. Manuf. Technol. 2021, 21, 706–713.

84. Zhou, K.C.; Cooke, C.; Park, J.; Qian, R.; Horstmeyer, R.; Izatt, J.A.; Farsiu, S. Mesoscopic Photogrammetry with an Unstabilized Phone Camera. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7535–7545.

85. Wolf, Á.; Troll, P.; Romeder-Finger, S.; Archenti, A.; Széll, K.; Galambos, P. A Benchmark of Popular Indoor 3D Reconstruction Technologies: Comparison of ARCore and RTAB-Map. Electronics 2020, 9, 2091.

86. Hellmuth, R.; Wehner, F.; Giannakidis, A. Datasets of captured images of three different devices for photogrammetry calculation comparison and integration into a laserscan point cloud of a built environment. Data Brief 2020, 33, 106321.

87. Yang, Z.; Han, Y. A Low-Cost 3D Phenotype Measurement Method of Leafy Vegetables Using Video Recordings from Smartphones. Sensors 2020, 20, 6068.

88. Marzulli, M.I.; Raumonen, P.; Greco, R.; Persia, M.; Tartarino, P. Estimating tree stem diameters and volume from smartphone photogrammetric point clouds. For. Int. J. For. Res. 2019, 93, 411–429.

89. Collins, T.; Woolley, S.I.; Gehlken, E.; Ch’Ng, E. Automated Low-Cost Photogrammetric Acquisition of 3D Models from Small Form-Factor Artefacts. Electronics 2019, 8, 1441.

90. Ahmad, N.; Azri, S.; Ujang, U.; Cuétara, M.G.; Retortillo, G.M.; Salleh, S.M. Comparative Analysis of Various Camera Input for Videogrammetry. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-4/W16, 63–70.

91. Chaves, A.G.S.; Sanquetta, C.R.; Arce, J.E.; Dos Santos, L.O.; Moreira, I.M.; Franco, F.M. Tridimensional (3D) Modeling of Trunks and Commercial Logs of Tectona grandis L.f. Floresta 2018, 48, 225–234.

92. Mousavi, V.; Khosravi, M.; Ahmadi, M.; Noori, N.; Naveh, A.H.; Varshosaz, M. The Performance Evaluation of Multi-Image 3D Reconstruction Software with Different Sensors. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-1/W5, 515–519.

93. Yun, M.; Yeu, Y.; Choi, C.; Park, J. Application of Smartphone Camera Calibration for Close-Range Digital Photogrammetry. Korean J. Remote Sens. 2014, 30, 149–160.

94. Johary, Y.H.; Trapp, J.; Aamry, A.; Aamri, H.; Tamam, N.; Sulieman, A. The suitability of smartphone camera sensors for detecting radiation. Sci. Rep. 2021, 11, 12653.

95. Haertel, M.E.M.; Linhares, E.J.; de Melo, A.L. Smartphones for latent fingerprint processing and photography: A revolution in forensic science. WIREs Forensic Sci. 2021, 3, e1410.

96. Zancajo-Blázquez, S.; González-Aguilera, D.; Gonzalez-Jorge, H.; Hernandez-Lopez, D. An Automatic Image-Based Modelling Method Applied to Forensic Infography. PLoS ONE 2015, 10, e0118719.

97. Aldelgawy, M.; Abu-Qasmieh, I. Calibration of Smartphone’s Rear Dual Camera System. Geodesy Cartogr. 2021, 47, 162–169.

98. Ataiwe, T.N.; Hatem, I.; Al Sharaa, H.M.J. Digital Model in Close-Range Photogrammetry Using a Smartphone Camera. E3S Web Conf. 2021, 318, 04005.

99. Maalek, R.; Lichti, D.D. Automated calibration of smartphone cameras for 3D reconstruction of mechanical pipes. Photogramm. Rec. 2021, 36, 124–146.

100. Wu, D.; Chen, R.; Chen, L. Visual Positioning Indoors: Human Eyes vs. Smartphone Cameras. Sensors 2017, 17, 2645.

101. Akca, D.; Gruen, A. Comparative geometric and radiometric evaluation of mobile phone and still video cameras. Photogramm. Rec. 2009, 24, 217–245.

102. Massimiliano, P. Image-based methods for metric surveys of buildings using modern optical sensors and tools: From 2D approach to 3D and vice versa. Int. J. Civ. Eng. Technol. 2018, 9, 729–745.

103. Smith, M.J.; Kokkas, N. Assessing the Photogrammetric Potential of Cameras in Portable Devices. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, XXXIX-B5, 381–386.

104. Camera-Calibration-with-Large-Chessboards [Open-Source Software, MIT License]. Available online: https://github.com/Henrikmidtiby/Camera-Calibration-with-Large-Chessboards (accessed on 14 December 2022).

105. CloudCompare (Version 2.13.alpha) GPL Software. 2022. Available online: https://www.cloudcompare.org/main.html (accessed on 14 December 2022).

| Research Area | Research Papers | Number of Papers |
|---|---|---|
| Cultural heritage | [23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44] | 22 |
| Medical | [45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64] | 20 |
| Geomorphology, geotechnology and geology | [65,66,67,68,69,70,71,72,73] | 9 |
| Industrial application | [74,75,76,77,78,79] | 6 |

| Camera | IO Stability | Resolution H × W [px] | Pixel Size [μm] | f [mm] | Mean GSD [mm] |
|---|---|---|---|---|---|
| Samsung Galaxy S10 | low | 2268 × 4032 | 1.5 | 4.9 | 0.3 |
| Xiaomi Redmi Note 11S | high | 3000 × 4000 | 2.1 | 6.1 | 0.35 |
| Nikon D5200 | very high | 4000 × 6000 | 4.0 | 21.1 | 0.2 |
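The mean GSD column follows the standard pinhole relation GSD = pixel size × object distance / f. The object distance is not stated in this part of the text, so the minimal sketch below back-computes it from the tabulated pixel size, focal length and mean GSD. The dictionary `CAMERAS` and the functions `gsd_mm` and `implied_distance_mm` are hypothetical names used only for illustration.

```python
# Sketch only: pinhole relation GSD = pixel_size * distance / f.
# The object distance is NOT given in the table; implied_distance_mm()
# back-computes it from the tabulated mean GSD (an illustrative assumption).

CAMERAS = {
    # name: (pixel size [um], focal length [mm], mean GSD [mm]) from the table
    "Samsung Galaxy S10": (1.5, 4.9, 0.30),
    "Xiaomi Redmi Note 11S": (2.1, 6.1, 0.35),
    "Nikon D5200": (4.0, 21.1, 0.20),
}


def gsd_mm(pixel_um: float, focal_mm: float, distance_mm: float) -> float:
    """Footprint of one pixel on the object surface, in mm."""
    return (pixel_um / 1000.0) * distance_mm / focal_mm


def implied_distance_mm(pixel_um: float, focal_mm: float, gsd: float) -> float:
    """Object distance (mm) at which the camera reaches the given GSD (mm)."""
    return gsd * focal_mm / (pixel_um / 1000.0)


if __name__ == "__main__":
    for name, (pix, f, gsd) in CAMERAS.items():
        distance = implied_distance_mm(pix, f, gsd)
        print(f"{name}: implied object distance ~ {distance / 1000:.2f} m")
```

Under this assumption the tabulated values correspond to object distances of roughly 1 m for all three cameras (about 0.98 m, 1.02 m and 1.06 m), so the smartphones' coarser GSD comes from their shorter focal lengths relative to pixel size rather than from a larger shooting distance.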

| Model | Production Year | MAD f [px] | MAD x0 [px] | MAD y0 [px] | Points (f/x0/y0) | Ranking |
|---|---|---|---|---|---|---|
| Xiaomi Redmi Note 11S | 2022 | 1.94 | 0.77 | 0.96 | 2/1/1 | 1 |
| Motorola Moto G31 | 2021 | 1.91 | 3.11 | 1.33 | 1/10/3 | 2 |
| Xiaomi M2003J15SG | 2020 | 4.20 | 1.66 | 3.00 | 4/2/11 | 3 |
| Huawei P30 Lite | 2019 | 5.03 | 2.23 | 1.41 | 6/7/4 | 4 |
| Xiaomi Redmi Note 8 Pro | 2019 | 4.82 | 1.83 | 2.05 | 5/5/8 | 5 |
| OPPO A72 | 2020 | 2.40 | 2.31 | 2.08 | 3/8/9 | 6 |
| Realme 7i RMX2193 | 2020 | 41.33 | 1.83 | 1.28 | 14/4/2 | 7 |
| Samsung Galaxy M51 | 2021 | 39.49 | 1.87 | 1.88 | 13/6/5 | 8 |
| Xiaomi Redmi Note 7 | 2019 | 7.80 | 3.61 | 1.90 | 8/11/6 | 9 |
| Motorola One Action | 2019 | 5.86 | 3.91 | 1.94 | 7/12/7 | 10 |
| Samsung Galaxy S9 | 2018 | 33.76 | 1.73 | 3.16 | 12/3/12 | 11 |
| Xiaomi Redmi Note 10s | 2021 | 10.69 | 2.70 | 2.37 | 10/9/10 | 12 |
| Lenovo K53a48 | 2017 | 9.91 | 4.18 | 3.45 | 9/13/13 | 13 |
| Samsung Galaxy S10 | 2019 | 12.51 | 17.15 | 7.26 | 11/14/14 | 14 |
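The Points and Ranking columns are consistent with giving each camera its rank (1 = smallest MAD) separately for f, x0 and y0 and then ordering cameras by the sum of those three ranks. The sketch below reproduces that scheme under stated assumptions: that "MAD" is the median absolute deviation of each interior-orientation parameter over repeated calibrations (the text here does not define it), and that equal sums are broken by the f points, which is a hypothetical rule that happens to match the two tied pairs in the table. The function names are illustrative.

```python
import statistics


def mad(values):
    """Median absolute deviation from the median (assumed meaning of "MAD")."""
    med = statistics.median(values)
    return statistics.median(abs(v - med) for v in values)


def rank_points(scores):
    """Give 1 point to the smallest score, 2 to the next, and so on.

    Exact ties keep their input order; how the authors handled ties is
    not stated, so this is an assumption.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    points = [0] * len(scores)
    for position, index in enumerate(order, start=1):
        points[index] = position
    return points


def rank_cameras(mad_table):
    """Rank cameras by the sum of their per-parameter rank points.

    mad_table maps camera name -> (MAD f, MAD x0, MAD y0). Returns the names
    ordered from most to least stable interior orientation, plus each
    camera's (f, x0, y0) points. Equal sums are broken by the f points
    (hypothetical tie-break rule).
    """
    names = list(mad_table)
    per_param = [rank_points([mad_table[n][k] for n in names]) for k in range(3)]
    points = {n: tuple(per_param[k][i] for k in range(3)) for i, n in enumerate(names)}
    ranking = sorted(names, key=lambda n: (sum(points[n]), points[n][0]))
    return ranking, points


if __name__ == "__main__":
    # A subset of the MAD values [px] from the table above.
    subset = {
        "Xiaomi Redmi Note 11S": (1.94, 0.77, 0.96),
        "Motorola Moto G31": (1.91, 3.11, 1.33),
        "Lenovo K53a48": (9.91, 4.18, 3.45),
        "Samsung Galaxy S10": (12.51, 17.15, 7.26),
    }
    ranking, points = rank_cameras(subset)
    for place, name in enumerate(ranking, start=1):
        print(place, name, points[name])
```

Because the published MADs are rounded to two decimals (two cameras share 1.83 px for x0), re-ranking the full table from these rounded values does not reproduce every position exactly; the sketch is meant to show the scoring scheme, not to replace the authors' computation.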

| Smartphone Camera | Method | Mean d [mm] | Std d [mm] | Median d [mm] |
|---|---|---|---|---|
| Samsung Galaxy S10 | Pre-calibration | 0.65 | 0.55 | 0.54 |
| Samsung Galaxy S10 | Self-calibration | 0.99 | 0.65 | 0.90 |
| Xiaomi Redmi Note 11S | Pre-calibration | 0.48 | 0.41 | 0.38 |
| Xiaomi Redmi Note 11S | Self-calibration | 0.70 | 0.54 | 0.54 |
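This part of the text does not define d. Assuming it is the distance from each point of the reconstructed model to a reference model (for example a cloud-to-cloud distance computed in a tool such as CloudCompare), the minimal sketch below shows how such statistics could be derived and how large the pre-calibration gain is relative to self-calibration. The brute-force nearest-neighbour search, the use of the sample standard deviation, and all function names are assumptions for illustration; production tools rely on spatial indexes rather than brute force.

```python
import math
import statistics


def nearest_distances(model, reference):
    """Distance from each model point to its closest reference point (brute force)."""
    return [min(math.dist(p, q) for q in reference) for p in model]


def summarize(distances):
    """Mean, sample standard deviation and median of a list of distances."""
    return (
        statistics.mean(distances),
        statistics.stdev(distances),
        statistics.median(distances),
    )


def relative_reduction(self_cal, pre_cal):
    """Fraction by which pre-calibration lowers a statistic vs. self-calibration."""
    return (self_cal - pre_cal) / self_cal


if __name__ == "__main__":
    # Mean d [mm] from the table above: (pre-calibration, self-calibration).
    mean_d = {
        "Samsung Galaxy S10": (0.65, 0.99),
        "Xiaomi Redmi Note 11S": (0.48, 0.70),
    }
    for camera, (pre, self_cal) in mean_d.items():
        print(f"{camera}: mean d lower by {relative_reduction(self_cal, pre):.0%}")
```

Applied to the tabulated means, pre-calibration lowers mean d by about 34% for the Samsung Galaxy S10 and about 31% for the Xiaomi Redmi Note 11S compared with self-calibration.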

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jasińska, A.; Pyka, K.; Pastucha, E.; Midtiby, H.S. A Simple Way to Reduce 3D Model Deformation in Smartphone Photogrammetry. Sensors 2023, 23, 728. https://doi.org/10.3390/s23020728