1. Introduction

With the continuous development of science and technology, the integration of the life sciences and the engineering sciences has become a main feature of modern science and technology [1], and meta-heuristic algorithms have flourished under this trend. Optimization objectives in practical engineering applications now range from single-objective to multi-objective, from continuous to discrete, and from constrained to unconstrained, and the resulting problems are complex. Traditional optimization algorithms have obvious drawbacks: they rely heavily on initial values and tend to fall into local optima. Meta-heuristic optimization algorithms, in contrast, are easy to implement, can bypass local optima, are derivative-free, and do not require gradient information [2,3,4,5]. Of the two types of algorithms, meta-heuristics perform outstandingly and have received widespread attention from scholars. They have played an important role in computing and have also shown powerful problem-solving capabilities in military [6], agricultural [7] and hydraulic engineering [8] applications.

Since algorithms such as Particle Swarm Optimization (PSO) [9], the Genetic Algorithm (GA) [10] and Artificial Bee Colony (ABC) [11] were proposed, they have been applied continuously to engineering problems and have provided better solutions [12]. During this rapid development, research on meta-heuristic algorithms has focused mainly on three areas: population initialization, population exploration, and fast convergence to the global optimum.

Abualigah L et al. proposed a new meta-heuristic algorithm in 2021, the Aquila Optimizer (AO) [13]. The algorithm is inspired by the prey-catching behavior of the Aquila. On general benchmark tests, AO was compared with 11 algorithms, including GOA, EO, DA, PSO, ALO, GWO and SSA. It showed high performance in the exploration and exploitation stages and a strong ability to avoid local optima on composite functions. However, its local exploitation capability is insufficient: it easily falls into local optima and converges slowly, and many scholars have therefore improved it.

Wang S et al. [14] combined the exploitation phase of Harris Hawks Optimization (HHO) with the exploration phase of AO to propose an improved hybrid Aquila Optimizer and Harris Hawks Optimization (IHAOHHO). The hybrid combines nonlinear escape energy parameters with stochastic opposition-based learning to enhance exploration and exploitation. It showed superior performance and good promise on standard test functions and industrial engineering design problems.

Verma M et al. [15] generated one population with the standard AO and a new population with a single-stage genetic algorithm based on the concept of evolution. The genetic stage uses binary tournament selection, roulette wheel selection, shuffle crossover and replacement mutation. Chaotic maps were then applied to obtain several variants of the standard AO. These variants improve on the standard AO, yield better results, and were applied to engineering design problems. However, the effect of the uniformity of chaotic systems on population initialization was not considered.

Akyol S [16] used the tangent search algorithm (TSA) to improve AO and proposed the Aquila Optimizer-tangent search algorithm (AO-TSA). The intensification phase of TSA replaces the limited exploration phase of AO to improve its exploitation capability. The local minimum escape phase of TSA is also applied in AO-TSA to avoid stagnation in local minima.

Mahajan S et al. [17] proposed a new hybrid approach combining AO with the Arithmetic Optimization Algorithm (AOA). The hybrid achieves efficient search in both high- and low-dimensional problems, further showing that the population-based approach searches efficiently in high-dimensional problems.

Zhang Y J et al. [18] similarly combined AO with AOA and proposed the hybrid algorithm of the Arithmetic Optimization Algorithm with the Aquila Optimizer (AOAAO). An energy parameter E is introduced to balance the exploration and exploitation of individuals in the population. A piecewise linear map is also introduced to reduce the randomness of this energy parameter. Experiments show that AOAAO converges faster and with higher accuracy.

In addition, AlRassas A M et al. developed AO-ANFIS [19], an Adaptive Neuro-Fuzzy Inference System (ANFIS) improved by AO, for predicting oil production. They showed experimentally that AO significantly improved the prediction accuracy of ANFIS. Abd Elaziz M et al. [20] proposed a new COVID-19 image classification framework that combines deep learning (DL) with a hybrid swarm-based algorithm, using AO as a feature selector to reduce the dimensionality of the image features. The experimental results showed that the feature extraction and selection stages are more accurate than those of other methods. Jnr E O N et al. [21] proposed a DWT-PSR-AOA-BPNN model for wind speed prediction and efficient grid operation. The model combines the Aquila Optimization Algorithm (AOA) with the Discrete Wavelet Transform (DWT), Phase Space Reconstruction (PSR) based on chaos theory, and a Back Propagation Neural Network (BPNN). AO has also been applied to population prediction [22], PID parameter optimization [23], power generation allocation [24], path planning [25] and other fields. Because AO was proposed only recently, its slow convergence and tendency to fall into local optima still call for further improvement. Improving its performance would make it applicable to more practical problems.

The literature shows that these algorithms can effectively approximate the true solutions of multi-objective problems. However, the problem of zero search preference has attracted little attention from scholars. This problem is a bias of the search toward the zero point (origin) of the search space. Solving it effectively is the basis for improving the robustness of the AO algorithm. It also allows the algorithm to be applied to new classes of problems and can inform the design of new optimization algorithms. This is the purpose and significance of this work. Based on the recently proposed Aquila Optimizer (AO), we propose a new algorithm called the Adaptive Aquila Optimizer Combining Niche Thought with Dispersed Chaotic Swarm (NCAAO). The research gaps and contributions of the paper can be summarized as follows:

The NCAAO has great potential in solving various engineering problems such as feature selection, image segmentation, image enhancement and engineering design.

The AO and NCAAO have rarely been combined with other available meta-heuristics; therefore, the NCAAO has a great potential to be combined with other algorithms available in the literature.

A new chaotic map with better uniformity is introduced. It initializes the population more evenly and effectively improves the efficiency of the algorithm.

The search threshold of the algorithm is made adaptive, so that the population adjusts its search space by changing the threshold. An adaptive weight parameter is introduced to perturb individual positions in the population and improve the local exploitation ability of the algorithm.

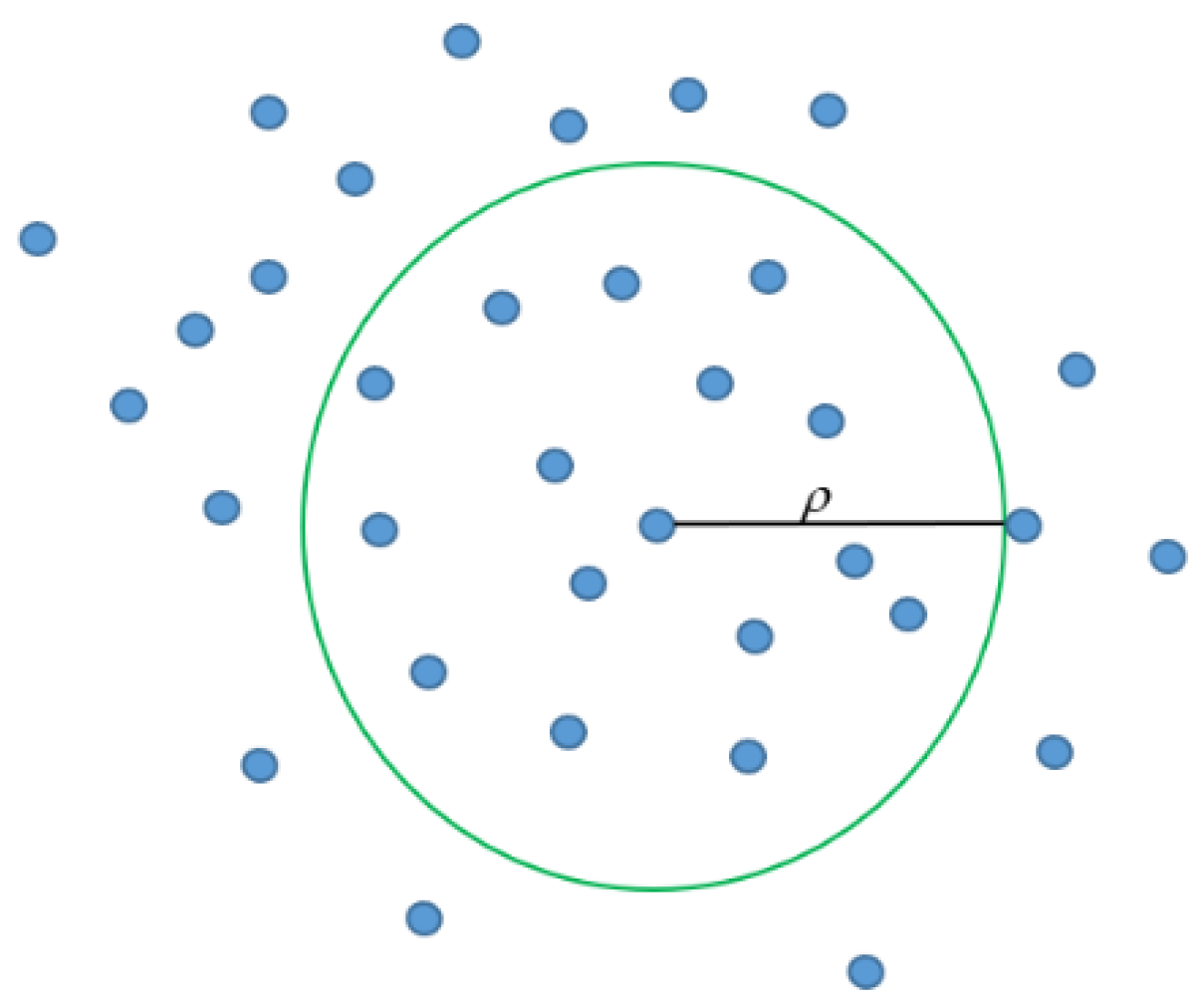

Moreover, to obtain the optimal solution with high accuracy, a communication exchange strategy based on the niche idea is proposed. By screening elite individuals, it preserves the optimization accuracy and convergence speed of the algorithm.
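In the original AO [13], the search switches from exploration to exploitation at a fixed point (t ≤ 2T/3). Within each phase, a uniform random number chooses between two strategies. The sketch below contrasts that fixed switch with the idea of an adaptive threshold and a position weight. The exact formulas for adaptive_p and ζ are not given here. The schedules `adaptive_threshold` and `position_weight` are therefore hypothetical placeholders chosen only to show the mechanism, not NCAAO's definitions.

```python
import math
import random

def ao_fixed_phase(t: int, T: int) -> str:
    """Original AO rule: explore while t <= 2T/3, exploit afterwards."""
    return "exploration" if t <= (2.0 / 3.0) * T else "exploitation"

def adaptive_threshold(t: int, T: int, p_max: float = 0.9, p_min: float = 0.1) -> float:
    """HYPOTHETICAL adaptive_p: decays nonlinearly from p_max (t = 0) to p_min (t = T)."""
    return p_min + (p_max - p_min) * (1.0 - (t / T) ** 2)

def adaptive_phase(t: int, T: int, rng: random.Random) -> str:
    """Explore with probability adaptive_p(t) instead of using a fixed cut-off."""
    return "exploration" if rng.random() < adaptive_threshold(t, T) else "exploitation"

def position_weight(t: int, T: int) -> float:
    """HYPOTHETICAL zeta: 1 at t = 0, decreasing to 0 at t = T."""
    return math.cos(0.5 * math.pi * t / T)

def perturb(x: list[float], best: list[float], t: int, T: int,
            rng: random.Random) -> list[float]:
    """Sample around the best position; the spread shrinks as zeta decreases."""
    w = position_weight(t, T)
    return [b + w * rng.uniform(-1.0, 1.0) * (xi - b) for xi, b in zip(x, best)]

if __name__ == "__main__":
    rng = random.Random(1)
    T = 500
    adaptive = sum(adaptive_phase(t, T, rng) == "exploration" for t in range(1, T + 1))
    fixed = sum(ao_fixed_phase(t, T) == "exploration" for t in range(1, T + 1))
    print(f"exploration steps: adaptive {adaptive}, fixed {fixed}")
```

With a probabilistic threshold, exploration steps can still occur late in the run, whereas the fixed rule never explores after 2T/3. This is one way a population can keep adjusting its search space.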

In summary, to address the slow convergence of AO and its tendency to fall into local optima, we introduce three strategies. First, inspired by [26], we adopt a population initialization method that improves the robustness of the algorithm: the Aquila population is initialized with the DLCS chaotic map. This aims to make the Aquila population more uniform in the search space and to improve the randomness and ergodicity of individuals.

Second, a de-search-preference adaptive adjustment search strategy is proposed. It balances global exploration and local exploitation through adaptive threshold adjustment. On this basis, an adaptive position weighting method is introduced to perturb the position updates of individuals and improve local exploitation. This strategy can, to a certain extent, remove the search preference problem and help the algorithm escape quickly from local optima.

Finally, inspired by [27] and combined with an elite solution mechanism, a communication exchange strategy based on the niche idea is used to maintain the quality of the population during the iterations. Through information exchange among individuals, the global optimum is selected more reliably and the scout individuals converge rapidly toward it, which enhances the robustness of the algorithm.
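The DLCS map is not defined in this section. The sketch below therefore uses the classical logistic map x_{k+1} = μ x_k (1 − x_k) with μ = 4 as a plainly swapped-in stand-in. It shows how a chaotic sequence in (0, 1) can be mapped onto the box [lb, ub] to initialize a population. Replacing the logistic map with DLCS would change only `chaotic_sequence`. The function names and the seed value x0 = 0.7 are illustrative assumptions.

```python
def chaotic_sequence(n: int, x0: float = 0.7, mu: float = 4.0) -> list[float]:
    """Logistic map x_{k+1} = mu * x_k * (1 - x_k), a stand-in for DLCS.

    With mu = 4 the map is chaotic on (0, 1); x0 must avoid points such as
    0, 0.25, 0.5, 0.75 and 1, which fall onto fixed points.
    """
    seq, x = [], x0
    for _ in range(n):
        x = mu * x * (1.0 - x)
        seq.append(x)
    return seq

def chaotic_population(pop_size: int, lb: list[float], ub: list[float],
                       x0: float = 0.7) -> list[list[float]]:
    """Map one chaotic sequence onto pop_size points inside the box [lb, ub]."""
    dim = len(lb)
    values = chaotic_sequence(pop_size * dim, x0)
    return [
        [lb[j] + values[i * dim + j] * (ub[j] - lb[j]) for j in range(dim)]
        for i in range(pop_size)
    ]

if __name__ == "__main__":
    population = chaotic_population(30, [-100.0] * 10, [100.0] * 10)
    print(len(population), len(population[0]))
```

Because the sequence is deterministic given x0, runs differ only through the choice of seed. A practical implementation would draw x0 at random for each independent run.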

The rest of the paper is structured as follows:

Section 2 describes the principle of AO and studies its module performance.

Section 3 describes the proposed algorithm and analyzes the time complexity of the proposed algorithm.

Section 4 verifies the robustness and applicability of the algorithm through numerical experiments and engineering experiments.

Section 5 summarizes the paper and discusses directions for future research.

4. Algorithm Performance Experiments

In this section, the performance of the proposed NCAAO in solving optimization problems is studied. For this purpose, fifteen benchmark functions were selected from the literature [36,37], covering unimodal, high-dimensional multimodal and fixed-dimensional multimodal types. The benchmark functions used are detailed in Table 3. In addition, three engineering design problems were selected to test the applicability of NCAAO.

In addition, the optimization results obtained by the proposed NCAAO are compared with those of five well-known optimization algorithms: Gray Wolf Optimization (GWO) [38], Whale Optimization Algorithm (WOA) [39], Harris Hawks Optimization (HHO) [40], Ant Lion Optimizer (ALO) [41] and Aquila Optimizer (AO) [13].

Table 4 shows the values of the control parameters of these algorithms.

To evaluate the performance of the optimization algorithms, each competing algorithm and the proposed NCAAO were run 30 times independently on each objective function, with 500 iterations per run. The experiments were run on 64-bit Windows 10 with an Intel Core i7-10750H CPU (2.60 GHz) and 16 GB of memory. The algorithms were implemented in MATLAB R2019a. The simulation results are reported using two criteria: the average (AVG) and the standard deviation (STD) of the best solutions obtained.
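The sketch below makes the two reporting criteria concrete. It runs a placeholder optimizer in 30 independently seeded runs of 500 iterations each and reports the AVG and STD of the best objective value. The optimizer is a plain random search, and the objective is the sphere function f(x) = Σ x_i², a common unimodal benchmark. Both are assumptions made for brevity and stand in for NCAAO and the functions of Table 3; the population size of 10 is likewise hypothetical. The text does not say whether STD is the sample or population deviation, so `statistics.stdev` (sample) is assumed.

```python
import random
import statistics

def sphere(x: list[float]) -> float:
    """Unimodal benchmark with global optimum 0 at the origin."""
    return sum(v * v for v in x)

def random_search(f, dim: int, lb: float, ub: float, iters: int, pop: int,
                  rng: random.Random) -> float:
    """Placeholder optimizer: best value among iters * pop uniform samples."""
    best = float("inf")
    for _ in range(iters):
        for _ in range(pop):
            x = [rng.uniform(lb, ub) for _ in range(dim)]
            best = min(best, f(x))
    return best

def avg_std(f, runs: int = 30, **kwargs) -> tuple[float, float]:
    """AVG and STD of the best value over independent, separately seeded runs."""
    bests = [random_search(f, rng=random.Random(seed), **kwargs) for seed in range(runs)]
    return statistics.mean(bests), statistics.stdev(bests)

if __name__ == "__main__":
    avg, std = avg_std(sphere, dim=5, lb=-100.0, ub=100.0, iters=500, pop=10)
    print(f"AVG = {avg:.4e}, STD = {std:.4e}")
```

Seeding each run separately keeps the runs independent while making the whole experiment reproducible.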

4.1. Benchmark Set and Compared Algorithms

Table 3 includes three groups of functions:

- five unimodal functions (F1–F5), which test the exploitation capability of the algorithms;
- four high-dimensional multimodal functions (F6–F9), which test exploration capability and local optimum avoidance;
- six fixed-dimensional multimodal functions (F10–F15), which are considered combinations of the first two sets with random rotations, shifts and biases.

Such composite test functions resemble real search spaces more closely and allow exploration and exploitation to be benchmarked simultaneously [42]. The ‘w/t/l’ in Table 3 indicates the number of wins, ties and losses of each algorithm. The two-dimensional fitness landscapes of the functions in Table 3 are shown in Figure 5.

The NCAAO algorithm was tested on the unimodal functions, and the results are shown in Table 5. As the table shows, NCAAO provides very competitive results on the unimodal functions. Its improvement is especially significant on F2 in all dimensions, where it converges to the global optimum of zero. This indicates a strong exploitation capability, which we attribute to initializing the population with the DLCS chaotic map.

Having shown the exploitation ability of NCAAO, we now discuss its exploration ability. NCAAO was tested on the high-dimensional multimodal functions, and the results are shown in Table 6. On F6 and F7, NCAAO is as competitive as the classical algorithms in finding the optimal values in different dimensions. On F8 and F9, its results show stronger exploration and local optimum avoidance than the other classical algorithms. This exploration ability comes from the adaptive search threshold and the adaptive position weighting parameter. Directing the population from one search region into another promotes exploration, similar to the crossover operator in GA, which strongly emphasizes search space exploration.

F10–F15 are six objective functions that evaluate the ability of an optimization algorithm to handle fixed-dimensional multimodal problems. The results of optimizing them with the proposed NCAAO and the competing algorithms are shown in Table 7. On F10, F12, F14 and F15, NCAAO converges to the global optimum, and it is the best optimizer on F14 and F15. On F10, F11, F12 and F13, the AVG of NCAAO is similar to that of some competing algorithms, but its STD is smaller. Therefore, NCAAO solves these objective functions more effectively. Overall, the simulation results show that NCAAO is better able than the five competing algorithms to solve the fixed-dimensional multimodal problems F10 to F15.

Although the AVG and STD indices showed that NCAAO outperformed the comparison algorithms, they are not sufficient to demonstrate its superiority. Because meta-heuristics are stochastic, and to ensure a fair comparison, the Wilcoxon rank-sum test was used to draw statistically significant conclusions [43]. The test results comparing NCAAO with the five competing algorithms are presented in Table 8.

In this table, a p-value below 0.05 indicates that NCAAO differs significantly from the competing algorithm on that objective function. Combined with its better AVG values, this indicates a significant advantage. The p-values in the table show that the advantage is significant in most cases. We attribute this to two components. The de-search-preference adaptive adjustment strategy lets the algorithm balance exploitation and exploration effectively, and the niche technique speeds up the search for the optimum. In addition, the p-values indicate that NCAAO effectively avoids the zero search preference problem and that its results are very competitive.
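The rank-sum test compares the 30 best values of NCAAO with those of one competitor at a time. Below is a minimal, dependency-free sketch of the two-sided test using the normal approximation, with average ranks assigned to ties. It omits the tie correction to the variance. Library routines such as SciPy's `scipy.stats.ranksums` or `scipy.stats.mannwhitneyu` may therefore give slightly different p-values when ties or continuity corrections come into play. The sample numbers in the usage block are invented for illustration only.

```python
import math

def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def rank_sum_test(x: list[float], y: list[float]) -> tuple[float, float]:
    """Two-sided Wilcoxon rank-sum test (normal approximation, no tie correction)."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    r1 = sum(ranks[:n1])                        # rank sum of the first sample
    expected = n1 * (n1 + n2 + 1) / 2.0         # mean of r1 under H0
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (r1 - expected) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))      # equals 2 * (1 - Phi(|z|))
    return z, p

if __name__ == "__main__":
    ncaao = [1e-8, 2e-8, 5e-9, 3e-8, 1e-8]      # illustrative numbers only
    rival = [1e-3, 4e-4, 2e-3, 9e-4, 1.5e-3]
    z, p = rank_sum_test(ncaao, rival)
    print(f"z = {z:.3f}, p = {p:.4f}")
```

In a minimization setting with NCAAO's results passed as `x`, a negative z means NCAAO tends to reach smaller values. A p-value below 0.05 then marks that difference as significant.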

To evaluate the convergence behavior of NCAAO, convergence curves were plotted using the average best value over the 30 runs. Figure 6 shows the curves for the unimodal, high-dimensional multimodal and fixed-dimensional multimodal functions.

For the unimodal and high-dimensional multimodal functions, NCAAO finds better values and converges faster, which further indicates good exploitation and exploration abilities. For the fixed-dimensional multimodal functions, NCAAO fluctuates more in the early iterations and less in the later stages. The marked decrease of the curves during the iterations shows that the proposed niche-based communication exchange strategy improves the population's ability to update its best fitness. Overall, the curves show that NCAAO converges more strongly than the comparison algorithms and balances exploitation and exploration better.
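A convergence curve of this kind plots, for each iteration, the best-so-far objective value averaged over the independent runs. The helper functions below compute that mean curve from per-run histories of recorded objective values. The two histories in the usage block are invented numbers, not results from the paper.

```python
def best_so_far(history: list[float]) -> list[float]:
    """Running minimum of the objective values recorded at each iteration."""
    curve, best = [], float("inf")
    for value in history:
        best = min(best, value)
        curve.append(best)
    return curve

def mean_convergence_curve(histories: list[list[float]]) -> list[float]:
    """Average the best-so-far curves of several runs, iteration by iteration."""
    curves = [best_so_far(h) for h in histories]
    n_iter = len(curves[0])
    if any(len(c) != n_iter for c in curves):
        raise ValueError("all runs must record the same number of iterations")
    return [sum(c[t] for c in curves) / len(curves) for t in range(n_iter)]

if __name__ == "__main__":
    runs = [[5.0, 3.0, 4.0, 1.0], [6.0, 2.0, 2.5, 0.5]]
    print(mean_convergence_curve(runs))  # [5.5, 2.5, 2.5, 0.75]
```

Taking the running minimum before averaging makes every curve non-increasing, even for an algorithm whose current population fitness fluctuates from one iteration to the next.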

In summary, NCAAO performs significantly better than the classical optimization algorithms compared (GWO, WOA, HHO, ALO and AO). The data in Table 5 and Table 6 show that it performs better on the unimodal and high-dimensional multimodal functions. Its performance also does not decrease significantly as the dimensionality increases. The convergence analysis in Figure 6 shows that NCAAO converges well overall. Although it converges more slowly on F8 than on the other functions, it still finds the optimal solution. The fixed-dimensional multimodal test data in Table 7 show that the optimal solution is still found accurately when it is not zero, which indicates that the zero search preference is reduced. Compared with the other algorithms, NCAAO strikes a strong balance between exploitation and exploration.

4.2. Engineering Benchmark Sets

To further demonstrate the optimization performance of NCAAO and its value for engineering problems, three problems were selected for testing:

- the tension/compression spring design problem [44];
- the pressure vessel design problem [45];
- the three-bar truss design problem [46].

Eight algorithms were selected for comparison with NCAAO on these engineering examples:

- Salp Swarm Algorithm (SSA) [47];
- Whale Optimization Algorithm (WOA) [39];
- Gray Wolf Optimization (GWO) [38];
- Moth-Flame Optimization (MFO) [48];
- Gravitational Search Algorithm (GSA) [49];
- Particle Swarm Optimization (PSO) [9];
- Genetic Algorithm (GA) [10];
- Tunicate Swarm Algorithm (TSA) [50].

The values of the control parameters of these algorithms are also given in Table 4.

4.2.1. Tension/Compression Spring Design Problem

The purpose of the tension/compression spring design problem is to minimize the weight of the spring, shown schematically in Figure 7. The design variables are the wire (cross-sectional) diameter of the spring (d), the mean coil diameter (D) and the number of active coils (Q).

The mathematical model of this problem is as follows:

NCAAO is applied to this case in 30 independent runs, each with a population of 500 individuals and 500 iterations. Because this benchmark case has constraints, NCAAO must be combined with a constraint handling technique. The results of NCAAO are compared with those reported for the eight algorithms in the previous literature.

Table 9 shows the detailed results of the proposed NCAAO and the other techniques. Based on Table 9, NCAAO obtains results very competitive with those of MFO and significantly outperforms the other optimizers. These results show that NCAAO can handle constrained search spaces.
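The sketch below assumes the formulation of the spring model that is standard in the meta-heuristics literature. It uses x = (d, D, Q), the objective f = (Q + 2) D d², four inequality constraints g_i(x) ≤ 0 (minimum deflection, shear stress, surge frequency and outer diameter), and bounds 0.05 ≤ d ≤ 2, 0.25 ≤ D ≤ 1.3 and 2 ≤ Q ≤ 15. The constraint handling technique used with NCAAO is not specified in the text. A static quadratic penalty with a hypothetical weight of 10^6 is therefore shown as one common choice. Any unconstrained optimizer can then minimize `spring_penalized` within the bounds.

```python
SPRING_BOUNDS = [(0.05, 2.0), (0.25, 1.3), (2.0, 15.0)]  # (d, D, Q), assumed standard bounds

def spring_objective(x: tuple[float, float, float]) -> float:
    """Spring weight, proportional to (Q + 2) * D * d^2."""
    d, D, Q = x
    return (Q + 2.0) * D * d ** 2

def spring_constraints(x: tuple[float, float, float]) -> list[float]:
    """Standard constraints g_i(x) <= 0 from the literature (assumed formulation)."""
    d, D, Q = x
    g1 = 1.0 - (D ** 3 * Q) / (71785.0 * d ** 4)                   # minimum deflection
    g2 = ((4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
          + 1.0 / (5108.0 * d ** 2) - 1.0)                         # shear stress
    g3 = 1.0 - 140.45 * d / (D ** 2 * Q)                           # surge frequency
    g4 = (d + D) / 1.5 - 1.0                                       # outer diameter
    return [g1, g2, g3, g4]

def spring_penalized(x: tuple[float, float, float], weight: float = 1e6) -> float:
    """Static penalty: objective plus weight * sum of squared violations (weight is hypothetical)."""
    violation = sum(max(0.0, g) ** 2 for g in spring_constraints(x))
    return spring_objective(x) + weight * violation

if __name__ == "__main__":
    x = (0.06, 0.5, 10.0)
    print(spring_objective(x), spring_constraints(x), spring_penalized(x))
```

A static penalty is the simplest option. Adaptive or feasibility-rule schemes are common alternatives when the penalty weight is hard to tune.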

4.2.2. Pressure Vessel Design Problem

The pressure vessel design problem is a minimization problem, shown schematically in Figure 8. The variables of this case are the thickness of the shell (Ts), the thickness of the head (Th), the inner radius (r) and the length of the section without the head (L).

The formulation of this test case is as follows:

Table 10 gives the optimal design results for the pressure vessel. The data show that NCAAO gives the best results among the compared algorithms, making it the best optimizer for this problem in this test.
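The sketch below assumes the pressure vessel formulation widely used in the literature. It uses x = (Ts, Th, r, L) and the cost f = 0.6224 Ts r L + 1.7781 Th r² + 3.1661 Ts² L + 19.84 Ts² r. There are four constraints g_i(x) ≤ 0, and the commonly used bounds are 0 ≤ Ts, Th ≤ 99 and 10 ≤ r, L ≤ 200. Many studies also restrict Ts and Th to integer multiples of 0.0625; that discrete variant is not modeled here. The penalty weight is a hypothetical choice.

```python
import math

VESSEL_BOUNDS = [(0.0, 99.0), (0.0, 99.0), (10.0, 200.0), (10.0, 200.0)]  # Ts, Th, r, L

def vessel_cost(x: tuple[float, float, float, float]) -> float:
    """Combined material, forming and welding cost (assumed standard model)."""
    ts, th, r, length = x
    return (0.6224 * ts * r * length + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length + 19.84 * ts ** 2 * r)

def vessel_constraints(x: tuple[float, float, float, float]) -> list[float]:
    """Standard constraints g_i(x) <= 0."""
    ts, th, r, length = x
    g1 = -ts + 0.0193 * r                                   # minimum shell thickness
    g2 = -th + 0.00954 * r                                  # minimum head thickness
    g3 = (-math.pi * r ** 2 * length
          - (4.0 / 3.0) * math.pi * r ** 3 + 1_296_000.0)   # minimum enclosed volume
    g4 = length - 240.0                                     # maximum length
    return [g1, g2, g3, g4]

def vessel_penalized(x: tuple[float, float, float, float], weight: float = 1e6) -> float:
    """Static quadratic penalty; the weight is a hypothetical choice."""
    violation = sum(max(0.0, g) ** 2 for g in vessel_constraints(x))
    return vessel_cost(x) + weight * violation

if __name__ == "__main__":
    x = (1.0, 0.5, 50.0, 100.0)
    print(vessel_cost(x), vessel_constraints(x), vessel_penalized(x))
```

The volume constraint g3 has a much larger magnitude than the thickness constraints. This is one reason penalty weights for this problem often need tuning or normalization.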

4.2.3. Three-Bar Truss Design Problem

The three-bar truss design problem is a classical problem that is widely used to test the performance of optimization algorithms. Its structure and the forces acting on each part are shown schematically in Figure 9. The mathematical model of the problem is as follows:

Variable range

where l = 100 cm, P = 2 kN/cm² and σ = 2 kN/cm².

Table 11 reports the optimum designs obtained by NCAAO and the listed optimizers. The results in Table 11 show that NCAAO is the best optimizer for this problem and attains better results than the other techniques.
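The sketch below writes out the three-bar truss model using the constants stated above (l = 100 cm, P = 2 kN/cm², σ = 2 kN/cm²). It assumes the standard formulation from the literature: minimize the volume (2√2 A1 + A2) l subject to three stress constraints, with cross-sectional areas 0 ≤ A1, A2 ≤ 1. These equations are an assumption, not a transcription of the paper's model. The brute-force `grid_search` is a hypothetical baseline for checking the model, not NCAAO.

```python
import math

L_BAR, P_LOAD, SIGMA = 100.0, 2.0, 2.0  # l, P and sigma as stated in the text
SQRT2 = math.sqrt(2.0)

def truss_volume(a: tuple[float, float]) -> float:
    """Structural volume (2*sqrt(2)*A1 + A2) * l."""
    a1, a2 = a
    return (2.0 * SQRT2 * a1 + a2) * L_BAR

def truss_constraints(a: tuple[float, float]) -> list[float]:
    """Stress constraints g_i(a) <= 0 for the three members (assumed standard form)."""
    a1, a2 = a
    denom = SQRT2 * a1 ** 2 + 2.0 * a1 * a2
    g1 = (SQRT2 * a1 + a2) / denom * P_LOAD - SIGMA
    g2 = a2 / denom * P_LOAD - SIGMA
    g3 = 1.0 / (SQRT2 * a2 + a1) * P_LOAD - SIGMA
    return [g1, g2, g3]

def grid_search(step: float = 0.005) -> tuple[float, tuple[float, float]]:
    """Cheapest feasible point on a regular grid in (0, 1]^2 (areas > 0 avoid division by zero)."""
    n = int(round(1.0 / step))
    best_v, best_a = math.inf, (math.nan, math.nan)
    for i in range(1, n + 1):
        for j in range(1, n + 1):
            a = (i * step, j * step)
            if all(g <= 0.0 for g in truss_constraints(a)):
                v = truss_volume(a)
                if v < best_v:
                    best_v, best_a = v, a
    return best_v, best_a

if __name__ == "__main__":
    print(grid_search())
```

A coarse grid only approximates the constrained optimum, whose first stress constraint is active. It is nevertheless a useful sanity check when comparing metaheuristic results on a two-variable problem.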

5. Conclusions and Prospect

In this work, a new meta-heuristic algorithm called NCAAO is proposed to address three weaknesses of the AO algorithm: its tendency to fall into local optima, its zero search preference and its slow convergence. Three new modules are integrated into AO.

The first module is the DLCS chaotic map, chosen as the generator of the initial Aquila population. The resulting population has a more uniform distribution of individuals, which helps clarify the convergence direction of the algorithm.

The second module is the de-search-preference adaptive adjustment strategy. It balances global exploration and local exploitation by changing the threshold that selects the Aquila's working mechanism. In addition, the adaptive position weight parameter perturbs individuals as their positions are updated and improves the algorithm's local exploitation capability.

The third module is a communication exchange strategy based on the niche idea. Through information exchange between individuals, it selects the global optimum more reliably, promotes rapid convergence of the population toward the global optimum, and reduces the search error.

Although chaotic maps have been used in other algorithms, this work takes into account the uniformity of the chaotic map used to generate the population. Likewise, the zero search preference of the AO algorithm was investigated and addressed in the new algorithm.

From a theoretical standpoint, the following improvements explain the strong performance of NCAAO in both theory and practice:

Chaotic maps are random-like, ergodic and regular. The DLCS map produces a more uniform population distribution, which supports an efficient search of the whole search space and further clarifies the convergence direction of the algorithm.

Two changes improve the choice of search strategy: the threshold adaptive_p that selects the Aquila's working mechanism is made adaptive, and a dynamic adjustment strategy is introduced in the exploitation and exploration processes. Together they help the algorithm choose a suitable strategy for each search area and enhance the exploitation behavior of NCAAO during the iterations.

The adaptive position weight parameter ζ makes individual positions more diverse. This further promotes the exploration behavior of NCAAO during the iterations and helps balance the exploitation and exploration trends.

The proposed niche-based communication exchange strategy improves the fitness of the population. In this strategy, individuals with lower fitness are penalized. This helps NCAAO cope with difficult high-dimensional search, entrapment in local optima and an unclear convergence direction.
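The penalty mechanism above can be illustrated with a clearing-style niche step of the kind common in niche genetic algorithms. Whenever two individuals lie closer than a niche radius, the worse one receives a large penalty, so the retained elites stay spread over different regions. The paper's exact exchange rule is not given in this section. The radius `sigma`, the penalty value 1e10 and both function names are hypothetical, and minimization is assumed.

```python
import math

def niche_penalty(pop: list[list[float]], fitness: list[float],
                  sigma: float, penalty: float = 1e10) -> list[float]:
    """For every pair closer than sigma, replace the worse (larger) fitness
    with a large penalty; fitness values are minimized."""
    adjusted = list(fitness)
    n = len(pop)
    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(pop[i], pop[j]) < sigma:
                worse = i if fitness[i] > fitness[j] else j
                adjusted[worse] = penalty
    return adjusted

def select_elites(adjusted: list[float], k: int) -> list[int]:
    """Indices of the k individuals with the lowest adjusted fitness."""
    return sorted(range(len(adjusted)), key=adjusted.__getitem__)[:k]

if __name__ == "__main__":
    pop = [[0.0, 0.0], [0.05, 0.0], [1.0, 1.0], [2.0, 2.0]]
    fit = [0.0, 0.0025, 2.0, 8.0]
    adjusted = niche_penalty(pop, fit, sigma=0.1)
    print(select_elites(adjusted, 2))  # [0, 2]
```

The second individual sits inside the first one's niche and is penalized. The two elites kept are therefore the best individual and the best one from a different region, rather than two near-duplicates.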

The experiments examined the exploitation, exploration and local optimum avoidance performance of NCAAO on fifteen objective functions, and the results show that NCAAO has good optimization performance. Three engineering application examples further verify that NCAAO provides better solutions for practical engineering applications. The Wilcoxon rank-sum test shows that its advantage is statistically significant in most cases. Overall, the proposed algorithm has clear advantages in solving practical problems and can offer other researchers a tool for different application areas. The results indicate that NCAAO balances exploitation and exploration more effectively, largely avoids local optima, and converges quickly toward the optimal solution on many different types of problems. At the same time, the search preference is suppressed and the ability to escape from local optima is significantly improved.

In future research, we plan to apply the proposed algorithm to problems such as image enhancement, feature extraction and camera calibration. We will also study in more depth how the chaotic maps used to generate the initial population affect convergence performance, which is essential for further improving the algorithm.