Detection and Recognition Algorithm of Arbitrary-Oriented Oil Replenishment Target in Remote Sensing Image

Abstract

1. Introduction

2. Methodology

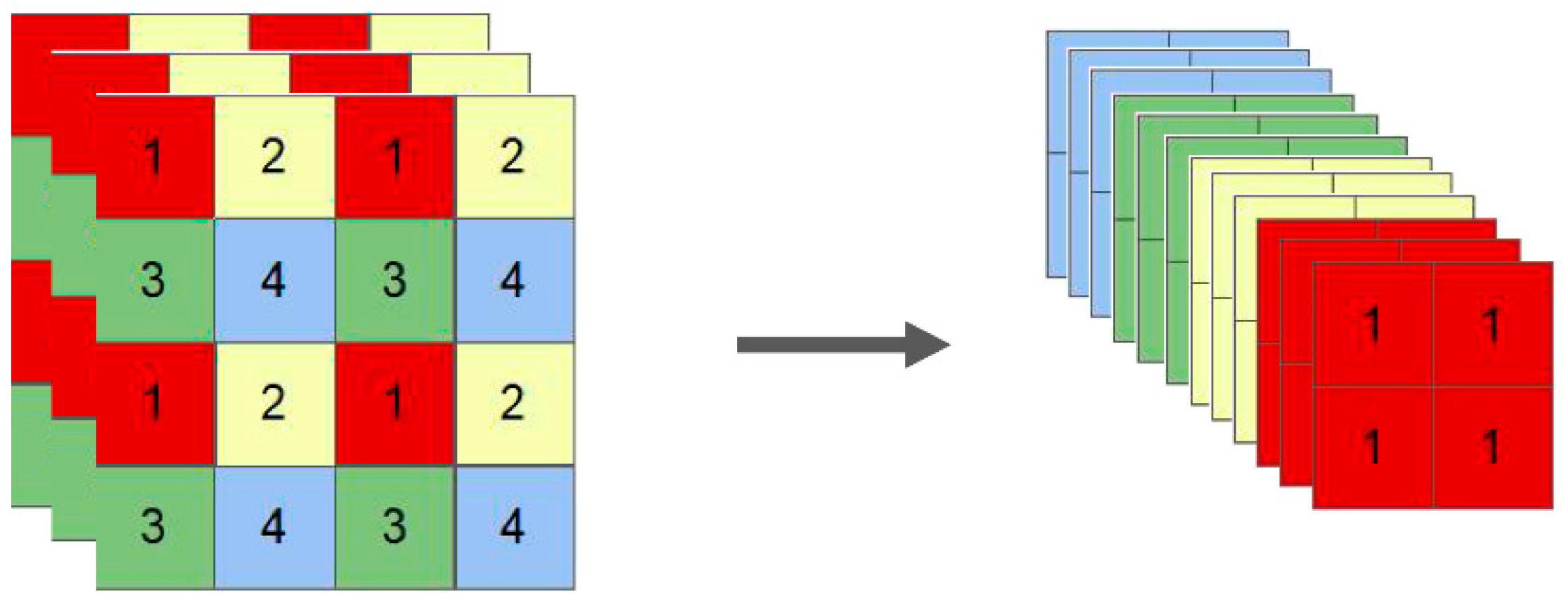

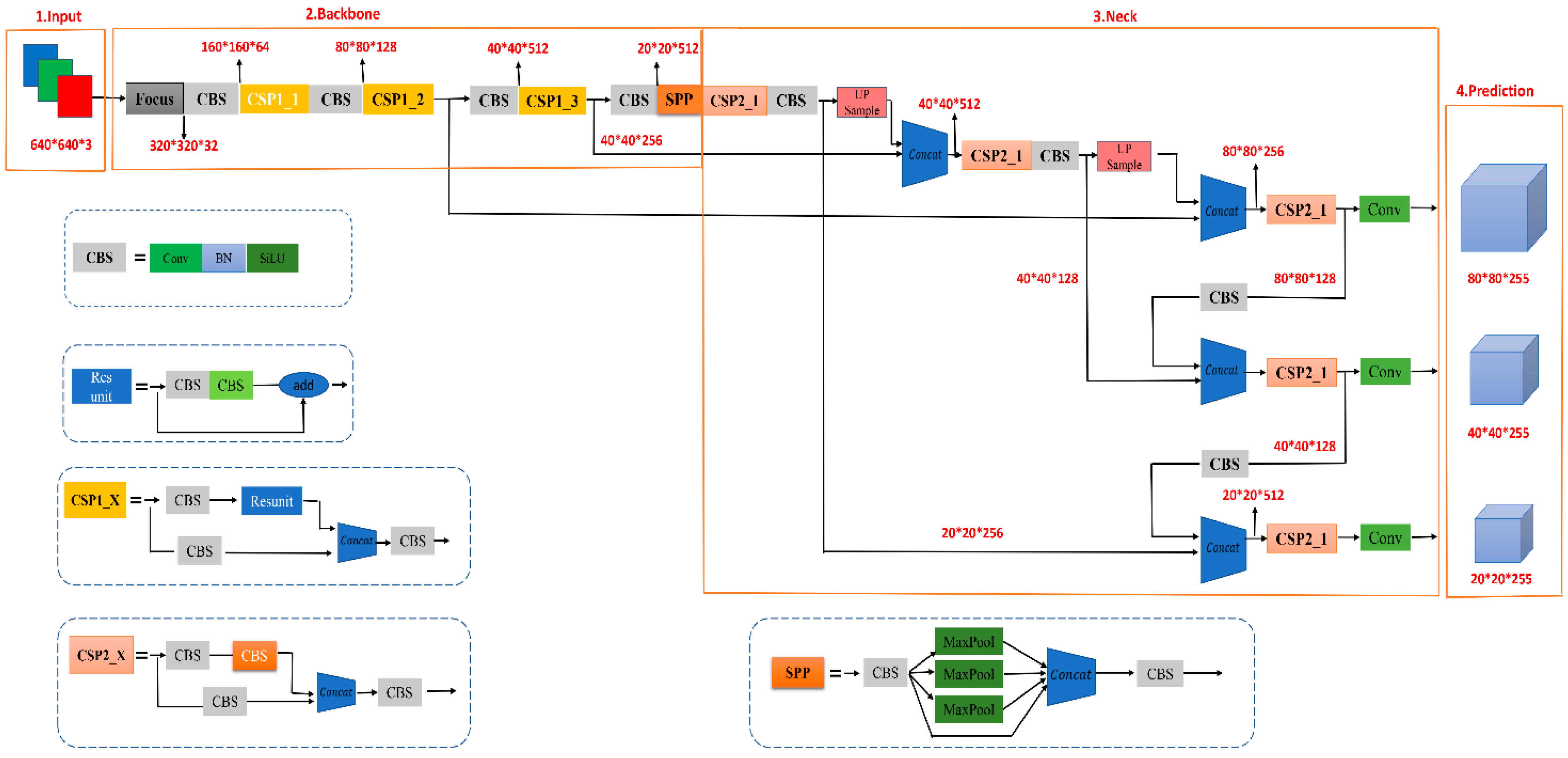

Background of YOLOv5
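As background, YOLOv5 regresses each box relative to a grid cell and an anchor. The sketch below decodes one raw prediction using the parameterization of the public Ultralytics YOLOv5 detection head: a centre offset of 2σ(t) − 0.5 in cell units, and a size of (2σ(t))² times the anchor. It is a minimal illustration and not the authors' code. The function name, the argument names and the example anchor are assumptions.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function."""
    return 1.0 / (1.0 + math.exp(-x))


def decode_yolov5_box(tx, ty, tw, th, cx, cy, anchor_w, anchor_h, stride):
    """Decode one raw YOLOv5-style prediction into a pixel-space box.

    (cx, cy) is the integer grid-cell index, (anchor_w, anchor_h) is the anchor
    size in pixels, and `stride` is the downsampling factor of the feature map.
    Returns (centre_x, centre_y, width, height) in input-image pixels.
    """
    # The centre offset lies in (-0.5, 1.5) cells, so a box can also be
    # predicted from a neighbouring cell.
    bx = (2.0 * sigmoid(tx) - 0.5 + cx) * stride
    by = (2.0 * sigmoid(ty) - 0.5 + cy) * stride
    # Width and height are bounded to (0, 4) times the anchor size.
    bw = (2.0 * sigmoid(tw)) ** 2 * anchor_w
    bh = (2.0 * sigmoid(th)) ** 2 * anchor_h
    return bx, by, bw, bh


# Zero logits on cell (3, 5) of a stride-8 feature map with a 10x13 anchor
print(decode_yolov5_box(0, 0, 0, 0, cx=3, cy=5, anchor_w=10, anchor_h=13, stride=8))
# (28.0, 44.0, 10.0, 13.0)
```

Bounding the size term by a squared sigmoid, rather than an unbounded exponential, keeps the regressed width and height within a fixed multiple of the anchor.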

3. Proposed Method

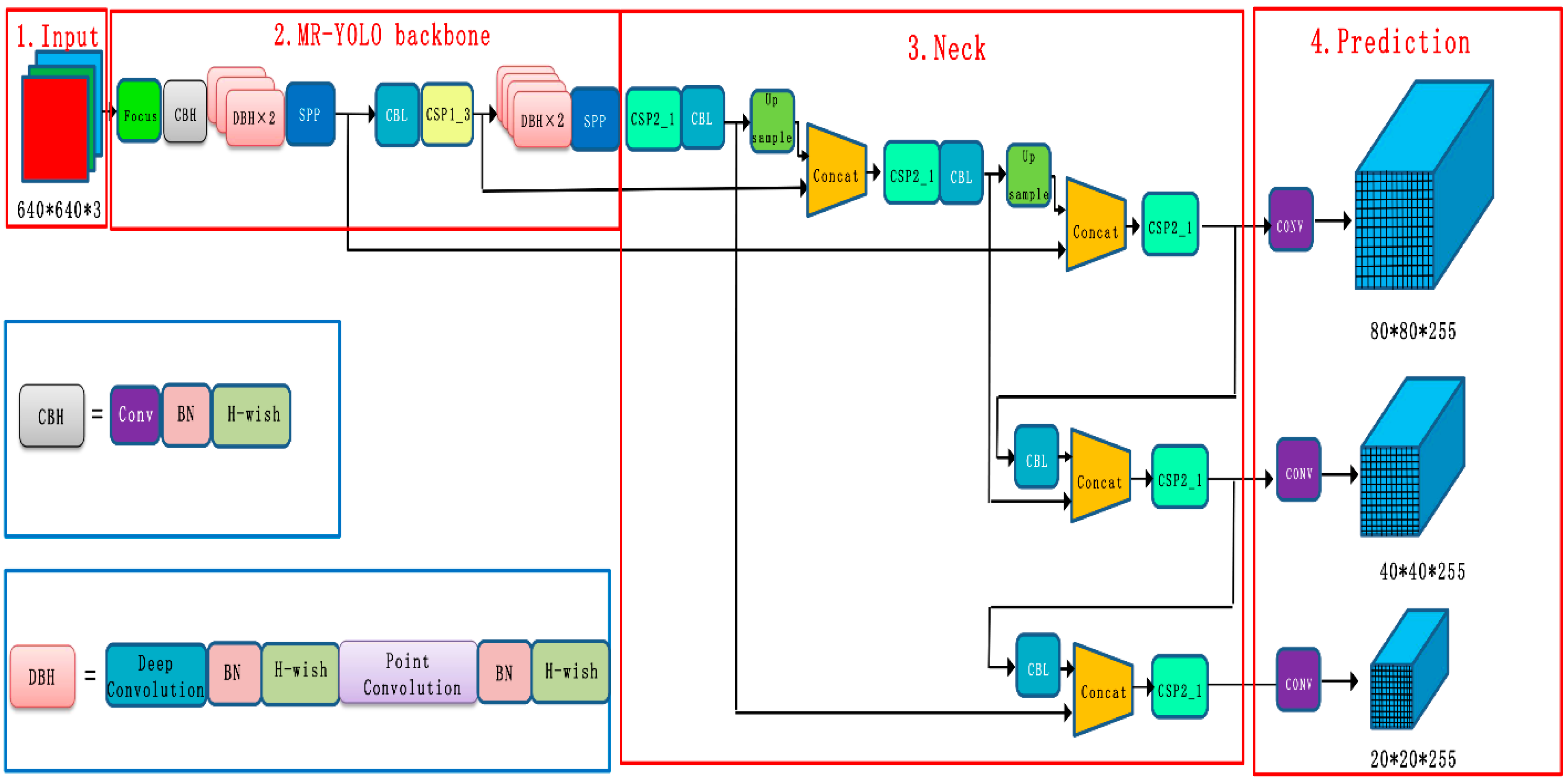

3.1. Improving the MobileNetv3-YOLOv5 Model

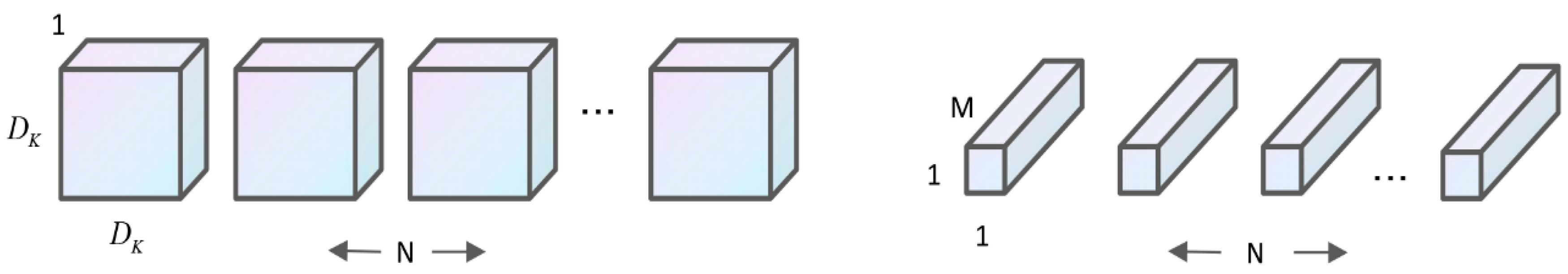

3.1.1. MobileNet Model

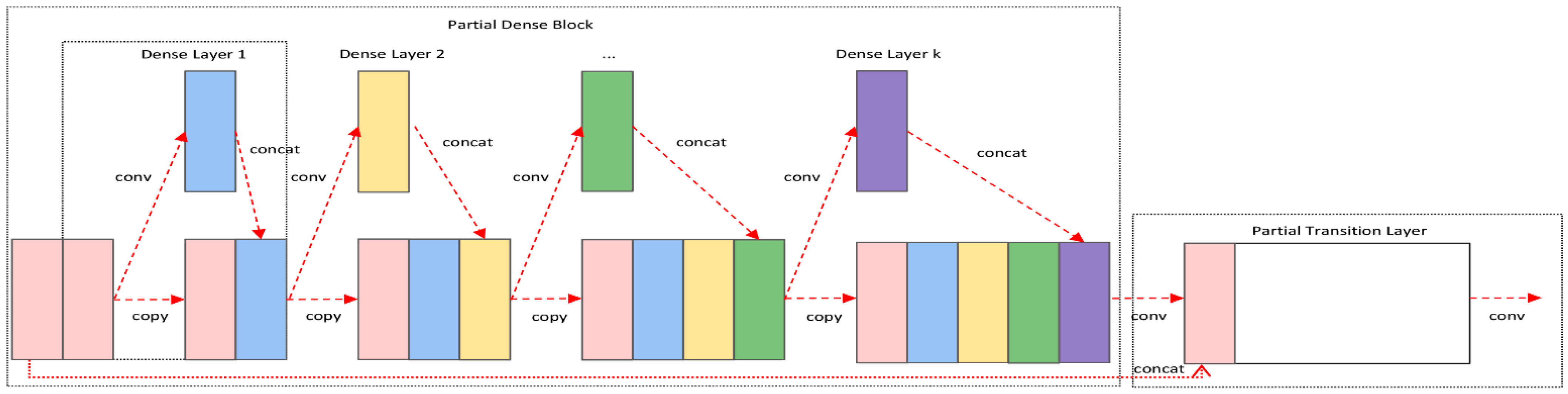
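MobileNet replaces a standard convolution with a depthwise convolution followed by a 1×1 pointwise convolution, and MobileNetV3 adds the hard-swish activation. The sketch below counts multiply-adds for both forms and defines h-swish. The layer size (3×3 kernel, 32 to 64 channels, 56×56 output) is an arbitrary illustrative choice and is not a configuration taken from this paper.

```python
import math


def standard_conv_cost(dk, m, n, df):
    """Multiply-adds of a dk x dk standard convolution mapping m -> n channels
    on a df x df output feature map."""
    return dk * dk * m * n * df * df


def depthwise_separable_cost(dk, m, n, df):
    """Multiply-adds of a depthwise dk x dk convolution (one filter per input
    channel) followed by a 1x1 pointwise convolution mapping m -> n channels."""
    depthwise = dk * dk * m * df * df
    pointwise = m * n * df * df
    return depthwise + pointwise


def relu6(x):
    """ReLU clipped at 6."""
    return min(max(x, 0.0), 6.0)


def h_swish(x):
    """Hard-swish activation of MobileNetV3: x * ReLU6(x + 3) / 6."""
    return x * relu6(x + 3.0) / 6.0


# Illustrative layer: 3x3 kernel, 32 -> 64 channels, 56x56 output
dk, m, n, df = 3, 32, 64, 56
std = standard_conv_cost(dk, m, n, df)
sep = depthwise_separable_cost(dk, m, n, df)
print(std, sep)                                      # 57802752 7325696
print(math.isclose(sep / std, 1 / n + 1 / dk ** 2))  # True
```

The cost ratio reduces to 1/N + 1/D_K². For 3×3 kernels and a reasonably large number of output channels, the separable form therefore needs roughly 8 to 9 times fewer multiply-adds.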

3.1.2. CSPNet Network
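CSPNet splits the feature map of a stage into two parts along the channel axis. Only one part goes through the stage's blocks, and the result is concatenated with the untouched part. The toy sketch below shows this split-transform-merge pattern on a plain list of channel values. It also counts the weights of a 3×3 convolution applied to half of the channels. `double` is a hypothetical stand-in for a residual or dense block. YOLOv5's own CSP-style modules (e.g. `C3`) form the two paths with 1×1 convolutions rather than by slicing, so this is a simplification.

```python
def conv_params(c_in, c_out, k):
    """Weight count of a k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def csp_stage(feature_channels, block):
    """Cross Stage Partial stage on a list of per-channel values.

    The first half of the channels bypasses the stage, the second half is
    processed by `block`, and the two parts are concatenated again.
    """
    half = len(feature_channels) // 2
    bypass = feature_channels[:half]
    processed = block(feature_channels[half:])
    return bypass + processed  # channel-wise concatenation


def double(channels):
    """Hypothetical stand-in for a residual/dense block."""
    return [2 * c for c in channels]


print(csp_stage([1, 2, 3, 4], double))  # [1, 2, 6, 8]
# A 3x3 conv on half of 64 channels needs a quarter of the weights.
print(conv_params(32, 32, 3) / conv_params(64, 64, 3))  # 0.25
```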

3.2. MobileNetv3-YOLOv5 Based Network Model

3.3. MobileNetv3-YOLOv5 Based Network Model

4. Experiments

4.1. Experimental Dataset

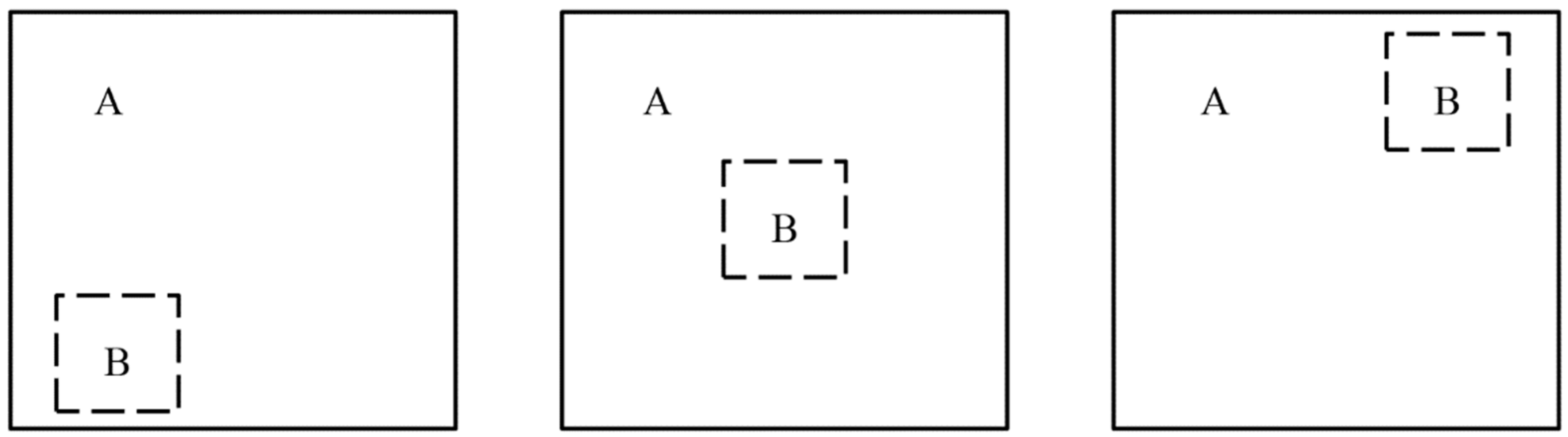

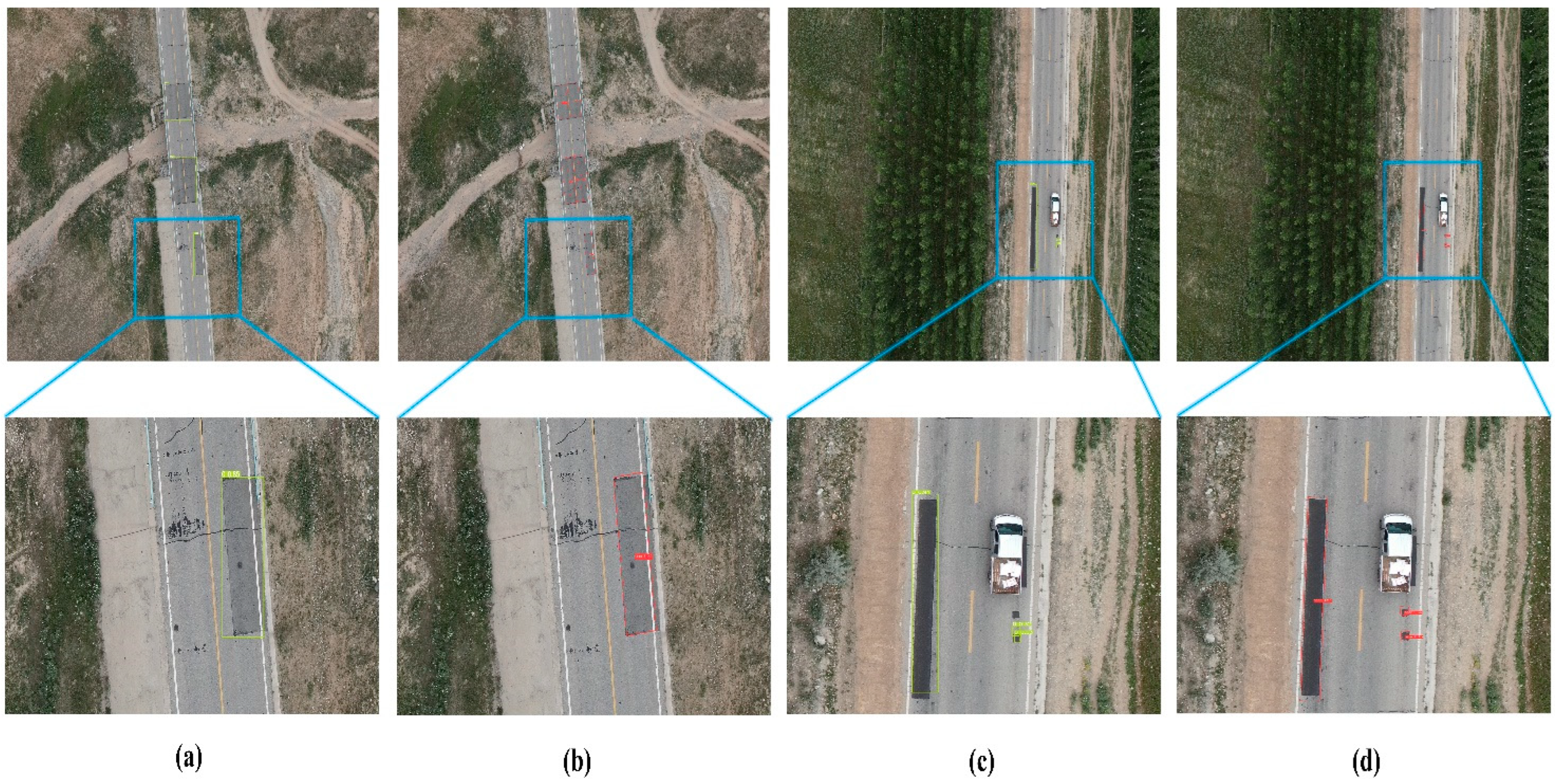

4.2. Long Edge Marking Method
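This sketch assumes one common long-edge convention, because the exact angle range is not stated in this section. Under that convention, a rotated box is written as (cx, cy, long side, short side, θ), where θ ∈ [0°, 180°) is the angle between the long side and the image x-axis. Because the image y-axis points down, positive angles turn clockwise on screen. The sketch converts four ordered corners to this form. It then encodes θ as a circular smooth label (CSL), the angle-classification technique listed in the ablation and comparison tables. The 180 one-degree bins, the Gaussian window with σ = 4 and the window radius of 6 bins are hypothetical settings, not the paper's values.

```python
import math


def poly_to_long_edge(points):
    """Convert 4 ordered corners of a rotated rectangle to long-edge form.

    Returns (cx, cy, long_side, short_side, theta), where theta in [0, 180)
    is the angle in degrees between the long side and the image x-axis.
    """
    (x0, y0), (x1, y1), (x2, y2), _ = points
    cx = sum(p[0] for p in points) / 4.0
    cy = sum(p[1] for p in points) / 4.0
    e1 = (x1 - x0, y1 - y0)  # first side
    e2 = (x2 - x1, y2 - y1)  # adjacent side
    len1, len2 = math.hypot(*e1), math.hypot(*e2)
    long_vec = e1 if len1 >= len2 else e2
    # atan2 covers the full circle; modulo 180 folds opposite directions together.
    theta = math.degrees(math.atan2(long_vec[1], long_vec[0])) % 180.0
    return cx, cy, max(len1, len2), min(len1, len2), theta


def csl_encode(theta, num_bins=180, sigma=4.0, radius=6):
    """Circular smooth label: a Gaussian window centred on the angle bin.

    Distances wrap around, so bin 179 is treated as a neighbour of bin 0.
    """
    center = int(round(theta)) % num_bins
    label = [0.0] * num_bins
    for i in range(num_bins):
        d = abs(i - center)
        d = min(d, num_bins - d)  # circular distance between bins
        if d <= radius:
            label[i] = math.exp(-d * d / (2.0 * sigma * sigma))
    return label


def csl_decode(label):
    """Predicted angle bin = index of the largest label value."""
    return max(range(len(label)), key=label.__getitem__)


corners = [(0, 0), (-3, 3), (-1, 5), (2, 2)]  # long side from (0, 0) to (-3, 3)
cx, cy, long_side, short_side, theta = poly_to_long_edge(corners)
label = csl_encode(theta)
print(round(theta, 6), csl_decode(label))  # 135.0 135
```

The circular window is what lets a prediction of 179° count as nearly correct for a target at 0°. This handles the boundary discontinuity that plain angle regression suffers from under the long-edge definition.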

4.3. Experimental Evaluation Metrics
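Precision is P = TP/(TP + FP) and recall is R = TP/(TP + FN). AP is the area under the precision-recall curve of one class, and mAP averages AP over the classes. The IoU threshold a detection must reach to count as a true positive is written after the @, so mAP@0.5 uses IoU ≥ 0.5. The sketch below computes all-point interpolated AP in the PASCAL VOC style. This interpolation is an assumption, because YOLOv5's own validation script samples the curve at 101 recall points instead. The sketch also assumes that detections have already been matched to ground truth.

```python
def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class at one IoU threshold.

    scores: confidence of each detection.
    is_tp: whether each detection matched a ground-truth box at the chosen
           IoU threshold (matching is assumed to be done already).
    num_gt: number of ground-truth boxes of this class.
    """
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:  # walk detections from most to least confident
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)          # R = TP / (TP + FN)
        precisions.append(tp / (tp + fp))    # P = TP / (TP + FP)
    # Pad the curve and make precision monotonically non-increasing.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # Sum the rectangles wherever recall increases.
    return sum((mrec[k + 1] - mrec[k]) * mpre[k + 1]
               for k in range(len(mrec) - 1) if mrec[k + 1] != mrec[k])


def mean_ap(per_class_ap):
    """mAP: mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
print(round(ap, 4))  # 0.8333
```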

4.4. Experimental Results and Analysis

4.4.1. Effectiveness Experiments

4.4.2. Performance Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Attribute | Value |
|---|---|
| OS | Ubuntu 20.04 |
| GPU | NVIDIA RTX 3090 Ti |
| Memory | 24 GB |
| Deep learning framework | PyTorch 1.7 |
| CUDA | 11.0 |

| Initial learning rate (lr0) | Momentum | Weight decay | Epochs | Batch size |
|---|---|---|---|---|
| 0.01 | 0.937 | 0.0005 | 300 | 32 |
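The three optimizer values in the table coincide with the defaults in YOLOv5's `hyp.scratch*.yaml` files (`lr0`, `momentum`, `weight_decay`). The epoch count and batch size correspond to the `--epochs` and `--batch-size` options of `train.py`. Assuming plain SGD with momentum, the sketch below shows how the three values enter a single parameter update as defined for PyTorch's `torch.optim.SGD` without dampening. YOLOv5 itself also enables Nesterov momentum, warm-up and a learning-rate schedule, which are omitted here. The dictionary names are hypothetical.

```python
# Values from the table above (dictionary names are hypothetical).
HYP = {"lr0": 0.01, "momentum": 0.937, "weight_decay": 0.0005}
TRAIN = {"epochs": 300, "batch_size": 32}


def sgd_momentum_step(param, grad, buf, lr, momentum, weight_decay):
    """One SGD step with momentum and L2 weight decay for a scalar parameter
    (PyTorch-style update, no dampening, no Nesterov).

    Returns (new_param, new_momentum_buffer).
    """
    d_p = grad + weight_decay * param  # L2 penalty folded into the gradient
    buf = momentum * buf + d_p         # exponentially weighted velocity
    return param - lr * buf, buf


p, b = sgd_momentum_step(1.0, 0.5, 0.0, HYP["lr0"], HYP["momentum"], HYP["weight_decay"])
print(p, b)
```

With momentum 0.937, the velocity buffer averages gradients over an effective horizon of about 1/(1 − 0.937) ≈ 16 steps.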

| Method | MobileNetv3 | CSPNet | CSL | P/% | mAP@0.5/% | mAP@0.95/% |
|---|---|---|---|---|---|---|
| YOLOv5 | | | | 82.7 | 84.1 | 64.5 |
| a | √ | 81.9 | 83.5 | 58.3 | | |
| b | √ | 84.5 | 86.2 | 67.1 | | |
| c | √ | 87.3 | 88.1 | 68.8 | | |
| d | √ | √ | 85.3 | 87.8 | 70.4 | |
| e | √ | √ | 89.4 | 89.6 | 71.3 | |
| Ours | √ | √ | √ | 91.1 | 92.4 | 71.9 |

| Model | P/% | mAP@0.5/% | mAP@0.95/% | FPS (frames/s) |
|---|---|---|---|---|
| YOLOv3 | 83.2 | 85.3 | 65.1 | 75.9 |
| MobileNetv3 + YOLOv3 | 85.1 | 86.2 | 57.7 | 78.3 |
| MobileNetv3 + YOLOv3 + CSPNet | 86.5 | 87.4 | 66.2 | 74.7 |
| YOLOv3-Tiny | 85.3 | 87.2 | 65.3 | 80.4 |
| YOLOv5s | 82.7 | 84.1 | 64.5 | 131.7 |
| MobileNetv3 + YOLOv5s | 83.4 | 85.5 | 66.3 | 128.5 |
| MobileNetv3 + YOLOv5s + CSPNet | 86.8 | 88.3 | 67.5 | 112.1 |
| MobileNetv3 + YOLOv5s + CSL | 87.1 | 89.3 | 70.9 | 105.8 |
| SwinTransformer + YOLOv5s | 85.4 | 86.7 | 58.3 | 59.5 |
| SwinTransformer + YOLOv5s + CSPNet + CSL | 90.1 | 91.3 | 70.9 | 65.8 |
| Ours | 91.1 | 92.4 | 71.9 | 96.8 |
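The sketch below is a quick arithmetic check on the comparison table. It computes the absolute gains of the proposed model over two other rows, together with its relative frame rate. Against YOLOv5s, the proposed model gains 8.4, 8.3 and 7.4 points while running at about 0.735 times the FPS. Against the SwinTransformer variant with CSPNet and CSL, it gains about one point on each metric while running about 1.47 times faster. The numbers are copied from the table, and the helper name is illustrative.

```python
# (P/%, mAP@0.5/%, mAP@0.95/%, FPS) copied from the comparison table.
RESULTS = {
    "YOLOv5s": (82.7, 84.1, 64.5, 131.7),
    "SwinTransformer + YOLOv5s + CSPNet + CSL": (90.1, 91.3, 70.9, 65.8),
    "Ours": (91.1, 92.4, 71.9, 96.8),
}


def compare(model, reference):
    """Absolute metric gains of `model` over `reference` (percentage points)
    and the ratio of their frame rates."""
    m, r = RESULTS[model], RESULTS[reference]
    gains = tuple(round(a - b, 1) for a, b in zip(m[:3], r[:3]))
    fps_ratio = round(m[3] / r[3], 3)
    return gains, fps_ratio


print(compare("Ours", "YOLOv5s"))  # ((8.4, 8.3, 7.4), 0.735)
print(compare("Ours", "SwinTransformer + YOLOv5s + CSPNet + CSL"))  # ((1.0, 1.1, 1.0), 1.471)
```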


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hou, Y.; Yang, Q.; Li, L.; Shi, G. Detection and Recognition Algorithm of Arbitrary-Oriented Oil Replenishment Target in Remote Sensing Image. Sensors 2023, 23, 767. https://doi.org/10.3390/s23020767
