Real-Time Human Motion Tracking by Tello EDU Drone

Abstract

1. Introduction

2. Materials and Methods

2.1. Tello EDU Drone

2.2. MediaPipe Framework

2.3. Design and Implementation of Human Motion Tracking

2.3.1. Computer-Based Flight Control

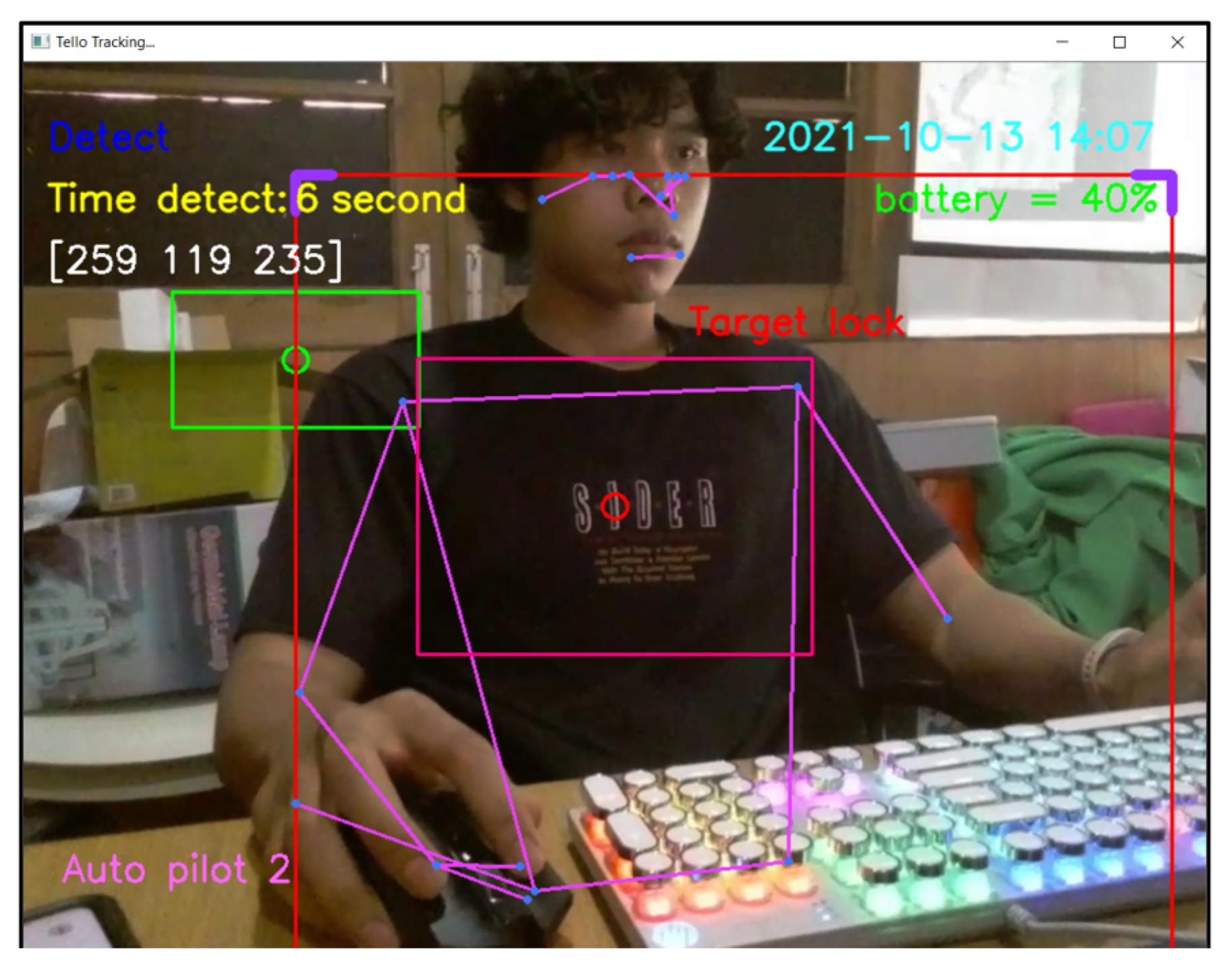

2.3.2. Human-Tracking Flight using the MediaPipe Framework

Algorithm 1. Human motion tracking algorithm.

Input: stream of pose landmarks
Output: distance vector

initialize the distance vector and the tracking variables (two of them are set to 0)
while there are pose landmarks do
  update the target vector from two consecutive incoming frames:
    ▹ one component is measured on the bounding box
    ▹ one component is measured on the bounding box without an offset of 150
    ▹ one component is measured on the bounding box plus an offset of 150
  for each target vector of frame i do
    if horizontal distance > 100 then the drone turns left
    else if horizontal distance < −100 then the drone turns right
    else keep the initial action (no change)
    end if
    if vertical distance < −55 then the drone moves up
    else if vertical distance > 55 then the drone moves down
    else keep the initial action (no change)
    end if
    if forward/backward distance > 0 then the drone moves backward
    else if forward/backward distance < 0 then the drone moves forward
    else keep the initial action (no change)
    end if
  end for
end while
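The per-frame decision step of Algorithm 1 can be sketched as a pure function. This is a minimal illustration, not the authors' program: it assumes the three components of the target vector (horizontal, vertical and forward/backward distances, in pixels) have already been computed, and the function name `decide_actions` is hypothetical. Only the thresholds (±100, ±55 and 0) and the resulting movements come from the algorithm.

```python
from typing import Tuple

# Pixel thresholds taken from Algorithm 1.
YAW_THRESHOLD = 100
VERTICAL_THRESHOLD = 55
DEPTH_THRESHOLD = 0


def decide_actions(horizontal: float, vertical: float, depth: float) -> Tuple[str, str, str]:
    """Map one target vector (pixel distances) to three drone actions.

    Hypothetical helper: the inputs are assumed to be precomputed
    horizontal, vertical and forward/backward distances.
    """
    # Horizontal distance: rotate toward the person.
    if horizontal > YAW_THRESHOLD:
        yaw = "turn left"
    elif horizontal < -YAW_THRESHOLD:
        yaw = "turn right"
    else:
        yaw = "no change"

    # Vertical distance: climb or descend.
    if vertical < -VERTICAL_THRESHOLD:
        lift = "move up"
    elif vertical > VERTICAL_THRESHOLD:
        lift = "move down"
    else:
        lift = "no change"

    # Forward/backward distance: back away or approach.
    if depth > DEPTH_THRESHOLD:
        travel = "move backward"
    elif depth < DEPTH_THRESHOLD:
        travel = "move forward"
    else:
        travel = "no change"

    return yaw, lift, travel


if __name__ == "__main__":
    print(decide_actions(120, 0, -10))  # ('turn left', 'no change', 'move forward')
```

Because the comparisons are strict, a distance of exactly ±100 or ±55 falls into the "no change" branch, which matches the `else` branches of the algorithm.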

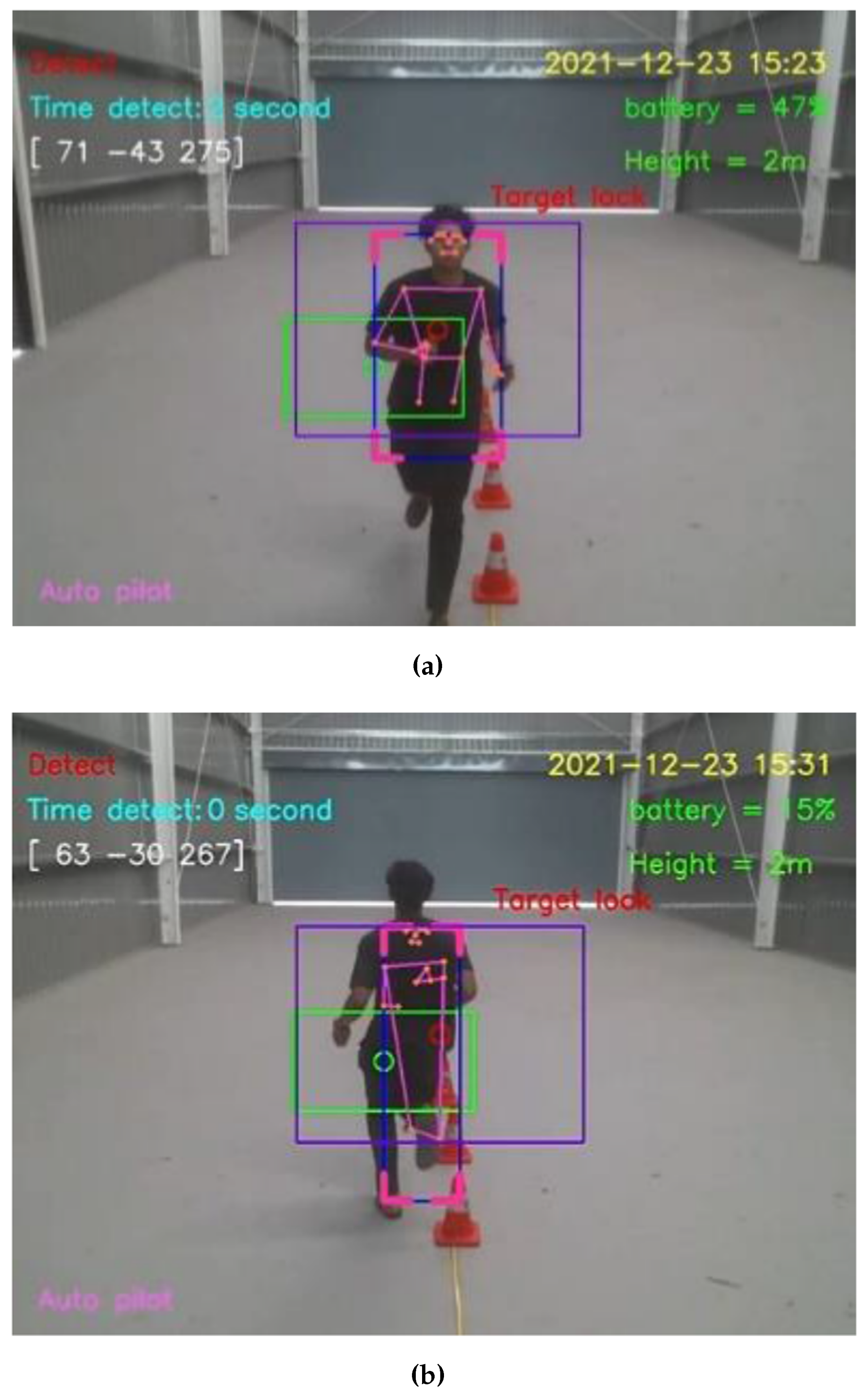

1. Scenario of human motion tracking in the horizontal direction.

2. Scenario of human motion tracking in the vertical direction.

3. Scenario of human motion tracking in the backward and forward directions.

2.3.3. Automatic Photo Capture and Alarm Systems

2.3.4. Communication Protocol and Extended Drone Control Ranges

3. Outputs and Experimental Results

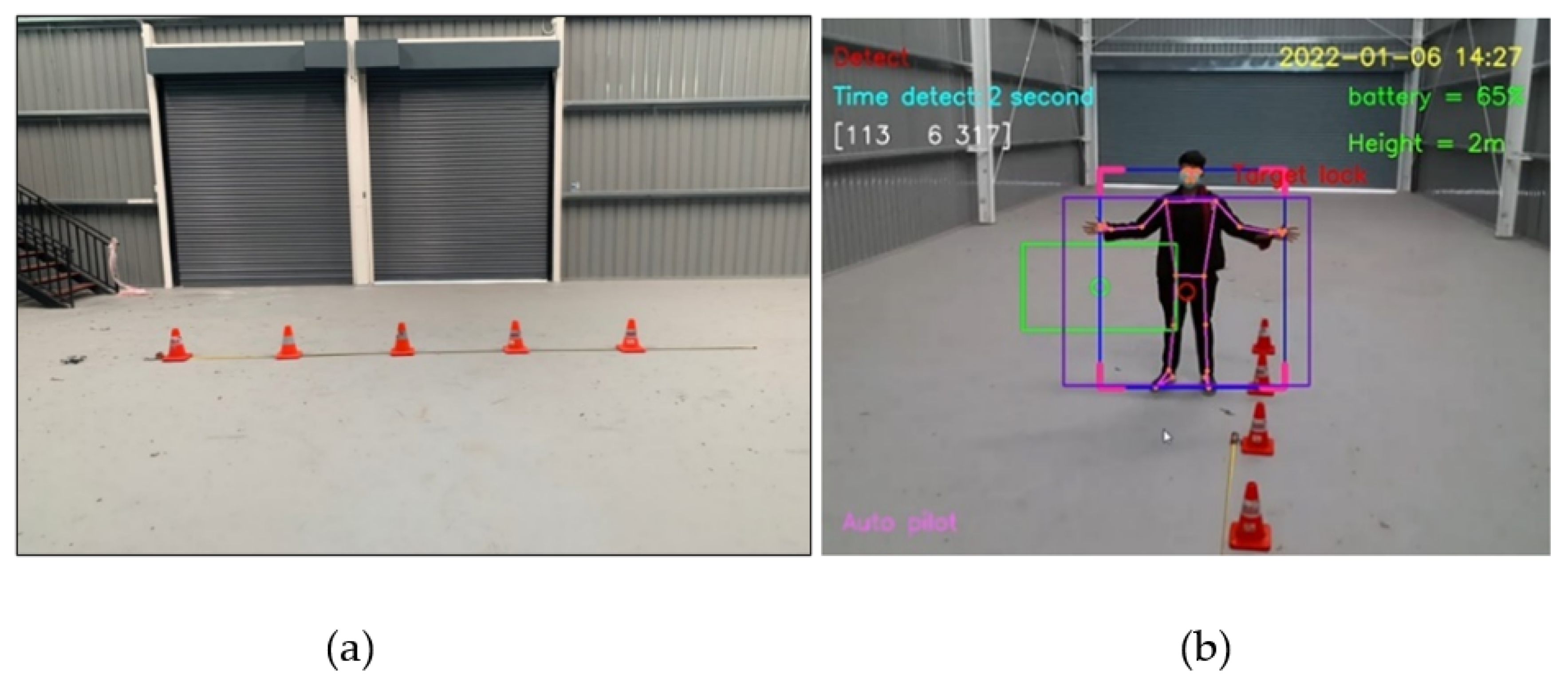

3.1. Proper Distances between Person and Tello EDU Drone

3.2. Target Speed and Direction for Human Motion Tracking by Tello EDU Drone

3.3. Exploration of Light Intensity for Human Motion Detection

3.4. Notification System of Human Motion Tracking

3.5. Fault Detection

3.6. Comparison of Flight-Control Distances between Tello App and Our Design

3.7. Automatic Tracking of Human Motion

1. Drone following a person in an indoor–outdoor scenario.

2. Drone following a person in a multi-person scenario.

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CCTV | Closed-Circuit Television |
| IP | Internet Protocol |
| UI | User Interface |
| GUI | Graphical User Interface |
| UAV | Unmanned Aerial Vehicle |

References

1. Ishii, S.; Yokokubo, A.; Luimula, M.; Lopez, G. ExerSense: Physical Exercise Recognition and Counting Algorithm from Wearables Robust to Positioning. Sensors 2021, 21, 91.

2. Khurana, R.; Ahuja, K.; Yu, Z.; Mankoff, J.; Harrison, C.; Goel, M. GymCam: Detecting, Recognizing and Tracking Simultaneous Exercises in Unconstrained Scenes. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 185.

3. Koubaa, A.; Qureshi, B. DroneTrack: Cloud-Based Real-Time Object Tracking Using Unmanned Aerial Vehicles Over the Internet. IEEE Access 2018, 6, 13810–13824.

4. Wang, L.; Xue, Q. Intelligent Professional Competitive Basketball Training (IPCBT): From Video based Body Tracking to Smart Motion Prediction. In Proceedings of the 2022 International Conference on Sustainable Computing and Data Communication Systems (ICSCDS), Erode, India, 7–9 April 2022; pp. 1398–1401.

5. Fayez, A.; Sharshar, A.; Hesham, A.; Eldifrawi, I.; Gomaa, W. ValS: A Leading Visual and Inertial Dataset of Squats. In Proceedings of the 2022 16th International Conference on Ubiquitous Information Management and Communication (IMCOM), Seoul, Republic of Korea, 3–5 January 2022; pp. 1–8.

6. Lafuente-Arroyo, S.; Martín-Martín, P.; Iglesias-Iglesias, C.; Maldonado-Bascón, S.; Acevedo-Rodríguez, F.J. RGB camera-based fallen person detection system embedded on a mobile platform. Expert Syst. Appl. 2022, 197, 116715.

7. Shu, F.; Shu, J. An eight-camera fall detection system using human fall pattern recognition via machine learning by a low-cost android box. Sci. Rep. 2021, 11, 2471.

8. De Miguel, K.; Brunete, A.; Hernando, M.; Gambao, E. Home Camera-Based Fall Detection System for the Elderly. Sensors 2017, 17, 2864.

9. Yun, Y.; Gu, I.Y.-H. Human fall detection in videos via boosting and fusing statistical features of appearance, shape and motion dynamics on Riemannian manifolds with applications to assisted living. Comput. Vis. Image Underst. 2016, 148, 111–122.

10. Nguyen, H.T.K.; Fahama, H.; Belleudy, C.; Pham, T.V. Low Power Architecture Exploration for Standalone Fall Detection System Based on Computer Vision. In Proceedings of the 2014 European Modelling Symposium, Pisa, Italy, 21–23 October 2014; pp. 169–173.

11. Chaccour, K.; Darazi, R.; El Hassani, A.H.; Andrès, E. From Fall Detection to Fall Prevention: A Generic Classification of Fall-Related Systems. IEEE Sens. J. 2017, 17, 812–822.

12. Ahmad Razimi, U.N.; Alkawaz, M.H.; Segar, S.D. Indoor Intrusion Detection and Filtering System Using Raspberry Pi. In Proceedings of the 16th IEEE International Colloquium on Signal Processing and Its Applications (CSPA), Langkawi, Malaysia, 28–29 February 2020; pp. 18–22.

13. Nayak, R.; Behera, M.M.; Pati, U.C.; Das, S.K. Video-Based Real-Time Intrusion Detection System Using Deep-Learning for Smart City Applications. In Proceedings of the IEEE International Conference on Advanced Networks and Telecommunications Systems (ANTS), New Delhi, India, 16–19 December 2019; pp. 1–6.

14. Gong, X.; Chen, X.; Zhong, Z.; Chen, W. Enhanced Few-Shot Learning for Intrusion Detection in Railway Video Surveillance. IEEE Trans. Intell. Transp. Syst. 2022, 23, 13810–13824.

15. Helbostad, J.L.; Vereijken, B.; Becker, C.; Todd, C.; Taraldsen, K.; Pijnappels, M.; Aminian, K.; Mellone, S. Mobile Health Applications to Promote Active and Healthy Ageing. Sensors 2017, 17, 622.

16. Bourke, A.K.; Ihlen, E.A.F.; Bergquist, R.; Wik, P.B.; Vereijken, B.; Helbostad, J.L. A Physical Activity Reference Data-Set Recorded from Older Adults Using Body-Worn Inertial Sensors and Video Technology—The ADAPT Study Data-Set. Sensors 2017, 17, 559.

17. Munoz-Organero, M.; Ruiz-Blazquez, R. Time-Elastic Generative Model for Acceleration Time Series in Human Activity Recognition. Sensors 2017, 17, 319.

18. Karatsidis, A.; Bellusci, G.; Schepers, H.M.; De Zee, M.; Andersen, M.S.; Veltink, P.H. Estimation of Ground Reaction Forces and Moments During Gait Using Only Inertial Motion Capture. Sensors 2017, 17, 75.

19. Agrawal, A.; Abraham, S.J.; Burger, B.; Christine, C.; Fraser, L.; Hoeksema, J.M.; Hwang, S.; Travnik, E.; Kumar, S.; Scheirer, W.; et al. The Next Generation of Human-Drone Partnerships: Co-Designing an Emergency Response System. In Proceedings of the Conference on Human Factors in Computing Systems (CHI), Honolulu, HI, USA, 25–30 April 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–13.

20. Zhou, X.; Liu, S.; Pavlakos, G.; Kumar, V.; Daniilidis, K. Human Motion Capture Using a Drone. In Proceedings of the 2018 International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 2027–2033.

21. Ryze Tech. Tello User Manual. Available online: https://dl-cdn.ryzerobotics.com/downloads/Tello/Tello%20SDK%202.0%20User%20Guide.pdf (accessed on 11 September 2022).

22. MediaPipe Pose. Available online: https://google.github.io/mediapipe/solutions/pose (accessed on 11 September 2022).

23. Bazarevsky, V.; Grishchenko, I.; Raveendran, K.; Zhu, T.; Zhang, F.; Grundmann, M. BlazePose: On-Device Real-Time Body Pose Tracking. Available online: https://arxiv.org/abs/2006.10204 (accessed on 11 September 2022).

24. MediaPipe Holistic. Available online: https://google.github.io/mediapipe/solutions/holistic.html (accessed on 11 September 2022).

25. Jabrils. Tello TV. Available online: https://github.com/Jabrils/TelloTV/blob/master/TelloTV.py (accessed on 11 September 2022).

26. Schimpl, M.; Moore, C.; Lederer, C.; Neuhaus, A.; Sambrook, J.; Danesh, J.; Ouwehand, W.; Daumer, M. Association between Walking Speed and Age in Healthy, Free-Living Individuals Using Mobile Accelerometry—A Cross-Sectional Study. PLoS ONE 2011, 6, e23299.

27. Parikh, R.; Mathai, A.; Parikh, S.; Chandra Sekhar, G.; Thomas, R. Understanding and using sensitivity, specificity and predictive values. Indian J. Ophthalmol. 2008, 56, 45–50.

28. Zago, M.; Luzzago, M.; Marangoni, T.; De Cecco, M.; Tarabini, M.; Galli, M. 3D Tracking of Human Motion Using Visual Skeletonization and Stereoscopic Vision. Front. Bioeng. Biotechnol. 2020, 8, 181.

29. Kincaid, M. DJI Mavic Pro Active Track—Trace and Profile Feature. Available online: https://www.youtube.com/watch?v=XiAL8hMccdc&t (accessed on 11 September 2022).

30. Truong, V.T.; Lao, J.S.; Huang, C.C. Multi-Camera Marker-Based Real-Time Head Pose Estimation System. In Proceedings of the 2020 International Conference on Multimedia Analysis and Pattern Recognition (MAPR), Hanoi, Vietnam, 8–9 October 2020; pp. 1–6.

31. Alejandro, J.; Cavadas, J. Using Drones for Educational Purposes. Master’s Thesis, Universitat Politècnica de Catalunya · BarcelonaTech (UPC), Catalonia, Spain, 31 October 2019. Available online: http://hdl.handle.net/2117/173834 (accessed on 11 September 2022).

32. Hsu, Y.W.; Perng, J.W.; Liu, H.L. Development of a vision based pedestrian fall detection system with back propagation neural network. In Proceedings of the 2015 IEEE/SICE International Symposium on System Integration (SII), Nagoya, Japan, 11–13 December 2015; pp. 433–437.

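| No. | Distance Condition (pixels) | Drone Movement |
|---|---|---|
| 1 | Horizontal distance > 100 | Turn left |
| 2 | Horizontal distance < −100 | Turn right |
| 3 | −100 < horizontal distance < 100 | No change |
| 4 | Vertical distance < −55 | Move up |
| 5 | Vertical distance > 55 | Move down |
| 6 | −55 < vertical distance < 55 | No change |
| 7 | Forward/backward distance > 0 | Move backward |
| 8 | Forward/backward distance < 0 | Move forward |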
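The movements in the decision table still have to be turned into drone commands. The source does not state how its Python program transmits them, so the following is only a sketch under stated assumptions: it targets the text command `rc a b c d` of the Tello SDK 2.0 user guide [21], sent over UDP to 192.168.10.1:8889, where each channel takes a value from −100 to 100. The stick magnitude `SPEED = 30`, the sign conventions, and the names `MOVEMENT_TO_RC`, `rc_command` and `send` are hypothetical.

```python
import socket
from typing import Dict, Tuple

# Tello SDK 2.0: text commands go over UDP to the drone's own access point.
TELLO_ADDRESS = ("192.168.10.1", 8889)
SPEED = 30  # hypothetical stick magnitude; each SDK channel accepts -100..100

# Channel order of the SDK "rc a b c d" command:
# a = left/right, b = forward/backward, c = up/down, d = yaw.
# Assumed signs: positive a = right, b = forward, c = up, d = clockwise.
MOVEMENT_TO_RC: Dict[str, Tuple[int, int, int, int]] = {
    "turn left": (0, 0, 0, -SPEED),
    "turn right": (0, 0, 0, SPEED),
    "move up": (0, 0, SPEED, 0),
    "move down": (0, 0, -SPEED, 0),
    "move forward": (0, SPEED, 0, 0),
    "move backward": (0, -SPEED, 0, 0),
    "no change": (0, 0, 0, 0),
}


def rc_command(*movements: str) -> str:
    """Sum the channel values of the requested movements into one rc command."""
    channels = [0, 0, 0, 0]
    for movement in movements:
        for i, value in enumerate(MOVEMENT_TO_RC[movement]):
            channels[i] += value
    # Clamp each channel to the SDK's -100..100 range.
    a, b, c, d = (max(-100, min(100, v)) for v in channels)
    return f"rc {a} {b} {c} {d}"


def send(sock: socket.socket, command: str) -> None:
    """Send one SDK command; "command" and "takeoff" must have been sent first."""
    sock.sendto(command.encode("ascii"), TELLO_ADDRESS)


if __name__ == "__main__":
    # Only builds the command string; no packet is sent here.
    print(rc_command("turn left", "no change", "move forward"))  # rc 0 30 0 -30
```

Combining the three per-axis decisions into a single `rc` command lets the drone turn, climb and approach at the same time, rather than issuing one discrete move per frame.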

| Control Program | Vertical Distance (No Wi-Fi) | Horizontal Distance (No Wi-Fi) | Vertical Distance (via Wi-Fi) | Horizontal Distance (via Wi-Fi) |
|---|---|---|---|---|
| Tello app on a smartphone | 7.8 m | 35 m | 30 m | 84.20 m |
| Our program (Python) | 7.3 m | 33 m | 13.40 m | 84.30 m |

| Metric | Zago, 2020 [28] | Yun, 2016 [9] | De Miguel, 2017 [8] | Agrawal, 2020 [19] | Zhou, 2018 [20] | Ours |
|---|---|---|---|---|---|---|
| Sensitivity (%) | N/A | 98.55–100 | 96 | N/A | N/A | 96.67–100 |
| Visibility | No | No | No | Yes | No | Yes |
| Image sensor | Webcam | Webcam | Webcam | Multiple drones | DJI Mavic Pro | Tello EDU drone |
| Resolution (px) | 1920 × 1080 | N/A | 320 × 240 | N/A | N/A | 960 × 720 |
| GPS required | No | No | No | Yes | No | No |
| Frame rate (FPS) | N/A | 171 | 7–8 | N/A | 24 | 25 |
| CPU | N/A | Intel® i7 | Cortex™-A7 | N/A | Intel® i7 | Intel® i7 |
| Language | MATLAB | MATLAB | C/C++ | Angular app | N/A | Python |
| Alert system | No | No | Yes | Yes | No | Yes |
| Speed (m/s) | N/A | N/A | N/A | N/A | N/A | 0–2 |
| Target distance from camera (m) | 2–6 | N/A | N/A | N/A | 1–10 | 1–10 |
| Experiment setting | A room | A room | A house | A river | Various | Various |
| Light intensity (lux) | N/A | N/A | N/A | N/A | N/A | 519–41,851 |
| Indoor | Yes | Yes | Yes | No | Yes | Yes |
| Outdoor | No | No | No | Yes | Yes | Yes |
| Purpose | Human fall detection | Human fall detection | Elderly fall detection | Search and rescue | Human motion capture | Human motion tracking |
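The sensitivity row follows the standard definition discussed in [27]: the share of actual positive cases that the system detects, TP / (TP + FN). A minimal sketch of that calculation is shown below; the function name is hypothetical, and the counts in the usage line are illustrative only, not trial data from this study.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Return sensitivity TP / (TP + FN) as a percentage."""
    positives = true_positives + false_negatives
    if positives == 0:
        raise ValueError("sensitivity is undefined without positive cases")
    return 100.0 * true_positives / positives


if __name__ == "__main__":
    # Hypothetical counts: 29 detections out of 30 cases with a person present.
    print(round(sensitivity(29, 1), 2))  # 96.67
```

Sensitivity ignores false positives, so it reports how rarely a person is missed, not how often the system raises a spurious detection.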

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Boonsongsrikul, A.; Eamsaard, J. Real-Time Human Motion Tracking by Tello EDU Drone. Sensors 2023, 23, 897. https://doi.org/10.3390/s23020897